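```python
# Requires TensorFlow 1.x (tf.placeholder, tf.Session and tf.contrib were removed in TF 2)
import tensorflow as tf

import matplotlib.pyplot as plt

from tensorflow.examples.tutorials.mnist import input_data  # MNIST dataset helper

# Read the data from the 'MNIST.data' directory (downloaded there if missing), one-hot labels
mnist = input_data.read_data_sets('MNIST.data', one_hot=True)

# Placeholders: flattened 28x28 images (784 pixels) and 10-class one-hot labels
x = tf.placeholder(tf.float32, [None, 784])
y = tf.placeholder(tf.float32, [None, 10])
```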
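With `one_hot=True`, each label is a length-10 vector holding a single 1, which matches the `[None, 10]` placeholder, and each 28×28 image arrives flattened row by row into the 784 columns of `x`. A minimal pure-Python sketch of both encodings; the helper names `one_hot` and `flatten` are hypothetical and not part of the TensorFlow API:

```python
def one_hot(label, num_classes=10):
    """Return a list with 1.0 at position `label` and 0.0 elsewhere."""
    if not 0 <= label < num_classes:
        raise ValueError("label out of range")
    vec = [0.0] * num_classes
    vec[label] = 1.0
    return vec


def flatten(image):
    """Concatenate the rows of a 2-D image (a list of rows) into one flat list."""
    return [pixel for row in image for pixel in row]


# A blank 28x28 image flattens to 784 values, matching the second dimension of x
blank = [[0.0] * 28 for _ in range(28)]
print(len(flatten(blank)))  # 784
print(one_hot(6))  # [0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0]
```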

```python
# Model: five fully connected layers (four 512-unit ReLU hidden layers + a softmax output layer)
w1 = tf.get_variable('w1', shape=[784, 512], initializer=tf.contrib.layers.xavier_initializer())
b1 = tf.Variable(tf.random_normal([512]))
a1 = tf.nn.relu(tf.matmul(x, w1) + b1)

w2 = tf.get_variable('w2', shape=[512, 512], initializer=tf.contrib.layers.xavier_initializer())
b2 = tf.Variable(tf.random_normal([512]))
a2 = tf.nn.relu(tf.matmul(a1, w2) + b2)

w3 = tf.get_variable('w3', shape=[512, 512], initializer=tf.contrib.layers.xavier_initializer())
b3 = tf.Variable(tf.random_normal([512]))
a3 = tf.nn.relu(tf.matmul(a2, w3) + b3)

w4 = tf.get_variable('w4', shape=[512, 512], initializer=tf.contrib.layers.xavier_initializer())
b4 = tf.Variable(tf.random_normal([512]))
a4 = tf.nn.relu(tf.matmul(a3, w4) + b4)

w5 = tf.get_variable('w5', shape=[512, 10], initializer=tf.contrib.layers.xavier_initializer())
b5 = tf.Variable(tf.random_normal([10]))
a5 = tf.nn.softmax(tf.matmul(a4, w5) + b5)  # predicted class probabilities
```
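To make the layer shapes concrete, the sketch below counts the trainable parameters of the five layers (about 1.2 million) and computes the range that Glorot/Xavier uniform initialization samples from. It assumes the uniform variant, which is the default of `tf.contrib.layers.xavier_initializer`, with bound √(6 / (fan_in + fan_out)). The biases in the model use `tf.random_normal` instead, so that bound does not apply to them. All helper names are hypothetical:

```python
import math
import random

# Layer sizes of the network above: 784 inputs, four 512-unit hidden layers, 10 outputs
LAYER_SIZES = [784, 512, 512, 512, 512, 10]


def count_parameters(sizes):
    """Weights plus biases of a stack of fully connected layers."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(sizes, sizes[1:]))


def xavier_uniform_limit(fan_in, fan_out):
    """Bound a of the Glorot/Xavier uniform distribution U(-a, a)."""
    return math.sqrt(6.0 / (fan_in + fan_out))


def xavier_uniform(fan_in, fan_out, rng):
    """Sample a fan_in x fan_out weight matrix (list of rows) from U(-a, a)."""
    a = xavier_uniform_limit(fan_in, fan_out)
    return [[rng.uniform(-a, a) for _ in range(fan_out)] for _ in range(fan_in)]


print(count_parameters(LAYER_SIZES))  # 1195018
for n_in, n_out in zip(LAYER_SIZES, LAYER_SIZES[1:]):
    print(n_in, n_out, round(xavier_uniform_limit(n_in, n_out), 4))
```

The bound shrinks as layers get wider, which keeps the variance of each layer's pre-activations roughly independent of its width.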

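```python
# Cost: cross-entropy, averaged over the batch
# Note: tf.log(a5) becomes -inf (making the cost inf or NaN) if a softmax output underflows to 0
cost = -tf.reduce_mean(tf.reduce_sum(y * tf.log(a5), axis=1))

cost_history = []  # average cost of each epoch
```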
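The cost line is the batch mean of the per-example cross-entropy −Σⱼ yⱼ log(a5ⱼ). Taking the log of an already computed softmax is fragile, because a probability that underflows to 0 turns into −inf. A common, more stable route is to work directly on the logits with the log-sum-exp trick; TensorFlow 1.x offers this as `tf.nn.softmax_cross_entropy_with_logits_v2`. The pure-Python sketch below (hypothetical helper names, not TensorFlow's implementation) compares the two formulations:

```python
import math


def log_sum_exp(logits):
    """log(sum(exp(z))) computed stably by factoring out the largest logit."""
    m = max(logits)
    return m + math.log(sum(math.exp(z - m) for z in logits))


def naive_cross_entropy(label, logits):
    """Softmax first, then log: mirrors y * tf.log(a5) in the article."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    # math.log(0.0) raises ValueError here; tf.log would return -inf instead
    return -sum(y * math.log(p) for y, p in zip(label, probs))


def stable_cross_entropy(label, logits):
    """-sum(y * log_softmax(z)), where log_softmax(z) = z - logsumexp(z)."""
    lse = log_sum_exp(logits)
    return -sum(y * (z - lse) for y, z in zip(label, logits))


def batch_cost(labels, logits_batch):
    """Mean cross-entropy over a batch, like tf.reduce_mean(tf.reduce_sum(...))."""
    total = sum(stable_cross_entropy(y, z) for y, z in zip(labels, logits_batch))
    return total / len(labels)


label = [0.0, 1.0]
print(stable_cross_entropy(label, [2.0, 1.0]))
print(stable_cross_entropy(label, [1000.0, 0.0]))  # 1000.0
try:
    naive_cross_entropy(label, [1000.0, 0.0])
except ValueError as err:
    print("naive version fails:", err)
```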

```python
# Optimizer: Adam with learning rate 0.0005
optimizer = tf.train.AdamOptimizer(learning_rate=0.0005).minimize(cost)
```
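`tf.train.AdamOptimizer` keeps running averages of each gradient and of its square, and scales every step by their ratio. The sketch below applies the standard Adam update to a single scalar, using TensorFlow's default `beta1=0.9`, `beta2=0.999` and `epsilon=1e-8`. TensorFlow folds the bias correction into the learning rate, so its epsilon enters slightly differently. The toy objective (w − 3)² and the larger learning rate 0.1 are assumptions chosen so the example converges quickly; they are not the article's settings:

```python
import math


def adam_minimize(grad, w0, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8, steps=1000):
    """Minimize a 1-D function given its gradient, using the standard Adam update."""
    w, m, v = w0, 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad(w)
        m = beta1 * m + (1 - beta1) * g       # running mean of gradients
        v = beta2 * v + (1 - beta2) * g * g   # running mean of squared gradients
        m_hat = m / (1 - beta1 ** t)          # bias correction for the zero start
        v_hat = v / (1 - beta2 ** t)
        w -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return w


# Toy problem (assumed for illustration): f(w) = (w - 3)^2, gradient 2 * (w - 3)
w_star = adam_minimize(lambda w: 2.0 * (w - 3.0), w0=0.0, lr=0.1, steps=1000)
print(round(w_star, 3))
```

Because of the ratio `m_hat / sqrt(v_hat)`, the first step has size close to `lr` regardless of the gradient's scale, which is why Adam's learning rate reads roughly as a per-step distance.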

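```python
# Start a session and initialize all variables
sess = tf.Session()
sess.run(tf.global_variables_initializer())

# Number of training epochs
training = 15

# Mini-batch size
batch_size = 100

# Iteratively update the parameters
for i in range(training):
    avg_cost = 0
    batch = int(mnist.train.num_examples / batch_size)  # number of batches per epoch
    for k in range(batch):
        # Features and labels of the next training batch
        batch_x, batch_y = mnist.train.next_batch(batch_size)
        c, _ = sess.run([cost, optimizer], feed_dict={x: batch_x, y: batch_y})
        avg_cost += c / batch
    print(i, c)  # cost of the last batch in this epoch (avg_cost holds the epoch average)
    cost_history.append(avg_cost)
```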
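Each epoch runs `int(num_examples / batch_size)` updates. With the default 5,000-image validation split, `mnist.train` holds 55,000 images, so that is 550 batches per epoch and 8,250 Adam steps over 15 epochs. Accumulating `c / batch` yields the epoch's mean batch cost, while the printed `c` is only the last batch's cost, which helps explain why the logged values fluctuate from epoch to epoch. A pure-Python sketch of that bookkeeping; `minibatch_indices` is a hypothetical stand-in for `mnist.train.next_batch`, not its actual implementation:

```python
import random


def minibatch_indices(num_examples, batch_size, rng):
    """Yield index lists for one epoch: shuffle, then cut into full batches.

    In this sketch any remainder (num_examples % batch_size) is dropped.
    """
    order = list(range(num_examples))
    rng.shuffle(order)
    for start in range(0, num_examples - batch_size + 1, batch_size):
        yield order[start:start + batch_size]


def epoch_average(batch_costs):
    """Mean batch cost, accumulated the same way as avg_cost += c / batch."""
    n = len(batch_costs)
    avg = 0.0
    for c in batch_costs:
        avg += c / n
    return avg


num_batches = int(55000 / 100)
print(num_batches, 15 * num_batches)  # 550 8250

batches = list(minibatch_indices(55000, 100, random.Random(0)))
print(len(batches), len(batches[0]))  # 550 100
print(epoch_average([0.4, 0.2, 0.3]))
```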

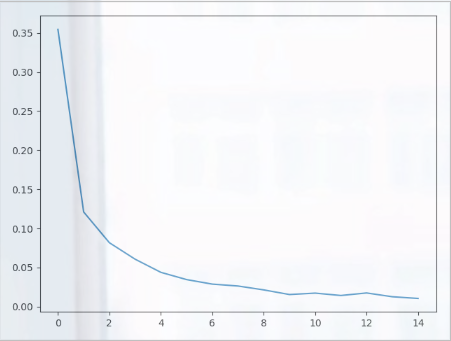

```python
# Plot the cost curve (one point per epoch)
plt.plot(cost_history)
plt.show()
```

```python
# Accuracy on the test set
accuracy = tf.reduce_mean(tf.cast(tf.equal(tf.argmax(a5, 1), tf.argmax(y, 1)), tf.float32))
print(sess.run(accuracy, feed_dict={x: mnist.test.images, y: mnist.test.labels}))
```
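The accuracy op compares the index of the largest predicted probability with the index of the 1 in each one-hot label, then averages the matches. On the 10,000-image MNIST test set, a printed accuracy of 0.9807 corresponds to 9,807 correct predictions. The same computation in pure Python, with hypothetical helper names and made-up predictions:

```python
def argmax(values):
    """Index of the largest value; the first such index on ties."""
    return max(range(len(values)), key=values.__getitem__)


def accuracy(predictions, labels):
    """Fraction of rows where argmax(prediction) == argmax(one-hot label)."""
    hits = sum(argmax(p) == argmax(y) for p, y in zip(predictions, labels))
    return hits / len(labels)


preds = [[0.1, 0.7, 0.2], [0.6, 0.3, 0.1], [0.2, 0.2, 0.6], [0.5, 0.4, 0.1]]
labels = [[0, 1, 0], [1, 0, 0], [0, 1, 0], [1, 0, 0]]
print(accuracy(preds, labels))  # 0.75
```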

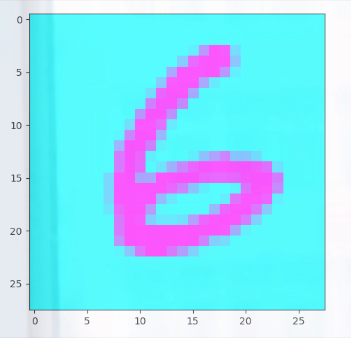

```python
import random

# Pick a random test image
r = random.randint(0, mnist.test.num_examples - 1)

# True label: index of the 1 in the one-hot label
print(sess.run(tf.argmax(mnist.test.labels[r:r+1], 1)))

# Predicted label: index of the largest softmax output
print(sess.run(tf.argmax(a5, 1), feed_dict={x: mnist.test.images[r:r+1]}))

# Show the image
plt.imshow(mnist.test.images[r:r+1].reshape(28, 28), cmap='cool')
plt.show()
```
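`mnist.test.labels[r:r+1]` is a 1×10 one-hot row, so `argmax` along axis 1 recovers the digit, and `reshape(28, 28)` restores the image rows that were flattened into 784 values. Both are plain array operations; NumPy's `np.argmax` would do the label lookup without adding a new `tf.argmax` op to the graph on every call. A pure-Python sketch of the reshape, using a hypothetical `to_rows` helper and a made-up image rather than real MNIST data:

```python
def to_rows(flat, width=28):
    """Split a flat, row-major pixel list back into rows of `width` pixels."""
    if len(flat) % width != 0:
        raise ValueError("length is not a multiple of width")
    return [flat[i:i + width] for i in range(0, len(flat), width)]


def render(rows, threshold=0.5):
    """Crude text rendering: '#' for bright pixels, '.' for dark ones."""
    return "\n".join("".join("#" if p > threshold else "." for p in row) for row in rows)


# Made-up 784-pixel image: a vertical bar in columns 13 and 14 (not real MNIST data)
flat = [1.0 if col in (13, 14) else 0.0 for _ in range(28) for col in range(28)]
rows = to_rows(flat)
print(len(rows), len(rows[0]))  # 28 28
print(render(rows))
```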

```text
0 0.28348652
1 0.03937961
2 0.01301315
3 0.10233385
4 0.05613676
5 0.028325982
6 0.07927568
7 0.046484355
8 0.0050286734
9 0.024036586
10 0.012736168
11 0.016650086
12 0.007918741
13 0.013027511
14 0.0048584314
0.9807
[6]
[6]
```

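Finally

That is all of 爱听歌大炮's recently collected and organized notes on Deep Learning BP Algorithm ③: Handwritten Digits with Five Hidden Layers from Low-Level Ops (TensorFlow). For more on this topic, search other articles on 靠谱客.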

This content was contributed by users or collected from the web for learning reference; copyright belongs to the original author.
