Training environment: NVIDIA GTX 1060 6 GB GPU

1. Download the cat/dog data from the official Kaggle website

I will upload the data to GitHub later; the demo project can also be downloaded from GitHub.

2. Split the data into a training set and a test set

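The downloaded data contains a trainset of 25,000 images, half cats and half dogs. Each image is labeled by its file name, "cat.x.jpg" or "dog.x.jpg". It also contains a testset of 15,000 images that carry no labels. The testset used here is therefore built by randomly selecting 20% of the trainset, with the following script: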

(File name: create_valset.py)

```python
# -*- coding:utf-8 -*-
# create_valset.py: copy a random 20% of the training images into valset/
__author__ = 'Zubin'

import os
import random
import shutil

root_dir = '/home/hzb/PycharmProjects/cat_dog/data_cat_dog/train/'  # adjust to your own data path
output_dir = '/home/hzb/PycharmProjects/cat_dog/data_cat_dog/valset/'

ref = 1
os.makedirs(output_dir, exist_ok=True)

for root, dirs, files in os.walk(root_dir):
    number_of_files = len(files)
    if number_of_files > ref:
        # Randomly pick 20% of the images. random.sample draws without replacement,
        # so no image is picked twice (random.choice in a loop can repeat files).
        ref_copy = int(round(0.2 * number_of_files))
        for chosen_one in random.sample(files, ref_copy):
            file_to_copy = os.path.join(root, chosen_one)
            # Files are copied, not moved, so they also remain in train/
            shutil.copy(file_to_copy, output_dir)
            print(file_to_copy)
    else:
        # Directories with at most `ref` files are copied in full
        for file_in_track in files:
            file_to_copy = os.path.join(root, file_in_track)
            shutil.copy(file_to_copy, output_dir)
            print(file_to_copy)

print('Finished !')
```
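create_valset.py copies the selected files instead of moving them, so the validation images also stay in train/. If train.txt is later built from that full directory, the validation accuracy may be optimistic.

Below is a minimal sketch of a leak-free alternative. It assumes only the Kaggle file-name convention `cat.N.jpg` / `dog.N.jpg`. The hypothetical helper `split_train_val` partitions the names into two disjoint lists, taking 20% of each class with a fixed seed. It does not touch the disk; moving the validation files (for example with `shutil.move`) would be a separate step.

```python
import random
from collections import defaultdict


def split_train_val(filenames, val_fraction=0.2, seed=0):
    """Partition Kaggle-style names ("cat.123.jpg", "dog.7.jpg") into disjoint
    train/val lists, taking val_fraction of each class (hypothetical helper)."""
    by_class = defaultdict(list)
    for name in filenames:
        by_class[name.split('.')[0]].append(name)  # class = prefix before the first '.'

    rng = random.Random(seed)  # fixed seed makes the split reproducible
    train, val = [], []
    for cls in sorted(by_class):
        names = sorted(by_class[cls])
        rng.shuffle(names)
        n_val = int(round(val_fraction * len(names)))
        val.extend(names[:n_val])
        train.extend(names[n_val:])
    return train, val


if __name__ == '__main__':
    files = ['cat.%d.jpg' % i for i in range(10)] + ['dog.%d.jpg' % i for i in range(10)]
    train, val = split_train_val(files)
    print(len(train), len(val))   # 16 4
    print(set(train) & set(val))  # set()
```

Splitting per class keeps the 50/50 cat/dog balance in both lists, which a single random draw over all 25,000 names would only approximate.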

3. Generate the trainset and testset txt label files

Shell scripts are used; run each one from a terminal in the corresponding directory.

1> Generate the trainset label file (file name: create_flielist.sh)

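```sh
#!/usr/bin/env sh
# Run from /home/hzb/PycharmProjects/cat_dog/caffes-and-dogs-master
DATA=input/train
WORK=input

echo "create train.txt..."
rm -rf $DATA/train.txt
# One "file_name label" line per image: cat -> 0, dog -> 1.
# The patterns are quoted so the shell does not expand them; cut -f3 keeps
# only the file name, which assumes DATA has exactly two path components.
find $DATA -name 'cat.*.jpg' | cut -d '/' -f3 | sed "s/$/ 0/" >> $DATA/train.txt
find $DATA -name 'dog.*.jpg' | cut -d '/' -f3 | sed "s/$/ 1/" >> $DATA/tmp.txt
cat $DATA/tmp.txt >> $DATA/train.txt
rm -rf $DATA/tmp.txt
mv $DATA/train.txt $WORK/
echo "Done..."
```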

2> Generate the testset label file (file name: create_flielist_test.sh)

```sh
#!/usr/bin/env sh
# Run from /home/hzb/PycharmProjects/cat_dog/caffes-and-dogs-master
DATA=input/test
WORK=input

echo "create test.txt......."
rm -rf $DATA/test.txt
# Same format as train.txt: cat -> 0, dog -> 1
find $DATA -name 'cat.*.jpg' | cut -d '/' -f3 | sed "s/$/ 0/" >> $DATA/test.txt
find $DATA -name 'dog.*.jpg' | cut -d '/' -f3 | sed "s/$/ 1/" >> $DATA/tmp.txt
cat $DATA/tmp.txt >> $DATA/test.txt
rm -rf $DATA/tmp.txt
mv $DATA/test.txt $WORK/
echo "Done........"
```
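For readers who prefer Python to find/cut/sed, here is a rough equivalent of the label-file scripts above. It makes the same assumptions:

- cats are labeled 0 and dogs 1;
- each image gets one `file_name label` line;
- cats are listed first.

`make_label_lines` and `write_label_file` are hypothetical names, not part of Caffe. `fnmatch` applies the same glob pattern as `find -name`. Sorting the names is a choice made here for reproducible output; `find` returns files in directory order.

```python
import fnmatch
import os

CLASS_IDS = {'cat': 0, 'dog': 1}  # same mapping as the shell scripts


def make_label_lines(file_names, class_ids=CLASS_IDS):
    """Build "file_name label" lines, one class after another (cats first)."""
    lines = []
    for prefix, label in sorted(class_ids.items(), key=lambda kv: kv[1]):
        pattern = prefix + '.*.jpg'  # the pattern passed to find -name
        matches = sorted(n for n in file_names if fnmatch.fnmatchcase(n, pattern))
        lines.extend('%s %d' % (n, label) for n in matches)
    return lines


def write_label_file(image_dir, out_path):
    """Write the label file for every matching image in image_dir."""
    with open(out_path, 'w') as f:
        for line in make_label_lines(os.listdir(image_dir)):
            f.write(line + '\n')


if __name__ == '__main__':
    print(make_label_lines(['dog.2.jpg', 'cat.1.jpg', 'train.txt']))
    # ['cat.1.jpg 0', 'dog.2.jpg 1']
```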

4. Convert the trainset and testset to LMDB format

1> trainset script (file name: create_lmdb_train.sh)

```sh
#!/usr/bin/env sh
# Run from /home/hzb/PycharmProjects/cat_dog
DATA=data_cat_dog
WORK=data_cat_dog
TOOLS=/home/hzb/caffe/build/tools

RESIZE=true
if $RESIZE; then
  RESIZE_HEIGHT=32
  RESIZE_WIDTH=32
else
  RESIZE_HEIGHT=0
  RESIZE_WIDTH=0
fi

echo "Creating train lmdb..."
# convert_imageset fails if the output DB directory already exists,
# so remove the one this script writes
rm -rf $DATA/img_train_lmdb

GLOG_logtostderr=1 $TOOLS/convert_imageset \
    --resize_height=$RESIZE_HEIGHT \
    --resize_width=$RESIZE_WIDTH \
    --shuffle \
    /home/hzb/PycharmProjects/cat_dog/data_cat_dog/train/ \
    $WORK/train.txt \
    $DATA/img_train_lmdb

echo "Done."
```

The generated img_train_lmdb directory contains the files data.mdb and lock.mdb.

2> testset script (file name: create_lmdb_test.sh)

```sh
#!/usr/bin/env sh
DATA=data_cat_dog
WORK=data_cat_dog
TOOLS=/home/hzb/caffe/build/tools

RESIZE=true
if $RESIZE; then
  # resize every image to 32x32 (a comment needs a space before '#' in sh)
  RESIZE_HEIGHT=32
  RESIZE_WIDTH=32
else
  RESIZE_HEIGHT=0
  RESIZE_WIDTH=0
fi

echo "Creating val lmdb..."
rm -rf $DATA/img_test_lmdb

GLOG_logtostderr=1 $TOOLS/convert_imageset \
    --resize_height=$RESIZE_HEIGHT \
    --resize_width=$RESIZE_WIDTH \
    --shuffle \
    /home/hzb/PycharmProjects/cat_dog/data_cat_dog/valset/ \
    $WORK/test.txt \
    $DATA/img_test_lmdb

echo "Done."
```

The generated img_test_lmdb directory contains the files data.mdb and lock.mdb.
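As far as I know, convert_imageset logs an error and skips any image it cannot open. A path mistake in train.txt or test.txt can therefore quietly produce a smaller DB than expected.

A small pre-check can catch bad lines before conversion. The sketch below assumes the list format from step 3 (`<file name> <label>`, labels 0 or 1). `check_list_lines` is a hypothetical helper. The `exists` argument defaults to `os.path.isfile` and is injectable so the function can be tried without real files.

```python
import os


def check_list_lines(lines, root_dir, allowed_labels=(0, 1), exists=os.path.isfile):
    """Return human-readable problems found in "<file name> <label>" lines."""
    problems = []
    for lineno, line in enumerate(lines, 1):
        line = line.strip()
        if not line:
            continue  # ignore blank lines
        path, _, label = line.rpartition(' ')
        if not path or not label.lstrip('-').isdigit():
            problems.append('line %d: malformed: %r' % (lineno, line))
            continue
        if int(label) not in allowed_labels:
            problems.append('line %d: unexpected label %s' % (lineno, label))
        if not exists(os.path.join(root_dir, path)):
            problems.append('line %d: missing file %s' % (lineno, path))
    return problems


if __name__ == '__main__':
    # Example: check the training list against the training image folder
    root = '/home/hzb/PycharmProjects/cat_dog/data_cat_dog/train/'
    list_file = 'data_cat_dog/train.txt'
    if os.path.isfile(list_file):
        with open(list_file) as f:
            for problem in check_list_lines(f, root):
                print(problem)
```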

5. Compute the mean image of the trainset

The images are normalized as (image - mean)/255. Create the mean_file folder in the corresponding directory beforehand.

```sh
#!/usr/bin/env sh
# Compute the mean image from the training lmdb
TOOLS=/home/hzb/caffe/build/tools
DATA=/home/hzb/PycharmProjects/cat_dog

mkdir -p $DATA/data_cat_dog/mean_file
rm -rf $DATA/data_cat_dog/mean_file/mean.binaryproto
$TOOLS/compute_image_mean $DATA/data_cat_dog/img_train_lmdb \
    $DATA/data_cat_dog/mean_file/mean.binaryproto

echo "Done."
```
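The normalization can be made concrete with a tiny worked example. compute_image_mean stores a per-pixel mean over all training images. When `transform_param` sets `mean_file` and `scale`, Caffe's data layer computes (pixel - mean) * scale.

That transform_param is not shown in this post, so the scale of 1/255 below is an assumption matching the (image - mean)/255 formula. The sketch uses short lists of pixel values instead of real images.

```python
def compute_mean_image(images):
    """Per-pixel mean over equally sized images, each given as a flat list."""
    count = len(images)
    return [sum(values) / count for values in zip(*images)]


def normalize(image, mean, scale=1.0 / 255):
    """Caffe-style transform, pixel by pixel: (pixel - mean) * scale."""
    return [(x - m) * scale for x, m in zip(image, mean)]


images = [[0, 255, 100], [255, 255, 50]]  # two toy 3-pixel "images"
mean = compute_mean_image(images)
print(mean)                        # [127.5, 255.0, 75.0]
print(normalize(images[0], mean))  # first value is about -0.5
```

After subtraction and scaling, the pixel values are centered near zero and fall roughly within [-1, 1].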

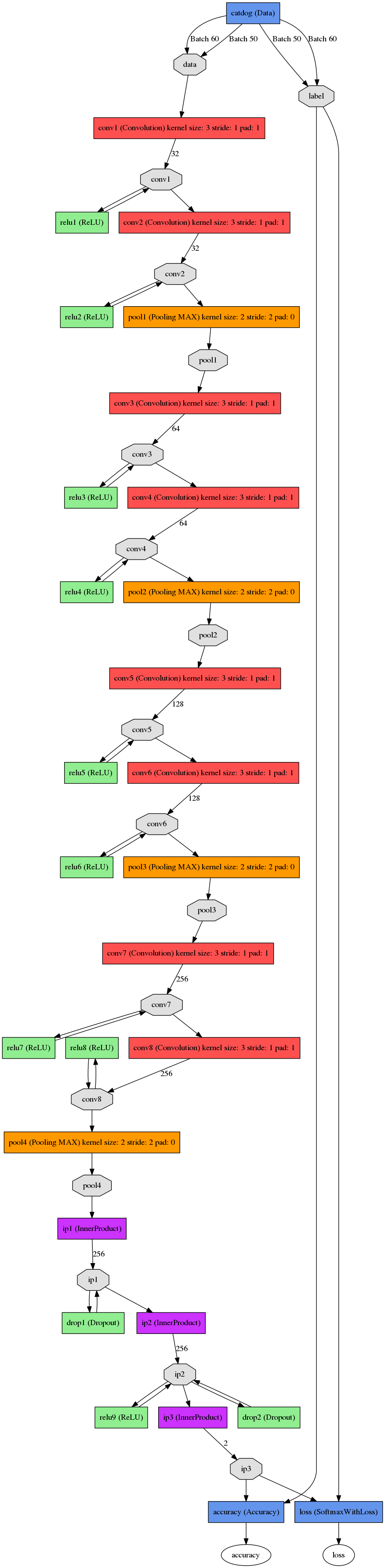

Network structure

```protobuf
layer {
  name: "conv1"
  type: "Convolution"
  bottom: "data"
  top: "conv1"
  param {
    lr_mult: 1.0  # layer-local learning-rate multiplier (weights)
    # no penalty coefficient (decay_mult) is set here
  }
  param { lr_mult: 2.0 }  # biases
  convolution_param {
    num_output: 32
    pad: 1
    kernel_size: 3
    stride: 1
    weight_filler { type: "xavier" }
    bias_filler { type: "constant" }
  }
}
layer {
  name: "relu1"
  type: "ReLU"
  bottom: "conv1"
  top: "conv1"
}
layer {
  name: "conv2"
  type: "Convolution"
  bottom: "conv1"
  top: "conv2"
  param { lr_mult: 1.0 }
  param { lr_mult: 2.0 }
  convolution_param {
    num_output: 32
    pad: 1
    kernel_size: 3
    stride: 1
    weight_filler { type: "xavier" }
    bias_filler { type: "constant" }
  }
}
layer {
  name: "relu2"
  type: "ReLU"
  bottom: "conv2"
  top: "conv2"
}
layer {
  name: "pool1"
  type: "Pooling"
  bottom: "conv2"
  top: "pool1"
  pooling_param {
    pool: MAX
    kernel_size: 2
    stride: 2
  }
}
layer {
  name: "conv3"
  type: "Convolution"
  bottom: "pool1"
  top: "conv3"
  param { lr_mult: 1.0 }
  param { lr_mult: 2.0 }
  convolution_param {
    num_output: 64
    pad: 1
    kernel_size: 3
    stride: 1
    weight_filler { type: "xavier" }
    bias_filler { type: "constant" }
  }
}
layer {
  name: "relu3"
  type: "ReLU"
  bottom: "conv3"
  top: "conv3"
}
layer {
  name: "conv4"
  type: "Convolution"
  bottom: "conv3"
  top: "conv4"
  param { lr_mult: 1.0 }
  param { lr_mult: 2.0 }
  convolution_param {
    num_output: 64
    pad: 1
    kernel_size: 3
    stride: 1
    weight_filler { type: "xavier" }
    bias_filler { type: "constant" }
  }
}
layer {
  name: "relu4"
  type: "ReLU"
  bottom: "conv4"
  top: "conv4"
}
layer {
  name: "pool2"
  type: "Pooling"
  bottom: "conv4"
  top: "pool2"
  pooling_param {
    pool: MAX
    kernel_size: 2
    stride: 2
  }
}
layer {
  name: "conv5"
  type: "Convolution"
  bottom: "pool2"
  top: "conv5"
  param { lr_mult: 1.0 }
  param { lr_mult: 2.0 }
  convolution_param {
    num_output: 128
    pad: 1
    kernel_size: 3
    stride: 1
    weight_filler { type: "xavier" }
    bias_filler { type: "constant" }
  }
}
layer {
  name: "relu5"
  type: "ReLU"
  bottom: "conv5"
  top: "conv5"
}
layer {
  name: "conv6"
  type: "Convolution"
  bottom: "conv5"
  top: "conv6"
  param { lr_mult: 1.0 }
  param { lr_mult: 2.0 }
  convolution_param {
    num_output: 128
    pad: 1
    kernel_size: 3
    stride: 1
    weight_filler { type: "xavier" }
    bias_filler { type: "constant" }
  }
}
layer {
  name: "relu6"
  type: "ReLU"
  bottom: "conv6"
  top: "conv6"
}
layer {
  name: "pool3"
  type: "Pooling"
  bottom: "conv6"
  top: "pool3"
  pooling_param {
    pool: MAX
    kernel_size: 2
    stride: 2
  }
}
layer {
  name: "conv7"
  type: "Convolution"
  bottom: "pool3"
  top: "conv7"
  param { lr_mult: 1.0 }
  param { lr_mult: 2.0 }
  convolution_param {
    num_output: 256
    pad: 1
    kernel_size: 3
    stride: 1
    weight_filler { type: "xavier" }
    bias_filler { type: "constant" }
  }
}
layer {
  name: "relu7"
  type: "ReLU"
  bottom: "conv7"
  top: "conv7"
}
layer {
  name: "conv8"
  type: "Convolution"
  bottom: "conv7"
  top: "conv8"
  param { lr_mult: 1.0 }
  param { lr_mult: 2.0 }
  convolution_param {
    num_output: 256
    pad: 1
    kernel_size: 3
    stride: 1
    weight_filler { type: "xavier" }
    bias_filler { type: "constant" }
  }
}
layer {
  name: "relu8"
  type: "ReLU"
  bottom: "conv8"
  top: "conv8"
}
layer {
  name: "pool4"
  type: "Pooling"
  bottom: "conv8"
  top: "pool4"
  pooling_param {
    pool: MAX
    kernel_size: 2
    stride: 2
  }
}
layer {
  name: "ip1"
  type: "InnerProduct"
  bottom: "pool4"
  top: "ip1"
  param { lr_mult: 1.0 }
  param { lr_mult: 2.0 }
  inner_product_param {
    num_output: 256
    weight_filler { type: "xavier" }
    bias_filler { type: "constant" }
  }
}
layer {
  name: "drop1"
  type: "Dropout"
  bottom: "ip1"
  top: "ip1"
  dropout_param { dropout_ratio: 0.5 }
}
layer {
  name: "ip2"
  type: "InnerProduct"
  bottom: "ip1"
  top: "ip2"
  param { lr_mult: 1.0 }
  param { lr_mult: 2.0 }
  inner_product_param {
    num_output: 256
    weight_filler { type: "xavier" }
    bias_filler { type: "constant" }
  }
}
layer {
  name: "relu9"
  type: "ReLU"
  bottom: "ip2"
  top: "ip2"
}
layer {
  name: "drop2"
  type: "Dropout"
  bottom: "ip2"
  top: "ip2"
  dropout_param { dropout_ratio: 0.5 }
}
layer {
  name: "ip3"
  type: "InnerProduct"
  bottom: "ip2"
  top: "ip3"
  param { lr_mult: 1.0 }
  param { lr_mult: 2.0 }
  inner_product_param {
    num_output: 2  # two classes: cat, dog
    weight_filler { type: "xavier" }
    bias_filler { type: "constant" }
  }
}
layer {
  name: "accuracy"
  type: "Accuracy"
  bottom: "ip3"
  bottom: "label"
  top: "accuracy"
  include { phase: TEST }
}
layer {
  name: "loss"
  type: "SoftmaxWithLoss"
  bottom: "ip3"
  bottom: "label"
  top: "loss"
}
```
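One way to sanity-check the architecture is to trace the blob sizes by hand. This sketch assumes a 3-channel 32×32 input with no cropping, matching the size set in the LMDB scripts, because the data layer is not part of the listing above.

Every 3×3 convolution with pad 1 and stride 1 keeps the spatial size. Each 2×2, stride-2 max pool halves it, so the size goes 32 → 16 → 8 → 4 → 2. Caffe rounds the pooling output size up, while convolution rounds down. `trace_shapes` is a hypothetical helper written for this check.

```python
import math


def conv_out(size, kernel, pad=0, stride=1):
    # Caffe convolution output size: floor((size + 2*pad - kernel) / stride) + 1
    return (size + 2 * pad - kernel) // stride + 1


def pool_out(size, kernel, pad=0, stride=1):
    # Caffe pooling output size rounds up: ceil((size + 2*pad - kernel) / stride) + 1
    return int(math.ceil((size + 2 * pad - kernel) / float(stride))) + 1


def trace_shapes(size=32, in_channels=3):
    """Follow spatial size and parameter count through the network above."""
    channels, params = in_channels, 0
    for out_ch in [32, 64, 128, 256]:  # num_output of the two convs in each block
        for _ in range(2):  # two 3x3, pad 1, stride 1 convolutions per block
            size = conv_out(size, kernel=3, pad=1, stride=1)
            params += 3 * 3 * channels * out_ch + out_ch  # weights + biases
            channels = out_ch
        size = pool_out(size, kernel=2, stride=2)  # 2x2 max pooling, stride 2
    flat = channels * size * size  # input length of ip1
    for n_in, n_out in [(flat, 256), (256, 256), (256, 2)]:  # ip1, ip2, ip3
        params += n_in * n_out + n_out
    return (channels, size, size), flat, params


print(trace_shapes())  # ((256, 2, 2), 1024, 1500962)
```

Under these assumptions, pool4 outputs a 256×2×2 blob, so ip1 sees 1,024 inputs. The whole network has about 1.5 million learnable parameters.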

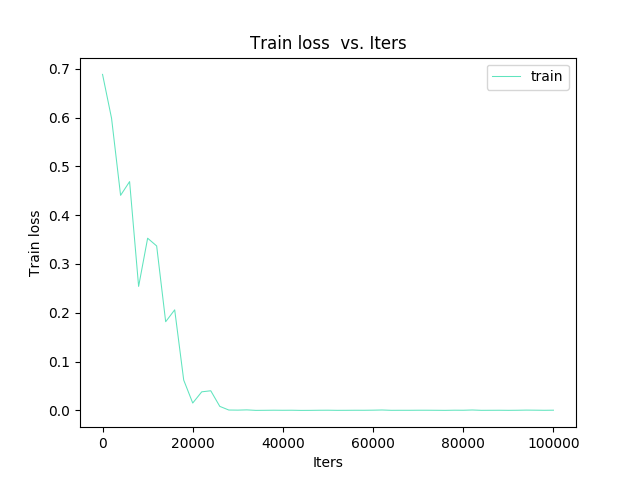

Solver parameters

net: "/home/hzb/PycharmProjects/cat_dog/train_test.prototxt"

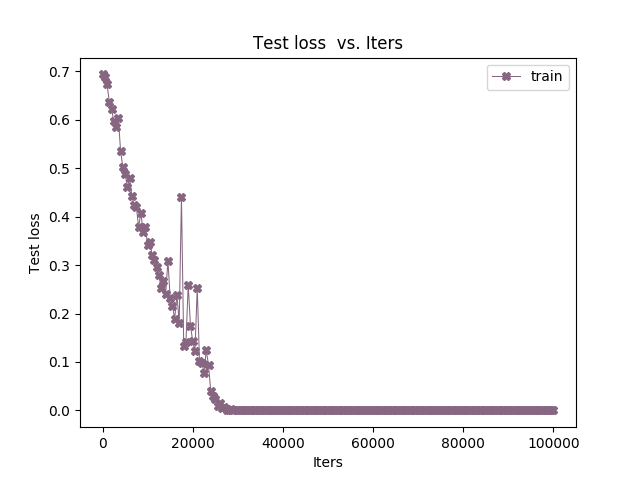

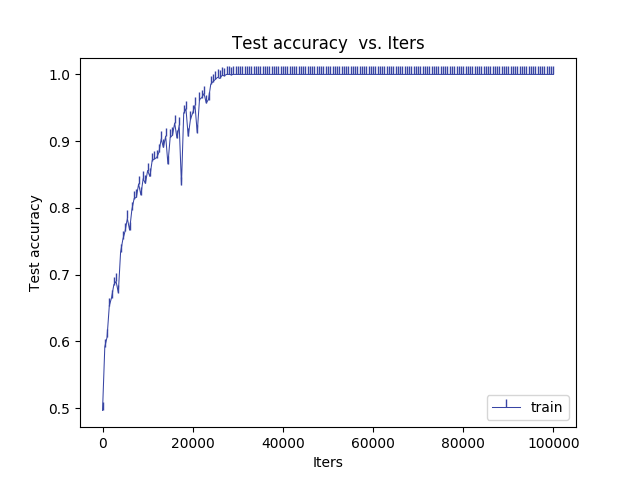

test_iter: 50

test_interval: 500#迭代500次测试一次

base_lr:0.001

lr_policy: "inv"

gamma:0.0001

max_iter:100000#最大迭代次数为100000次

momentum:0.9

weight_decay: 0.0005

power:0.75

display: 2000#迭代2000次打印一次输出信息

snapshot:5000#迭代5000次保存一下模型

snapshot_prefix: "/home/hzb/PycharmProjects/cat_dog/model"#模型保存的路径

solver_mode: GPU
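With `lr_policy: "inv"`, Caffe computes the learning rate as base_lr * (1 + gamma * iter)^(-power). The sketch below evaluates this with the solver values above.

It also includes a hypothetical helper, `iters_to_cover`, for choosing test_iter, since test_iter times the TEST-phase batch_size should cover the whole validation set. The batch size is set in the data layer, which is not shown, so the value of 100 below is only an assumption: 5,000 validation images (20% of 25,000) / 100 gives 50 iterations.

```python
import math


def inv_lr(iteration, base_lr=0.001, gamma=0.0001, power=0.75):
    """Caffe "inv" policy: base_lr * (1 + gamma * iter) ** (-power)."""
    return base_lr * (1.0 + gamma * iteration) ** (-power)


def iters_to_cover(num_images, batch_size):
    """Smallest test_iter such that test_iter * batch_size >= num_images."""
    return int(math.ceil(num_images / float(batch_size)))


for it in (0, 10000, 50000, 100000):  # 100000 = max_iter
    print(it, inv_lr(it))

print(iters_to_cover(5000, 100))  # 50, assuming a TEST batch_size of 100
```

With these values, the rate falls to about 5.9e-4 by iteration 10,000. At max_iter it is roughly 1.7e-4, about a sixth of base_lr.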

6. Final step: training

The training log is saved to a file. The path after the tee command is where the log will be written; change it to the location you want.

```sh
# Run from the Caffe root directory (/home/hzb/caffe)
./build/tools/caffe train --solver=/home/hzb/PycharmProjects/cat_dog/solver.prototxt 2>&1 | tee /home/hzb/PycharmProjects/cat_dog/train.log
```
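Because `snapshot: 5000` is set, an interrupted run can be resumed. Caffe writes `<snapshot_prefix>_iter_<N>.caffemodel` and `<snapshot_prefix>_iter_<N>.solverstate` files. `caffe train` accepts `--snapshot=<file>.solverstate` to continue from one of them.

Below is a minimal sketch that picks the newest solver state and builds the command. `pick_latest` and `build_train_command` are hypothetical helpers. Sorting by the parsed iteration number matters because a plain string sort would put `_iter_45000` before `_iter_5000`.

```python
import glob
import re

_ITER_RE = re.compile(r'_iter_(\d+)\.solverstate$')


def pick_latest(paths):
    """Return the .solverstate path with the highest iteration number, or None."""
    best, best_iter = None, -1
    for path in paths:
        m = _ITER_RE.search(path)
        if m and int(m.group(1)) > best_iter:
            best, best_iter = path, int(m.group(1))
    return best


def build_train_command(caffe_bin, solver, solverstate=None):
    """Argument list for `caffe train`, resuming when a solver state is given."""
    cmd = [caffe_bin, 'train', '--solver=' + solver]
    if solverstate is not None:
        cmd.append('--snapshot=' + solverstate)  # resume from this solver state
    return cmd


if __name__ == '__main__':
    prefix = '/home/hzb/PycharmProjects/cat_dog/model'  # snapshot_prefix from the solver
    state = pick_latest(glob.glob(prefix + '_iter_*.solverstate'))
    print(build_train_command('./build/tools/caffe',
                              '/home/hzb/PycharmProjects/cat_dog/solver.prototxt',
                              state))
```

The resulting list can be passed to `subprocess.run` from the Caffe root. The output still needs to be captured to train.log, as in the command above, for the plotting step.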

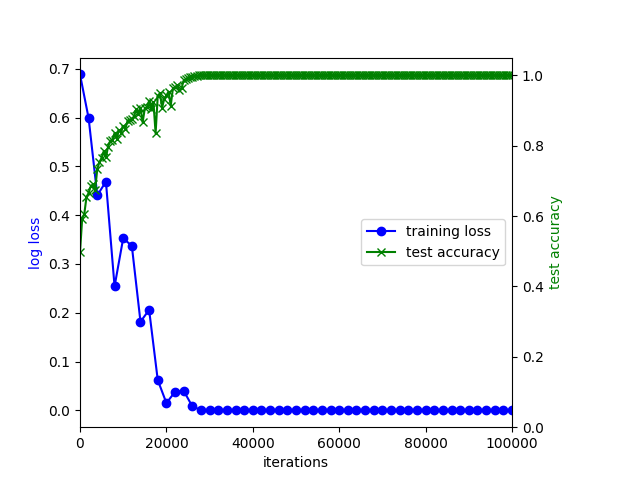

After training, plot the training loss and test accuracy from the log.

### Script to plot the accuracy and loss curves

```python
# Plot training loss and test accuracy from the Caffe training log
import re

import matplotlib.pyplot as plt
from mpl_toolkits.axes_grid1 import host_subplot

train_iterations = []
train_loss = []
test_iterations = []
test_accuracy = []

# read the log file
with open('/home/hzb/PycharmProjects/cat_dog/train.log', 'r') as fp:
    for ln in fp:
        # get train_iterations and train_loss
        if '] Iteration ' in ln and 'loss = ' in ln:
            arr = re.findall(r'ion \d+', ln)
            train_iterations.append(int(arr[0][4:]))
            train_loss.append(float(ln.strip().split(' = ')[-1]))
        # get test_iterations
        if '] Iteration' in ln and 'Testing net (#0)' in ln:
            arr = re.findall(r'ion \b\d+\b,', ln)
            test_iterations.append(int(arr[0].strip(',')[4:]))
        # get test_accuracy
        if '#0:' in ln and 'accuracy =' in ln:
            test_accuracy.append(float(ln.strip().split(' = ')[-1]))

host = host_subplot(111)
plt.subplots_adjust(right=0.8)  # adjust the right boundary of the plot window
par1 = host.twinx()

# set labels
host.set_xlabel("iterations")
host.set_ylabel("log loss")
par1.set_ylabel("test accuracy")

# plot curves
p1, = host.plot(train_iterations, train_loss, 'ob-', label="training loss")
p2, = par1.plot(test_iterations, test_accuracy, 'xg-', label="test accuracy")
# p3, = par1.plot(test_iterations, test_accuracy, 'xg-', label="validation accuracy")

# set location of the legend:
# 1 -> upper right, 2 -> upper left, 3 -> lower left,
# 4 -> lower right, 5 -> right, ...
host.legend(handles=[p1, p2], loc=5)

# color each y-axis label like its curve
host.yaxis.label.set_color(p1.get_color())
par1.yaxis.label.set_color(p2.get_color())

# x range of host (0 to max_iter) and y range of par1
host.set_xlim([0, 100000])
par1.set_ylim([0., 1.05])

plt.draw()
plt.show()
```
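The parsing logic can also be pulled into a function so it can be checked without a real log. This sketch uses a hypothetical `parse_caffe_log` helper. It looks for the same substrings as the script above but reads the iteration number from a regex group instead of slicing the match.

The sample lines in the usage are illustrative. They were written to match those substrings, not copied from an actual train.log, and Caffe's exact log wording may differ between versions.

```python
import re


def parse_caffe_log(lines):
    """Return (train_iters, train_loss, test_iters, test_acc) from log lines."""
    train_it, train_loss, test_it, test_acc = [], [], [], []
    for ln in lines:
        if '] Iteration ' in ln and 'loss = ' in ln:
            train_it.append(int(re.search(r'Iteration (\d+)', ln).group(1)))
            train_loss.append(float(ln.strip().split(' = ')[-1]))
        if '] Iteration' in ln and 'Testing net (#0)' in ln:
            test_it.append(int(re.search(r'Iteration (\d+),', ln).group(1)))
        if '#0:' in ln and 'accuracy =' in ln:
            test_acc.append(float(ln.strip().split(' = ')[-1]))
    return train_it, train_loss, test_it, test_acc


if __name__ == '__main__':
    sample = [  # illustrative lines shaped like the patterns above
        'I0101 10:00:00.000000  1234 solver.cpp:1] Iteration 500, Testing net (#0)',
        'I0101 10:00:01.000000  1234 solver.cpp:2]     Test net output #0: accuracy = 0.62',
        'I0101 10:00:02.000000  1234 solver.cpp:3] Iteration 2000, loss = 0.58',
    ]
    print(parse_caffe_log(sample))  # ([2000], [0.58], [500], [0.62])
```

With the real log, the function can take the open file object directly, e.g. `parse_caffe_log(open('train.log'))`.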

[GitHub code](https://github.com/ZubinHuang/cat-VS-dog)

[Reference](https://blog.csdn.net/mdjxy63/article/details/78946455)
