我是靠谱客的博主 直率蜗牛,这篇文章主要介绍视觉SLAM十四讲CH7课后习题10_1转载于:视觉SLAM十四讲(第二版)第7讲习题解答 - 知乎大家好,这里是Philip~最近在学习高博的《视觉SLAM十四讲》(第二版),以下是对第7讲习题的解答,如有错误或不全面的地方还请大家指正。 1. 除了本书介绍的ORB特征点,你还能找到哪些特征点?请说说SIFT或SURF的…https://zhuanlan.zhihu.com/p/38942086410. *在Ceres 中实现PnP 和ICP 的优化。 10icp.cpp:执行结果:,现在分享给大家,希望可以做个参考。

转载于:视觉SLAM十四讲(第二版)第7讲习题解答 - 知乎大家好,这里是Philip~最近在学习高博的《视觉SLAM十四讲》(第二版),以下是对第7讲习题的解答,如有错误或不全面的地方还请大家指正。 1. 除了本书介绍的ORB特征点,你还能找到哪些特征点?请说说SIFT或SURF的… https://zhuanlan.zhihu.com/p/38942086410. *在Ceres 中实现PnP 和ICP 的优化。

https://zhuanlan.zhihu.com/p/38942086410. *在Ceres 中实现PnP 和ICP 的优化。

代码链接:https://github.com/Philipcjh/HW-of-SLAMBOOK2/blob/main/hw7/p10PnP.cpp

在原作者的基础上我加了CMakeLists.txt文件,方便大家能够在Ubuntu上编译执行。具体的代码注释没有写,如果有大神写完了,到时候可以私信我,把他分享给我,我将感激不尽。

10pnp.cpp:

#include <iostream>

#include <opencv2/core/core.hpp>

#include <opencv2/features2d/features2d.hpp>

#include <opencv2/highgui/highgui.hpp>

#include <opencv2/calib3d/calib3d.hpp>

#include <ceres/ceres.h>

#include <ceres/rotation.h>

#include <Eigen/Core>

#include <Eigen/Geometry>

#include "sophus/se3.hpp"

#include <chrono>

using namespace std;

using namespace Eigen;

using namespace cv;

void find_feature_matches(

const Mat &img_1, const Mat &img_2,

std::vector<KeyPoint> &keypoints_1,

std::vector<KeyPoint> &keypoints_2,

std::vector<DMatch> &matches);

// 像素坐标转相机归一化坐标

Point2d pixel2cam(const Point2d &p, const Mat &K);

//Ref:http://www.ceres-solver.org/nnls_tutorial.html#bundle-adjustment

struct PnPReprojectionError {

PnPReprojectionError(Point2f pts_2d, Point3f pts_3d)

: _pts_2d(pts_2d), _pts_3d(pts_3d) {}

template <typename T>

bool operator()(const T* const rotation_vector,

const T* const translation_vector,

T* residuals) const {

T p_transformed[3], p_origin[3];

p_origin[0]=T(_pts_3d.x);

p_origin[1]=T(_pts_3d.y);

p_origin[2]=T(_pts_3d.z);

ceres::AngleAxisRotatePoint(rotation_vector, p_origin, p_transformed);

//旋转后加上平移向量

p_transformed[0] += translation_vector[0];

p_transformed[1] += translation_vector[1];

p_transformed[2] += translation_vector[2];

//归一化

T xp = p_transformed[0] / p_transformed[2];

T yp = p_transformed[1] / p_transformed[2];

double fx=520.9, fy=521.0, cx=325.1, cy=249.7;

// Compute final projected point position.

T predicted_x = fx * xp + cx;

T predicted_y = fy * yp + cy;

// The error is the difference between the predicted and observed position.

residuals[0] = T(_pts_2d.x) - predicted_x;

residuals[1] = T(_pts_2d.y) - predicted_y;

return true;

}

// 2,3,3指输出维度(residuals)为2

//待优化变量(rotation_vector,translation_vector)维度分别为3

static ceres::CostFunction* Create(const Point2f _pts_2d,

const Point3f _pts_3d) {

return (new ceres::AutoDiffCostFunction<PnPReprojectionError, 2, 3, 3>(

new PnPReprojectionError(_pts_2d, _pts_3d)));

}

Point2f _pts_2d;

Point3f _pts_3d;

};

// 通过引入Sophus库简化计算,并使用雅克比矩阵的解析解代替自动求导

class PnPSE3ReprojectionError : public ceres::SizedCostFunction<2, 6> {

public:

EIGEN_MAKE_ALIGNED_OPERATOR_NEW

PnPSE3ReprojectionError(Eigen::Vector2d pts_2d, Eigen::Vector3d pts_3d) :

_pts_2d(pts_2d), _pts_3d(pts_3d) {}

virtual ~PnPSE3ReprojectionError() {}

virtual bool Evaluate(

double const* const* parameters, double *residuals, double **jacobians) const {

Eigen::Map<const Eigen::Matrix<double,6,1>> se3(*parameters);

Sophus::SE3d T = Sophus::SE3d::exp(se3);

Eigen::Vector3d Pc = T * _pts_3d;

Eigen::Matrix3d K;

double fx = 520.9, fy = 521.0, cx = 325.1, cy = 249.7;

K << fx, 0, cx,

0, fy, cy,

0, 0, 1;

Eigen::Vector2d error = _pts_2d - (K * Pc).hnormalized();

residuals[0] = error[0];

residuals[1] = error[1];

if(jacobians != NULL) {

if(jacobians[0] != NULL) {

Eigen::Map<Eigen::Matrix<double, 2, 6, Eigen::RowMajor>> J(jacobians[0]);

double x = Pc[0];

double y = Pc[1];

double z = Pc[2];

double x2 = x*x;

double y2 = y*y;

double z2 = z*z;

//雅克比矩阵推导看书187页公式(7.46)

J(0,0) = -fx/z;

J(0,1) = 0;

J(0,2) = fx*x/z2;

J(0,3) = fx*x*y/z2;

J(0,4) = -fx-fx*x2/z2;

J(0,5) = fx*y/z;

J(1,0) = 0;

J(1,1) = -fy/z;

J(1,2) = fy*y/z2;

J(1,3) = fy+fy*y2/z2;

J(1,4) = -fy*x*y/z2;

J(1,5) = -fy*x/z;

}

}

return true;

}

private:

const Eigen::Vector2d _pts_2d;

const Eigen::Vector3d _pts_3d;

};

int main(int argc, char **argv){

if (argc != 5) {

cout << "usage: pose_estimation_3d2d img1 img2 depth1 depth2" << endl;

return 1;

}

//-- 读取图像

Mat img_1 = imread(argv[1], CV_LOAD_IMAGE_COLOR);

Mat img_2 = imread(argv[2], CV_LOAD_IMAGE_COLOR);

assert(img_1.data && img_2.data && "Can not load images!");

vector<KeyPoint> keypoints_1, keypoints_2;

vector<DMatch> matches;

find_feature_matches(img_1, img_2, keypoints_1, keypoints_2, matches);

cout << "一共找到了" << matches.size() << "组匹配点" << endl;

// 建立3D点

Mat d1 = imread(argv[3], CV_LOAD_IMAGE_UNCHANGED); // 深度图为16位无符号数,单通道图像

Mat K = (Mat_<double>(3, 3) << 520.9, 0, 325.1, 0, 521.0, 249.7, 0, 0, 1);

vector<Point3f> pts_3d;

vector<Point2f> pts_2d;

vector<Vector3d> pts_3d_eigen;

vector<Vector2d> pts_2d_eigen;

for (DMatch m:matches) {

ushort d = d1.ptr<unsigned short>(int(keypoints_1[m.queryIdx].pt.y))[int(keypoints_1[m.queryIdx].pt.x)];

if (d == 0) // bad depth

continue;

float dd = d / 5000.0;

Point2d p1 = pixel2cam(keypoints_1[m.queryIdx].pt, K);

pts_3d.push_back(Point3f(p1.x * dd, p1.y * dd, dd));//第一个相机观察到的3D点坐标

pts_2d.push_back(keypoints_2[m.trainIdx].pt);//特征点在第二个相机中的投影

pts_3d_eigen.push_back(Vector3d(p1.x * dd, p1.y * dd, dd));

pts_2d_eigen.push_back(Vector2d(keypoints_2[m.trainIdx].pt.x, keypoints_2[m.trainIdx].pt.y));

}

cout << "3d-2d pairs: " << pts_3d.size() << endl;

chrono::steady_clock::time_point t1 = chrono::steady_clock::now();

Mat r, t;

solvePnP(pts_3d, pts_2d, K, Mat(), r, t, false); // 调用OpenCV 的 PnP 求解,可选择EPNP,DLS等方法

Mat R;

cv::Rodrigues(r, R); // r为旋转向量形式,用Rodrigues公式转换为矩阵

chrono::steady_clock::time_point t2 = chrono::steady_clock::now();

chrono::duration<double> time_used = chrono::duration_cast<chrono::duration<double>>(t2 - t1);

cout << "solve pnp in opencv cost time: " << time_used.count() << " seconds." << endl;

cout << "R=" << endl << R << endl;

cout << "t=" << endl << t << endl;

cout << endl;

//ceres求解PnP, 使用自动求导

cout << "以下是ceres求解(自动求导)" << endl;

double r_ceres[3]={0,0,0};

double t_ceres[3]={0,0,0};

ceres::Problem problem;

for (size_t i = 0; i < pts_2d.size(); ++i) {

ceres::CostFunction* cost_function =

PnPReprojectionError::Create(pts_2d[i],pts_3d[i]);

problem.AddResidualBlock(cost_function,

nullptr /* squared loss */,

r_ceres,

t_ceres);

}

t1 = chrono::steady_clock::now();

ceres::Solver::Options options;

options.linear_solver_type = ceres::DENSE_SCHUR;

options.minimizer_progress_to_stdout = true;

ceres::Solver::Summary summary;

ceres::Solve(options, &problem, &summary);

std::cout << summary.BriefReport() << "n";

Mat r_ceres_cv=(Mat_<double>(3, 1) <<r_ceres[0], r_ceres[1], r_ceres[2]);

Mat t_ceres_cv=(Mat_<double>(3, 1) <<t_ceres[0], t_ceres[1], t_ceres[2]);

cv::Rodrigues(r_ceres_cv, R);

cout << "R=" << endl << R << endl;

cout << "t=" << endl << t_ceres_cv << endl;

t2 = chrono::steady_clock::now();

time_used = chrono::duration_cast<chrono::duration<double>>(t2 - t1);

cout << "solve pnp in ceres cost time: " << time_used.count() << " seconds." << endl<< endl;

//ceres求解PnP, 使用雅克比矩阵的解析解

cout << "以下是ceres求解(雅克比矩阵给出解析解)" << endl;

Sophus::Vector6d se3;

se3<<0,0,0,0,0,0;// 初始化非常重要

ceres::Problem problem_;

for(int i=0; i<pts_3d_eigen.size(); ++i) {

ceres::CostFunction *cost_function;

cost_function = new PnPSE3ReprojectionError(pts_2d_eigen[i], pts_3d_eigen[i]);

problem_.AddResidualBlock(cost_function, NULL, se3.data());

}

t1 = chrono::steady_clock::now();

ceres::Solver::Options options_;

options_.dynamic_sparsity = true;

options_.max_num_iterations = 100;

options_.sparse_linear_algebra_library_type = ceres::SUITE_SPARSE;

options_.minimizer_type = ceres::TRUST_REGION;

options_.linear_solver_type = ceres::SPARSE_NORMAL_CHOLESKY;

options_.trust_region_strategy_type = ceres::DOGLEG;

options_.minimizer_progress_to_stdout = true;

options_.dogleg_type = ceres::SUBSPACE_DOGLEG;

ceres::Solver::Summary summary_;

ceres::Solve(options_, &problem_, &summary_);

std::cout << summary_.BriefReport() << "n";

std::cout << "estimated pose: n" << Sophus::SE3d::exp(se3).matrix() << std::endl;

t2 = chrono::steady_clock::now();

time_used = chrono::duration_cast<chrono::duration<double>>(t2 - t1);

cout << "solve pnp in ceres cost time: " << time_used.count() << " seconds." << endl;

return 0;

}

void find_feature_matches(const Mat &img_1, const Mat &img_2,

std::vector<KeyPoint> &keypoints_1,

std::vector<KeyPoint> &keypoints_2,

std::vector<DMatch> &matches) {

//-- 初始化

Mat descriptors_1, descriptors_2;

// used in OpenCV3

Ptr<FeatureDetector> detector = ORB::create();

Ptr<DescriptorExtractor> descriptor = ORB::create();

// use this if you are in OpenCV2

// Ptr<FeatureDetector> detector = FeatureDetector::create ( "ORB" );

// Ptr<DescriptorExtractor> descriptor = DescriptorExtractor::create ( "ORB" );

Ptr<DescriptorMatcher> matcher = DescriptorMatcher::create("BruteForce-Hamming");

//-- 第一步:检测 Oriented FAST 角点位置

detector->detect(img_1, keypoints_1);

detector->detect(img_2, keypoints_2);

//-- 第二步:根据角点位置计算 BRIEF 描述子

descriptor->compute(img_1, keypoints_1, descriptors_1);

descriptor->compute(img_2, keypoints_2, descriptors_2);

//-- 第三步:对两幅图像中的BRIEF描述子进行匹配,使用 Hamming 距离

vector<DMatch> match;

// BFMatcher matcher ( NORM_HAMMING );

matcher->match(descriptors_1, descriptors_2, match);

//-- 第四步:匹配点对筛选

double min_dist = 10000, max_dist = 0;

//找出所有匹配之间的最小距离和最大距离, 即是最相似的和最不相似的两组点之间的距离

for (int i = 0; i < descriptors_1.rows; i++) {

double dist = match[i].distance;

if (dist < min_dist) min_dist = dist;

if (dist > max_dist) max_dist = dist;

}

printf("-- Max dist : %f n", max_dist);

printf("-- Min dist : %f n", min_dist);

//当描述子之间的距离大于两倍的最小距离时,即认为匹配有误.但有时候最小距离会非常小,设置一个经验值30作为下限.

for (int i = 0; i < descriptors_1.rows; i++) {

if (match[i].distance <= max(2 * min_dist, 30.0)) {

matches.push_back(match[i]);

}

}

}

Point2d pixel2cam(const Point2d &p, const Mat &K) {

return Point2d

(

(p.x - K.at<double>(0, 2)) / K.at<double>(0, 0),

(p.y - K.at<double>(1, 2)) / K.at<double>(1, 1)

);

}

CMakeLists.txt:

cmake_minimum_required(VERSION 2.8)

project(ch7_2)

set(CMAKE_BUILD_TYPE "Release")

add_definitions("-DENABLE_SSE")

#set(CMAKE_CXX_FLAGS "-std=c++11 -O2 ${SSE_FLAGS} -msse4")

set(CMAKE_CXX_FLAGS "-std=c++14 -O2 ${SSE_FLAGS} -msse4")

list(APPEND CMAKE_MODULE_PATH ${PROJECT_SOURCE_DIR}/cmake)

find_package(OpenCV 3 REQUIRED)

find_package(G2O REQUIRED)

find_package(Sophus REQUIRED)

find_package(Ceres REQUIRED)

include_directories(

${OpenCV_INCLUDE_DIRS}

${G2O_INCLUDE_DIRS}

${Sophus_INCLUDE_DIRS}

${CERES_LIBRARIES}

"/usr/local/include/eigen3/"

)

add_executable(10pnp 10pnp.cpp)

target_link_libraries(10pnp

g2o_core g2o_stuff

${OpenCV_LIBS} ${CERES_LIBRARIES} fmt)

add_executable(10icp 10icp.cpp)

target_link_libraries(10icp

g2o_core g2o_stuff

${OpenCV_LIBS} ${CERES_LIBRARIES} fmt)

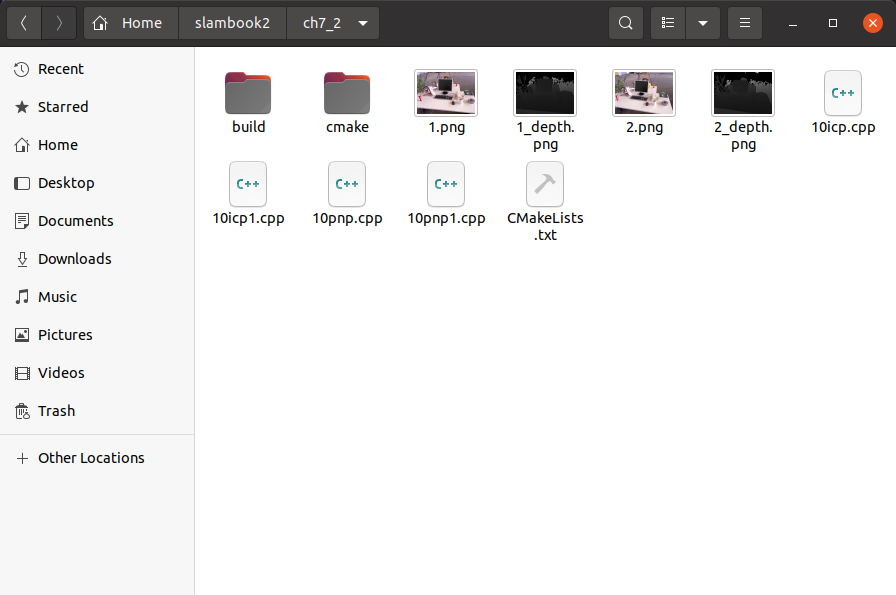

注意:我重新创建了一个文件夹,文件分布如下所示:

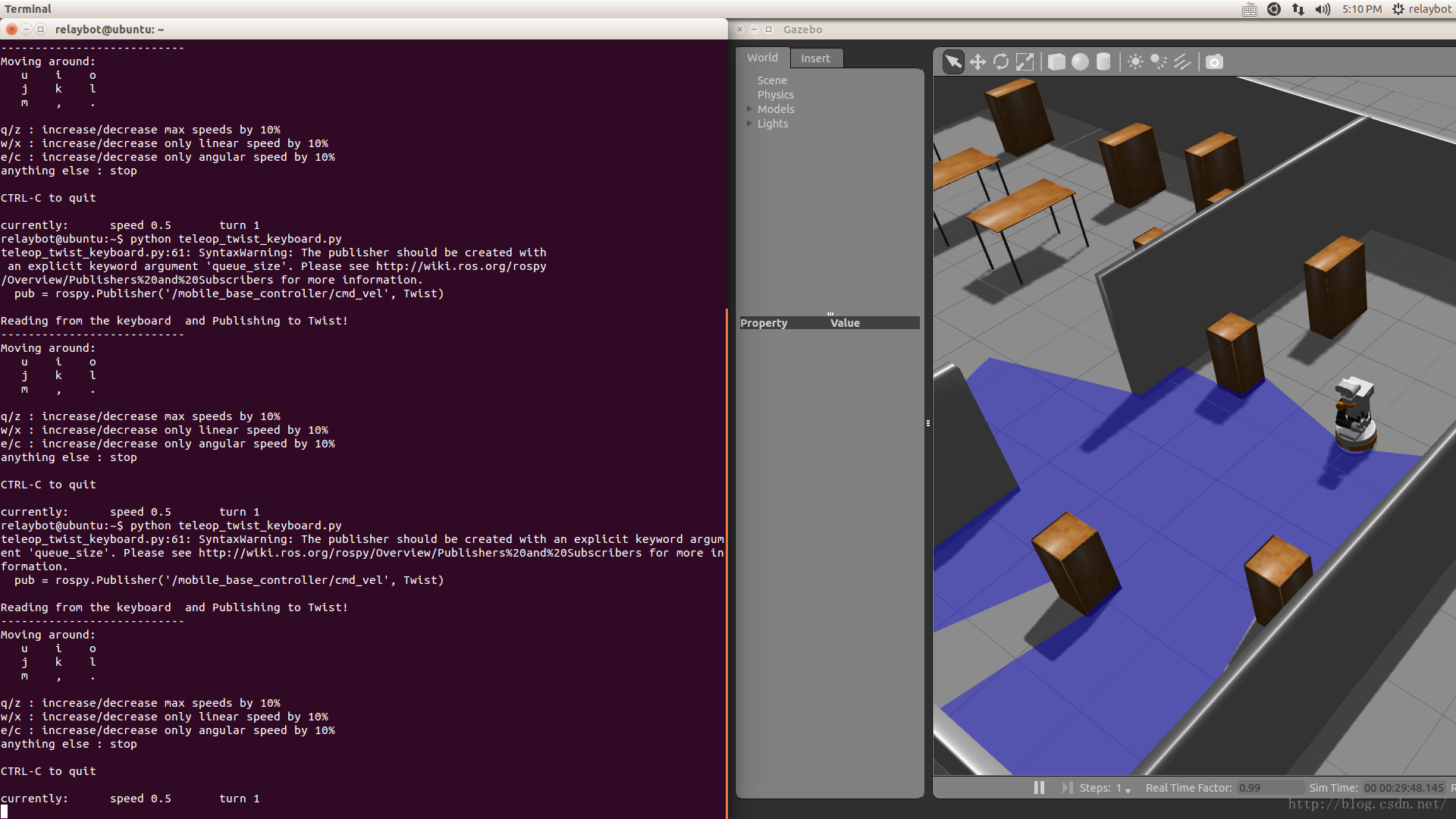

执行结果:

./10pnp 1.png 2.png 1_depth.png 2_depth.png

-- Max dist : 94.000000

-- Min dist : 4.000000

一共找到了79组匹配点

3d-2d pairs: 75

solve pnp in opencv cost time: 0.000406887 seconds.

R=

[0.9979059095501266, -0.05091940089110201, 0.03988747043653948;

0.04981866254253315, 0.9983623157438158, 0.02812094175376485;

-0.04125404886078184, -0.0260749135288436, 0.998808391202765]

t=

[-0.1267821389557959;

-0.008439496817520764;

0.06034935748888207]

以下是ceres求解(自动求导)

iter cost cost_change |gradient| |step| tr_ratio tr_radius ls_iter iter_time total_time

0 2.025888e+04 0.00e+00 6.83e+05 0.00e+00 0.00e+00 1.00e+04 0 4.41e-05 1.92e-04

1 1.650410e+02 2.01e+04 1.47e+04 1.51e-01 9.99e-01 3.00e+04 1 9.68e-05 3.09e-04

2 1.498819e+02 1.52e+01 4.80e+01 7.30e-03 1.00e+00 9.00e+04 1 4.70e-05 3.63e-04

Ceres Solver Report: Iterations: 3, Initial cost: 2.025888e+04, Final cost: 1.498819e+02, Termination: CONVERGENCE

R=

[0.9979061714739172, -0.05092444873781777, 0.03987447121929216;

0.04982431246493198, 0.9983622113118902, 0.02811463874620321;

-0.04124088774099816, -0.02606905340019193, 0.9988090876804998]

t=

[-0.1267625259978171;

-0.008424980436889882;

0.06034877257668213]

solve pnp in ceres cost time: 0.000447545 seconds.

以下是ceres求解(雅克比矩阵给出解析解)

iter cost cost_change |gradient| |step| tr_ratio tr_radius ls_iter iter_time total_time

0 2.025888e+04 0.00e+00 6.83e+05 0.00e+00 0.00e+00 1.00e+04 0 8.51e-05 1.07e-04

1 2.052736e+02 2.01e+04 3.79e+04 1.52e-01 9.97e-01 1.00e+04 1 1.70e-04 2.85e-04

2 1.500357e+02 5.52e+01 2.38e+03 8.62e-03 9.97e-01 1.00e+04 1 9.20e-05 3.84e-04

3 1.498820e+02 1.54e-01 7.50e+01 2.41e-04 9.99e-01 1.00e+04 1 8.80e-05 4.79e-04

4 1.498819e+02 1.63e-04 4.05e+00 8.96e-06 9.97e-01 1.00e+04 1 8.70e-05 5.72e-04

Ceres Solver Report: Iterations: 5, Initial cost: 2.025888e+04, Final cost: 1.498819e+02, Termination: CONVERGENCE

estimated pose:

0.997906 -0.0509194 0.0398875 -0.126782

0.0498187 0.998362 0.0281209 -0.00843933

-0.041254 -0.0260749 0.998808 0.0603495

0 0 0 1

solve pnp in ceres cost time: 0.000696333 seconds.代码链接:https://github.com/Philipcjh/HW-of-SLAMBOOK2/blob/main/hw7/p10ICP.cpp#L251

10icp.cpp:

#include <iostream>

#include <opencv2/core/core.hpp>

#include <opencv2/features2d/features2d.hpp>

#include <opencv2/highgui/highgui.hpp>

#include <opencv2/calib3d/calib3d.hpp>

#include <ceres/ceres.h>

#include <ceres/rotation.h>

#include <Eigen/Core>

#include <Eigen/Geometry>

#include "sophus/se3.hpp"

#include <chrono>

using namespace std;

using namespace Eigen;

using namespace cv;

void find_feature_matches(

const Mat &img_1, const Mat &img_2,

std::vector<KeyPoint> &keypoints_1,

std::vector<KeyPoint> &keypoints_2,

std::vector<DMatch> &matches);

// 像素坐标转相机归一化坐标

Point2d pixel2cam(const Point2d &p, const Mat &K);

void pose_estimation_3d3d(

const vector<Point3f> &pts1,

const vector<Point3f> &pts2,

Mat &R, Mat &t

);

//Ref:http://www.ceres-solver.org/nnls_tutorial.html#bundle-adjustment

struct ICPReprojectionError {

ICPReprojectionError(Point3f pts1_3d, Point3f pts2_3d)

: _pts1_3d(pts1_3d), _pts2_3d(pts2_3d) {}

template <typename T>

bool operator()(const T* const rotation_vector,

const T* const translation_vector,

T* residuals) const {

T p_transformed[3], p_origin[3];

p_origin[0]=T(_pts2_3d.x);

p_origin[1]=T(_pts2_3d.y);

p_origin[2]=T(_pts2_3d.z);

ceres::AngleAxisRotatePoint(rotation_vector, p_origin, p_transformed);

//旋转后加上平移向量

p_transformed[0] += translation_vector[0];

p_transformed[1] += translation_vector[1];

p_transformed[2] += translation_vector[2];

//计算error

residuals[0] = T(_pts1_3d.x) - p_transformed[0];

residuals[1] = T(_pts1_3d.y) - p_transformed[1];

residuals[2] = T(_pts1_3d.z) - p_transformed[2];

return true;

}

// 3,3,3指输出维度(residuals)为3

//待优化变量(rotation_vector,translation_vector)维度分别为3

static ceres::CostFunction* Create(const Point3f _pts1_3d,

const Point3f _pts2_3d) {

return (new ceres::AutoDiffCostFunction<ICPReprojectionError, 3, 3, 3>(

new ICPReprojectionError(_pts1_3d, _pts2_3d)));

}

Point3f _pts1_3d;

Point3f _pts2_3d;

};

// 通过引入Sophus库简化计算,并使用雅克比矩阵的解析解代替自动求导

class ICPSE3ReprojectionError : public ceres::SizedCostFunction<3, 6> {

public:

EIGEN_MAKE_ALIGNED_OPERATOR_NEW

ICPSE3ReprojectionError(Eigen::Vector3d pts1_3d, Eigen::Vector3d pts2_3d) :

_pts1_3d(pts1_3d), _pts2_3d(pts2_3d) {}

virtual ~ICPSE3ReprojectionError() {}

virtual bool Evaluate(

double const* const* parameters, double *residuals, double **jacobians) const {

Eigen::Map<const Eigen::Matrix<double,6,1>> se3(*parameters);

Sophus::SE3d T = Sophus::SE3d::exp(se3);

Eigen::Vector3d Pc = T * _pts2_3d;

Eigen::Vector3d error = _pts1_3d - Pc;

residuals[0] = error[0];

residuals[1] = error[1];

residuals[2] = error[2];

if(jacobians != NULL) {

if(jacobians[0] != NULL) {

Eigen::Map<Eigen::Matrix<double, 3, 6, Eigen::RowMajor>> J(jacobians[0]);

double x = Pc[0];

double y = Pc[1];

double z = Pc[2];

//雅克比矩阵推导看书187页公式(7.45),因为这里变换后的P'在计算error时是被减的,所以应该是(7.45)取负

J(0,0) = -1; J(0,1) = 0; J(0,2) = 0; J(0,3) = 0; J(0,4) = -z; J(0,5) = y;

J(1,0) = 0; J(1,1) = -1; J(1,2) = 0; J(1,3) = z; J(1,4) = 0; J(1,5) = -x;

J(2,0) = 0; J(2,1) = 0; J(2,2) = -1; J(2,3) = -y; J(2,4) = x; J(2,5) = 0;

}

}

return true;

}

private:

const Eigen::Vector3d _pts1_3d;

const Eigen::Vector3d _pts2_3d;

};

int main(int argc, char **argv){

if (argc != 5) {

cout << "usage: pose_estimation_3d3d img1 img2 depth1 depth2" << endl;

return 1;

}

//-- 读取图像

Mat img_1 = imread(argv[1], CV_LOAD_IMAGE_COLOR);

Mat img_2 = imread(argv[2], CV_LOAD_IMAGE_COLOR);

vector<KeyPoint> keypoints_1, keypoints_2;

vector<DMatch> matches;

find_feature_matches(img_1, img_2, keypoints_1, keypoints_2, matches);

cout << "一共找到了" << matches.size() << "组匹配点" << endl;

// 建立3D点

Mat depth1 = imread(argv[3], CV_LOAD_IMAGE_UNCHANGED); // 深度图为16位无符号数,单通道图像

Mat depth2 = imread(argv[4], CV_LOAD_IMAGE_UNCHANGED); // 深度图为16位无符号数,单通道图像

Mat K = (Mat_<double>(3, 3) << 520.9, 0, 325.1, 0, 521.0, 249.7, 0, 0, 1);

vector<Point3f> pts1, pts2;

vector<Vector3d> pts1_eigen, pts2_eigen;

for (DMatch m:matches) {

ushort d1 = depth1.ptr<unsigned short>(int(keypoints_1[m.queryIdx].pt.y))[int(keypoints_1[m.queryIdx].pt.x)];

ushort d2 = depth2.ptr<unsigned short>(int(keypoints_2[m.trainIdx].pt.y))[int(keypoints_2[m.trainIdx].pt.x)];

if (d1 == 0 || d2 == 0) // bad depth

continue;

Point2d p1 = pixel2cam(keypoints_1[m.queryIdx].pt, K);

Point2d p2 = pixel2cam(keypoints_2[m.trainIdx].pt, K);

float dd1 = float(d1) / 5000.0;

float dd2 = float(d2) / 5000.0;

pts1.push_back(Point3f(p1.x * dd1, p1.y * dd1, dd1));

pts2.push_back(Point3f(p2.x * dd2, p2.y * dd2, dd2));

pts1_eigen.push_back(Vector3d(p1.x * dd1, p1.y * dd1, dd1));

pts2_eigen.push_back(Vector3d(p2.x * dd2, p2.y * dd2, dd2));

}

cout << "3d-3d pairs: " << pts1.size() << endl;

Mat R, t;

pose_estimation_3d3d(pts1, pts2, R, t);

cout << "ICP via SVD results: " << endl;

cout << "R = " << R << endl;

cout << "t = " << t << endl;

cout << "R_inv = " << R.t() << endl;

cout << "t_inv = " << -R.t() * t << endl;

cout << endl;

//ceres求解PnP, 使用自动求导

cout << "以下是ceres求解(自动求导)" << endl;

double r_ceres[3]={0,0,0};

double t_ceres[3]={0,0,0};

ceres::Problem problem;

for (size_t i = 0; i < pts1.size(); ++i) {

ceres::CostFunction* cost_function =

ICPReprojectionError::Create(pts1[i],pts2[i]);

problem.AddResidualBlock(cost_function,

nullptr /* squared loss */,

r_ceres,

t_ceres);

}

chrono::steady_clock::time_point t1 = chrono::steady_clock::now();

ceres::Solver::Options options;

options.linear_solver_type = ceres::DENSE_SCHUR;

options.minimizer_progress_to_stdout = true;

ceres::Solver::Summary summary;

ceres::Solve(options, &problem, &summary);

std::cout << summary.BriefReport() << "n";

Mat r_ceres_cv=(Mat_<double>(3, 1) <<r_ceres[0], r_ceres[1], r_ceres[2]);

Mat t_ceres_cv=(Mat_<double>(3, 1) <<t_ceres[0], t_ceres[1], t_ceres[2]);

cv::Rodrigues(r_ceres_cv, R);

cout << "R=" << endl << R << endl;

cout << "t=" << endl << t_ceres_cv << endl;

chrono::steady_clock::time_point t2 = chrono::steady_clock::now();

chrono::duration<double> time_used = chrono::duration_cast<chrono::duration<double>>(t2 - t1);

cout << "solve pnp in ceres cost time: " << time_used.count() << " seconds." << endl;

//ceres求解PnP, 使用雅克比矩阵的解析解

cout << "以下是ceres求解(雅克比矩阵给出解析解)" << endl;

Sophus::Vector6d se3;

se3<<0,0,0,0,0,0;// 初始化非常重要

ceres::Problem problem_;

for(int i=0; i<pts1_eigen.size(); ++i) {

ceres::CostFunction *cost_function;

cost_function = new ICPSE3ReprojectionError(pts1_eigen[i], pts2_eigen[i]);

problem_.AddResidualBlock(cost_function, NULL, se3.data());

}

t1 = chrono::steady_clock::now();

ceres::Solver::Options options_;

options_.dynamic_sparsity = true;

options_.max_num_iterations = 100;

options_.sparse_linear_algebra_library_type = ceres::SUITE_SPARSE;

options_.minimizer_type = ceres::TRUST_REGION;

options_.linear_solver_type = ceres::SPARSE_NORMAL_CHOLESKY;

options_.trust_region_strategy_type = ceres::DOGLEG;

options_.minimizer_progress_to_stdout = true;

options_.dogleg_type = ceres::SUBSPACE_DOGLEG;

ceres::Solver::Summary summary_;

ceres::Solve(options_, &problem_, &summary_);

std::cout << summary_.BriefReport() << "n";

std::cout << "estimated pose: n" << Sophus::SE3d::exp(se3).matrix() << std::endl;

t2 = chrono::steady_clock::now();

time_used = chrono::duration_cast<chrono::duration<double>>(t2 - t1);

cout << "solve pnp in ceres cost time: " << time_used.count() << " seconds." << endl;

return 0;

}

void find_feature_matches(const Mat &img_1, const Mat &img_2,

std::vector<KeyPoint> &keypoints_1,

std::vector<KeyPoint> &keypoints_2,

std::vector<DMatch> &matches) {

//-- 初始化

Mat descriptors_1, descriptors_2;

// used in OpenCV3

Ptr<FeatureDetector> detector = ORB::create();

Ptr<DescriptorExtractor> descriptor = ORB::create();

// use this if you are in OpenCV2

// Ptr<FeatureDetector> detector = FeatureDetector::create ( "ORB" );

// Ptr<DescriptorExtractor> descriptor = DescriptorExtractor::create ( "ORB" );

Ptr<DescriptorMatcher> matcher = DescriptorMatcher::create("BruteForce-Hamming");

//-- 第一步:检测 Oriented FAST 角点位置

detector->detect(img_1, keypoints_1);

detector->detect(img_2, keypoints_2);

//-- 第二步:根据角点位置计算 BRIEF 描述子

descriptor->compute(img_1, keypoints_1, descriptors_1);

descriptor->compute(img_2, keypoints_2, descriptors_2);

//-- 第三步:对两幅图像中的BRIEF描述子进行匹配,使用 Hamming 距离

vector<DMatch> match;

// BFMatcher matcher ( NORM_HAMMING );

matcher->match(descriptors_1, descriptors_2, match);

//-- 第四步:匹配点对筛选

double min_dist = 10000, max_dist = 0;

//找出所有匹配之间的最小距离和最大距离, 即是最相似的和最不相似的两组点之间的距离

for (int i = 0; i < descriptors_1.rows; i++) {

double dist = match[i].distance;

if (dist < min_dist) min_dist = dist;

if (dist > max_dist) max_dist = dist;

}

printf("-- Max dist : %f n", max_dist);

printf("-- Min dist : %f n", min_dist);

//当描述子之间的距离大于两倍的最小距离时,即认为匹配有误.但有时候最小距离会非常小,设置一个经验值30作为下限.

for (int i = 0; i < descriptors_1.rows; i++) {

if (match[i].distance <= max(2 * min_dist, 30.0)) {

matches.push_back(match[i]);

}

}

}

Point2d pixel2cam(const Point2d &p, const Mat &K) {

return Point2d

(

(p.x - K.at<double>(0, 2)) / K.at<double>(0, 0),

(p.y - K.at<double>(1, 2)) / K.at<double>(1, 1)

);

}

void pose_estimation_3d3d(const vector<Point3f> &pts1,

const vector<Point3f> &pts2,

Mat &R, Mat &t) {

Point3f p1, p2; // center of mass

int N = pts1.size();

for (int i = 0; i < N; i++) {

p1 += pts1[i];

p2 += pts2[i];

}

p1 = Point3f(Vec3f(p1) / N);

p2 = Point3f(Vec3f(p2) / N);

vector<Point3f> q1(N), q2(N); // remove the center

for (int i = 0; i < N; i++) {

q1[i] = pts1[i] - p1;

q2[i] = pts2[i] - p2;

}

// compute q1*q2^T

Eigen::Matrix3d W = Eigen::Matrix3d::Zero();

for (int i = 0; i < N; i++) {

W += Eigen::Vector3d(q1[i].x, q1[i].y, q1[i].z) * Eigen::Vector3d(q2[i].x, q2[i].y, q2[i].z).transpose();

}

cout << "W=" << W << endl;

// SVD on W

Eigen::JacobiSVD<Eigen::Matrix3d> svd(W, Eigen::ComputeFullU | Eigen::ComputeFullV);

Eigen::Matrix3d U = svd.matrixU();

Eigen::Matrix3d V = svd.matrixV();

cout << "U=" << U << endl;

cout << "V=" << V << endl;

Eigen::Matrix3d R_ = U * (V.transpose());

if (R_.determinant() < 0) {

R_ = -R_;

}

Eigen::Vector3d t_ = Eigen::Vector3d(p1.x, p1.y, p1.z) - R_ * Eigen::Vector3d(p2.x, p2.y, p2.z);

// convert to cv::Mat

R = (Mat_<double>(3, 3) <<

R_(0, 0), R_(0, 1), R_(0, 2),

R_(1, 0), R_(1, 1), R_(1, 2),

R_(2, 0), R_(2, 1), R_(2, 2)

);

t = (Mat_<double>(3, 1) << t_(0, 0), t_(1, 0), t_(2, 0));

}CMakeLists.txt

和上面一样

执行结果:

./10icp 1.png 2.png 1_depth.png 2_depth.png

-- Max dist : 94.000000

-- Min dist : 4.000000

一共找到了79组匹配点

3d-3d pairs: 72

W= 10.871 -1.01948 2.54771

-2.16033 3.85307 -5.77742

3.94738 -5.79979 9.62203

U= 0.558087 -0.829399 -0.0252034

-0.428009 -0.313755 0.847565

0.710878 0.462228 0.530093

V= 0.617887 -0.784771 -0.0484806

-0.399894 -0.366747 0.839989

0.676979 0.499631 0.540434

ICP via SVD results:

R = [0.9969452349468715, 0.05983347698056557, -0.05020113095482046;

-0.05932607657705309, 0.9981719679735133, 0.01153858699565957;

0.05079975545906246, -0.008525103184062521, 0.9986724725659557]

t = [0.144159841091821;

-0.06667849443812729;

-0.03009747273569774]

R_inv = [0.9969452349468715, -0.05932607657705309, 0.05079975545906246;

0.05983347698056557, 0.9981719679735133, -0.008525103184062521;

-0.05020113095482046, 0.01153858699565957, 0.9986724725659557]

t_inv = [-0.1461462958593589;

0.05767443542067568;

0.03806388018483625]

以下是ceres求解(自动求导)

iter cost cost_change |gradient| |step| tr_ratio tr_radius ls_iter iter_time total_time

0 1.199125e+00 0.00e+00 5.06e+00 0.00e+00 0.00e+00 1.00e+04 0 4.89e-05 2.00e-04

1 9.083313e-01 2.91e-01 3.38e-01 1.82e-01 9.93e-01 3.00e+04 1 8.99e-05 3.08e-04

2 9.077571e-01 5.74e-04 1.64e-03 4.20e-03 9.98e-01 9.00e+04 1 4.79e-05 3.63e-04

Ceres Solver Report: Iterations: 3, Initial cost: 1.199125e+00, Final cost: 9.077571e-01, Termination: CONVERGENCE

R=

[0.9969507083715436, 0.05977598782075687, -0.0501609046721651;

-0.05926864452294801, 0.998175330608928, 0.0115428393460838;

0.05075936222894979, -0.008534673034733091, 0.9986744447027271]

t=

[0.1440933285152241;

-0.06667828689784586;

-0.03010919342781287]

solve pnp in ceres cost time: 0.000474735 seconds.

以下是ceres求解(雅克比矩阵给出解析解)

iter cost cost_change |gradient| |step| tr_ratio tr_radius ls_iter iter_time total_time

0 1.199125e+00 0.00e+00 5.06e+00 0.00e+00 0.00e+00 1.00e+04 0 1.08e-04 1.31e-04

1 9.080573e-01 2.91e-01 2.09e-01 1.82e-01 9.94e-01 1.00e+04 1 1.59e-04 2.98e-04

2 9.077578e-01 3.00e-04 1.33e-02 5.82e-03 9.93e-01 1.00e+04 1 8.51e-05 3.91e-04

Ceres Solver Report: Iterations: 3, Initial cost: 1.199125e+00, Final cost: 9.077578e-01, Termination: CONVERGENCE

estimated pose:

0.996952 0.0597362 -0.0501911 0.144171

-0.059225 0.998177 0.0116122 -0.0669146

0.0507933 -0.00860423 0.998672 -0.0300734

0 0 0 1

solve pnp in ceres cost time: 0.000505383 seconds.

最后

以上就是直率蜗牛最近收集整理的关于视觉SLAM十四讲CH7课后习题10_1转载于:视觉SLAM十四讲(第二版)第7讲习题解答 - 知乎大家好,这里是Philip~最近在学习高博的《视觉SLAM十四讲》(第二版),以下是对第7讲习题的解答,如有错误或不全面的地方还请大家指正。 1. 除了本书介绍的ORB特征点,你还能找到哪些特征点?请说说SIFT或SURF的…https://zhuanlan.zhihu.com/p/38942086410. *在Ceres 中实现PnP 和ICP 的优化。 10icp.cpp:执行结果:的全部内容,更多相关视觉SLAM十四讲CH7课后习题10_1转载于:视觉SLAM十四讲(第二版)第7讲习题解答内容请搜索靠谱客的其他文章。

本图文内容来源于网友提供,作为学习参考使用,或来自网络收集整理,版权属于原作者所有。

发表评论 取消回复