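Contents

1. Introduction

2. Deployment Environment

2.1 System Requirements

2.2 Image Registry

3. Installation and Deployment

3.1 Downloading the Installation Package

3.2 Installation Steps

3.2.1 Upload and Extract

3.2.2 Create the Cluster Configuration File

3.2.3 Initialize the Environment

3.2.4 Import Images

3.2.5 Deploy

4. KubeSphere v3.1.0 Image List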

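1. Introduction

KubeSphere is an application-centric, multi-tenant container platform built on top of Kubernetes. It provides full-stack IT automation and operations capabilities and simplifies enterprise DevOps workflows. Its operations-friendly, wizard-style interface helps enterprises quickly build a powerful, feature-rich container cloud platform, covering Kubernetes resource management, DevOps (CI/CD), application lifecycle management, microservice governance (Service Mesh), multi-tenant management, monitoring and logging, alerting and notification, storage and network management, and GPU support. Multi-cluster management, Network Policy, and image registry management are planned for future releases. KubeSphere's vision is to build a cloud-native distributed operating system based on Kubernetes, with an architecture that allows plug-and-play integration with the cloud-native ecosystem.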

2. Deployment Environment

| Hostname | IP address | Operating system |
| --- | --- | --- |
| node1 (master) | 192.168.99.11 | CentOS 7.6 |
| node2 | 192.168.99.10 | CentOS 7.6 |
| node3 | 192.168.99.24 | CentOS 7.6 |
| node4 | 192.168.99.25 | CentOS 7.6 |

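2.1 System Requirements

- Use a clean operating system with no other software installed; otherwise conflicts may occur.

- Make sure every node has at least 100 GB of disk space.

- All nodes must be reachable over SSH.

- Time and time zone must be synchronized across all nodes.

- All nodes need sudo, curl, openssl, ebtables, socat, ipset, conntrack, and docker (the offline installation package already includes these dependencies).

- Once the base environment is installed, set Docker's storage directory. The default is /var/lib/docker; to avoid running out of space later, add a volume, mount a larger disk there, or change the default storage directory.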
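The dependency list above can be checked on each node before running the installer. The following is a minimal sketch, assuming each tool is installed under the command name given in the list; `missing_tools` is a hypothetical helper and not part of kk.

```shell
#!/bin/sh
# Hypothetical helper: print every command from the argument list
# that cannot be found on PATH.
missing_tools() {
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || echo "$tool"
  done
}

# Usage on a node, as root (prints nothing when everything is installed):
# missing_tools sudo curl openssl ebtables socat ipset conntrack docker
```

Run it as root, since some of these tools live in directories that may not be on an ordinary user's PATH.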

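All four machines here are clean installs. After installing the OS, the only preparation needed was configuring the network and disabling the firewall and SELinux. KubeSphere v3.x and later is installed with kk (KubeKey), which greatly reduces the complexity compared with earlier versions. The offline installation is much simpler than you might expect, and in my experience it even works better than the online one.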
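Disabling the firewall and SELinux could look roughly like this on CentOS 7. This is a sketch under assumptions: `selinux_disable_in_file` is a hypothetical helper, GNU sed is assumed, and the config path is passed as a parameter so the function can be tried on a copy first.

```shell
#!/bin/sh
# Rewrite the SELINUX= line of an SELinux config file so the setting
# survives a reboot. SELINUXTYPE= is left untouched because the pattern
# requires "=" directly after "SELINUX".
selinux_disable_in_file() {
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$1"
}

# On each node, as root (standard CentOS 7 commands):
# systemctl stop firewalld && systemctl disable firewalld
# setenforce 0
# selinux_disable_in_file /etc/selinux/config
```

`setenforce 0` only switches the running system to permissive mode; the edit to the config file is what keeps SELinux off after the next reboot.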

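2.2 Image Registry

1. Docker Registry

2. Harbor

Either registry works. If you use Docker Registry, it should preferably support HTTPS. If you use Harbor, pushing and pulling images may fail with permission-denied or unauthenticated (not logged in) errors; see "Pull an Image from a Private Registry" in the Kubernetes documentation.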
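For the "not logged in" case, logging in to Harbor before pushing is usually the first thing to try. The sketch below assumes the registry reference used later in this article (host:port followed by a project path); `registry_host` and `harbor_login` are hypothetical helpers, and the user name and the `HARBOR_PASSWORD` variable are placeholders.

```shell
#!/bin/sh
# Strip the project path from a registry reference:
# "host:port/project" -> "host:port".
registry_host() {
  echo "${1%%/*}"
}

# Log in to the registry host. The password is read from stdin so it
# does not end up in the shell history. $2 is the user name.
harbor_login() {
  docker login -u "$2" --password-stdin "$(registry_host "$1")"
}

# Usage (user name and password variable are placeholders):
# printf '%s' "$HARBOR_PASSWORD" | harbor_login 192.168.99.11:8080/kube-install admin
```

`docker login` takes only the registry host and port, which is why the project path is stripped first.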

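3. Installation and Deployment

3.1 Downloading the Installation Package

The installation package is large, so download it separately ahead of time.

https://kubesphere-installer.pek3b.qingstor.com/offline/v3.1.0/kubesphere-all-v3.1.0-offline-linux-amd64.tar.gz

3.2 Installation Steps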

3.2.1、上传解压:

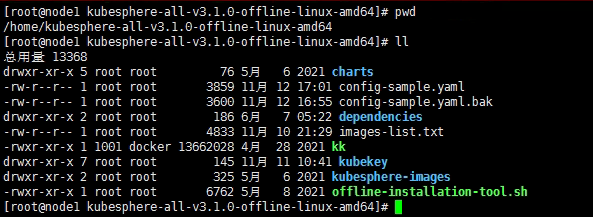

我这边是将所有比较大的文件直接都放到/home里面存储空间是非常大,就没有做挂载了。像配置文件和images-list 没安装之前是没有的,我这里已经安装过了。
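Because disk space is a recurring problem in this procedure, it can help to check the free space of the target directory before extracting the package. A minimal sketch; `free_gib` is a hypothetical helper, and the 100 GiB threshold simply mirrors the disk requirement in section 2.1.

```shell
#!/bin/sh
# Print the free space, in whole GiB, of the filesystem holding a path.
# df -Pk prints POSIX-format output in 1 KiB blocks; column 4 is "Available".
free_gib() {
  df -Pk "$1" | awk 'NR == 2 { print int($4 / 1048576) }'
}

# Example: warn when the target directory has less than 100 GiB free,
# then extract the package there.
# [ "$(free_gib /home)" -ge 100 ] || echo "less than 100 GiB free under /home"
# tar -zxf kubesphere-all-v3.1.0-offline-linux-amd64.tar.gz -C /home
```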

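3.2.2 Create the Cluster Configuration File

Generate the configuration file with the command below, then edit the resulting config-sample.yaml. Here we specify the version and leave the other parameters at their defaults; other parameters can be configured as well. For detailed kk usage, see https://github.com/kubesphere/kubekey

    ./kk create config --with-kubesphere v3.1.0

The contents of config-sample.yaml are as follows: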

    apiVersion: kubekey.kubesphere.io/v1alpha1
    kind: Cluster
    metadata:
      name: sample
    spec:
      hosts:
      - {name: node1, address: 192.168.99.11, internalAddress: 192.168.99.11, user: root, password: 20211102@9911}
      - {name: node2, address: 192.168.99.10, internalAddress: 192.168.99.10, user: root, password: 20211102@9910}
      - {name: node3, address: 192.168.99.24, internalAddress: 192.168.99.24, user: root, password: 20211111@9924}
      - {name: node4, address: 192.168.99.25, internalAddress: 192.168.99.25, user: root, password: 20211111@9925}
      roleGroups:
        etcd:
        - node1
        master:
        - node1
        worker:
        - node1
        - node2
        - node3
        - node4
      controlPlaneEndpoint:
        domain: lb.kubesphere.local
        address: ""
        port: 6443
      kubernetes:
        version: v1.19.8
        imageRepo: kubesphere
        clusterName: cluster.local
      network:
        plugin: calico
        kubePodsCIDR: 10.233.64.0/18
        kubeServiceCIDR: 10.233.0.0/18
      registry:
        registryMirrors: []
        insecureRegistries: []
        privateRegistry: 192.168.99.11:8080/kube-install
      addons: []
    ---
    apiVersion: installer.kubesphere.io/v1alpha1
    kind: ClusterConfiguration
    metadata:
      name: ks-installer
      namespace: kubesphere-system
      labels:
        version: v3.1.0
    spec:
      persistence:
        storageClass: ""
      authentication:
        jwtSecret: ""
      zone: ""
      local_registry: ""
      etcd:
        monitoring: false
        endpointIps: localhost
        port: 2379
        tlsEnable: true
      common:
        redis:
          enabled: false
        redisVolumSize: 2Gi
        openldap:
          enabled: false
        openldapVolumeSize: 2Gi
        minioVolumeSize: 20Gi
        monitoring:
          endpoint: http://prometheus-operated.kubesphere-monitoring-system.svc:9090
        es:
          elasticsearchMasterVolumeSize: 4Gi
          elasticsearchDataVolumeSize: 20Gi
          logMaxAge: 7
          elkPrefix: logstash
          basicAuth:
            enabled: false
            username: ""
            password: ""
          externalElasticsearchUrl: ""
          externalElasticsearchPort: ""
      console:
        enableMultiLogin: true
        port: 30880
      alerting:
        enabled: false
        # thanosruler:
        #   replicas: 1
        #   resources: {}
      auditing:
        enabled: false
      devops:
        enabled: true
        jenkinsMemoryLim: 2Gi
        jenkinsMemoryReq: 1500Mi
        jenkinsVolumeSize: 8Gi
        jenkinsJavaOpts_Xms: 512m
        jenkinsJavaOpts_Xmx: 512m
        jenkinsJavaOpts_MaxRAM: 2g
      events:
        enabled: false
        ruler:
          enabled: true
          replicas: 2
      logging:
        enabled: false
        logsidecar:
          enabled: true
          replicas: 2
      metrics_server:
        enabled: false
      monitoring:
        storageClass: ""
        prometheusMemoryRequest: 400Mi
        prometheusVolumeSize: 20Gi
      multicluster:
        clusterRole: none
      network:
        networkpolicy:
          enabled: false
        ippool:
          type: none
        topology:
          type: none
      notification:
        enabled: false
      openpitrix:
        store:
          enabled: false
      servicemesh:
        enabled: false
      kubeedge:
        enabled: false
        cloudCore:
          nodeSelector: {"node-role.kubernetes.io/worker": ""}
          tolerations: []
          cloudhubPort: "10000"
          cloudhubQuicPort: "10001"
          cloudhubHttpsPort: "10002"
          cloudstreamPort: "10003"
          tunnelPort: "10004"
          cloudHub:
            advertiseAddress:
              - ""
            nodeLimit: "100"
          service:
            cloudhubNodePort: "30000"
            cloudhubQuicNodePort: "30001"
            cloudhubHttpsNodePort: "30002"
            cloudstreamNodePort: "30003"
            tunnelNodePort: "30004"
        edgeWatcher:
          nodeSelector: {"node-role.kubernetes.io/worker": ""}
          tolerations: []
          edgeWatcherAgent:
            nodeSelector: {"node-role.kubernetes.io/worker": ""}
            tolerations: []

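Note the line privateRegistry: 192.168.99.11:8080/kube-install. This is the address of the image registry, and the push and pull steps later all use it. I use Harbor as the image registry here, so Harbor must be installed before starting the installation. The local registry also has to be added to /etc/docker/daemon.json:

    vi /etc/docker/daemon.json

    {
      "insecure-registries": ["192.168.99.11:8080"]
    }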
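If you would rather script this than edit the file by hand, something like the following could work. It is a sketch under assumptions: `write_daemon_json` is a hypothetical helper, `/home/docker` is only an example data directory (section 2.1 recommends moving Docker's storage but does not name a path), and the function overwrites the target file, so merge by hand if a daemon.json already exists.

```shell
#!/bin/sh
# Write a minimal daemon.json to the path in $1.
# $2 is the registry host:port, $3 the Docker data directory.
write_daemon_json() {
  cat > "$1" <<EOF
{
  "insecure-registries": ["$2"],
  "data-root": "$3"
}
EOF
}

# Usage on each node, as root, followed by a Docker restart to apply it:
# write_daemon_json /etc/docker/daemon.json 192.168.99.11:8080 /home/docker
# systemctl restart docker
```

Docker reads daemon.json only at startup, so the restart is required. Changing `data-root` on a node that already holds images makes Docker start with an empty store in the new location.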

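3.2.3 Initialize the Environment

Before initializing the environment, make sure the machine running the installer can SSH to every other node; otherwise the initialization will fail.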
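A quick reachability check can be scripted from the configuration file itself. This is a sketch, assuming the inline `{name: ..., address: ...}` host format shown in config-sample.yaml; `list_addresses` and `port_open` are hypothetical helpers. It only verifies that port 22 accepts connections, not that the login succeeds.

```shell
#!/bin/sh
# Print the "address:" value of every inline host entry in a KubeKey
# config file. "internalAddress:" is not matched because grep is
# case-sensitive, and the empty controlPlaneEndpoint address has no digits.
list_addresses() {
  grep -oE 'address: *[0-9.]+' "$1" | grep -oE '[0-9.]+$'
}

# Return success if a TCP connection to host $1, port $2 opens within
# 3 seconds (uses bash's /dev/tcp redirection and coreutils timeout).
port_open() {
  timeout 3 bash -c "exec 3<>/dev/tcp/$1/$2" 2>/dev/null
}

# Usage:
# for ip in $(list_addresses config-sample.yaml); do
#   port_open "$ip" 22 || echo "cannot reach $ip:22"
# done
```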

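Note: if you use kk to create a self-signed image registry, it starts a Docker Registry service on the current machine, so make sure the registry:2 image is present there. If it is not, download the registry image file yourself and import it with: docker load < registry.tar

    # Install the dependencies on every node listed in the configuration file:
    ./kk init os -f config-sample.yaml -s ./dependencies/

    # To have kk create a self-signed image registry, run this instead
    # (required when using Docker Registry, because it starts the registry service):
    ./kk init os -f config-sample.yaml -s ./dependencies/ --add-images-repo

3.2.4 Import Images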

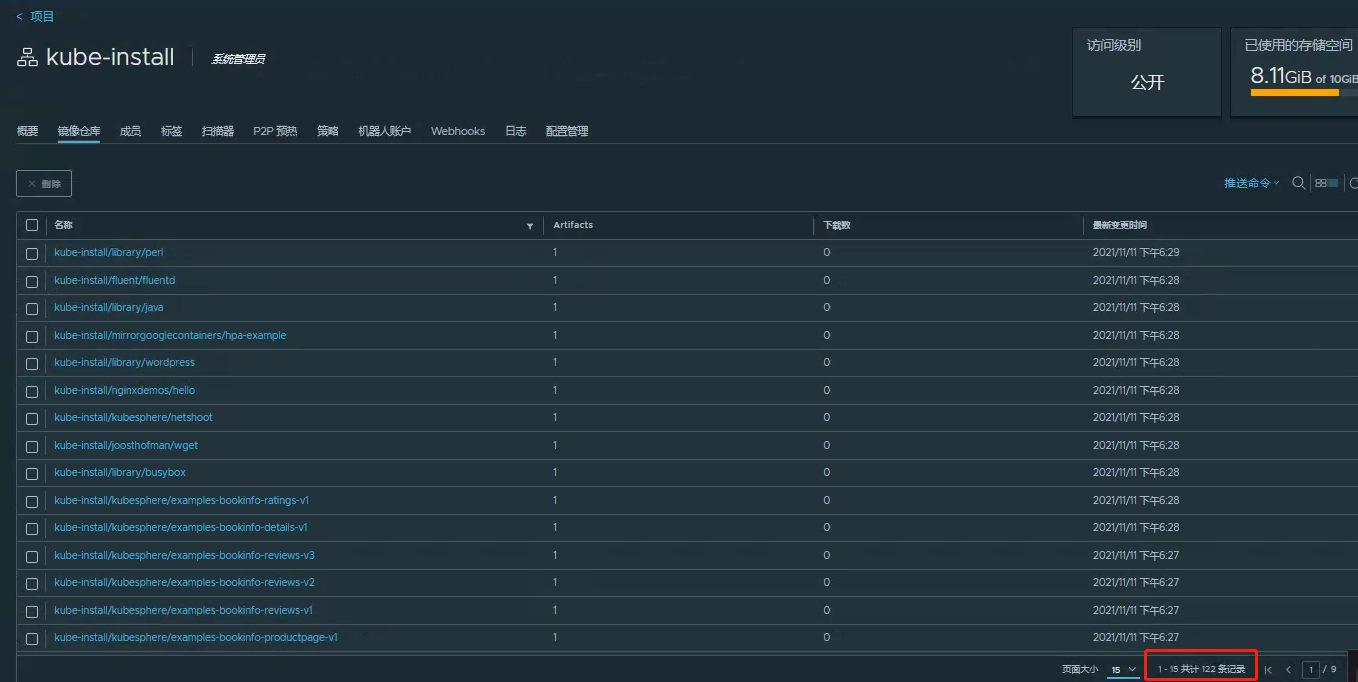

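When importing images, check that both loading and pushing complete for every image. I ran into a lot of trouble at this step because of storage space. At first I put everything under /root, where the default mount is only 50 GB, and late in the push I found that some images were incomplete. On retrying, the images in Docker and in Harbor could not be cleaned out completely, so I had to reinstall both Docker and Harbor.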
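One rough way to judge completeness is to count the entries in the image list and compare that number with what ends up in the registry. A minimal sketch, assuming the list format shown in section 4 (one image per line, section headers starting with `##`); `count_images` is a hypothetical helper.

```shell
#!/bin/sh
# Count image entries in an images-list file, skipping the "##" section
# headers and blank lines.
count_images() {
  grep -v '^##' "$1" | grep -c '[^[:space:]]'
}

# Usage:
# count_images images-list.txt
```

Treat the comparison as approximate: the list contains a duplicate entry, and a registry UI that counts repositories rather than tags will show a lower number because several images appear in multiple versions.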

    ./offline-installation-tool.sh -l images-list.txt -d ./kubesphere-images -r 192.168.99.11:8080/kube-install

Once the push has finished, here is a screenshot of the complete image count:

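3.2.5 Deploy

Once the preparation above is complete and you have double-checked the configuration file, run the installation.

    ./kk create cluster -f config-sample.yaml

During the installation you can follow the installer log with kubectl:

    kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l app=ks-install -o jsonpath='{.items[0].metadata.name}') -f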
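If you prefer to check for completion from a script rather than watching the log, one option is to save the log text and look for the installer's welcome banner. A sketch only; `install_finished` and the file name `ks-install.log` are hypothetical.

```shell
#!/bin/sh
# Return success once saved installer log text contains the welcome banner.
install_finished() {
  grep -q 'Welcome to KubeSphere!' "$1"
}

# Usage: save the log with the same pod selector as above (without -f),
# then test the saved file.
# kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l app=ks-install -o jsonpath='{.items[0].metadata.name}') > ks-install.log
# install_finished ks-install.log && echo "installation finished"
```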

When you see the following output, the cluster has been created successfully.

    Collecting installation results ...
    #####################################################
    ###              Welcome to KubeSphere!           ###
    #####################################################

    Console: http://192.168.99.11:30880
    Account: admin
    Password: P@88w0rd

    NOTES:
      1. After you log into the console, please check the
         monitoring status of service components in
         "Cluster Management". If any service is not
         ready, please wait patiently until all components
         are up and running.
      2. Please change the default password after login.

    #####################################################
    https://kubesphere.io             2021-11-16 10:02:26
    #####################################################

4. KubeSphere v3.1.0 Image List

##k8s-images

kubesphere/kube-apiserver:v1.20.4

kubesphere/kube-scheduler:v1.20.4

kubesphere/kube-proxy:v1.20.4

kubesphere/kube-controller-manager:v1.20.4

kubesphere/kube-apiserver:v1.19.8

kubesphere/kube-scheduler:v1.19.8

kubesphere/kube-proxy:v1.19.8

kubesphere/kube-controller-manager:v1.19.8

kubesphere/kube-apiserver:v1.19.9

kubesphere/kube-scheduler:v1.19.9

kubesphere/kube-proxy:v1.19.9

kubesphere/kube-controller-manager:v1.19.9

kubesphere/kube-apiserver:v1.18.8

kubesphere/kube-scheduler:v1.18.8

kubesphere/kube-proxy:v1.18.8

kubesphere/kube-controller-manager:v1.18.8

kubesphere/kube-apiserver:v1.17.9

kubesphere/kube-scheduler:v1.17.9

kubesphere/kube-proxy:v1.17.9

kubesphere/kube-controller-manager:v1.17.9

kubesphere/pause:3.1

kubesphere/pause:3.2

kubesphere/etcd:v3.4.13

calico/cni:v3.16.3

calico/kube-controllers:v3.16.3

calico/node:v3.16.3

calico/pod2daemon-flexvol:v3.16.3

calico/typha:v3.16.3

kubesphere/flannel:v0.12.0

coredns/coredns:1.6.9

kubesphere/k8s-dns-node-cache:1.15.12

openebs/provisioner-localpv:2.3.0

openebs/linux-utils:2.3.0

kubesphere/nfs-client-provisioner:v3.1.0-k8s1.11

##csi-images

csiplugin/csi-neonsan:v1.2.0

csiplugin/csi-neonsan-ubuntu:v1.2.0

csiplugin/csi-neonsan-centos:v1.2.0

csiplugin/csi-provisioner:v1.5.0

csiplugin/csi-attacher:v2.1.1

csiplugin/csi-resizer:v0.4.0

csiplugin/csi-snapshotter:v2.0.1

csiplugin/csi-node-driver-registrar:v1.2.0

csiplugin/csi-qingcloud:v1.2.0

##kubesphere-images

kubesphere/ks-apiserver:v3.1.0

kubesphere/ks-console:v3.1.0

kubesphere/ks-controller-manager:v3.1.0

kubesphere/ks-installer:v3.1.0

kubesphere/kubectl:v1.19.0

redis:5.0.5-alpine

alpine:3.10.4

haproxy:2.0.4

nginx:1.14-alpine

minio/minio:RELEASE.2019-08-07T01-59-21Z

minio/mc:RELEASE.2019-08-07T23-14-43Z

mirrorgooglecontainers/defaultbackend-amd64:1.4

kubesphere/nginx-ingress-controller:v0.35.0

osixia/openldap:1.3.0

csiplugin/snapshot-controller:v2.0.1

kubesphere/kubefed:v0.7.0

kubesphere/tower:v0.2.0

kubesphere/prometheus-config-reloader:v0.42.1

kubesphere/prometheus-operator:v0.42.1

prom/alertmanager:v0.21.0

prom/prometheus:v2.26.0

prom/node-exporter:v0.18.1

kubesphere/ks-alerting-migration:v3.1.0

jimmidyson/configmap-reload:v0.3.0

kubesphere/notification-manager-operator:v1.0.0

kubesphere/notification-manager:v1.0.0

kubesphere/metrics-server:v0.4.2

kubesphere/kube-rbac-proxy:v0.8.0

kubesphere/kube-state-metrics:v1.9.7

openebs/provisioner-localpv:2.3.0

thanosio/thanos:v0.18.0

grafana/grafana:7.4.3

##kubesphere-logging-images

kubesphere/elasticsearch-oss:6.7.0-1

kubesphere/elasticsearch-curator:v5.7.6

kubesphere/fluentbit-operator:v0.5.0

kubesphere/fluentbit-operator:migrator

kubesphere/fluent-bit:v1.6.9

elastic/filebeat:6.7.0

kubesphere/kube-auditing-operator:v0.1.2

kubesphere/kube-auditing-webhook:v0.1.2

kubesphere/kube-events-exporter:v0.1.0

kubesphere/kube-events-operator:v0.1.0

kubesphere/kube-events-ruler:v0.2.0

kubesphere/log-sidecar-injector:1.1

docker:19.03

##istio-images

istio/pilot:1.6.10

istio/proxyv2:1.6.10

jaegertracing/jaeger-agent:1.17

jaegertracing/jaeger-collector:1.17

jaegertracing/jaeger-es-index-cleaner:1.17

jaegertracing/jaeger-operator:1.17.1

jaegertracing/jaeger-query:1.17

kubesphere/kiali:v1.26.1

kubesphere/kiali-operator:v1.26.1

##kubesphere-devops-images

kubesphere/ks-jenkins:2.249.1

jenkins/jnlp-slave:3.27-1

kubesphere/s2ioperator:v3.1.0

kubesphere/s2irun:v2.1.1

kubesphere/builder-base:v3.1.0

kubesphere/builder-nodejs:v3.1.0

kubesphere/builder-maven:v3.1.0

kubesphere/builder-go:v3.1.0

kubesphere/s2i-binary:v2.1.0

kubesphere/tomcat85-java11-centos7:v2.1.0

kubesphere/tomcat85-java11-runtime:v2.1.0

kubesphere/tomcat85-java8-centos7:v2.1.0

kubesphere/tomcat85-java8-runtime:v2.1.0

kubesphere/java-11-centos7:v2.1.0

kubesphere/java-8-centos7:v2.1.0

kubesphere/java-8-runtime:v2.1.0

kubesphere/java-11-runtime:v2.1.0

kubesphere/nodejs-8-centos7:v2.1.0

kubesphere/nodejs-6-centos7:v2.1.0

kubesphere/nodejs-4-centos7:v2.1.0

kubesphere/python-36-centos7:v2.1.0

kubesphere/python-35-centos7:v2.1.0

kubesphere/python-34-centos7:v2.1.0

kubesphere/python-27-centos7:v2.1.0

##openpitrix-images

kubesphere/openpitrix-jobs:v3.1.0

##weave-scope-images

weaveworks/scope:1.13.0

##kubeedge-images

kubeedge/cloudcore:v1.6.1

kubesphere/edge-watcher:v0.1.0

kubesphere/kube-rbac-proxy:v0.5.0

kubesphere/edge-watcher-agent:v0.1.0

##example-images-images

kubesphere/examples-bookinfo-productpage-v1:1.16.2

kubesphere/examples-bookinfo-reviews-v1:1.16.2

kubesphere/examples-bookinfo-reviews-v2:1.16.2

kubesphere/examples-bookinfo-reviews-v3:1.16.2

kubesphere/examples-bookinfo-details-v1:1.16.2

kubesphere/examples-bookinfo-ratings-v1:1.16.3

busybox:1.31.1

joosthofman/wget:1.0

kubesphere/netshoot:v1.0

nginxdemos/hello:plain-text

wordpress:4.8-apache

mirrorgooglecontainers/hpa-example:latest

java:openjdk-8-jre-alpine

fluent/fluentd:v1.4.2-2.0

perl:latest

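If you find any mistakes in this article, corrections are welcome. I plan to refine it and to write a few follow-up articles on related issues, so stay tuned.

Reference: "Deploying KubeSphere v3.0.0 in an Offline Environment with KubeKey", KubeSphere developer community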
