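Imagine that the HAProxy host configured earlier, 192.168.0.9, suddenly crashes or its network card fails. The RabbitMQ cluster itself is perfectly healthy, yet every client connection is dropped, and the result is disastrous. The load-balancing layer therefore has to be just as reliable as the cluster behind it. This is where Keepalived comes in: through its health checks and resource takeover it provides high availability (two-node hot standby) and failover.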

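Keepalived uses VRRP (Virtual Router Redundancy Protocol) to implement server hot standby in software. Typically two Linux servers form a hot-standby group (a Master and a Backup). At any given time only the Master serves traffic, and it holds a shared virtual IP address, the VIP. The VIP exists only on the Master and is the address exposed to clients. If Keepalived detects that the Master is down or its service has failed, the Backup automatically takes over the VIP and becomes the new Master, and the old Master is removed from the group. When the old Master recovers, it rejoins the group and by default preempts the Master role again. This is how failover is achieved.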
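The takeover and preemption behaviour described above can be pictured with a small shell sketch. It is a deliberate simplification, not Keepalived's actual implementation: it assumes that, among the nodes currently alive, the one with the highest priority becomes Master, and it ignores VRRP's tie-breaking and timers. The function name `elect_master` and the `name:priority` argument format are made up for this illustration.

```shell
#!/bin/bash
# elect_master NAME:PRIORITY [NAME:PRIORITY ...]
# Hypothetical helper: prints the name of the live node with the highest priority.
elect_master() {
    local best_name="" best_prio=-1 entry name prio
    for entry in "$@"; do
        name=${entry%%:*}   # text before the first ":"
        prio=${entry##*:}   # text after the last ":"
        if [ "$prio" -gt "$best_prio" ]; then
            best_name=$name
            best_prio=$prio
        fi
    done
    printf '%s\n' "$best_name"
}
```

Calling `elect_master NodeA:100 NodeB:50` prints NodeA. If NodeA drops out, `elect_master NodeB:50` prints NodeB, and once NodeA is back in the list it wins again, which corresponds to the default preemption.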

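Keepalived works at layers 3, 4 and 7 of the OSI model.

At layer 3, Keepalived periodically sends an ICMP packet to each server in the hot-standby group to determine whether it has failed; a failed server is removed from the group.

At layer 4, Keepalived judges a server by the state of a TCP port, for example RabbitMQ's port 5672; if the port is unavailable, the server is removed from the group.

At layer 7, Keepalived applies a user-defined policy (usually a custom check script) to decide whether the program on a server is running properly; if not, the server is removed from the group.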
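As an illustration of the layer-4 idea, here is a minimal shell sketch of a TCP port probe. Keepalived performs such checks internally, and this function is not part of Keepalived. The name `check_tcp` and the default timeout of 2 seconds are assumptions, and the sketch relies on bash's `/dev/tcp` redirection and the coreutils `timeout` command being available.

```shell
#!/bin/bash
# check_tcp HOST PORT [TIMEOUT_SECONDS]
# Hypothetical helper: succeeds (exit status 0) if a TCP connection can be opened.
check_tcp() {
    local host=$1 port=$2 timeout_s=${3:-2}
    # A child bash opens the connection via /dev/tcp; $0 and $1 inside it are host and port.
    # timeout aborts the attempt if it hangs, e.g. when packets are silently dropped.
    timeout "$timeout_s" bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$host" "$port" 2>/dev/null
}
```

For example, `check_tcp 127.0.0.1 5672 && echo up || echo down` would report whether something is listening on RabbitMQ's default port on the local machine.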

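Installing Keepalived

First download the Keepalived source package from the official website. At the time of writing the latest version is keepalived-1.3.5.tar.gz, available at http://www.keepalived.org/download.html.

Unpack keepalived-1.3.5.tar.gz and build it as follows: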

[root@node1 ~]# tar zxvf keepalived-1.3.5.tar.gz

[root@node1 ~]# cd keepalived-1.3.5

[root@node1 keepalived-1.3.5]# ./configure --prefix=/opt/keepalived --with-init=SYSV

# Note: --with-init accepts (upstart|systemd|SYSV|SUSE|openrc); choose the value that matches your system's init system

[root@node1 keepalived-1.3.5]# make

[root@node1 keepalived-1.3.5]# make install

Next, register the installed Keepalived as a system service (take care to type the commands correctly):

# Copy the startup script to /etc/init.d/

[root@node1 ~]# cp /opt/keepalived/etc/rc.d/init.d/keepalived /etc/init.d/

[root@node1 ~]# cp /opt/keepalived/etc/sysconfig/keepalived /etc/sysconfig

[root@node1 ~]# cp /opt/keepalived/sbin/keepalived /usr/sbin/

[root@node1 ~]# chmod +x /etc/init.d/keepalived

[root@node1 ~]# chkconfig --add keepalived

[root@node1 ~]# chkconfig keepalived on

# By default Keepalived reads the configuration file /etc/keepalived/keepalived.conf

[root@node1 ~]# mkdir /etc/keepalived

[root@node1 ~]# cp /opt/keepalived/etc/keepalived/keepalived.conf /etc/keepalived/

After this, the following four commands restart, start, stop, and show the status of Keepalived, respectively:

service keepalived restart

service keepalived start

service keepalived stop

service keepalived status

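Configuration

During installation we created the /etc/keepalived directory and copied keepalived.conf into it, so Keepalived can read this default configuration file. To combine Keepalived with the HAProxy service set up earlier, /etc/keepalived/keepalived.conf has to be modified. Before doing so, let's look at what this configuration is meant to achieve.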

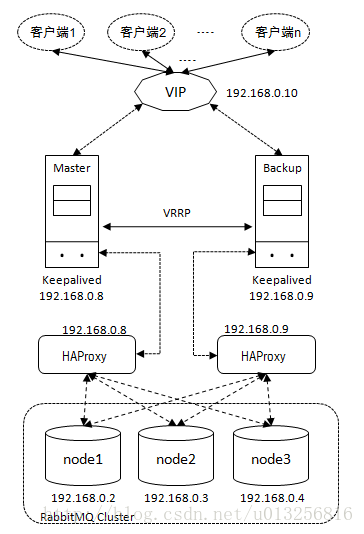

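As the figure shows, the two Keepalived servers communicate over VRRP and present a single VIP, 192.168.0.10, to the outside. Keepalived and HAProxy are deployed on the same machines, one Keepalived instance per HAProxy instance, so that Keepalived provides hot standby for HAProxy. In addition to the HAProxy at 192.168.0.9 from the previous section, a second HAProxy therefore has to be deployed, at 192.168.0.8. The full call path is: the client connects to the VIP; the Keepalived Master node routes the connection to its HAProxy; HAProxy distributes the load across the cluster nodes according to its load-balancing algorithm. Under normal conditions client connections go through the left-hand side of the figure. If the Keepalived Master node goes down, or its HAProxy goes down and cannot be recovered, the Backup is promoted to Master and client connections go through the right-hand side. For even higher reliability you can add more Keepalived nodes in the Backup role, giving one Master with several Backups. This of course raises hardware costs, so the trade-off needs careful consideration; in most cases two-node hot standby is sufficient.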

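Next, edit /etc/keepalived/keepalived.conf. The configuration on the Keepalived Master is as follows:

# Keepalived configuration file

global_defs {

router_id NodeA # Router ID; must differ between Master and Backup

}

# Custom monitoring script

vrrp_script chk_haproxy {

script "/etc/keepalived/check_haproxy.sh"

interval 5

weight 2

}

vrrp_instance VI_1 {

state MASTER # Keepalived role: MASTER for the primary server, BACKUP for the standby

interface eth0 # Network interface to monitor

virtual_router_id 1

priority 100 # Priority; the BACKUP machine must use a lower value

advert_int 1 # Check interval between Master and Backup, in seconds

authentication { # Authentication type and password

auth_type PASS

auth_pass root123

}

track_script {

chk_haproxy

}

virtual_ipaddress { # VIP address; more than one may be listed

192.168.0.10

}

}

The Backup configuration is largely the same as the Master's, with three changes: set router_id in global_defs{} to a different value, such as NodeB; set state in vrrp_instance VI_1{} to BACKUP; and set priority to a value lower than 100. Note that virtual_router_id must be identical on the Master and the Backup. An abbreviated Backup configuration: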

global_defs {

router_id NodeB

}

vrrp_script chk_haproxy {

...

}

vrrp_instance VI_1 {

state BACKUP

...

priority 50

...

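}

HAProxy may die while Keepalived keeps running normally, in which case no switch to the Backup would occur. To prevent this, a script is needed that checks the state of the HAProxy service. When HAProxy is down, the script tries to restart it; if that fails, it stops the Keepalived service so that the Backup takes over. This is the script referenced by script in vrrp_script chk_haproxy{} in the configuration above. The contents of /etc/keepalived/check_haproxy.sh are: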

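#!/bin/bash

# If no haproxy process is running, try to start it

if [ $(ps -C haproxy --no-header | wc -l) -eq 0 ];then

haproxy -f /opt/haproxy-1.7.8/haproxy.cfg

fi

# Give HAProxy a moment to come up

sleep 2

# Still not running: stop Keepalived so that the Backup takes over the VIP

if [ $(ps -C haproxy --no-header | wc -l) -eq 0 ];then

service keepalived stop

fi

With the configuration in place, start the Keepalived service on 192.168.0.8 and 192.168.0.9 with service keepalived start. Client applications can then reach the RabbitMQ service through the IP address 192.168.0.10.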
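It may help to see why the check script stops Keepalived instead of relying on `weight 2` alone. The sketch below assumes Keepalived's documented rule that a positive weight is added to the priority while the tracked script succeeds, and a negative weight is applied (lowering the priority) while it fails. The helper `effective_priority` is a made-up name used only for this arithmetic.

```shell
#!/bin/bash
# effective_priority BASE WEIGHT STATUS
# Hypothetical helper. STATUS is "ok" or "fail" (the result of the tracked script).
effective_priority() {
    local base=$1 weight=$2 status=$3
    if [ "$weight" -gt 0 ] && [ "$status" = "ok" ]; then
        # Positive weight: bonus while the check passes
        echo $((base + weight))
    elif [ "$weight" -lt 0 ] && [ "$status" = "fail" ]; then
        # Negative weight: penalty while the check fails
        echo $((base + weight))
    else
        echo "$base"
    fi
}
```

With the values from this article, a Master whose check fails has priority 100 (`effective_priority 100 2 fail`), while a healthy Backup has 52 (`effective_priority 50 2 ok`). Since 100 is still greater than 52, the weight alone would not trigger a failover here, which is presumably why the script shuts Keepalived down.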

Checking the Keepalived Status

The Keepalived log output can be viewed with tail -f /var/log/messages -n 200. The Master's startup log looks like this:

Oct 4 23:01:51 node1 Keepalived[30553]: Starting Keepalived v1.3.5 (03/19,2017), git commit v1.3.5-6-g6fa32f2

Oct 4 23:01:51 node1 Keepalived[30553]: Unable to resolve default script username 'keepalived_script' - ignoring

Oct 4 23:01:51 node1 Keepalived[30553]: Opening file '/etc/keepalived/keepalived.conf'.

Oct 4 23:01:51 node1 Keepalived[30554]: Starting Healthcheck child process, pid=30555

Oct 4 23:01:51 node1 Keepalived[30554]: Starting VRRP child process, pid=30556

Oct 4 23:01:51 node1 Keepalived_healthcheckers[30555]: Opening file '/etc/keepalived/keepalived.conf'.

Oct 4 23:01:51 node1 Keepalived_vrrp[30556]: Registering Kernel netlink reflector

Oct 4 23:01:51 node1 Keepalived_vrrp[30556]: Registering Kernel netlink command channel

Oct 4 23:01:51 node1 Keepalived_vrrp[30556]: Registering gratuitous ARP shared channel

Oct 4 23:01:51 node1 Keepalived_vrrp[30556]: Opening file '/etc/keepalived/keepalived.conf'.

Oct 4 23:01:51 node1 Keepalived_vrrp[30556]: VRRP_Instance(VI_1) removing protocol VIPs.

Oct 4 23:01:51 node1 Keepalived_vrrp[30556]: SECURITY VIOLATION - scripts are being executed but script_security not enabled.

Oct 4 23:01:51 node1 Keepalived_vrrp[30556]: Using LinkWatch kernel netlink reflector...

Oct 4 23:01:51 node1 Keepalived_vrrp[30556]: VRRP sockpool: [ifindex(2), proto(112), unicast(0), fd(10,11)]

Oct 4 23:01:51 node1 Keepalived_vrrp[30556]: VRRP_Instance(VI_1) Transition to MASTER STATE

Oct 4 23:01:52 node1 Keepalived_vrrp[30556]: VRRP_Instance(VI_1) Entering MASTER STATE

Oct 4 23:01:52 node1 Keepalived_vrrp[30556]: VRRP_Instance(VI_1) setting protocol VIPs.

Once the Master has started, the added VIP can be seen with ip add show (the line inet 192.168.0.10/32; the Backup node does not have the VIP):

[root@node1 ~]# ip add show

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc pfifo_fast state UP qlen 1000

link/ether fa:16:3e:5e:7a:f7 brd ff:ff:ff:ff:ff:ff

inet 192.168.0.8/18 brd 10.198.255.255 scope global eth0

inet 192.168.0.10/32 scope global eth0

inet6 fe80::f816:3eff:fe5e:7af7/64 scope link

valid_lft forever preferred_lft forever

Run service keepalived stop on the Master node to simulate an abnormal shutdown, and observe the Master's log:

Oct 4 22:58:32 node1 Keepalived[27609]: Stopping

Oct 4 22:58:32 node1 Keepalived_vrrp[27611]: VRRP_Instance(VI_1) sent 0 priority

Oct 4 22:58:32 node1 Keepalived_vrrp[27611]: VRRP_Instance(VI_1) removing protocol VIPs.

Oct 4 22:58:32 node1 Keepalived_healthcheckers[27610]: Stopped

Oct 4 22:58:33 node1 Keepalived_vrrp[27611]: Stopped

Oct 4 22:58:33 node1 Keepalived[27609]: Stopped Keepalived v1.3.5 (03/19,2017), git commit v1.3.5-6-g6fa32f2

Oct 4 22:58:34 node1 ntpd[1313]: Deleting interface #13 eth0, 192.168.0.10#123, interface stats: received=0, sent=0, dropped=0, active_time=532 secs

Oct 4 22:58:34 node1 ntpd[1313]: peers refreshed

The VIP on the Master disappears accordingly:

[root@node1 ~]# ip add show

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc pfifo_fast state UP qlen 1000

link/ether fa:16:3e:5e:7a:f7 brd ff:ff:ff:ff:ff:ff

inet 192.168.0.8/18 brd 10.198.255.255 scope global eth0

inet6 fe80::f816:3eff:fe5e:7af7/64 scope link

valid_lft forever preferred_lft forever

After the Master shuts down, the Backup is promoted to the new Master. Its log shows:

Oct 4 22:58:15 node2 Keepalived_vrrp[2352]: VRRP_Instance(VI_1) Transition to MASTER STATE

Oct 4 22:58:16 node2 Keepalived_vrrp[2352]: VRRP_Instance(VI_1) Entering MASTER STATE

Oct 4 22:58:16 node2 Keepalived_vrrp[2352]: VRRP_Instance(VI_1) setting protocol VIPs.

The VIP now appears on the new Master node:

[root@node2 ~]# ip add show

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc pfifo_fast state UP qlen 1000

link/ether fa:16:3e:23:ac:ec brd ff:ff:ff:ff:ff:ff

inet 192.168.0.9/18 brd 10.198.255.255 scope global eth0

inet 192.168.0.10/32 scope global eth0

inet6 fe80::f816:3eff:fe23:acec/64 scope link

valid_lft forever preferred_lft forever
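The manual check with ip add show can also be scripted. The following is a minimal sketch; the function name `holds_vip` is hypothetical, and it simply looks for an `inet <VIP>/` entry in the text it receives on standard input.

```shell
#!/bin/bash
# holds_vip VIP
# Hypothetical helper: reads `ip addr show` output on stdin and
# succeeds (exit status 0) if VIP is listed as an IPv4 address.
holds_vip() {
    local vip=$1
    # -F: match a fixed string; the trailing "/" keeps 192.168.0.10
    # from matching a longer address such as 192.168.0.100
    grep -qF "inet ${vip}/"
}
```

On a node, `ip addr show eth0 | holds_vip 192.168.0.10 && echo MASTER` would print MASTER only while that node holds the VIP.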
