文章目录

- Kubernetes

- 一、环境准备(全部执行)空间 ????????????????????????

- 1、服务器的环境准备

- 2、本地机器准备

- 3、设置主机及解析添加(全部都执行)

- 4、系统优化(全部都执行)

- 5、主机之间进行做免密操作(全部包括自己本身)

- 6、配置镜像源(选其一)

- 7、安装常用工具包(全部执行)

- 8、主机时间与系统时间同步(集群时间必须一致)

- 9、系统内核更新 (升级Linux内核为4.44之上版本)

- 10、增加命令提示安装

- 11、设置日志保存方式(此步可跳过)

- 二、IPVS安装及模块调用(全部执行)✨✨✨

- 三、安装docker(全部执行)

- 四、安装kubelet(全部执行)

- 五、部署kubernetes集群 ????????????????????????

- 1、初始化master节点(master节点执行)

- 2、配置 kubernetes 用户信息(master节点执行)

- 3、安装集群网络插件(flannel)

- 【kube-flanne.yml】

- 【重新加入集群】

- 4、加入Kubernetes Node(master点执行)

- 5、错误方案解决(没有问题就不用管 OK)

- 6、测试kubernetes集群

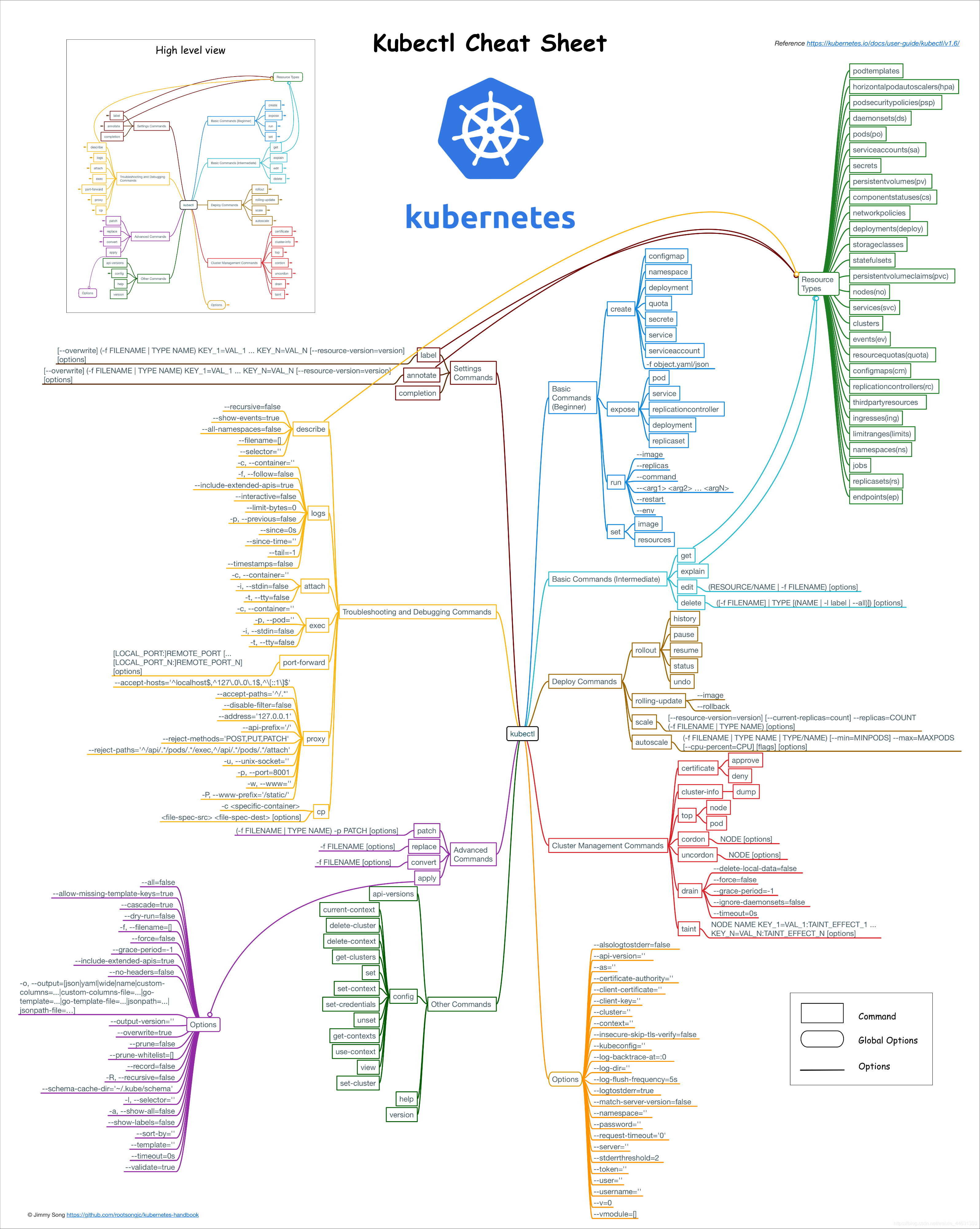

- 7、kubectl命令使用

- 【kubectl常用的指令】

- 六、部署 [Dashboard](https://kubernetes.io/docs/tasks/access-application-cluster/web-ui-dashboard/) 图形化

- 【dashboard的中文版】

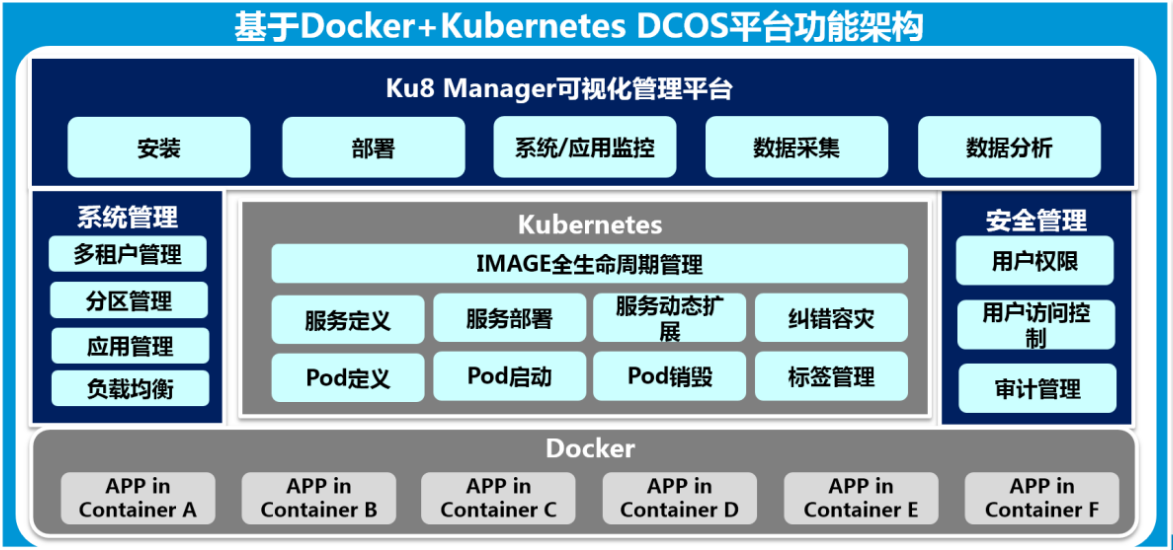

Kubernetes

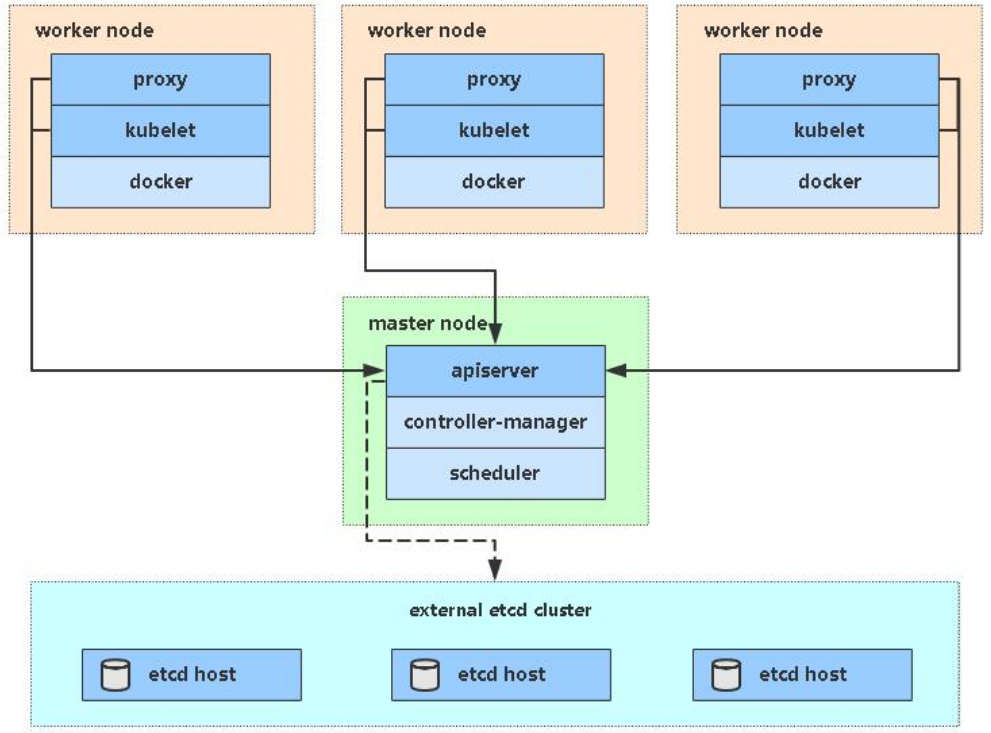

I. Environment preparation (all nodes)

1、服务器的环境准备

1》nod节点CPU核数必须是 : 大于等于2核2G ,否则k8s无法启动 ,如果不是,则在集群初始化时,后面后面增加参数: --ignore-preflight-errors=NumCPU

2》DNS网络: 最好设置为本地网络连通的DNS,否则网络不通,无法下载一些镜像

3》linux内核: linux内核必须是 4 版本以上就可以,建议最好是4.4之上的,因此必须把linux核心进行升级

4》准备3台虚拟机环境(或者3台云服务器)

k8s-m01: #此机器用来安装k8s-master的操作环境

k8s-nod01: #此机器用来安装k8s node节点的环境

k8s-nod02: #此机器用来安装k8s node节点的环境

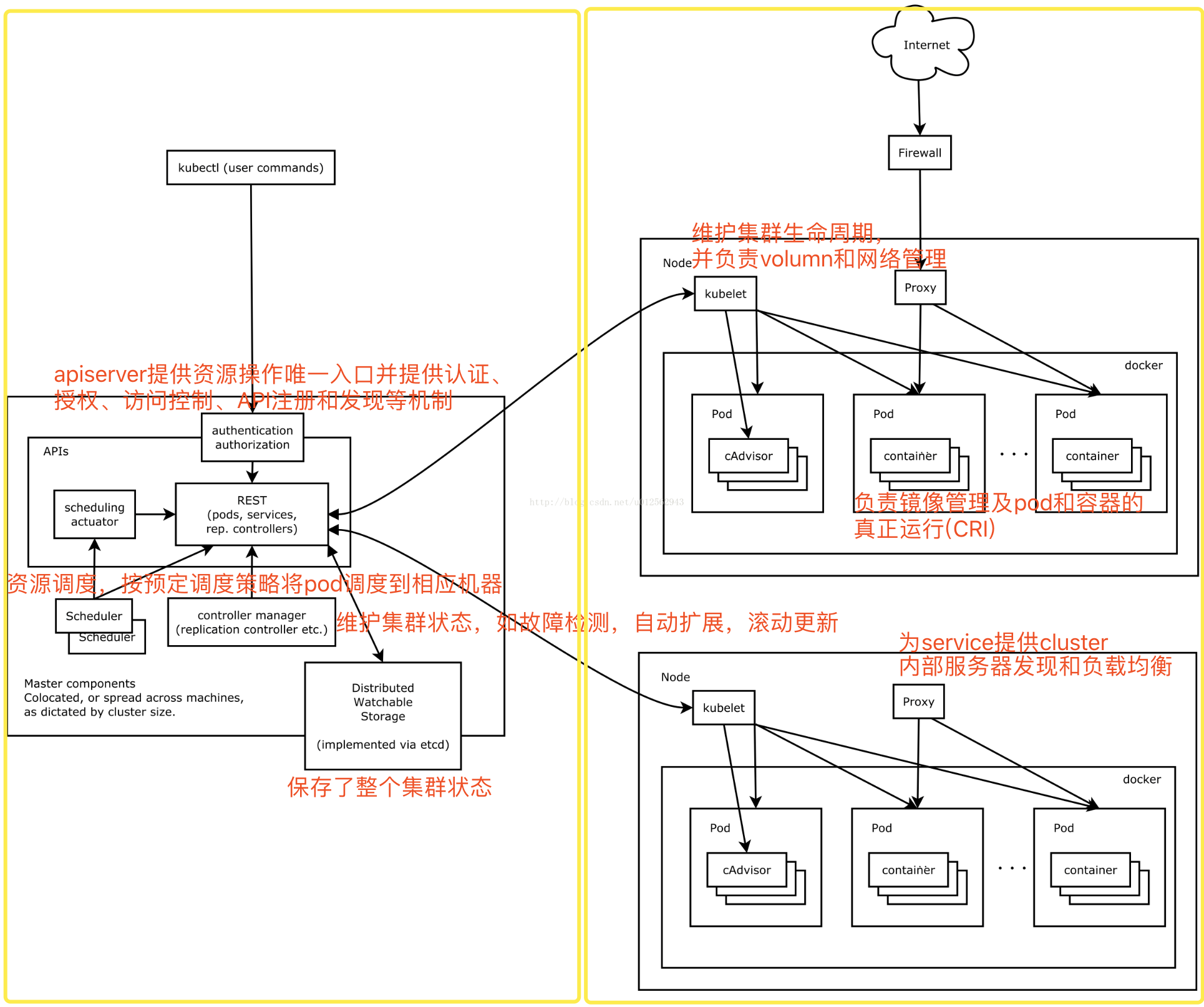

服务原理图:

2、本地机器准备

| Server | IP | Hostname |
|---|---|---|
| k8s-master | 192.168.15.55 | m01 |
| k8s-node1 | 192.168.15.56 | nod01 |
| k8s-node2 | 192.168.15.57 | nod02 |

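3. Set hostnames and add hosts entries (all nodes)

# Set the hostname (run the matching command on each machine)

[root@m01 ~]# hostnamectl set-hostname m01

[root@nod01 ~]# hostnamectl set-hostname nod01

[root@nod02 ~]# hostnamectl set-hostname nod02

# Add hosts entries (run on all three machines)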

[root@m01 ~]# cat >> /etc/hosts << EOF

192.168.15.55 m01

192.168.15.56 nod01

192.168.15.57 nod02

EOF

# Verify the entries (in case name resolution misbehaves)

[root@m01 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.15.55 m01

192.168.15.56 nod01

192.168.15.57 nod02
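Re-running the `cat >> /etc/hosts` step appends the same three lines again. The sketch below, in bash, shows one way to make the step repeatable; the function name `add_host_entry` and its optional file argument are illustrative and not part of the original procedure.

```shell
#!/usr/bin/env bash
# add_host_entry IP NAME [FILE]
# Appends "IP NAME" to FILE (default /etc/hosts) only if NAME is not
# already mapped on a non-comment line. Hypothetical helper name.
add_host_entry() {
    local ip="$1" name="$2" file="${3:-/etc/hosts}"
    # Look for NAME as a whole field after the address column.
    if awk -v n="$name" '
        !/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }' "$file" 2>/dev/null; then
        return 0    # already present, nothing to do
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}
```

Usage on each machine would be `add_host_entry 192.168.15.55 m01`, and likewise for nod01 and nod02.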

4. System tuning (all nodes)

1) # Disable the firewall

[root@m01 ~]# systemctl disable --now firewalld

[root@nod01 ~]# systemctl disable --now firewalld

[root@nod02 ~]# systemctl disable --now firewalld

2) # Disable SELinux

[root@m01 ~]# setenforce 0

setenforce: SELinux is disabled

[root@nod01 ~]# setenforce 0

setenforce: SELinux is disabled

[root@nod02 ~]# setenforce 0

setenforce: SELinux is disabled

3) # Disable the swap partition

(turn swap off for the current boot)

[root@m01 ~]# swapoff -a

(disable it permanently by commenting out the swap entry in /etc/fstab)

[root@m01 ~]# sed -i.bak '/swap/s/^/#/' /etc/fstab

(tell kubelet, via /etc/sysconfig/kubelet, not to fail if swap is still on)

[root@m01 ~]# echo 'KUBELET_EXTRA_ARGS="--fail-swap-on=false"' > /etc/sysconfig/kubelet

4) # Confirm that swap is off

[root@m01 ~]# free -h

total used free shared buff/cache available

Mem: 1.9G 1.0G 77M 9.5M 843M 796M

Swap: 0B 0B 0B
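The `free -h` check above is read by eye. As a minimal sketch for scripting the same check, the bash function below inspects `/proc/swaps`, whose first line is a header and whose remaining lines are active swap areas; the name `swap_is_off` and the optional file argument are assumptions made for this example.

```shell
#!/usr/bin/env bash
# swap_is_off [FILE]
# Exit status 0 if FILE (default /proc/swaps) lists no active swap area.
swap_is_off() {
    local file="${1:-/proc/swaps}"
    # Line 1 is the column header; any later non-empty line is a swap area.
    awk 'NR > 1 && NF > 0 { n++ } END { exit (n > 0 ? 1 : 0) }' "$file"
}
```

A provisioning script could then run `swap_is_off || swapoff -a` on every node.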

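5. Passwordless SSH between hosts (all nodes, including each host to itself)

# Set up key-based login (cluster hosts should reach each other without a password prompt)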

[root@m01 ~]# ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

/root/.ssh/id_rsa already exists.

.......

....

[root@m01 ~]# for i in m01 nod01 nod02; do ssh-copy-id -i ~/.ssh/id_rsa.pub root@$i; done

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: WARNING: All keys were skipped because they already exist on the remote system.

(if you think this is a mistake, you may want to use -f option)

.........

......

# Test that passwordless login works

[root@m01 ~]# ssh m01 # connect to m01 by hostname

Last login: Sun Aug 1 15:40:54 2021 from 192.168.15.1

[root@m01 ~]# exit

logout

Connection to m01 closed.

[root@m01 ~]# ssh nod01 # connect to nod01 by hostname

Last login: Sun Aug 1 15:40:56 2021 from 192.168.15.1

[root@nod01 ~]# exit

logout

Connection to nod01 closed.

[root@m01 ~]# ssh nod02 # connect to nod02 by hostname

Last login: Sun Aug 1 15:40:58 2021 from 192.168.15.1

[root@nod02 ~]# exit

logout

Connection to nod02 closed.

6. Configure a yum mirror (choose one)

# Add the Alibaba Cloud mirror (default choice)

[root@m01 ~]# curl -o /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

# Or add the Huawei Cloud mirror

[root@m01 ~]# curl -o /etc/yum.repos.d/CentOS-Base.repo https://repo.huaweicloud.com/repository/conf/CentOS-7-reg.repo

[root@m01 ~]# yum clean all

已加载插件:fastestmirror

正在清理软件源: base docker-ce-stable elrepo epel extras kubernetes updates

Cleaning up list of fastest mirrors

Other repos take up 11 M of disk space (use --verbose for details)

[root@m01 ~]# yum makecache

7. Install common tools (all nodes)

1) # Update the system (kernel packages excluded)

[root@m01 ~]# yum update -y --exclude=kernel*

已加载插件:fastestmirror

Loading mirror speeds from cached hostfile

epel/x86_64/metalink | 8.9 kB 0

..........

......

2) # Install the common tool packages

[root@m01 ~]# yum install wget expect vim net-tools ntp bash-completion ipvsadm ipset jq iptables conntrack sysstat libseccomp -y

已加载插件:fastestmirror

Loading mirror speeds from cached hostfile

* base: mirrors.aliyun.com

* elrepo: mirror-hk.koddos.net

* epel: mirror.sjtu.edu.cn

* extras: mirrors.aliyun.com

* updates: mirrors.aliyun.com

........

....

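8. Synchronize system and hardware clocks (time must match across the cluster)

1) # Synchronize every node against the same NTP server (method 1)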

[root@m01 ~]# yum install ntpdate -y

[root@m01 ~]# ntpdate ntp1.aliyun.com

1 Aug 17:32:28 ntpdate[55595]: adjust time server 120.25.115.20 offset 0.045773 sec

[root@m01 ~]# hwclock --systohc

[root@m01 ~]# hwclock

2021年08月01日 星期日 17时34分05秒 -0.428788 秒

[root@m01 ~]# date

2021年 08月 01日 星期日 17:34:20 CST

2) # Set the system time zone to Asia/Shanghai (method 2)

[root@m01 ~]# timedatectl set-timezone Asia/Shanghai

# Keep the hardware clock in UTC

[root@m01 ~]# timedatectl set-local-rtc 0

# Restart the services that depend on the system time

[root@m01 ~]# systemctl restart rsyslog

[root@m01 ~]# systemctl restart crond

9. Upgrade the Linux kernel (to 4.4 or later)

Docker places fairly high demands on the kernel; 4.4 or later is recommended.

[Repository used for the kernel]

1) # Get the packages (choose one of the two options below)

✨✨

[root@m01 ~]# wget https://elrepo.org/linux/kernel/el7/x86_64/RPMS/kernel-lt-5.4.136-1.el7.elrepo.x86_64.rpm

[root@m01 ~]# wget https://elrepo.org/linux/kernel/el7/x86_64/RPMS/kernel-lt-devel-4.4.245-1.el7.elrepo.x86_64.rpm

✨✨

[root@m01 ~]# rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-4.el7.elrepo.noarch.rpm

获取http://www.elrepo.org/elrepo-release-7.0-4.el7.elrepo.noarch.rpm

准备中... ################################# [100%]

......

...

2) # Install the kernel

# (use kernel-lt* instead of kernel-lt to install every kernel-lt package, including the devel package)

[root@m01 ~]# yum --enablerepo=elrepo-kernel install -y kernel-lt

已加载插件:fastestmirror

Loading mirror speeds from cached hostfile

* base: mirrors.aliyun.com

* elrepo: mirror-hk.koddos.net

* elrepo-kernel: mirror-hk.koddos.net

* epel: mirror.sjtu.edu.cn

* extras: mirrors.aliyun.com

.......

...

3) # List all installed kernel versions

[root@m01 ~]# cat /boot/grub2/grub.cfg | grep menuentry

if [ x"${feature_menuentry_id}" = xy ]; then

menuentry_id_option="--id"

menuentry_id_option=""

export menuentry_id_option

menuentry 'CentOS Linux (5.4.137-1.el7.elrepo.x86_64) 7 (Core)' --class centos --class gnu-linux --class gnu --class os --unrestricted $menuentry_id_option 'gnulinux-5.4.137-1.el7.elrepo.x86_64-advanced-507fc260-78cc-4ce0-8310-af00334de578' {

menuentry 'CentOS Linux (3.10.0-1160.36.2.el7.x86_64) 7 (Core)' --class centos --class gnu-linux --class gnu --class os --unrestricted $menuentry_id_option 'gnulinux-3.10.0-1160.36.2.el7.x86_64-advanced-507fc260-78cc-4ce0-8310-af00334de578' {

menuentry 'CentOS Linux (3.10.0-693.el7.x86_64) 7 (Core)' --class centos --class gnu-linux --class gnu --class os --unrestricted $menuentry_id_option 'gnulinux-3.10.0-693.el7.x86_64-advanced-507fc260-78cc-4ce0-8310-af00334de578' {

menuentry 'CentOS Linux (0-rescue-b9c18819be20424b8f84a2cad6ddf12e) 7 (Core)' --class centos --class gnu-linux --class gnu --class os --unrestricted $menuentry_id_option 'gnulinux-0-rescue-b9c18819be20424b8f84a2cad6ddf12e-advanced-507fc260-78cc-4ce0-8310-af00334de578' {

4) # Show the kernel entry that currently boots by default

[root@m01 ~]# grub2-editenv list

saved_entry=CentOS Linux (3.10.0-693.el7.x86_64) 7 (Core)

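5) # Change the default boot entry so the machine starts from the new kernel

[root@m01 ~]# grub2-set-default 'CentOS Linux (5.4.137-1.el7.elrepo.x86_64) 7 (Core)'

# Note: the new kernel only takes effect after the server is rebooted

6) # Alternatively, select the first menu entry and regenerate the grub configuration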

[root@nod01 ~]# grub2-set-default 0 && grub2-mkconfig -o /etc/grub2.cfg

Generating grub configuration file ...

Found linux image: /boot/vmlinuz-5.4.137-1.el7.elrepo.x86_64

Found initrd image: /boot/initramfs-5.4.137-1.el7.elrepo.x86_64.img

Found linux image: /boot/vmlinuz-3.10.0-1160.36.2.el7.x86_64

Found initrd image: /boot/initramfs-3.10.0-1160.36.2.el7.x86_64.img

Found linux image: /boot/vmlinuz-3.10.0-693.el7.x86_64

Found initrd image: /boot/initramfs-3.10.0-693.el7.x86_64.img

Found linux image: /boot/vmlinuz-0-rescue-b9c18819be20424b8f84a2cad6ddf12e

Found initrd image: /boot/initramfs-0-rescue-b9c18819be20424b8f84a2cad6ddf12e.img

done

# Show the kernel that will boot by default

[root@m01 ~]# grubby --default-kernel

7) # Reboot, then check the running kernel

[root@nod01 ~]# reboot

[root@nod01 ~]# uname -r

5.4.137-1.el7.elrepo.x86_64
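The section asks for a kernel of 4.4 or later on every node. A small bash sketch of that comparison follows; it parses the major and minor numbers out of a release string such as the `uname -r` output above. The function name `kernel_at_least` is hypothetical.

```shell
#!/usr/bin/env bash
# kernel_at_least RELEASE MAJOR MINOR
# Exit status 0 if RELEASE (e.g. the output of `uname -r`) is >= MAJOR.MINOR.
kernel_at_least() {
    local release="$1" want_major="$2" want_minor="$3"
    local major="${release%%.*}"      # text before the first dot
    local rest="${release#*.}"        # text after the first dot
    local minor="${rest%%.*}"         # text before the next dot
    minor="${minor%%[!0-9]*}"         # drop any non-numeric suffix
    if [ "$major" -gt "$want_major" ]; then
        return 0
    fi
    [ "$major" -eq "$want_major" ] && [ "$minor" -ge "$want_minor" ]
}
```

On a node, `kernel_at_least "$(uname -r)" 4 4 || echo "kernel too old"` would flag a machine that still boots the stock 3.10 kernel.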

10. Install command completion

[root@m01 ~]# yum install -y bash-completion

已加载插件:fastestmirror

Loading mirror speeds from cached hostfile

* base: mirrors.aliyun.com

* elrepo: mirrors.tuna.tsinghua.edu.cn

* epel: mirrors.bfsu.edu.cn

* extras: mirrors.aliyun.com

* updates: mirrors.aliyun.com

[root@m01 ~]# source /usr/share/bash-completion/bash_completion

# The two kubectl commands below only work once kubectl has been installed (section IV)

[root@m01 ~]# source <(kubectl completion bash)

[root@m01 ~]# echo "source <(kubectl completion bash)" >> ~/.bashrc

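11. Configure persistent journald logging (optional)

1) # Create the directory that stores the journal

[root@m01 ~]# mkdir -p /var/log/journal

2) # Create the directory for the configuration file

[root@m01 ~]# mkdir -p /etc/systemd/journald.conf.d

3) # Create the configuration file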

[root@m01 ~]# cat > /etc/systemd/journald.conf.d/99-prophet.conf << EOF

[Journal]

Storage=persistent

Compress=yes

SyncIntervalSec=5m

RateLimitInterval=30s

RateLimitBurst=1000

SystemMaxUse=10G

SystemMaxFileSize=200M

MaxRetentionSec=2week

ForwardToSyslog=no

EOF

4) # Restart systemd-journald to apply the configuration

[root@m01 ~]# systemctl restart systemd-journald

II. Install IPVS and load its kernel modules (all nodes) ✨✨✨

1) # Install the IPVS tools

[root@nod01 ~]# yum install -y conntrack-tools ipvsadm ipset conntrack libseccomp

已加载插件:fastestmirror

Loading mirror speeds from cached hostfile

* base: mirrors.aliyun.com

* elrepo: mirror-hk.koddos.net

* epel: mirror.sjtu.edu.cn

* extras: mirrors.aliyun.com

* updates: mirrors.aliyun.com

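2) # Load the IPVS modules (the quoted 'EOF' keeps the shell from expanding ${...} and $? while the file is written)

[root@nod01 ~]# cat > /etc/sysconfig/modules/ipvs.modules << 'EOF'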

#!/bin/bash

ipvs_modules="ip_vs ip_vs_lc ip_vs_wlc ip_vs_rr ip_vs_wrr ip_vs_lblc ip_vs_lblcr ip_vs_dh ip_vs_sh ip_vs_fo ip_vs_nq ip_vs_sed ip_vs_ftp nf_conntrack"

for kernel_module in ${ipvs_modules}; do

/sbin/modinfo -F filename ${kernel_module} > /dev/null 2>&1

if [ $? -eq 0 ]; then

/sbin/modprobe ${kernel_module}

fi

done

EOF

3) # Make the module script executable and run it

[root@m01 ~]# chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules

# Check that the modules are loaded

[root@nod01 ~]# lsmod | grep ip_vs

ip_vs_ftp 16384 0

nf_nat 40960 5 ip6table_nat,xt_nat,iptable_nat,xt_MASQUERADE,ip_vs_ftp

ip_vs_sed 16384 0

ip_vs_nq 16384 0

ip_vs_fo 16384 0

ip_vs_sh 16384 0

ip_vs_dh 16384 0

ip_vs_lblcr 16384 0

ip_vs_lblc 16384 0

ip_vs_wrr 16384 0

ip_vs_rr 16384 0

ip_vs_wlc 16384 0

ip_vs_lc 16384 0

ip_vs 155648 25 ip_vs_wlc,ip_vs_rr,ip_vs_dh,ip_vs_lblcr,ip_vs_sh,ip_vs_fo,ip_vs_nq,ip_vs_lblc,ip_vs_wrr,ip_vs_lc,ip_vs_sed,ip_vs_ftp

nf_conntrack 147456 6 xt_conntrack,nf_nat,xt_nat,nf_conntrack_netlink,xt_MASQUERADE,ip_vs

nf_defrag_ipv6 24576 2 nf_conntrack,ip_vs

libcrc32c 16384 5 nf_conntrack,nf_nat,btrfs,xfs,ip_vs

4) # Set the kernel parameters

[root@m01 ~]# cat > /etc/sysctl.d/k8s.conf << EOF

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

fs.may_detach_mounts = 1

vm.overcommit_memory=1

vm.panic_on_oom=0

fs.inotify.max_user_watches=89100

fs.file-max=52706963

fs.nr_open=52706963

net.ipv4.tcp_keepalive_time = 600

net.ipv4.tcp_keepalive_probes = 3

net.ipv4.tcp_keepalive_intvl = 15

net.ipv4.tcp_max_tw_buckets = 36000

net.ipv4.tcp_tw_reuse = 1

net.ipv4.tcp_max_orphans = 327680

net.ipv4.tcp_orphan_retries = 3

net.ipv4.tcp_syncookies = 1

net.ipv4.tcp_max_syn_backlog = 16384

net.netfilter.nf_conntrack_max = 65536

net.ipv4.tcp_timestamps = 0

net.core.somaxconn = 16384

EOF

5) # Apply the new kernel parameters immediately

[root@nod01 ~]# sysctl --system

* Applying /usr/lib/sysctl.d/00-system.conf ...

net.bridge.bridge-nf-call-ip6tables = 0

net.bridge.bridge-nf-call-iptables = 0

net.bridge.bridge-nf-call-arptables = 0

* Applying /usr/lib/sysctl.d/10-default-yama-scope.conf ...

* Applying /usr/lib/sysctl.d/50-default.conf ...

kernel.sysrq = 16

kernel.core_uses_pid = 1

kernel.kptr_restrict = 1

net.ipv4.conf.default.rp_filter = 1

net.ipv4.conf.all.rp_filter = 1

net.ipv4.conf.default.accept_source_route = 0

net.ipv4.conf.all.accept_source_route = 0

net.ipv4.conf.default.promote_secondaries = 1

net.ipv4.conf.all.promote_secondaries = 1

fs.protected_hardlinks = 1

fs.protected_symlinks = 1

* Applying /etc/sysctl.d/99-sysctl.conf ...

* Applying /etc/sysctl.d/k8s.conf ...

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

vm.overcommit_memory = 1

vm.panic_on_oom = 0

fs.inotify.max_user_watches = 89100

fs.file-max = 52706963

fs.nr_open = 52706963

net.ipv4.tcp_keepalive_time = 600

net.ipv4.tcp_keepalive_intvl = 15

net.ipv4.tcp_tw_reuse = 1

net.ipv4.tcp_orphan_retries = 3

net.ipv4.tcp_syncookies = 1

net.ipv4.tcp_max_syn_backlog = 16384

net.ipv4.tcp_max_syn_backlog = 16384

net.core.somaxconn = 16384

* Applying /etc/sysctl.conf ...
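`sysctl --system` skips keys it does not recognize, so a misspelled name in k8s.conf simply never shows up in the output above. The bash sketch below is one way to catch that: it maps each key to its path under `/proc/sys` and reports the ones that do not exist. The function name `check_sysctl_keys` and the optional root argument are assumptions for illustration, and keys that contain dots inside an interface name are not handled.

```shell
#!/usr/bin/env bash
# check_sysctl_keys CONF [PROC_ROOT]
# Prints every key in CONF with no matching file under PROC_ROOT
# (default /proc/sys). Exit status 1 if any key is unknown.
check_sysctl_keys() {
    local conf="$1" root="${2:-/proc/sys}" line key status=0
    while IFS= read -r line || [ -n "$line" ]; do
        line="${line#"${line%%[![:space:]]*}"}"    # trim leading whitespace
        case "$line" in
            ''|'#'*|';'*) continue ;;              # blank line or comment
        esac
        key="${line%%=*}"                          # text left of '='
        key="$(printf '%s' "$key" | tr -d '[:space:]')"
        [ -n "$key" ] || continue
        # net.ipv4.ip_forward -> $root/net/ipv4/ip_forward
        if [ ! -e "$root/${key//.//}" ]; then
            printf 'unknown key: %s\n' "$key"
            status=1
        fi
    done < "$conf"
    return "$status"
}
```

Running `check_sysctl_keys /etc/sysctl.d/k8s.conf` after the modules in step 2 are loaded lists the entries the running kernel does not know.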

III. Install Docker (all nodes)

1) # Remove any previously installed Docker (skip if Docker was never installed)

[root@m01 ~]# sudo yum remove docker docker-common docker-selinux docker-engine

2) # Install the packages Docker depends on (can be skipped if already installed)

[root@nod01 ~]# sudo yum install -y yum-utils device-mapper-persistent-data lvm2

已加载插件:fastestmirror

Loading mirror speeds from cached hostfile

* base: mirrors.aliyun.com

* elrepo: mirror-hk.koddos.net

* epel: mirror.sjtu.edu.cn

* extras: mirrors.aliyun.com

* updates: mirrors.aliyun.com

软件包 yum-utils-1.1.31-54.el7_8.noarch 已安装并且是最新版本

··········

......

3) # Add the Docker yum repository

(add the Docker repository, here from the Alibaba Cloud mirror)

[root@nod01 ~]# yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

(alternatively, use the Huawei Cloud mirror)

[root@nod01 ~]# wget -O /etc/yum.repos.d/docker-ce.repo https://repo.huaweicloud.com/docker-ce/linux/centos/docker-ce.repo

--2021-08-01 18:06:21-- https://repo.huaweicloud.com/docker-ce/linux/centos/docker-ce.repo

正在解析主机 repo.huaweicloud.com (repo.huaweicloud.com)... 218.92.219.17, 58.222.56.24, 117.91.188.35, ...

正在连接 repo.huaweicloud.com (repo.huaweicloud.com)|218.92.219.17|:443... 已连接。

已发出 HTTP 请求,正在等待回应... 200 OK

长度:1919 (1.9K) [application/octet-stream]

正在保存至: “/etc/yum.repos.d/docker-ce.repo”

100%[=====================================================================================================>] 1,919 --.-K/s 用时 0s

2021-08-01 18:06:21 (612 MB/s) - 已保存 “/etc/yum.repos.d/docker-ce.repo” [1919/1919])

4) # Install Docker

[root@nod01 ~]# yum install docker-ce -y

已加载插件:fastestmirror

Loading mirror speeds from cached hostfile

* base: mirrors.aliyun.com

* elrepo: mirrors.tuna.tsinghua.edu.cn

* epel: mirror.sjtu.edu.cn

* extras: mirrors.aliyun.com

* updates: mirrors.aliyun.com

..........

....

5) # Configure a registry mirror to speed up image pulls

[root@m01 ~]# mkdir -p /etc/docker

[root@m01 ~]# cat > /etc/docker/daemon.json << EOF

{

"registry-mirrors": ["https://hahexyip.mirror.aliyuncs.com"]

}

EOF

6) # Start Docker and enable it at boot

[root@m01 ~]# systemctl enable docker && systemctl start docker

7) # Show Docker details, which also confirms that the daemon is running

[root@nod01 ~]# docker info

Client:

Context: default

Debug Mode: false

Plugins:

app: Docker App (Docker Inc., v0.9.1-beta3)

buildx: Build with BuildKit (Docker Inc., v0.5.1-docker)

scan: Docker Scan (Docker Inc., v0.8.0)

Server:

Containers: 7

Running: 6

........

...

IV. Install kubelet (all nodes)

1) # Add the Kubernetes yum repository

[root@nod01 ~]# cat > /etc/yum.repos.d/kubernetes.repo << EOF

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

2) # Install kubeadm, kubelet and kubectl (releases are frequent, so pin the version)

# Without a version, yum installs the latest release: yum install -y kubelet kubeadm kubectl

[root@nod01 ~]# yum install -y kubelet-1.21.2 kubeadm-1.21.2 kubectl-1.21.2

已加载插件:fastestmirror

Loading mirror speeds from cached hostfile

* base: mirrors.aliyun.com

* elrepo: mirrors.tuna.tsinghua.edu.cn

* epel: mirror.sjtu.edu.cn

* extras: mirrors.aliyun.com

* updates: mirrors.aliyun.com

.........

.....

3) # Start kubelet and enable it at boot

[root@m01 ~]# systemctl enable --now kubelet

V. Deploy the Kubernetes cluster


1. Initialize the master node (master only)

1) # Initialize the master (method 1)

[root@m01 ~]# kubectl version # check the installed version (optional)

Client Version: version.Info{Major:"1", Minor:"21", GitVersion:"v1.21.2", GitCommit:"ca643a4d1f7bfe34773c74f79527be4afd95bf39", GitTreeState:"clean", BuildDate:"2021-07-15T21:04:39Z", GoVersion:"go1.16.6", Compiler:"gc", Platform:"linux/amd64"}

Server Version: version.Info{Major:"1", Minor:"21", GitVersion:"v1.21.2", GitCommit:"092fbfbf53427de67cac1e9fa54aaa09a28371d7", GitTreeState:"clean", BuildDate:"2021-06-16T12:53:14Z", GoVersion:"go1.16.5", Compiler:"gc", Platform:"linux/amd64"}

# Initialize the master. The flags are:
#   --apiserver-advertise-address   the master's IP address
#   --image-repository              registry the control-plane images are pulled from
#   --kubernetes-version            version to install; defaults to the latest if omitted
# Append --ignore-preflight-errors=all only if the preflight checks must be bypassed.

[root@m01 ~]# kubeadm init \
--apiserver-advertise-address=192.168.15.55 \
--image-repository registry.aliyuncs.com/google_containers/k8sos \
--kubernetes-version v1.21.2 \
--service-cidr=10.96.0.0/12 \
--pod-network-cidr=10.244.0.0/16

ps: the images can be pulled manually beforehand: kubeadm config images pull --image-repository=registry.cn-hangzhou.aliyuncs.com/google_containers

2) # Initialize the master (method 2)

[root@m01 ~]# vi kubeadm.conf

apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.21.2
imageRepository: registry.aliyuncs.com/google_containers
networking:
  podSubnet: 10.244.0.0/16
  serviceSubnet: 10.96.0.0/12

# Initialize from the configuration file

[root@m01 ~]# kubeadm init --config kubeadm.conf --ignore-preflight-errors=all

3) # List the downloaded images

[root@m01 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

nginx latest 08b152afcfae 10 days ago 133MB

registry.cn-hangzhou.aliyuncs.com/k8sos/kube-proxy v1.21.2 adb2816ea823 2 weeks ago 103MB

registry.cn-hangzhou.aliyuncs.com/k8sos/kube-apiserver v1.21.2 106ff58d4308 6 weeks ago 126MB

registry.cn-hangzhou.aliyuncs.com/k8sos/kube-scheduler v1.21.2 f917b8c8f55b 6 weeks ago 50.6MB

registry.cn-hangzhou.aliyuncs.com/k8sos/kube-controller-manager v1.21.2 ae24db9aa2cc 6 weeks ago 120MB

quay.io/coreos/flannel v0.14.0 8522d622299c 2 months ago 67.9MB

registry.cn-hangzhou.aliyuncs.com/k8sos/pause 3.4.1 0f8457a4c2ec 6 months ago 683kB

registry.cn-hangzhou.aliyuncs.com/k8sos/coredns v1.8.0 7916bcd0fd70 9 months ago 42.5MB

registry.cn-hangzhou.aliyuncs.com/k8sos/etcd 3.4.13-0 8855aefc3b26 11 months ago 253MB

--------------------------------------------------------------------------------------------------------------------------------------

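# Flag reference:

--apiserver-advertise-address # address the API server advertises to the cluster

--image-repository # the default registry k8s.gcr.io is unreachable from mainland China, so an Alibaba Cloud mirror is specified here

--kubernetes-version # Kubernetes version; must match the installed packages

--service-cidr # virtual network for Services, the stable entry point to Pods

--pod-network-cidr # Pod network; must match the network in the CNI manifest deployed below

----------------------------------------------------------------------------------------------------------------------------------

# Note: on an under-provisioned machine, append --ignore-preflight-errors=NumCPU to the command above

ps: if initialization fails, reset kubeadm with kubeadm reset and try again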
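The Service network (10.96.0.0/12) and the Pod network (10.244.0.0/16) should not overlap each other. A minimal bash sketch of that check is shown below, using only integer arithmetic; the function names `ip_to_int`, `cidr_range`, and `cidrs_overlap` are hypothetical helpers, and octets with leading zeros are not supported.

```shell
#!/usr/bin/env bash
# ip_to_int A.B.C.D -> prints the address as an integer
ip_to_int() {
    local a b c d
    IFS=. read -r a b c d <<< "$1"
    echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# cidr_range ADDR/BITS -> prints "first last" as integers
cidr_range() {
    local ip="${1%/*}" bits="${1#*/}" base mask
    base=$(ip_to_int "$ip")
    mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
    echo "$(( base & mask )) $(( (base & mask) | (~mask & 0xFFFFFFFF) ))"
}

# cidrs_overlap CIDR1 CIDR2 -> exit status 0 if the ranges share an address
cidrs_overlap() {
    local s1 e1 s2 e2
    read -r s1 e1 <<< "$(cidr_range "$1")"
    read -r s2 e2 <<< "$(cidr_range "$2")"
    [ "$s1" -le "$e2" ] && [ "$s2" -le "$e1" ]
}
```

For example, `cidrs_overlap 10.96.0.0/12 10.244.0.0/16 && echo "ranges overlap"` prints nothing for the values used in this guide.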

2. Configure kubectl credentials (master only)

# Set up the cluster credentials file for kubectl

[root@m01 ~]# mkdir -p $HOME/.kube

[root@m01 ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@m01 ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

ps: as root you can instead run export KUBECONFIG=/etc/kubernetes/admin.conf, but it only lasts for the current shell session and is not recommended

# List the current nodes

[root@m01 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

m01 Ready control-plane,master 10m v1.21.3

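3. Install the cluster network plugin (flannel)

Kubernetes relies on a third-party network plugin to provide its networking.

Several plugins are available; common ones are flannel, calico, and canal (flannel + calico). They all provide the same basic function: an IP network across the nodes.

1) # Download the manifest, then apply it (method 1)

[root@m01 ~]# wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

[root@m01 ~]# kubectl apply -f kube-flannel.yml # deploy the cluster network from the file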

Warning: policy/v1beta1 PodSecurityPolicy is deprecated in v1.21+, unavailable in v1.25+

podsecuritypolicy.policy/psp.flannel.unprivileged configured

clusterrole.rbac.authorization.k8s.io/flannel unchanged

clusterrolebinding.rbac.authorization.k8s.io/flannel unchanged

serviceaccount/flannel unchanged

configmap/kube-flannel-cfg unchanged

daemonset.apps/kube-flannel-ds unchanged

2) # Apply the manifest straight from its URL (method 2)

[root@m01 ~]# kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

Warning: policy/v1beta1 PodSecurityPolicy is deprecated in v1.21+, unavailable in v1.25+

podsecuritypolicy.policy/psp.flannel.unprivileged configured

clusterrole.rbac.authorization.k8s.io/flannel unchanged

clusterrolebinding.rbac.authorization.k8s.io/flannel unchanged

serviceaccount/flannel unchanged

configmap/kube-flannel-cfg unchanged

daemonset.apps/kube-flannel-ds unchanged

3) # Check the cluster status

[root@m01 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

m01 Ready control-plane,master 10m v1.21.3

[kube-flannel.yml]

---
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: psp.flannel.unprivileged
  annotations:
    seccomp.security.alpha.kubernetes.io/allowedProfileNames: docker/default
    seccomp.security.alpha.kubernetes.io/defaultProfileName: docker/default
    apparmor.security.beta.kubernetes.io/allowedProfileNames: runtime/default
    apparmor.security.beta.kubernetes.io/defaultProfileName: runtime/default
spec:
  privileged: false
  volumes:
  - configMap
  - secret
  - emptyDir
  - hostPath
  allowedHostPaths:
  - pathPrefix: "/etc/cni/net.d"
  - pathPrefix: "/etc/kube-flannel"
  - pathPrefix: "/run/flannel"
  readOnlyRootFilesystem: false
  # Users and groups
  runAsUser:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  fsGroup:
    rule: RunAsAny
  # Privilege Escalation
  allowPrivilegeEscalation: false
  defaultAllowPrivilegeEscalation: false
  # Capabilities
  allowedCapabilities: ['NET_ADMIN', 'NET_RAW']
  defaultAddCapabilities: []
  requiredDropCapabilities: []
  # Host namespaces
  hostPID: false
  hostIPC: false
  hostNetwork: true
  hostPorts:
  - min: 0
    max: 65535
  # SELinux
  seLinux:
    # SELinux is unused in CaaSP
    rule: 'RunAsAny'
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
rules:
- apiGroups: ['extensions']
  resources: ['podsecuritypolicies']
  verbs: ['use']
  resourceNames: ['psp.flannel.unprivileged']
- apiGroups:
  - ""
  resources:
  - pods
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes/status
  verbs:
  - patch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: flannel
  namespace: kube-system
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-system
  labels:
    tier: node
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/os
                operator: In
                values:
                - linux
      hostNetwork: true
      priorityClassName: system-node-critical
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: registry.cn-hangzhou.aliyuncs.com/alvinos/flanned:v0.13.1-rc1
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: registry.cn-hangzhou.aliyuncs.com/alvinos/flanned:v0.13.1-rc1
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN", "NET_RAW"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
      - name: run
        hostPath:
          path: /run/flannel
      - name: cni
        hostPath:
          path: /etc/cni/net.d
      - name: flannel-cfg
        configMap:
          name: kube-flannel-cfg

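[Rejoining the cluster]

1) # Create a token:

Method 1: generate a token and print the complete join command in one step

[root@m01 ~]# kubeadm token create --print-join-command

Method 2: create the token, then look up the remaining values by hand

[root@m01 ~]# kubeadm token create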

[root@m01 ~]# kubeadm token list

TOKEN TTL EXPIRES USAGES DESCRIPTION EXTRA GROUPS

750r73.ae9c3uhcy4hueyn9 18h 2021-08-02T16:11:49+08:00 authentication,signing <none> system:bootstrappers:kubeadm:default-node-token

sbzppu.xtedbbjwz3qu9agc 21h 2021-08-02T19:07:01+08:00 authentication,signing <none> system:bootstrappers:kubeadm:default-node-token

x4nurb.h7naw7lb7btzm194 18h 2021-08-02T15:56:02+08:00 authentication,signing The default bootstrap token generated by 'kubeadm init'. system:bootstrappers:kubeadm:default-node-token

[root@m01 ~]# openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^.* //' # compute the CA certificate hash used by --discovery-token-ca-cert-hash

09ba151096839d7a9b4f363462f8f9d3e12682bca0ee56bcdd1114fabeca0868

2) # Upload the certificates (run on the master to generate the key a new master needs in order to join)

[root@m01 ~]# kubeadm init phase upload-certs --upload-certs

# To add a new master, pass the certificate key printed by the command above after --control-plane --certificate-key

kubeadm join 172.31.182.156:8443 --token ortvag.ra0654faci8y8903 \
--discovery-token-ca-cert-hash sha256:04755ff1aa88e7db283c85589bee31fabb7d32186612778e53a536a297fc9010 \
--control-plane --certificate-key f8d1c027c01baef6985ddf24266641b7c64f9fd922b15a32fce40b6b4b21e47d

3) # Uninstall and clean up the Kubernetes cluster

kubeadm reset -f

modprobe -r ipip

lsmod

rm -rf ~/.kube/

rm -rf /etc/kubernetes/

rm -rf /etc/systemd/system/kubelet.service.d

rm -rf /etc/systemd/system/kubelet.service

rm -rf /usr/bin/kube*

rm -rf /etc/cni

rm -rf /opt/cni

rm -rf /var/lib/etcd

rm -rf /var/etcd

yum clean all

yum remove kube*

4. Join the Kubernetes nodes (generate the join command on the master)

(Take care before joining: a mistake here carries through every later step.)

1) # Generate the join command (the same kubeadm join command that kubeadm init prints) [run on the master]

[root@m01 ~]# kubeadm token create --print-join-command # generate the join command on the master

kubeadm join 192.168.15.55:6443 --token 750r73.ae9c3uhcy4hueyn9 --discovery-token-ca-cert-hash sha256:09ba151096839d7a9b4f363462f8f9d3e12682bca0ee56bcdd1114fabeca0868

ps: copy the join command generated above and run it on each worker node

Note: a token is valid for 24 hours by default. Once it expires it can no longer be used, and a new one has to be created with the token commands listed in the previous section (kubeadm token create).

Alternatively, run the join command printed in the kubeadm init log (omitted here)
--------------------------------------------------------------------------------------------------------------------------------------------
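Copying the token and hash by hand is where this step usually goes wrong. The bash sketch below checks that both values have the shape shown in the join command above (a token of six characters, a dot, and sixteen characters; a hash of `sha256:` followed by 64 hex digits) before they are pasted onto a node. The function name `valid_join_args` is an assumption for illustration; it validates format only, not whether the token is still unexpired.

```shell
#!/usr/bin/env bash
# valid_join_args TOKEN HASH
# Exit status 0 if TOKEN looks like a bootstrap token and HASH looks like
# a --discovery-token-ca-cert-hash value. Format check only.
valid_join_args() {
    local token="$1" hash="$2"
    [[ "$token" =~ ^[a-z0-9]{6}\.[a-z0-9]{16}$ ]] || return 1
    [[ "$hash" =~ ^sha256:[0-9a-f]{64}$ ]] || return 1
}
```

A truncated paste, such as a hash that lost its last characters, fails the check instead of producing a confusing error from kubeadm join.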

2) # Join the nodes to the cluster (paste the join command generated above) [run on each worker node]

[root@nod01 ~]# kubeadm join 192.168.15.55:6443 --token 750r73.ae9c3uhcy4hueyn9 --discovery-token-ca-cert-hash sha256:09ba151096839d7a9b4f363462f8f9d3e12682bca0ee56bcdd1114fabeca0868

[preflight] Running pre-flight checks

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

.............

......

[root@nod02 ~]# kubeadm join 192.168.15.55:6443 --token 750r73.ae9c3uhcy4hueyn9 --discovery-token-ca-cert-hash sha256:09ba151096839d7a9b4f363462f8f9d3e12682bca0ee56bcdd1114fabeca0868

[preflight] Running pre-flight checks

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

...........

......

----------------------------------------------------------------------------------------------------------------------------------------

################################## Check cluster status ####################################

1) # Check the node status (master only), method 1:

[root@m01 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

m01 Ready control-plane,master 28m v1.21.3

nod01 Ready <none> 9m36s v1.21.3

nod02 Ready <none> 9m33s v1.21.3
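To turn the visual check above into something a script can act on, the bash sketch below reads `kubectl get node` output from standard input and prints the nodes whose STATUS column is not exactly `Ready`. The function name `not_ready_nodes` is hypothetical; a cordoned node reporting `Ready,SchedulingDisabled` is also listed, which may or may not be what you want.

```shell
#!/usr/bin/env bash
# not_ready_nodes
# Reads `kubectl get node` output on stdin and prints the name of every
# node whose STATUS column is not exactly "Ready". Skips the header line.
not_ready_nodes() {
    awk 'NR > 1 && NF >= 2 && $2 != "Ready" { print $1 }'
}
```

Typical use on the master would be `kubectl get node | not_ready_nodes`; empty output means every node is Ready.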

2) # Check the status of the system Pods (master only), method 2:

[root@m01 ~]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-978bbc4b6-6p2zv 1/1 Running 0 12m

coredns-978bbc4b6-qg2g6 1/1 Running 0 12m

etcd-m01 1/1 Running 0 12m

kube-apiserver-m01 1/1 Running 0 12m

kube-controller-manager-m01 1/1 Running 0 12m

kube-flannel-ds-d8zjs 1/1 Running 0 7m49s

kube-proxy-5thp5 1/1 Running 0 12m

kube-scheduler-m01 1/1 Running 0 12m

3) # Verify cluster DNS directly, method 3:

[root@m01 ~]# kubectl run test -it --rm --image=busybox:1.28.3

If you don't see a command prompt, try pressing enter.

/ # nslookup kubernetes

Server: 10.96.0.10

Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local

Name: kubernetes

Address 1: 10.96.0.1 kubernetes.default.svc.cluster.local

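5. Troubleshooting (skip if everything works)

1) # Error 1:

The connection to the server localhost:8080 was refused - did you specify the right host or port?

# Analysis (environment variable)

Cause: kubectl on this machine has not been pointed at the cluster, because no kubeconfig was set up after initialization. Setting the KUBECONFIG environment variable on this machine fixes it.

# Fix:

1) Add the environment variable

Method 1: edit the file

[root@m01 ~]# vim /etc/profile # append the line below

export KUBECONFIG=/etc/kubernetes/admin.conf

Method 2: append the line with a command

[root@m01 ~]# echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> /etc/profile

2) Reload the profile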

[root@m01 ~]# source /etc/profile

----------------------------------------------------------------------------------------------------------------------------------------

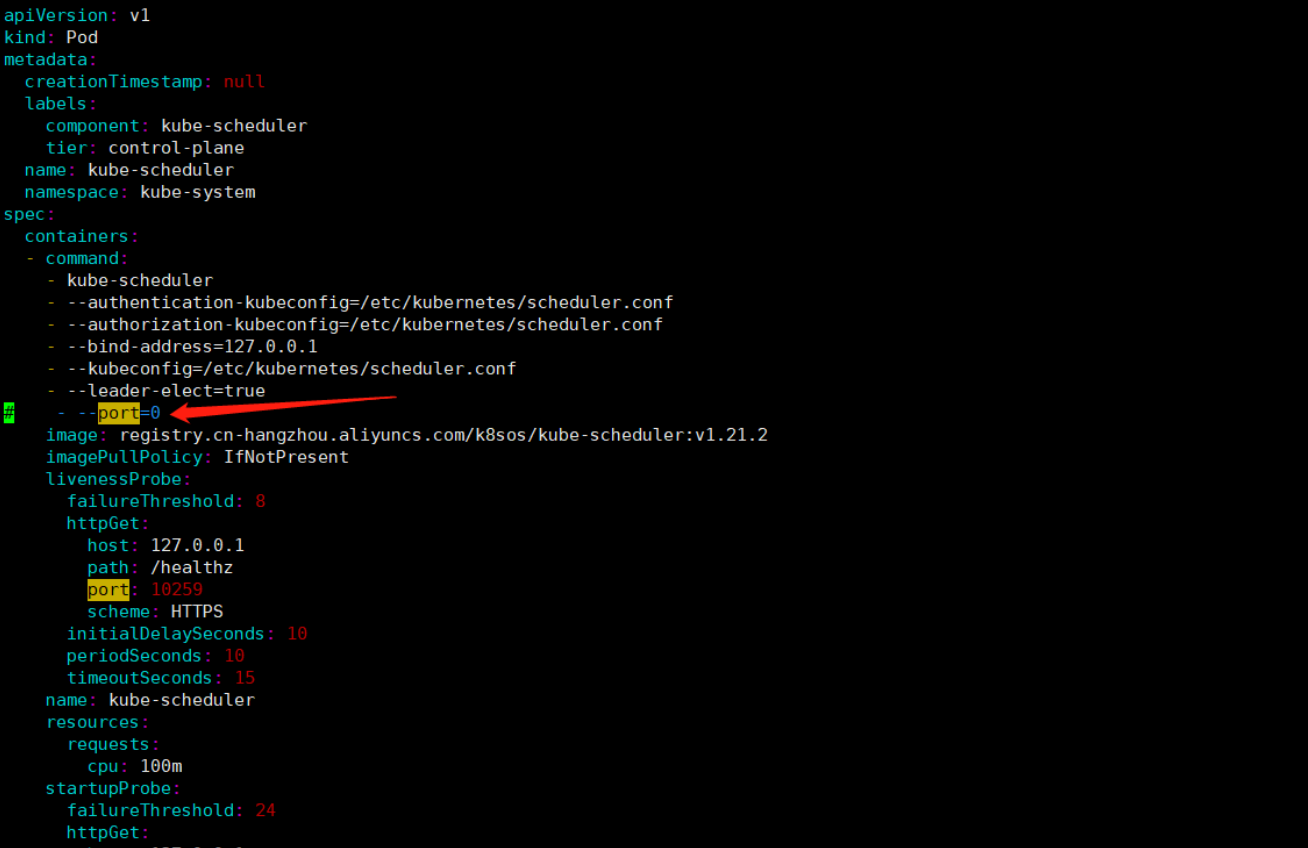

2) # Error 2:

After deploying the master node, the component health check reports unhealthy components (check command: kubectl get cs)

[root@m01 ~]# kubectl get cs

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

controller-manager Unhealthy Get "http://127.0.0.1:10252/healthz": dial tcp 127.0.0.1:10252: connect: connection refused

scheduler Unhealthy Get "http://127.0.0.1:10251/healthz": dial tcp 127.0.0.1:10251: connect: connection refused

etcd-0 Healthy {"health":"true"}

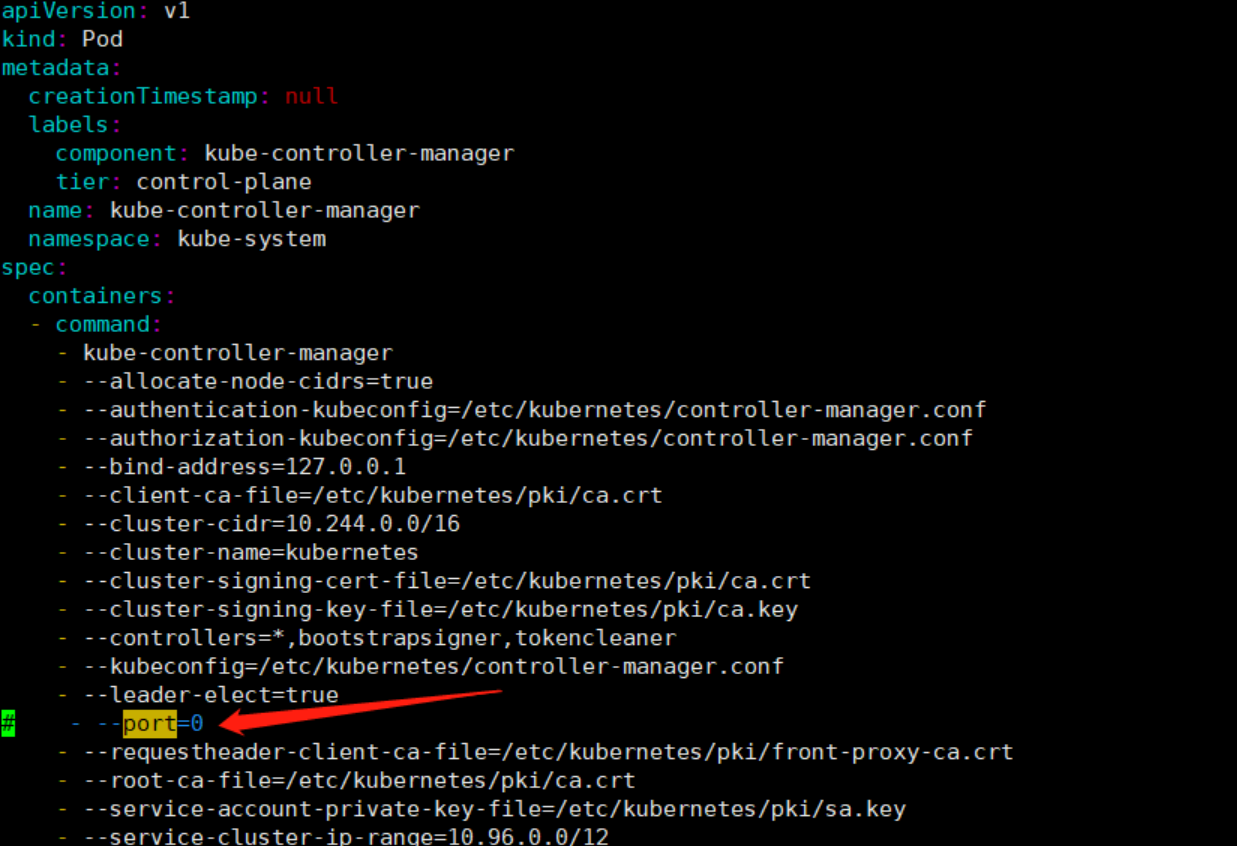

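# Cause: (port setting)

This state is usually caused by the port setting in kube-controller-manager.yaml and kube-scheduler.yaml under /etc/kubernetes/manifests/. Both files set --port=0 by default, which disables the insecure port that this health check queries; commenting out that line fixes it.

# Solution, shown in the figure below: (restart the service after making the change)

[root@m01 ~]# systemctl restart kubelet.service

# Check the component status again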

[root@m01 ~]# kubectl get cs

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

etcd-0 Healthy {"health":"true"}

1》In kube-controller-manager.yaml: comment out the line - --port=0

2》In kube-scheduler.yaml: likewise comment out the line - --port=0
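The same edit can be made without opening an editor. This is a sketch only: `comment_out_port_zero` is a hypothetical helper, and it assumes the flag appears on its own line as `- --port=0`, as in the kubeadm-generated manifests described above. The temporary file is created outside the manifests directory so the kubelet does not pick it up as a manifest.

```shell
#!/bin/sh
# Hypothetical helper: comment out the "- --port=0" line in a static pod
# manifest, keeping its indentation. Already-commented lines are left alone.
comment_out_port_zero() {
  manifest="$1"
  tmp=$(mktemp) || return 1
  sed 's/^\([[:space:]]*\)- --port=0[[:space:]]*$/\1# - --port=0/' "$manifest" > "$tmp" &&
    cat "$tmp" > "$manifest"
  rc=$?
  rm -f "$tmp"
  return $rc
}

# Usage on the master node (back up the manifests first):
#   comment_out_port_zero /etc/kubernetes/manifests/kube-controller-manager.yaml
#   comment_out_port_zero /etc/kubernetes/manifests/kube-scheduler.yaml
#   systemctl restart kubelet.service
```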

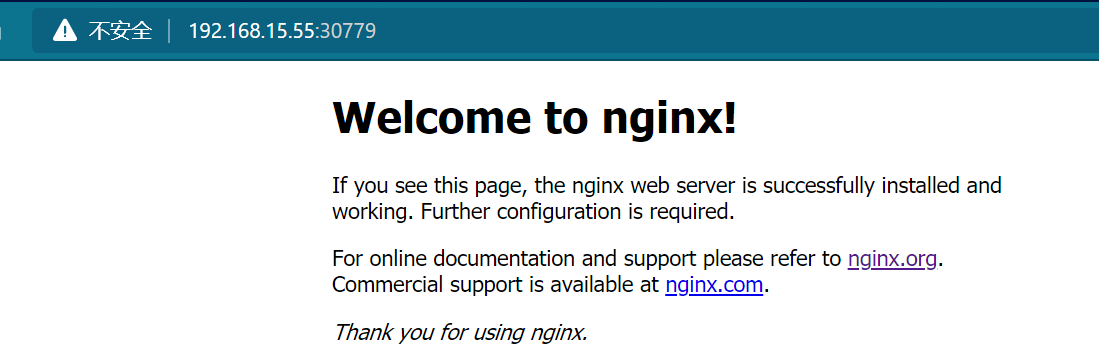

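6、Testing the kubernetes cluster

Verify that Pods run

Verify Pod network connectivity

Verify DNS resolution

# Method 1:

1》# Create an nginx deployment to test the cluster

[root@m01 ~]# kubectl create deployment nginx --image=nginx

deployment.apps/nginx created # the nginx deployment has been created

2》# Expose the deployment on a port

[root@m01 ~]# kubectl expose deployment nginx --port=80 --type=NodePort

service/nginx exposed # nginx is now exposed through a Service

3》# Check the pod and service status

[root@m01 ~]# kubectl get pod,svc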

NAME READY STATUS RESTARTS AGE

pod/nginx-6799fc88d8-pp4lk 1/1 Running 0 95s

pod/test 1/1 Running 0 5h21m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE # service status

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 5h42m

service/nginx NodePort 10.101.203.98 <none> 80:30779/TCP 59s

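PS: 1) pod: a Pod (as in a pod of whales, or a pea pod) is a group of containers that share one context; it acts as a "logical host" for its containers

2) svc: short for Service; one svc represents one service, a stable address in front of a set of Pods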

--------------------------------------------------------------------------------------------------------------------------------------------------------

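# Method 2: (a simpler approach, as follows)

✨# First pull the image with docker

[root@m01 ~]# docker pull nginx

✨# Then check that the image was pulled (no tag was given, so the latest nginx image is fetched by default)

docker.io/library/nginx:latest

[root@m01 ~]# docker images |grep nginx

nginx latest 08b152afcfae 10 days ago 133MB

✨# Next create the Pods from the master node: run the image with --image=nginx, start two replicas with --replicas=2, and set the container port with --port=80

[root@m01 ~]# kubectl run my-nginx --image=nginx --replicas=2 --port=80

kubectl run --generator=deployment/apps.v1 is DEPRECATED and will be removed in a future version. Use kubectl run --generator=run-pod/v1 or kubectl create instead.

deployment.apps/my-nginx created

(Note: the output above comes from an older kubectl. In newer releases, including the v1.21.3 used in this guide, kubectl run only creates a single Pod; there, use kubectl create deployment my-nginx --image=nginx --replicas=2 --port=80 instead.)

✨# Check that the pods were created

[root@m01 ~]# kubectl get pod

✨# Finally, continue with the access test below (☞゚ヮ゚)☞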

------------------------------------------------------------------------------------------------------------------------------------------------

4》# Access test (URL: http://NodeIP:NodePort)

# Local test

[root@m01 ~]# curl http://10.101.203.98:80

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

body {

width: 35em;

margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif;

# Browser test: http://192.168.15.55:30779
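The port in the browser URL is the NodePort shown in the PORT(S) column (80:30779/TCP). A minimal sketch for extracting it from the listing; `node_port_of` is a hypothetical helper and assumes the default `kubectl get svc` column layout, with PORT(S) as the fifth column.

```shell
#!/bin/sh
# Hypothetical helper: read `kubectl get svc` (or `kubectl get pod,svc`)
# output on stdin and print the NodePort of the named service.
node_port_of() {
  awk -v svc="$1" '
    { name = $1; sub(/^service\//, "", name) }   # accept "service/nginx" too
    name == svc && $5 ~ /:/ {
      split($5, a, /[:\/]/)    # "80:30779/TCP" -> a[1]=80, a[2]=30779, a[3]=TCP
      print a[2]
    }'
}

# Usage on the master node:
#   port=$(kubectl get svc | node_port_of nginx)
#   curl "http://192.168.15.55:${port}"
```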

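7、Using kubectl

【Common kubectl commands】

# The steps above look simple, but starting a service is only half the job: when a service misbehaves you need to find out why. When a pod goes down, do not rely on automatic recovery alone; inspect and analyze what happened.

View detailed pod information:

Format: kubectl describe pod [pod name]

Example: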

[root@m01 ~]# kubectl describe pod nginx

Name: nginx-6799fc88d8-pp4lk

Namespace: default

Priority: 0

Node: nod02/192.168.15.57

Start Time: Sun, 01 Aug 2021 21:36:52 +0800

Labels: app=nginx

pod-template-hash=6799fc88d8

Annotations: <none>

Status: Running

IP: 10.244.2.2

IPs:

IP: 10.244.2.2

........

.....

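Enter a pod: (the commands closely mirror docker's; what matters is understanding them)

Format:

kubectl exec -it [pod name] -n default -- bash (use the pod's full name, otherwise the command fails)

Example:

[root@m01 ~]# kubectl exec -it nginx-6799fc88d8-pp4lk -n default -- bash # enter the pod, i.e. its container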

root@nginx-6799fc88d8-pp4lk:/# ls

bin dev docker-entrypoint.sh home lib64 mnt proc run srv tmp var

boot docker-entrypoint.d etc lib media opt root sbin sys usr

root@nginx-6799fc88d8-pp4lk:/# exit

[root@m01 ~]#

Delete a pod: (exit the pod first, or open another terminal)

Format:

kubectl delete deployment [deployment name]

Example:

[root@m01 ~]# kubectl get deployment # first list the deployments

NAME READY UP-TO-DATE AVAILABLE AGE

nginx 1/1 1 1 5h44m

redis 1/1 1 1 75m

[root@m01 ~]# kubectl delete deployment redis # delete the deployment, which also removes its pods

deployment.apps "redis" deleted

[root@m01 ~]# kubectl get deployment # list the deployments again; redis is gone

NAME READY UP-TO-DATE AVAILABLE AGE

nginx 1/1 1 1 5h56m

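PS: kubernetes can leave stale or zombie pods behind: sometimes the pods are not removed when their rc is deleted, and a pod deleted by hand is recreated automatically. In that case, delete the owning resources first. If you delete the pod first, a new one is created immediately, because the deployment's YAML defines the replica count

(Correct order: delete the deployment first, then the pod)

Another way to delete pods, same idea as above: (use this if the method above leaves something behind)

Format:

kubectl delete rc <name>

kubectl delete rs <name>

List the ReplicationControllers / ReplicaSets in the cluster:

[root@m01 ~]# kubectl get rc # or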

[root@m01 ~]# kubectl get rs

NAME DESIRED CURRENT READY AGE

nginx-6799fc88d8 1 1 1 7h29m

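# Notes:

1> Replication Controller (RC): RC is another core concept in K8S. Once an application is hosted on K8S, K8S must keep it running continuously; that is the RC's job.

Main function, maintaining the pod count: an RC manages the number of running Pods. One RC can manage one or more Pods; after the RC is created, the system creates Pods according to the defined replica count

2> ReplicaSet (RS): regarded as the "upgraded" RC. An RS likewise keeps the number of pods matching its label selector at the desired count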

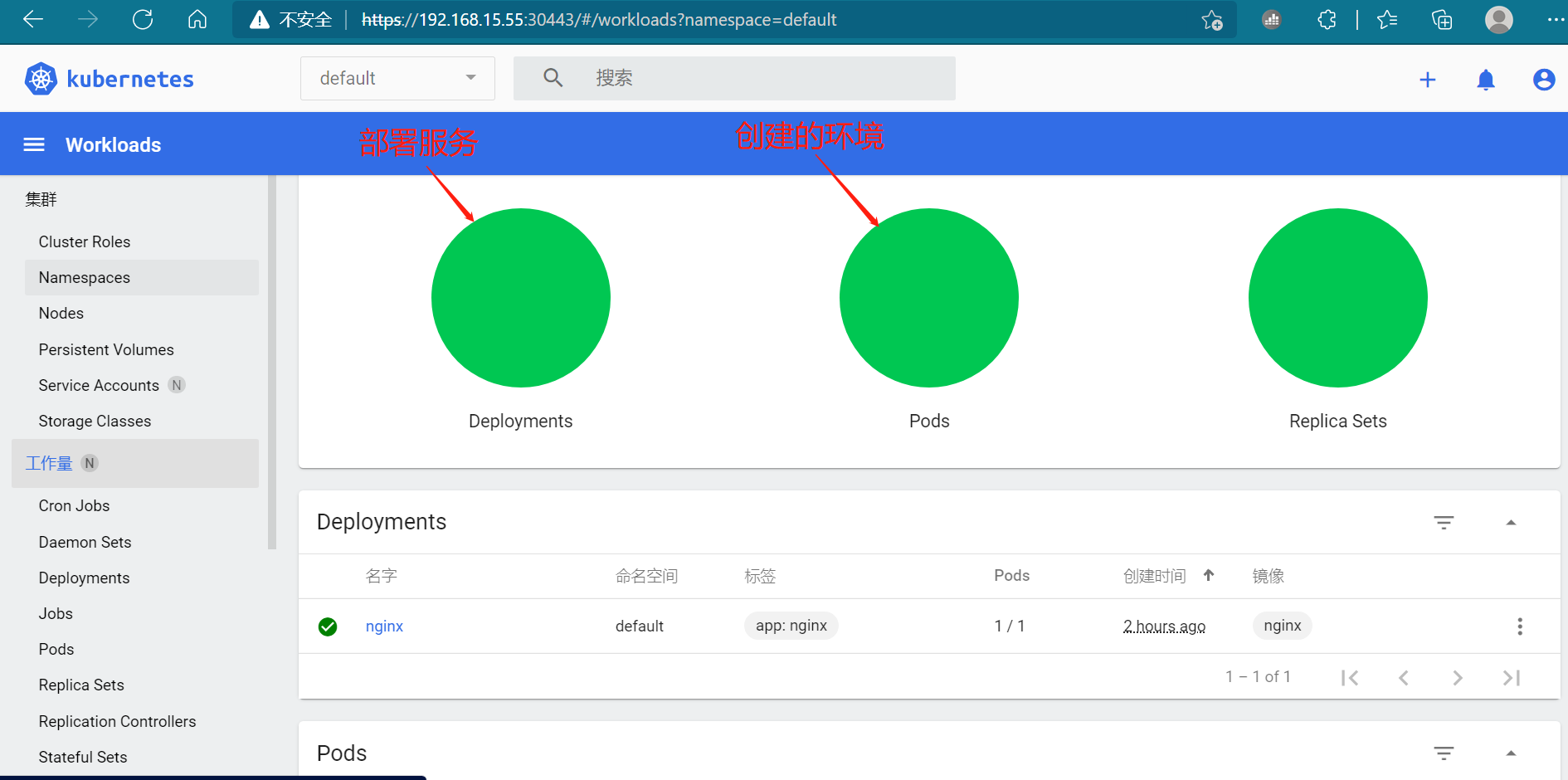

VI、Deploying the Dashboard web UI

Kubernetes Dashboard is the web UI for a Kubernetes cluster; through it, users can manage all resource objects in the cluster

1》# Download the Dashboard manifest

[root@m01 ~]# wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.3/aio/deploy/recommended.yaml

--2021-08-01 22:10:54-- https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.3/aio/deploy/recommended.yaml

Resolving raw.githubusercontent.com (raw.githubusercontent.com)... 185.199.111.133, 185.199.110.133, 185.199.109.133, ...

Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|185.199.111.133|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 7552 (7.4K) [text/plain]

Saving to: 'recommended.yaml'

100%[===================================================================================================>] 7,552 --.-K/s in 0s

2021-08-01 22:10:55 (33.9 MB/s) - 'recommended.yaml' saved [7552/7552]

[root@m01 ~]# ll |grep recommended.yaml

-rw-r--r-- 1 root root 7552 Aug 1 22:10 recommended.yaml

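2》Configuring the dashboard manifest

Option 1: change the Service type to NodePort

[root@m01 ~]# kubectl patch svc kubernetes-dashboard -p '{"spec":{"type":"NodePort"}}' -n kubernetes-dashboard

(This changes the type directly with a command; run it once the Service exists, that is, after recommended.yaml has been applied)

(By default the Dashboard is reachable only from inside the cluster; changing the Service to type NodePort exposes it externally)

Option 2: edit the manifest

[root@m01 ~]# vim recommended.yaml # edit the manifest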

---
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  type: NodePort
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 30443 # port changed here
  selector:
    k8s-app: kubernetes-dashboard
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-certs
  namespace: kubernetes-dashboard
type: Opaque
---
...........
......

4》Apply the dashboard manifest to create the dashboard (or update its configuration)

[root@m01 ~]# kubectl apply -f recommended.yaml

namespace/kubernetes-dashboard unchanged

serviceaccount/kubernetes-dashboard unchanged

service/kubernetes-dashboard configured

secret/kubernetes-dashboard-certs unchanged

secret/kubernetes-dashboard-csrf unchanged

secret/kubernetes-dashboard-key-holder unchanged

configmap/kubernetes-dashboard-settings unchanged

role.rbac.authorization.k8s.io/kubernetes-dashboard unchanged

clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard unchanged

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard unchanged

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard unchanged

deployment.apps/kubernetes-dashboard unchanged

service/dashboard-metrics-scraper unchanged

deployment.apps/dashboard-metrics-scraper unchanged

5》# Check the pods that were started

[root@m01 ~]# kubectl get pods -n kubernetes-dashboard # all should be Running

NAME READY STATUS RESTARTS AGE

dashboard-metrics-scraper-5594697f48-ccdf4 1/1 Running 0 103s

kubernetes-dashboard-5c785c8bcf-rzjp9 1/1 Running 0 103s

6》Dashboard supports two authentication methods, Kubeconfig and Token:

######################################################################################

1) # Log in with a Token (recommended, method 1)

[root@m01 ~]# cat > dashboard-adminuser.yaml << EOF

apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kubernetes-dashboard
EOF

2) # Create the login user

[root@m01 ~]# kubectl apply -f dashboard-adminuser.yaml

serviceaccount/admin-user unchanged

clusterrolebinding.rbac.authorization.k8s.io/admin-user unchanged

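# Note: the manifest above creates a service account named admin-user in the kubernetes-dashboard namespace and binds the cluster-admin role to it, which gives admin-user administrator privileges. The cluster-admin role already exists by default in a cluster created with kubeadm, so it only needs to be bound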

####################################################################################

# Deploy directly from the YAML file (method 2)

1) # Apply the manifest straight from its URL

[root@m01 ~]# kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.3/aio/deploy/recommended.yaml

2) # Expose the dashboard service outside the cluster via NodePort on port 30443 (a custom port)

[root@m01 ~]# kubectl patch svc kubernetes-dashboard -n kubernetes-dashboard -p '{"spec":{"type":"NodePort","ports":[{"port":443,"targetPort":8443,"nodePort":30443}]}}'

service/kubernetes-dashboard patched (no change)

3) # Check that the pods are running

[root@m01 ~]# kubectl -n kubernetes-dashboard get pods

NAME READY STATUS RESTARTS AGE

dashboard-metrics-scraper-5594697f48-ccdf4 1/1 Running 0 84m

kubernetes-dashboard-5c785c8bcf-rzjp9 1/1 Running 0 84m

4) # Check the services (the exposed service is now of type NodePort)

[root@m01 ~]# kubectl -n kubernetes-dashboard get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

dashboard-metrics-scraper ClusterIP 10.96.47.198 <none> 8000/TCP 40m

kubernetes-dashboard NodePort 10.106.194.136 <none> 443:30443/TCP 82m

############################################################################################

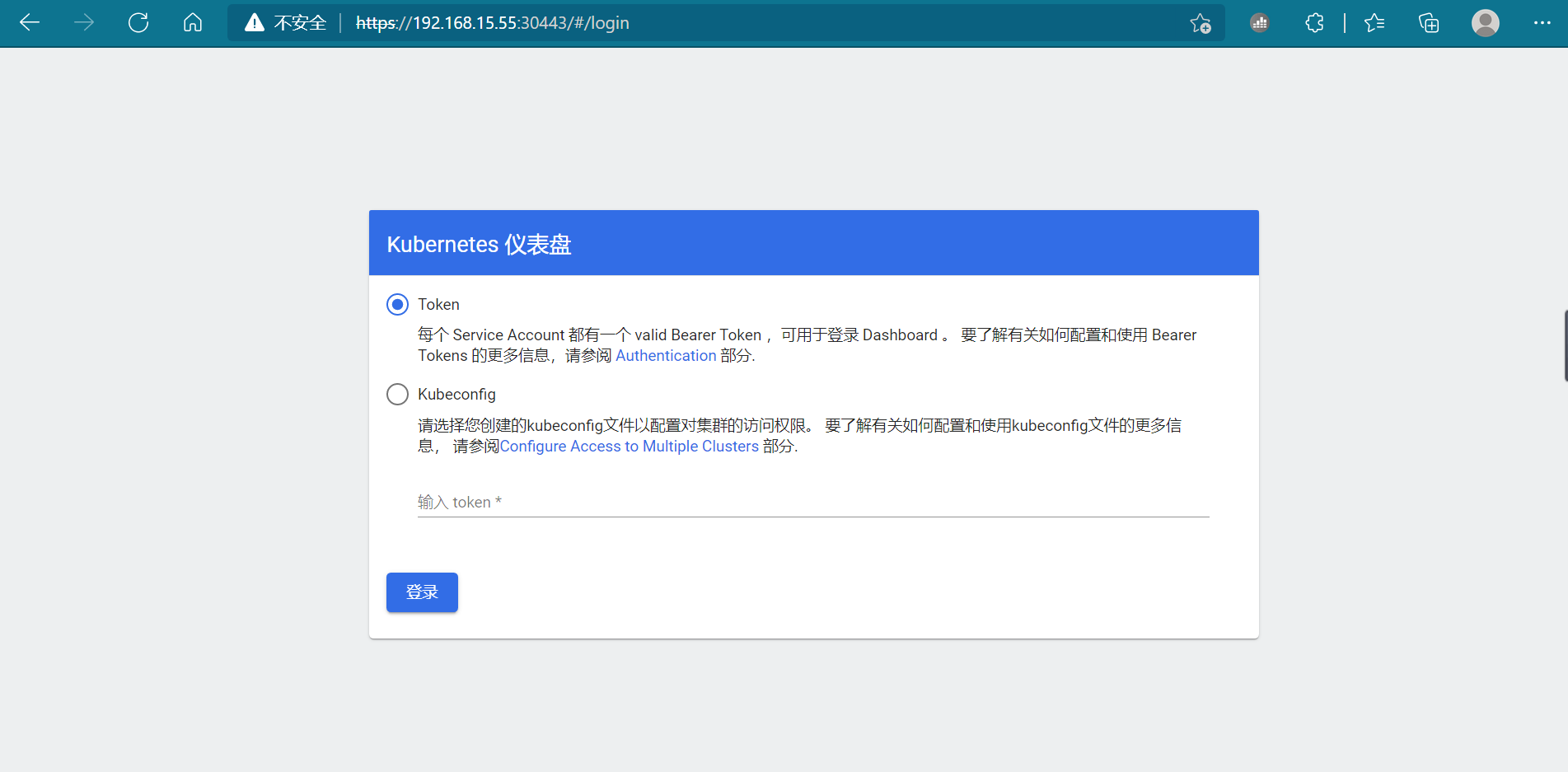

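7》# Log in from a browser: url: https://NodeIP:30443

# Local test (-k skips verification of the dashboard's self-signed certificate):

[root@m01 ~]# curl -k https://192.168.15.55:30443

# Log in to the dashboard

https://192.168.15.55:30443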

----------------------------------------------------------------------------------------------------------------------

Reinstalling the Dashboard

(run in the directory that contains kubernetes-dashboard.yaml)

[root@m01 ~]# kubectl delete -f kubernetes-dashboard.yaml

[root@m01 ~]# kubectl create -f kubernetes-dashboard.yaml

Check the status of all pods

[root@m01 ~]# kubectl get pod --all-namespaces

Check the port the dashboard is mapped to

[root@m01 ~]# kubectl -n kube-system get service kubernetes-dashboard

----------------------------------------------------------------------------------------------------------------------------------

<<<<<<< If the installation works, skip this section ✌ >>>>>>>>>>>

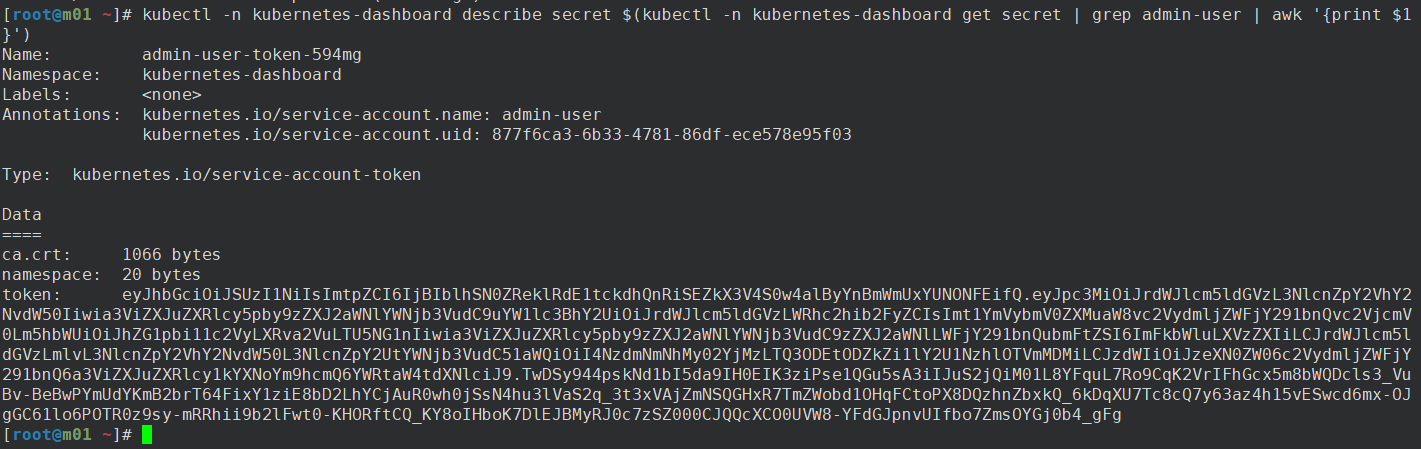

# View the token of the admin-user account

[root@m01 ~]# kubectl -n kubernetes-dashboard describe secret $(kubectl -n kubernetes-dashboard get secret | grep admin-user | awk '{print $1}')

Name: admin-user-token-594mg

Namespace: kubernetes-dashboard

Labels: <none>

Annotations: kubernetes.io/service-account.name: admin-user

kubernetes.io/service-account.uid: 877f6ca3-6b33-4781-86df-ece578e95f03

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1066 bytes

namespace: 20 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IjBIblhSN0ZReklRdE1tckdhQnRiSEZkX3V4S0w4alByYnBmWmUxYUNONFEifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLTU5NG1nIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiI4NzdmNmNhMy02YjMzLTQ3ODEtODZkZi1lY2U1NzhlOTVmMDMiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZXJuZXRlcy1kYXNoYm9hcmQ6YWRtaW4tdXNlciJ9.TwDSy944pskNd1bI5da9IH0EIK3ziPse1QGu5sA3iIJuS2jQiM01L8YFquL7Ro9CqK2VrIFhGcx5m8bWQDcls3_VuBv-BeBwPYmUdYKmB2brT64FixY1ziE8bD2LhYCjAuR0wh0jSsN4hu3lVaS2q_3t3xVAjZmNSQGHxR7TmZWobd1OHqFCtoPX8DQzhnZbxkQ_6kDqXU7Tc8cQ7y63az4h15vESwcd6mx-OJgGC61lo6POTR0z9sy-mRRhii9b2lFwt0-KHORftCQ_KY8oIHboK7DlEJBMyRJ0c7zSZ000CJQQcXCO0UVW8-YFdGJpnvUIfbo7ZmsOYGj0b4_gFg

# Copy the token into the Token field on the login page to log in
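To copy only the token rather than the whole describe output, the token line can be filtered out. A minimal sketch: `token_from_describe` is a hypothetical helper that assumes the `token:` label and value sit on one line, as in the output above.

```shell
#!/bin/sh
# Hypothetical helper: read `kubectl describe secret` output on stdin
# and print only the value that follows the "token:" label.
token_from_describe() {
  awk '$1 == "token:" { print $2 }'
}

# Usage on the master node:
#   kubectl -n kubernetes-dashboard describe secret \
#     "$(kubectl -n kubernetes-dashboard get secret | awk '/admin-user/{print $1}')" \
#     | token_from_describe
```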

# Create a service account and bind it to the default cluster-admin cluster role

Create the user

[root@m01 ~]# kubectl create serviceaccount dashboard-admin -n kube-system

serviceaccount/dashboard-admin created

Grant permissions

[root@m01 ~]# kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin

clusterrolebinding.rbac.authorization.k8s.io/dashboard-admin created

Get the user's token

[root@m01 ~]# kubectl describe secrets -n kube-system $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}')

Name: dashboard-admin-token-q2hh5

Namespace: kube-system

Labels: <none>

Annotations: kubernetes.io/service-account.name: dashboard-admin

kubernetes.io/service-account.uid: 56eb680e-97c6-4684-a90a-5f2a96034cee

Type: kubernetes.io/service-account-token

Data

====

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IjBIblhSN0ZReklRdE1tckdhQnRiSEZkX3V4S0w4alByYnBmWmUxYUNONFEifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtYWRtaW4tdG9rZW4tcTJoaDUiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiZGFzaGJvYXJkLWFkbWluIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiNTZlYjY4MGUtOTdjNi00Njg0LWE5MGEtNWYyYTk2MDM0Y2VlIiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmUtc3lzdGVtOmRhc2hib2FyZC1hZG1pbiJ9.Bc7mRXcRYU-5oSi3VAb0sBUnau2AAe4Gubrke62nAXaTwW9USzdW_q1s-P9wX-zD3OQ797yCfV-trel_E5gBp490syLcGKBNgGAT0RU1iIrTwJr_Hlyq9QKUBBv7Sm6A6Ln6CHpRohrBNZvc1yrobDYvORbJA1rJ8huPdnzuU30yMdlfilyN4YEyDf100MpTso6TR74tH4E-2ELaZEXU1ApISTgHZ5LSti-iUX1mRwgFqCUa_m_Vbrziu30YzpgWLZvfbisOn00fuHqRrub3dmqdBRQSdCywxvwluwliUEZ4fInh2Sp7mTO6M09SXza7fwM4WOKx2UhmQUiKwzIfig

ca.crt: 1066 bytes

namespace: 11 bytes

------------------------------------------------------------------------------------------------------------------------------------

Deleting the dashboard:

1》# Delete the dashboard's pod

[root@m01 /opt]# kubectl -n kube-system delete $(kubectl -n kube-system get pod -o name | grep dashboard)

pod "kubernetes-dashboard-65ff5d4cc8-4t4cc" deleted

2》# Force-delete the dashboard pod

[root@m01 /opt]# kubectl delete pod kubernetes-dashboard-59f548c4c7-6b9nj -n kube-system --force --grace-period=0

3》# Uninstall kubernetes-dashboard

[root@m01 /opt]# kubectl delete deployment kubernetes-dashboard --namespace=kube-system

[root@m01 /opt]# kubectl delete service kubernetes-dashboard --namespace=kube-system

[root@m01 /opt]# kubectl delete role kubernetes-dashboard-minimal --namespace=kube-system

[root@m01 /opt]# kubectl delete rolebinding kubernetes-dashboard-minimal --namespace=kube-system

[root@m01 /opt]# kubectl delete sa kubernetes-dashboard --namespace=kube-system

[root@m01 /opt]# kubectl delete secret kubernetes-dashboard-certs --namespace=kube-system

[root@m01 /opt]# kubectl delete secret kubernetes-dashboard-csrf --namespace=kube-system

[root@m01 /opt]# kubectl delete secret kubernetes-dashboard-key-holder --namespace=kube-system

4》# Put the commands in a script (same effect as above)

[root@m01 /opt]# cat > dashboard_delete.sh << EOF

#!/bin/bash

kubectl delete deployment kubernetes-dashboard --namespace=kube-system

kubectl delete service kubernetes-dashboard --namespace=kube-system

kubectl delete role kubernetes-dashboard-minimal --namespace=kube-system

kubectl delete rolebinding kubernetes-dashboard-minimal --namespace=kube-system

kubectl delete sa kubernetes-dashboard --namespace=kube-system

kubectl delete secret kubernetes-dashboard-certs --namespace=kube-system

kubectl delete secret kubernetes-dashboard-csrf --namespace=kube-system

kubectl delete secret kubernetes-dashboard-key-holder --namespace=kube-system

EOF

------------------------------------------------------------------------------------------------------

(If the following error appears, access the dashboard at https://ip:port instead)

Client sent an HTTP request to an HTTPS server.

1》》Get the token

2》》Log in to the dashboard

3》》Enter the token

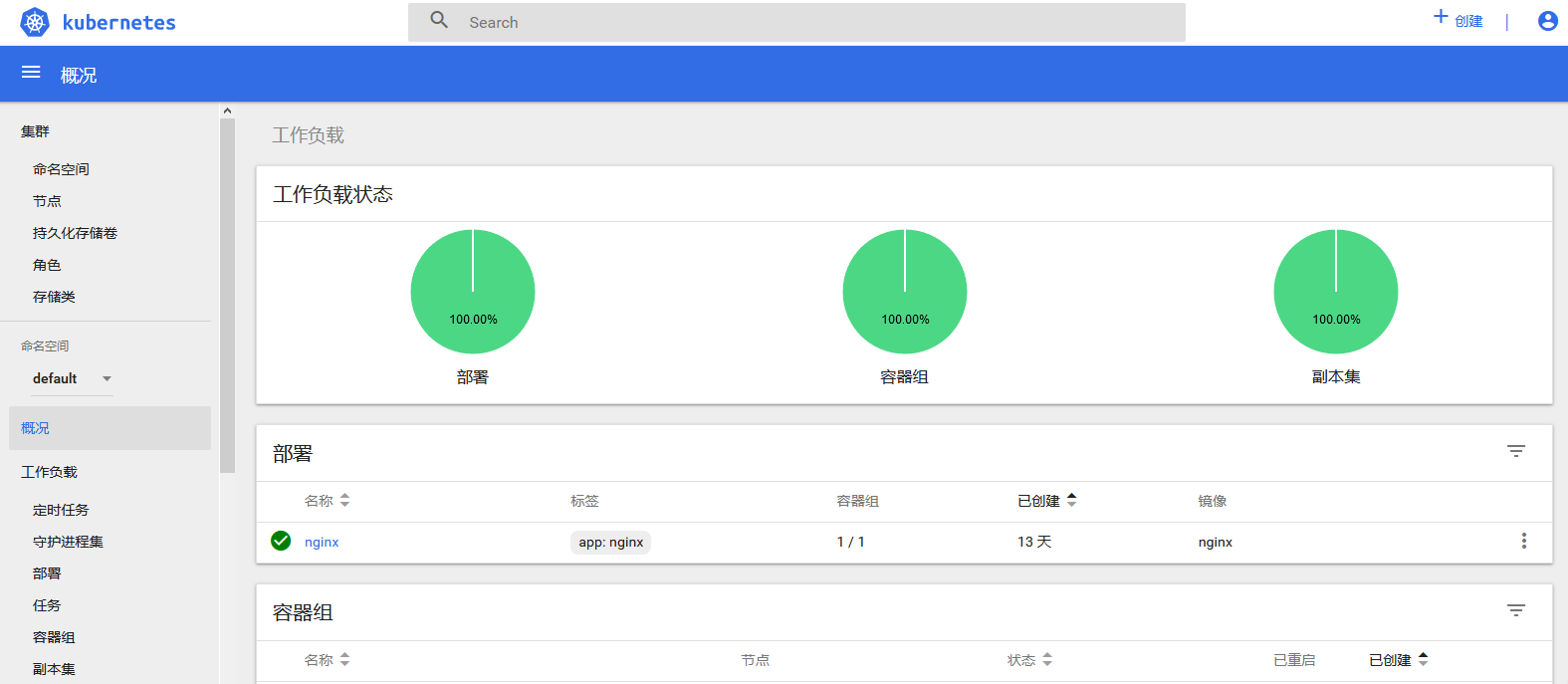

4》》The dashboard after login

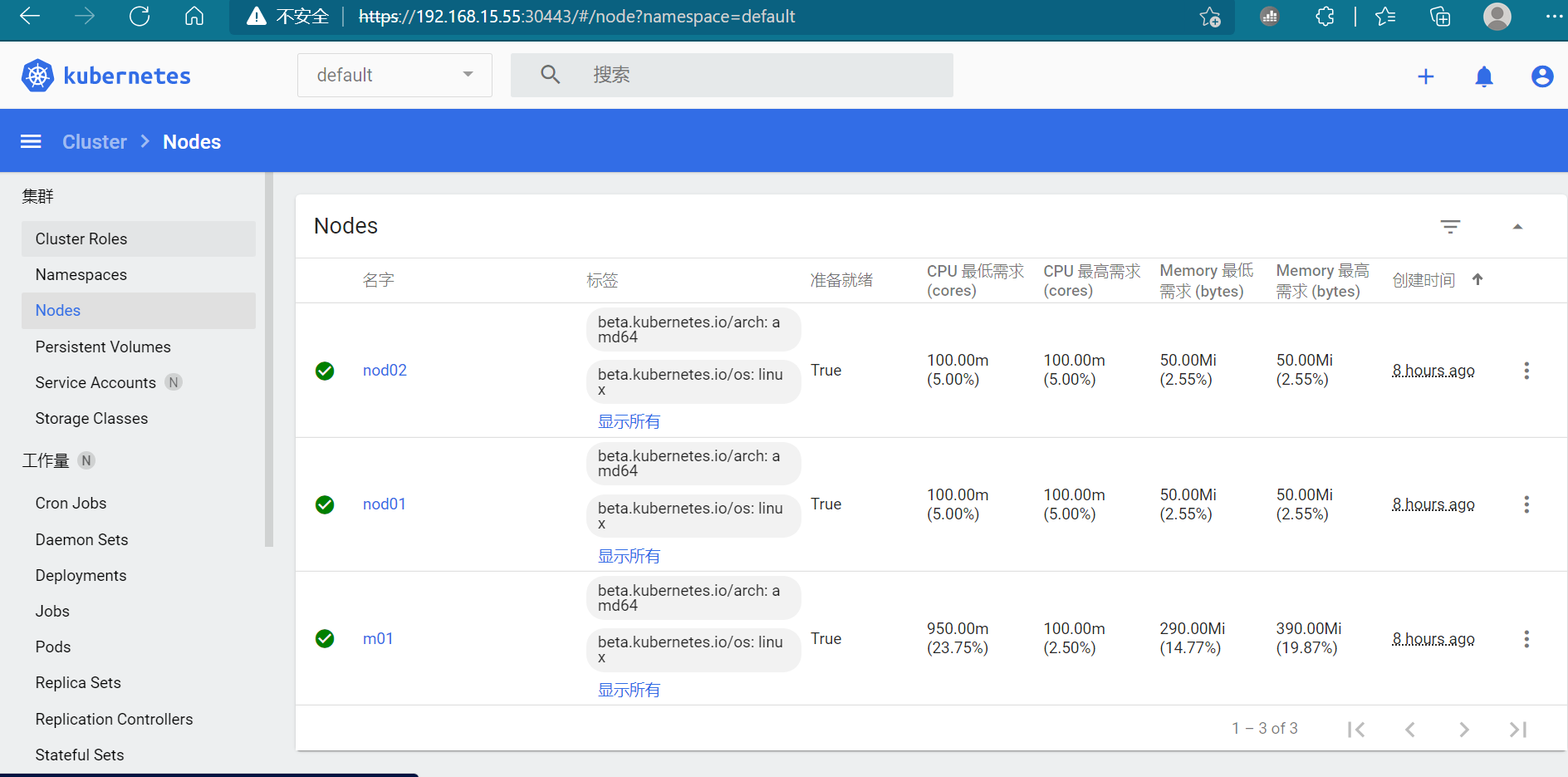

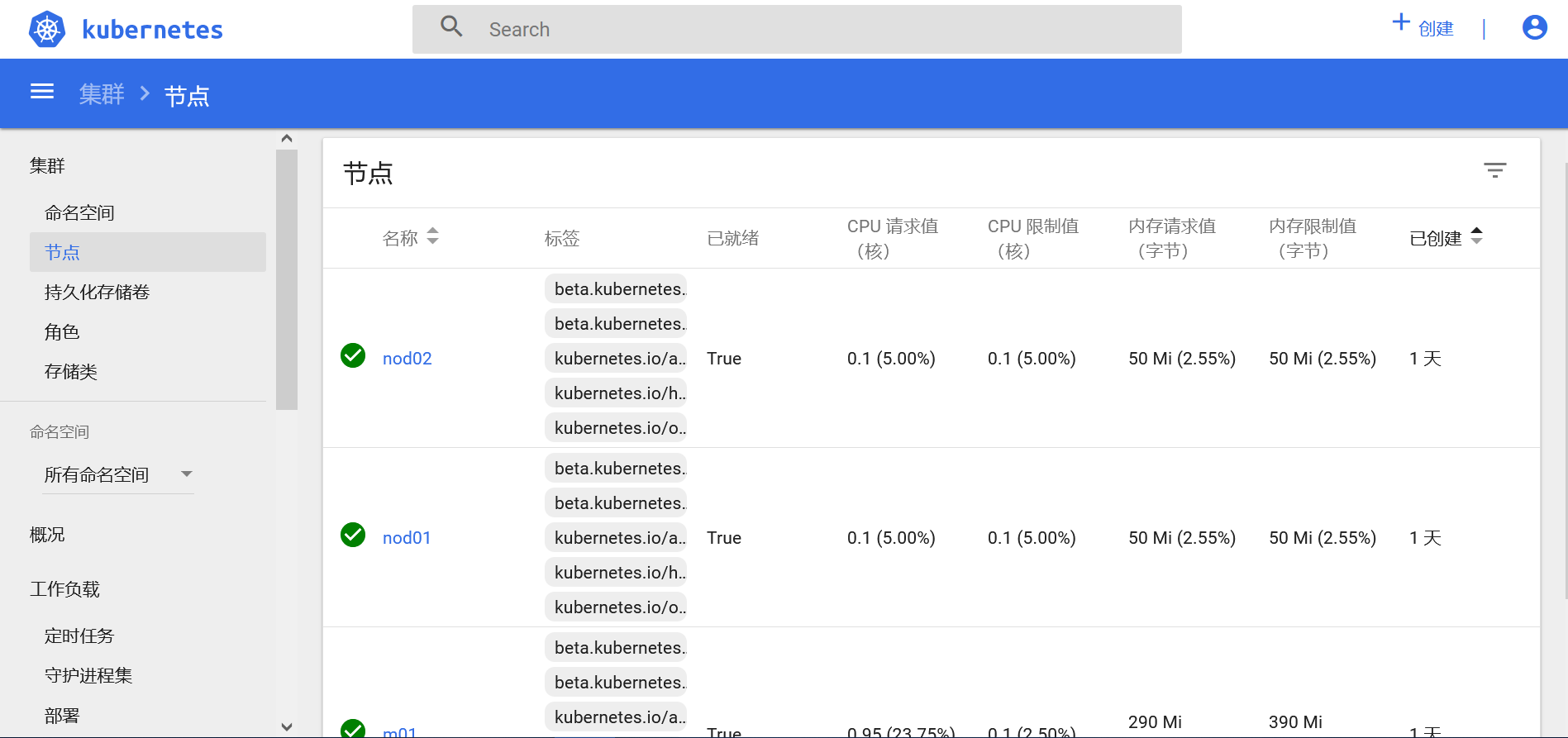

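5》》Status of the current nodes

《《《《《 The dashboard manages a great many workloads; if anything is unclear, follow the steps above to set it up 》》》》》

【Chinese-language dashboard】

1》 Download the dashboard manifest

[root@m01 /opt]# wget https://raw.githubusercontent.com/kubernetes/dashboard/v1.10.1/src/deploy/recommended/kubernetes-dashboard.yaml # template file

2》 Change the image address: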

[root@m01 /opt]# cat kubernetes-dashboard.yaml

.........

containers:
- name: kubernetes-dashboard
  #image: k8s.gcr.io/kubernetes-dashboard-amd64:v1.10.1
  image: registry.cn-shanghai.aliyuncs.com/hzl-images/kubernetes-dashboard-amd64:v1.6.3

........

.....

3》By default the Dashboard is reachable only from inside the cluster, so change the Service to type NodePort to expose it externally:

[root@m01 /opt]# cat kubernetes-dashboard.yaml

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
spec:
  type: NodePort
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 30003 # port changed here
  selector:
    k8s-app: kubernetes-dashboard

4》Get the token with this command

[root@m01 /opt]# kubectl -n kubernetes-dashboard describe secret $(kubectl -n kubernetes-dashboard get secret | grep admin-user | awk '{print $1}')

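5》Access from a browser (one pitfall: only Firefox opens it directly; 360 (both modes), Chrome, and Edge do not)

Access from Firefox:

https://192.168.15.55:30003

Configuration changes:

1. Adjust the browser's security policy

2. Make the certificate trusted by the system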

-----------------------------------------------------------------------------------------

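Errors encountered when accessing from the browser:

1》User permission problem: (use this command to grant access; it gives unauthenticated users cluster-admin, so use it only on a test cluster)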

[root@m01 /opt]# kubectl create clusterrolebinding test:anonymous --clusterrole=cluster-admin --user=system:anonymous

clusterrolebinding.rbac.authorization.k8s.io/test:anonymous created

2》Get the token

[root@m01 /opt]# kubectl describe secrets -n kube-system $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}')

3》Then open the page in the browser and the Chinese-language interface appears
