1. Overview

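The previous post showed how a workqueue takes over the bottom half of the NIC receive interrupt and passes the received data up to the protocol stack through pt_prev->func(skb, skb->dev, pt_prev, orig_dev).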

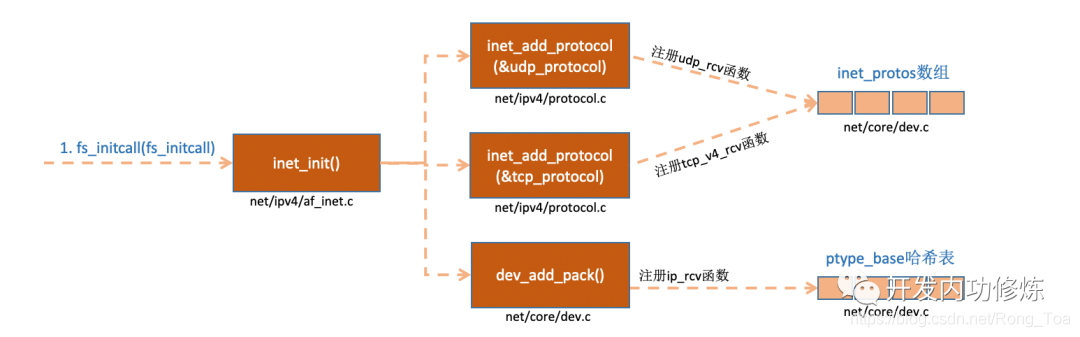

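This post covers how the protocol stack receives that data. The kernel implements the network-layer IP protocol as well as the transport-layer TCP and UDP protocols; their receive functions are ip_rcv(), tcp_v4_rcv() and udp_rcv() respectively. Unlike the way we usually write code, the kernel wires these functions up through registration. In the Linux kernel, fs_initcall, like subsys_initcall, marks an initialization entry point; the fs_initcall entry inet_init starts the registration of the network protocol stack. inet_init registers ip_rcv() in the ptype_base hash table (via dev_add_pack()) and tcp_v4_rcv() and udp_rcv() in the inet_protos array (via inet_add_protocol()).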

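The stack classifies the protocol family by protocol number and creates a global instance (that is, a global variable) for each protocol in the kernel: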


```c
static struct packet_type ip_packet_type __read_mostly = {
    .type = cpu_to_be16(ETH_P_IP),
    .func = ip_rcv,
};

#ifdef CONFIG_IP_MULTICAST
static const struct net_protocol igmp_protocol = {
    .handler  = igmp_rcv,
    .netns_ok = 1,
};
#endif

static const struct net_protocol tcp_protocol = {
    .early_demux                = tcp_v4_early_demux,
    .handler                    = tcp_v4_rcv,
    .err_handler                = tcp_v4_err,
    .no_policy                  = 1,
    .netns_ok                   = 1,
    .icmp_strict_tag_validation = 1,
};

static const struct net_protocol udp_protocol = {
    .early_demux = udp_v4_early_demux,
    .handler     = udp_rcv,
    .err_handler = udp_err,
    .no_policy   = 1,
    .netns_ok    = 1,
};

static const struct net_protocol icmp_protocol = {
    .handler     = icmp_rcv,
    .err_handler = icmp_err,
    .no_policy   = 1,
    .netns_ok    = 1,
};
```

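The frame type of struct packet_type ip_packet_type is ETH_P_IP (0x0800), i.e. the IP protocol family. By extension, other Ethernet protocol families such as VLAN (802.1Q), ARP, MPLS and QinQ are presumably managed with the same logic.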

The receive function of the IP protocol family is ip_rcv().

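Each sub-protocol of the IP family has its own instance holding its handler functions, with separate function pointers for early demultiplexing (early_demux), error handling (err_handler) and normal receive processing (handler).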
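To make the "one slot per sub-protocol" idea concrete, here is a minimal user-space sketch of a table indexed by the 8-bit IP protocol number. In the kernel the table is inet_protos[], filled by inet_add_protocol() and consulted during local delivery; every name ending in _model, _entry or starting with fake_ below is hypothetical, and only the handler pointer is modeled (no early_demux or err_handler, no RCU or atomics).

```c
#include <stddef.h>

/* Hypothetical stand-in: one slot per 8-bit IP protocol number,
 * as with the kernel's inet_protos[]. */
#define MAX_PROTOS 256

struct sk_buff_model {
    unsigned char protocol;          /* value of the IP header's protocol field */
};

struct net_protocol_model {
    int (*handler)(struct sk_buff_model *skb);   /* normal receive handler only */
};

static const struct net_protocol_model *protos_model[MAX_PROTOS];

/* Like inet_add_protocol(): claim an empty slot, fail if it is taken.
 * (The kernel does this check-and-set atomically with cmpxchg().) */
static int add_protocol(const struct net_protocol_model *prot, unsigned char num)
{
    if (protos_model[num] != NULL)
        return -1;
    protos_model[num] = prot;
    return 0;
}

/* Local delivery: index the table by protocol number, call the handler. */
static int deliver(struct sk_buff_model *skb)
{
    const struct net_protocol_model *prot = protos_model[skb->protocol];

    if (prot == NULL)
        return -1;                   /* no handler registered for this protocol */
    return prot->handler(skb);
}

/* Fake handlers that just report which protocol number they serve. */
static int fake_tcp_rcv(struct sk_buff_model *skb) { (void)skb; return 6; }
static int fake_udp_rcv(struct sk_buff_model *skb) { (void)skb; return 17; }

static const struct net_protocol_model tcp_entry = { .handler = fake_tcp_rcv };
static const struct net_protocol_model udp_entry = { .handler = fake_udp_rcv };
```

Registering tcp_entry at 6 and udp_entry at 17 and then delivering a packet whose protocol field is 17 ends up in fake_udp_rcv(); a second registration for 17 is refused, which mirrors why inet_init() prints pr_crit() when inet_add_protocol() returns a negative value.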

The inet_init initialization function:

```c
static int __init inet_init(void)
{
    struct inet_protosw *q;
    struct list_head *r;
    int rc = -EINVAL;

    sock_skb_cb_check_size(sizeof(struct inet_skb_parm));

    rc = proto_register(&tcp_prot, 1);
    if (rc)
        goto out;

    rc = proto_register(&udp_prot, 1);
    if (rc)
        goto out_unregister_tcp_proto;

    rc = proto_register(&raw_prot, 1);
    if (rc)
        goto out_unregister_udp_proto;

    rc = proto_register(&ping_prot, 1);
    if (rc)
        goto out_unregister_raw_proto;

    /*
     * Tell SOCKET that we are alive...
     */
    (void)sock_register(&inet_family_ops);

#ifdef CONFIG_SYSCTL
    ip_static_sysctl_init();
#endif

    /*
     * Add all the base protocols.
     */
    if (inet_add_protocol(&icmp_protocol, IPPROTO_ICMP) < 0)
        pr_crit("%s: Cannot add ICMP protocol\n", __func__);
    if (inet_add_protocol(&udp_protocol, IPPROTO_UDP) < 0)
        pr_crit("%s: Cannot add UDP protocol\n", __func__);
    if (inet_add_protocol(&tcp_protocol, IPPROTO_TCP) < 0)
        pr_crit("%s: Cannot add TCP protocol\n", __func__);
#ifdef CONFIG_IP_MULTICAST
    if (inet_add_protocol(&igmp_protocol, IPPROTO_IGMP) < 0)
        pr_crit("%s: Cannot add IGMP protocol\n", __func__);
#endif

    /* Register the socket-side information for inet_create. */
    for (r = &inetsw[0]; r < &inetsw[SOCK_MAX]; ++r)
        INIT_LIST_HEAD(r);

    for (q = inetsw_array; q < &inetsw_array[INETSW_ARRAY_LEN]; ++q)
        inet_register_protosw(q);

    /*
     * Set the ARP module up
     */
    arp_init();

    /*
     * Set the IP module up. ip_init() includes the routing table
     * initialization; the kernel's routing subsystem will be covered
     * in a later post.
     */
    ip_init();

    tcp_v4_init();

    /* Setup TCP slab cache for open requests. */
    tcp_init();

    /* Setup UDP memory threshold */
    udp_init();

    /* Add UDP-Lite (RFC 3828) */
    udplite4_register();

    ping_init();

    ipv4_proc_init();

    dev_add_pack(&ip_packet_type);

    ip_tunnel_core_init();

    rc = 0;
out:
    return rc;
out_unregister_raw_proto:
    proto_unregister(&raw_prot);
out_unregister_udp_proto:
    proto_unregister(&udp_prot);
out_unregister_tcp_proto:
    proto_unregister(&tcp_prot);
    goto out;
}

fs_initcall(inet_init);
```
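The error handling at the start of inet_init() follows the usual kernel goto-unwind pattern: each successful proto_register() has a matching label, and a later failure jumps to the label that unregisters everything already registered, in reverse order, by falling through the labels. The sketch below reproduces only that control flow; fake_register(), note(), run() and the text log are hypothetical stand-ins for proto_register()/proto_unregister().

```c
#include <string.h>

static char log_buf[64];   /* "+x " = registered x, "-x " = unregistered x */
static int fail_at;        /* step number that should fail, 0 = none */

static void note(const char *event)
{
    strcat(log_buf, event);
}

/* Hypothetical proto_register(): fails at the chosen step. */
static int fake_register(int step, const char *event)
{
    if (step == fail_at)
        return -1;
    note(event);
    return 0;
}

/* Same shape as the start of inet_init(). */
static int init_model(void)
{
    int rc;

    rc = fake_register(1, "+tcp ");
    if (rc)
        goto out;
    rc = fake_register(2, "+udp ");
    if (rc)
        goto out_unregister_tcp;
    rc = fake_register(3, "+raw ");
    if (rc)
        goto out_unregister_udp;
    rc = fake_register(4, "+ping ");
    if (rc)
        goto out_unregister_raw;
    rc = 0;
out:
    return rc;
out_unregister_raw:          /* labels fall through: undo in reverse order */
    note("-raw ");
out_unregister_udp:
    note("-udp ");
out_unregister_tcp:
    note("-tcp ");
    goto out;
}

/* Run the model with a given failing step and return the event log. */
static const char *run(int failing_step)
{
    log_buf[0] = '\0';
    fail_at = failing_step;
    (void)init_model();
    return log_buf;
}
```

For example, if step 3 (the raw protocol) fails, the log reads "+tcp +udp -udp -tcp ": UDP and TCP are unregistered, matching the out_unregister_udp_proto path in inet_init().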

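This initialization function falls roughly into three parts: 1. creating the global instances of the protocol family and its sub-protocols; 2. initializing those instances; 3. registering the global instance of the ETH_P_IP protocol family (dev_add_pack(&ip_packet_type)).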

Sub-protocol instances and the protocol switch array:

```c
/* Protocol switch array */
static struct inet_protosw inetsw_array[] =
{
    {
        .type =       SOCK_STREAM,
        .protocol =   IPPROTO_TCP,
        .prot =       &tcp_prot,
        .ops =        &inet_stream_ops,
        .flags =      INET_PROTOSW_PERMANENT |
                      INET_PROTOSW_ICSK,
    },

    {
        .type =       SOCK_DGRAM,
        .protocol =   IPPROTO_UDP,
        .prot =       &udp_prot,
        .ops =        &inet_dgram_ops,
        .flags =      INET_PROTOSW_PERMANENT,
    },

    {
        .type =       SOCK_DGRAM,
        .protocol =   IPPROTO_ICMP,
        .prot =       &ping_prot,
        .ops =        &inet_sockraw_ops,
        .flags =      INET_PROTOSW_REUSE,
    },

    {
        .type =       SOCK_RAW,
        .protocol =   IPPROTO_IP,  /* wild card */
        .prot =       &raw_prot,
        .ops =        &inet_sockraw_ops,
        .flags =      INET_PROTOSW_REUSE,
    }
};
```
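inetsw_array is what inet_create() consults when user space calls socket(AF_INET, type, protocol). The sketch below imitates that matching rule as I understand it: an exact, non-wildcard protocol match wins; protocol 0 (IPPROTO_IP) from the caller selects the first entry of that socket type; and an entry whose protocol is IPPROTO_IP (the SOCK_RAW wildcard) accepts any protocol. It is a simplified model: the kernel keeps one list per socket type in inetsw[], while this version scans a flat array, and struct protosw_model and lookup_protosw() are hypothetical names.

```c
#include <stddef.h>
#include <netinet/in.h>   /* IPPROTO_IP, IPPROTO_TCP, IPPROTO_UDP, IPPROTO_ICMP */
#include <sys/socket.h>   /* SOCK_STREAM, SOCK_DGRAM, SOCK_RAW */

/* Hypothetical flattened copy of the inetsw_array entries. */
struct protosw_model {
    int type;
    int protocol;
    const char *prot_name;    /* stands in for the .prot pointer */
};

static const struct protosw_model sw_model[] = {
    { SOCK_STREAM, IPPROTO_TCP,  "TCP"  },
    { SOCK_DGRAM,  IPPROTO_UDP,  "UDP"  },
    { SOCK_DGRAM,  IPPROTO_ICMP, "PING" },
    { SOCK_RAW,    IPPROTO_IP,   "RAW"  },   /* IPPROTO_IP = wildcard */
};

/* Resolve (type, protocol) to a protocol name, or NULL if unsupported. */
static const char *lookup_protosw(int type, int protocol)
{
    for (size_t i = 0; i < sizeof(sw_model) / sizeof(sw_model[0]); i++) {
        const struct protosw_model *e = &sw_model[i];

        if (e->type != type)
            continue;
        if (protocol == e->protocol) {
            if (protocol != IPPROTO_IP)
                return e->prot_name;      /* exact, non-wildcard match */
        } else {
            if (protocol == IPPROTO_IP)
                return e->prot_name;      /* caller asked for the default */
            if (e->protocol == IPPROTO_IP)
                return e->prot_name;      /* entry accepts any protocol */
        }
    }
    return NULL;                          /* kernel: -EPROTONOSUPPORT */
}
```

With this rule, socket(AF_INET, SOCK_STREAM, 0) resolves to TCP, SOCK_DGRAM with IPPROTO_ICMP resolves to the PING entry, and SOCK_RAW with any non-zero protocol hits the RAW wildcard, while SOCK_RAW with protocol 0 finds nothing.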

```c
/* tcp protocol */
struct proto tcp_prot = {
    .name                  = "TCP",
    .owner                 = THIS_MODULE,
    .close                 = tcp_close,
    .connect               = tcp_v4_connect,
    .disconnect            = tcp_disconnect,
    .accept                = inet_csk_accept,
    .ioctl                 = tcp_ioctl,
    .init                  = tcp_v4_init_sock,
    .destroy               = tcp_v4_destroy_sock,
    .shutdown              = tcp_shutdown,
    .setsockopt            = tcp_setsockopt,
    .getsockopt            = tcp_getsockopt,
    .recvmsg               = tcp_recvmsg,
    .sendmsg               = tcp_sendmsg,
    .sendpage              = tcp_sendpage,
    .backlog_rcv           = tcp_v4_do_rcv,
    .release_cb            = tcp_release_cb,
    .hash                  = inet_hash,
    .unhash                = inet_unhash,
    .get_port              = inet_csk_get_port,
    .enter_memory_pressure = tcp_enter_memory_pressure,
    .stream_memory_free    = tcp_stream_memory_free,
    .sockets_allocated     = &tcp_sockets_allocated,
    .orphan_count          = &tcp_orphan_count,
    .memory_allocated      = &tcp_memory_allocated,
    .memory_pressure       = &tcp_memory_pressure,
    .sysctl_mem            = sysctl_tcp_mem,
    .sysctl_wmem           = sysctl_tcp_wmem,
    .sysctl_rmem           = sysctl_tcp_rmem,
    .max_header            = MAX_TCP_HEADER,
    .obj_size              = sizeof(struct tcp_sock),
    .slab_flags            = SLAB_DESTROY_BY_RCU,
    .twsk_prot             = &tcp_timewait_sock_ops,
    .rsk_prot              = &tcp_request_sock_ops,
    .h.hashinfo            = &tcp_hashinfo,
    .no_autobind           = true,
#ifdef CONFIG_COMPAT
    .compat_setsockopt     = compat_tcp_setsockopt,
    .compat_getsockopt     = compat_tcp_getsockopt,
#endif
#ifdef CONFIG_MEMCG_KMEM
    .init_cgroup           = tcp_init_cgroup,
    .destroy_cgroup        = tcp_destroy_cgroup,
    .proto_cgroup          = tcp_proto_cgroup,
#endif
};
EXPORT_SYMBOL(tcp_prot);

/* ping protocol */
struct proto ping_prot = {
    .name        = "PING",
    .owner       = THIS_MODULE,
    .init        = ping_init_sock,
    .close       = ping_close,
    .connect     = ip4_datagram_connect,
    .disconnect  = udp_disconnect,
    .setsockopt  = ip_setsockopt,
    .getsockopt  = ip_getsockopt,
    .sendmsg     = ping_v4_sendmsg,
    .recvmsg     = ping_recvmsg,
    .bind        = ping_bind,
    .backlog_rcv = ping_queue_rcv_skb,
    .release_cb  = ip4_datagram_release_cb,
    .hash        = ping_hash,
    .unhash      = ping_unhash,
    .get_port    = ping_get_port,
    .obj_size    = sizeof(struct inet_sock),
};
EXPORT_SYMBOL(ping_prot);
```

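Each sub-protocol instance defines that sub-protocol's own handling methods. At this point, try sketching the layered structure of the ETH_P_IP protocol family yourself; it will make the whole family much clearer ^=^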
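These per-protocol methods work as a function-pointer table: the generic socket code never calls tcp_recvmsg() by name, it follows the struct proto pointer chosen when the socket was created (in the kernel, inet_recvmsg() calls sk->sk_prot->recvmsg). A minimal sketch of that dispatch; the struct and function names are hypothetical and the return values are pretend results chosen only to show which implementation ran.

```c
#include <stddef.h>

/* Hypothetical miniature of struct proto: a name plus one method pointer. */
struct proto_model {
    const char *name;
    int (*recvmsg)(char *buf, size_t len);
};

/* Hypothetical socket: remembers only which protocol implementation it uses. */
struct sock_model {
    const struct proto_model *sk_prot;
};

static int tcp_recvmsg_model(char *buf, size_t len)
{
    (void)buf;
    return (int)len;                 /* pretend the whole buffer was filled */
}

static int ping_recvmsg_model(char *buf, size_t len)
{
    (void)buf;
    (void)len;
    return 0;                        /* pretend no echo reply is queued */
}

static const struct proto_model tcp_prot_model  = { "TCP",  tcp_recvmsg_model };
static const struct proto_model ping_prot_model = { "PING", ping_recvmsg_model };

/* Generic entry point: knows nothing about TCP or PING, it just follows
 * the pointer chosen when the socket was created. */
static int sock_recvmsg_model(const struct sock_model *sk, char *buf, size_t len)
{
    return sk->sk_prot->recvmsg(buf, len);
}
```

Swapping sk_prot from tcp_prot_model to ping_prot_model changes the behavior of sock_recvmsg_model() without touching its code, which is the point of keeping tcp_prot and ping_prot as data rather than hard-coded calls.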

Some readers may ask: how does Ethernet data actually reach these instances?

Look at the code below: inet_init() calls dev_add_pack(&ip_packet_type), which adds the global instance ip_packet_type to the ptype_base hash table.

```c
void dev_add_pack(struct packet_type *pt)
{
    struct list_head *head = ptype_head(pt);

    spin_lock(&ptype_lock);
    list_add_rcu(&pt->list, head);
    spin_unlock(&ptype_lock);
}
EXPORT_SYMBOL(dev_add_pack);

static inline struct list_head *ptype_head(const struct packet_type *pt)
{
    if (pt->type == htons(ETH_P_ALL))
        return pt->dev ? &pt->dev->ptype_all : &ptype_all;
    else
        return pt->dev ? &pt->dev->ptype_specific :
                         &ptype_base[ntohs(pt->type) & PTYPE_HASH_MASK];
}
```
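ptype_head() picks the bucket by converting the frame type to host byte order and masking it with PTYPE_HASH_MASK. The sketch below assumes PTYPE_HASH_SIZE is 16 (the value defined in include/linux/netdevice.h) and replaces struct list_head with a hypothetical singly linked list; it only models the ordinary case where pt->type is not ETH_P_ALL and pt->dev is NULL, and it leaves out the ptype_lock spinlock and RCU.

```c
#include <arpa/inet.h>   /* htons(), ntohs() */
#include <stddef.h>
#include <stdint.h>

#define PTYPE_HASH_SIZE 16                    /* as in include/linux/netdevice.h */
#define PTYPE_HASH_MASK (PTYPE_HASH_SIZE - 1)

/* Hypothetical packet_type: type in network byte order plus a simple
 * "next" pointer in place of struct list_head. */
struct packet_type_model {
    uint16_t type;
    struct packet_type_model *next;
};

static struct packet_type_model *ptype_base_model[PTYPE_HASH_SIZE];

/* Same bucket choice as ptype_head(): host-order type & mask. */
static unsigned int ptype_bucket(uint16_t type_be)
{
    return ntohs(type_be) & PTYPE_HASH_MASK;
}

/* Like dev_add_pack() for a device-less, non-ETH_P_ALL entry:
 * insert at the head of the bucket, as list_add_rcu() does. */
static void add_pack_model(struct packet_type_model *pt)
{
    unsigned int b = ptype_bucket(pt->type);

    pt->next = ptype_base_model[b];
    ptype_base_model[b] = pt;
}
```

With a 16-bucket mask, ETH_P_IP (0x0800) and ETH_P_8021Q (0x8100) both land in bucket 0, ETH_P_ARP (0x0806) in bucket 6 and ETH_P_IPV6 (0x86DD) in bucket 13. Because different types can share a bucket, the receive path still compares each entry's type against skb->protocol while walking it.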

Network device initialization: net_dev_init

The global ptype_base[] hash table holds the protocol stack's handler entries.

```c
// file: net/core/dev.c
struct list_head ptype_base[PTYPE_HASH_SIZE] __read_mostly;   /* ETH_P_IP */
struct list_head ptype_all __read_mostly;                     /* Taps */
static struct list_head offload_base __read_mostly;           /* OffLoad */

static int __init net_dev_init(void)
{
    int i, rc = -ENOMEM;

    BUG_ON(!dev_boot_phase);

    if (dev_proc_init())
        goto out;

    if (netdev_kobject_init())
        goto out;

    INIT_LIST_HEAD(&ptype_all);              /* global tap list (ETH_P_ALL) */
    for (i = 0; i < PTYPE_HASH_SIZE; i++)
        INIT_LIST_HEAD(&ptype_base[i]);      /* global hash table */

    INIT_LIST_HEAD(&offload_base);

    if (register_pernet_subsys(&netdev_net_ops))
        goto out;

    /*
     * Initialise the packet receive queues.
     */
    for_each_possible_cpu(i) {
        struct softnet_data *sd = &per_cpu(softnet_data, i);

        skb_queue_head_init(&sd->input_pkt_queue);
        skb_queue_head_init(&sd->process_queue);
        INIT_LIST_HEAD(&sd->poll_list);
        sd->output_queue_tailp = &sd->output_queue;
#ifdef CONFIG_RPS
        sd->csd.func = rps_trigger_softirq;
        sd->csd.info = sd;
        sd->cpu = i;
#endif
        sd->backlog.poll = process_backlog;
        sd->backlog.weight = weight_p;
    }

    dev_boot_phase = 0;

    /* The loopback device is special if any other network devices
     * is present in a network namespace the loopback device must
     * be present. Since we now dynamically allocate and free the
     * loopback device ensure this invariant is maintained by
     * keeping the loopback device as the first device on the
     * list of network devices.  Ensuring the loopback devices
     * is the first device that appears and the last network device
     * that disappears.
     */
    if (register_pernet_device(&loopback_net_ops))
        goto out;

    if (register_pernet_device(&default_device_ops))
        goto out;

    open_softirq(NET_TX_SOFTIRQ, net_tx_action);
    open_softirq(NET_RX_SOFTIRQ, net_rx_action);

    hotcpu_notifier(dev_cpu_callback, 0);
    dst_subsys_init();
    rc = 0;
out:
    return rc;
}

subsys_initcall(net_dev_init);

static struct pernet_operations __net_initdata netdev_net_ops = {
    .init = netdev_init,
    .exit = netdev_exit,
};
```

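In other words, the global ptype_base[] hash array is initialized when the network device layer starts up (net_dev_init), the network protocols insert their entries into it when they initialize (inet_init), and when worker_thread() runs the workqueue, the receive path looks up the protocol stack's handler through the ptype_base[] hash array.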

Therefore, when the skb carries an ETH_P_IP frame (the Ethernet IP protocol family), pt_prev->func(skb, skb->dev, pt_prev, orig_dev) is ip_rcv().
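The name pt_prev comes from how the receive path walks a ptype_base bucket: it delays each call by one match, so every matching handler except the last receives the skb through deliver_skb(), which takes an extra reference first, and the last match is called directly as pt_prev->func(...) using the caller's own reference. The following is a simplified user-space model of that loop as it appears in mainline's __netif_receive_skb_core(); the struct names, the users counter and count_rcv() are hypothetical, and type values are kept in host byte order for readability.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical skb: protocol (host byte order here) and a reference count. */
struct skb_model {
    uint16_t protocol;
    int users;
};

/* Hypothetical packet_type entry in one ptype_base bucket. */
struct ptype_model {
    uint16_t type;
    int (*func)(struct skb_model *skb, struct ptype_model *pt);
    struct ptype_model *next;
};

static int calls;                     /* how many handlers ran */

static int count_rcv(struct skb_model *skb, struct ptype_model *pt)
{
    (void)skb;
    (void)pt;
    calls++;
    return 0;
}

/* Like deliver_skb(): take an extra reference, then call the handler. */
static int deliver_model(struct skb_model *skb, struct ptype_model *pt)
{
    skb->users++;
    return pt->func(skb, pt);
}

/* Walk one bucket; each match is called one step late, so only the
 * last match runs without an extra reference. */
static int receive_model(struct skb_model *skb, struct ptype_model *bucket)
{
    struct ptype_model *pt_prev = NULL;

    for (struct ptype_model *pt = bucket; pt != NULL; pt = pt->next) {
        if (pt->type != skb->protocol)
            continue;                 /* same bucket, different frame type */
        if (pt_prev != NULL)
            deliver_model(skb, pt_prev);
        pt_prev = pt;
    }
    if (pt_prev != NULL)
        return pt_prev->func(skb, pt_prev);   /* the pt_prev->func(...) call */
    return -1;                        /* no handler: the kernel drops the skb */
}
```

With two ETH_P_IP entries and one 802.1Q entry in the same bucket, both IP handlers run but only one extra reference is taken; when only a single handler such as ip_rcv() matches, no extra reference is needed at all.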

The ip_rcv() function

```c
/*
 * Main IP Receive routine.
 */
int ip_rcv(struct sk_buff *skb, struct net_device *dev, struct packet_type *pt, struct net_device *orig_dev)
{
    /* When the interface is in promisc. mode, drop all the crap
     * that it receives, do not try to analyse it.
     */
    if (skb->pkt_type == PACKET_OTHERHOST)
        goto drop;

    ......

    /* NF_HOOK: the NF_INET_PRE_ROUTING hook */
    return NF_HOOK(NFPROTO_IPV4, NF_INET_PRE_ROUTING,
                   net, NULL, skb, dev, NULL,
                   ip_rcv_finish);

csum_error:
    IP_INC_STATS_BH(net, IPSTATS_MIB_CSUMERRORS);
inhdr_error:
    IP_INC_STATS_BH(net, IPSTATS_MIB_INHDRERRORS);
drop:
    kfree_skb(skb);
out:
    return NET_RX_DROP;
}
```
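NF_HOOK() is what makes the call to ip_rcv_finish() conditional: the hooks registered at NF_INET_PRE_ROUTING (for example iptables PREROUTING rules) run first, and only if they all accept the packet is the okfn, here ip_rcv_finish(), called. A simplified model with hypothetical names, using verdict values that mirror NF_DROP (0) and NF_ACCEPT (1); real netfilter also has verdicts such as NF_STOLEN and NF_QUEUE, which are not modeled here.

```c
#include <stddef.h>

/* Verdict values mirroring netfilter's NF_DROP (0) and NF_ACCEPT (1). */
enum verdict_model { V_DROP = 0, V_ACCEPT = 1 };

/* Hypothetical packet: only the field the example hook inspects. */
struct pkt_model {
    int dport;
};

typedef enum verdict_model (*hook_fn)(const struct pkt_model *p);
typedef int (*okfn_t)(struct pkt_model *p);

/* Run the hooks of one hook point in priority order; call okfn only if
 * every hook accepted the packet. */
static int nf_hook_model(const hook_fn *hooks, size_t n,
                         struct pkt_model *p, okfn_t okfn)
{
    for (size_t i = 0; i < n; i++) {
        if (hooks[i](p) == V_DROP)
            return -1;                /* kernel: skb freed, error returned */
    }
    return okfn(p);                   /* in ip_rcv(): ip_rcv_finish() */
}

/* Example hooks. */
static enum verdict_model accept_all(const struct pkt_model *p)
{
    (void)p;
    return V_ACCEPT;
}

static enum verdict_model drop_telnet(const struct pkt_model *p)
{
    return p->dport == 23 ? V_DROP : V_ACCEPT;
}

static int finished;                  /* how many packets reached okfn */

static int finish_model(struct pkt_model *p)
{
    (void)p;
    finished++;
    return 0;
}
```

A packet to port 80 passes both hooks and reaches finish_model(); a packet to port 23 is dropped by drop_telnet() and never reaches it, just as a PREROUTING DROP rule stops a packet before ip_rcv_finish().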

看到 NF_HOOK(NFPROTO_IPV4, NF_INET_PRE_ROUTING,

net, NULL, skb, dev, NULL,

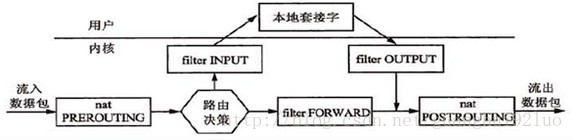

ip_rcv_finish);函数时;我们看到既熟悉、又陌生的NF_INET_PRE_ROUTING 字样;这个钩子函数就是有名的 iptables 的五链四表中的“路由前” 链。 函数 ip_rcv_finish() 也正是把 skb 送入ipv4协议处理流程,此篇分享暂时告一段段落。贴图一张,有助于感性认识协议栈。


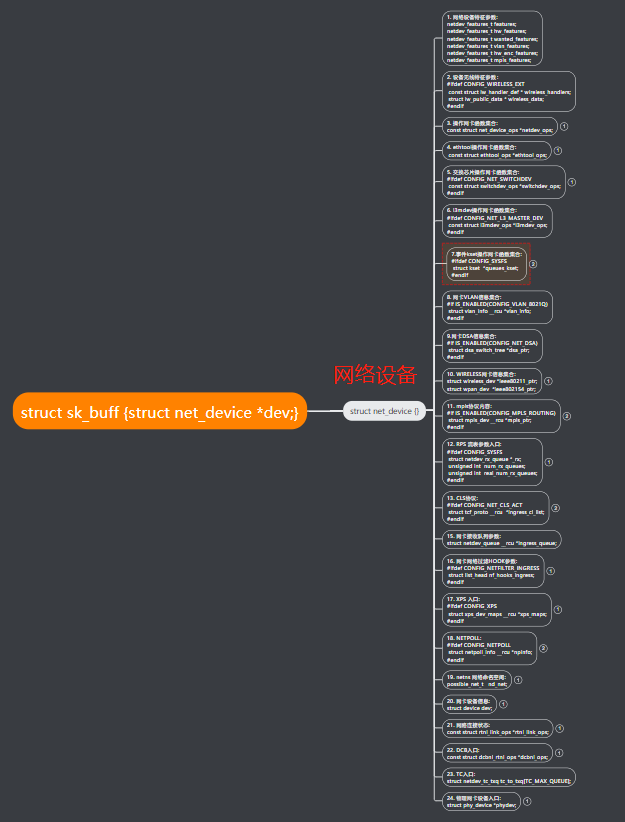

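This figure classifies the member variables of struct net_device. It is probably hard to read, and it does not need to be sharp: working through the source yourself is the easier way to build a complete picture.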

The next post will cover the routing table. Keep going, keep going, keep going!


If you find anything inaccurate in this walkthrough, please leave a comment and correct me. Thanks.

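Finally

That is all of the content collected and organized by 故意世界 on "Switch data receive driver framework and the MTK7621 integrated switch chip MT7530, explained (part 4)". For more related content, search other articles on 靠谱客.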
