From Wikipedia, the free encyclopedia

Sensor fusion is the combining of sensory data, or of data derived from disparate sources, such that the resulting information has less uncertainty than would be possible if these sources were used individually. Uncertainty reduction in this case can mean more accurate, more complete, or more dependable, or it can refer to the result of an emerging view, such as stereoscopic vision (the calculation of depth information by combining two-dimensional images from two cameras at slightly different viewpoints).[1][2]
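The stereoscopic case can be made concrete with a small sketch. It assumes two parallel, rectified pinhole cameras, for which depth follows the standard relation Z = f·B/d. The function name, parameter names, and numbers below are illustrative assumptions, not taken from the article.

```python
def stereo_depth(focal_length_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth (metres) of a point seen by two parallel, rectified cameras.

    Assumes the pinhole model: depth = focal length * baseline / disparity.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of both cameras")
    return focal_length_px * baseline_m / disparity_px


# Hypothetical rig: 700 px focal length, cameras 12 cm apart, 21 px disparity.
depth = stereo_depth(focal_length_px=700.0, baseline_m=0.12, disparity_px=21.0)
print(f"{depth:.2f} m")
```

Neither image alone carries depth; it only appears once the two views are combined, which is the "emerging view" sense of fusion.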

The data sources for a fusion process need not originate from identical sensors. One can distinguish direct fusion, indirect fusion, and fusion of the outputs of the former two. Direct fusion is the fusion of sensor data from a set of heterogeneous or homogeneous sensors, soft sensors, and history values of sensor data, while indirect fusion uses information sources such as a priori knowledge about the environment and human input.

Sensor fusion is also known as (multi-sensor) data fusion and is a subset of information fusion.

Sensory fusion is simply defined as the unification of visual excitations from corresponding retinal images into a single visual perception, that is, a single visual image. Single vision is the hallmark of retinal correspondence; double vision is the hallmark of retinal disparity.

Contents

1 Examples of sensors

2 Sensor fusion algorithms

3 Example sensor fusion calculations

4 Centralized versus decentralized

5 Levels

6 Applications

7 See also

8 References

9 External links

1. Examples of sensors

Radar

Sonar and other acoustic sensors

Infra-red / thermal imaging camera

TV cameras

Sonobuoys

Seismic sensors

Magnetic sensors

Electronic Support Measures (ESM)

Phased array

MEMS (microelectromechanical systems)

Accelerometers

Global Positioning System (GPS)

2. Sensor fusion algorithms

Sensor fusion is a term that covers a number of methods and algorithms, including:

Central Limit Theorem

Kalman filter

Bayesian networks

Dempster-Shafer theory

3. Example sensor fusion calculations

Two example sensor fusion calculations are illustrated below.

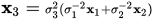

Let $\mathbf{x}_1$ and $\mathbf{x}_2$ denote two sensor measurements with noise variances $\sigma_1^2$ and $\sigma_2^2$, respectively. One way of obtaining a combined measurement $\mathbf{x}_3$ is to apply the Central Limit Theorem, which is also employed within the Fraser-Potter fixed-interval smoother, namely [3][4]

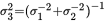

where $\sigma_3^2 = (\sigma_1^{-2} + \sigma_2^{-2})^{-1}$ is the variance of the combined estimate. It can be seen that the fused result is simply a linear combination of the two measurements, each weighted by the inverse of its noise variance.
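As a rough illustration, the calculation can be sketched in a few lines. The sketch assumes the combined measurement takes the usual inverse-variance form $\mathbf{x}_3 = \sigma_3^2(\sigma_1^{-2}\mathbf{x}_1 + \sigma_2^{-2}\mathbf{x}_2)$ with scalar measurements and independent noise. The function name and sample values are hypothetical.

```python
def fuse_measurements(x1: float, var1: float, x2: float, var2: float):
    """Fuse two scalar measurements by inverse-variance weighting.

    Returns (x3, var3), where var3 = 1 / (1/var1 + 1/var2) and
    x3 = var3 * (x1/var1 + x2/var2). Assumes independent noise.
    """
    if var1 <= 0 or var2 <= 0:
        raise ValueError("noise variances must be positive")
    var3 = 1.0 / (1.0 / var1 + 1.0 / var2)
    x3 = var3 * (x1 / var1 + x2 / var2)
    return x3, var3


# Hypothetical readings: a noisy sensor (variance 4) and a better one (variance 1).
x3, var3 = fuse_measurements(10.0, 4.0, 12.0, 1.0)
print(x3, var3)
```

The fused value lands closer to the lower-variance reading, and the fused variance is smaller than either input variance.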

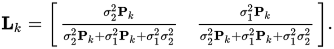

Another method to fuse two measurements is to use the optimal Kalman filter. Suppose that the data are generated by a first-order system and let $\mathbf{P}_k$ denote the solution of the filter's Riccati equation. By applying Cramer's rule within the gain calculation, it can be found that the filter gain is given by [4]

$$\mathbf{L}_k = \begin{bmatrix} \dfrac{\sigma_2^2 \mathbf{P}_k}{\sigma_2^2 \mathbf{P}_k + \sigma_1^2 \mathbf{P}_k + \sigma_1^2 \sigma_2^2} & \dfrac{\sigma_1^2 \mathbf{P}_k}{\sigma_2^2 \mathbf{P}_k + \sigma_1^2 \mathbf{P}_k + \sigma_1^2 \sigma_2^2} \end{bmatrix}$$

By inspection, when the first measurement is noise free, the filter ignores the second measurement, and vice versa. That is, the combined estimate is weighted by the quality of the measurements.
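This limiting behaviour can be checked numerically. The sketch below assumes a scalar state observed directly by both sensors (measurement matrix $[1\ 1]^T$, noise covariance $\mathrm{diag}(\sigma_1^2, \sigma_2^2)$) and treats $\mathbf{P}_k$ as the predicted error variance. It evaluates the standard gain $P H^T (H P H^T + R)^{-1}$ by inverting the 2×2 innovation covariance by hand. The function name and values are illustrative.

```python
def two_sensor_gain(p: float, var1: float, var2: float):
    """Kalman gain (l1, l2) for a scalar state measured directly by two sensors.

    Assumes H = [1, 1]^T and R = diag(var1, var2); p is the predicted error variance.
    """
    # Innovation covariance S = H p H^T + R is a symmetric 2x2 matrix.
    s11, s12, s22 = p + var1, p, p + var2
    det = s11 * s22 - s12 * s12
    if det == 0.0:
        raise ValueError("innovation covariance is singular")
    # Gain = p * [1, 1] * adj(S) / det (explicit 2x2 inverse, as with Cramer's rule).
    l1 = p * (s22 - s12) / det
    l2 = p * (s11 - s12) / det
    return l1, l2


# A noise-free first sensor takes all the weight; equal noise splits it evenly.
noise_free_first = two_sensor_gain(2.0, 0.0, 3.0)
equal_noise = two_sensor_gain(2.0, 1.0, 1.0)
print(noise_free_first, equal_noise)
```

With `var1 = 0` the second gain entry vanishes, so the second measurement has no influence on the estimate.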

4. Centralized versus decentralized

In sensor fusion, centralized versus decentralized refers to where the fusion of the data occurs. In centralized fusion, the clients simply forward all of the data to a central location, and some entity at the central location is responsible for correlating and fusing the data. In decentralized fusion, the clients take full responsibility for fusing the data. "In this case, every sensor or platform can be viewed as an intelligent asset having some degree of autonomy in decision-making."[5]

Multiple combinations of centralized and decentralized systems exist.
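The difference in where fusion happens can be sketched with a toy example. It assumes scalar readings with independent noise and uses inverse-variance weighting as the fusion rule. The node names and readings are hypothetical, and this is only one of many ways a decentralized scheme could be organized.

```python
from functools import reduce


def fuse(a, b):
    """Inverse-variance fusion of two (value, variance) pairs."""
    (xa, va), (xb, vb) = a, b
    v = 1.0 / (1.0 / va + 1.0 / vb)
    return (v * (xa / va + xb / vb), v)


def fuse_all(readings):
    """Fuse a non-empty list of (value, variance) pairs."""
    return reduce(fuse, readings)


# Hypothetical raw readings held by two nodes, as (value, variance).
node_a = [(20.1, 0.5), (19.8, 0.4)]
node_b = [(20.4, 1.0), (20.0, 0.8)]

# Centralized: every raw reading is forwarded and fused in one place.
central = fuse_all(node_a + node_b)

# Decentralized: each node fuses locally, then only the local estimates are combined.
local_estimates = [fuse_all(node_a), fuse_all(node_b)]
decentral = fuse_all(local_estimates)

print(central, decentral)
```

Under the independence assumption both routes give the same estimate; the decentralized route exchanges two local summaries instead of four raw readings.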

5. Levels

There are several categories or levels of sensor fusion that are commonly used.[6][7][8][9][10][11]

Level 0 – Data alignment

Level 1 – Entity assessment (e.g. signal/feature/object)

Tracking and object detection/recognition/identification

Level 2 – Situation assessment

Level 3 – Impact assessment

Level 4 – Process refinement (i.e. sensor management)

Level 5 – User refinement

6. Applications

One application of sensor fusion is GPS/INS, where Global Positioning System and Inertial Navigation System data are fused using various methods, e.g. the Extended Kalman Filter. This is useful, for example, in determining the attitude of an aircraft using low-cost sensors.[12] Another example is using the data fusion approach to determine the traffic state (low traffic, traffic jam, medium flow) from acoustic, image, and sensor data collected at the roadside.[13]
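The predict/update pattern behind GPS/INS fusion can be sketched with a much simpler stand-in. This is a scalar linear Kalman filter, not the Extended Kalman Filter mentioned above. It assumes a one-dimensional position, an INS-derived velocity used for dead reckoning, and a GPS position fix at each step. All names, noise values, and data are hypothetical.

```python
def fuse_gps_ins(gps_positions, ins_velocities, dt, gps_var, process_var,
                 x0=0.0, p0=1.0):
    """Scalar Kalman filter: predict with INS velocity, correct with GPS position.

    Returns (estimates, final_variance).
    """
    x, p = x0, p0
    estimates = []
    for z, v in zip(gps_positions, ins_velocities):
        # Predict: dead-reckon with the INS velocity; uncertainty grows.
        x = x + v * dt
        p = p + process_var
        # Update: blend in the GPS fix according to the relative uncertainties.
        k = p / (p + gps_var)
        x = x + k * (z - x)
        p = (1.0 - k) * p
        estimates.append(x)
    return estimates, p


# Hypothetical data: a vehicle moving at about 1 m/s with noisy GPS fixes.
gps = [1.2, 1.9, 3.1, 4.0]
ins_vel = [1.0, 1.0, 1.0, 1.0]
estimates, final_var = fuse_gps_ins(gps, ins_vel, dt=1.0, gps_var=0.5, process_var=0.1)
print(estimates, final_var)
```

The INS supplies smooth short-term motion while the GPS bounds long-term drift; the gain `k` decides how much each step trusts the new fix.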

A practical example of how to combine data from different displacement and position sensors in order to obtain high bandwidth at high resolution can be found in a master's thesis.[14] The methods applied there include optimal filtering (in the sense of minimizing, e.g., the energy norm) and the MIMO Kalman filter.

7. See also

Data fusion

Image fusion

Information integration

Data mining

Information: Information is not data

Data (computing)

Multimodal integration

Fisher's method for combining independent tests of significance

Transducer Markup Language (TML), an XML-based markup language that enables sensor fusion

Brooks–Iyengar algorithm

Inertial navigation system

Sensor Grid

Semantic Perception

8. References 参考

1) Elmenreich, W. (2002). Sensor Fusion in Time-Triggered Systems, PhD Thesis (PDF). Vienna, Austria: Vienna University of Technology. p. 173.

2) Haghighat, M. B. A., Aghagolzadeh, A., & Seyedarabi, H. (2011). Multi-focus image fusion for visual sensor networks in DCT domain. Computers & Electrical Engineering, 37(5), 789-797.

3) Maybeck, S. (1982). Stochastic Models, Estimation, and Control. River Edge, NJ: Academic Press.

4) Einicke, G.A. (2012). Smoothing, Filtering and Prediction: Estimating the Past, Present and Future. Rijeka, Croatia: Intech. ISBN 978-953-307-752-9.

5) N. Xiong; P. Svensson (2002). "Multi-sensor management for information fusion: issues and approaches". Information Fusion 3 (2): 163–186.

6) Rethinking JDL Data Fusion Levels

7) Blasch, E., Plano, S. (2003) “Level 5: User Refinement to aid the Fusion Process”, Proceedings of the SPIE, Vol. 5099.

8) J. Llinas; C. Bowman; G. Rogova; A. Steinberg; E. Waltz; F. White (2004). Revisiting the JDL data fusion model II. International Conference on Information Fusion. CiteSeerX: 10.1.1.58.2996.

9) Blasch, E. (2006) "Sensor, user, mission (SUM) resource management and their interaction with level 2/3 fusion" International Conference on Information Fusion.

10) http://defensesystems.com/articles/2009/09/02/c4isr1-sensor-fusion.aspx

11) Blasch, E., Steinberg, A., Das, S., Llinas, J., Chong, C.-Y., Kessler, O., Waltz, E., White, F. (2013) "Revisiting the JDL model for information Exploitation," International Conference on Information Fusion.

12) Gross, Jason; Yu Gu; Matthew Rhudy; Srikanth Gururajan; Marcello Napolitano (July 2012). "Flight Test Evaluation of Sensor Fusion Algorithms for Attitude Estimation". IEEE Transactions on Aerospace and Electronic Systems 48 (3): 2128–2139. doi:10.1109/TAES.2012.6237583.

13) Joshi, V., Rajamani, N., Takayuki, K., Prathapaneni, N., Subramaniam, L. V., (2013). Information Fusion Based Learning for Frugal Traffic State Sensing. Proceedings of the Twenty-Third International Joint Conference on Artificial Intelligence.

14) Piri, Daniel (2014). "Sensor Fusion in Nanopositioning". Vienna, Austria: Vienna University of Technology. p. 140.

J. L. Crowley and Y. Demazeau Principles and Techniques for Sensor Data Fusion Signal Processing, Volume 32, Issues 1–2, May 1993, Pages 5–27

9. External links

International Society of Information Fusion: http://www.isif.org/

https://en.wikipedia.org/wiki/Sensor_fusion

Reposted from: https://www.cnblogs.com/2008nmj/p/5941028.html
