From a simulated dataset to curve fitting

# -*- coding: utf-8 -*-
"""
Created on Tue Sep 5 21:21:58 2017

@author: wjw

Generate a simulated dataset, then fit a curve to it.
"""

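def nomalization(X):  # without normalization the values in gradient descent grow too large and raise errors
    # Min-max scaling: map every x into [0, 1]
    maxX = max(X)
    minX = min(X)
    normalized_X = []
    for x in X:
        normalized_X.append((x-minX)/(maxX-minX))
    return normalized_X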

def gradicent(X_train, Y_train, a, b, c, d):
    # The model being fitted is a*x**3 + b*x**2 + c*x + d
    n = 0
    max_itor = 20000
    alpha = 0.02
    epslion = 1e-8
    error1 = 0
    error2 = 0
    while True:
        n += 1
        if n > max_itor:
            break
        for i in range(len(X_train)):  # visit each sample in turn
            x = X_train[i]
            y = Y_train[i]
            # compute the residual once so all four updates use the same prediction
            residual = a*x**3 + b*x**2 + c*x + d - y
            a -= alpha*residual*x**3
            b -= alpha*residual*x**2
            c -= alpha*residual*x
            d -= alpha*residual
            # accumulate the squared error first, average it after the pass
            error2 += (y - (a*x**3 + b*x**2 + c*x + d))**2
        error2 = error2/len(X_train)  # mean squared error for this pass
        if n % 1000 == 0:
            print('times:%d' % n)
            # change in mean error between consecutive passes
            print('error:%f,train_accuracy:%f' % (abs(error2-error1), calculate_acuracy(a, b, c, d, X_train, Y_train)))
        if abs(error2-error1) < epslion:
            print('congratulation!')
            print('n:', n)
            break
        error1 = error2
        error2 = 0
    return (a, b, c, d)

def calculate_acuracy(a, b, c, d, X, Y):
    # Despite the name, this returns the mean squared error (lower is better)
    accuracy = 0
    for i in range(len(X)):
        x = X[i]
        y = Y[i]
        accuracy += (y - (a*x**3 + b*x**2 + c*x + d))**2
    avg_accuracy = accuracy/len(X)
    return avg_accuracy

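if __name__ == "__main__":
    import numpy as np
    import matplotlib.pyplot as plt
    import math
    # sklearn.cross_validation was removed in scikit-learn 0.20; model_selection replaces it
    from sklearn.model_selection import train_test_split

    x = np.arange(0, 100, 0.1)
    # log2 curve plus uniform noise in [0, 0.3); np.random.random() returns a plain scalar
    y = list(map(lambda x: math.log2(x+1) + np.random.random()*0.3, x))
    X_train, X_test, Y_train, Y_test = train_test_split(x, y, test_size=0.4, random_state=1)
    plt.plot(X_train, Y_train, 'ro')  # plot the training samples
    X_train = nomalization(X_train)
    A, B, C, D = gradicent(X_train, Y_train, 0.1, 0.1, 0.2, 0.2)
    print(A, B, C, D)
    X = np.arange(0, 100, 0.01)
    # note: each set is rescaled by its own min/max; all of them span roughly [0, 100)
    normalized_X = nomalization(X)
    Y = list(map(lambda x: A*(x**3) + B*x**2 + C*x + D, normalized_X))
    plt.plot(X, Y, color='blue')
    plt.show()
    X_test = nomalization(X_test)
    avg_accuracy = calculate_acuracy(A, B, C, D, X_test, Y_test)
    print('test_accuracy:', avg_accuracy)

Pitfall: during gradient descent the interpreter warned that values had gone out of range unless alpha was set very small. Cause and fix: computing the gradient involves x^3, and the error is accumulated on top of that, so the values can exceed the floating-point range. Normalizing x before it enters the computation solves the problem.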
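To make the overflow concrete, here is a minimal sketch that runs the same per-sample update rule on raw and on min-max-scaled inputs. It is not the original script: the helper name `sgd_cubic`, the noise-free data, and the choice of five passes are assumptions made only for illustration.

```python
import math

def sgd_cubic(xs, ys, alpha=0.02, passes=5):
    """Run a few SGD passes on a*x**3 + b*x**2 + c*x + d and return (a, b, c, d).

    Hypothetical helper for this illustration, not part of the original script.
    """
    a, b, c, d = 0.1, 0.1, 0.2, 0.2  # same starting point as the script
    for _ in range(passes):
        for x, y in zip(xs, ys):
            residual = a*x**3 + b*x**2 + c*x + d - y
            a -= alpha * residual * x**3
            b -= alpha * residual * x**2
            c -= alpha * residual * x
            d -= alpha * residual
    return a, b, c, d

# Noise-free version of the script's data: x in [0, 100), y = log2(x + 1)
raw_x = [i / 10 for i in range(1000)]
ys = [math.log2(x + 1) for x in raw_x]

# Min-max scaling to [0, 1], as nomalization() does
lo, hi = min(raw_x), max(raw_x)
scaled_x = [(x - lo) / (hi - lo) for x in raw_x]

raw_params = sgd_cubic(raw_x, ys)
scaled_params = sgd_cubic(scaled_x, ys)

print('largest x**3, raw:   ', max(raw_x) ** 3)
print('largest x**3, scaled:', max(scaled_x) ** 3)
print('raw params finite:   ', all(math.isfinite(p) for p in raw_params))
print('scaled params finite:', all(math.isfinite(p) for p in scaled_params))
```

With alpha = 0.02 the raw run should end with non-finite parameters, because each update is multiplied by terms as large as x^6, while the scaled run keeps every term at or below 1 and stays finite.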

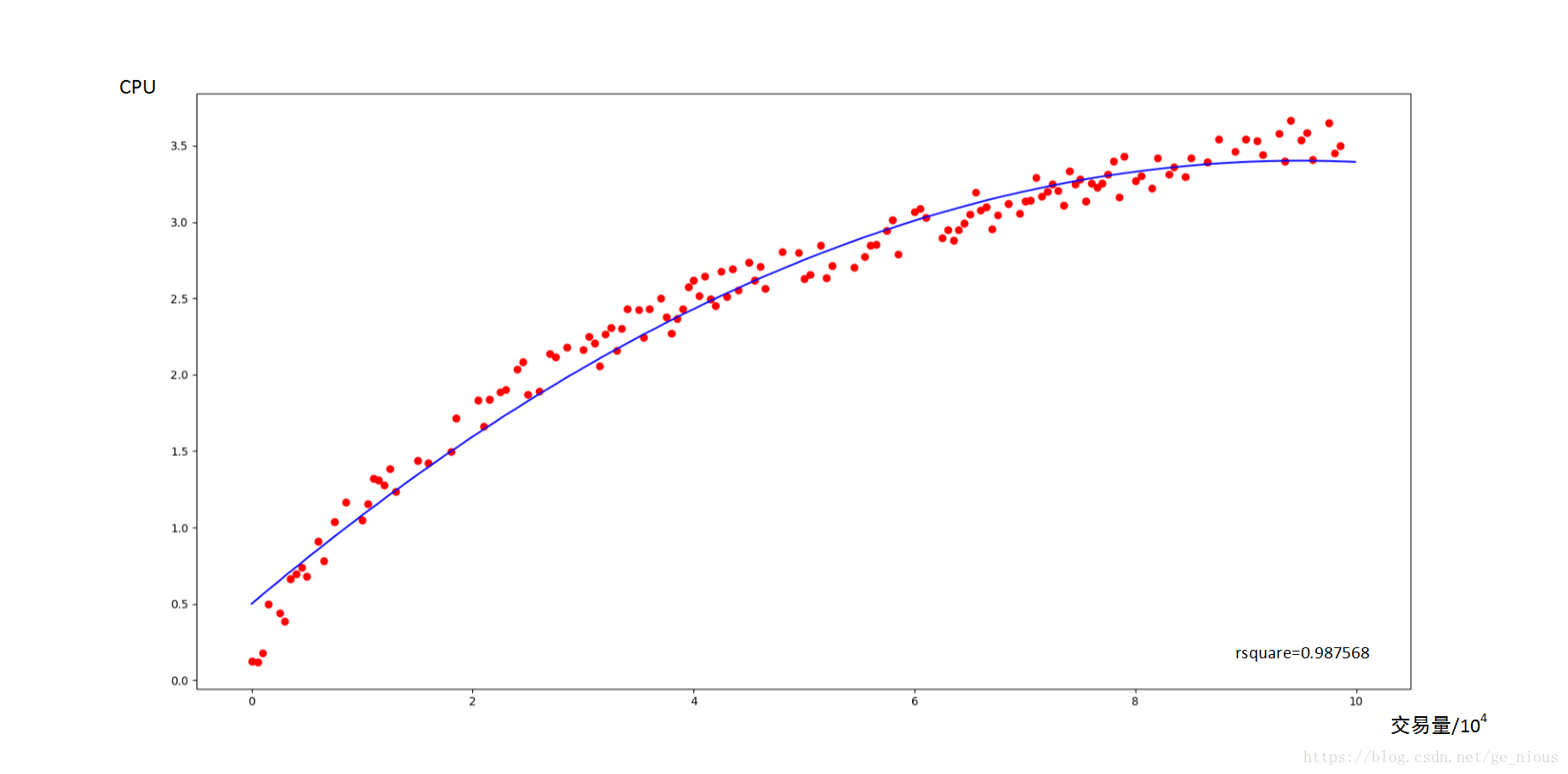

效果如下:
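One way to sanity-check the fitted curve is to compare it with a closed-form least-squares cubic on the same normalized inputs. The sketch below uses `numpy.polyfit` for that reference; it is an added cross-check rather than part of the original script, and the seed, the variable names, and the `mse` helper are assumptions.

```python
import numpy as np

rng = np.random.default_rng(1)  # fixed seed (an arbitrary choice) for repeatability

# Same shape of data as the script: log2 curve plus uniform noise in [0, 0.3)
x = np.arange(0, 100, 0.1)
y = np.log2(x + 1) + rng.random(x.size) * 0.3

# Min-max scale x to [0, 1], matching nomalization()
x_scaled = (x - x.min()) / (x.max() - x.min())

# Closed-form least-squares cubic; coefficients come back highest power first
a, b, c, d = np.polyfit(x_scaled, y, 3)

def mse(coeffs, xs, ys):
    """Mean squared error of the polynomial with the given coefficients."""
    return float(np.mean((np.polyval(coeffs, xs) - ys) ** 2))

reference_mse = mse([a, b, c, d], x_scaled, y)
initial_mse = mse([0.1, 0.1, 0.2, 0.2], x_scaled, y)  # the script's starting guess

print('reference coefficients:', a, b, c, d)
print('reference MSE:', reference_mse)
print('MSE at the starting guess:', initial_mse)
```

The least-squares MSE is the lowest any cubic can reach on this data, so the value printed by the gradient-descent script for `train_accuracy` should approach it as training converges, though the two runs use different random noise and will not match exactly.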
