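Contents

- Preface

- Code implementation

- 1. Load libraries and check for a GPU

- 2. Load and preprocess the data

- 3. Load the VGG model and build the training routine

- 4. Build the test routine

- Test results and improvements

- Improvement

- Further improvement

- Entering the practice competition

- 1. Build the test routine for the competition dataset

- 2. Submit the results

- Summary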

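Preface

This assignment is an entry in the practice round of the Dogs vs. Cats competition that Kaggle held in 2013: given an input image, decide whether it shows a cat or a dog. We use a VGG network pretrained on ImageNet. Because the original network classifies images into 1000 classes, we apply transfer learning and fine-tune it: the earlier layers are frozen and used as a feature extractor, and only the last two layers (replaced in the code by a new `nn.Linear(4096, 2)` and a `LogSoftmax`) are retrained. Everything runs on Google Colab. The predictions are then written in the format the competition requires and uploaded for online evaluation.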
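To make the effect of freezing concrete, the sketch below counts parameters by hand. It assumes the standard VGG16 layout (thirteen 3x3 convolutions followed by three fully connected layers); the helper names are illustrative and not part of the assignment code.

```python
# Standard VGG16 convolution layout; "M" marks a max-pooling layer (no parameters).
VGG16_CONV_CFG = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
                  512, 512, 512, "M", 512, 512, 512, "M"]


def conv_params(cfg, in_channels=3, kernel=3):
    """Count weights and biases of the 3x3 convolution stack."""
    total = 0
    for out_channels in cfg:
        if out_channels == "M":
            continue
        total += in_channels * out_channels * kernel * kernel + out_channels
        in_channels = out_channels
    return total


def linear_params(n_in, n_out):
    """Count weights and biases of one fully connected layer."""
    return n_in * n_out + n_out


def vgg16_params(num_classes):
    """Return (total, final_layer) parameter counts for a given class count."""
    final = linear_params(4096, num_classes)
    body = (conv_params(VGG16_CONV_CFG)
            + linear_params(512 * 7 * 7, 4096)
            + linear_params(4096, 4096))
    return body + final, final


total_1000, _ = vgg16_params(1000)
total_2, trainable_2 = vgg16_params(2)
print(total_1000)            # 138357544
print(total_2, trainable_2)  # 134268738 8194
```

With every pretrained layer frozen, only the 8,194 parameters of the new two-class linear layer receive gradient updates, which is why a single epoch on Colab is already feasible.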

Code implementation

1. Load libraries and check for a GPU

import numpy as np
import matplotlib.pyplot as plt
import os
import torch
import torch.nn as nn
import torchvision
from torchvision import models, transforms, datasets
import time
import json

# Check whether a GPU is available
device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
print('Using gpu: %s ' % torch.cuda.is_available())

2. Load and preprocess the data

# Download the data
! wget http://fenggao-image.stor.sinaapp.com/dogscats.zip
! unzip dogscats.zip

# Normalize the images with the ImageNet channel means and standard deviations
normalize = transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])
vgg_format = transforms.Compose([
    transforms.CenterCrop(224),
    transforms.ToTensor(),
    normalize,
])

data_dir = './dogscats'  # image directory
dsets = {x: datasets.ImageFolder(os.path.join(data_dir, x), vgg_format)
         for x in ['train', 'valid']}
dset_sizes = {x: len(dsets[x]) for x in ['train', 'valid']}
dset_classes = dsets['train'].classes

# Split the data into a training set and a validation set
loader_train = torch.utils.data.DataLoader(dsets['train'], batch_size=64, shuffle=True, num_workers=6)
loader_valid = torch.utils.data.DataLoader(dsets['valid'], batch_size=5, shuffle=False, num_workers=6)
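As a rough illustration of what the preprocessing and the two loaders do, the sketch below applies the same per-channel normalization to a single RGB pixel and works out how many batches each loader yields. The function names are illustrative; the dataset sizes 1800 and 2000 are taken from the logs in this article.

```python
import math

MEAN = [0.485, 0.456, 0.406]
STD = [0.229, 0.224, 0.225]


def normalize_pixel(rgb, mean=MEAN, std=STD):
    """Apply (value - mean) / std per channel, as transforms.Normalize does."""
    return [(c - m) / s for c, m, s in zip(rgb, mean, std)]


def denormalize_pixel(rgb, mean=MEAN, std=STD):
    """Invert the normalization, e.g. before displaying an image."""
    return [c * s + m for c, m, s in zip(rgb, mean, std)]


def num_batches(dataset_size, batch_size):
    """Number of batches a DataLoader yields when the last partial batch is kept."""
    return math.ceil(dataset_size / batch_size)


def last_batch_size(dataset_size, batch_size):
    """Size of the final batch."""
    remainder = dataset_size % batch_size
    return remainder if remainder else batch_size


white = [1.0, 1.0, 1.0]  # ToTensor() scales pixel values into [0, 1]
print(normalize_pixel(white))
print(num_batches(1800, 64), last_batch_size(1800, 64))  # 29 8
print(num_batches(2000, 5), last_batch_size(2000, 5))    # 400 5
```

The final training batch of 8 images matches the last two training log lines, where the counter moves from 1792 to 1800.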

3. Load the VGG model and build the training routine

# Load the VGG model
# (newer torchvision releases use the `weights=` argument instead of `pretrained=True`)
model_vgg = models.vgg16(pretrained=True)
model_vgg_new = model_vgg

# To freeze the earlier layers during training, set requires_grad = False
for param in model_vgg_new.parameters():
    param.requires_grad = False

# Reuse the pretrained model and replace the last nn.Linear layer (1000 classes) with a 2-class one
model_vgg_new.classifier._modules['6'] = nn.Linear(4096, 2)
model_vgg_new.classifier._modules['7'] = torch.nn.LogSoftmax(dim=1)
model_vgg_new = model_vgg_new.to(device)
# print(model_vgg_new.classifier)

# Step 1: create the loss function and the optimizer.
# NLLLoss() takes a vector of log-probabilities and a target label.
# It does not compute the log-probabilities itself, so it suits a network
# whose last layer is log_softmax().
criterion = nn.NLLLoss()

# Learning rate
lr = 0.001

# Stochastic gradient descent, updating only the new final linear layer
optimizer_vgg = torch.optim.SGD(model_vgg_new.classifier[6].parameters(), lr=lr)

# Step 2: train the model
def train_model(model, dataloader, size, epochs=1, optimizer=None):
    model.train()
    for epoch in range(epochs):
        running_loss = 0.0
        running_corrects = 0
        count = 0
        for inputs, classes in dataloader:
            inputs = inputs.to(device)
            classes = classes.to(device)
            outputs = model(inputs)
            loss = criterion(outputs, classes)
            optimizer.zero_grad()
            loss.backward()
            optimizer.step()
            _, preds = torch.max(outputs, 1)
            # statistics
            running_loss += loss.item()
            running_corrects += torch.sum(preds == classes).item()
            count += len(inputs)
            print('Training: No. ', count, ' process ... total: ', size)
        # running_loss sums per-batch mean losses, so this value is
        # roughly the mean per-sample loss divided by the batch size
        epoch_loss = running_loss / size
        epoch_acc = running_corrects / size
        print('Loss: {:.4f} Acc: {:.4f}'.format(
            epoch_loss, epoch_acc))
    torch.save(model, '/content/drive/MyDrive/Colab Notebooks/path1.pth')

# Train the model
train_model(model_vgg_new, loader_train, size=dset_sizes['train'], epochs=1,
            optimizer=optimizer_vgg)
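The pairing of a `LogSoftmax` output layer with `NLLLoss` can be checked by hand. The following is a minimal pure-Python sketch of both operations, assuming the default mean reduction over the batch; the logits and targets are made-up values, not outputs of the model above.

```python
import math


def log_softmax(logits):
    """Numerically stable log-softmax of one row of raw scores."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return [z - log_sum for z in logits]


def nll_loss(log_probs_batch, targets):
    """Mean negative log-probability assigned to the correct class."""
    picked = [-row[t] for row, t in zip(log_probs_batch, targets)]
    return sum(picked) / len(picked)


# Hypothetical raw scores for two images and their true class indices
batch_logits = [[2.0, 0.5], [0.0, 0.0]]
targets = [0, 1]

log_probs = [log_softmax(row) for row in batch_logits]
print(nll_loss(log_probs, targets))
```

For the second image both classes get probability 0.5, so its contribution to the loss is ln 2, the value a two-class model produces when it is completely undecided.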

4. Build the test routine

def test_model(model, dataloader, size):
    model.eval()
    predictions = np.zeros(size)
    all_classes = np.zeros(size)
    all_proba = np.zeros((size, 2))  # holds log-probabilities (LogSoftmax outputs)
    i = 0
    running_loss = 0.0
    running_corrects = 0
    # No gradients are needed for evaluation
    with torch.no_grad():
        for inputs, classes in dataloader:
            inputs = inputs.to(device)
            classes = classes.to(device)
            outputs = model(inputs)
            loss = criterion(outputs, classes)
            _, preds = torch.max(outputs, 1)
            # statistics
            running_loss += loss.item()
            running_corrects += torch.sum(preds == classes).item()
            predictions[i:i+len(classes)] = preds.to('cpu').numpy()
            all_classes[i:i+len(classes)] = classes.to('cpu').numpy()
            all_proba[i:i+len(classes), :] = outputs.to('cpu').numpy()
            i += len(classes)
            print('Testing: No. ', i, ' process ... total: ', size)
    epoch_loss = running_loss / size
    epoch_acc = running_corrects / size
    print('Loss: {:.4f} Acc: {:.4f}'.format(
        epoch_loss, epoch_acc))
    return predictions, all_proba, all_classes

predictions, all_proba, all_classes = test_model(model_vgg_new, loader_valid, size=dset_sizes['valid'])
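Because the network ends in `LogSoftmax`, the `all_proba` array returned by `test_model` contains log-probabilities rather than probabilities. The sketch below shows, on made-up values in plain lists, how to convert them back and how to recompute the accuracy from the returned arrays; the helper names are illustrative.

```python
import math


def to_probabilities(log_probs):
    """Exponentiate log-probabilities so that each row sums to 1."""
    return [[math.exp(v) for v in row] for row in log_probs]


def accuracy(predictions, labels):
    """Fraction of predictions that match the labels."""
    correct = sum(1 for p, y in zip(predictions, labels) if p == y)
    return correct / len(labels)


# Hypothetical stand-ins for the three arrays returned by test_model
all_proba = [[math.log(0.9), math.log(0.1)],
             [math.log(0.3), math.log(0.7)],
             [math.log(0.6), math.log(0.4)]]
predictions = [0, 1, 0]
all_classes = [0, 1, 1]

probs = to_probabilities(all_proba)
print(probs[0])
print(accuracy(predictions, all_classes))
```

On the real NumPy arrays the same conversion is `np.exp(all_proba)`. Which index means "cat" and which means "dog" depends on the alphabetical order of the class folder names, available in `dset_classes`.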

Test results and improvements

First training run

Training: No. 1664 process ... total: 1800

Training: No. 1728 process ... total: 1800

Training: No. 1792 process ... total: 1800

Training: No. 1800 process ... total: 1800

Loss: 0.0065 Acc: 0.8433

First validation run

Testing: No. 1990 process ... total: 2000

Testing: No. 1995 process ... total: 2000

Testing: No. 2000 process ... total: 2000

Loss: 0.0487 Acc: 0.9455

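The pretrained model, with only its final layer retrained for one epoch, already separates cats from dogs well: validation accuracy is 0.9455.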
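One caveat when reading the `Loss` values: `train_model` and `test_model` add up the mean loss of each batch and then divide by the dataset size, so the printed number is roughly the mean per-sample loss divided by the batch size. The sketch below reproduces that bookkeeping on made-up per-sample losses; `reported_loss` is an illustrative helper, not code from the assignment.

```python
def reported_loss(per_sample_losses, batch_size):
    """Mimic `running_loss / size`: sum of per-batch mean losses over dataset size."""
    size = len(per_sample_losses)
    running = 0.0
    for start in range(0, size, batch_size):
        batch = per_sample_losses[start:start + batch_size]
        running += sum(batch) / len(batch)
    return running / size


def approx_per_sample_loss(reported, batch_size):
    """Undo the extra division (exact only when all batches are full)."""
    return reported * batch_size


# Hypothetical data: every sample has loss 0.4, 30 full batches of 64
losses = [0.4] * 1920
reported = reported_loss(losses, batch_size=64)
print(reported, approx_per_sample_loss(reported, 64))
```

Since training uses a batch size of 64 and validation a batch size of 5, the two printed losses are on different scales. Rescaled this way, the first run corresponds to roughly 0.42 per sample in training and 0.24 in validation.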

Improvement

We improve the model by switching the optimizer to Adam; epochs stays at 1.

optimizer_vgg = torch.optim.Adam(model_vgg_new.classifier[6].parameters(),lr = lr)

Second training run

Training: No. 1664 process ... total: 1800

Training: No. 1728 process ... total: 1800

Training: No. 1792 process ... total: 1800

Training: No. 1800 process ... total: 1800

Loss: 0.0024 Acc: 0.9411

Second validation run

Testing: No. 1985 process ... total: 2000

Testing: No. 1990 process ... total: 2000

Testing: No. 1995 process ... total: 2000

Testing: No. 2000 process ... total: 2000

Loss: 0.0106 Acc: 0.9780

After switching the optimizer to Adam, validation accuracy rises from 0.9455 to 0.9780, a clear improvement.
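One possible factor behind the difference, offered as an illustration rather than a verified explanation of these runs: with the same learning rate, a plain SGD step scales with the gradient, while Adam normalizes the step by a running estimate of the gradient magnitude. The sketch below implements both update rules for a single scalar parameter, using the default Adam hyperparameters of `torch.optim.Adam`; the gradient value is made up.

```python
import math


def sgd_step(param, grad, lr=0.001):
    """Plain SGD: move against the gradient, scaled by the learning rate."""
    return param - lr * grad


def adam_step(param, grad, state, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update; `state` carries the step count and moment estimates."""
    state["t"] += 1
    state["m"] = beta1 * state["m"] + (1 - beta1) * grad
    state["v"] = beta2 * state["v"] + (1 - beta2) * grad * grad
    m_hat = state["m"] / (1 - beta1 ** state["t"])  # bias-corrected mean
    v_hat = state["v"] / (1 - beta2 ** state["t"])  # bias-corrected variance
    return param - lr * m_hat / (math.sqrt(v_hat) + eps)


param, grad = 1.0, 0.01  # hypothetical parameter and small gradient
state = {"t": 0, "m": 0.0, "v": 0.0}

print(param - sgd_step(param, grad))          # step size proportional to grad
print(param - adam_step(param, grad, state))  # step size close to lr
```

For a small gradient such as 0.01, the first Adam step is about one hundred times larger than the SGD step, so in a single epoch with `lr = 0.001` Adam can move the new layer's weights much further.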

Further improvement

For the third run the optimizer is still Adam and epochs is raised to 20.

optimizer_vgg = torch.optim.Adam(model_vgg_new.classifier[6].parameters(), lr=lr)
train_model(model_vgg_new, loader_train, size=dset_sizes['train'], epochs=20,
            optimizer=optimizer_vgg)

Third training run

Training: No. 1600 process ... total: 1800

Training: No. 1664 process ... total: 1800

Training: No. 1728 process ... total: 1800

Training: No. 1792 process ... total: 1800

Training: No. 1800 process ... total: 1800

Loss: 0.0004 Acc: 0.9906

Third validation run

Testing: No. 1985 process ... total: 2000

Testing: No. 1990 process ... total: 2000

Testing: No. 1995 process ... total: 2000

Testing: No. 2000 process ... total: 2000

Loss: 0.0097 Acc: 0.9795

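Raising epochs to 20 also helps, though only slightly on the validation set: accuracy goes from 0.9780 to 0.9795, while training accuracy reaches 0.9906. This model is saved as path3.pth.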

Entering the practice competition

1. Build the test routine for the competition dataset

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
normalize = transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])
vgg_format = transforms.Compose([
    transforms.CenterCrop(224),
    transforms.ToTensor(),
    normalize,
])

dsets_mine = datasets.ImageFolder(r'/content/drive/MyDrive/test1', vgg_format)
loader_test = torch.utils.data.DataLoader(dsets_mine, batch_size=1, shuffle=False, num_workers=0)

# Load the previously saved path3.pth
# (recent PyTorch releases may need weights_only=False to load a fully pickled model)
model_vgg_new = torch.load(r'/content/drive/MyDrive/Colab Notebooks/path3.pth', map_location=device)
model_vgg_new = model_vgg_new.to(device)

dic = {}

def test2(model, dataloader, size):
    model.eval()
    cnt = 0
    with torch.no_grad():
        for inputs, _ in dataloader:
            inputs = inputs.to(device)
            outputs = model(inputs)
            _, preds = torch.max(outputs, 1)
            # Use the file name without its extension as the key, because the
            # images in dsets_mine are not listed in numeric order.
            # batch_size=1 and shuffle=False keep cnt aligned with dsets_mine.imgs.
            key = os.path.splitext(os.path.basename(dsets_mine.imgs[cnt][0]))[0]
            dic[key] = preds[0].item()
            cnt = cnt + 1
            print(cnt)  # show progress

test2(model_vgg_new, loader_test, size=2000)

# Write the CSV file ('w' so that rerunning does not append duplicate rows)
with open("/content/drive/MyDrive/Colab Notebooks/result.csv", 'w') as f:
    for key in range(2000):
        f.write("{},{}\n".format(key, dic[str(key)]))
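The reason for keying the predictions by file name is that `ImageFolder` lists files in lexicographic order, so `10.jpg` comes before `2.jpg`. The sketch below shows the effect on a handful of paths and writes the rows back in numeric order with the standard `csv` module. The `.jpg` extension, the sample labels and the helper names are assumptions made for illustration.

```python
import csv
import io
import os


def image_id(path):
    """Extract the numeric id from a path such as '.../test/10.jpg'."""
    return int(os.path.splitext(os.path.basename(path))[0])


def write_submission(predictions, stream):
    """Write 'id,label' rows sorted by numeric id."""
    writer = csv.writer(stream, lineterminator="\n")
    for key in sorted(predictions):
        writer.writerow([key, predictions[key]])


# Lexicographic sorting, as a directory listing would produce it
paths = sorted("/content/drive/MyDrive/test1/test/{}.jpg".format(i)
               for i in (0, 1, 2, 10))
print([os.path.basename(p) for p in paths])  # ['0.jpg', '1.jpg', '10.jpg', '2.jpg']

# Hypothetical predicted labels, in the same order as `paths`
labels = [0, 1, 1, 0]
predictions = {image_id(p): label for p, label in zip(paths, labels)}

buffer = io.StringIO()
write_submission(predictions, buffer)
print(buffer.getvalue())
```

Writing to an in-memory buffer keeps the example self-contained; passing an open file object instead produces the actual submission file.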

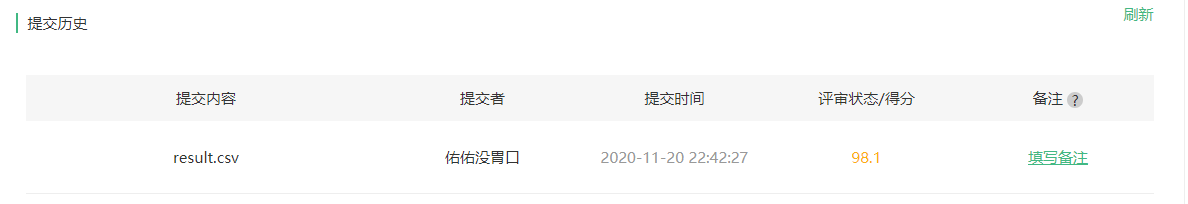

2. Submit the results

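Summary

1. Switching the optimizer from SGD to Adam and increasing the number of epochs clearly improved the trained model: validation accuracy rose from 0.9455 to 0.9795. This time epochs was only raised to 20; training longer may bring further gains.

2. Because of hardware limits, this assignment used a reduced training set. With the full training set of 20,000 images the results might be better.

3. In engineering practice, transfer learning cuts the workload dramatically, which is great!

4. I am still a beginner and have a lot more to learn.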
