A brief summary of the following classic methods (both regression-based and deep-learning-based) for face landmark detection:

Face Alignment at 3000 FPS via Regressing Local Binary Features

Joint Cascade Face Detection and Alignment

One Millisecond Face Alignment with an Ensemble of Regression Trees

Deep Convolutional Network Cascade for Facial Point Detection

Extensive Facial Landmark Localization with Coarse-to-fine Convolutional Network Cascade

Facial Landmark Detection by Deep Multi-task Learning

Joint Face Detection and Alignment using Multi-task Cascaded Convolutional Networks

Facial Landmark Detection with Tweaked Convolutional Neural Networks

Deep Alignment Network: A convolutional neural network for robust face alignment

Reference: https://blog.csdn.net/u013948010/article/details/80520540

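The paper contents are organized here for future reference, focusing mainly on each algorithm's accuracy and speed evaluations and on comparisons between algorithms.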

The following content is excerpted and organized from the original papers:

Face Alignment at 3000 FPS via Regressing Local Binary Features

1. Published: 2014

In this work, we have presented a novel approach to learning local binary features for highly accurate and extremely fast face alignment.

2. Datasets: We use three more recent and challenging ones: LFPW, Helen, and 300-W.

3. Speed: It achieves over 3,000 fps on a desktop or 300 fps on a mobile phone for locating a few dozen landmarks.

4. Accuracy metric: Following the standard, we use the inter-pupil distance normalized landmark error. For each dataset we report the error averaged over all landmarks and images. Note that the error is represented as a percentage of the pupil distance, and we drop the notation % in the reported results for clarity.
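The normalized error described above can be sketched in a few lines of Python. This is a minimal illustration, assuming landmarks and pupil centres are given as (x, y) tuples; the function names are hypothetical, and how the pupil centres are obtained (for example, by averaging eye-contour points) is left to the caller.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def interpupil_normalized_error(pred: Sequence[Point], gt: Sequence[Point],
                                left_pupil: Point, right_pupil: Point) -> float:
    """Mean landmark error of one image as a percentage of the inter-pupil distance."""
    if len(pred) != len(gt) or not gt:
        raise ValueError("pred and gt must be non-empty and have the same length")
    ipd = math.dist(left_pupil, right_pupil)  # inter-pupil distance from the ground truth
    mean_err = sum(math.dist(p, g) for p, g in zip(pred, gt)) / len(gt)
    return 100.0 * mean_err / ipd


def dataset_error(per_image_errors: Sequence[float]) -> float:
    """Average the per-image errors over the dataset (reported without the % sign)."""
    return sum(per_image_errors) / len(per_image_errors)
```

For example, `interpupil_normalized_error([(1, 0), (10, 0)], [(0, 0), (10, 0)], (0, 0), (10, 0))` returns `5.0`: the mean error is 0.5 pixels and the pupils are 10 pixels apart. Averaging per-image means equals averaging over all landmarks and images only when every image has the same number of landmarks, which holds within one dataset.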

5. Comparison of LBF, SDM, and ESR: Comparison with state-of-the-art methods

Our main competitors are the shape regression based methods, including explicit shape regression (ESR) and supervised descent method (SDM).

Table1 reports the errors and speeds (frames per second or FPS) of all compared methods on three datasets.

Accuracy comparison

Overall, the regression-based approaches are significantly better than ASM-based methods.

Speed comparison

Our approach, ESR, and SDM are all implemented in C++ and tested on a single core i7-2600 CPU. The speed of other methods is quoted from the original papers. While ESR and SDM are already the fastest face alignment methods in the literature, our method has an even larger advantage in terms of speed. Our fast version is dozens of times faster and achieves thousands of FPS for a large number of landmarks. The high speed comes from the sparse binary features. As each testing sample has only a small number of non-zero entries in its high dimensional features, the shape update is performed only a few times by efficient look-up table and vector addition, instead of matrix multiplication in the global linear regression. The surprisingly high performance makes our approach especially attractive for applications with limited computational power. For example, our method runs at about 300 FPS on a mobile phone. This opens up new opportunities for online face applications on mobile phones.
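The speed argument can be illustrated with a toy example. Assuming each tree contributes exactly one active leaf to the binary feature vector, multiplying that vector by the global regression matrix gives the same result as adding up the matrix rows of the active leaves. The function names and the tiny matrix are hypothetical; in LBF the rows would hold learned shape increments.

```python
from typing import List, Sequence


def binary_feature(active_leaves: Sequence[int], num_features: int) -> List[int]:
    """Sparse binary feature: 1 at each active leaf index, 0 elsewhere."""
    phi = [0] * num_features
    for leaf in active_leaves:
        phi[leaf] = 1
    return phi


def dense_update(phi: Sequence[int], W: Sequence[Sequence[float]]) -> List[float]:
    """Shape increment via a full matrix-vector product, delta = W^T phi."""
    dim = len(W[0])
    delta = [0.0] * dim
    for i, value in enumerate(phi):
        for j in range(dim):
            delta[j] += value * W[i][j]
    return delta


def sparse_update(active_leaves: Sequence[int], W: Sequence[Sequence[float]]) -> List[float]:
    """Same increment using only look-ups of the active rows and vector additions."""
    dim = len(W[0])
    delta = [0.0] * dim
    for leaf in active_leaves:
        row = W[leaf]  # table look-up
        for j in range(dim):
            delta[j] += row[j]  # vector addition
    return delta
```

With 3 trees of 4 leaves each (12 features), active leaves `[1, 6, 11]` and `W = [[i, -i] for i in range(12)]`, both functions return `[18.0, -18.0]`. The sparse version touches one row per tree instead of every row of `W`, which is where the savings come from when the feature dimension is large.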

Joint Cascade Face Detection and Alignment

1. Published: 2014

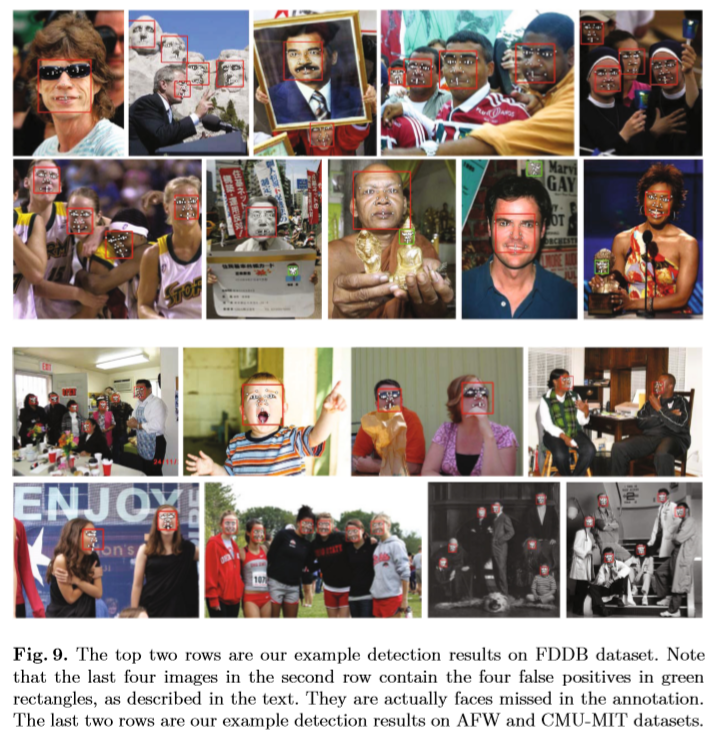

We present a new state-of-the-art approach for face detection. The key idea is to combine face alignment with detection, observing that aligned face shapes provide better features for face classification. To make this combination more effective, our approach learns the two tasks jointly in the same cascade framework, by exploiting recent advances in face alignment. Such joint learning greatly enhances the capability of cascade detection and still retains its realtime performance. Extensive experiments show that our approach achieves the best accuracy on challenging datasets, where all existing solutions are either inaccurate or too slow.
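The joint idea can be sketched as a cascade in which every stage both scores the detection window and refines the face shape, rejecting non-faces early. This is a simplified illustration, not the paper's learner: the `Stage` fields, the order of scoring and shape update, and the thresholds are hypothetical assumptions.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple

Shape = List[Tuple[float, float]]


@dataclass
class Stage:
    # Hypothetical per-stage components; both read features indexed by the current shape.
    score_fn: Callable[[object, Shape], float]   # face/non-face score contribution
    update_fn: Callable[[object, Shape], Shape]  # per-landmark (dx, dy) increment
    reject_threshold: float                      # cumulative score needed to continue


def joint_cascade(window: object, init_shape: Shape, stages: Sequence[Stage]):
    """Return (is_face, cumulative_score, final_shape) for one detection window."""
    shape = list(init_shape)
    score = 0.0
    for stage in stages:
        score += stage.score_fn(window, shape)
        if score < stage.reject_threshold:
            return False, score, shape  # early rejection keeps the cascade fast
        delta = stage.update_fn(window, shape)
        shape = [(x + dx, y + dy) for (x, y), (dx, dy) in zip(shape, delta)]
    return True, score, shape
```

Because later stages see a better-aligned shape, their shape-indexed features are more informative for classification, which is the intuition the paper describes.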

2. Datasets:

For evaluation we use three challenging public datasets: FDDB, AFW and CMU-MIT.

3. Effect of Alignment for Cascade Detection

The effect of alignment is verified by comparing our detector with a baseline detector without the alignment part. We compared the two detectors on FDDB dataset.

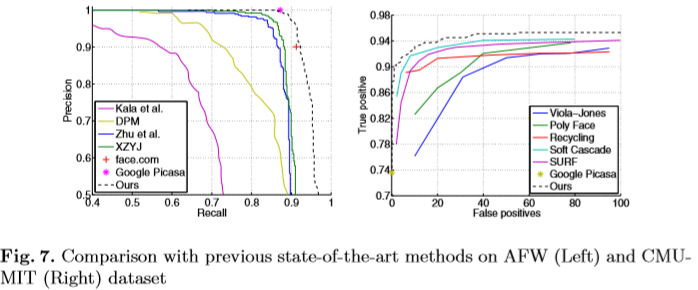

4. Comparison with the State-of-the-Art

5. Evaluation of Face Alignment

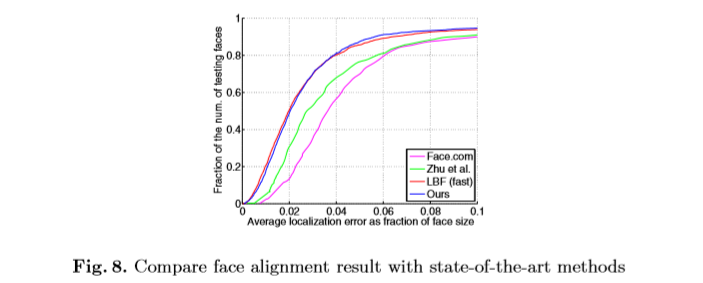

Our detector also outputs aligned face shapes as a by-product. We evaluate the alignment accuracy on AFW dataset using the same settings in. We compare with the following methods: 1) face.com alignment system; 2) Zhu et al.; 3) method in. The results of first two methods are from. The last is the state-of-the-art face alignment method. We implemented this method and used our detected faces as its input. Figure 8 shows that our face alignment accuracy is comparable to that in, not surprisingly, and is better than the first two.

6. Efficiency

Our detector is more efficient in terms of computation time and memory. We compare our detector with OpenCV detector and Zhu et al. For all methods, we detect faces larger than 80×80 in a VGA image. Our detector takes 28.6 milliseconds using single thread on a 2.93 GHz CPU. This performance is more than 1000 times faster than Zhu et al. [32], which takes 33.8 seconds on the same image. Our detector approximates the speed of Viola-Jones detector in OpenCV, which is 23.0 milliseconds.
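As a quick arithmetic check of the timings quoted above (using only the numbers in the text):

$$
\frac{33.8\,\text{s}}{28.6\,\text{ms}} = \frac{33\,800\,\text{ms}}{28.6\,\text{ms}} \approx 1182 > 1000,
\qquad
\frac{28.6\,\text{ms}}{23.0\,\text{ms}} \approx 1.24
$$

So the detector is roughly 1,180 times faster than Zhu et al. and takes about 24% longer than the OpenCV Viola-Jones detector on the same image.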

At runtime, our detector needs only 15MB of memory. By comparison, Li et al. require around 150MB and Shen et al. require 866MB, so our detector is more practical for real scenarios such as mobile applications or embedded devices.

One Millisecond Face Alignment with an Ensemble of Regression Trees

Published: 2014

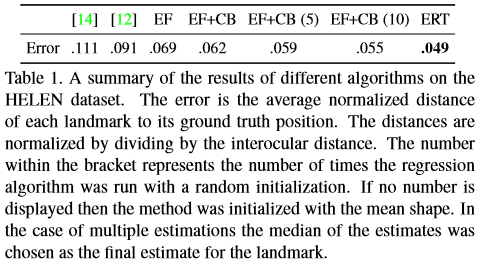

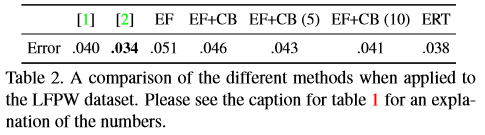

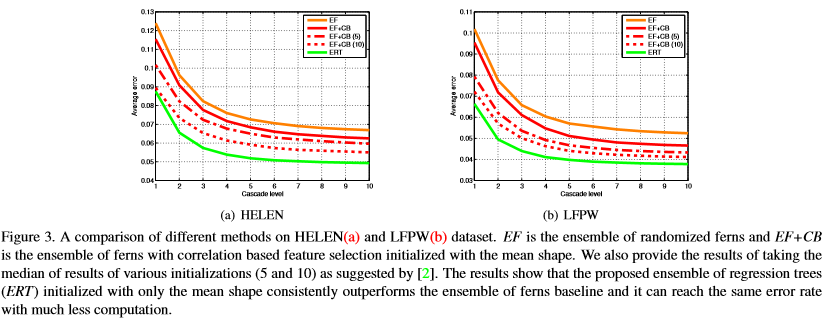

Baselines: To accurately benchmark the performance of our proposed method, an ensemble of regression trees (ERT), we created two more baselines. The first is based on randomized ferns with random feature selection (EF), and the other is a more advanced version of it with correlation-based feature selection (EF+CB), which is our reimplementation of. All the parameters are fixed for all three approaches.

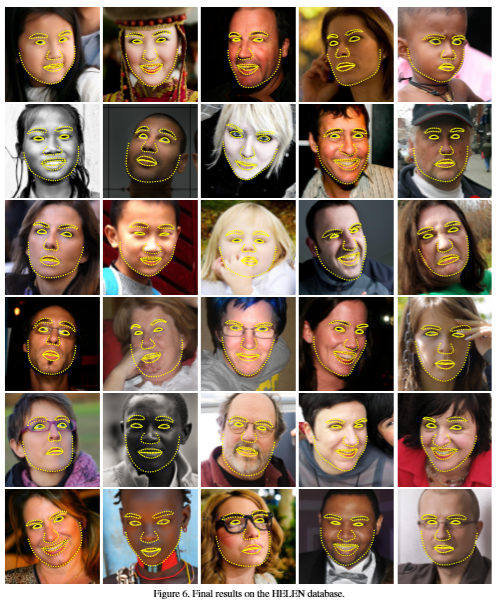

Performance:The runtime complexity of the algorithm on a single image is constant O(TKF). The complexity of the training time depends linearly on the number of training data O(NDTKFS) where N is the number of training data and D is dimension of the targets. In practice with a single CPU our algorithm takes about an hour to train on the HELEN dataset and at runtime it only takes about one millisecond per image.
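The constant O(TKF) runtime follows from the structure of the cascade: T stages, each summing the outputs of K trees, and each tree reaching a leaf after F split comparisons on the difference of two pixel intensities sampled relative to the current shape. The sketch below is a simplified, hypothetical evaluator (the `Node`/`Tree` layout and the `sample_pixels` callback are assumptions, and training is not shown) that counts those comparisons.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple


@dataclass
class Node:
    u: int            # index of the first sampled pixel
    v: int            # index of the second sampled pixel
    threshold: float  # split on the intensity difference pixels[u] - pixels[v]


@dataclass
class Tree:
    depth: int                 # F: every root-to-leaf path has F splits
    nodes: List[Node]          # 2**F - 1 split nodes in level order (children of i: 2i+1, 2i+2)
    leaves: List[List[float]]  # 2**F shape increments, one per leaf


def eval_tree(tree: Tree, pixels: Sequence[float], counter: List[int]) -> List[float]:
    idx = 0
    for _ in range(tree.depth):
        node = tree.nodes[idx]
        counter[0] += 1  # one comparison per level
        go_left = pixels[node.u] - pixels[node.v] > node.threshold
        idx = 2 * idx + (1 if go_left else 2)
    return tree.leaves[idx - (2 ** tree.depth - 1)]


def run_cascade(stages: Sequence[Sequence[Tree]], shape: List[float],
                sample_pixels: Callable[[List[float]], Sequence[float]]) -> Tuple[List[float], int]:
    """stages: T lists of K trees. Returns the final (flat) shape and the comparison count."""
    counter = [0]
    for trees in stages:
        pixels = sample_pixels(shape)  # intensities re-sampled relative to the current shape
        delta = [0.0] * len(shape)
        for tree in trees:
            inc = eval_tree(tree, pixels, counter)
            delta = [d + i for d, i in zip(delta, inc)]
        shape = [s + d for s, d in zip(shape, delta)]
    return shape, counter[0]
```

The comparison count is always T × K × F, independent of the image content, which is why the per-image cost is constant.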

Database: Most of the experimental results reported are for the HELEN face database which we found to be the most challenging publicly available dataset. It consists of a total of 2330 images, each of which is annotated with 194 landmarks. As suggested by the authors we use 2000 images for training data and the rest for testing. We also report final results on the popular LFPW database which consists of 1432 images. Unfortunately, we could only download 778 training images and 216 valid test images which makes our results not directly comparable to those previously reported on this dataset.

Comparison:

Evaluation metric: The error is the average normalized distance of each landmark to its ground truth position. The distances are normalized by dividing by the interocular distance.

Figure 3 shows the average error at different levels of the cascade: ERT reduces the error much faster than the other baselines.

We described how an ensemble of regression trees can be used to regress the location of facial landmarks from a sparse subset of intensity values extracted from an input image. The presented framework is faster in reducing the error compared to previous work and can also handle partial or uncertain labels. While major components of our algorithm treat different target dimensions as independent variables, a natural extension of this work would be to take advantage of the correlation of shape parameters for more efficient training and a better use of partial labels.

Deep Convolutional Network Cascade for Facial Point Detection

Published: 2013

We propose a new approach for estimation of the positions of facial key points with three-level carefully designed convolutional networks.

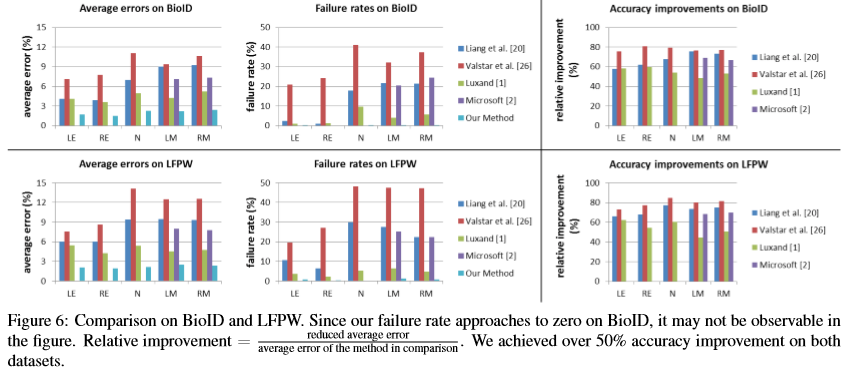

Comparison with other methods

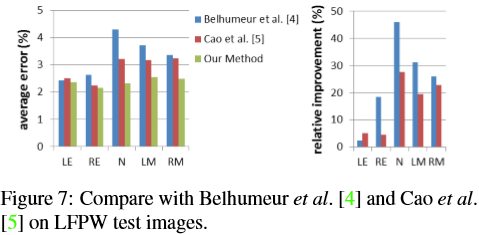

We compare with state-of-the-art methods and latest commercial software on two public datasets, BioID and LFPW. BioID contains face images collected in lab conditions while LFPW contains face images from the web. On both test sets, we use the model trained on the dataset described in Section 5.1. To be consistent with most previous works, we used the bi-ocular distance to normalize detection errors and redefine the failure rate as the proportion of cases whose normalized errors are larger than 10%. The results are summarized in Figure 6.

We compare with Component based Discriminative Search [20], Boosted Regression with Markov Networks, and two latest commercial software, Luxand Face SDK and Microsoft Research Face SDK. Since Microsoft Research face SDK does not detect eye centers and nose tip, we only compare mouth corners with it. Our method reduces the detection errors significantly, and our failure rate approaches zero.

Belhumeur et al. and Cao et al. reported results on LFPW test images, and the latter defined the current state-of-the-art on these images. We also evaluate our algorithm on the 249 LFPW test images in order to compare with the two best methods on this dataset. Figure 7 shows the comparison results on average errors and our relative accuracy improvements over the two methods. More than 20% relative accuracy improvement is achieved for nose tip and two mouth corners.

Speed: The C++ implementation of our algorithm takes 0.12 second to process one image on a 3.30GHz CPU. The system can be easily parallelized since convolutional networks at each level are independent.
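Because the networks within one level do not depend on each other, a level can evaluate them concurrently and average their outputs, while the levels themselves run in sequence. The sketch below illustrates that structure only: the networks are hypothetical callables mapping (image, current estimate) to a list of (x, y) points, and `ThreadPoolExecutor` is a generic stand-in for whatever parallel runtime a real implementation would use; the actual speed-up depends on the inference backend.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Sequence, Tuple

Point = Tuple[float, float]
Network = Callable[[object, List[Point]], List[Point]]


def run_level(networks: Sequence[Network], image: object, estimate: List[Point]) -> List[Point]:
    """Evaluate the independent networks of one level in parallel and average their predictions."""
    with ThreadPoolExecutor(max_workers=len(networks)) as pool:
        preds = list(pool.map(lambda net: net(image, estimate), networks))
    n = len(preds)
    return [(sum(p[i][0] for p in preds) / n, sum(p[i][1] for p in preds) / n)
            for i in range(len(estimate))]


def run_cascade(levels: Sequence[Sequence[Network]], image: object, init: List[Point]) -> List[Point]:
    """Levels run sequentially: each one refines the previous level's estimate."""
    estimate = init
    for networks in levels:
        estimate = run_level(networks, image, estimate)
    return estimate
```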

Main advantage: high accuracy.

Extensive Facial Landmark Localization with Coarse-to-fine Convolutional Network Cascade

Published: 2013

We present a new approach to localize extensive facial landmarks with a coarse-to-fine convolutional network cascade.

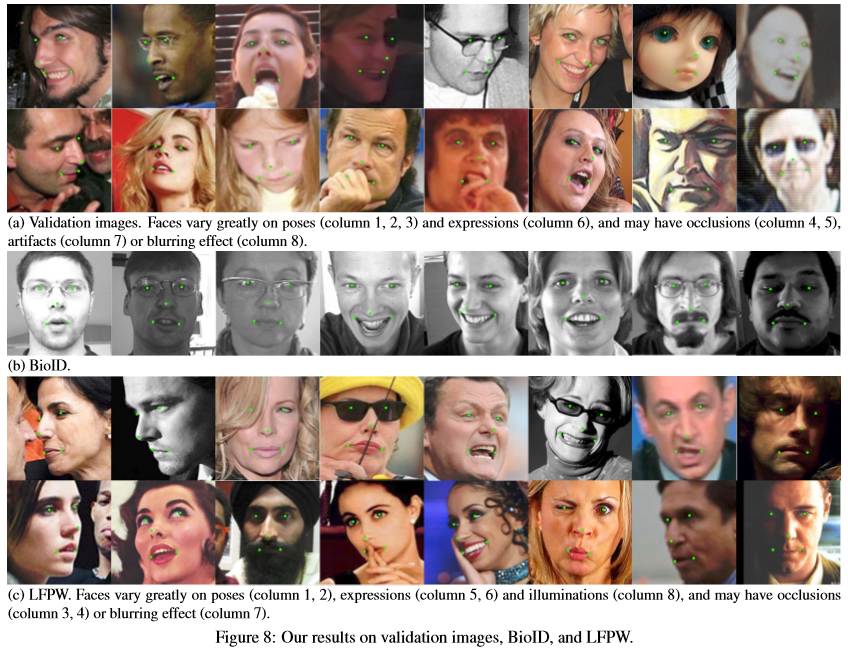

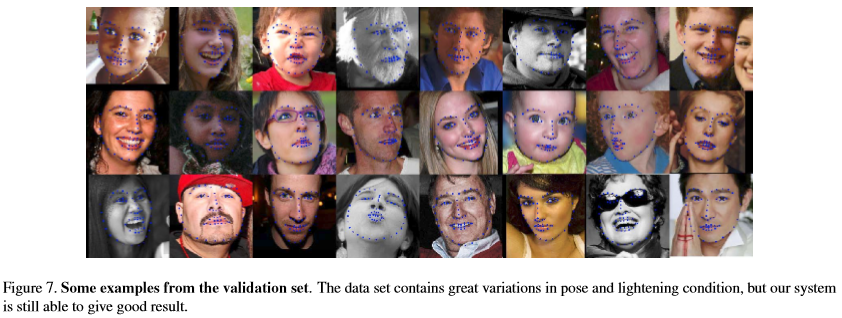

We conducted our experiments on a dataset containing 3837 images provided by the 300-Faces in the Wild Challenge. The images and annotations come from AFW, LFPW, HELEN, and IBUG. A subset of 500 images is randomly selected as our validation set.

Two evaluation metrics:

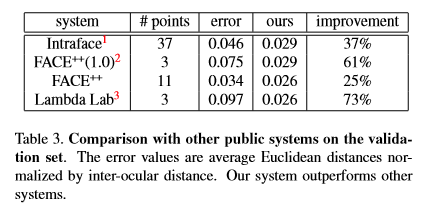

Two performance metrics are used on the validation set: the first one is the average distance between the predicted landmark positions and the ground truth normalized by inter-ocular distances. The second one is the cumulative error curve that plots the percentage of points against the normalized distance.
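The cumulative error curve can be sketched as follows. This minimal version assumes per-point errors are already divided by the inter-ocular distance (which eye points define that distance is an assumption left to the caller); the function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def point_errors(pred: Sequence[Point], gt: Sequence[Point], interocular: float) -> List[float]:
    """Per-point distance to the ground truth, normalized by the inter-ocular distance."""
    return [math.dist(p, g) / interocular for p, g in zip(pred, gt)]


def cumulative_error_curve(errors: Sequence[float], thresholds: Sequence[float]) -> List[float]:
    """For each threshold t, the fraction of points whose normalized error is <= t."""
    n = len(errors)
    return [sum(1 for e in errors if e <= t) / n for t in thresholds]
```

For instance, errors `[0.01, 0.02, 0.05, 0.2]` evaluated at thresholds `[0.0, 0.02, 0.1]` give `[0.0, 0.5, 0.75]`: a curve that rises faster means more points are localized accurately.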

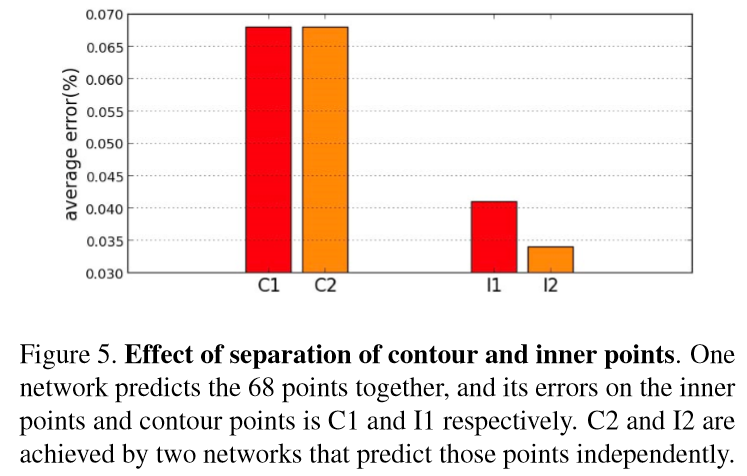

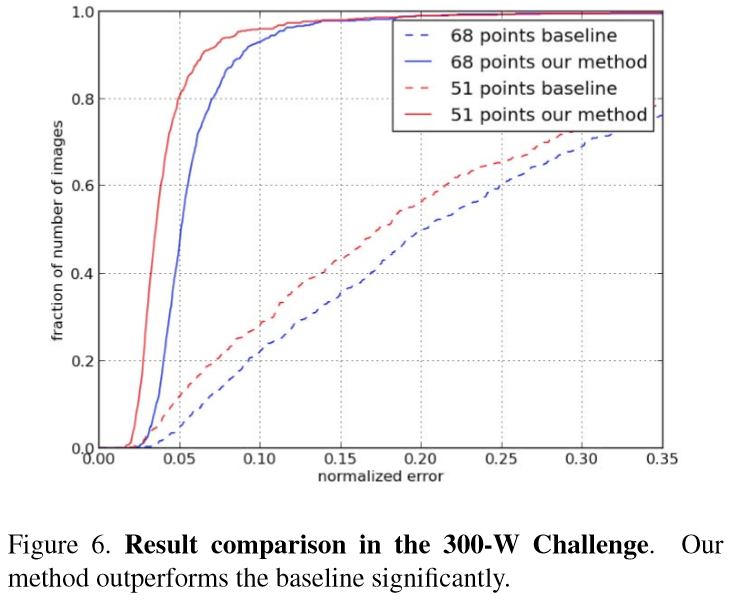

Validation of our method

Separating the contour from inner points is essential to our performance.
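For the 68-point 300-W markup, separating the contour from the inner points amounts to splitting the annotation by index. A minimal sketch, assuming the common 0-based ordering in which the first 17 points trace the jaw line; the helper names are hypothetical, and how each group is then predicted by the cascade is not shown.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]

CONTOUR_IDX = range(0, 17)  # jaw line (points 1-17 in 1-based numbering)
INNER_IDX = range(17, 68)   # eyebrows, nose, eyes, and mouth


def split_landmarks(shape: Sequence[Point]) -> Tuple[List[Point], List[Point]]:
    if len(shape) != 68:
        raise ValueError("expected a 68-point shape")
    return [shape[i] for i in CONTOUR_IDX], [shape[i] for i in INNER_IDX]


def merge_landmarks(contour: Sequence[Point], inner: Sequence[Point]) -> List[Point]:
    if len(contour) != 17 or len(inner) != 51:
        raise ValueError("expected 17 contour and 51 inner points")
    return list(contour) + list(inner)


def bounding_box(points: Sequence[Point]) -> Tuple[float, float, float, float]:
    """(x_min, y_min, x_max, y_max) of a point group, e.g. to crop an inner-face region."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    return min(xs), min(ys), max(xs), max(ys)
```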

Comparison with other methods

Figure 7 gives some examples taken from the validation set. Our system is able to handle images with great variation in pose and lighting conditions. It can predict the shape of the face even in the presence of occlusion. Despite this success, there is still room for further improvement, especially for the points on the eyebrows and the face contour.

Builds on DCNNs to detect 68 facial landmarks.

We propose a new automatic system for facial landmark localization. In our method, four DCNN levels are carefully designed to form a coarse-to-fine network cascade. To validate the effectiveness of our design, we show that our system can achieve leading performance in the 300-W facial landmark localization challenge.

Facial Landmark Detection by Deep Multi-task Learning

Published: 2014

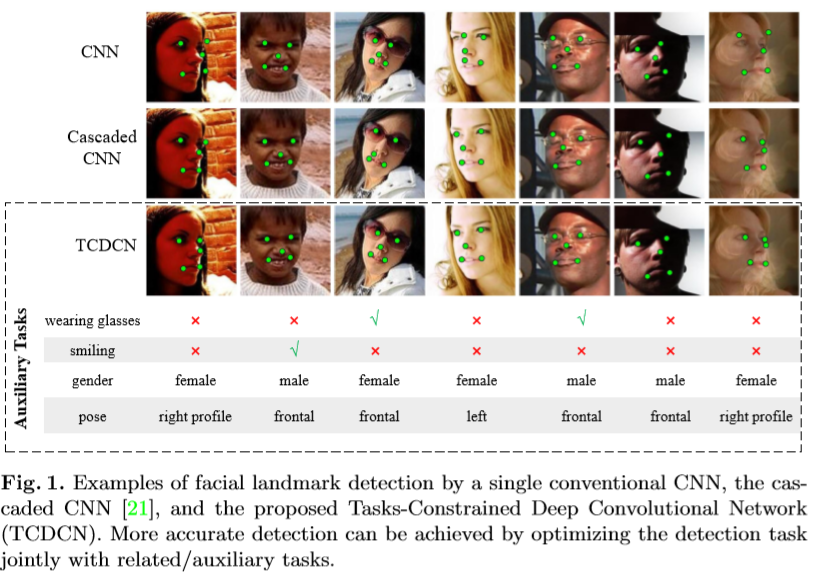

Instead of treating the detection task as a single and independent problem, we investigate the possibility of improving detection robustness through multi-task learning.

Extensive evaluations show that the proposed task-constrained learning (i) outperforms existing methods, especially in dealing with faces with severe occlusion and pose variation, and (ii) reduces model complexity drastically compared to the state-of-the-art method based on cascaded deep model.

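It handles faces with severe occlusion and pose variation better, and its model complexity is greatly reduced compared with methods based on cascaded deep models.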
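The multi-task idea can be written as a single objective: a squared-error term for the landmark coordinates plus weighted cross-entropy terms for the related (auxiliary) tasks. This is a minimal sketch under that assumption; the task names, weights, and probability inputs are hypothetical, and any regularization or training schedule used in the paper is omitted.

```python
import math
from typing import Dict, Sequence


def landmark_loss(pred: Sequence[float], target: Sequence[float]) -> float:
    """Half squared Euclidean distance between predicted and true coordinate vectors."""
    return 0.5 * sum((p - t) ** 2 for p, t in zip(pred, target))


def cross_entropy(probs: Sequence[float], label: int) -> float:
    """Negative log-likelihood of the true class under the predicted probabilities."""
    return -math.log(probs[label])


def multitask_loss(lm_pred: Sequence[float], lm_target: Sequence[float],
                   aux_probs: Dict[str, Sequence[float]], aux_labels: Dict[str, int],
                   aux_weights: Dict[str, float]) -> float:
    """Main landmark loss plus a weighted cross-entropy term for every auxiliary task."""
    loss = landmark_loss(lm_pred, lm_target)
    for task, probs in aux_probs.items():
        loss += aux_weights[task] * cross_entropy(probs, aux_labels[task])
    return loss
```

For example, a landmark error of 2 in one coordinate plus an auxiliary task predicted at 50/50 with weight 1.0 gives `2.0 + ln 2`. The weights control how strongly the auxiliary tasks shape the shared features relative to landmark regression.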

Evaluation metrics: In all cases, we report our results on two popular metrics, including mean error and failure rate. The mean error is measured by the distances between estimated landmarks and the ground truths, normalized with respect to the inter-ocular distance. A mean error larger than 10% is reported as a failure.
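Both metrics can be computed from a table of normalized errors. A minimal sketch, assuming the errors are already divided by the inter-ocular distance and that both metrics are reported separately for each landmark; the function name is hypothetical.

```python
from typing import List, Sequence, Tuple


def per_landmark_stats(errors: Sequence[Sequence[float]],
                       threshold: float = 0.10) -> Tuple[List[float], List[float]]:
    """errors[i][k]: normalized error of landmark k on face i.

    Returns (mean error per landmark, failure rate per landmark), where a failure
    is a normalized error above the threshold (10% of the inter-ocular distance).
    """
    n = len(errors)
    k = len(errors[0])
    means = [sum(row[j] for row in errors) / n for j in range(k)]
    fails = [sum(1 for row in errors if row[j] > threshold) / n for j in range(k)]
    return means, fails
```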

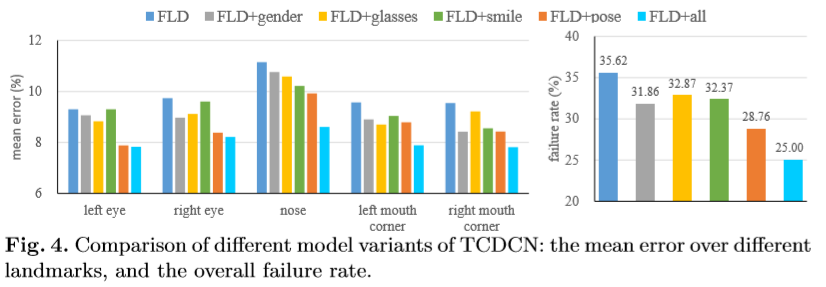

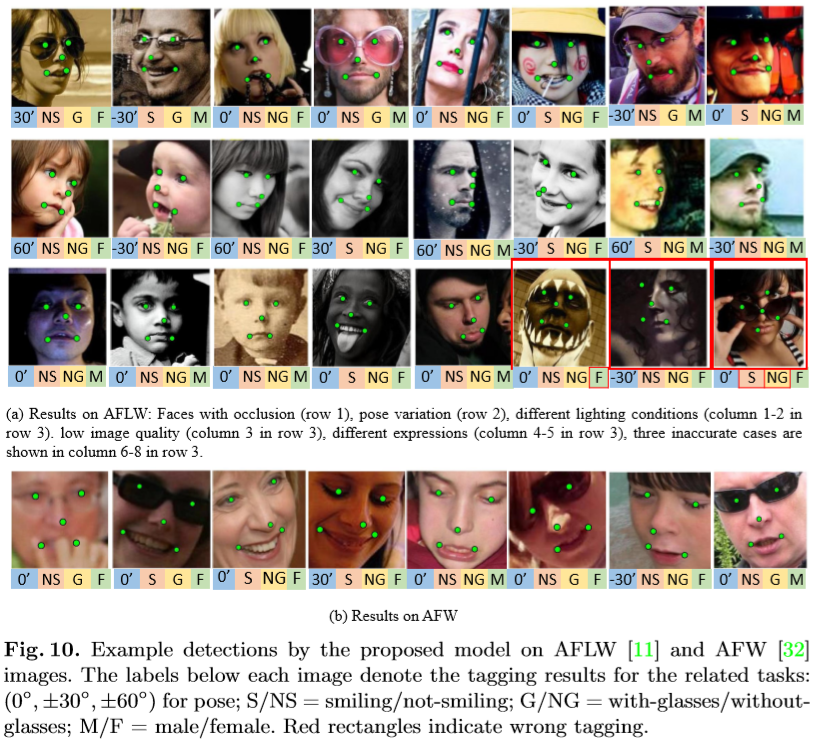

Evaluating the Effectiveness of Learning with Related Task

We employ the popular AFLW for evaluation. This dataset is selected because it is more challenging than other datasets, such as LFPW. For example, AFLW has larger pose variations (39% of faces are non-frontal in our testing images) and severe partial occlusions. We selected 3,000 faces randomly from AFLW for testing.

It is evident from Figure 4 that optimizing landmark detection with related tasks is beneficial. In particular, FLD+all outperforms FLD by a large margin, with a reduction of over 10% in failure rate.

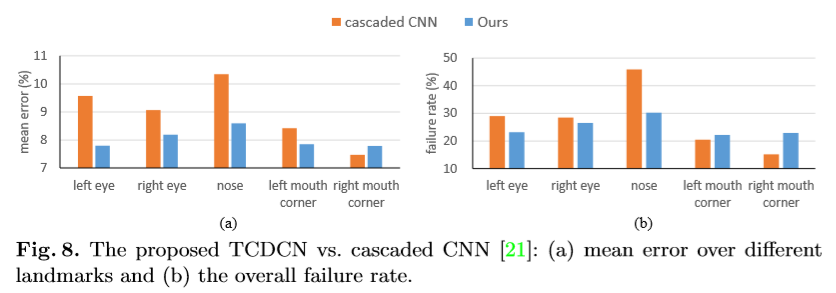

Comparison with the Cascaded CNN

Although both the TCDCN and the cascaded CNN are built upon CNN, we show that the proposed model can achieve better detection accuracy with a significantly lower computational cost.

Landmark localization accuracy

Computational efficiency

The cascaded CNN requires 0.12s to process an image on an Intel Core i5 CPU, whilst TCDCN only takes 17ms, which is 7 times faster. The TCDCN costs 1.5ms on an NVIDIA GTX760 GPU.
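The factor of 7 follows directly from the two CPU timings:

$$
\frac{0.12\,\text{s}}{17\,\text{ms}} = \frac{120\,\text{ms}}{17\,\text{ms}} \approx 7.1
$$

The 1.5 ms figure is measured on a GPU and is not part of this CPU-to-CPU comparison.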

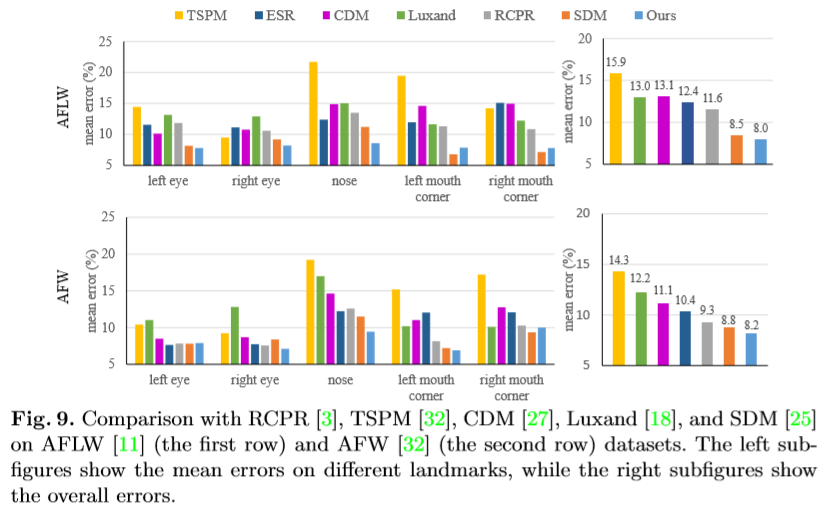

Comparison with other State-of-the-art Methods

Thanks to multi-task learning, the proposed model is more robust to faces with severe occlusions and large pose variations compared to existing methods.

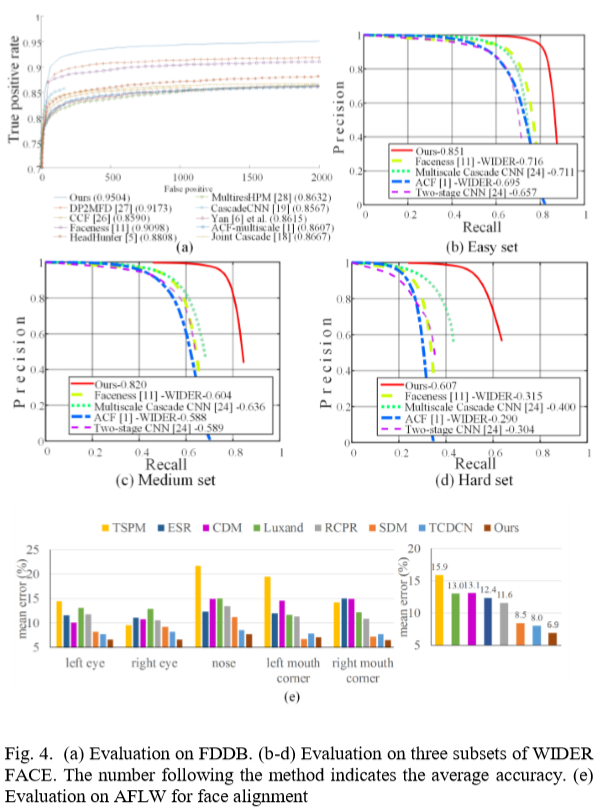

Joint Face Detection and Alignment using Multi-task Cascaded Convolutional Networks

Published: 2016

Two tasks: face detection and alignment.

In this paper, we propose a deep cascaded multi-task framework which exploits the inherent correlation between them to boost up their performance.
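In a cascade like this, overlapping candidate windows are merged with non-maximum suppression (NMS) before being passed on, and MTCNN applies NMS to its candidates. Below is a minimal greedy NMS sketch; the (x1, y1, x2, y2) box format and the 0.5 IoU threshold are assumptions, not values taken from the paper.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: Sequence[Box], scores: Sequence[float], iou_threshold: float = 0.5) -> List[int]:
    """Greedy NMS: keep the highest-scoring box, drop boxes overlapping a kept one too much."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep
```

With boxes `(0, 0, 10, 10)` and `(1, 1, 11, 11)` (IoU about 0.68) plus a distant `(20, 20, 30, 30)`, only the higher-scoring of the first two survives along with the distant box.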

Datasets: the FDDB and WIDER FACE benchmarks for face detection, and the AFLW benchmark for face alignment.

Given the cascade structure, our method can achieve very fast speed in joint face detection and alignment. It runs at 16 fps on a 2.60GHz CPU and 99 fps on a GPU (Nvidia Titan Black). Our implementation is currently based on un-optimized MATLAB code.

Compared with TCDCN, MTCNN improves both speed and accuracy to some extent.

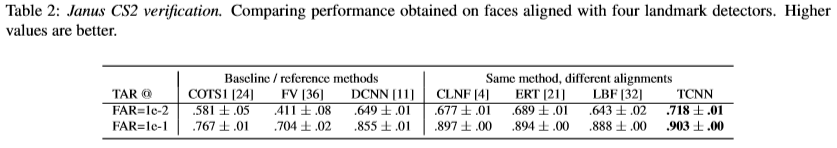

Facial Landmark Detection with Tweaked Convolutional Neural Networks

Published: 2016

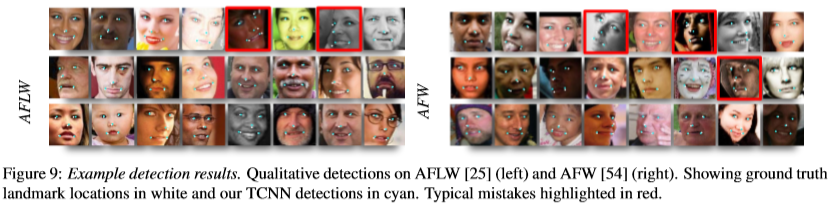

We present a novel convolutional neural network (CNN) design for facial landmark coordinate regression. The resulting Tweaked CNN model (TCNN) harnesses the robustness of CNNs for landmark detection, in an appearance-sensitive manner without training multi-part or multi-scale models.

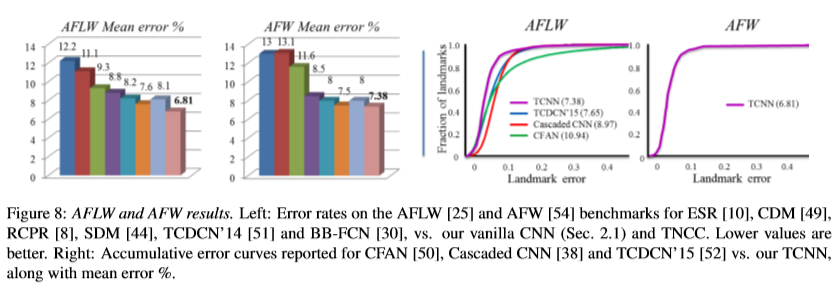

Evaluation criteria. In our tests we use the detector error rate to report accuracy. It is computed by normalizing the mean distance between predicted and ground-truth landmark locations to a percent of the inter-ocular distance.

Deep Alignment Network: A convolutional neural network for robust face alignment

Published: 2017

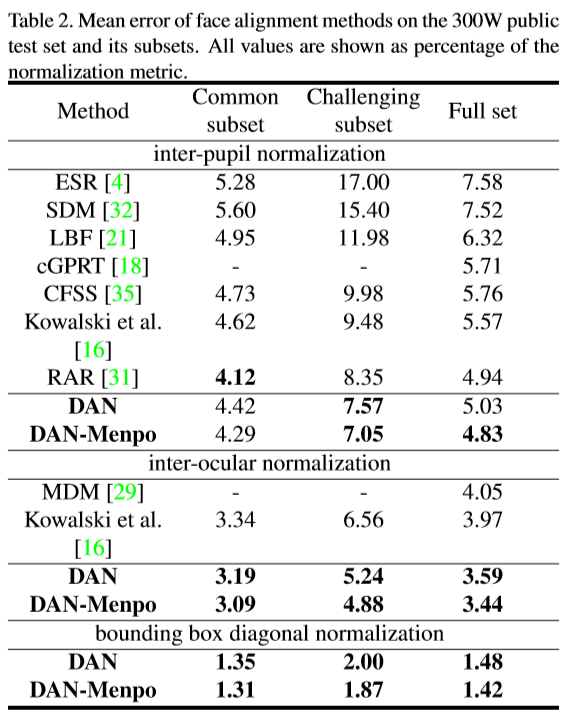

In this paper, we propose Deep Alignment Network (DAN), a robust face alignment method based on a deep neural network architecture. DAN consists of multiple stages, where each stage improves the locations of the facial landmarks estimated by the previous stage. Our method uses entire face images at all stages, contrary to the recently proposed face alignment methods that rely on local patches. This is possible thanks to the use of landmark heatmaps which provide visual information about landmark locations estimated at the previous stages of the algorithm. The use of entire face images rather than patches allows DAN to handle face images with large variation in head pose and difficult initializations.
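As we recall from the DAN paper, the landmark heatmap is defined per pixel as H(x, y) = 1 / (1 + min_i ||(x, y) - s_i||), where s_i are the landmarks estimated by the previous stage; treat that formula as our reading of the paper rather than a quotation. The sketch below computes it densely over a small grid for clarity, assuming the landmarks are already in the coordinates of the normalized face image; any locality optimization is omitted and the function name is hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def landmark_heatmap(landmarks: Sequence[Point], width: int, height: int) -> List[List[float]]:
    """Heatmap whose value is 1 at a landmark and decays with distance to the nearest landmark."""
    heat = []
    for y in range(height):
        row = []
        for x in range(width):
            d = min(math.hypot(x - sx, y - sy) for sx, sy in landmarks)
            row.append(1.0 / (1.0 + d))
        heat.append(row)
    return heat
```

Feeding such a map to the next stage alongside the whole face image is what lets each stage see where the previous stage placed the landmarks without cropping local patches.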

Datasets: In order to evaluate our method we perform experiments on the data released for the 300W competition and the recently introduced Menpo challenge dataset. The test data consists of the remaining datasets: IBUG, the 300W private test set, and the test sets of LFPW and HELEN. The Menpo challenge dataset consists of semi-frontal and profile face image datasets. In our experiments we only use the semi-frontal dataset.

Error measures For evaluating our method on the test datasets we use three metrics: the mean error, the area under the cumulative error distribution curve (AUCα) and the failure rate.
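AUCα and the failure rate can both be derived from per-image normalized errors. For a single image with error e, the integral of its cumulative-error indicator from 0 to α equals α − e when e ≤ α and 0 otherwise, so the normalized area is an average of max(0, α − e) / α. A minimal sketch, where α = 0.08 is an assumed default and the function names are hypothetical:

```python
from typing import Sequence


def auc_alpha(errors: Sequence[float], alpha: float = 0.08) -> float:
    """Area under the cumulative error curve on [0, alpha], normalized to [0, 1]."""
    n = len(errors)
    return sum(max(0.0, alpha - e) for e in errors) / (n * alpha)


def failure_rate(errors: Sequence[float], alpha: float = 0.08) -> float:
    """Fraction of images whose normalized error exceeds alpha."""
    return sum(1 for e in errors if e > alpha) / len(errors)
```

For errors `[0.0, 0.04, 0.1]` with α = 0.08, the AUC is about 0.5 and the failure rate is 1/3. Computing the area in closed form avoids discretizing the curve.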

The Python implementation runs at 73 fps for images processed in parallel and at 45 fps for images processed sequentially on a GeForce GTX 1070 GPU.

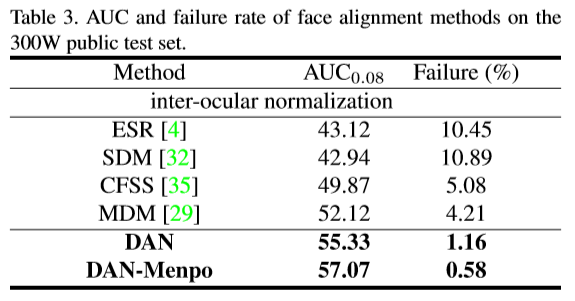

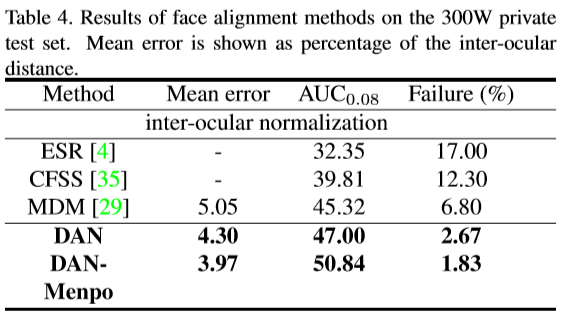

Comparison with state-of-the-art

We compare the DAN model with state-of-the-art methods on all of the test sets of the 300W competition data.

That concludes face landmark summary1, recently compiled by 痴情镜子.
