回顾

读懂Pytorch版本的Faster-RCNN代码 (一) generate_anchors.py

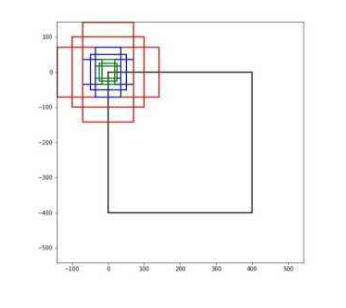

上一篇博客简单讲述了Faster RCNN的构成和原理,以及RPN模块的generate_anchors.py的代码部分,回顾一下generate_anchors的主要作用是根据一个base anchor来生成9个不同尺度和纵横比的待选框,如下图所示:

今天继续来学习RPN模块的其他代码部分

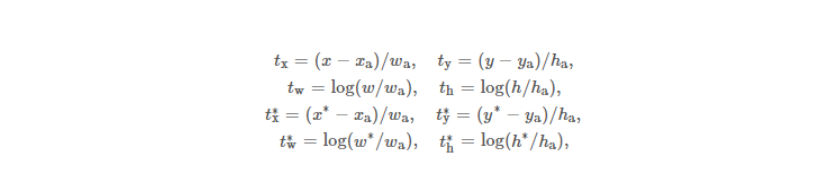

有两个需要提前知道的地方,首先,计算偏移量的公式,也就是计算GT和anchors之间差距的公式:

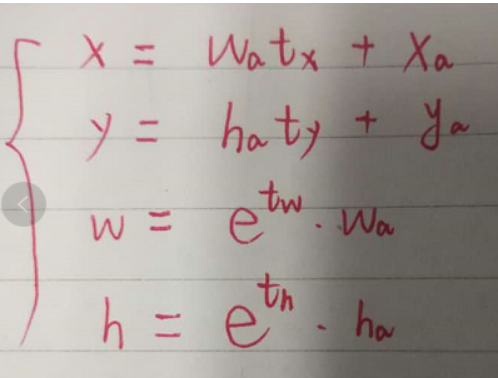

第二个是如何根据偏移量计算预测框,公式如下:

下一个比较基本的模块是

1.bbox_transform.py

这部分主要完成的功能有四个:

- 计算一个anchors和GT之间的偏移量

- 得到一个偏移量和anchors将他按照偏移量进行调整

- 剪裁proposals让越界的边框限制在图像之内

- 计算anchors和GT之间的IOU值

# --------------------------------------------------------

# Fast R-CNN

# Copyright (c) 2015 Microsoft

# Licensed under The MIT License [see LICENSE for details]

# Written by Ross Girshick

# --------------------------------------------------------

# --------------------------------------------------------

# Reorganized and modified by Jianwei Yang and Jiasen Lu

# --------------------------------------------------------

import torch

import numpy as np

import pdb

# ==========================================

# 函数输入: 两个区域的左下角右上角坐标,一个是预测的ROI,另一个是ground_truth

# 函数输出: x, y, w, h的偏移量

# ==========================================

# 这个函数主要计算了一个给定的ROI和一个ground_truth之间的偏移量大小

# 前8行代码分别计算了ROI以及ground_truth的宽度w高度h以及中心点坐标x,y,具体的计算方法和上一篇# 博客中计算过程相同

def bbox_transform(ex_rois, gt_rois):

# 这里计算了一个ROI的w, h, x, y

ex_widths = ex_rois[:, 2] - ex_rois[:, 0] + 1.0

ex_heights = ex_rois[:, 3] - ex_rois[:, 1] + 1.0

ex_ctr_x = ex_rois[:, 0] + 0.5 * ex_widths

ex_ctr_y = ex_rois[:, 1] + 0.5 * ex_heights

# 这里计算了一个ground_truth的w, h, x, y

gt_widths = gt_rois[:, 2] - gt_rois[:, 0] + 1.0

gt_heights = gt_rois[:, 3] - gt_rois[:, 1] + 1.0

gt_ctr_x = gt_rois[:, 0] + 0.5 * gt_widths

gt_ctr_y = gt_rois[:, 1] + 0.5 * gt_heights

# 通过以上两步已经计算出了ROI和GT的四个指标,下面开始计算偏移量,这个偏移量就是按照论文中

# 定义的公式去计算

# 对于x和y就是两个的坐标相减分别处以宽度和高度

# 对于w和h的偏移量就是两个的商取log值

targets_dx = (gt_ctr_x - ex_ctr_x) / ex_widths

targets_dy = (gt_ctr_y - ex_ctr_y) / ex_heights

targets_dw = torch.log(gt_widths / ex_widths)

targets_dh = torch.log(gt_heights / ex_heights)

targets = torch.stack(

(targets_dx, targets_dy, targets_dw, targets_dh),1)

return targets

# ========================================

# 函数输入:生成的一系列区域以及GT的左下右上坐标,其中ex_rois可以不是batch

# 函数输出:返回计算他们之间的偏移量

# ========================================

# 这个函数和上一个完成的是相同的任务,但是区别是可以进行batch操作

# 其中ex_rois可以传入batch的,也可以传单个图像的一系列ROIs,但是GT都是以batch传入的

def bbox_transform_batch(ex_rois, gt_rois):

# 这里判断了一下ex_rois是不是批量的,也就是batch_size的形式

# 如果是2,证明不是batch的形式

# 然后计算了这一系列的ex_rois的w,h,x,y

# 举个例子,比如ex_rois是[10, 4],gt_rois是[10, 10, 4]

# 那么对于ex算出来的w是[10],然后通过torch.view操作变成了(1, 10),再通过.expand_as变成了

# [10, 10]这样就保证了维度相同,可以运算了

if ex_rois.dim() == 2:

ex_widths = ex_rois[:, 2] - ex_rois[:, 0] + 1.0

ex_heights = ex_rois[:, 3] - ex_rois[:, 1] + 1.0

ex_ctr_x = ex_rois[:, 0] + 0.5 * ex_widths

ex_ctr_y = ex_rois[:, 1] + 0.5 * ex_heights

gt_widths = gt_rois[:, :, 2] - gt_rois[:, :, 0] + 1.0

gt_heights = gt_rois[:, :, 3] - gt_rois[:, :, 1] + 1.0

gt_ctr_x = gt_rois[:, :, 0] + 0.5 * gt_widths

gt_ctr_y = gt_rois[:, :, 1] + 0.5 * gt_heights

targets_dx = (gt_ctr_x - ex_ctr_x.view(1,-1).expand_as(gt_ctr_x)) / ex_widths

targets_dy = (gt_ctr_y - ex_ctr_y.view(1,-1).expand_as(gt_ctr_y)) / ex_heights

targets_dw = torch.log(gt_widths / ex_widths.view(1,-1).expand_as(gt_widths))

targets_dh = torch.log(gt_heights / ex_heights.view(1,-1).expand_as(gt_heights))

# 这里是如果ex也是batch的形式就不用那么复杂直接计算就可以,返回的结果是(10, 10, 4)

elif ex_rois.dim() == 3:

ex_widths = ex_rois[:, :, 2] - ex_rois[:, :, 0] + 1.0

ex_heights = ex_rois[:,:, 3] - ex_rois[:,:, 1] + 1.0

ex_ctr_x = ex_rois[:, :, 0] + 0.5 * ex_widths

ex_ctr_y = ex_rois[:, :, 1] + 0.5 * ex_heights

gt_widths = gt_rois[:, :, 2] - gt_rois[:, :, 0] + 1.0

gt_heights = gt_rois[:, :, 3] - gt_rois[:, :, 1] + 1.0

gt_ctr_x = gt_rois[:, :, 0] + 0.5 * gt_widths

gt_ctr_y = gt_rois[:, :, 1] + 0.5 * gt_heights

targets_dx = (gt_ctr_x - ex_ctr_x) / ex_widths

targets_dy = (gt_ctr_y - ex_ctr_y) / ex_heights

targets_dw = torch.log(gt_widths / ex_widths)

targets_dh = torch.log(gt_heights / ex_heights)

else:

raise ValueError('ex_roi input dimension is not correct.')

# 这里是对结果的拼接

targets = torch.stack(

(targets_dx, targets_dy, targets_dw, targets_dh),2)

return targets

# =======================================

# 函数输入:没经过回归变化的boxes,每个类别的偏移量,batch_size(没用到)

# 函数输出:每个类别的pred_boxes的左下右上的坐标

#========================================

# 这个函数是计算pred_boxes的过程,就是计算我预测出的boxes

# 其中boxes是没变换之前的anchor,deltas是x,y,w,h上的偏移量

# 由于faster_RCNN是对每一个类别预测一个偏移量,所以delta的大小是classnum*4

def bbox_transform_inv(boxes, deltas, batch_size):

# 首先计算boxes的w,h,x,y的坐标

widths = boxes[:, :, 2] - boxes[:, :, 0] + 1.0

heights = boxes[:, :, 3] - boxes[:, :, 1] + 1.0

ctr_x = boxes[:, :, 0] + 0.5 * widths

ctr_y = boxes[:, :, 1] + 0.5 * heights

# 由于里面有每个类别的偏移量,所以这里::4的意思是没4步取一个值,最终是去了所有类别的

# dx,dy,dw,dh

dx = deltas[:, :, 0::4]

dy = deltas[:, :, 1::4]

dw = deltas[:, :, 2::4]

dh = deltas[:, :, 3::4]

# 这里还是举一个例子,例如batchsize=10,一个图像又10个anchors,类别数量是2

# dx这些维度都是(10, 10, 2)

# widths是(10, 10)这样维度不同没有办法计算所以需要在最后加一个维度就是第二个10后面

# 所以这时候通过unsqueeze(2),就变成了(10, 10, 2)维度相同,可以按照公式计算

# 最终的输出就是(batch_size, roi_number, class_number)的维度

# 这里开始根据公式来计算预测的bbox坐标,根据公式

pred_ctr_x = dx * widths.unsqueeze(2) + ctr_x.unsqueeze(2)

pred_ctr_y = dy * heights.unsqueeze(2) + ctr_y.unsqueeze(2)

pred_w = torch.exp(dw) * widths.unsqueeze(2)

pred_h = torch.exp(dh) * heights.unsqueeze(2)

# 这里再将预测框的格式转换为左下角右上角坐标的形式

pred_boxes = deltas.clone()

# x1

pred_boxes[:, :, 0::4] = pred_ctr_x - 0.5 * pred_w

# y1

pred_boxes[:, :, 1::4] = pred_ctr_y - 0.5 * pred_h

# x2

pred_boxes[:, :, 2::4] = pred_ctr_x + 0.5 * pred_w

# y2

pred_boxes[:, :, 3::4] = pred_ctr_y + 0.5 * pred_h

return pred_boxes

#=========================================

# 函数输入:一系列的待选框左下右上坐标,图像大小,batch_size

# 函数输出:限制边界后的bbox

#=========================================

# 这个函数是将boxes的边界限制在图像范围之内,防止那些越界的边界框

# 由于也是batch的形式,会有一个维度是batch_size

def clip_boxes_batch(boxes, im_shape, batch_size):

"""

Clip boxes to image boundaries.

"""

# 这里面先取了一下每个图像有几个rois,因为这个是boxes的第二维

num_rois = boxes.size(1)

# 这里判断了一下,如果预测框已经小于零了证明已经出界了,这时候让他们等于0

boxes[boxes < 0] = 0

# batch_x = (im_shape[:,0]-1).view(batch_size, 1).expand(batch_size, num_rois)

# batch_y = (im_shape[:,1]-1).view(batch_size, 1).expand(batch_size, num_rois)

# 由于比如256大小的图像范围是0-255,所以-1来得到坐标的最大值

batch_x = im_shape[:, 1] - 1

batch_y = im_shape[:, 0] - 1

# 分别判断左下右上的坐标是否越过最大值,如果越过就让他等于最大值

boxes[:,:,0][boxes[:,:,0] > batch_x] = batch_x

boxes[:,:,1][boxes[:,:,1] > batch_y] = batch_y

boxes[:,:,2][boxes[:,:,2] > batch_x] = batch_x

boxes[:,:,3][boxes[:,:,3] > batch_y] = batch_y

return boxes

# =================================================

# 函数输入:预测框的坐标,图像大小(这里可以使不同大小的batch的形式),batch_size

# 函数输出:限制之后的预测框

# =================================================

# 上面的是对于boxes是batch的形式,但是图像是相同大小的,而这个就可以图像大小不同

# 根据不同的大小指定自己的边界

def clip_boxes(boxes, im_shape, batch_size):

# 迭代每一个图像大小,进行限制

# .clamp函数就是限制函数,参数是最小值最大值,这样将他们限制在图像中

for i in range(batch_size):

boxes[i,:,0::4].clamp_(0, im_shape[i, 1]-1)

boxes[i,:,1::4].clamp_(0, im_shape[i, 0]-1)

boxes[i,:,2::4].clamp_(0, im_shape[i, 1]-1)

boxes[i,:,3::4].clamp_(0, im_shape[i, 0]-1)

return boxes

#====================================================

# 函数输入:一系列的anchors和一系列的gt的左下右上坐标

# 函数输出:这一系列anchors和gt之间交并比IOU返回的结果是(N, K)大小的,即两两之间都做了比较

#====================================================

# 这个代码是计算anchors和ground_truth重叠的面积,也就是IOU值

def bbox_overlaps(anchors, gt_boxes):

"""

anchors: (N, 4) ndarray of float

gt_boxes: (K, 4) ndarray of float

overlaps: (N, K) ndarray of overlap between boxes and query_boxes

"""

# N是anchors的数量,K是gt_boxes的数量

N = anchors.size(0)

K = gt_boxes.size(0)

# 这里首先先将两个区域的面积计算出来,然后将gt的面积转化为(1, k)的维度

# 将anchors的维度转换为(N, 1)的维度

gt_boxes_area = ((gt_boxes[:,2] - gt_boxes[:,0] + 1) *

(gt_boxes[:,3] - gt_boxes[:,1] + 1)).view(1, K)

anchors_area = ((anchors[:,2] - anchors[:,0] + 1) *

(anchors[:,3] - anchors[:,1] + 1)).view(N, 1)

# 这里将待选框和gt转换为同一维度都是(N,K,4)

boxes = anchors.view(N, 1, 4).expand(N, K, 4)

query_boxes = gt_boxes.view(1, K, 4).expand(N, K, 4)

# 这里找到右上x坐标与左下x坐标的最小值以及右上x坐标的最大值

# 这样就能保正得到的数不是负数

iw = (torch.min(boxes[:,:,2], query_boxes[:,:,2]) -

torch.max(boxes[:,:,0], query_boxes[:,:,0]) + 1)

# 如果小于0,证明两个框没有交集,则等于零

iw[iw < 0] = 0

# 同样,找到y坐标的最小最大值,然后得到h的最大值

ih = (torch.min(boxes[:,:,3], query_boxes[:,:,3]) -

torch.max(boxes[:,:,1], query_boxes[:,:,1]) + 1)

ih[ih < 0] = 0

# 这样根据交并比的公式,ua计算了两个区域的面积总和

# 用iw*ih得到相交区域的面积,除以总面积,得到交并比,即IOU

ua = anchors_area + gt_boxes_area - (iw * ih)

overlaps = iw * ih / ua

# 返回最大的IOU

return overlaps

#==============================================

# 函数输入:batch_size形式的gt,anchors可以是batch也可以不是

# 函数输出:batch形式的ROIs, 维度为batch_size, N, K

#==============================================

# 这个函数还是计算IOU,但是gt可以是batch_size的形式

def bbox_overlaps_batch(anchors, gt_boxes):

"""

anchors: (N, 4) ndarray of float

# 这里多的一维是这个gt物体的类别

gt_boxes: (b, K, 5) ndarray of float

overlaps: (N, K) ndarray of overlap between boxes and query_boxes

"""

# 首先计算出batch_size的大小

batch_size = gt_boxes.size(0)

# 这里判断一下anchors是不是batch的形式,如果是两维证明不是batch的形式

if anchors.dim() == 2:

# N和K还是看一张图有多少个anchor和ground_truth

N = anchors.size(0)

K = gt_boxes.size(1)

# 这里的contiguous相当于对原来的tensor进行了一下深拷贝,其实就相当于reshape

anchors = anchors.view(1, N, 4).expand(batch_size, N, 4).contiguous()

gt_boxes = gt_boxes[:,:,:4].contiguous()

# 这里计算出gt的宽和高,并且同时计算出面积,将其面积变成(batch, 1, k)的维度

gt_boxes_x = (gt_boxes[:,:,2] - gt_boxes[:,:,0] + 1)

gt_boxes_y = (gt_boxes[:,:,3] - gt_boxes[:,:,1] + 1)

gt_boxes_area = (gt_boxes_x * gt_boxes_y).view(batch_size, 1, K)

# 同样,这里计算出anchors的宽和高,也计算出面积,维度是(batch, N, 1)

anchors_boxes_x = (anchors[:,:,2] - anchors[:,:,0] + 1)

anchors_boxes_y = (anchors[:,:,3] - anchors[:,:,1] + 1)

anchors_area = (anchors_boxes_x * anchors_boxes_y).view(batch_size, N, 1)

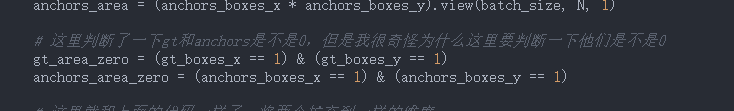

# 这里判断了一下gt和anchors是不是0,但是我很奇怪为什么这里要判断一下他们是不是0

gt_area_zero = (gt_boxes_x == 1) & (gt_boxes_y == 1)

anchors_area_zero = (anchors_boxes_x == 1) & (anchors_boxes_y == 1)

# 这里就和上面的代码一样了,将两个扩充到一样的维度

boxes = anchors.view(batch_size, N, 1, 4).expand(batch_size, N, K, 4)

query_boxes = gt_boxes.view(batch_size, 1, K, 4).expand(batch_size, N, K, 4)

#计算宽度

iw = (torch.min(boxes[:,:,:,2], query_boxes[:,:,:,2]) -

torch.max(boxes[:,:,:,0], query_boxes[:,:,:,0]) + 1)

iw[iw < 0] = 0

# 计算高度

ih = (torch.min(boxes[:,:,:,3], query_boxes[:,:,:,3]) -

torch.max(boxes[:,:,:,1], query_boxes[:,:,:,1]) + 1)

ih[ih < 0] = 0

ua = anchors_area + gt_boxes_area - (iw * ih)

overlaps = iw * ih / ua

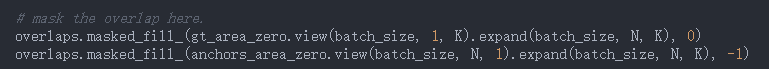

# 这里是做的一个填补,如果gt是0,让这部分的IOU变成0

# 如果anchors是0,那么就用-1去替换 IOU的值

# mask the overlap here.

overlaps.masked_fill_(gt_area_zero.view(batch_size, 1, K).expand(batch_size, N, K), 0)

overlaps.masked_fill_(anchors_area_zero.view(batch_size, N, 1).expand(batch_size, N, K), -1)

elif anchors.dim() == 3:

N = anchors.size(1)

K = gt_boxes.size(1)

# anchor的最后一维如果是4那么就是四个坐标

if anchors.size(2) == 4:

anchors = anchors[:,:,:4].contiguous()

# 不然的或就是后四个数是坐标,现在还没理解第一维是什么

else:

anchors = anchors[:,:,1:5].contiguous()

# 得到GT的前思维坐标

gt_boxes = gt_boxes[:,:,:4].contiguous()

# 后面的计算过程和前面的基本上没有差别了

gt_boxes_x = (gt_boxes[:,:,2] - gt_boxes[:,:,0] + 1)

gt_boxes_y = (gt_boxes[:,:,3] - gt_boxes[:,:,1] + 1)

gt_boxes_area = (gt_boxes_x * gt_boxes_y).view(batch_size, 1, K)

anchors_boxes_x = (anchors[:,:,2] - anchors[:,:,0] + 1)

anchors_boxes_y = (anchors[:,:,3] - anchors[:,:,1] + 1)

anchors_area = (anchors_boxes_x * anchors_boxes_y).view(batch_size, N, 1)

gt_area_zero = (gt_boxes_x == 1) & (gt_boxes_y == 1)

anchors_area_zero = (anchors_boxes_x == 1) & (anchors_boxes_y == 1)

boxes = anchors.view(batch_size, N, 1, 4).expand(batch_size, N, K, 4)

query_boxes = gt_boxes.view(batch_size, 1, K, 4).expand(batch_size, N, K, 4)

iw = (torch.min(boxes[:,:,:,2], query_boxes[:,:,:,2]) -

torch.max(boxes[:,:,:,0], query_boxes[:,:,:,0]) + 1)

iw[iw < 0] = 0

ih = (torch.min(boxes[:,:,:,3], query_boxes[:,:,:,3]) -

torch.max(boxes[:,:,:,1], query_boxes[:,:,:,1]) + 1)

ih[ih < 0] = 0

ua = anchors_area + gt_boxes_area - (iw * ih)

overlaps = iw * ih / ua

# mask the overlap here.

overlaps.masked_fill_(gt_area_zero.view(batch_size, 1, K).expand(batch_size, N, K), 0)

overlaps.masked_fill_(anchors_area_zero.view(batch_size, N, 1).expand(batch_size, N, K), -1)

else:

raise ValueError('anchors input dimension is not correct.')

return overlaps

对于上述代码尚有两个疑问:

这里为什么要判断一下gt和anchors是不是0,gt是真是框,肯定不会存在0这个问题啊

第二个是为什么要在这里对真是框是0的补成0,anchors是0的补成-1

这两个问题在解读后续代码时再理解把

2.proposal_layer.py

这部分主要完成的功能是:

- 将特征图中的anchors映射回原图像

- 根据偏移量对proposals进行调整,对越界的地方进行剪裁

- 按照评分对前景图像进行排序,选择K个最高的送入NMS网络,将定义好的前N个NMS的输出保存下来,再计算出不符合规定的高度和宽度大小的边框。

from __future__ import absolute_import

# --------------------------------------------------------

# Faster R-CNN

# Copyright (c) 2015 Microsoft

# Licensed under The MIT License [see LICENSE for details]

# Written by Ross Girshick and Sean Bell

# --------------------------------------------------------

# --------------------------------------------------------

# Reorganized and modified by Jianwei Yang and Jiasen Lu

# --------------------------------------------------------

import torch

import torch.nn as nn

import numpy as np

import math

import yaml

from model.utils.config import cfg

from .generate_anchors import generate_anchors

from .bbox_transform import bbox_transform_inv, clip_boxes, clip_boxes_batch

# from model.nms.nms_wrapper import nms

from model.roi_layers import nms

import pdb

DEBUG = False

class _ProposalLayer(nn.Module):

"""

Outputs object detection proposals by applying estimated bounding-box

transformations to a set of regular boxes (called "anchors").

"""

# 这个类的作用是通过将估计的边界框经过变换,得到一组常规框,也就是anchors

def __init__(self, feat_stride, scales, ratios):

# 先进行初始化

super(_ProposalLayer, self).__init__()

# feat_stride是图像缩小了多少倍,如果图像特征维度是原来的1/16,那么这个数就是16

self._feat_stride = feat_stride

# 这句话调用了generate_anchors,通过指定的scales和ratios来生成定义中的那些基础框

self._anchors = torch.from_numpy(generate_anchors(scales=np.array(scales),

ratios=np.array(ratios))).float()

# 这里计算了基础的anchors的数量,大小是ratios的数量乘以scales的数量

self._num_anchors = self._anchors.size(0)

# rois blob: holds R regions of interest, each is a 5-tuple

# (n, x1, y1, x2, y2) specifying an image batch index n and a

# rectangle (x1, y1, x2, y2)

# top[0].reshape(1, 5)

#

# # scores blob: holds scores for R regions of interest

# if len(top) > 1:

# top[1].reshape(1, 1, 1, 1)

def forward(self, input):

# Algorithm:

#

# for each (H, W) location i

# generate A anchor boxes centered on cell i

# apply predicted bbox deltas at cell i to each of the A anchors

# clip predicted boxes to image

# remove predicted boxes with either height or width < threshold

# sort all (proposal, score) pairs by score from highest to lowest

# take top pre_nms_topN proposals before NMS

# apply NMS with threshold 0.7 to remaining proposals

# take after_nms_topN proposals after NMS

# return the top proposals (-> RoIs top, scores top)

# _num_anchors的第一个通道是他是背景的概率

# 第二个通道是他是前景的概率

# the first set of _num_anchors channels are bg probs

# the second set are the fg probs

# scores是一个四维的tensor[batch_size, 18, 14, 14]

# 其中的第一维不用说了是batch_size

# 第二维是18,因为按照fasterRCNN一个特征点生成9个anchors,18中前9个是他是背景的概率

# 后九个是他是前景的概率,后面的14*14是因为在特征提取后的特征图是14*14维的

scores = input[0][:, self._num_anchors:, :, :] #(batch_size, 9, 14, 14)

# input的第二维是偏移量

bbox_deltas = input[1]

# input的第三维是图像的信息

im_info = input[2]

# 这里没用到这个,不过据说是前景还是背景

cfg_key = input[3]

# 这里从config文件里提取了一些超参数

# 这个参数是在NMS处理之前我们要保留评分前多少的boxes

pre_nms_topN = cfg[cfg_key].RPN_PRE_NMS_TOP_N

# 这个参数是在应用了NMS之后我们要保留前多少个评分boxes

post_nms_topN = cfg[cfg_key].RPN_POST_NMS_TOP_N

# 这个参数是NMS应用的阈值

nms_thresh = cfg[cfg_key].RPN_NMS_THRESH

# 这个参数是你最终映射回原图的宽和高都要大于这个值

min_size = cfg[cfg_key].RPN_MIN_SIZE

# 计算了一下偏移量的第一维,得到的是batch_size

batch_size = bbox_deltas.size(0)

# 这两个数就是特征图的高度和宽度,按照论文中的模型就是14*14

feat_height, feat_width = scores.size(2), scores.size(3)

# 这里是要把他做成网格的形式,先做一个从0到13的数组,然后乘以stride,这样就是原图中

# x的一系列坐标

shift_x = np.arange(0, feat_width) * self._feat_stride

# 对y做同样的处理

shift_y = np.arange(0, feat_height) * self._feat_stride

# 这里是把x和y的坐标展开

shift_x, shift_y = np.meshgrid(shift_x, shift_y)

# 然后将xy进行合并,得到4*196的结果,再进行转置,最终得到196*4的维度

# 然后将其转换为float的形式这些就是特征点转换到原图的中心点坐标

shifts = torch.from_numpy(np.vstack((shift_x.ravel(), shift_y.ravel(),

shift_x.ravel(), shift_y.ravel())).transpose())

shifts = shifts.contiguous().type_as(scores).float()

# A是一个特征点anchors的数量,论文中为9

A = self._num_anchors

# K是shifts的第一维,也就是196,其实相当于在原图中划分了196个区域

K = shifts.size(0)

# 这里也把anchors转换为和scores一样的数据类型

self._anchors = self._anchors.type_as(scores)

# anchors = self._anchors.view(1, A, 4) + shifts.view(1, K, 4).permute(1, 0, 2).contiguous()

# 把以0, 0生成的那些标准anchor的坐标和原图中的中心点坐标相加,就得到了原图中的待选框

# 这里计算出的结果是(196, 9, 4)

anchors = self._anchors.view(1, A, 4) + shifts.view(K, 1, 4)

# 然后将他们展开成(1, 196*9, 4), 并且扩大到batch_size的大小

anchors = anchors.view(1, K * A, 4).expand(batch_size, K * A, 4)

# Transpose and reshape predicted bbox transformations to get them

# into the same order as the anchors:

# 这里的delta是(batch_size, 9*4, 14, 14)的大小,将他转换成和anchors一样的形式

bbox_deltas = bbox_deltas.permute(0, 2, 3, 1).contiguous()

# 转换为(batch_size, 196 * 4, 4)

bbox_deltas = bbox_deltas.view(batch_size, -1, 4)

# Same story for the scores:

# 同样scores:(batch_size, 9, 14, 14)->(batch_size, 14, 14, 9)->(batch_size, 14*14*9)

scores = scores.permute(0, 2, 3, 1).contiguous()

scores = scores.view(batch_size, -1)

# Convert anchors into proposals via bbox transformations

# 用之前写过的bbox_transorm_inv将经过修正的proposals得到

proposals = bbox_transform_inv(anchors, bbox_deltas, batch_size)

# 2. clip predicted boxes to image

# 这里是将proposals限制到图像内

proposals = clip_boxes(proposals, im_info, batch_size)

# proposals = clip_boxes_batch(proposals, im_info, batch_size)

# assign the score to 0 if it's non keep.

# keep = self._filter_boxes(proposals, min_size * im_info[:, 2])

# trim keep index to make it euqal over batch

# keep_idx = torch.cat(tuple(keep_idx), 0)

# scores_keep = scores.view(-1)[keep_idx].view(batch_size, trim_size)

# proposals_keep = proposals.view(-1, 4)[keep_idx, :].contiguous().view(batch_size, trim_size, 4)

# _, order = torch.sort(scores_keep, 1, True)

# 这里先将scores存到keep里面(bs, 14*14*9, 1)

scores_keep = scores

# 这里是经过修正后的proposals

proposals_keep = proposals

# 这里把是前景的分数进行排序,1代表以第2维进行排序,True代表从大到小

# 返回的第一维是排好的tensor,第二维是index,这里只要index

_, order = torch.sort(scores_keep, 1, True)

# 这里先定义了输出的tensor,一个(batch_size, post_nms_topN, 5)大小的全0矩阵

output = scores.new(batch_size, post_nms_topN, 5).zero_()

for i in range(batch_size):

# # 3. remove predicted boxes with either height or width < threshold

# # (NOTE: convert min_size to input image scale stored in im_info[2])

# 这里获取了一张图像的proposals以及其是前景的评分

proposals_single = proposals_keep[i] #[14*14*9, 4]

scores_single = scores_keep[i] # [14*14*9, 1]

# # 4. sort all (proposal, score) pairs by score from highest to lowest

# # 5. take top pre_nms_topN (e.g. 6000)

# 这里计算出了一张图像的所有proposals前景评分从大到小的排名

order_single = order[i]

# 这里判断了一下,如果输入NMS的排序个数大于零,并且小于scores_deep的元素个数

# 这里我觉得应该和scores_single来进行比较,不然scores_keep的数量是乘以batch_size的

# 需要注意的是,并不是所有图像最终特征矩阵都是14*14,而是举了个例子,对于一张1000*600的图像来说,proposals的个数是20000左右

if pre_nms_topN > 0 and pre_nms_topN < scores_keep.numel():

order_single = order_single[:pre_nms_topN]

# 然后这里得到一个图像满足排名的proposal以及分数

proposals_single = proposals_single[order_single, :]

scores_single = scores_single[order_single].view(-1,1)

# 6. apply nms (e.g. threshold = 0.7)

# 7. take after_nms_topN (e.g. 300)

# 8. return the top proposals (-> RoIs top)

# 这里经过nms的操作得到这张图像保留下来的proposal

keep_idx_i = nms(proposals_single, scores_single.squeeze(1), nms_thresh)

keep_idx_i = keep_idx_i.long().view(-1)

# 这里取到的是经过nms保留下来的proposals以及他们的分数

if post_nms_topN > 0:

keep_idx_i = keep_idx_i[:post_nms_topN]

proposals_single = proposals_single[keep_idx_i, :]

scores_single = scores_single[keep_idx_i, :]

# padding 0 at the end.

# 这里output的第三维之所以是5,因为第一维是加入了batch_size的序号,后面才是坐标

num_proposal = proposals_single.size(0)

output[i,:,0] = i

output[i,:num_proposal,1:] = proposals_single

# 最后返回output结果

return output

def backward(self, top, propagate_down, bottom):

"""This layer does not propagate gradients."""

pass

def reshape(self, bottom, top):

"""Reshaping happens during the call to forward."""

pass

def _filter_boxes(self, boxes, min_size):

"""Remove all boxes with any side smaller than min_size."""

ws = boxes[:, :, 2] - boxes[:, :, 0] + 1

hs = boxes[:, :, 3] - boxes[:, :, 1] + 1

# 这是保证之前说的,得到的proposals必须要保证宽度高度大于一个值才可以保留,这里反回了判断的真假值

keep = ((ws >= min_size.view(-1,1).expand_as(ws)) & (hs >= min_size.view(-1,1).expand_as(hs)))

return keep

3.anchor_target_layer.py

from __future__ import absolute_import

# --------------------------------------------------------

# Faster R-CNN

# Copyright (c) 2015 Microsoft

# Licensed under The MIT License [see LICENSE for details]

# Written by Ross Girshick and Sean Bell

# --------------------------------------------------------

# --------------------------------------------------------

# Reorganized and modified by Jianwei Yang and Jiasen Lu

# --------------------------------------------------------

import torch

import torch.nn as nn

import numpy as np

import numpy.random as npr

from model.utils.config import cfg

from .generate_anchors import generate_anchors

from .bbox_transform import clip_boxes, bbox_overlaps_batch, bbox_transform_batch

import pdb

DEBUG = False

try:

long # Python 2

except NameError:

long = int # Python 3

# 这个类主要对RPN的输出进行加工,对anchors打上标签,并且与ground_truth进行对比,计算他们之间的偏差

class _AnchorTargetLayer(nn.Module):

"""

Assign anchors to ground-truth targets. Produces anchor classification

labels and bounding-box regression targets.

"""

def __init__(self, feat_stride, scales, ratios):

super(_AnchorTargetLayer, self).__init__()

# 首先进行初始化,feat_stride是与原图之间的比例,scale和ratio就是anchors的纵横比和大小

self._feat_stride = feat_stride

self._scales = scales

anchor_scales = scales

# 使用generate_anchors来生成anchors

self._anchors = torch.from_numpy(generate_anchors(scales=np.array(anchor_scales), ratios=np.array(ratios))).float()

# 得到anchors的数量,按照论文里这里是9

self._num_anchors = self._anchors.size(0)

# allow boxes to sit over the edge by a small amount

# 允许box在边缘超过多少

self._allowed_border = 0 # default is 0

def forward(self, input):

# Algorithm:

#

# for each (H, W) location i

# generate 9 anchor boxes centered on cell i

# apply predicted bbox deltas at cell i to each of the 9 anchors

# filter out-of-image anchors

# 第一维是RPN分类得分

rpn_cls_score = input[0]

# 第二维是ground_truth (batch_size, gt的数量,5)5的前四维是坐标,最后一维是类别

gt_boxes = input[1]

# 第三维是图像

im_info = input[2]

# 第四维是框的数量

num_boxes = input[3]

# map of shape (..., H, W)

# rpn_cls_score的第三维第四维是这个特征向量的长和宽

height, width = rpn_cls_score.size(2), rpn_cls_score.size(3)

# 得到batch_size

batch_size = gt_boxes.size(0)

# 又取了一遍,没啥用,还是获取特征框的高和宽

# 和上段代码之前一样,还是将其还原到原图,然后做成网格的形式

feat_height, feat_width = rpn_cls_score.size(2), rpn_cls_score.size(3)

shift_x = np.arange(0, feat_width) * self._feat_stride

shift_y = np.arange(0, feat_height) * self._feat_stride

shift_x, shift_y = np.meshgrid(shift_x, shift_y)

shifts = torch.from_numpy(np.vstack((shift_x.ravel(), shift_y.ravel(),

shift_x.ravel(), shift_y.ravel())).transpose())

shifts = shifts.contiguous().type_as(rpn_cls_score).float()

# A是anchors的数量,正常是一个点9个

A = self._num_anchors

# 这里是一共有多少个anchors的数量

K = shifts.size(0)

# 这里和上一个代码也相同,得到原图中的所有anchors

self._anchors = self._anchors.type_as(gt_boxes) # move to specific gpu.

all_anchors = self._anchors.view(1, A, 4) + shifts.view(K, 1, 4)

all_anchors = all_anchors.view(K * A, 4)

# 这里是一共有多少个anchor,K是锚点的数量,每个锚点都是9个

total_anchors = int(K * A)

# 这里判断了一下,过滤掉越界的边框,条件是左下角坐标必须大于0,右上角坐标小于图像的宽和高的最大值,这里允许边界框是压线的

keep = ((all_anchors[:, 0] >= -self._allowed_border) &

(all_anchors[:, 1] >= -self._allowed_border) &

(all_anchors[:, 2] < long(im_info[0][1]) + self._allowed_border) &

(all_anchors[:, 3] < long(im_info[0][0]) + self._allowed_border))

# 这里把所有不符合的anchors都过滤掉了,也就是越界的那些边框,得到符合规定的边框的索引

inds_inside = torch.nonzero(keep).view(-1)

#根据index来取到保留下来的边框

# keep only inside anchors

anchors = all_anchors[inds_inside, :]

# 这里定义了三个label,1代表positive也就是前景,0代表背景,-1代表不关注

# label: 1 is positive, 0 is negative, -1 is dont care

# labels初始化,大小是(batch_size, 保留下来的框的数量),初始用-1填补

labels = gt_boxes.new(batch_size, inds_inside.size(0)).fill_(-1)

# 这个inside是论文中对正样本进行回归的参数,大小是(batch_size, 保留下的框的数量),初始化为0

bbox_inside_weights = gt_boxes.new(batch_size, inds_inside.size(0)).zero_()

# 用来平衡RPN分类和回归的权重????大小一样

bbox_outside_weights = gt_boxes.new(batch_size, inds_inside.size(0)).zero_()

# 计算anchors和gt_boxes的IOU,返回的是(batch_size, 一个图中anchors的数量, 一个图中gt的数量)

overlaps = bbox_overlaps_batch(anchors, gt_boxes)

# 这里获取了对于每一个batch,对应每个anchor最大的那个IOU,(batch_size, anchors的数量)

max_overlaps, argmax_overlaps = torch.max(overlaps, 2)

# 这里返回对应每个gt,最大的IOU值

gt_max_overlaps, _ = torch.max(overlaps, 1)

# 这个参数默认是False 意思是先把符合负样本的标记为0

if not cfg.TRAIN.RPN_CLOBBER_POSITIVES:

# 如果这个选出来的anchor小于设定的negetive阈值,则让他是negative

labels[max_overlaps < cfg.TRAIN.RPN_NEGATIVE_OVERLAP] = 0

# 如果gt_max_overlaps是0,则让他等于一个很小的值1*10^-5????

gt_max_overlaps[gt_max_overlaps==0] = 1e-5

keep = torch.sum(overlaps.eq(gt_max_overlaps.view(batch_size,1,-1).expand_as(overlaps)), 2)

if torch.sum(keep) > 0:

labels[keep>0] = 1

# fg label: above threshold IOU

labels[max_overlaps >= cfg.TRAIN.RPN_POSITIVE_OVERLAP] = 1

if cfg.TRAIN.RPN_CLOBBER_POSITIVES:

labels[max_overlaps < cfg.TRAIN.RPN_NEGATIVE_OVERLAP] = 0

# 这里是前景需要的训练数量,前景占的比例 * 一个batch_size一共需要多少数量

num_fg = int(cfg.TRAIN.RPN_FG_FRACTION * cfg.TRAIN.RPN_BATCHSIZE)

# 这里经过计算,得到目前已经确定的前景和背景的数量

sum_fg = torch.sum((labels == 1).int(), 1)

sum_bg = torch.sum((labels == 0).int(), 1)

# 这里对一个batch_size进行迭代,看看选择的前景和背景数量是够符合规定要求

for i in range(batch_size):

# 如果得到的正样本太多,则需要二次采样

# subsample positive labels if we have too many

# 如果正样本的数量超过了预期的设置

if sum_fg[i] > num_fg:

# 首先获取所有的非零元素的索引

fg_inds = torch.nonzero(labels[i] == 1).view(-1)

# torch.randperm seems has a bug on multi-gpu setting that cause the segfault.

# See https://github.com/pytorch/pytorch/issues/1868 for more details.

# use numpy instead.

#rand_num = torch.randperm(fg_inds.size(0)).type_as(gt_boxes).long()

# 然后将他们用随机数的方式进行排列

rand_num = torch.from_numpy(np.random.permutation(fg_inds.size(0))).type_as(gt_boxes).long()

# 这里就去前num_fg个作为正样本,其他的设置成-1也就是不关心

disable_inds = fg_inds[rand_num[:fg_inds.size(0)-num_fg]]

labels[i][disable_inds] = -1

# num_bg = cfg.TRAIN.RPN_BATCHSIZE - sum_fg[i]

num_bg = cfg.TRAIN.RPN_BATCHSIZE - torch.sum((labels == 1).int(), 1)[i]

# 如果得到的负样本太多,也要进行二次采样

# subsample negative labels if we have too many

# 下面就是和上面一样的方法,对越界的那些样本设置为-1

if sum_bg[i] > num_bg:

bg_inds = torch.nonzero(labels[i] == 0).view(-1)

#rand_num = torch.randperm(bg_inds.size(0)).type_as(gt_boxes).long()

rand_num = torch.from_numpy(np.random.permutation(bg_inds.size(0))).type_as(gt_boxes).long()

disable_inds = bg_inds[rand_num[:bg_inds.size(0)-num_bg]]

labels[i][disable_inds] = -1

# 假设每个batch_size的gt_boxes都是20的话

# [0, 20, 40, .......(batch_size-1)*20]

offset = torch.arange(0, batch_size)*gt_boxes.size(1)

# argmax_overlaps本来是每个anchor对应最大IOU的索引

# 这里就相当于把他们加上20,大小不变

argmax_overlaps = argmax_overlaps + offset.view(batch_size, 1).type_as(argmax_overlaps)

# 这里也相当于把gt_boxes给展开了

# gt_boxes.view(-1, 5)相当于转换成(batch_size*20, 5)

# argmax_overlaps.view(-1) ->(batch_size, anchor的数量)

# gt_boxes.view(-1,5)[argmax_overlaps.view(-1), :]这就是选出与每个anchorIOU最大的GT

# 然后把anchors和与他们IOU最大的gt放入形参,计算他们之间的偏移量

# 得到(batch_size, anchors的数量, 4)

bbox_targets = _compute_targets_batch(anchors, gt_boxes.view(-1,5)[argmax_overlaps.view(-1), :].view(batch_size, -1, 5))

# use a single value instead of 4 values for easy index.

# 所有前景的anchors,将他们的权重初始化

bbox_inside_weights[labels==1] = cfg.TRAIN.RPN_BBOX_INSIDE_WEIGHTS[0]

# 这个参数默认定义的是-1,如果小于零,positive和negative的权重设置成相同的

# 都是1/num_example

if cfg.TRAIN.RPN_POSITIVE_WEIGHT < 0:

num_examples = torch.sum(labels[i] >= 0)

positive_weights = 1.0 / num_examples.item()

negative_weights = 1.0 / num_examples.item()

# 这里正常来说应该是另一种设置权重的方法,但是作者没有写,在这里附上一段tensorflow版本的代码

#positive_weights = (cfg.TRAIN.RPN_POSITIVE_WEIGHT /

np.sum(labels == 1))

#negative_weights = ((1.0 - cfg.TRAIN.RPN_POSITIVE_WEIGHT) /

np.sum(labels == 0))

else:

assert ((cfg.TRAIN.RPN_POSITIVE_WEIGHT > 0) &

(cfg.TRAIN.RPN_POSITIVE_WEIGHT < 1))

# 并没有实现这里

# 这里是outside_weights的设置,就是上面计算的

bbox_outside_weights[labels == 1] = positive_weights

bbox_outside_weights[labels == 0] = negative_weights

# 因为之前取labels的操作都是在对于图像范围内的边框进行的,这里要将图像外的都补成-1

# 这样输出的就是和totalanchors一样大小的

# batch_size * total_anchors

labels = _unmap(labels, total_anchors, inds_inside, batch_size, fill=-1)

# 同样,最其他的三个变量,也用相同的方式补全,这里是用0去填补

bbox_targets = _unmap(bbox_targets, total_anchors, inds_inside, batch_size, fill=0)

bbox_inside_weights = _unmap(bbox_inside_weights, total_anchors, inds_inside, batch_size, fill=0)

bbox_outside_weights = _unmap(bbox_outside_weights, total_anchors, inds_inside, batch_size, fill=0)

outputs = []

# 这里把labels变形了一下转换为(batch_size, 1, A * height, width)

labels = labels.view(batch_size, height, width, A).permute(0,3,1,2).contiguous()

labels = labels.view(batch_size, 1, A * height, width)

outputs.append(labels)

# 这里把bbox也展开了(batch_size, height, width, A*4)->(batch_size, 4*A, height, width)

bbox_targets = bbox_targets.view(batch_size, height, width, A*4).permute(0,3,1,2).contiguous()

outputs.append(bbox_targets)

# 这里计算了一下anchors的总数

anchors_count = bbox_inside_weights.size(1)

# 把inside_weights也转换为4维 (batch_size, anchors_count, 4)

bbox_inside_weights = bbox_inside_weights.view(batch_size,anchors_count,1).expand(batch_size, anchors_count, 4)

# 然后再展开(batch_size, height, width, 4 * A)->(batch_size, 4*A, height, width)

bbox_inside_weights = bbox_inside_weights.contiguous().view(batch_size, height, width, 4*A)

.permute(0,3,1,2).contiguous()

outputs.append(bbox_inside_weights)

# 对于outside也做成相同的形式,添加到output

bbox_outside_weights = bbox_outside_weights.view(batch_size,anchors_count,1).expand(batch_size, anchors_count, 4)

bbox_outside_weights = bbox_outside_weights.contiguous().view(batch_size, height, width, 4*A)

.permute(0,3,1,2).contiguous()

outputs.append(bbox_outside_weights)

return outputs

def backward(self, top, propagate_down, bottom):

"""This layer does not propagate gradients."""

pass

def reshape(self, bottom, top):

"""Reshaping happens during the call to forward."""

pass

# 这个函数就是将数据还原到原来的大小,对于原来没有处理的数据填补上fill的值

def _unmap(data, count, inds, batch_size, fill=0):

""" Unmap a subset of item (data) back to the original set of items (of

size count) """

if data.dim() == 2:

ret = torch.Tensor(batch_size, count).fill_(fill).type_as(data)

ret[:, inds] = data

else:

ret = torch.Tensor(batch_size, count, data.size(2)).fill_(fill).type_as(data)

ret[:, inds,:] = data

return ret

# 这里调用了bbox_transform计算偏移量

def _compute_targets_batch(ex_rois, gt_rois):

"""Compute bounding-box regression targets for an image."""

return bbox_transform_batch(ex_rois, gt_rois[:, :, :4])

4.proposal_target_layer_cascade.py

from __future__ import absolute_import

# --------------------------------------------------------

# Faster R-CNN

# Copyright (c) 2015 Microsoft

# Licensed under The MIT License [see LICENSE for details]

# Written by Ross Girshick and Sean Bell

# --------------------------------------------------------

# --------------------------------------------------------

# Reorganized and modified by Jianwei Yang and Jiasen Lu

# --------------------------------------------------------

import torch

import torch.nn as nn

import numpy as np

import numpy.random as npr

from ..utils.config import cfg

from .bbox_transform import bbox_overlaps_batch, bbox_transform_batch

import pdb

class _ProposalTargetLayer(nn.Module):

"""

Assign object detection proposals to ground-truth targets. Produces proposal

classification labels and bounding-box regression targets.

这里将目标检测框分配给ground_truth,生成分类标签以及边框回归

"""

def __init__(self, nclasses):

super(_ProposalTargetLayer, self).__init__()

# 这里还是进行初始化,对一些变量进行赋值

# 这是类别的数量

self._num_classes = nclasses

# 这里是进行标准化的均值和标准差

self.BBOX_NORMALIZE_MEANS = torch.FloatTensor(cfg.TRAIN.BBOX_NORMALIZE_MEANS)

self.BBOX_NORMALIZE_STDS = torch.FloatTensor(cfg.TRAIN.BBOX_NORMALIZE_STDS)

# 这里有定义了一个权重,BBOX_INSIDE_WEIGHTS和上一个的RPN_BBOX_INSIDE_WEIGHTS有什么区别??

self.BBOX_INSIDE_WEIGHTS = torch.FloatTensor(cfg.TRAIN.BBOX_INSIDE_WEIGHTS)

def forward(self, all_rois, gt_boxes, num_boxes):

# 重新定义了一下数据类型

self.BBOX_NORMALIZE_MEANS = self.BBOX_NORMALIZE_MEANS.type_as(gt_boxes)

self.BBOX_NORMALIZE_STDS = self.BBOX_NORMALIZE_STDS.type_as(gt_boxes)

self.BBOX_INSIDE_WEIGHTS = self.BBOX_INSIDE_WEIGHTS.type_as(gt_boxes)

# 这里初始化了一个和gt_boxes一样大小的变量

gt_boxes_append = gt_boxes.new(gt_boxes.size()).zero_()

# 将gt_boxes的坐标一次赋给新的gt_boxes_append

gt_boxes_append[:,:,1:5] = gt_boxes[:,:,:4]

# 将rois和gt_boxes_append合并到一起

# Include ground-truth boxes in the set of candidate rois

all_rois = torch.cat([all_rois, gt_boxes_append], 1)

num_images = 1

# 计算出每一个图像有多少个ROIs

rois_per_image = int(cfg.TRAIN.BATCH_SIZE / num_images)

# 用前景占得比例乘以所有的ROIs得到前景的数量,为了避免出先小数进行了四舍五入--round

fg_rois_per_image = int(np.round(cfg.TRAIN.FG_FRACTION * rois_per_image))

# 如果前景的数量是0,那么让他等于1?

fg_rois_per_image = 1 if fg_rois_per_image == 0 else fg_rois_per_image

labels, rois, bbox_targets, bbox_inside_weights = self._sample_rois_pytorch(

all_rois, gt_boxes, fg_rois_per_image,

rois_per_image, self._num_classes)

bbox_outside_weights = (bbox_inside_weights > 0).float()

return rois, labels, bbox_targets, bbox_inside_weights, bbox_outside_weights

def backward(self, top, propagate_down, bottom):

"""This layer does not propagate gradients."""

pass

def reshape(self, bottom, top):

"""Reshaping happens during the call to forward."""

pass

def _get_bbox_regression_labels_pytorch(self, bbox_target_data, labels_batch, num_classes):

"""Bounding-box regression targets (bbox_target_data) are stored in a

compact form b x N x (class, tx, ty, tw, th)

This function expands those targets into the 4-of-4*K representation used

by the network (i.e. only one class has non-zero targets).

Returns:

bbox_target (ndarray): b x N x 4K blob of regression targets

bbox_inside_weights (ndarray): b x N x 4K blob of loss weights

"""

# labels的第一维是batch_size

# 第二维是每个图像的rois数量

batch_size = labels_batch.size(0)

rois_per_image = labels_batch.size(1)

# 每个labels的分类信息

clss = labels_batch

# 初始化一个bbox_target(batch_size, rois_per_image, 4)

# bbox_inside_weight(batch_size, rois_per_image, 4)

bbox_targets = bbox_target_data.new(batch_size, rois_per_image, 4).zero_()

bbox_inside_weights = bbox_target_data.new(bbox_targets.size()).zero_()

for b in range(batch_size):

# assert clss[b].sum() > 0

# 如果一个图像的所有分类标签都为0,不做操作

if clss[b].sum() == 0:

continue

# 否则取到所有非零的坐标

inds = torch.nonzero(clss[b] > 0).view(-1)

# 否则遍历每个非零的类别,将他们的偏移量设置成bbox_target_data

# 将他们的权重设置成INSIDE的权重

for i in range(inds.numel()):

ind = inds[i]

bbox_targets[b, ind, :] = bbox_target_data[b, ind, :]

bbox_inside_weights[b, ind, :] = self.BBOX_INSIDE_WEIGHTS

return bbox_targets, bbox_inside_weights

# 这里计算了rois和gt之间的偏移量

def _compute_targets_pytorch(self, ex_rois, gt_rois):

"""Compute bounding-box regression targets for an image."""

assert ex_rois.size(1) == gt_rois.size(1)

assert ex_rois.size(2) == 4

assert gt_rois.size(2) == 4

# 得到batch_size的数量,以及每张图像的rois的数量

batch_size = ex_rois.size(0)

rois_per_image = ex_rois.size(1)

# 调用bbox_transorm计算偏移量

targets = bbox_transform_batch(ex_rois, gt_rois)

# 这里如果要进行标准化,则对偏移量根据预设好的均值和方差进行标准化

if cfg.TRAIN.BBOX_NORMALIZE_TARGETS_PRECOMPUTED:

# Optionally normalize targets by a precomputed mean and stdev

targets = ((targets - self.BBOX_NORMALIZE_MEANS.expand_as(targets))

/ self.BBOX_NORMALIZE_STDS.expand_as(targets))

return targets

def _sample_rois_pytorch(self, all_rois, gt_boxes, fg_rois_per_image, rois_per_image, num_classes):

# 生成一个包含前景和背景的随机样本

"""Generate a random sample of RoIs comprising foreground and background

examples.

"""

# overlaps: (rois x gt_boxes)

# 首先计算出rois和gt之间的IOU值,(batch_size, rois的数量, gt的数量)

overlaps = bbox_overlaps_batch(all_rois, gt_boxes)

# 找出每个gt对应最大的IOU的rois,以及他们的索引

max_overlaps, gt_assignment = torch.max(overlaps, 2)

# 计算出batch_size, proposals, 以及每个图像的gt的数量

batch_size = overlaps.size(0)

num_proposal = overlaps.size(1)

num_boxes_per_img = overlaps.size(2)

# 和之前的操作一样,如果每个图像的GT都是20,

# 得到(0, 20, 40, ......(batch_size-1)-1)

offset = torch.arange(0, batch_size)*gt_boxes.size(1)

# 把每个索引都和他对应相加,大小依旧是(batch_size, gt的数量)

offset = offset.view(-1, 1).type_as(gt_assignment) + gt_assignment

# changed indexing way for pytorch 1.0

# label初始化成(batch_size, GT的数量),取到gtbox的第五维,也就是类别信息

labels = gt_boxes[:,:,4].contiguous().view(-1)[(offset.view(-1),)].view(batch_size, -1)

# 定义三个变量,(batch_size, rois_per_image)

# (batch_size, rois_per_image, 5)

labels_batch = labels.new(batch_size, rois_per_image).zero_()

rois_batch = all_rois.new(batch_size, rois_per_image, 5).zero_()

gt_rois_batch = all_rois.new(batch_size, rois_per_image, 5).zero_()

# Guard against the case when an image has fewer than max_fg_rois_per_image

# foreground RoIs

for i in range(batch_size):

# 这里计算了一下一张图像满足大于阈值的前景的数量

fg_inds = torch.nonzero(max_overlaps[i] >= cfg.TRAIN.FG_THRESH).view(-1)

# 计算了一下有多少满足前景的rois

fg_num_rois = fg_inds.numel()

# 同样通过阈值的限制选出背景

# Select background RoIs as those within [BG_THRESH_LO, BG_THRESH_HI)

bg_inds = torch.nonzero((max_overlaps[i] < cfg.TRAIN.BG_THRESH_HI) &

(max_overlaps[i] >= cfg.TRAIN.BG_THRESH_LO)).view(-1)

bg_num_rois = bg_inds.numel()

# 如果背景和前景满足阈值的都大于0

if fg_num_rois > 0 and bg_num_rois > 0:

# sampling fg

# 这张图像选出定义的每张图像和每张图像真是的满足阈值的rois的最小值

fg_rois_per_this_image = min(fg_rois_per_image, fg_num_rois)

# torch.randperm seems has a bug on multi-gpu setting that cause the segfault.

# See https://github.com/pytorch/pytorch/issues/1868 for more details.

# use numpy instead.

#rand_num = torch.randperm(fg_num_rois).long().cuda()

# 进行了一个随其采样,得到随机采样的前景样本

rand_num = torch.from_numpy(np.random.permutation(fg_num_rois)).type_as(gt_boxes).long()

fg_inds = fg_inds[rand_num[:fg_rois_per_this_image]]

# 背景的数量就是预定义每张图像roi的数量减去得到的前景的数量

# sampling bg

bg_rois_per_this_image = rois_per_image - fg_rois_per_this_image

# Seems torch.rand has a bug, it will generate very large number and make an error.

# We use numpy rand instead.

#rand_num = (torch.rand(bg_rois_per_this_image) * bg_num_rois).long().cuda()

# 这是取地板操作,生成一系列[0, 1)的数*fg_num_rois然后取地板,得到的都是在(0, bg_num_rois)的数,一共生成了bg_rois_per_this_image个不同的

rand_num = np.floor(np.random.rand(bg_rois_per_this_image) * bg_num_rois)

rand_num = torch.from_numpy(rand_num).type_as(gt_boxes).long()

bg_inds = bg_inds[rand_num]

# 如果fg的数量大于0,bg的数量等于0

elif fg_num_rois > 0 and bg_num_rois == 0:

# sampling fg

#rand_num = torch.floor(torch.rand(rois_per_image) * fg_num_rois).long().cuda()

# 这是取地板操作,生成一系列[0, 1)的数*fg_num_rois然后取地板

rand_num = np.floor(np.random.rand(rois_per_image) * fg_num_rois)

rand_num = torch.from_numpy(rand_num).type_as(gt_boxes).long()

# 取了rois_per_image个前景,0个背景

fg_inds = fg_inds[rand_num]

fg_rois_per_this_image = rois_per_image

bg_rois_per_this_image = 0

# 不然则取rois_per_image个背景,0个前景

elif bg_num_rois > 0 and fg_num_rois == 0:

# sampling bg

#rand_num = torch.floor(torch.rand(rois_per_image) * bg_num_rois).long().cuda()

rand_num = np.floor(np.random.rand(rois_per_image) * bg_num_rois)

rand_num = torch.from_numpy(rand_num).type_as(gt_boxes).long()

bg_inds = bg_inds[rand_num]

bg_rois_per_this_image = rois_per_image

fg_rois_per_this_image = 0

else:

raise ValueError("bg_num_rois = 0 and fg_num_rois = 0, this should not happen!")

# The indices that we're selecting (both fg and bg)

# 把选出来的前景和背景拼接到一起

keep_inds = torch.cat([fg_inds, bg_inds], 0)

# 取到这些选出来的rois的标签

# Select sampled values from various arrays:

labels_batch[i].copy_(labels[i][keep_inds])

# Clamp labels for the background RoIs to 0

# 如果有bg的话,把bg的标签全部置为0

if fg_rois_per_this_image < rois_per_image:

labels_batch[i][fg_rois_per_this_image:] = 0

# 这里保存所有选出来的标签,第三维的第一个数设置成batch的数值

rois_batch[i] = all_rois[i][keep_inds]

rois_batch[i,:,0] = i

# 每个batch_size,与gt的IOU最大值的rois里面经过筛选保存下来的部分

gt_rois_batch[i] = gt_boxes[i][gt_assignment[i][keep_inds]]

# 计算这些筛选出来的roi和gt的偏移量

bbox_target_data = self._compute_targets_pytorch(

rois_batch[:,:,1:5], gt_rois_batch[:,:,:4])

# 再经过上述函数,得到bbox_targets, 以及权重

bbox_targets, bbox_inside_weights =

self._get_bbox_regression_labels_pytorch(bbox_target_data, labels_batch, num_classes)

return labels_batch, rois_batch, bbox_targets, bbox_inside_weights

5.rpn.py

from __future__ import absolute_import

import torch

import torch.nn as nn

import torch.nn.functional as F

from torch.autograd import Variable

from model.utils.config import cfg

from .proposal_layer import _ProposalLayer

from .anchor_target_layer import _AnchorTargetLayer

from model.utils.net_utils import _smooth_l1_loss

import numpy as np

import math

import pdb

import time

class _RPN(nn.Module):

""" region proposal network """

def __init__(self, din):

super(_RPN, self).__init__()

#这个就是上一个特征提取网络输出的通道数

self.din = din # get depth of input feature map, e.g., 512

# 下面的都是预先定义好的超参数,纵横比,规模,以及特征图和原图的比例

self.anchor_scales = cfg.ANCHOR_SCALES

self.anchor_ratios = cfg.ANCHOR_RATIOS

self.feat_stride = cfg.FEAT_STRIDE[0]

'''

这里定义了RPN网络的卷积操作,对于输入的特征,输出通道为512,使用3*3的卷积核,padding=1, 所以输出的尺度不变

'''

# define the convrelu layers processing input feature map

self.RPN_Conv = nn.Conv2d(self.din, 512, 3, 1, 1, bias=True)

'''

这里处理前景背景的分类,对于ratio和scale都是3的,nc_score_out=3*3*2=18

也就是对于每一种anchor,都有两种概率,前景概率,背景概率

'''

# define bg/fg classifcation score layer

self.nc_score_out = len(self.anchor_scales) * len(self.anchor_ratios) * 2 # 2(bg/fg) * 9 (anchors)

# 这里又进行了一次1*1的卷积,得到18通道,代表上面说的18个类

self.RPN_cls_score = nn.Conv2d(512, self.nc_score_out, 1, 1, 0)

# 这里是回归层,9个anchor每个有4个坐标,所以4*9,同样进行1*1卷积

# define anchor box offset prediction layer

self.nc_bbox_out = len(self.anchor_scales) * len(self.anchor_ratios) * 4 # 4(coords) * 9 (anchors)

self.RPN_bbox_pred = nn.Conv2d(512, self.nc_bbox_out, 1, 1, 0)

# 这里定义了推荐层

# 处理掉了很多不符合规定的anchors

# define proposal layer

self.RPN_proposal = _ProposalLayer(self.feat_stride, self.anchor_scales, self.anchor_ratios)

# 这里结合了gt的信息,把和GT的IOU值太低的也去掉了

# define anchor target layer

self.RPN_anchor_target = _AnchorTargetLayer(self.feat_stride, self.anchor_scales, self.anchor_ratios)

# 回归和分类的loss都初始化为0

self.rpn_loss_cls = 0

self.rpn_loss_box = 0

# 这个是代表可以不用实例化这个对象就可以调用里面的方法

@staticmethod

# 这就是一个reshape函数,把x的第二维设置为d,第三维设置为原来的第二第三维乘积/d

def reshape(x, d):

input_shape = x.size()

x = x.view(

input_shape[0],

int(d),

int(float(input_shape[1] * input_shape[2]) / float(d)),

input_shape[3]

)

return x

def forward(self, base_feat, im_info, gt_boxes, num_boxes):

# base_feat是上一个提取特征操作提取出的特征图

batch_size = base_feat.size(0)

# return feature map after convrelu layer

# 使用卷积通道数变成512,并用relu进行激活

rpn_conv1 = F.relu(self.RPN_Conv(base_feat), inplace=True)

# get rpn classification score

rpn_cls_score = self.RPN_cls_score(rpn_conv1)

# reshape一下,让他第二个维度等于2

rpn_cls_score_reshape = self.reshape(rpn_cls_score, 2)

# 然后在他的第二个维度进行softmax得到前景背景的概率

rpn_cls_prob_reshape = F.softmax(rpn_cls_score_reshape, 1)

# 在把他reshape回来

rpn_cls_prob = self.reshape(rpn_cls_prob_reshape, self.nc_score_out)

# 这里得到4*9=36个方式上的bbox偏移量

# get rpn offsets to the anchor boxes

rpn_bbox_pred = self.RPN_bbox_pred(rpn_conv1)

# 默认值就是TRAIN

# proposal layer

cfg_key = 'TRAIN' if self.training else 'TEST'

# 这里是第一步筛选,把评分比较低的,以及越界的都去掉了

# 经过筛选和NMS得到2000个待选框(bs, 2000, 5)

rois = self.RPN_proposal((rpn_cls_prob.data, rpn_bbox_pred.data,

im_info, cfg_key))

self.rpn_loss_cls = 0

self.rpn_loss_box = 0

# 生成训练的标签以及计算损失

# generating training labels and build the rpn loss

if self.training:

assert gt_boxes is not None

# 得到二次筛选后的proposals

rpn_data = self.RPN_anchor_target((rpn_cls_score.data, gt_boxes, im_info, num_boxes))

# 转换为(bs, anchors数量, 2)

# compute classification loss

rpn_cls_score = rpn_cls_score_reshape.permute(0, 2, 3, 1).contiguous().view(batch_size, -1, 2)

# 返回每个anchor是属于背景还是前景的label

rpn_label = rpn_data[0].view(batch_size, -1)

# 取索引,首先不等于-1,然后非零的索引

rpn_keep = Variable(rpn_label.view(-1).ne(-1).nonzero().view(-1))

rpn_cls_score = torch.index_select(rpn_cls_score.view(-1,2), 0, rpn_keep)

# 这里是一个索引搜索,把rpn_keep索引里面的数都取出来赋值给rpn_label

rpn_label = torch.index_select(rpn_label.view(-1), 0, rpn_keep.data)

rpn_label = Variable(rpn_label.long())

# 计算两者交叉熵得到损失

self.rpn_loss_cls = F.cross_entropy(rpn_cls_score, rpn_label)

# 计算了一下前景的数量

fg_cnt = torch.sum(rpn_label.data.ne(0))

# 计算回归的损失

rpn_bbox_targets, rpn_bbox_inside_weights, rpn_bbox_outside_weights = rpn_data[1:]

# compute bbox regression loss

rpn_bbox_inside_weights = Variable(rpn_bbox_inside_weights)

rpn_bbox_outside_weights = Variable(rpn_bbox_outside_weights)

rpn_bbox_targets = Variable(rpn_bbox_targets)

# 使用smooth_l1_loss计算回归损失

self.rpn_loss_box = _smooth_l1_loss(rpn_bbox_pred, rpn_bbox_targets, rpn_bbox_inside_weights,

rpn_bbox_outside_weights, sigma=3, dim=[1,2,3])

return rois, self.rpn_loss_cls, self.rpn_loss_box

这里只是所有代码的理解和注释,但是对于RPN整体的理解还不够连贯,下一次再写一篇关于RPN具体如何实现的解读,把代码串联起来。。

最后

以上就是负责白云最近收集整理的关于最详细的Faster-RCNN代码解读Pytorch版 (二) RPN部分回顾1.bbox_transform.py2.proposal_layer.py3.anchor_target_layer.py4.proposal_target_layer_cascade.py5.rpn.py的全部内容,更多相关最详细的Faster-RCNN代码解读Pytorch版内容请搜索靠谱客的其他文章。

发表评论 取消回复