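I've recently been researching speech recognition on the Qt platform, and I'm recording a few notes here.

First of all, speech recognition involves three steps:

1. Record the user's speech to a local file.

2. Encode the recording with FLAC or Speex.

3. Analyze and recognize the speech with a third-party speech recognition API or SDK.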

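For now I've kept it simple: I use flac to encode the WAV audio file.

The code targets Mac OS X and Windows 7.

On Windows 7, use flac.exe. For its full help text, run `flac.exe --help > help.txt` to redirect the help into a file you can consult later.

On Mac OS X, install the flac.dmg package. The flac command is then available.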
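The program later in this article runs flac as an external process. The sketch below shows that invocation in plain C++ without Qt. It is a minimal sketch: `flacProgram` and `flacArguments` are hypothetical names, `_WIN32` stands in for Qt's `Q_OS_WIN`, and the two program paths are simply the ones this article's code uses, so they may differ on your machine.

```cpp
#include <string>
#include <vector>

// Hypothetical helper: location of the flac executable, chosen at compile time
// like the article's #ifdef Q_OS_WIN block (paths are the article's, not a standard).
inline std::string flacProgram()
{
#ifdef _WIN32
    return "./lib/flac.exe";       // shipped in the application's lib/ folder
#else
    return "/usr/local/bin/flac";  // assumed install location on Mac OS X
#endif
}

// Hypothetical helper: arguments for "flac -c -f -8 <wavPath>"
//   -c  write the encoded stream to stdout instead of a .flac file
//   -f  force overwriting of output files
//   -8  highest compression preset
inline std::vector<std::string> flacArguments(const std::string &wavPath)
{
    return {"-c", "-f", "-8", wavPath};
}
```

Because of `-c`, the caller reads the FLAC bytes straight from the process's standard output and never has to locate a .flac file on disk.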

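Let's start with audio recording.

Qt ships with an audio module (QAudioInput in Qt Multimedia), and the code below builds on it.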

```cpp
/*
 * Based on a Qt example.
 * PCM2WAV is not mine; I found it via Google and modified it.
 */

#include "speechInput.h"

#include <QtEndian>
#include <QDebug>
#include <QPainter>
#include <QDataStream>

WavPcmFile::WavPcmFile(const QString &name, const QAudioFormat &format_, QObject *parent_)
    : QFile(name, parent_), format(format_)
{
}

bool WavPcmFile::hasSupportedFormat()
{
    return (format.sampleSize() == 8
            && format.sampleType() == QAudioFormat::UnSignedInt)
        || (format.sampleSize() > 8
            && format.sampleType() == QAudioFormat::SignedInt
            && format.byteOrder() == QAudioFormat::LittleEndian);
}

bool WavPcmFile::open()
{
    if (!hasSupportedFormat()) {
        setErrorString("Wav PCM supports only 8-bit unsigned samples "
                       "or 16-bit (or more) signed samples (in little endian)");
        return false;
    }
    if (!QFile::open(ReadWrite | Truncate))
        return false;
    writeHeader();
    return true;
}

void WavPcmFile::writeHeader()
{
    QDataStream out(this);
    out.setByteOrder(QDataStream::LittleEndian);   // all WAV header fields are little-endian

    // RIFF chunk
    out.writeRawData("RIFF", 4);
    out << quint32(0);    // placeholder for the RIFF chunk size (filled in by close())
    out.writeRawData("WAVE", 4);

    // Format description chunk
    out.writeRawData("fmt ", 4);
    out << quint32(16);   // "fmt " chunk size (always 16 for PCM)
    out << quint16(1);    // data format (1 => PCM)
    out << quint16(format.channelCount());
    out << quint32(format.sampleRate());
    out << quint32(format.sampleRate() * format.channelCount()
                   * format.sampleSize() / 8);                          // bytes per second
    out << quint16(format.channelCount() * format.sampleSize() / 8);   // block align
    out << quint16(format.sampleSize());                                // significant bits per sample

    // Data chunk
    out.writeRawData("data", 4);
    out << quint32(0);    // placeholder for the data chunk size (filled in by close())

    Q_ASSERT(pos() == 44);   // a PCM WAV header is exactly 44 bytes
}

void WavPcmFile::close()
{
    // Fill in the header size placeholders
    quint32 fileSize = size();
    QDataStream out(this);
    out.setByteOrder(QDataStream::LittleEndian);   // QDataStream defaults to big-endian; must match writeHeader()

    // RIFF chunk size
    seek(4);
    out << quint32(fileSize - 8);

    // data chunk size
    seek(40);
    out << quint32(fileSize - 44);

    QFile::close();
}
```

```cpp
AudioInfo::AudioInfo(const QAudioFormat &format, QObject *parent, const QString &filename)
    : QIODevice(parent)
    , m_format(format)
    , m_maxAmplitude(0)
    , m_level(0.0)
{
    // Largest absolute sample value for this format, used to normalize the level to [0, 1]
    switch (m_format.sampleSize()) {
    case 8:
        switch (m_format.sampleType()) {
        case QAudioFormat::UnSignedInt:
            m_maxAmplitude = 255;
            break;
        case QAudioFormat::SignedInt:
            m_maxAmplitude = 127;
            break;
        default:
            break;
        }
        break;
    case 16:
        switch (m_format.sampleType()) {
        case QAudioFormat::UnSignedInt:
            m_maxAmplitude = 65535;
            break;
        case QAudioFormat::SignedInt:
            m_maxAmplitude = 32767;
            break;
        default:
            break;
        }
        break;
    default:
        break;
    }

    // Every buffer passing through writeData() is also appended to this WAV file
    m_file = new WavPcmFile(filename, format, this);
}

AudioInfo::~AudioInfo()
{
}

void AudioInfo::start()
{
    if (!m_file->open())   // writes the WAV header
        qWarning() << "Cannot open WAV file:" << m_file->errorString();
    open(QIODevice::WriteOnly);
}

void AudioInfo::stop()
{
    close();
    m_file->close();   // patches the RIFF and data sizes into the header
}

qint64 AudioInfo::readData(char *data, qint64 maxlen)
{
    Q_UNUSED(data)
    Q_UNUSED(maxlen)
    return 0;
}

// QAudioInput pushes captured PCM here: compute the peak level, then save the data
qint64 AudioInfo::writeData(const char *data, qint64 len)
{
    if (m_maxAmplitude) {
        Q_ASSERT(m_format.sampleSize() % 8 == 0);
        const int channelBytes = m_format.sampleSize() / 8;
        const int sampleBytes = m_format.channelCount() * channelBytes;
        Q_ASSERT(len % sampleBytes == 0);
        const int numSamples = len / sampleBytes;

        quint16 maxValue = 0;
        const unsigned char *ptr = reinterpret_cast<const unsigned char *>(data);

        for (int i = 0; i < numSamples; ++i) {
            for (int j = 0; j < m_format.channelCount(); ++j) {
                quint16 value = 0;
                if (m_format.sampleSize() == 8 && m_format.sampleType() == QAudioFormat::UnSignedInt) {
                    value = *reinterpret_cast<const quint8 *>(ptr);
                } else if (m_format.sampleSize() == 8 && m_format.sampleType() == QAudioFormat::SignedInt) {
                    value = qAbs(*reinterpret_cast<const qint8 *>(ptr));
                } else if (m_format.sampleSize() == 16 && m_format.sampleType() == QAudioFormat::UnSignedInt) {
                    if (m_format.byteOrder() == QAudioFormat::LittleEndian)
                        value = qFromLittleEndian<quint16>(ptr);
                    else
                        value = qFromBigEndian<quint16>(ptr);
                } else if (m_format.sampleSize() == 16 && m_format.sampleType() == QAudioFormat::SignedInt) {
                    if (m_format.byteOrder() == QAudioFormat::LittleEndian)
                        value = qAbs(qFromLittleEndian<qint16>(ptr));
                    else
                        value = qAbs(qFromBigEndian<qint16>(ptr));
                }
                maxValue = qMax(value, maxValue);
                ptr += channelBytes;
            }
        }

        maxValue = qMin(maxValue, m_maxAmplitude);
        m_level = qreal(maxValue) / m_maxAmplitude;
    }

    m_file->write(data, len);
    emit update();
    return len;
}
```

```cpp
RenderArea::RenderArea(QWidget *parent)
    : QPushButton(parent)
{
    setBackgroundRole(QPalette::Base);
    setAutoFillBackground(true);
    m_level = 0;
    setMinimumHeight(30);
    setMinimumWidth(80);
}

void RenderArea::paintEvent(QPaintEvent * /* event */)
{
    QPainter painter(this);
    QPixmap pixmap = QPixmap(":/images/button_default.png").scaled(this->size());
    painter.drawPixmap(this->rect(), pixmap);

    // painter.setPen(Qt::black);
    // painter.drawRect(QRect(painter.viewport().left(),
    //                        painter.viewport().top(),
    //                        painter.viewport().right()-20,
    //                        painter.viewport().bottom()-20));

    if (m_level == 0.0)
        return;

    painter.setPen(Qt::darkGray);
    int pos = ((painter.viewport().right()-20)-(painter.viewport().left()+11))*m_level;
    for (int i = 0; i < 10; ++i) {
        int x1 = painter.viewport().left()+11;
        int y1 = painter.viewport().top()+10+i;
        int x2 = painter.viewport().left()+20+pos;
        int y2 = painter.viewport().top()+10+i;
        if (x2 < painter.viewport().left()+10)
            x2 = painter.viewport().left()+10;
        painter.drawLine(QPoint(x1+10, y1+10), QPoint(x2+10, y2+10));
    }
}

void RenderArea::setLevel(qreal value)
{
    m_level = value;
    repaint();
}
```

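Most of this code writes the WAV file. WavPcmFile writes the 44-byte PCM header and patches in the sizes on close. AudioInfo receives the captured PCM data from QAudioInput and appends it to that file.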
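The sketch below builds the same 44-byte header in memory without Qt, which makes the layout explicit. It illustrates the format and is not the article's code; `makeWavHeader` and `putLE` are hypothetical names. For the 16 kHz, mono, 16-bit format used here, one second of audio is 16000 × 1 × 16 / 8 = 32000 bytes, so that is the value of the bytes-per-second field.

```cpp
#include <array>
#include <cstddef>
#include <cstdint>
#include <cstring>

using WavHeader = std::array<std::uint8_t, 44>;

// Hypothetical helper: store `bytes` bytes of `value` at `offset`, least significant byte first
inline void putLE(WavHeader &h, std::size_t offset, std::uint32_t value, int bytes)
{
    for (int i = 0; i < bytes; ++i)
        h[offset + i] = static_cast<std::uint8_t>(value >> (8 * i));
}

// Hypothetical helper: canonical PCM WAV header for `dataBytes` bytes of sample data
inline WavHeader makeWavHeader(std::uint32_t sampleRate, std::uint16_t channels,
                               std::uint16_t bitsPerSample, std::uint32_t dataBytes)
{
    WavHeader h{};
    const std::uint32_t blockAlign = channels * bitsPerSample / 8;   // bytes per sample frame
    std::memcpy(h.data() + 0, "RIFF", 4);
    putLE(h, 4, 36 + dataBytes, 4);             // RIFF chunk size = file size - 8
    std::memcpy(h.data() + 8, "WAVE", 4);
    std::memcpy(h.data() + 12, "fmt ", 4);
    putLE(h, 16, 16, 4);                        // "fmt " chunk size (16 for PCM)
    putLE(h, 20, 1, 2);                         // audio format 1 = PCM
    putLE(h, 22, channels, 2);
    putLE(h, 24, sampleRate, 4);
    putLE(h, 28, sampleRate * blockAlign, 4);   // bytes per second
    putLE(h, 32, blockAlign, 2);                // block align
    putLE(h, 34, bitsPerSample, 2);             // bits per sample
    std::memcpy(h.data() + 36, "data", 4);
    putLE(h, 40, dataBytes, 4);                 // data chunk size = file size - 44
    return h;
}
```

The two size fields at offsets 4 and 40 are the ones WavPcmFile::close() has to patch, because the final data size is only known when recording stops.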

The last class, RenderArea, is a widget that shows the input level as it fluctuates.
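The meter's value comes from AudioInfo::writeData(): it takes the largest absolute sample in the buffer and divides it by the format's maximum amplitude. The standalone sketch below shows that calculation for 16-bit signed little-endian samples only, which is the format this article records. `peakLevel16` is a hypothetical name, and the other sample formats handled by the Qt code are left out.

```cpp
#include <algorithm>
#include <cstddef>
#include <cstdint>
#include <cstdlib>

// Hypothetical helper: peak level in [0, 1] of 16-bit signed little-endian PCM.
// Interleaved multi-channel data works too, since every sample is examined.
inline double peakLevel16(const unsigned char *data, std::size_t len)
{
    int maxValue = 0;
    for (std::size_t i = 0; i + 1 < len; i += 2) {
        // Little-endian: low byte first; the cast reinterprets the 16 bits as a signed value
        const auto sample = static_cast<std::int16_t>(data[i] | (data[i + 1] << 8));
        maxValue = std::max(maxValue, std::abs(static_cast<int>(sample)));
    }
    // |-32768| is one more than 32767, so clamp as the Qt code does with qMin
    maxValue = std::min(maxValue, 32767);
    return static_cast<double>(maxValue) / 32767.0;
}
```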

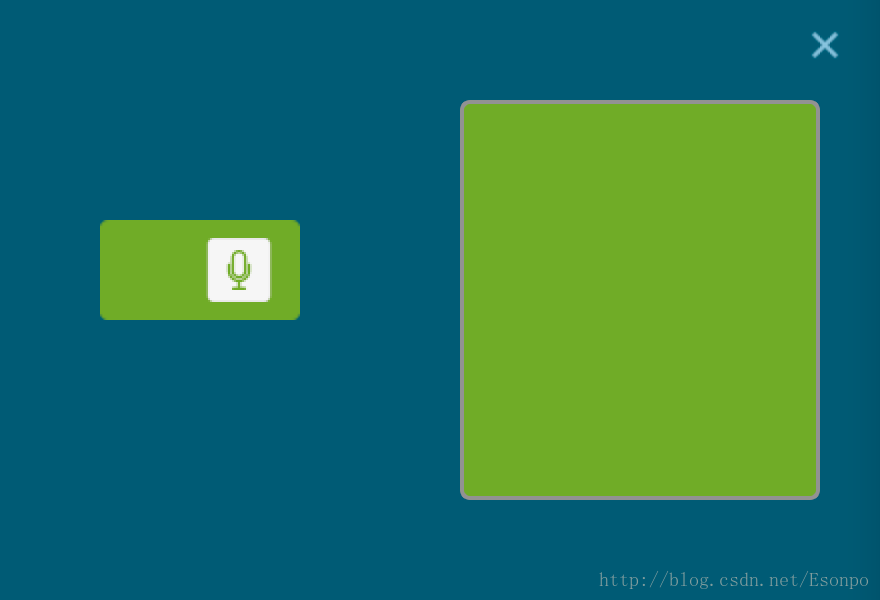

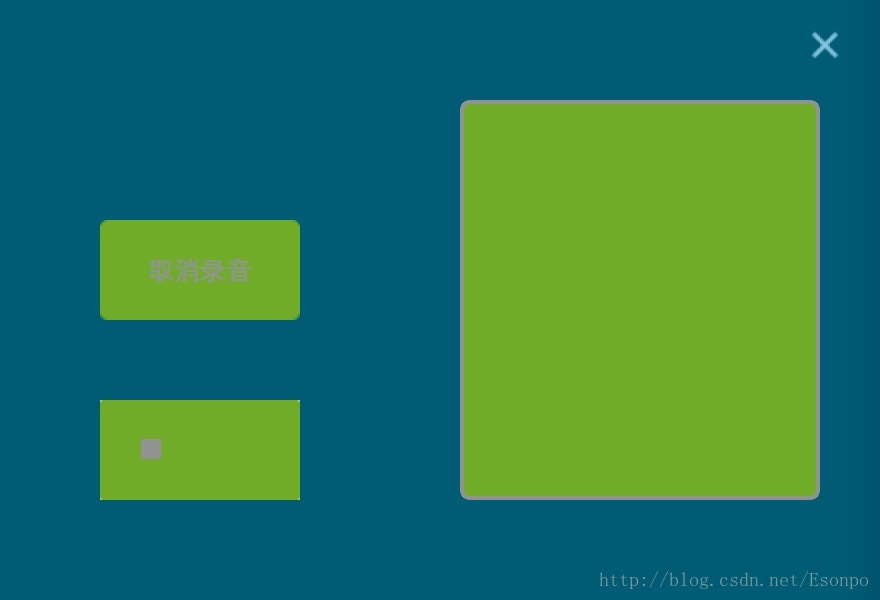

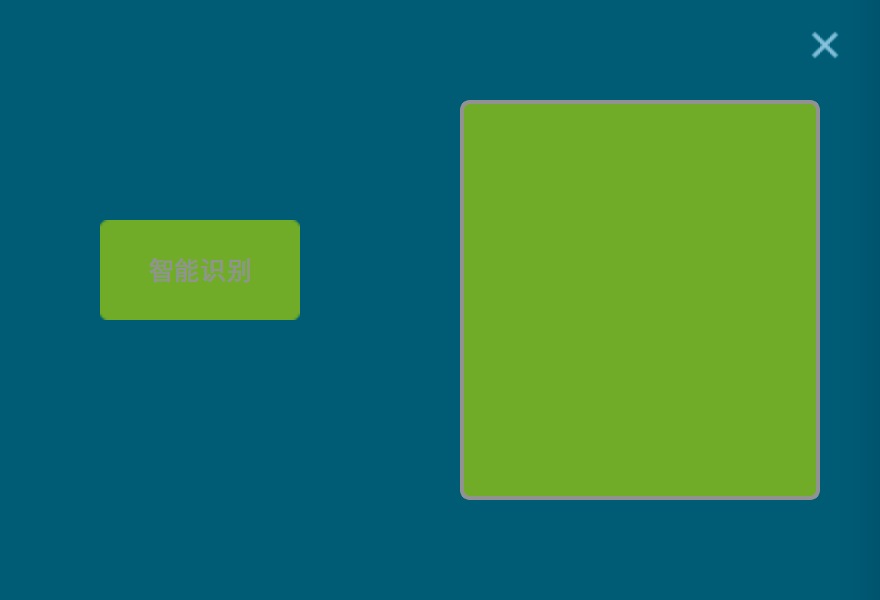

mainWidget drives the UI. It starts and stops recording, runs the FLAC encoder, and sends the result off for recognition.
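The window is frameless (Qt::FramelessWindowHint), so mousePressEvent and mouseMoveEvent implement dragging by hand. A press inside the top strip records the cursor's offset inside the window. Each subsequent move places the window at the global cursor position minus that offset. The Qt-free sketch below shows that arithmetic. `Point`, `inDragRegion` and `dragTopLeft` are hypothetical names. The limits (x ≥ 10, y ≤ 45, 40 px kept free for the close button) come from the article's code, with the right-hand limit measured from the widget's width.

```cpp
// Hypothetical minimal point type standing in for QPoint
struct Point { int x; int y; };

// True if a press at local position p should start a drag:
// inside the 45-px title strip, but left of the close-button area.
inline bool inDragRegion(Point p, int widgetWidth)
{
    return p.x >= 10 && p.y <= 45 && p.x <= widgetWidth - 40;
}

// New top-left corner while dragging:
// global cursor position minus the cursor's offset inside the window at press time.
inline Point dragTopLeft(Point globalCursor, Point pressOffset)
{
    return {globalCursor.x - pressOffset.x, globalCursor.y - pressOffset.y};
}
```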

```cpp
#include "widget.h"
#include <QApplication>

mainWidget::mainWidget(QWidget *parent)
    : QWidget(parent), curI(0), canmove(false), speechInput_AudioInput(NULL),
      flacEncoder(NULL)
{
    this->setFixedSize(440, 300);
    this->setWindowFlags(Qt::FramelessWindowHint);

    // Base directory for lib/ (flac.exe and the recordings).
    // chop(30) removes the last 30 characters of the executable's directory,
    // presumably a build-specific suffix of the author's setup; adjust it for yours.
    curPath = QApplication::applicationDirPath();
    curPath.chop(30);

    // Speech button: starts recording
    frame_Speech = new QPushButton(this);
    connect(frame_Speech, SIGNAL(clicked()), this, SLOT(pushButton_Speech_clicked()));
    frame_Speech->resize(100, 50);
    frame_Speech->move(50, 110);
    frame_Speech->setStyleSheet(
        "QPushButton {"
        "  color: grey;"
        "  image: url(:/images/speech_n.png);"
        "  image-position: right center;"
        "  border-image: url(:/images/button_default.png) 3 10 3 10;"
        "  border-top: 3px transparent; border-bottom: 3px transparent;"
        "  border-right: 10px transparent; border-left: 10px transparent;"
        "}"
        "QPushButton:pressed {"
        "  image: url(:/images/speech_p.png);"
        "  image-position: right center;"
        "  border-image: url(:/images/button_press.png) 3 10 3 10;"
        "  border-top: 3px transparent; border-bottom: 3px transparent;"
        "  border-right: 10px transparent; border-left: 10px transparent;"
        "}"
        "QPushButton:hover {"
        "  color: grey;"
        "  image: url(:/images/speech_p.png);"
        "  image-position: right center;"
        "  border-image: url(:/images/button_press.png) 3 10 3 10;"
        "  border-top: 3px transparent; border-bottom: 3px transparent;"
        "  border-right: 10px transparent; border-left: 10px transparent;"
        "}");

    // "取消录音" ("Cancel recording") button: despite its label, it stops
    // recording and starts recognition (see pushButton_SpeechCancel_clicked)
    cancel_btn = new QPushButton(this);
    connect(cancel_btn, SIGNAL(clicked()), this, SLOT(pushButton_SpeechCancel_clicked()));
    cancel_btn->resize(100, 50);
    cancel_btn->move(50, 110);
    cancel_btn->setText(QString::fromUtf8("取消录音"));
    cancel_btn->setStyleSheet(
        "QPushButton {"
        "  color: grey;"
        "  border-image: url(:/images/button_default.png) 3 10 3 10;"
        "  border-top: 3px transparent; border-bottom: 3px transparent;"
        "  border-right: 10px transparent; border-left: 10px transparent;"
        "}"
        "QPushButton:pressed {"
        "  color: grey;"
        "  border-image: url(:/images/button_press.png) 3 10 3 10;"
        "  border-top: 3px transparent; border-bottom: 3px transparent;"
        "  border-right: 10px transparent; border-left: 10px transparent;"
        "}"
        "QPushButton:hover {"
        "  color: grey;"
        "  image-position: right center;"
        "  border-image: url(:/images/button_press.png) 3 10 3 10;"
        "  border-top: 3px transparent; border-bottom: 3px transparent;"
        "  border-right: 10px transparent; border-left: 10px transparent;"
        "}");
    cancel_btn->setEnabled(false);
    cancel_btn->hide();

    // Text box that displays the recognition result
    textEdit_Input = new QTextEdit(this);
    textEdit_Input->resize(180, 200);
    textEdit_Input->move(230, 50);
    textEdit_Input->setStyleSheet(
        "QTextEdit {"
        "  border: 2px solid gray;"
        "  border-radius: 5px;"
        "  padding: 0 8px;"
        "  background: #649f12;"
        "  selection-background-color: darkgray;"
        "}"
        "QScrollBar:vertical {"
        "  border: 1px solid grey;"
        "  background: #32CC99;"
        "  width: 13px;"
        "  margin: 22px 0 22px 0;"
        "}"
        "QScrollBar::handle:vertical {"
        "  background: darkgray;"
        "  min-height: 20px;"
        "}"
        "QScrollBar::add-line:vertical {"
        "  border: 2px solid grey;"
        "  background: #32CC99;"
        "  height: 20px;"
        "  subcontrol-position: bottom;"
        "  subcontrol-origin: margin;"
        "}"
        "QScrollBar::sub-line:vertical {"
        "  border: 2px solid grey;"
        "  background: #32CC99;"
        "  height: 20px;"
        "  subcontrol-position: top;"
        "  subcontrol-origin: margin;"
        "}"
        "QScrollBar::up-arrow:vertical, QScrollBar::down-arrow:vertical {"
        "  border: 2px solid grey;"
        "  width: 3px;"
        "  height: 3px;"
        "  background: darkgray;"
        "}"
        "QScrollBar::add-page:vertical, QScrollBar::sub-page:vertical {"
        "  background: none;"
        "}");

    // "智能识别" ("Smart recognition") placeholder shown while waiting for the result
    label_Speech_Waiting = new QPushButton(this);
    //connect(label_Speech_Waiting, SIGNAL(clicked()), this, SLOT(pushButton_SpeechCancel_clicked()));
    label_Speech_Waiting->resize(100, 50);
    label_Speech_Waiting->move(50, 110);
    label_Speech_Waiting->setText(QString::fromUtf8("智能识别"));
    label_Speech_Waiting->setStyleSheet(
        "QPushButton {"
        "  color: grey;"
        "  border-image: url(:/images/button_default.png) 3 10 3 10;"
        "  border-top: 3px transparent; border-bottom: 3px transparent;"
        "  border-right: 10px transparent; border-left: 10px transparent;"
        "}"
        "QPushButton:pressed {"
        "  color: grey;"
        "  border-image: url(:/images/button_press.png) 3 10 3 10;"
        "  border-top: 3px transparent; border-bottom: 3px transparent;"
        "  border-right: 10px transparent; border-left: 10px transparent;"
        "}"
        "QPushButton:hover {"
        "  color: grey;"
        "  border-image: url(:/images/button_press.png) 3 10 3 10;"
        "  border-top: 3px transparent; border-bottom: 3px transparent;"
        "  border-right: 10px transparent; border-left: 10px transparent;"
        "}");
    label_Speech_Waiting->hide();

    // Network layer; parented so it is deleted with the widget
    protocol = new Protocol(this);
    connect(protocol, SIGNAL(Signal_SPEECH(int,QString,double)),
            this, SLOT(slotGoogleApiData(int,QString,double)));

    // Level meter shown while recording
    speechArea = new RenderArea(this);
    speechArea->resize(100, 50);
    speechArea->move(50, 200);
    speechArea->hide();

    close_btn = new QPushButton(this);
    connect(close_btn, SIGNAL(clicked()), this, SLOT(pushButton_Close_Clicked()));
    close_btn->resize(25, 25);
    close_btn->move(this->width() - 40, 10);
    close_btn->setStyleSheet(
        "QPushButton {"
        "  color: grey;"
        "  border-image: url(:/images/close_d.png) 3 10 3 10;"
        "  border-top: 3px transparent; border-bottom: 3px transparent;"
        "  border-right: 10px transparent; border-left: 10px transparent;"
        "}"
        "QPushButton:pressed {"
        "  color: grey;"
        "  border-image: url(:/images/close_h.png) 3 10 3 10;"
        "  border-top: 3px transparent; border-bottom: 3px transparent;"
        "  border-right: 10px transparent; border-left: 10px transparent;"
        "}"
        "QPushButton:hover {"
        "  color: grey;"
        "  border-image: url(:/images/close_p.png) 3 10 3 10;"
        "  border-top: 3px transparent; border-bottom: 3px transparent;"
        "  border-right: 10px transparent; border-left: 10px transparent;"
        "}");

    // Drives slotUpdate(), a demo animation of the level meter (not started)
    timer = new QTimer(this);
    connect(timer, SIGNAL(timeout()), this, SLOT(slotUpdate()));
    //timer->start(500);
}
```

```cpp
mainWidget::~mainWidget()
{
}

// Initialize speech input: 16 kHz, mono, 16-bit signed little-endian PCM
void mainWidget::initializeAudioInput()
{
    speechInput_AudioFormat.setSampleRate(16000);
    speechInput_AudioFormat.setChannelCount(1);
    speechInput_AudioFormat.setSampleSize(16);
    speechInput_AudioFormat.setSampleType(QAudioFormat::SignedInt);
    speechInput_AudioFormat.setByteOrder(QAudioFormat::LittleEndian);
    speechInput_AudioFormat.setCodec("audio/pcm");

    speechInput_AudioDeviceInfo = QAudioDeviceInfo::defaultInputDevice();
    bool isSupport = speechInput_AudioDeviceInfo.isFormatSupported(speechInput_AudioFormat);
    qDebug() << "isSupport" << isSupport << curPath;
    if (!isSupport) {
        // Fall back to the closest supported format. If its rate is not 16000,
        // the "rate=16000" declared in Protocol::Request_SPEECH no longer matches.
        speechInput_AudioFormat = speechInput_AudioDeviceInfo.nearestFormat(speechInput_AudioFormat);
    }

    // Name the recording after the current time, e.g. 20150613142530.wav
    curName = QDateTime::currentDateTime().toString("yyyyMMddhhmmss");
    curName.append(".wav");

    speechInput_AudioInfo = new AudioInfo(speechInput_AudioFormat, this, curPath + "/lib/" + curName);
    connect(speechInput_AudioInfo, SIGNAL(update()), SLOT(refreshRender()));

    createAudioInput();
}

// Create the audio input and start recording into AudioInfo
void mainWidget::createAudioInput()
{
    speechInput_AudioInput = new QAudioInput(speechInput_AudioDeviceInfo, speechInput_AudioFormat, this);
    //connect(speechInput_AudioInput, SIGNAL(stateChanged(QAudio::State)), this, SLOT(slotStateChanged(QAudio::State)));
    connect(speechInput_AudioInput, SIGNAL(notify()), this, SLOT(slotNotify()));

    speechInput_AudioInfo->start();                         // opens the WAV file and writes the header
    speechInput_AudioInput->start(speechInput_AudioInfo);   // QAudioInput now pushes PCM into writeData()
}

// Stop recording; AudioInfo::stop() finalizes the WAV header
void mainWidget::stopAudioInput()
{
    speechInput_AudioInput->stop();
    speechInput_AudioInfo->stop();
    speechInput_AudioInput->deleteLater();
    speechInput_AudioInput = NULL;
}

// Update the level meter (setLevel() already repaints)
void mainWidget::refreshRender()
{
    speechArea->setLevel(speechInput_AudioInfo->level());
}
```

```cpp
// Receives the recognition result (currently the raw JSON text) from Protocol
void mainWidget::slotGoogleApiData(int, QString str, double)
{
    if (!str.isEmpty())
        textEdit_Input->setText(str);
    label_Speech_Waiting->hide();
    label_Speech_Waiting->setEnabled(true);
    frame_Speech->show();
    speechArea->hide();
}

/*
 * QAudio::ActiveState    0  Audio data is being processed; set after start() is called
 *                           and while audio data is available to be processed.
 * QAudio::SuspendedState 1  The audio device is suspended; only entered after suspend() is called.
 * QAudio::StoppedState   2  The audio device is closed and not processing any audio data.
 * QAudio::IdleState      3  The QIODevice passed in has no data and the audio system's buffer is empty.
 */
void mainWidget::slotStateChanged(QAudio::State status)
{
    switch (status) {
    case QAudio::SuspendedState:
        break;
    case QAudio::ActiveState:
        //QTimer::singleShot(5000, this, SLOT(pushButton_SpeechCancel_clicked()));
        break;
    case QAudio::StoppedState:
        if (speechInput_AudioInput->error() != QAudio::NoError) {
            textEdit_Input->setText(QString::fromUtf8("音频设备未安装"));   // "No audio device installed"
        } else {
            //QTimer::singleShot(5000, this, SLOT(pushButton_SpeechCancel_clicked()));
        }
        break;
    default:
        break;
    }
}

// Demo animation: raise the meter by 0.1 per tick until it passes 1.0
void mainWidget::slotUpdate()
{
    curI += 0.1;
    if (curI > 1.0) {
        timer->stop();
        return;
    }
    if (speechArea != NULL)
        speechArea->setLevel(curI);
}

void mainWidget::slotNotify()
{
    // qDebug() << "slotNotify" << speechInput_AudioInput->error();
}
```

```cpp
void mainWidget::paintEvent(QPaintEvent *)
{
    QPainter painter(this);
    QPixmap pixmap = QPixmap(":/images/main_bg.png").scaled(this->size());
    painter.drawPixmap(this->rect(), pixmap);
}

// Frameless window: start a drag when the press lands in the top strip (y <= 45),
// excluding the close-button area on the right (compare with the widget's width)
void mainWidget::mousePressEvent(QMouseEvent *e)
{
    if (e->pos().x() >= 10 && e->pos().y() <= 45 && e->pos().x() <= this->width() - 40) {
        canmove = true;
        oldPos = e->pos();   // cursor offset inside the window
    } else {
        canmove = false;
    }
    e->accept();
}

void mainWidget::mouseMoveEvent(QMouseEvent *e)
{
    if (canmove)
        move(e->globalPos() - oldPos);
    e->accept();
}
```

```cpp
// flac has finished: read the FLAC data from its stdout and send the request
void mainWidget::flacEncoderFinished(int exitCode, QProcess::ExitStatus exitStatus)
{
    qDebug() << "flacEncoderFinished" << exitStatus << exitCode;
    if (exitStatus == QProcess::NormalExit) {
        QByteArray flacData = flacEncoder->readAll();
        protocol->Request_SPEECH(flacData);
    }
    //label_Speech_Waiting->hide();
    //frame_Speech->hide();
    flacEncoder->deleteLater();
    flacEncoder = NULL;
}

// Speech button: start recording
void mainWidget::pushButton_Speech_clicked()
{
    initializeAudioInput();
    textEdit_Input->setReadOnly(true);
    //label_Speech_Waiting->show();
    frame_Speech->hide();
    speechArea->show();
    cancel_btn->show();
    cancel_btn->setEnabled(true);
    //QTimer::singleShot(5000, this, SLOT(pushButton_SpeechCancel_clicked()));
}

void mainWidget::pushButton_Close_Clicked()
{
    if (speechInput_AudioInput != NULL)
        stopAudioInput();
    qApp->quit();
}

// Stop button: stop recording, then encode the WAV with flac
void mainWidget::pushButton_SpeechCancel_clicked()
{
    frame_Speech->hide();
    cancel_btn->hide();
    speechArea->hide();
    label_Speech_Waiting->show();
    label_Speech_Waiting->setEnabled(false);
    stopAudioInput();
    //label_Speech_Waiting->setText(QString::fromUtf8("识别中..."));   // "Recognizing..."

    /*
     * Equivalent command line:
     *   flac.exe -c --totally-silent -f -8 test.wav
     * -c: write the encoded stream to stdout, -f: force overwriting,
     * -8: highest compression preset
     */
#ifdef Q_OS_WIN
    QString program = "./lib/flac.exe";
#else   // Mac OS X: installed by the flac package
    QString program = "/usr/local/bin/flac";
#endif
    QStringList arguments;
    arguments << "-c" << "-f" << "-8" << curPath + "/lib/" + curName;

    flacEncoder = new QProcess(this);
    connect(flacEncoder, SIGNAL(finished(int,QProcess::ExitStatus)),
            this, SLOT(flacEncoderFinished(int,QProcess::ExitStatus)));
    flacEncoder->start(program, arguments);
    qDebug() << "arguments" << program << arguments;
}
```

Once the audio has been recorded and encoded, it is sent to the Google Speech API, which returns the recognition result.

API call example:
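The request is a plain HTTP POST whose body is the raw FLAC stream. The sketch below shows how the URL and the Content-Type header used in Protocol::Request_SPEECH fit together. `SpeechRequest` and `makeSpeechRequest` are hypothetical names. The endpoint and query parameters are copied from the article's code, and the meaning of `maxresults` (a cap on the number of hypotheses) is inferred from its name.

```cpp
#include <string>

// Hypothetical container for the two values the request needs
struct SpeechRequest {
    std::string url;
    std::string contentType;
};

// lang: recognition language (zh-CN in the article)
// maxResults: presumably the maximum number of hypotheses returned
// sampleRate: must equal the rate the WAV was recorded at, because the
//             server learns the rate only from the Content-Type header
inline SpeechRequest makeSpeechRequest(const std::string &lang, int maxResults, int sampleRate)
{
    SpeechRequest r;
    r.url = "http://www.google.com/speech-api/v1/recognize?xjerr=1&client=chromium&lang="
            + lang + "&maxresults=" + std::to_string(maxResults);
    r.contentType = "audio/x-flac; rate=" + std::to_string(sampleRate);
    return r;
}
```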

```cpp
#include "protocol.h"

Protocol::Protocol(QObject *parent)
    : QObject(parent), Nt_SPEECH(NULL)
{
}

// POST the FLAC data to the Google speech API (v1 endpoint used by Chromium)
void Protocol::Request_SPEECH(QByteArray &audioData)
{
    qDebug() << "audioData" << audioData.length();
    if (!Nt_SPEECH) {   // only one request in flight at a time
        QNetworkRequest request;
        QString speechAPI = "http://www.google.com/speech-api/v1/recognize?xjerr=1&client=chromium&lang=zh-CN&maxresults=1";
        request.setUrl(QUrl(speechAPI));
        request.setRawHeader("User-Agent", "Mozilla/5.0");
        // The rate must match the sample rate of the recording (16 kHz here)
        request.setRawHeader("Content-Type", "audio/x-flac; rate=16000");
        Nt_SPEECH = NetworkMGR.post(request, audioData);
        // finished() fires once the whole reply (or an error) has arrived;
        // readyRead() could fire with only part of the response
        connect(Nt_SPEECH, SIGNAL(finished()), this, SLOT(Read_SPEECH()));
    }
}

/*
 * Example response:
 * {
 *   "status": 0,                                  // result code
 *   "id": "c421dee91abe31d9b8457f2a80ebca91-1",   // recognition ID
 *   "hypotheses": [                               // candidate results
 *     { "utterance": "下午好",                    // recognized text ("good afternoon")
 *       "confidence": 0.2507637 },                // confidence score
 *     { "utterance": "午好",
 *       "confidence": 0.2507637 }
 *   ]
 * }
 */
void Protocol::Read_SPEECH()
{
    QString content = QString::fromUtf8(Nt_SPEECH->readAll());
    qDebug() << "content:" << content;
    emit Signal_SPEECH(0, content, 0);
    Nt_SPEECH->deleteLater();   // release the reply instead of leaking it
    Nt_SPEECH = NULL;
}
```
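slotGoogleApiData() currently puts the raw JSON into the text box. To show only the best transcript, the first `utterance` value can be extracted from a response shaped like the example in the comment above. The sketch below is a deliberately naive string search. It assumes that layout and that the utterance contains no escaped quotes. `firstUtterance` is a hypothetical name, and a real JSON parser (for example Qt 5's QJsonDocument) would be more robust.

```cpp
#include <cstddef>
#include <string>

// Hypothetical helper: first "utterance" value in the response, or "" if none
// (e.g. when nothing was recognized). Naive: no handling of escaped quotes.
inline std::string firstUtterance(const std::string &json)
{
    const std::string key = "\"utterance\"";
    std::size_t pos = json.find(key);
    if (pos == std::string::npos)
        return "";
    pos = json.find(':', pos + key.size());          // the colon after the key
    if (pos == std::string::npos)
        return "";
    const std::size_t open = json.find('"', pos + 1); // opening quote of the value
    if (open == std::string::npos)
        return "";
    const std::size_t close = json.find('"', open + 1);
    if (close == std::string::npos)
        return "";
    return json.substr(open + 1, close - open - 1);  // UTF-8 bytes are copied unchanged
}
```

In slotGoogleApiData() this could be applied as `QString::fromStdString(firstUtterance(str.toStdString()))`. In Qt 5 both conversions use UTF-8, so Chinese text survives the round trip.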

The initial interface:

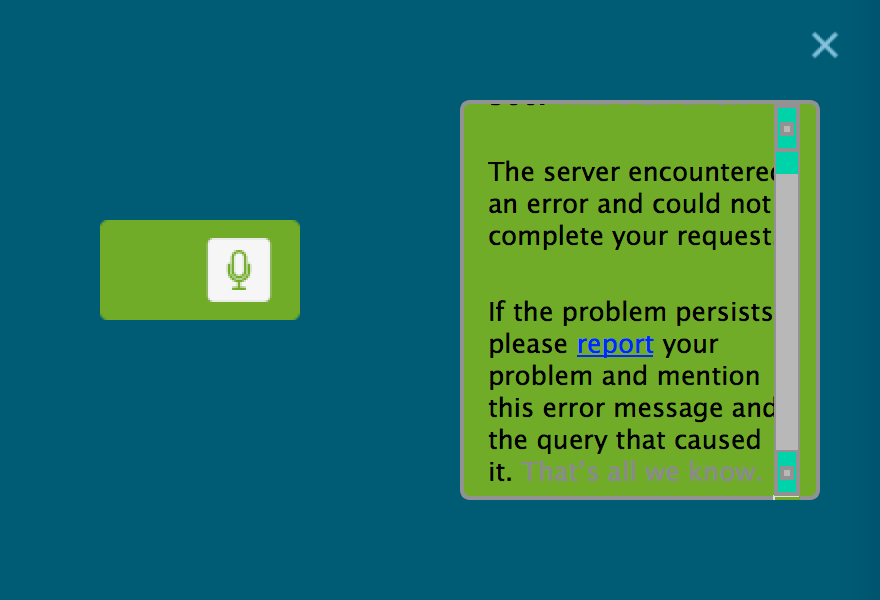

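The last screenshot shows a recording that was not recognized. With a lot of background noise, recognition is clearly unsatisfactory.

PS: This mainly draws on existing work built on the Google Speech API, with thanks. I needed it to run on both Windows 7 and Mac OS X.

Reposted from: https://www.cnblogs.com/mfrbuaa/p/4573184.html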
