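1. As a neural network is made deeper layer by layer, it runs into a degradation problem. Accuracy first rises until it saturates, and adding still more layers then makes it drop. This is not overfitting, because training accuracy and test accuracy drop together. It is commonly blamed on how hard very deep networks are to optimize, for example gradients that vanish (or explode) as they pass back through many layers. To solve this degradation problem, Kaiming He et al. proposed the residual structure. It removes the effect of training error rising as the network gets deeper, and in principle lets networks be made far deeper.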

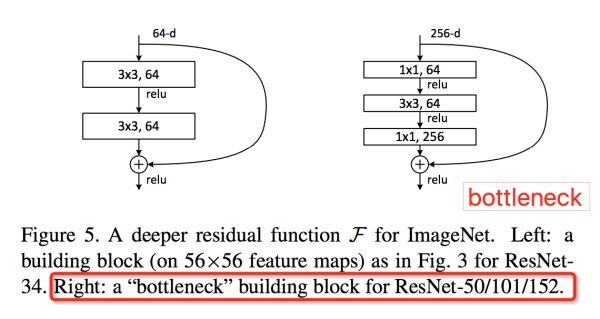
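To make the shortcut idea concrete, here is a toy scalar sketch in plain Python, not the TensorFlow code discussed below. It assumes each "layer" is just a multiplication by a weight `w`, and the helper names `plain_stack`, `residual_stack` and `numeric_grad` are hypothetical. It illustrates two points. First, a residual unit whose branch outputs zero is exactly the identity, so stacking more such units cannot make the function worse. Second, the derivative through a residual unit is `1 + w` instead of `w`, so the gradient does not shrink geometrically with depth.

```python
def plain_stack(x, w, depth):
    """Toy 'plain' network: every layer computes w * x."""
    for _ in range(depth):
        x = w * x
    return x


def residual_stack(x, w, depth):
    """Toy residual network: every unit computes x + F(x), with F(x) = w * x."""
    for _ in range(depth):
        x = x + w * x
    return x


def numeric_grad(f, x, eps=1e-6):
    """Central finite-difference estimate of df/dx."""
    return (f(x + eps) - f(x - eps)) / (2 * eps)


# A residual branch with all-zero weights leaves the input untouched.
print(residual_stack(3.5, w=0.0, depth=100))  # 3.5

# With small weights the plain stack's gradient vanishes (0.01 ** 20), while the
# residual stack keeps it close to 1 ((1 + 0.01) ** 20 is about 1.22).
print(numeric_grad(lambda x: plain_stack(x, 0.01, 20), 1.0))
print(numeric_grad(lambda x: residual_stack(x, 0.01, 20), 1.0))
```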

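2. The core of the residual network is the bottleneck structure together with the identity mapping (the shortcut). Note how the channel dimension changes: a 1×1 convolution first reduces the number of channels, a 3×3 convolution works on the reduced channels, and a final 1×1 convolution expands them again (for example 256 → 64 → 64 → 256). The unit is thin in the middle and wide at both ends, like the neck of a bottle, hence the name "bottleneck". Compared with VGG-style stacks of 3×3 convolutions, this structure has long since proven very effective: it extracts image features well while using far fewer parameters.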
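A quick parameter count shows why the bottleneck is cheap. This sketch counts convolution weights only (no biases or batch-norm parameters) and compares a 256-channel bottleneck unit (256 → 64 → 64 → 256) with a hypothetical VGG-style pair of 3×3 convolutions kept at 256 channels; the function names are illustrative.

```python
def conv_weights(kernel, c_in, c_out):
    """Number of weights in a kernel x kernel convolution (biases ignored)."""
    return kernel * kernel * c_in * c_out


def bottleneck_weights(c_in, depth, depth_bottleneck):
    """1x1 reduce -> 3x3 -> 1x1 expand, as in the bottleneck unit."""
    return (conv_weights(1, c_in, depth_bottleneck)
            + conv_weights(3, depth_bottleneck, depth_bottleneck)
            + conv_weights(1, depth_bottleneck, depth))


def two_3x3_weights(channels):
    """Two stacked 3x3 convolutions that keep `channels` channels throughout."""
    return 2 * conv_weights(3, channels, channels)


print(bottleneck_weights(256, 256, 64))  # 69632
print(two_3x3_weights(256))              # 1179648
```

For the same 256-channel input and output, the bottleneck needs about 17 times fewer weights in this comparison.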

```python
import tensorflow as tf  # TensorFlow 1.x only: tf.contrib (including slim) was removed in TF 2.x

slim = tf.contrib.slim
# `resnet_utils` refers to the module that defines Block, subsample, conv2d_same
# and stack_blocks_dense (listed below); import it from wherever that file lives.


@slim.add_arg_scope
def bottleneck(inputs, depth, depth_bottleneck, stride, rate=1,
               outputs_collections=None, scope=None):
    """Bottleneck residual unit variant with BN before convolutions.

    This is the full preactivation residual unit variant proposed in [2]. See
    Fig. 1(b) of [2] for its definition. Note that we use here the bottleneck
    variant which has an extra bottleneck layer.

    When putting together two consecutive ResNet blocks that use this unit, one
    should use stride = 2 in the last unit of the first block.

    Args:
      inputs: A tensor of size [batch, height, width, channels].
      depth: The depth of the ResNet unit output.
      depth_bottleneck: The depth of the bottleneck layers.
      stride: The ResNet unit's stride. Determines the amount of downsampling of
        the units output compared to its input.
      rate: An integer, rate for atrous convolution.
      outputs_collections: Collection to add the ResNet unit output.
      scope: Optional variable_scope.

    Returns:
      The ResNet unit's output.
    """
    # Core residual learning unit: the output tensor is the sum of a shortcut
    # (direct) branch and a residual branch computed from the input tensor.
    #   depth:               Block argument, channels of the output tensor
    #   depth_bottleneck:    Block argument, channels of the inner convolutions
    #   stride:              Block argument, downsampling stride; of the three
    #                        convolutions only the middle one uses a stride != 1
    #   outputs_collections: collection that receives the unit's output
    with tf.variable_scope(scope, 'bottleneck_v2', [inputs]) as sc:
        # Number of channels (last dimension) of the input tensor.
        depth_in = slim.utils.last_dimension(inputs.get_shape(), min_rank=4)
        # Batch-normalize and ReLU the input. Because this happens before the
        # convolutions, it is called pre-activation.
        preact = slim.batch_norm(inputs, activation_fn=tf.nn.relu, scope='preact')
        if depth == depth_in:
            # Input and output channel counts already match: only downsample the
            # input so that its height and width match the output tensor.
            shortcut = resnet_utils.subsample(inputs, stride, 'shortcut')
        else:
            # Otherwise a 1x1 convolution changes the channel count and, with the
            # same stride, makes the height and width match the output tensor.
            shortcut = slim.conv2d(preact, depth, [1, 1], stride=stride,
                                   normalizer_fn=None, activation_fn=None,
                                   scope='shortcut')

        # Residual branch: 1x1 (reduce) -> 3x3 (strided / atrous) -> 1x1 (expand).
        residual = slim.conv2d(preact, depth_bottleneck, [1, 1], stride=1,
                               scope='conv1')
        residual = resnet_utils.conv2d_same(residual, depth_bottleneck, 3, stride,
                                            rate=rate, scope='conv2')
        residual = slim.conv2d(residual, depth, [1, 1], stride=1,
                               normalizer_fn=None, activation_fn=None,
                               scope='conv3')

        output = shortcut + residual

        return slim.utils.collect_named_outputs(outputs_collections,
                                                sc.name,
                                                output)
```

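Summary: batch-normalize and activate the input (pre-activation).

Shortcut branch: adjust the input tensor so that its depth (channels), height and width match the output tensor.

Residual branch: 1×1 conv, stride 1 → 3×3 conv with the configurable stride (which is why the shortcut's height and width must be adjusted above) → 1×1 conv, stride 1.

shortcut + residual is the final output; note that this is an element-wise Add, not a concat.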
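The summary can be checked with a small shape tracer. This is a pure-Python sketch with hypothetical function names that follows the same rules as `bottleneck`: the shortcut is a subsample when the channel counts already match and a strided 1×1 projection otherwise, and only the middle 3×3 convolution is strided. It assumes every strided op produces `ceil(size / stride)` pixels, which holds for the 1×1 max-pool, the 'SAME'-padded 1×1 convolution and `conv2d_same` used here.

```python
def strided_size(size, stride):
    """Output size of the strided ops used in bottleneck: ceil(size / stride)."""
    return (size - 1) // stride + 1


def bottleneck_shapes(in_shape, depth, depth_bottleneck, stride):
    """Trace (height, width, channels) through one pre-activation bottleneck unit."""
    height, width, depth_in = in_shape
    out_h, out_w = strided_size(height, stride), strided_size(width, stride)

    # Shortcut branch: subsample keeps depth_in channels, the projection makes `depth`.
    if depth == depth_in:
        shortcut_kind, shortcut = 'subsample', (out_h, out_w, depth_in)
    else:
        shortcut_kind, shortcut = '1x1 conv', (out_h, out_w, depth)

    # Residual branch: 1x1 (stride 1) -> 3x3 (stride) -> 1x1 (stride 1).
    conv1 = (height, width, depth_bottleneck)
    conv2 = (out_h, out_w, depth_bottleneck)
    conv3 = (out_h, out_w, depth)

    # shortcut + residual is an element-wise add, so both shapes must agree.
    assert shortcut == conv3
    return shortcut_kind, [conv1, conv2, conv3]


print(bottleneck_shapes((56, 56, 64), depth=256, depth_bottleneck=64, stride=1))
# ('1x1 conv', [(56, 56, 64), (56, 56, 64), (56, 56, 256)])
print(bottleneck_shapes((56, 56, 256), depth=256, depth_bottleneck=64, stride=2))
# ('subsample', [(56, 56, 64), (28, 28, 64), (28, 28, 256)])
```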

```python
import collections


class Block(collections.namedtuple('Block', ['scope', 'unit_fn', 'args'])):
    """A named tuple describing a ResNet block.

    Its parts are:
      scope: The scope of the `Block`.
      unit_fn: The ResNet unit function which takes as input a `Tensor` and
        returns another `Tensor` with the output of the ResNet unit.
      args: A list of length equal to the number of units in the `Block`. The list
        contains one dict with the keys depth, depth_bottleneck and stride for
        each unit in the block, passed as keyword arguments to unit_fn.
    """
    # Block is built from collections.namedtuple: it only holds data and defines
    # no methods of its own. A typical Block takes three arguments:
    #   scope:   name of the Block
    #   unit_fn: the ResNet V2 residual unit function (e.g. bottleneck)
    #   args:    per-unit arguments (output depth, bottleneck depth, bottleneck stride)
```

```python
def subsample(inputs, factor, scope=None):
    """Subsamples the input along the spatial dimensions.

    Args:
      inputs: A `Tensor` of size [batch, height_in, width_in, channels].
      factor: The subsampling factor.
      scope: Optional variable_scope.

    Returns:
      output: A `Tensor` of size [batch, height_out, width_out, channels] with the
        input, either intact (if factor == 1) or subsampled (if factor > 1).
    """
    # If factor is 1, return `inputs` unchanged. Otherwise downsample with
    # slim.max_pool2d using a 1x1 pooling window and `factor` as the stride.
    #   inputs: a 4-D tensor of size [batch, height_in, width_in, channels]
    #   factor: sampling factor
    #   scope:  scope name
    #   return: the subsampled tensor
    if factor == 1:
        return inputs
    else:
        return slim.max_pool2d(inputs, [1, 1], stride=factor, scope=scope)
```
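A 1×1 pooling window contains a single value, so the max-pool above simply keeps every `factor`-th row and column. This pure-Python sketch (hypothetical `subsample_2d`, one 2-D feature map instead of a 4-D tensor) shows the same effect.

```python
def subsample_2d(grid, factor):
    """Pure-Python analogue of subsample() on a single 2-D feature map."""
    if factor == 1:
        return grid
    # 1x1 max-pooling with stride `factor` keeps every factor-th row and column.
    return [row[::factor] for row in grid[::factor]]


grid = [[10 * r + c for c in range(5)] for r in range(5)]
print(subsample_2d(grid, 2))  # [[0, 2, 4], [20, 22, 24], [40, 42, 44]]
```

A 5×5 map becomes 3×3, that is `ceil(5 / 2)` per side.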

```python
def conv2d_same(inputs, num_outputs, kernel_size, stride, rate=1, scope=None):
    """Strided 2-D convolution with 'SAME' padding.

    When stride > 1, then we do explicit zero-padding, followed by conv2d with
    'VALID' padding.

    Note that

       net = conv2d_same(inputs, num_outputs, 3, stride=stride)

    is equivalent to

       net = slim.conv2d(inputs, num_outputs, 3, stride=1, padding='SAME')
       net = subsample(net, factor=stride)

    whereas

       net = slim.conv2d(inputs, num_outputs, 3, stride=stride, padding='SAME')

    is different when the input's height or width is even, which is why we add the
    current function. For more details, see ResnetUtilsTest.testConv2DSameEven().

    Args:
      inputs: A 4-D tensor of size [batch, height_in, width_in, channels].
      num_outputs: An integer, the number of output filters.
      kernel_size: An int with the kernel_size of the filters.
      stride: An integer, the output stride.
      rate: An integer, rate for atrous convolution.
      scope: Scope.

    Returns:
      output: A 4-D tensor of size [batch, height_out, width_out, channels] with
        the convolution output.
    """
    # Convolution layer. A plain strided slim.conv2d would be simpler, but its
    # 'SAME' padding depends on whether the input size is even or odd. Padding
    # explicitly gives the same result as a stride-1 convolution followed by
    # subsample(), while only computing the outputs that are kept.
    #   inputs:      input tensor
    #   num_outputs: number of output channels
    #   kernel_size: kernel size
    #   stride:      convolution stride
    #   scope:       node name
    #   return:      output tensor
    if stride == 1:
        return slim.conv2d(inputs, num_outputs, kernel_size, stride=1, rate=rate,
                           padding='SAME', scope=scope)
    else:
        # Effective size of an atrous (dilated) kernel.
        kernel_size_effective = kernel_size + (kernel_size - 1) * (rate - 1)
        pad_total = kernel_size_effective - 1
        pad_beg = pad_total // 2
        pad_end = pad_total - pad_beg
        # Pad height and width only; any odd extra pixel goes at the end.
        inputs = tf.pad(inputs,
                        [[0, 0], [pad_beg, pad_end], [pad_beg, pad_end], [0, 0]])
        return slim.conv2d(inputs, num_outputs, kernel_size, stride=stride,
                           rate=rate, padding='VALID', scope=scope)
```
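To see why `conv2d_same` exists, this pure-Python sketch works along one spatial axis and lists which input position sits at the centre of each 3×3 window in three cases: (a) a strided convolution with TF 'SAME' padding, (b) the explicit padding used by `conv2d_same`, and (c) a stride-1 'SAME' convolution followed by subsampling. The 'SAME' rule coded here (output `ceil(in / stride)`, any odd extra padding at the end) is TensorFlow's padding rule; the helper names are hypothetical.

```python
import math


def tf_same_padding(in_size, kernel_eff, stride):
    """(pad_begin, pad_end) that TF's 'SAME' padding uses along one axis."""
    out_size = math.ceil(in_size / stride)
    pad_total = max((out_size - 1) * stride + kernel_eff - in_size, 0)
    return pad_total // 2, pad_total - pad_total // 2  # extra pixel at the end


def conv2d_same_padding(kernel_size, rate=1):
    """(pad_begin, pad_end) chosen by conv2d_same, independent of the input size."""
    kernel_eff = kernel_size + (kernel_size - 1) * (rate - 1)
    pad_total = kernel_eff - 1
    pad_beg = pad_total // 2
    return pad_beg, pad_total - pad_beg


def window_centers(in_size, kernel_eff, stride, pads):
    """Input index at the centre of each output window ('VALID' conv after padding)."""
    pad_beg, pad_end = pads
    n_out = (in_size + pad_beg + pad_end - kernel_eff) // stride + 1
    return [i * stride + (kernel_eff - 1) // 2 - pad_beg for i in range(n_out)]


for size in (4, 5):
    strided_same = window_centers(size, 3, 2, tf_same_padding(size, 3, 2))
    explicit = window_centers(size, 3, 2, conv2d_same_padding(3))
    dense_then_subsample = window_centers(size, 3, 1, tf_same_padding(size, 3, 1))[::2]
    print(size, strided_same, explicit, dense_then_subsample)
# 4 [1, 3] [0, 2] [0, 2]
# 5 [0, 2, 4] [0, 2, 4] [0, 2, 4]
```

For the even size 4, the strided 'SAME' convolution samples positions shifted by one, while `conv2d_same` matches dense-then-subsample for both sizes.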

```python
@slim.add_arg_scope
def stack_blocks_dense(net, blocks, multi_grid=None, output_stride=None,
                       outputs_collections=None):
    """Stacks ResNet `Blocks` and controls output feature density.

    First, this function creates scopes for the ResNet in the form of
    'block_name/unit_1', 'block_name/unit_2', etc.

    Second, this function allows the user to explicitly control the ResNet
    output_stride, which is the ratio of the input to output spatial resolution.
    This is useful for dense prediction tasks such as semantic segmentation or
    object detection.

    Most ResNets consist of 4 ResNet blocks and subsample the activations by a
    factor of 2 when transitioning between consecutive ResNet blocks. This results
    in a nominal ResNet output_stride equal to 8. If we set the output_stride to
    half the nominal network stride (e.g., output_stride=4), then we compute
    responses twice.

    Control of the output feature density is implemented by atrous convolution.

    Args:
      net: A `Tensor` of size [batch, height, width, channels].
      blocks: A list of length equal to the number of ResNet `Blocks`. Each
        element is a ResNet `Block` object describing the units in the `Block`.
      multi_grid: Optional list of per-unit multipliers for the atrous rate of the
        units in block4, used once output_stride has been reached. If None, a
        multiplier of 1 is used.
      output_stride: If `None`, then the output will be computed at the nominal
        network stride. If output_stride is not `None`, it specifies the requested
        ratio of input to output spatial resolution, which needs to be equal to
        the product of unit strides from the start up to some level of the ResNet.
        For example, if the ResNet employs units with strides 1, 2, 1, 3, 4, 1,
        then valid values for the output_stride are 1, 2, 6, 24 or None (which
        is equivalent to output_stride=24).
      outputs_collections: Collection to add the ResNet block outputs.

    Returns:
      net: Output tensor with stride equal to the specified output_stride.

    Raises:
      ValueError: If the target output_stride is not valid.
    """
    # Example Block:
    #   Block('block1', bottleneck,
    #         [{'depth': 256, 'depth_bottleneck': 64, 'stride': 1}] * 2
    #         + [{'depth': 256, 'depth_bottleneck': 64, 'stride': 2}])
    #   net:                 a `Tensor` of size [batch, height, width, channels]
    #   blocks:              list of the Block instances defined earlier
    #   outputs_collections: collection that gathers the end_points
    #   return:              output tensor

    # The current_stride variable keeps track of the effective stride of the
    # activations. This allows us to invoke atrous convolution whenever applying
    # the next residual unit would result in the activations having stride larger
    # than the target output_stride.
    current_stride = 1

    # The atrous convolution rate parameter.
    rate = 1

    for block in blocks:
        with tf.variable_scope(block.scope, 'block', [net]) as sc:
            for i, unit in enumerate(block.args):
                if output_stride is not None and current_stride > output_stride:
                    raise ValueError('The target output_stride cannot be reached.')

                with tf.variable_scope('unit_%d' % (i + 1), values=[net]):
                    # If we have reached the target output_stride, then we need to employ
                    # atrous convolution with stride=1 and multiply the atrous rate by the
                    # current unit's stride for use in subsequent layers.
                    if output_stride is not None and current_stride == output_stride:
                        # Only uses atrous convolutions with multi-grid rates in the last (block4) block
                        if block.scope == 'block4' and multi_grid is not None:
                            net = block.unit_fn(net, rate=rate * multi_grid[i],
                                                **dict(unit, stride=1))
                        else:
                            net = block.unit_fn(net, rate=rate, **dict(unit, stride=1))
                        rate *= unit.get('stride', 1)
                    else:
                        net = block.unit_fn(net, rate=1, **unit)
                        current_stride *= unit.get('stride', 1)

            net = slim.utils.collect_named_outputs(outputs_collections, sc.name, net)
            # collect_named_outputs returns the tensor it was given. Its purpose is
            # to attach an alias (here the block's scope name) to the tensor and add
            # the tensor to the given collections. Its implementation is roughly:
            #     if collections:
            #         append_tensor_alias(outputs, alias)
            #         ops.add_to_collections(collections, outputs)
            #     return outputs
            # Reportedly the function has since moved here:
            #     from tensorflow.contrib.layers.python.layers import utils
            #     utils.collect_named_outputs()

    if output_stride is not None and current_stride != output_stride:
        raise ValueError('The target output_stride cannot be reached.')

    return net
```
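The `current_stride` / `rate` bookkeeping can be replayed without TensorFlow. The hypothetical `plan_units` below makes the same decisions on a list of unit strides, but takes the multi-grid block name as a parameter instead of hard-coding "block4". The example uses the ResNet-50 unit strides of `resnet_v2_50` and output_stride 16. `resnet_v2` divides that by the root block's stride of 4 before calling `stack_blocks_dense`, so it appears here as 4. The example also assumes a multi_grid of (1, 2, 4).

```python
def plan_units(block_strides, output_stride=None, multi_grid=None,
               multi_grid_block='block4'):
    """Replay stack_blocks_dense's stride/rate logic.

    block_strides: list of (block_name, [unit strides]).
    Returns a list of (block_name, unit_index, applied_stride, atrous_rate).
    """
    current_stride = 1  # effective stride of the activations so far
    rate = 1            # atrous rate, grows once output_stride is reached
    plan = []
    for name, strides in block_strides:
        for i, stride in enumerate(strides):
            if output_stride is not None and current_stride > output_stride:
                raise ValueError('The target output_stride cannot be reached.')
            if output_stride is not None and current_stride == output_stride:
                # Target reached: run with stride 1 and grow the atrous rate instead.
                grid = multi_grid[i] if (multi_grid and name == multi_grid_block) else 1
                plan.append((name, i + 1, 1, rate * grid))
                rate *= stride
            else:
                plan.append((name, i + 1, stride, 1))
                current_stride *= stride
    if output_stride is not None and current_stride != output_stride:
        raise ValueError('The target output_stride cannot be reached.')
    return plan


resnet50 = [('block1', [1, 1, 2]), ('block2', [1, 1, 1, 2]),
            ('block3', [1] * 5 + [2]), ('block4', [1, 1, 1])]
plan = plan_units(resnet50, output_stride=4, multi_grid=(1, 2, 4))
print(plan[-4:])
# [('block3', 6, 1, 1), ('block4', 1, 1, 2), ('block4', 2, 1, 4), ('block4', 3, 1, 8)]
```

Block3's final stride of 2 is turned into stride 1 plus a doubled rate, and block4 then multiplies that rate by the multi-grid values.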

```python
def resnet_arg_scope(weight_decay=0.0001,     # L2 weight-decay coefficient
                     is_training=True,
                     batch_norm_decay=0.997,   # decay of the BN moving averages
                     batch_norm_epsilon=1e-5,  # BN epsilon, default 1e-5
                     batch_norm_scale=True,    # whether BN learns a scale (gamma)
                     activation_fn=tf.nn.relu,
                     use_batch_norm=True):
    """Defines the default ResNet arg scope.

    TODO(gpapan): The batch-normalization related default values above are
      appropriate for use in conjunction with the reference ResNet models
      released at https://github.com/KaimingHe/deep-residual-networks. When
      training ResNets from scratch, they might need to be tuned.

    Args:
      weight_decay: The weight decay to use for regularizing the model.
      is_training: Whether batch normalization runs in training mode.
      batch_norm_decay: The moving average decay when estimating layer activation
        statistics in batch normalization.
      batch_norm_epsilon: Small constant to prevent division by zero when
        normalizing activations by their variance in batch normalization.
      batch_norm_scale: If True, uses an explicit `gamma` multiplier to scale the
        activations in the batch normalization layer.
      activation_fn: The activation function which is used in ResNet.
      use_batch_norm: Whether or not to use batch normalization.

    Returns:
      An `arg_scope` to use for the resnet models.
    """
    # Parameter dict for batch normalization.
    batch_norm_params = {
        'decay': batch_norm_decay,  # Decay for the moving averages.
        # Small constant added to the variance so normalization never divides by zero.
        'epsilon': batch_norm_epsilon,
        'scale': batch_norm_scale,
        'updates_collections': None,
        'is_training': is_training,
        'fused': True,  # Use fused batch norm if possible.
    }
    # `updates_collections` defaults to tf.GraphKeys.UPDATE_OPS. In that case
    # slim.batch_norm only puts the ops that update the moving mean and variance
    # into that collection, and they run only if the training op depends on them
    # (e.g. via tf.control_dependencies). If they are forgotten, the moving
    # statistics are never updated and the model performs poorly at inference.
    # Setting it to None updates the moving statistics in place on every training
    # step, which is simpler but can cost some speed.
    # Either way, inference with is_training=False uses the stored moving mean and
    # variance. If you evaluate during training but forget to set is_training to
    # False, the evaluation batch is normalized with its own batch statistics (and
    # also updates the moving averages), which is not correct.

    with slim.arg_scope(
            [slim.conv2d],
            weights_regularizer=slim.l2_regularizer(weight_decay),  # L2 regularization of the weights
            weights_initializer=slim.variance_scaling_initializer(),
            activation_fn=activation_fn,
            normalizer_fn=slim.batch_norm if use_batch_norm else None,
            normalizer_params=batch_norm_params):
        with slim.arg_scope([slim.batch_norm], **batch_norm_params):
            # The following implies padding='SAME' for pool1, which makes feature
            # alignment easier for dense prediction tasks. This is also used in
            # https://github.com/facebook/fb.resnet.torch. However the accompanying
            # code of 'Deep Residual Learning for Image Recognition' uses
            # padding='VALID' for pool1. You can switch to that choice by setting
            # slim.arg_scope([slim.max_pool2d], padding='VALID').
            with slim.arg_scope([slim.max_pool2d], padding='SAME') as arg_sc:
                return arg_sc
```
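The batch-norm parameters above can be illustrated with a one-feature toy version. This is a simplified sketch, not slim's implementation: the class name is hypothetical, and it uses the plain batch variance both for normalizing and for the moving average (TensorFlow's exact variance estimator may differ). In training mode it normalizes with the batch statistics and updates the moving averages immediately, which is the behaviour `updates_collections=None` asks for; in inference mode it uses the stored moving averages.

```python
import math


class ToyBatchNorm:
    """Single-feature batch norm illustrating decay, epsilon, scale and is_training."""

    def __init__(self, decay=0.997, epsilon=1e-5, scale=True):
        self.decay, self.epsilon, self.scale = decay, epsilon, scale
        self.moving_mean, self.moving_var = 0.0, 1.0  # usual initial values
        self.gamma, self.beta = 1.0, 0.0              # learnable in a real layer

    def __call__(self, xs, is_training):
        if is_training:
            # Normalize with the statistics of this batch ...
            mean = sum(xs) / len(xs)
            var = sum((x - mean) ** 2 for x in xs) / len(xs)
            # ... and update the moving averages in place.
            self.moving_mean = self.decay * self.moving_mean + (1 - self.decay) * mean
            self.moving_var = self.decay * self.moving_var + (1 - self.decay) * var
        else:
            # Inference: use the stored moving statistics.
            mean, var = self.moving_mean, self.moving_var
        gamma = self.gamma if self.scale else 1.0
        # epsilon keeps the denominator away from zero when var is 0.
        return [gamma * (x - mean) / math.sqrt(var + self.epsilon) + self.beta
                for x in xs]


bn = ToyBatchNorm()
print(bn([1.0, 3.0], is_training=True))  # roughly [-1.0, 1.0]
print(bn.moving_mean)                    # roughly 0.006 after one step
print(bn([2.0], is_training=False))      # roughly [1.994]
# Forgetting is_training=False at test time: a one-example batch is normalized
# with its own statistics (output 0.0) and the moving averages change as well.
print(bn([2.0], is_training=True))       # [0.0]
```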

"""

网络结构主函数

:param inputs: 输入tensor

:param blocks: Block类列表

:param num_classes: 输出类别数

:param global_pool: 是否最后一层全局平均池化

:param include_root_block: 是否最前方添加7*7卷积和最大池化

:param reuse: 是否重用

:param scope: 整个网络名称

:return:

"""

def resnet_v2(inputs,

blocks,

num_classes=None,

is_training=True,

global_pool=True,

output_stride=None,

include_root_block=True,

spatial_squeeze=True,

reuse=None,

scope=None):

"""Generator for v2 (preactivation) ResNet models.

This function generates a family of ResNet v2 models. See the resnet_v2_*()

methods for specific model instantiations, obtained by selecting different

block instantiations that produce ResNets of various depths.

Training for image classification on Imagenet is usually done with [224, 224]

inputs, resulting in [7, 7] feature maps at the output of the last ResNet

block for the ResNets defined in [1] that have nominal stride equal to 32.

However, for dense prediction tasks we advise that one uses inputs with

spatial dimensions that are multiples of 32 plus 1, e.g., [321, 321]. In

this case the feature maps at the ResNet output will have spatial shape

[(height - 1) / output_stride + 1, (width - 1) / output_stride + 1]

and corners exactly aligned with the input image corners, which greatly

facilitates alignment of the features to the image. Using as input [225, 225]

images results in [8, 8] feature maps at the output of the last ResNet block.

For dense prediction tasks, the ResNet needs to run in fully-convolutional

(FCN) mode and global_pool needs to be set to False. The ResNets in [1, 2] all

have nominal stride equal to 32 and a good choice in FCN mode is to use

output_stride=16 in order to increase the density of the computed features at

small computational and memory overhead, cf. http://arxiv.org/abs/1606.00915.

Args:

inputs: A tensor of size [batch, height_in, width_in, channels].

blocks: A list of length equal to the number of ResNet blocks. Each element

is a resnet_utils.Block object describing the units in the block.

num_classes: Number of predicted classes for classification tasks.

If 0 or None, we return the features before the logit layer.

is_training: whether batch_norm layers are in training mode.

global_pool: If True, we perform global average pooling before computing the

logits. Set to True for image classification, False for dense prediction.

output_stride: If None, then the output will be computed at the nominal

network stride. If output_stride is not None, it specifies the requested

ratio of input to output spatial resolution.

include_root_block: If True, include the initial convolution followed by

max-pooling, if False excludes it. If excluded, `inputs` should be the

results of an activation-less convolution.

spatial_squeeze: if True, logits is of shape [B, C], if false logits is

of shape [B, 1, 1, C], where B is batch_size and C is number of classes.

To use this parameter, the input images must be smaller than 300x300

pixels, in which case the output logit layer does not contain spatial

information and can be removed.

reuse: whether or not the network and its variables should be reused. To be

able to reuse 'scope' must be given.

scope: Optional variable_scope.

Returns:

net: A rank-4 tensor of size [batch, height_out, width_out, channels_out].

If global_pool is False, then height_out and width_out are reduced by a

factor of output_stride compared to the respective height_in and width_in,

else both height_out and width_out equal one. If num_classes is 0 or None,

then net is the output of the last ResNet block, potentially after global

average pooling. If num_classes is a non-zero integer, net contains the

pre-softmax activations.

end_points: A dictionary from components of the network to the corresponding

activation.

Raises:

ValueError: If the target output_stride is not valid.

"""

with tf.variable_scope(scope, 'resnet_v2', [inputs], reuse=reuse) as sc:

end_points_collection = sc.original_name_scope + '_end_points'

# 字符串,用于命名collection名字

with slim.arg_scope([slim.conv2d, bottleneck,

resnet_utils.stack_blocks_dense],

outputs_collections=end_points_collection):

# 为新的收集器取名

with slim.arg_scope([slim.batch_norm], is_training=is_training):

net = inputs

if include_root_block:

if output_stride is not None:

if output_stride % 4 != 0:

raise ValueError('The output_stride needs to be a multiple of 4.')

output_stride /= 4

# We do not include batch normalization or activation functions in

# conv1 because the first ResNet unit will perform these. Cf.

# Appendix of [2].

with slim.arg_scope([slim.conv2d],

activation_fn=None, normalizer_fn=None):

net = resnet_utils.conv2d_same(net, 64, 7, stride=2, scope='conv1')

net = slim.max_pool2d(net, [3, 3], stride=2, scope='pool1')

# 至此图片缩小为1/4

net = slim.utils.collect_named_outputs(end_points_collection, 'pool2', net)

# 读取blocks数据结构,生成残差结构

net = resnet_utils.stack_blocks_dense(net, blocks, output_stride)

# This is needed because the pre-activation variant does not have batch

# normalization or activation functions in the residual unit output. See

# Appendix of [2].

net = slim.batch_norm(net, activation_fn=tf.nn.relu, scope='postnorm')

# Convert end_points_collection into a dictionary of end_points.

# 将collection转化为dict

end_points = slim.utils.convert_collection_to_dict(end_points_collection)

# 为dict添加节点

end_points['pool3'] = end_points[scope + '/block1']

end_points['pool4'] = end_points[scope + '/block2']

end_points['pool5'] = net

return net, end_points

resnet_v2.default_image_size = 224
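The docstring's size claims (224 → 7, 225 → 8, and `(height - 1) / output_stride + 1` for dense prediction) can be checked by hand. This pure-Python sketch uses a hypothetical helper and assumes every strided op in ResNet-50 v2 maps a size `n` to `(n - 1) // stride + 1`. That holds for `conv2d_same`, the 'SAME' 3×3 max-pool and the strided units. It mimics output_stride by dropping strides once the target has been reached.

```python
def strided(n, stride):
    """Spatial size after a stride-`stride` op with the padding used here."""
    return (n - 1) // stride + 1


def resnet50_v2_sizes(size, output_stride=None):
    """Feature-map height/width after each stage of resnet_v2_50 (square input)."""
    sizes = {'conv1': strided(size, 2)}
    sizes['pool1'] = strided(sizes['conv1'], 2)
    n, current = sizes['pool1'], 4  # the root block downsamples by 4
    for name, stride in [('block1', 2), ('block2', 2), ('block3', 2), ('block4', 1)]:
        if output_stride is not None and current == output_stride:
            stride = 1  # atrous convolution keeps the resolution
        n = strided(n, stride)
        current *= stride
        sizes[name] = n
    return sizes


print(resnet50_v2_sizes(224)['block4'])                    # 7
print(resnet50_v2_sizes(225)['block4'])                    # 8
print(resnet50_v2_sizes(321, output_stride=16)['block4'])  # 21, i.e. (321 - 1) // 16 + 1
```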

```python
def resnet_v2_block(scope, base_depth, num_units, stride):
    """Helper function for creating a resnet_v2 bottleneck block.

    Args:
      scope: The scope of the block.
      base_depth: The depth of the bottleneck layer for each unit.
      num_units: The number of units in the block.
      stride: The stride of the block, implemented as a stride in the last unit.
        All other units have stride=1.

    Returns:
      A resnet_v2 bottleneck block.
    """
    # Uses the Block named-tuple class.
    return resnet_utils.Block(scope, bottleneck, [{
        'depth': base_depth * 4,
        'depth_bottleneck': base_depth,
        'stride': 1
    }] * (num_units - 1) + [{
        'depth': base_depth * 4,
        'depth_bottleneck': base_depth,
        'stride': stride
    }])


def resnet_v2_50(inputs,
                 num_classes=None,
                 is_training=True,
                 global_pool=True,
                 output_stride=None,
                 spatial_squeeze=True,
                 reuse=None,
                 scope='resnet_v2_50'):
    """ResNet-50 model of [1]. See resnet_v2() for arg and return description."""
    # The four-block layout of ResNet-50; ResNet-101 and ResNet-152 can be built
    # the same way with more units per block.
    blocks = [
        resnet_v2_block('block1', base_depth=64, num_units=3, stride=2),
        resnet_v2_block('block2', base_depth=128, num_units=4, stride=2),
        resnet_v2_block('block3', base_depth=256, num_units=6, stride=2),
        resnet_v2_block('block4', base_depth=512, num_units=3, stride=1),
    ]
    return resnet_v2(inputs, blocks, num_classes, is_training=is_training,
                     global_pool=global_pool, output_stride=output_stride,
                     include_root_block=True, spatial_squeeze=spatial_squeeze,
                     reuse=reuse, scope=scope)


resnet_v2_50.default_image_size = resnet_v2.default_image_size
```
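The "50" in ResNet-50 comes from counting weight layers. This pure-Python sketch rebuilds the per-unit argument dicts the way `resnet_v2_block` does and counts 3 convolutions per bottleneck unit, plus conv1 and the final classification layer. By convention projection shortcuts are not counted, and the trimmed `resnet_v2` above does not actually build the classification layer. The unit counts for 101 and 152 are the standard ones from the original ResNet paper; reusing the block strides (2, 2, 2, 1) for them is an assumption, and all names are hypothetical.

```python
BASE_DEPTHS = (64, 128, 256, 512)
BLOCK_STRIDES = (2, 2, 2, 1)
# Units per block for the standard depths.
UNITS_PER_BLOCK = {50: (3, 4, 6, 3), 101: (3, 4, 23, 3), 152: (3, 8, 36, 3)}


def block_args(base_depth, num_units, stride):
    """Same per-unit argument dicts that resnet_v2_block builds."""
    unit = {'depth': base_depth * 4, 'depth_bottleneck': base_depth, 'stride': 1}
    return [dict(unit)] * (num_units - 1) + [dict(unit, stride=stride)]


def build_blocks(num_layers):
    """(scope, args) pairs for a ResNet of the given nominal depth."""
    specs = zip(BASE_DEPTHS, UNITS_PER_BLOCK[num_layers], BLOCK_STRIDES)
    return [('block%d' % (i + 1), block_args(d, n, s))
            for i, (d, n, s) in enumerate(specs)]


def count_weight_layers(blocks):
    """3 convs per bottleneck unit + conv1 + the final classification layer."""
    return 3 * sum(len(args) for _, args in blocks) + 2


for n in (50, 101, 152):
    print(n, count_weight_layers(build_blocks(n)))
# 50 50
# 101 101
# 152 152
```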

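Finally

That concludes this walkthrough of the TensorFlow ResNetV2 code, recently compiled by 顺利爆米花. For more TensorFlow articles, search for other articles on 靠谱客.

This content was provided by users or collected from the web as a learning reference; copyright belongs to the original authors.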
