Goal:

Use Java to scrape product information from Taobao, JD.com, and Tmall.

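Importing the jsoup package

jsoup is a Java HTML parser that can parse a URL or a string of HTML directly. It provides a convenient API for extracting and manipulating data through DOM traversal, CSS selectors, and jQuery-like methods. I use version 1.11.3.

jsoup API documentation (note that this mirror covers version 1.6.3, not the 1.11.3 release used here): https://tool.oschina.net/apidocs/apidoc?api=jsoup-1.6.3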

<dependency>
    <groupId>org.jsoup</groupId>
    <artifactId>jsoup</artifactId>
    <version>1.11.3</version>
</dependency>

Custom constants

public class UrlConst {

/** Taobao product search URL; the search keyword is appended to the end. */
public static final String PRODUCT_TAOBAO_GET = "https://odin.re.taobao.com/search_tbuad?_noSEC=true&catid=&frcatid=&ac=hU/XF8+10BsCAXGMTsIzFMOF&ip=113.140.78.194&wangwangid=&offset=&count=10&pid=430672_1006&refpid=mm_26632258_3504122_32538762&buckid=&clk1=79e0aebf83b1a06d7ab4e329dc3c96c6&elemtid=1&propertyid=&loc=&gprice=&ismall=&page=&creativeid=&feature_names=spGoldMedal%2CspIsNew%2CpromoPrice%2CfeedbackContent%2CfeedbackNick%2Ctags%2CfeedbackCount%2CdsrDescribe%2CdsrDescribeGap%2CdsrService%2CdsrServiceGap%2CdsrDeliver%2CdsrDeliverGap&reqFields=eurl%2CimgUrl%2Cismall%2CitemId%2Cloc%2Cprice%2CsellCount%2CpromoPrice%2CpromoName%2CsellerPayPostfee%2Ctitle%2CdsrDeliver%2CdsrDescribe%2CdsrService%2CdsrDescribeGap%2CdsrServiceGap%2CdsrDeliverGap%2CspGoldMedal%2Cisju%2CpriceDiscount%2CwangwangId%2Credkeys&sbid=&ua=Mozilla%2F5.0%20(Windows%20NT%2010.0%3B%20Win64%3B%20x64)%20AppleWebKit%2F537.36%20(KHTML%2C%20like%20Gecko)%20Chrome%2F79.0.3945.117%20Safari%2F537.36&pvoff=&X-Client-Scheme=https&keyword=";

/** JD.com product search URL; the search keyword is appended to the end. */
public static final String PRODUCT_JINGDONG_GET = "https://search.jd.com/Search?enc=utf-8&keyword=";

/** Tmall product search URL; the search keyword is appended to the end. */
public static final String PRODUCT_TIANMAO_GET = "https://list.tmall.com/search_product.htm?type=p&from=.list.pc_1_searchbutton&q=";

}
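All three constants end in an open `keyword=` or `q=` parameter, so the keyword is concatenated straight onto the URL. A keyword containing spaces or Chinese characters should be percent-encoded first. Below is a minimal sketch of that step using only the JDK; `SearchUrlDemo` and `buildSearchUrl` are hypothetical names of my own, and the JD.com prefix is copied from `UrlConst` so the example runs on its own.

```java
import java.io.UnsupportedEncodingException;
import java.net.URLEncoder;

final class SearchUrlDemo {

    // Copy of UrlConst.PRODUCT_JINGDONG_GET, repeated here so the sketch is self-contained.
    static final String PRODUCT_JINGDONG_GET = "https://search.jd.com/Search?enc=utf-8&keyword=";

    /** Hypothetical helper: percent-encodes the keyword as UTF-8 and appends it to the prefix. */
    static String buildSearchUrl(String prefix, String keyWord) {
        try {
            return prefix + URLEncoder.encode(keyWord, "UTF-8");
        } catch (UnsupportedEncodingException e) {
            // UTF-8 is guaranteed to exist on every JVM, so this should never happen.
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        // A space becomes '+', and non-ASCII characters become %XX sequences.
        System.out.println(buildSearchUrl(PRODUCT_JINGDONG_GET, "usb cable"));
        System.out.println(buildSearchUrl(PRODUCT_JINGDONG_GET, "手机"));
    }
}
```

`URLEncoder` produces form-style encoding (a space becomes `+`), which is what search query strings normally expect.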

Product POJO

public class Product {

    private String price; // price
    private String title; // title
    private String url;   // link to the product page
    private String photo; // image URL

    public Product() {
    }

    public Product(String price, String title, String url, String photo) {
        this.price = price;
        this.title = title;
        this.url = url;
        this.photo = photo;
    }

    public String getPhoto() {
        return photo;
    }

    public void setPhoto(String photo) {
        this.photo = photo;
    }

    public String getUrl() {
        return url;
    }

    public void setUrl(String url) {
        this.url = url;
    }

    public String getPrice() {
        return price;
    }

    public void setPrice(String price) {
        this.price = price;
    }

    public String getTitle() {
        return title;
    }

    public void setTitle(String title) {
        this.title = title;
    }

}

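Scraping utility class (returns only the first 10 products)

// Imports inferred from the class names used below; Apache HttpClient 4.x and
// Alibaba fastjson are assumptions, since the original post does not list them.
import java.net.URLEncoder;
import java.util.ArrayList;
import java.util.List;

import com.alibaba.fastjson.JSON;
import com.alibaba.fastjson.JSONArray;
import com.alibaba.fastjson.JSONObject;
import org.apache.http.Consts;
import org.apache.http.HttpEntity;
import org.apache.http.client.methods.CloseableHttpResponse;
import org.apache.http.client.methods.HttpGet;
import org.apache.http.impl.client.CloseableHttpClient;
import org.apache.http.impl.client.HttpClients;
import org.apache.http.util.EntityUtils;
import org.jsoup.Jsoup;
import org.jsoup.nodes.Document;
import org.jsoup.nodes.Element;
import org.jsoup.select.Elements;

public class UrlUtils {

    // Utility class: not meant to be instantiated.
    private UrlUtils() {}

    // One shared client, reused by every request.
    private static final CloseableHttpClient httpclient = HttpClients.createDefault();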

    /**
     * Searches Tmall and returns at most the first 10 products.
     *
     * @param keyWord product search keyword
     */
    public static List<Product> soupTmallDetail(String keyWord) {
        List<Product> list = new ArrayList<>();
        try {
            // Encode the keyword so spaces and non-ASCII characters are valid in the query string.
            String url = UrlConst.PRODUCT_TIANMAO_GET + URLEncoder.encode(keyWord, "UTF-8");
            HttpGet httpGet = new HttpGet(url);
            httpGet.setHeader("user-agent", "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.102 Safari/537.36");
            // try-with-resources closes the response and releases the connection.
            try (CloseableHttpResponse response = httpclient.execute(httpGet)) {
                if (response.getStatusLine().getStatusCode() == 200) {
                    HttpEntity entity = response.getEntity();
                    String html = EntityUtils.toString(entity, Consts.UTF_8);
                    // Parse the HTML and extract the product entries.
                    Document doc = Jsoup.parse(html);
                    Elements products = doc.select("div[class='product-iWrap']");
                    for (int i = 0; i < products.size() && i < 10; i++) {
                        Element e = products.get(i);
                        list.add(new Product(
                                e.select("p[class='productPrice']").select("em").attr("title"),
                                e.select("p[class='productTitle']").select("a").attr("title"),
                                e.select("div[class='productImg-wrap']").select("a").attr("href"),
                                e.select("div[class='productImg-wrap']").select("img").attr("src")));
                    }
                }
            }
        } catch (Exception e) {
            e.printStackTrace();
        }
        // A single return here replaces the original return inside finally,
        // which would silently discard any exception thrown in the try block.
        return list;
    }

    /**
     * Searches Taobao and returns at most the first 10 products.
     *
     * @param keyWord product search keyword
     */
    public static List<Product> soupTaoBaoDetail(String keyWord) {
        List<Product> list = new ArrayList<>();
        try {
            // Encode the keyword so spaces and non-ASCII characters are valid in the query string.
            String url = UrlConst.PRODUCT_TAOBAO_GET + URLEncoder.encode(keyWord, "UTF-8");
            HttpGet httpGet = new HttpGet(url);
            httpGet.setHeader("user-agent", "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.102 Safari/537.36");
            // try-with-resources closes the response and releases the connection.
            try (CloseableHttpResponse response = httpclient.execute(httpGet)) {
                if (response.getStatusLine().getStatusCode() == 200) {
                    HttpEntity entity = response.getEntity();
                    // This endpoint returns JSON rather than HTML.
                    String json = EntityUtils.toString(entity, Consts.UTF_8);
                    JSONObject jsonObject = JSON.parseObject(json);
                    JSONArray jsonArray = jsonObject.getJSONObject("data").getJSONArray("data1");
                    for (int i = 0; i < jsonArray.size() && i < 10; i++) {
                        JSONObject p = jsonArray.getJSONObject(i);
                        list.add(new Product(
                                p.getString("price"),
                                p.getString("title"),
                                p.getString("eurl"),
                                p.getString("imgUrl")));
                    }
                }
            }
        } catch (Exception e) {
            e.printStackTrace();
        }
        return list;
    }

    /**
     * Searches JD.com and returns at most the first 10 products.
     *
     * @param keyWord product search keyword
     */
    public static List<Product> soupJingDongDetail(String keyWord) {
        List<Product> list = new ArrayList<>();
        try {
            // Encode the keyword so spaces and non-ASCII characters are valid in the query string.
            String url = UrlConst.PRODUCT_JINGDONG_GET + URLEncoder.encode(keyWord, "UTF-8");
            HttpGet httpGet = new HttpGet(url);
            httpGet.setHeader("user-agent", "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.75 Safari/537.36");
            // try-with-resources closes the response and releases the connection.
            try (CloseableHttpResponse response = httpclient.execute(httpGet)) {
                if (response.getStatusLine().getStatusCode() == 200) {
                    HttpEntity entity = response.getEntity();
                    String html = EntityUtils.toString(entity, Consts.UTF_8);
                    // Parse the HTML and extract the product entries.
                    Document doc = Jsoup.parse(html);
                    Elements products = doc.select("div[class='gl-i-wrap']");
                    for (int i = 0; i < products.size() && i < 10; i++) {
                        Element e = products.get(i);
                        list.add(new Product(
                                e.select("div[class='p-price']").select("i").text(),
                                // text() already drops the keyword-highlight <font> tags,
                                // so the original replace() calls (which did not compile) are not needed.
                                e.select("div[class='p-name p-name-type-2']").select("em").text(),
                                e.select("div[class='p-img']").select("a").attr("href"),
                                e.select("div[class='p-img']").select("img").attr("data-lazy-img")));
                    }
                }
            }
        } catch (Exception e) {
            e.printStackTrace();
        }
        return list;
    }

}
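Each of the three methods caps its results with a hard-coded `i < 10`. If the cap needs to vary, one option is to collect everything that was parsed and trim the list afterwards. The sketch below uses only the JDK; `LimitDemo` and `firstN` are hypothetical names, not part of the original utility class.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

final class LimitDemo {

    /** Hypothetical helper: returns a new list holding at most `limit` leading elements of `items`. */
    static <T> List<T> firstN(List<T> items, int limit) {
        if (limit < 0) {
            throw new IllegalArgumentException("limit must be non-negative");
        }
        // subList is only a view, so copy it to detach the result from the source list.
        return new ArrayList<>(items.subList(0, Math.min(limit, items.size())));
    }

    public static void main(String[] args) {
        List<String> titles = Arrays.asList("a", "b", "c", "d");
        System.out.println(firstN(titles, 2));  // prints [a, b]
        System.out.println(firstN(titles, 10)); // prints [a, b, c, d]
    }
}
```

The same effect could be had by passing the limit as a method parameter and using it in the loop condition; trimming afterwards simply keeps the parsing code unchanged.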

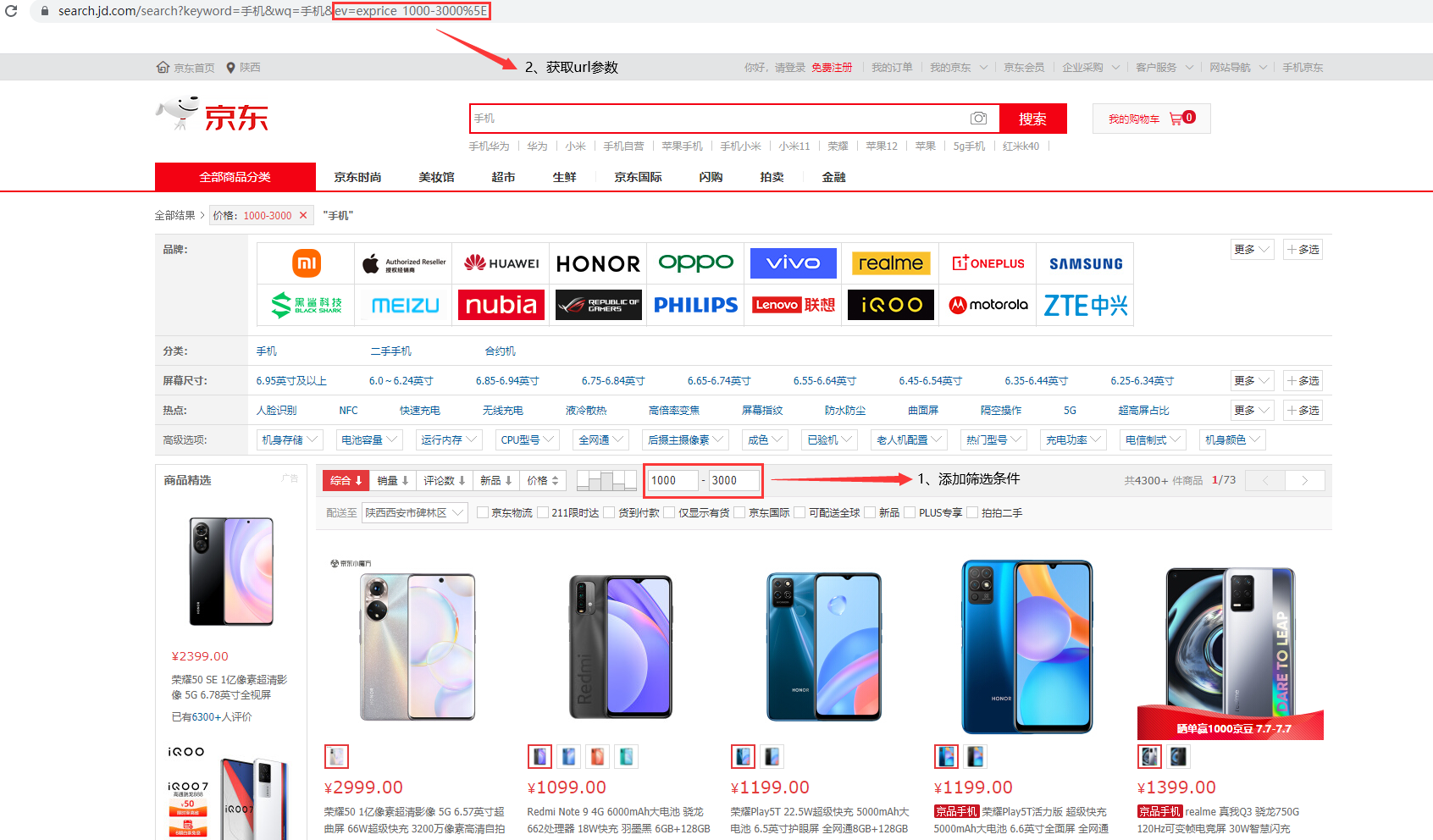

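You can change the number of results and add filter conditions as needed. For example, to restrict the JD.com results to a price range, open the JD.com search page, apply the price filter there, note which query parameters appear in the URL, and then set those parameters dynamically on the request URL.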
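As a rough sketch of setting such parameters dynamically, the helper below appends extra name/value pairs to a search URL that already has a query string. `QueryParamDemo`, `withParams`, and the parameter names `minPrice` and `maxPrice` are placeholders I made up for illustration; the real names have to be read from the site's own URL as described above.

```java
import java.io.UnsupportedEncodingException;
import java.net.URLEncoder;
import java.util.LinkedHashMap;
import java.util.Map;

final class QueryParamDemo {

    /**
     * Hypothetical helper: appends each entry as an encoded "&name=value" pair.
     * Assumes baseUrl already contains a '?' query string, as all three UrlConst URLs do.
     */
    static String withParams(String baseUrl, Map<String, String> extraParams) {
        StringBuilder sb = new StringBuilder(baseUrl);
        for (Map.Entry<String, String> e : extraParams.entrySet()) {
            sb.append('&').append(encode(e.getKey()))
              .append('=').append(encode(e.getValue()));
        }
        return sb.toString();
    }

    private static String encode(String s) {
        try {
            return URLEncoder.encode(s, "UTF-8");
        } catch (UnsupportedEncodingException ex) {
            // UTF-8 is always available on the JVM.
            throw new IllegalStateException(ex);
        }
    }

    public static void main(String[] args) {
        // LinkedHashMap keeps the parameters in insertion order.
        Map<String, String> filters = new LinkedHashMap<>();
        filters.put("minPrice", "100"); // placeholder name, not a real JD.com parameter
        filters.put("maxPrice", "500"); // placeholder name, not a real JD.com parameter
        System.out.println(withParams("https://search.jd.com/Search?enc=utf-8&keyword=phone", filters));
    }
}
```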

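Conclusion

That is everything 清秀鸭子 has recently collected on a Java crawler utility class for scraping product data from JD.com, Tmall, and Taobao. For more Java content, search the other articles on 靠谱客.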

