Scraping Detailed Product Data from JD, Taobao, and Suning with Java (Part 1)

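- Preface

- Dealing with Anti-Scraping Measures

- Request Header Configuration

- No-Proxy Mode

- Proxy Mode

- JD

- Parsing the JD HTML

- Parsing JD Products

- Tmall

- Parsing the Tmall HTML

- Suning

- Parsing the Suning HTML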

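Preface

To keep this post from running too long, scraping historical price data from JD, Taobao, and Suning is covered in the next post: https://blog.csdn.net/qq_40921561/article/details/110950930

Dealing with Anti-Scraping Measures

JD, Taobao, and Suning are fairly tolerant of crawlers. The main obstacle is that some data is loaded dynamically after the page arrives, so it is missing from the HTML the server returns and cannot be obtained by parsing that HTML alone (JD's product price is one example).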

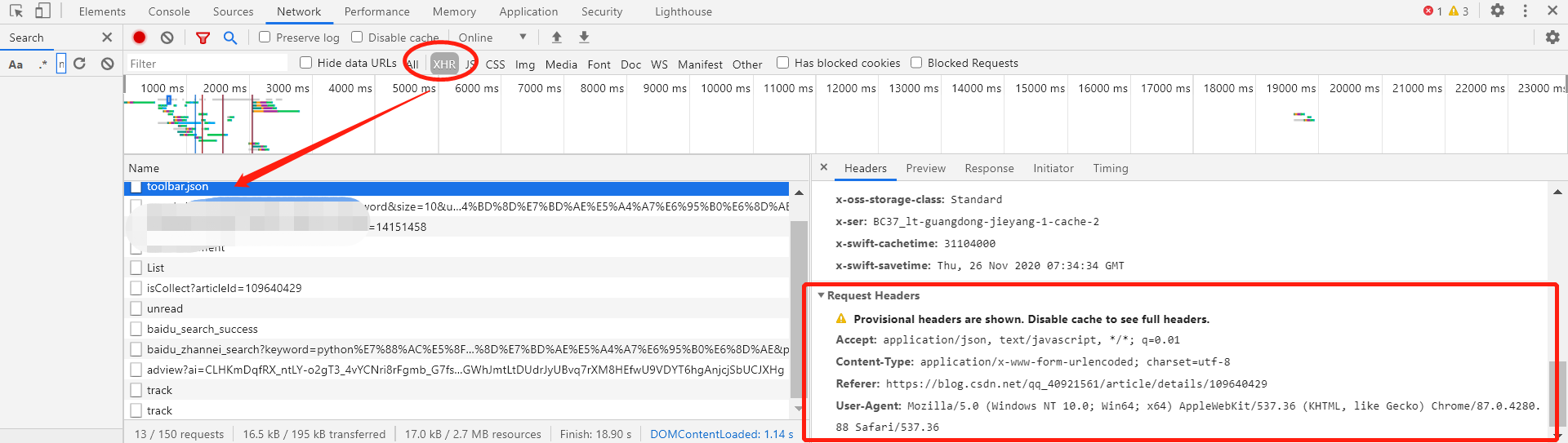

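Request Header Configuration

The request headers used here are shared across all three sites. None of the platforms validates headers strictly (Taobao does check User-Agent, and sending it to the other sites does no harm).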
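
As a small illustration of the shared header setup, the sketch below builds the kind of map that the fetch helpers in this post read from. The keys ("accept", "encoding", "language", "host", "referer") are the ones those helpers look up; the class name, the method name, and the header values are assumptions chosen for the example.

```java
import java.util.HashMap;
import java.util.Map;

class RequestHeaders {
    // Builds a header map for one site. The keys match those read by getHtml;
    // the values are illustrative assumptions, not required settings.
    static Map<String, Object> forHost(String host, String referer) {
        Map<String, Object> headers = new HashMap<>();
        headers.put("accept", "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8");
        headers.put("encoding", "gzip, deflate");
        headers.put("language", "zh-CN,zh;q=0.9");
        headers.put("host", host);
        if (referer != null) {
            headers.put("referer", referer); // optional: only sent when provided
        }
        return headers;
    }

    public static void main(String[] args) {
        Map<String, Object> headers = forHost("search.jd.com", "https://www.jd.com/");
        System.out.println(headers);
    }
}
```

Keeping the map construction in one place means each site only has to supply its own host and referer.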

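Also, always set timeouts. Without them a request can hang indefinitely with no feedback, which makes the problem hard to locate, and stalled requests that pile up and get retried can turn into a burst of traffic that gets your IP blocked.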
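
One way to combine timeouts with polite pacing is to retry a failed fetch a limited number of times with a pause in between. The sketch below is an illustration under assumptions, not the author's code: `fetchWithRetry` and its parameters are hypothetical, and the `Supplier` stands in for any fetch call that returns null on failure (as the getHtml helpers in this post do).

```java
import java.util.function.Supplier;

class RetryingFetch {
    // Calls fetch up to maxAttempts times, sleeping pauseMillis between attempts.
    // Returns the first non-null result, or null if every attempt fails.
    static String fetchWithRetry(Supplier<String> fetch, int maxAttempts, long pauseMillis) {
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            String html = fetch.get();
            if (html != null) {
                return html;
            }
            if (attempt < maxAttempts) {
                try {
                    Thread.sleep(pauseMillis);
                } catch (InterruptedException e) {
                    // Preserve the interrupt flag and stop retrying
                    Thread.currentThread().interrupt();
                    return null;
                }
            }
        }
        return null;
    }

    public static void main(String[] args) {
        int[] calls = {0};
        // Stand-in fetch that fails twice before succeeding
        String html = fetchWithRetry(() -> ++calls[0] < 3 ? null : "<html></html>", 5, 100);
        System.out.println(html + " after " + calls[0] + " attempts");
    }
}
```

The pause between attempts is what keeps retries from arriving as a burst; the attempt limit keeps a dead URL from being requested forever.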

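To get a User-Agent value, open any web page, press F12, and copy the User-Agent from the request headers of any request.

For large-scale scraping a proxy pool is essential. Requests are routed through the proxies in the pool, so the target site sees a proxy's IP instead of yours, which raises the volume you can scrape before being limited. The pool can be a cloud proxy service or a locally maintained one; for personal or small projects a local pool is enough. The author recommends 快刺代理 (search Google or Baidu for details; they are not covered here).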
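
To make the idea of a local proxy pool concrete, here is a minimal round-robin sketch. It is an assumption-based illustration rather than the setup the author used: the class `LocalProxyPool`, its method `nextProxy`, and the "ip:port" entry format are all hypothetical, and the addresses come from a documentation-only range.

```java
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

class LocalProxyPool {
    private final List<String> proxies;                     // entries of the form "ip:port"
    private final AtomicInteger next = new AtomicInteger(); // shared cursor, safe across threads

    LocalProxyPool(List<String> proxies) {
        if (proxies.isEmpty()) {
            throw new IllegalArgumentException("proxy list must not be empty");
        }
        this.proxies = proxies;
    }

    // Returns {ip, port} of the next proxy, cycling through the list.
    String[] nextProxy() {
        int index = Math.floorMod(next.getAndIncrement(), proxies.size());
        return proxies.get(index).split(":");
    }

    public static void main(String[] args) {
        // Placeholder addresses from the documentation-only range 192.0.2.0/24
        LocalProxyPool pool = new LocalProxyPool(Arrays.asList("192.0.2.10:8080", "192.0.2.11:3128"));
        for (int i = 0; i < 3; i++) {
            String[] proxy = pool.nextProxy();
            System.out.println(proxy[0] + ":" + proxy[1]);
        }
    }
}
```

The two strings returned by `nextProxy` line up with the `ip` and `port` parameters of the proxy-mode fetch method in this post.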

The utility methods below request a URL and return the page's HTML.

No-Proxy Mode

import java.io.IOException;
import java.util.Map;
import org.apache.http.client.config.RequestConfig;
import org.apache.http.client.methods.CloseableHttpResponse;
import org.apache.http.client.methods.HttpGet;
import org.apache.http.impl.client.CloseableHttpClient;
import org.apache.http.impl.client.HttpClients;
import org.apache.http.util.EntityUtils;

/**
 * @Description: getHtml fetches the HTML of a page
 * @param url       page address
 * @param headerMap header values keyed by "accept", "encoding", "language", "host", "referer"
 * @return the response body, or null if the request fails or the status is not 200
 */
public static String getHtml(String url, Map<String, Object> headerMap)
{
    String entity = null;
    // Set connect and socket timeouts (milliseconds)
    RequestConfig config = RequestConfig.custom().setConnectTimeout(3000).
            setSocketTimeout(3000).build();
    HttpGet httpGet = new HttpGet(url);
    httpGet.setConfig(config);
    // Optional headers: each one is set only if the map supplies it
    if (null != headerMap.get("accept"))
    {
        httpGet.setHeader("Accept", headerMap.get("accept").toString());
    }
    if (null != headerMap.get("encoding"))
    {
        httpGet.setHeader("Accept-Encoding", headerMap.get("encoding").toString());
    }
    if (null != headerMap.get("language"))
    {
        httpGet.setHeader("Accept-Language", headerMap.get("language").toString());
    }
    if (null != headerMap.get("host"))
    {
        httpGet.setHeader("Host", headerMap.get("host").toString());
    }
    if (null != headerMap.get("referer"))
    {
        httpGet.setHeader("Referer", headerMap.get("referer").toString());
    }
    // Fixed headers
    httpGet.setHeader("Connection", "keep-alive");
    httpGet.setHeader("User-Agent", "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/87.0.4280.66 Safari/537.36");
    // try-with-resources closes the client and the response even when the request fails
    try (CloseableHttpClient httpClient = HttpClients.createDefault();
         CloseableHttpResponse httpResponse = httpClient.execute(httpGet)) {
        // Read the body only when the server answers 200
        if (httpResponse.getStatusLine().getStatusCode() == 200) {
            entity = EntityUtils.toString(httpResponse.getEntity(), "utf-8");
        }
    } catch (IOException e) {
        e.printStackTrace();
    }
    return entity;
}

Proxy Mode

import org.apache.http.HttpHost;
// Assumed to be log4j 1.x, whose Logger.getLogger accepts a Class
import org.apache.log4j.Logger;

private static Logger logger = Logger.getLogger(HttpClientUtils.class);

/**
 * @Description: getHtml fetches the HTML of a page through a proxy
 * @param url       page address
 * @param ip        proxy IP address
 * @param port      proxy port
 * @param headerMap header values keyed by "accept", "encoding", "language", "host", "referer"
 * @return the response body, or null if the request fails or the status is not 200
 */
public static String getHtml(String url, String ip, String port, Map<String, Object> headerMap)
{
    String entity = null;
    logger.info(">>>>>>>>> thread " + Thread.currentThread().getName() + " is scraping through proxy "
            + ip + ":" + port);
    HttpHost proxy = new HttpHost(ip, Integer.parseInt(port));
    // Route the request through the proxy and set timeouts (milliseconds)
    RequestConfig config = RequestConfig.custom().setProxy(proxy).setConnectTimeout(3000).
            setSocketTimeout(3000).build();
    HttpGet httpGet = new HttpGet(url);
    httpGet.setConfig(config);
    // Optional headers: each one is set only if the map supplies it
    if (null != headerMap.get("accept"))
    {
        httpGet.setHeader("Accept", headerMap.get("accept").toString());
    }
    if (null != headerMap.get("encoding"))
    {
        httpGet.setHeader("Accept-Encoding", headerMap.get("encoding").toString());
    }
    if (null != headerMap.get("language"))
    {
        httpGet.setHeader("Accept-Language", headerMap.get("language").toString());
    }
    if (null != headerMap.get("host"))
    {
        httpGet.setHeader("Host", headerMap.get("host").toString());
    }
    if (null != headerMap.get("referer"))
    {
        httpGet.setHeader("Referer", headerMap.get("referer").toString());
    }
    // Fixed headers
    httpGet.setHeader("Connection", "keep-alive");
    httpGet.setHeader("User-Agent", "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/87.0.4280.66 Safari/537.36");
    // try-with-resources closes the client and the response even when the request fails
    try (CloseableHttpClient httpClient = HttpClients.createDefault();
         CloseableHttpResponse httpResponse = httpClient.execute(httpGet)) {
        // Read the body only when the server answers 200
        if (httpResponse.getStatusLine().getStatusCode() == 200) {
            entity = EntityUtils.toString(httpResponse.getEntity(), "utf-8");
        }
    } catch (IOException e) {
        // A dead or slow proxy ends up here; log it so the proxy can be identified
        logger.warn("Request through proxy " + ip + ":" + port + " failed", e);
        entity = null;
    }
    return entity;
}

Fetching prices (highest and lowest) and historical data is covered in detail in the next post: https://blog.csdn.net/qq_40921561/article/details/110950930

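JD

The VO classes are not described in detail. The examples here all scrape without a proxy (for real runs, add a proxy pool). To speed up scraping, multithreading is recommended; for demonstration, the page-parsing code uses one thread per product to parse the products found on each result page.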
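
As one possible way to follow the multithreading advice without starting a raw thread per product, a fixed-size thread pool can cap the number of concurrent requests. This is a sketch under assumptions, not the approach used in the code of this post: `parseAll` is a hypothetical helper, and the `Function` argument stands in for whatever per-product work (fetching and parsing a detail page) is needed.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.function.Function;

class PooledParser {
    // Applies parseOne to every id on at most poolSize threads
    // and returns the results in the same order as the input.
    static <T> List<T> parseAll(List<String> ids, Function<String, T> parseOne, int poolSize)
            throws InterruptedException, ExecutionException {
        ExecutorService pool = Executors.newFixedThreadPool(poolSize);
        try {
            List<Callable<T>> tasks = new ArrayList<>();
            for (String id : ids) {
                tasks.add(() -> parseOne.apply(id));
            }
            List<T> results = new ArrayList<>();
            // invokeAll blocks until every task has completed
            for (Future<T> future : pool.invokeAll(tasks)) {
                results.add(future.get());
            }
            return results;
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) throws Exception {
        // Stand-in work: turn each product id into its detail-page URL
        List<String> urls = parseAll(Arrays.asList("100", "200", "300"),
                id -> "https://item.jd.com/" + id + ".html", 2);
        System.out.println(urls);
    }
}
```

Because each task returns its own result, no shared list has to be guarded, and the pool size bounds how many requests hit the site at once.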

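Parsing the JD HTML

import java.io.IOException;
import java.net.URLEncoder;
import java.util.LinkedList;
import java.util.List;

/**
 * @Description: JDItemDetail scrapes JD product information
 * @param name name of the product to search for
 * @return the products parsed from the first 10 result pages
 */
private List<ItemDetailsVO> JDItemDetail(String name){
    List<ItemDetailsVO> itemDetailsVOs = new LinkedList<ItemDetailsVO>();
    // Walk the paginated search results; take the first 10 pages
    for (int i = 1; i <= 10 ; i++) {
        try
        {
            // JD's page parameter takes the values 1, 3, 5, ...
            // for result pages 1, 2, 3, ...
            // The keyword is URL-encoded so that non-ASCII names and spaces are sent correctly
            String url="https://search.jd.com/Search?keyword=" + URLEncoder.encode(name, "UTF-8") + "&enc=utf-8&page="+(2*i-1);
            String html = HttpClientUtils.doGet(url);
            parseJDIndex(itemDetailsVOs, html);
        } catch (IOException e)
        {
            throw ExceptionFactory.iOStremException();
        }
    }
    return itemDetailsVOs;
}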
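
The page-number rule used above is easy to get wrong, so here it is isolated as a small helper. This is a sketch: the class `JdSearchUrl` and the method `build` are hypothetical names, while the URL pattern and the 2n-1 mapping are taken from the code above.

```java
import java.io.UnsupportedEncodingException;
import java.net.URLEncoder;

class JdSearchUrl {
    // Builds the search URL for the given 1-based result page.
    // JD's page parameter is odd-numbered: result page n maps to page=2n-1.
    static String build(String keyword, int resultPage) throws UnsupportedEncodingException {
        // Non-ASCII keywords become percent-escaped UTF-8; spaces become '+'
        String encoded = URLEncoder.encode(keyword, "UTF-8");
        return "https://search.jd.com/Search?keyword=" + encoded + "&enc=utf-8&page=" + (2 * resultPage - 1);
    }

    public static void main(String[] args) throws UnsupportedEncodingException {
        // prints https://search.jd.com/Search?keyword=gaming+laptop&enc=utf-8&page=3
        System.out.println(build("gaming laptop", 2));
    }
}
```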

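Parsing JD Products

For readers new to Java: the CountDownLatch here is essential. The worker threads run asynchronously, so without something to wait on, the calling method returns before the threads have finished and the result list comes back empty or incomplete. The latch makes the caller block until every worker has counted down.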
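
The waiting pattern can be seen in isolation in the following minimal sketch. It makes a few assumptions of its own: `LatchDemo` and `collect` are hypothetical names, and the string concatenation stands in for fetching and parsing a product page.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.CountDownLatch;

class LatchDemo {
    // Starts one thread per id, waits for all of them, and returns the collected results.
    static List<String> collect(List<String> ids) throws InterruptedException {
        List<String> results = new ArrayList<>();
        // One count per worker, so await() returns only after all have finished
        CountDownLatch done = new CountDownLatch(ids.size());
        for (String id : ids) {
            new Thread(() -> {
                try {
                    String parsed = "item-" + id;   // stand-in for fetching and parsing a page
                    synchronized (results) {        // ArrayList is not thread-safe
                        results.add(parsed);
                    }
                } finally {
                    done.countDown();               // always count down, even if the work throws
                }
            }).start();
        }
        done.await();                               // block until every worker has counted down
        return results;
    }

    public static void main(String[] args) throws InterruptedException {
        // prints 3
        System.out.println(collect(Arrays.asList("1", "2", "3")).size());
    }
}
```

Two details matter in practice: the latch count must equal the number of threads actually started, and `countDown()` belongs in a `finally` block so that a failing worker cannot leave the caller waiting forever.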

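import java.util.concurrent.CountDownLatch;
import org.jsoup.Jsoup;
import org.jsoup.nodes.Document;
import org.jsoup.nodes.Element;
import org.jsoup.select.Elements;

/**
 * @Description: parseJDIndex parses a JD search result page
 * @param itemDetailsVOs list that receives the parsed products
 * @param html HTML of the search result page
 * @throws IOException
 */
private static void parseJDIndex(List<ItemDetailsVO> itemDetailsVOs, String html) throws IOException{
    Document document = Jsoup.parse(html);
    // Product list
    Elements elements = document.select("#J_goodsList>ul>li");
    // For a quick test only the first three items of each page are taken.
    // To take every item, use elements.size() as the count.
    int count = Math.min(3, elements.size());
    // One count per worker thread, so await() returns once all of them finish
    CountDownLatch jdThreadSignal = new CountDownLatch(count);
    for (int i=0;i<count;i++) {
        // Each li used to expose its product id as data-pid;
        // JD has since changed it to data-sku
        Element element = elements.get(i);
        new Thread( new Runnable() {
            @Override
            public void run() {
                try
                {
                    String pid = element.attr("data-sku");
                    ItemDetailsVO itemDetailsVO = parsePid(pid);
                    // The list is shared between threads, so guard the add
                    synchronized (itemDetailsVOs) {
                        itemDetailsVOs.add(itemDetailsVO);
                    }
                } catch (IOException e)
                {
                    throw ExceptionFactory.iOStremException();
                } finally
                {
                    // Count down even on failure, otherwise await() blocks forever
                    jdThreadSignal.countDown();
                }
            }
        }).start();
    }
    try
    {
        jdThreadSignal.await();
    } catch (InterruptedException e)
    {
        throw ExceptionFactory.threadCountException();
    }
}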

/**
 * @Description: parsePid fetches the detail data of one product
 * @param pid product id
 * @return the populated ItemDetailsVO
 */
private static ItemDetailsVO parsePid(String pid) throws IOException {
    // Build the URL of the product detail page
    String itemUrl = "https://item.jd.com/" + pid + ".html";
    String productHtml = HttpClientUtils.doGet(itemUrl);
    Document document = Jsoup.parse(productHtml);
    ItemDetailsVO itemDetailsVO = new ItemDetailsVO();
    itemDetailsVO.setImgLogo("../img/jdLogo.png");
    // Product title
    if(document.select("div.sku-name").size()>0){
        itemDetailsVO.setTitle(document.select("div.sku-name").get(0).text());
    }
    // Product brand
    itemDetailsVO.setBrand(document.select("#parameter-brand li").attr("title"));
    // Product name
    itemDetailsVO.setItemName(document.select("[class=parameter2 p-parameter-list] li:first-child").attr("title"));
    // Store name
    itemDetailsVO.setStoreName(document.select("div.name a").attr("title"));
    // Store type
    itemDetailsVO.setStoreType(document.select("[class=name goodshop EDropdown] em").text());
    // Image
    itemDetailsVO.setImgUrl(document.select("#spec-img").attr("data-origin"));
    // Product link
    itemDetailsVO.setItemUrl(itemUrl);
    // Scraping the comment count is broken on JD for now, so placeholder values are used
    itemDetailsVO.setCommentNum("1000+");
    itemDetailsVO.setSales("100+");
    // Product price
    parsePrice(itemDetailsVO, itemUrl);
    itemDetailsVO.setPid(pid);
    return itemDetailsVO;
}

Tmall

The page request works the same way as above; only the URL differs:

String name = "";

String url = "https://list.tmall.com/search_product.htm?q=" + name;

Parsing the Tmall HTML

/**
 * @Description: parseTMIndex parses a Tmall search result page
 * @param itemDetailsVOs list that receives the parsed products
 * @param html HTML of the search result page
 */
private static void parseTMIndex(List<ItemDetailsVO> itemDetailsVOs, String html) {
    Document document = Jsoup.parse(html);
    Elements ulList = document.select("div[class='view grid-nosku']");
    Elements liList = ulList.select("div[class='product']");
    // For a quick test only the first three items of each page are taken.
    // To take every item, use liList.size() as the count.
    int count = Math.min(3, liList.size());
    // One count per worker thread, so await() returns once all of them finish
    CountDownLatch tmThreadSignal = new CountDownLatch(count);
    for (int i=0;i<count;i++) {
        Element item = liList.get(i);
        new Thread(new Runnable()
        {
            @Override
            public void run()
            {
                try
                {
                    ItemDetailsVO itemDetailsVO = new ItemDetailsVO();
                    itemDetailsVO.setImgLogo("../img/tmLogo.png");
                    // Product ID
                    String id = item.select("div[class='product']").select("p[class='productStatus']").select("span[class='ww-light ww-small m_wangwang J_WangWang']").attr("data-item");
                    itemDetailsVO.setPid(id);
                    // Product title
                    String name = item.select("p[class='productTitle']").select("a").attr("title");
                    itemDetailsVO.setTitle(name);
                    // Item name and brand: drop a leading 【...】 tag and keep the first space-separated token
                    name = name.substring(name.indexOf("】")+1).split(" ")[0];
                    if (name.contains("/")) {
                        name = name.substring(0, name.indexOf("/"));
                    } else if (name.length()>6) {
                        // Truncate long names at the first switch between
                        // wide (code > 255) and narrow characters
                        boolean startsWide = name.charAt(0)>255;
                        for (int j = 1; j < name.length(); j++)
                        {
                            if ((name.charAt(j)>255) != startsWide)
                            {
                                name = name.substring(0, j);
                                break;
                            }
                        }
                    }
                    itemDetailsVO.setBrand(name);
                    itemDetailsVO.setItemName(name);
                    // Product price
                    itemDetailsVO.setPrice(item.select("p[class='productPrice']").select("em").attr("title"));
                    // Product URL
                    itemDetailsVO.setItemUrl("https://detail.tmall.com/item.htm?id="+id);
                    Elements spanList = item.select("p[class='productStatus']").select("span");
                    // Sales volume
                    itemDetailsVO.setSales(spanList.get(0).select("em").text());
                    // Number of reviews
                    itemDetailsVO.setCommentNum(spanList.get(1).select("a").text());
                    // Store name
                    itemDetailsVO.setStoreName(spanList.get(2).attr("data-nick"));
                    // Product image URL
                    itemDetailsVO.setImgUrl(item.select("div[class='productImg-wrap']").select("a").select("img").attr("src"));
                    parsePrice(itemDetailsVO, "https://detail.tmall.com/item.htm?id="+id);
                    // The list is shared between threads, so guard the add
                    synchronized (itemDetailsVOs) {
                        itemDetailsVOs.add(itemDetailsVO);
                    }
                } catch (ParseException e)
                {
                    throw ExceptionFactory.parseException();
                } catch (IOException e)
                {
                    throw ExceptionFactory.iOStremException();
                } finally
                {
                    // Count down even on failure, otherwise await() blocks forever
                    tmThreadSignal.countDown();
                }
            }
        }).start();
    }
    try
    {
        tmThreadSignal.await();
    } catch (InterruptedException e)
    {
        throw ExceptionFactory.threadCountException();
    }
}
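
The brand and item-name rule inside parseTMIndex is dense, so the sketch below restates it as a standalone function that can be tried on sample titles. The class `TmallBrand`, the method `extract`, and the sample titles are hypothetical; the rules themselves (drop a leading 【...】 tag, keep the first token, cut at "/" or at the first change between wide and narrow characters) follow the code above.

```java
class TmallBrand {
    // Derives a short brand/name token from a listing title.
    static String extract(String title) {
        // Drop a leading 【...】 tag and keep the first space-separated token
        String name = title.substring(title.indexOf("】") + 1).split(" ")[0];
        if (name.contains("/")) {
            // "Brand/Alias" style: keep the part before the slash
            return name.substring(0, name.indexOf("/"));
        }
        if (name.length() > 6) {
            // Cut at the first switch between wide (code > 255) and narrow characters
            boolean startsWide = name.charAt(0) > 255;
            for (int j = 1; j < name.length(); j++) {
                if ((name.charAt(j) > 255) != startsWide) {
                    return name.substring(0, j);
                }
            }
        }
        return name;
    }

    public static void main(String[] args) {
        System.out.println(extract("【新品】华为HUAWEI Mate40"));
        System.out.println(extract("Apple/苹果 iPhone 12"));
    }
}
```

Titles on the live site vary a lot, so a heuristic like this is best checked against a handful of real listings before relying on it.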

Suning

The Suning search URL is:

String url = "https://search.suning.com/"+name+"/";

Parsing the Suning HTML

/**
 * @Description: parseSNIndex parses a Suning search result page
 * @param itemDetailsVOs list that receives the parsed products
 * @param html HTML of the search result page
 */
private static void parseSNIndex(List<ItemDetailsVO> itemDetailsVOs, String html) {
    Document document = Jsoup.parse(html);
    Elements liElements = document.select("div[class='product-list clearfix']").select("ul").select("li");
    // Only the first three items are taken, and never more than the page contains
    int count = Math.min(3, liElements.size());
    // One count per worker thread, so await() returns once all of them finish
    CountDownLatch snThreadSignal = new CountDownLatch(count);
    for (int i=0;i<count;i++) {
        Element element = liElements.get(i);
        new Thread(new Runnable()
        {
            @Override
            public void run()
            {
                try
                {
                    ItemDetailsVO itemDetailsVO = new ItemDetailsVO();
                    itemDetailsVO.setImgLogo("../img/snLogo.png");
                    Elements productBox = element.select("div.item-bg").select("div.product-box");
                    Elements resInfo = productBox.select("div.res-info");
                    Element elementMain = productBox.select("div.res-img").select("div.img-block").get(0);
                    Element elementPrice = resInfo.select("div.price-box").select("span").get(0);
                    Element elementTitle = resInfo.select("div.title-selling-point").select("a").get(0);
                    Element elementComments = resInfo.select("[class=evaluate-old clearfix]").select("div.info-evaluate").select("a").get(0);
                    // Product pid: the part of datasku before the first '|'
                    itemDetailsVO.setPid(elementPrice.attr("datasku").split("\\|")[0]);
                    // Product title, with a 【...】 tag removed
                    String rawTitle = elementTitle.text();
                    String title;
                    if (rawTitle.startsWith("【"))
                    {
                        title = rawTitle.substring(rawTitle.indexOf("】")+1);
                    } else if (rawTitle.contains("【"))
                    {
                        title = rawTitle.substring(0,rawTitle.indexOf("【"));
                    } else {
                        title = rawTitle;
                    }
                    itemDetailsVO.setTitle(title);
                    // Number of reviews
                    itemDetailsVO.setCommentNum(elementComments.select("i").text());
                    // Sales: the review count is used as a stand-in
                    itemDetailsVO.setSales(itemDetailsVO.getCommentNum());
                    // Item name and brand
                    String brand;
                    String itemName;
                    if (title.startsWith("("))
                    {
                        // "(" is at index 0 here, so the brand comes out empty
                        brand = title.substring(0, title.indexOf("("));
                        int space = title.indexOf(" ");
                        itemName = title.substring(title.indexOf(")")+1, space==-1?title.length():space);
                    } else {
                        brand = title.split(" ")[0];
                        itemName = title.split(" ")[0];
                    }
                    itemDetailsVO.setBrand(brand);
                    itemDetailsVO.setItemName(itemName);
                    // Store name
                    itemDetailsVO.setStoreName(resInfo.select("div.store-stock").select("a").text());
                    // Image URL
                    itemDetailsVO.setImgUrl(elementMain.select("img").attr("src"));
                    // Product link
                    itemDetailsVO.setItemUrl("https:"+elementMain.select("a").attr("href"));
                    parsePrice(itemDetailsVO, itemDetailsVO.getItemUrl());
                    // The list is shared between threads, so guard the add
                    synchronized (itemDetailsVOs) {
                        itemDetailsVOs.add(itemDetailsVO);
                    }
                } catch (ParseException e)
                {
                    throw ExceptionFactory.parseException();
                } catch (IOException e)
                {
                    throw ExceptionFactory.iOStremException();
                } finally
                {
                    // Count down even on failure, otherwise await() blocks forever
                    snThreadSignal.countDown();
                }
            }
        }).start();
    }
    try
    {
        snThreadSignal.await();
    } catch (InterruptedException e)
    {
        throw ExceptionFactory.threadCountException();
    }
}
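
The title clean-up step in parseSNIndex can also be read on its own. The following sketch restates it as a small function; `SuningTitle`, `clean`, and the sample titles are hypothetical names and data, while the rule (skip a leading 【...】 tag, otherwise truncate at the first 【) follows the code above.

```java
class SuningTitle {
    // Removes a promotional 【...】 tag from a listing title:
    // a leading tag is skipped, and a tag that appears later truncates the title there.
    static String clean(String raw) {
        int open = raw.indexOf("【");
        if (open == -1) {
            return raw;                                   // no tag at all
        }
        if (open == 0) {
            return raw.substring(raw.indexOf("】") + 1);   // keep what follows the leading tag
        }
        return raw.substring(0, open);                    // keep what precedes the tag
    }

    public static void main(String[] args) {
        System.out.println(clean("【自营】华为Mate40"));
        System.out.println(clean("华为Mate40【赠品】"));
    }
}
```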
