PS:首先声明是学校的作业= = 我喊它贝塔狗(原谅我不要脸),因为一直觉得阿法狗很厉害但离我很遥远,终于第一次在作业驱动下尝试写了一个能看的AI,有不错的胜率还是挺开心的

正文

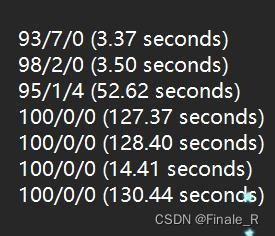

对战随机agent的胜率

对战100局,记录胜/负/平与AI思考总时间(第三个是井字棋)

笔者CPU:i5-12500h,12核

测试用例

[--size SIZE] (boardsize) 棋盘大小

[--games GAMES] (number of games) 玩多少盘

[--iterations ITERATIONS] (number of iterations allowed by the agent) 提高这个会提高算度,但下的更慢[--print-board {all,final}] debug的时候用的

[--parallel PARALLEL] 线程,我的电脑其实可以12,老师给的是8,懒得改了= =

python main.py --games 100 --size 5 --iterations 100 --parallel 8 shapes1.txt >> results.txt # two in a row

python main.py --games 100 --size 10 --iterations 100 --parallel 8 shapes1.txt >> results.txt # two in a row large

python main.py --games 100 --size 3 --iterations 1000 --parallel 8 shapes2.txt >> results.txt # tic-tac-toe

python main.py --games 100 --size 8 --iterations 1000 --parallel 8 shapes3.txt >> results.txt # plus

python main.py --games 100 --size 8 --iterations 1000 --parallel 8 shapes4.txt >> results.txt # circle

python main.py --games 100 --size 8 --iterations 100 --parallel 8 shapes4.txt >> results.txt # circle fast

python main.py --games 100 --size 10 --iterations 1000 --parallel 8 shapes5.txt >> results.txt # disjoint思路/ pseudocode

1. Get every possible move

2. Simulate games for each possible move

3. Calculate the reward for each possible move

4. Return move choice for the real game

上代码

不能直接跑,重点是思路,不过我注释的很细节了

from random_agent import RandomAgent

from game import Game

import numpy as np

import copy

import random

class Agent:

def __init__(self, iterations, id):

self.iterations = iterations

self.id = id

def make_move(self, game):

iter_cnt = 0

rand = np.random.random()

# parameters for each avaliable position

freeposnum = len(game.board.free_positions())

pos_winrate = np.zeros(freeposnum)

pos_reward = np.zeros(freeposnum)

pos_cnt = np.zeros(freeposnum)

free_positions = game.board.free_positions()

# simulation begin with creating a deep copy, which can change without affecting the others

while iter_cnt < self.iterations:

# create a deep copy

board = copy.deepcopy(game.board)

# dynamic epsilon, increased from 0(exploration) to 1(exploitation) by running time

epsilon = iter_cnt / self.iterations

# exploration & exploitation

if rand > epsilon:

#pointer = game.board.random_free()

pointer = random.randrange(0, len(free_positions))

else:

pointer = np.argmax(pos_winrate)

# make the move in the deepcopy and deduce the game by using random agents

finalmove = free_positions[pointer]

board.place(finalmove, self.id)

# attention here, it should be agent no.2 to take the next move

deepcopy_players = [RandomAgent(2), RandomAgent(1)]

deepcopy_game = game.from_board(board, game.objectives, deepcopy_players, game.print_board)

if deepcopy_game.victory(finalmove, self.id):

winner = self

else:

winner = deepcopy_game.play()

# give rewards by outcomes

if winner:

if winner.id == 1:

pos_reward[pointer] += 1

else:

pos_reward[pointer] -= 1

else:

pos_reward[pointer] += 0

# visit times + 1

pos_cnt[pointer] += 1

# calculate the winrate of each position

pos_winrate[pointer] = pos_reward[pointer] / pos_cnt[pointer]

# next iteration

iter_cnt += 1

# back to real match with a postion with the highest winrate

highest_winrate_pos = np.argmax(pos_winrate)

# take the shot

finalmove = free_positions[highest_winrate_pos]

return finalmove

def __str__(self):

return f'Player {self.id} (betago agent)'PSS: 其实我也比较懒,没有把测试用例都截图po上来,但时间精力确实有限,比如现在还有别的作业没写完= =

只希望还是能帮到人吧(笑

最后

以上就是体贴飞机最近收集整理的关于bandit agent下棋AI(python编写) 通过强化学习RL 使用numpy正文的全部内容,更多相关bandit内容请搜索靠谱客的其他文章。

发表评论 取消回复