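Binder is Android's inter-process communication (IPC) mechanism, and a fairly complex one: a single call crosses both user space and kernel space, passing from the Java layer through the Framework layer down to the driver. I had read quite a few articles on it, on and off, but never got to the essence, so I could not explain the underlying ideas. Recently I read several well-written articles, so I am taking the opportunity to record the whole process here for later review. Some of my understanding may be imperfect, but the overall direction should be right. For more detail, see the articles linked at the end.

Source code analyzed: Android 8.0; driver source: kernel_3.18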

1. Source Files Involved

frameworks/base/core/java/android/content/ContextWrapper.java

frameworks/base/core/java/android/app/ContextImpl.java

frameworks/base/core/java/android/app/ActivityManager.java

frameworks/base/core/java/android/os/ServiceManagerNative.java

frameworks/base/core/java/android/app/IActivityManager.aidl

frameworks/base/core/jni/android_util_Binder.cpp

frameworks/native/libs/binder/BpBinder.cpp

frameworks/base/core/jni/AndroidRuntime.cpp

frameworks/base/cmds/app_process/app_main.cpp

frameworks/native/libs/binder/IPCThreadState.cpp

frameworks/native/libs/binder/ProcessState.cpp

drivers/staging/android/binder.c

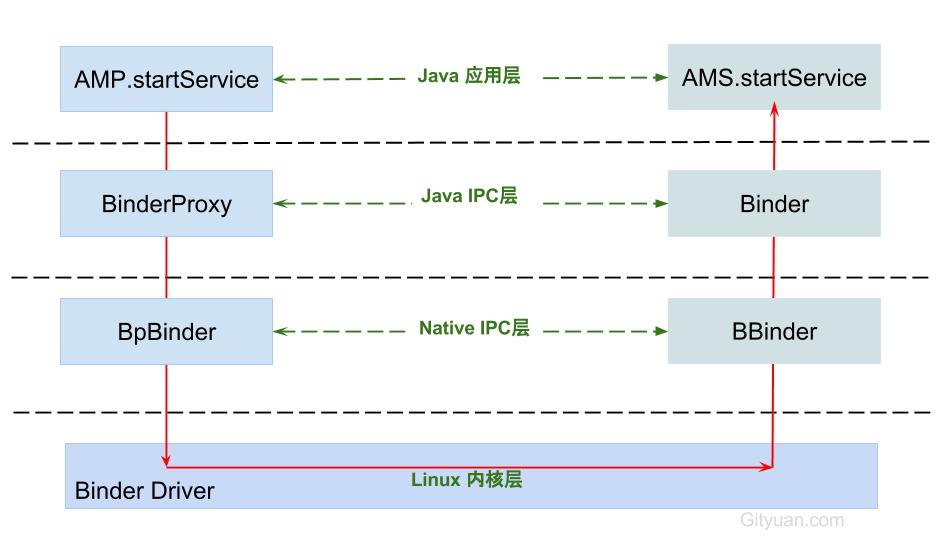

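2. Layered Architecture

Like the TCP/IP protocol stack, Binder has a layered architecture in which each layer has its own role. Take startService as an example (the figures below are taken directly from the reference articles):

Java application layer: the caller simply invokes AMP.startService without caring about the layers below. After a chain of calls, the request reaches AMS.startService.

Java IPC layer: Binder communication follows a client/server (C/S) architecture. At the Java framework layer, Android's base architecture already provides the Binder client class BinderProxy and the server class Binder.

Native IPC layer: the native layer uses BpBinder and BBinder. The Java IPC layer above is built on top of them.

Kernel layer: this is the Binder Driver. The three layers above all run in user space, and user-space memory is not shared: each Android process can only run within its own virtual address space. Kernel space, by contrast, is shared by all processes, so the real core of the mechanism is the Binder Driver.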

3. ContextWrapper.startService

@Override

//ContextWrapper

public ComponentName startService(Intent service) {

return mBase.startService(service);

}

//ContextImpl

@Override

public ComponentName startService(Intent service) {

warnIfCallingFromSystemProcess();

return startServiceCommon(service, false, mUser);

}

private ComponentName startServiceCommon(Intent service, boolean requireForeground,

UserHandle user) {

try {

...

ComponentName cn = ActivityManager.getService().startService(

mMainThread.getApplicationThread(), service, service.resolveTypeIfNeeded(

getContentResolver()), requireForeground,

getOpPackageName(), user.getIdentifier());

...

} catch (RemoteException e) {

throw e.rethrowFromSystemServer();

}

}

4. ActivityManager.getService()

The call now reaches ActivityManager.getService(). Let's see what it returns:

public static IActivityManager getService() {

return IActivityManagerSingleton.get();

}

private static final Singleton<IActivityManager> IActivityManagerSingleton =

new Singleton<IActivityManager>() {

@Override

protected IActivityManager create() {

final IBinder b = ServiceManager.getService(Context.ACTIVITY_SERVICE); //1

final IActivityManager am = IActivityManager.Stub.asInterface(b); //2

return am;

}

};

4.1 ServiceManager.getService(Context.ACTIVITY_SERVICE)

IActivityManagerSingleton.get() ultimately calls Singleton's create() method. Here is the implementation behind comment 1:

public static IBinder getService(String name) {

try {

IBinder service = sCache.get(name); // try the cache first

if (service != null) {

return service;

} else {

return Binder.allowBlocking(getIServiceManager().getService(name));

}

} catch (RemoteException e) {

Log.e(TAG, "error in getService", e);

}

return null;

}
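The lookup above is a simple cache-then-remote pattern. Below is a minimal C++ sketch of that control flow. It makes a few assumptions: `FakeBinder` and `ServiceLookup` are hypothetical types, not Android classes, and an injected callback stands in for the real `getIServiceManager().getService(name)` IPC. As in the Java code shown, a cache miss does not write the result back into the cache.

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <memory>
#include <string>
#include <utility>

// Hypothetical stand-in for an IBinder reference; not the real Android type.
struct FakeBinder {
    std::string name;
};

// Sketch of ServiceManager.getService(): consult the cache first and only
// fall back to the (expensive) remote lookup on a miss.
class ServiceLookup {
public:
    using RemoteFn = std::function<std::shared_ptr<FakeBinder>(const std::string&)>;

    explicit ServiceLookup(RemoteFn remote) : remote_(std::move(remote)) {}

    // Pre-populate the cache, similar in spirit to how sCache is seeded.
    void prime(const std::string& name, std::shared_ptr<FakeBinder> b) {
        cache_[name] = std::move(b);
    }

    std::shared_ptr<FakeBinder> getService(const std::string& name) {
        auto it = cache_.find(name);
        if (it != cache_.end()) return it->second;  // cache hit: no IPC
        return remote_(name);                       // cache miss: ask servicemanager (not cached)
    }

private:
    RemoteFn remote_;
    std::map<std::string, std::shared_ptr<FakeBinder>> cache_;
};
```

Every miss calls the remote function again. That matches the snippet above, where only a pre-seeded `sCache` avoids the IPC.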

4.2 getIServiceManager()

private static IServiceManager getIServiceManager() {

if (sServiceManager != null) {

return sServiceManager;

}

// Find the service manager

sServiceManager = ServiceManagerNative

.asInterface(Binder.allowBlocking(BinderInternal.getContextObject()));

return sServiceManager;

}

4.2.1 BinderInternal.getContextObject()

getContextObject() is a native method:

//android_util_Binder.cpp

static jobject android_os_BinderInternal_getContextObject(JNIEnv* env, jobject clazz)

{

sp<IBinder> b = ProcessState::self()->getContextObject(NULL);

return javaObjectForIBinder(env, b);

}

As explained in the article "Obtaining ServiceManager", ProcessState::self()->getContextObject() is equivalent to new BpBinder(0).

4.2.2 javaObjectForIBinder

//android_util_Binder.cpp
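To make "handle 0" concrete, here is a small sketch under stated assumptions. `BpBinderModel`, `getContextObjectModel` and `resolveTarget` are hypothetical names, and the `refs` map stands in for the per-process binder_ref table. The sketch only mirrors the rule visible in `binder_transaction()` later in this article: handle 0 always resolves to the context manager (servicemanager), while any other handle goes through a lookup.

```cpp
#include <cassert>
#include <cstdint>
#include <map>
#include <memory>
#include <string>

// Minimal model (hypothetical): on the client side a proxy is little more than a handle.
struct BpBinderModel {
    int32_t handle;
};

// Model of ProcessState::getContextObject(): always a proxy for handle 0.
std::shared_ptr<BpBinderModel> getContextObjectModel() {
    return std::make_shared<BpBinderModel>(BpBinderModel{0});
}

// Model of the driver-side resolution: handle 0 is special and maps to the
// context manager; other handles are looked up in the caller's reference table.
std::string resolveTarget(int32_t handle, const std::map<int32_t, std::string>& refs) {
    if (handle == 0) return "servicemanager";
    auto it = refs.find(handle);
    return it == refs.end() ? "" : it->second;  // empty: unknown handle
}
```

The real `getContextObject` goes through `getStrongProxyForHandle(0)`, which caches the proxy. "new BpBinder(0)" is the article's simplification.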

jobject javaObjectForIBinder(JNIEnv* env, const sp<IBinder>& val)

{

if (val == NULL) return NULL;

if (val->checkSubclass(&gBinderOffsets)) {

// One of our own!

jobject object = static_cast<JavaBBinder*>(val.get())->object();

LOGDEATH("objectForBinder %p: it's our own %p!\n", val.get(), object);

return object;

}

// For the rest of the function we will hold this lock, to serialize

// looking/creation/destruction of Java proxies for native Binder proxies.

AutoMutex _l(mProxyLock);

// Someone else's... do we know about it?

jobject object = (jobject)val->findObject(&gBinderProxyOffsets);

if (object != NULL) {

jobject res = jniGetReferent(env, object);

if (res != NULL) {

ALOGV("objectForBinder %p: found existing %p!\n", val.get(), res);

return res;

}

LOGDEATH("Proxy object %p of IBinder %p no longer in working set!!!", object, val.get());

android_atomic_dec(&gNumProxyRefs);

val->detachObject(&gBinderProxyOffsets);

env->DeleteGlobalRef(object);

}

//create the BinderProxy object

object = env->NewObject(gBinderProxyOffsets.mClass, gBinderProxyOffsets.mConstructor);

if (object != NULL) {

LOGDEATH("objectForBinder %p: created new proxy %p !\n", val.get(), object);

//the BinderProxy.mObject field records the BpBinder object

env->SetLongField(object, gBinderProxyOffsets.mObject, (jlong)val.get());

val->incStrong((void*)javaObjectForIBinder);

// The native object needs to hold a weak reference back to the

// proxy, so we can retrieve the same proxy if it is still active.

jobject refObject = env->NewGlobalRef(

env->GetObjectField(object, gBinderProxyOffsets.mSelf));

val->attachObject(&gBinderProxyOffsets, refObject,

jnienv_to_javavm(env), proxy_cleanup);

// Also remember the death recipients registered on this proxy

sp<DeathRecipientList> drl = new DeathRecipientList;

drl->incStrong((void*)javaObjectForIBinder);

env->SetLongField(object, gBinderProxyOffsets.mOrgue, reinterpret_cast<jlong>(drl.get()));

// Note that a new object reference has been created.

android_atomic_inc(&gNumProxyRefs);

incRefsCreated(env);

}

return object;

}

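This function builds a BinderProxy (Java) object from a BpBinder (C++). Its main job is to create the BinderProxy object and store the BpBinder's address in the BinderProxy.mObject field. So ServiceManagerNative.asInterface(BinderInternal.getContextObject()) is equivalent to

ServiceManagerNative.asInterface(new BinderProxy())

What Binder.allowBlocking does does not affect the flow analyzed below, so it is skipped.

BinderProxy is defined in Binder.java.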
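The reuse logic in `javaObjectForIBinder` can be sketched in plain C++ with `std::weak_ptr`. It first looks for an existing proxy through a weak reference, and if none survives it creates one and attaches it. In the sketch, `NativeProxy`, `JavaProxy` and `ProxyTable` are hypothetical stand-ins for BpBinder, BinderProxy and the attachObject/findObject bookkeeping. The real code uses JNI global and weak references plus a mutex, both omitted here.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <memory>

struct NativeProxy { int handle; };          // stands in for BpBinder
struct JavaProxy { NativeProxy* mObject; };   // stands in for BinderProxy; mObject holds the native address

// Sketch of the javaObjectForIBinder() reuse logic (single-threaded, no locking).
class ProxyTable {
public:
    std::shared_ptr<JavaProxy> objectFor(NativeProxy* native) {
        auto it = table_.find(native);
        if (it != table_.end()) {
            if (auto alive = it->second.lock()) return alive;  // "found existing"
            table_.erase(it);                                   // stale entry, like detachObject()
        }
        auto created = std::make_shared<JavaProxy>(JavaProxy{native});
        table_[native] = created;  // remember it weakly, like attachObject()
        return created;
    }
    std::size_t size() const { return table_.size(); }

private:
    std::map<NativeProxy*, std::weak_ptr<JavaProxy>> table_;
};
```

Holding only a weak reference means the native side never keeps the Java proxy alive by itself. Once the proxy is collected, the next lookup simply creates a fresh one.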

4.3 SMN.asInterface (ServiceManagerNative.asInterface)

//ServiceManagerNative.java

static public IServiceManager asInterface(IBinder obj)

{

if (obj == null) {

return null;

}

IServiceManager in =

(IServiceManager)obj.queryLocalInterface(descriptor);

if (in != null) {

return in;

}

return new ServiceManagerProxy(obj);

}

So the method ultimately returns a ServiceManagerProxy object, abbreviated SMP.

ServiceManagerNative.asInterface(Binder.allowBlocking(BinderInternal.getContextObject())) is therefore equivalent to new ServiceManagerProxy(new BinderProxy()). The BinderProxy passed in wraps a BpBinder(0), which is the client-side proxy. Handle 0 refers to the native servicemanager.

Here is the ServiceManagerProxy constructor:
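`asInterface` follows a common pattern. If the binder is a local object in the same process, it is used directly. Otherwise it is wrapped in a proxy that forwards calls via `transact`. Below is a minimal sketch of that decision. All `...Model` types are hypothetical, and the descriptor string `"android.os.IServiceManager"` is used only as an illustrative token.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>

// Hypothetical interface standing in for IServiceManager.
struct IServiceManagerModel {
    virtual ~IServiceManagerModel() = default;
    virtual std::string kind() const = 0;
};

// Stand-in for IBinder: queryLocalInterface() returns the implementation only
// for a local (same-process) binder, and nullptr for a remote proxy.
struct IBinderModel {
    virtual ~IBinderModel() = default;
    virtual IServiceManagerModel* queryLocalInterface(const std::string& descriptor) = 0;
};

struct LocalService : IBinderModel, IServiceManagerModel {
    IServiceManagerModel* queryLocalInterface(const std::string& d) override {
        return d == "android.os.IServiceManager" ? this : nullptr;
    }
    std::string kind() const override { return "local"; }
};

struct RemoteProxyBinder : IBinderModel {  // plays the role of BinderProxy
    IServiceManagerModel* queryLocalInterface(const std::string&) override { return nullptr; }
};

// Proxy that would forward each call through mRemote->transact(...).
struct ServiceManagerProxyModel : IServiceManagerModel {
    explicit ServiceManagerProxyModel(std::shared_ptr<IBinderModel> remote) : mRemote(std::move(remote)) {}
    std::string kind() const override { return "proxy"; }
    std::shared_ptr<IBinderModel> mRemote;
};

std::shared_ptr<IServiceManagerModel> asInterface(const std::shared_ptr<IBinderModel>& obj) {
    if (!obj) return nullptr;
    if (IServiceManagerModel* in = obj->queryLocalInterface("android.os.IServiceManager"))
        return std::shared_ptr<IServiceManagerModel>(obj, in);  // same process: use it directly
    return std::make_shared<ServiceManagerProxyModel>(obj);      // other process: wrap in a proxy
}
```

In this article's flow the argument is a BinderProxy, so the second branch is taken and an SMP comes back.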

//ServiceManagerProxy

public ServiceManagerProxy(IBinder remote) {

//remote here is the BinderProxy

mRemote = remote;

}

So ServiceManager.getService ends up calling ServiceManagerProxy.getService:

public IBinder getService(String name) throws RemoteException {

Parcel data = Parcel.obtain();

Parcel reply = Parcel.obtain();

data.writeInterfaceToken(IServiceManager.descriptor);

data.writeString(name);

mRemote.transact(GET_SERVICE_TRANSACTION, data, reply, 0);

IBinder binder = reply.readStrongBinder();

reply.recycle();

data.recycle();

return binder;

}

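This call goes to the native servicemanager to fetch the AMS proxy object. The object obtained is returned to the client as a BinderProxy, and BinderProxy talks to the lower layers through BpBinder. For the detailed implementation, see the article "Binder Series 7: Framework Layer Analysis".

Now back to comment 2 in ActivityManager.getService():

4.4 IActivityManager.Stub.asInterface(b)

IActivityManager is an AIDL interface. Android 8.0 removed ActivityManagerProxy from ActivityManagerNative and uses AIDL instead. Here asInterface also returns a Proxy object, and startService is invoked through that proxy. The server side, AMS, only needs to extend IActivityManager.Stub and implement the corresponding methods.

The proxy-side startService looks roughly like this: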
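`getService` works because both sides agree on the order of fields in the Parcel: the interface token first, then the service name. The sketch below uses a toy `ToyParcel` class to show that write-then-read-in-the-same-order contract, plus the token check a server performs. `ToyParcel` is hypothetical and does not use Android's wire format: real Parcels write strings as UTF-16, and `writeInterfaceToken` also writes a strict-mode header.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <string>
#include <vector>

// Toy Parcel (hypothetical): values are appended in write order and must be
// read back in the same order. No bounds checking; valid input is assumed.
class ToyParcel {
public:
    void writeInt32(int32_t v) { append(&v, sizeof v); }
    void writeString(const std::string& s) {
        writeInt32(static_cast<int32_t>(s.size()));      // length prefix
        append(s.data(), s.size());
        while (buf_.size() % 4 != 0) buf_.push_back(0);  // pad to 4 bytes
    }
    int32_t readInt32() {
        int32_t v = 0;
        std::memcpy(&v, buf_.data() + pos_, sizeof v);
        pos_ += sizeof v;
        return v;
    }
    std::string readString() {
        const int32_t n = readInt32();
        std::string s(reinterpret_cast<const char*>(buf_.data() + pos_),
                      static_cast<std::size_t>(n));
        pos_ += static_cast<std::size_t>((n + 3) & ~3);  // skip the padding too
        return s;
    }
    std::size_t dataSize() const { return buf_.size(); }

private:
    void append(const void* p, std::size_t n) {
        const auto* b = static_cast<const uint8_t*>(p);
        buf_.insert(buf_.end(), b, b + n);
    }
    std::vector<uint8_t> buf_;
    std::size_t pos_ = 0;
};

const char* const kToken = "android.os.IServiceManager";  // illustrative token

// Client side: same order as ServiceManagerProxy.getService().
ToyParcel buildGetServiceRequest(const std::string& name) {
    ToyParcel data;
    data.writeString(kToken);  // stands in for writeInterfaceToken()
    data.writeString(name);
    return data;
}

// Server side: check the token first (like enforceInterface), then read the name.
std::string handleGetService(ToyParcel& data) {
    if (data.readString() != kToken) return "";
    return data.readString();
}
```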

public ComponentName startService(IApplicationThread caller, Intent service, String resolvedType, String callingPackage, int userId) throws RemoteException {

Parcel data = Parcel.obtain();

Parcel reply = Parcel.obtain();

data.writeInterfaceToken(IActivityManager.descriptor);

data.writeStrongBinder(caller != null ? caller.asBinder() : null);

service.writeToParcel(data, 0);

data.writeString(resolvedType);

data.writeString(callingPackage);

data.writeInt(userId);

//pass the data through Binder

mRemote.transact(START_SERVICE_TRANSACTION, data, reply, 0);

reply.readException();

ComponentName res = ComponentName.readFromParcel(reply);

data.recycle();

reply.recycle();

return res;

}
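On the server side the generated `IActivityManager.Stub` does the reverse: `onTransact` switches on the transaction code and calls the method that AMS overrides. Below is a rough sketch of that dispatch. It assumes hypothetical `Request`/`Reply` types and a single code. Only the base value `FIRST_CALL_TRANSACTION = 1` comes from `IBinder`; real stubs also unmarshal the arguments from a Parcel.

```cpp
#include <cassert>
#include <cstdint>
#include <string>

// AIDL numbers methods from FIRST_CALL_TRANSACTION (= 1); the single method here is hypothetical.
constexpr uint32_t kFirstCall = 1;
constexpr uint32_t kStartService = kFirstCall + 0;

struct Request { uint32_t code; std::string arg; };
struct Reply { bool handled = false; std::string result; };

// Sketch of a generated Stub: onTransact() decodes the code and dispatches to
// a virtual method; the service only overrides that method.
class IActivityManagerStubModel {
public:
    virtual ~IActivityManagerStubModel() = default;
    Reply onTransact(const Request& req) {
        Reply r;
        switch (req.code) {
        case kStartService:
            r.result = startService(req.arg);
            r.handled = true;
            break;
        default:
            break;  // unknown code: a real Stub would defer to Binder.onTransact()
        }
        return r;
    }

protected:
    virtual std::string startService(const std::string& component) = 0;
};

// Plays the role of AMS.
class FakeAms : public IActivityManagerStubModel {
protected:
    std::string startService(const std::string& component) override {
        return "started:" + component;
    }
};
```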

5. BinderProxy.transact

mRemote here is the BinderProxy, so its transact method is called:

//BinderProxy

public boolean transact(int code, Parcel data, Parcel reply, int flags) throws RemoteException {

....

try {

return transactNative(code, data, reply, flags);

} finally {

...

}

}

//calls down into the native layer

public native boolean transactNative(int code, Parcel data, Parcel reply,

int flags) throws RemoteException;

//android_util_Binder.cpp

static jboolean android_os_BinderProxy_transact(JNIEnv* env, jobject obj,

jint code, jobject dataObj, jobject replyObj, jint flags) // throws RemoteException

{

...

//convert the Java Parcel into a C++ Parcel

Parcel* data = parcelForJavaObject(env, dataObj);

Parcel* reply = parcelForJavaObject(env, replyObj);

...

//gBinderProxyOffsets.mObject holds the new BpBinder(handle) object

IBinder* target = (IBinder*)

env->GetLongField(obj, gBinderProxyOffsets.mObject);

...

//printf("Transact from Java code to %p sending: ", target); data->print();

status_t err = target->transact(code, *data, reply, flags);

//if (reply) printf("Transact from Java code to %p received: ", target); reply->print();

...

signalExceptionForError(env, obj, err, true /*canThrowRemoteException*/, data->dataSize());

return JNI_FALSE;

}

This first converts the Java Parcel into a C++ Parcel, then fetches the saved BpBinder object and calls its transact.

6. BpBinder.transact
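The only link between the Java `BinderProxy` and the native `BpBinder` is the 64-bit `mObject` field, which holds the native address. Here is a tiny sketch of that round trip: a pointer is converted to a `jlong`-sized integer and back. It uses a hypothetical `BpBinderStandIn` type instead of the real JNI calls.

```cpp
#include <cassert>
#include <cstdint>

struct BpBinderStandIn { int32_t handle; };  // hypothetical stand-in for BpBinder

// What SetLongField(..., (jlong)val.get()) stores: the address as a 64-bit integer.
int64_t toJavaLong(BpBinderStandIn* p) {
    return static_cast<int64_t>(reinterpret_cast<intptr_t>(p));
}

// What (IBinder*)env->GetLongField(obj, ...) recovers on the way back down.
BpBinderStandIn* fromJavaLong(int64_t v) {
    return reinterpret_cast<BpBinderStandIn*>(static_cast<intptr_t>(v));
}
```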

//BpBinder.cpp

status_t BpBinder::transact(

uint32_t code, const Parcel& data, Parcel* reply, uint32_t flags)

{

// Once a binder has died, it will never come back to life.

if (mAlive) {

status_t status = IPCThreadState::self()->transact(

mHandle, code, data, reply, flags);

if (status == DEAD_OBJECT) mAlive = 0;

return status;

}

return DEAD_OBJECT;

}

IPCThreadState::self() returns a per-thread singleton, so each thread has exactly one instance. Here is the IPCThreadState constructor:

IPCThreadState::IPCThreadState()

: mProcess(ProcessState::self()),

mStrictModePolicy(0),

mLastTransactionBinderFlags(0)

{

pthread_setspecific(gTLS, this);

clearCaller();

mIn.setDataCapacity(256);

mOut.setDataCapacity(256);

}

IPCThreadState is the class that interacts with the Binder driver. Data is written through mOut and read through mIn.

7. IPCThreadState::self()->transact
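The "one instance per thread" property can be sketched with C++ `thread_local`. This is a swapped-in technique: the real `IPCThreadState::self()` uses a `pthread_key_t` (`gTLS`) with `pthread_getspecific`/`pthread_setspecific`. `ThreadStateModel` and `differsAcrossThreads` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <thread>

// One lazily created instance per thread, reached through self().
class ThreadStateModel {
public:
    static ThreadStateModel* self() {
        thread_local ThreadStateModel instance;  // constructed on first use in each thread
        return &instance;
    }
    std::size_t outCapacity() const { return outCapacity_; }

private:
    ThreadStateModel() = default;
    std::size_t outCapacity_ = 256;  // mirrors mOut.setDataCapacity(256)
};

// Returns true if another thread sees a different instance than the caller.
bool differsAcrossThreads() {
    ThreadStateModel* mine = ThreadStateModel::self();
    bool differs = false;
    std::thread t([&] { differs = (ThreadStateModel::self() != mine); });
    t.join();
    return differs;
}
```

Because every thread has its own mIn/mOut, concurrent Binder calls from different threads never interleave in the same buffers.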

//IPCThreadState

status_t IPCThreadState::transact(int32_t handle,

uint32_t code, const Parcel& data,

Parcel* reply, uint32_t flags)

{

...

if (err == NO_ERROR) {

err = writeTransactionData(BC_TRANSACTION, flags, handle, code, data, NULL); //1

}

...

//calls are not ONE_WAY by default, i.e. the caller waits for the server's result

if ((flags & TF_ONE_WAY) == 0) {

...

if (reply) {

//reply is not null

err = waitForResponse(reply); //2

} else {

Parcel fakeReply;

err = waitForResponse(&fakeReply);

}

...

} else {

err = waitForResponse(NULL, NULL);

}

return err;

}

At comment 1, writeTransactionData writes data into mOut (a Parcel). mIn has no data yet.

7.1 writeTransactionData

status_t IPCThreadState::writeTransactionData(int32_t cmd, uint32_t binderFlags,

int32_t handle, uint32_t code, const Parcel& data, status_t* statusBuffer)

{

binder_transaction_data tr;

tr.target.ptr = 0; /* Don't pass uninitialized stack data to a remote process */

tr.target.handle = handle; //handle refers to AMS

tr.code = code;

tr.flags = binderFlags;

tr.cookie = 0;

tr.sender_pid = 0;

tr.sender_euid = 0;

const status_t err = data.errorCheck();

if (err == NO_ERROR) {

tr.data_size = data.ipcDataSize();

tr.data.ptr.buffer = data.ipcData();

tr.offsets_size = data.ipcObjectsCount()*sizeof(binder_size_t);

tr.data.ptr.offsets = data.ipcObjects();

} else if (statusBuffer) {

tr.flags |= TF_STATUS_CODE;

*statusBuffer = err;

tr.data_size = sizeof(status_t);

tr.data.ptr.buffer = reinterpret_cast<uintptr_t>(statusBuffer);

tr.offsets_size = 0;

tr.data.ptr.offsets = 0;

} else {

return (mLastError = err);

}

mOut.writeInt32(cmd); //cmd = BC_TRANSACTION

mOut.write(&tr, sizeof(tr)); //write the binder_transaction_data

return NO_ERROR;

}

The data is now in mOut. Back in transact, look at comment 2:

7.2 waitForResponse
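The key point of `writeTransactionData` is that `mOut` only receives a 32-bit command followed by a fixed-size descriptor. The Parcel payload itself is referenced by address and size. The sketch below assumes a trimmed, hypothetical `TxDataModel` and a placeholder command value `kBcTransaction = 1`. The real `BC_TRANSACTION` is an `_IOW` encoding, and the real struct has more fields.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>
#include <vector>

enum : uint32_t { kBcTransaction = 1 };  // placeholder, not the real BC_TRANSACTION value

// Trimmed, hypothetical version of binder_transaction_data.
struct TxDataModel {
    int32_t handle;     // target.handle
    uint32_t code;      // transaction code
    uint32_t flags;     // e.g. TF_ONE_WAY
    uint64_t dataSize;  // size of the payload Parcel
    uint64_t dataPtr;   // address of the payload (the payload is NOT copied into out)
};

// Sketch of writeTransactionData(): append [cmd][descriptor] to the out buffer.
void writeTransactionModel(std::vector<uint8_t>& out, int32_t handle, uint32_t code,
                           uint32_t flags, const std::vector<uint8_t>& payload) {
    TxDataModel tr;
    std::memset(&tr, 0, sizeof tr);  // no uninitialized bytes, as in the real code's comment
    tr.handle = handle;
    tr.code = code;
    tr.flags = flags;
    tr.dataSize = payload.size();
    tr.dataPtr = reinterpret_cast<uintptr_t>(payload.data());

    const uint32_t cmd = kBcTransaction;
    const auto* c = reinterpret_cast<const uint8_t*>(&cmd);
    out.insert(out.end(), c, c + sizeof cmd);
    const auto* t = reinterpret_cast<const uint8_t*>(&tr);
    out.insert(out.end(), t, t + sizeof tr);
}
```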

status_t IPCThreadState::waitForResponse(Parcel *reply, status_t *acquireResult)

{

uint32_t cmd;

int32_t err;

while (1) {

if ((err=talkWithDriver()) < NO_ERROR) break; //talkWithDriver

err = mIn.errorCheck();

if (err < NO_ERROR) break;

if (mIn.dataAvail() == 0) continue;

//after each exchange with the driver, if mIn received data, process one BR command

cmd = (uint32_t)mIn.readInt32();

IF_LOG_COMMANDS() {

alog << "Processing waitForResponse Command: "

<< getReturnString(cmd) << endl;

}

switch (cmd) {

case BR_TRANSACTION_COMPLETE:

if (!reply && !acquireResult) goto finish;

break;

case BR_DEAD_REPLY:

err = DEAD_OBJECT;

goto finish;

case BR_FAILED_REPLY:

err = FAILED_TRANSACTION;

goto finish;

case BR_ACQUIRE_RESULT:

...

goto finish;

case BR_REPLY:

{

...

}

goto finish;

default:

err = executeCommand(cmd);

if (err != NO_ERROR) goto finish;

break;

}

}

...

}

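In this loop, talkWithDriver first exchanges data with the driver. Any response received is written into mIn, and the loop then handles each response code it reads. For a non-oneway transaction, receiving BR_REPLY completes this Binder communication.

7.3 talkWithDriver

//mOut has data, mIn has none yet. doReceive defaults to true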
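The control flow of that loop can be isolated from the driver. In this sketch a `std::deque` of hypothetical `Br` values plays the role of the commands that successive `talkWithDriver()` calls leave in `mIn`. `expectReply == false` corresponds to `waitForResponse(NULL, NULL)` for oneway calls.

```cpp
#include <cassert>
#include <deque>

// Hypothetical stand-ins for the BR_* codes and the returned status.
enum class Br { TransactionComplete, Reply, DeadReply, FailedReply, Noop };
enum class Status { Ok, DeadObject, FailedTransaction, NoMoreData };

// Sketch of the waitForResponse() switch. commandsRead reports how many
// commands were consumed before the loop finished.
Status waitForResponseModel(std::deque<Br> incoming, bool expectReply, int* commandsRead) {
    *commandsRead = 0;
    while (!incoming.empty()) {
        const Br cmd = incoming.front();
        incoming.pop_front();
        ++*commandsRead;
        switch (cmd) {
        case Br::TransactionComplete:
            if (!expectReply) return Status::Ok;  // oneway: done once the driver accepted it
            break;                                // two-way: keep waiting for BR_REPLY
        case Br::DeadReply:   return Status::DeadObject;
        case Br::FailedReply: return Status::FailedTransaction;
        case Br::Reply:       return Status::Ok;  // the real code fills the reply Parcel here
        default:              break;              // e.g. BR_NOOP: executeCommand() in the real code
        }
    }
    return Status::NoMoreData;  // the real loop calls talkWithDriver() again and blocks
}
```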

status_t IPCThreadState::talkWithDriver(bool doReceive)

{

if (mProcess->mDriverFD <= 0) {

return -EBADF;

}

binder_write_read bwr;

// Is the read buffer empty?

//this is true here

const bool needRead = mIn.dataPosition() >= mIn.dataSize();

// We don't want to write anything if we are still reading

// from data left in the input buffer and the caller

// has requested to read the next data.

const size_t outAvail = (!doReceive || needRead) ? mOut.dataSize() : 0;

bwr.write_size = outAvail;

//buffer holding the data to write

bwr.write_buffer = (uintptr_t)mOut.data();

// This is what we'll read.

if (doReceive && needRead) {

bwr.read_size = mIn.dataCapacity();

//data read from the driver goes into mIn

bwr.read_buffer = (uintptr_t)mIn.data();

} else {

bwr.read_size = 0;

bwr.read_buffer = 0;

}

...

// Return immediately if there is nothing to do.

if ((bwr.write_size == 0) && (bwr.read_size == 0)) return NO_ERROR;

bwr.write_consumed = 0;

bwr.read_consumed = 0;

status_t err;

do {

....

if (ioctl(mProcess->mDriverFD, BINDER_WRITE_READ, &bwr) >= 0) //1

err = NO_ERROR;

else

err = -errno;

...

} while (err == -EINTR);

...

if (err >= NO_ERROR) {

if (bwr.write_consumed > 0) {

if (bwr.write_consumed < mOut.dataSize())

mOut.remove(0, bwr.write_consumed);

else

mOut.setDataSize(0);

}

if (bwr.read_consumed > 0) {

mIn.setDataSize(bwr.read_consumed);

mIn.setDataPosition(0);

}

...

return NO_ERROR;

}

return err;

}

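binder_write_read is the structure used to exchange data with the Binder driver. The ioctl on mDriverFD is the step where data is actually written to and read from the Binder driver.

8. Binder Driver

ioctl() goes through a syscall and eventually reaches binder_ioctl().

8.1 binder_ioctl

//drivers/staging/android/binder.c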
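After the ioctl returns, `talkWithDriver` only has bookkeeping left to do. Below is a sketch of that step. It assumes a trimmed, hypothetical `BwrModel` with just the four size/consumed counters, and a `std::vector` standing in for `mOut`. `needRead` reproduces the check made before the call.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Trimmed, hypothetical model of binder_write_read: sizes and counters only.
struct BwrModel {
    std::size_t write_size, write_consumed;
    std::size_t read_size, read_consumed;
};

// Post-ioctl bookkeeping: drop what the driver consumed from mOut, and expose
// what it wrote into mIn (size = read_consumed, position reset to 0).
void afterIoctl(const BwrModel& bwr, std::vector<uint8_t>& out,
                std::size_t& inSize, std::size_t& inPos) {
    if (bwr.write_consumed > 0) {
        if (bwr.write_consumed < out.size())
            out.erase(out.begin(), out.begin() + static_cast<std::ptrdiff_t>(bwr.write_consumed));
        else
            out.clear();
    }
    if (bwr.read_consumed > 0) {
        inSize = bwr.read_consumed;
        inPos = 0;
    }
}

// Only ask the driver for more input once everything already in mIn has been parsed.
bool needRead(std::size_t inPos, std::size_t inSize) { return inPos >= inSize; }
```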

static long binder_ioctl(struct file *filp, unsigned int cmd, unsigned long arg)

{

int ret;

//get the binder_proc

struct binder_proc *proc = filp->private_data;

struct binder_thread *thread;

...

//find or create the binder_thread structure

thread = binder_get_thread(proc);

if (thread == NULL) {

ret = -ENOMEM;

goto err;

}

//cmd is BINDER_WRITE_READ

switch (cmd) {

case BINDER_WRITE_READ:

ret = binder_ioctl_write_read(filp, cmd, arg, thread);

if (ret)

goto err;

break;

...

default:

ret = -EINVAL;

goto err;

}

ret = 0;

...

return ret;

}

This calls binder_ioctl_write_read:

8.2 binder_ioctl_write_read

//drivers/staging/android/binder.c

static int binder_ioctl_write_read(struct file *filp,

unsigned int cmd, unsigned long arg,

struct binder_thread *thread)

{

int ret = 0;

struct binder_proc *proc = filp->private_data;

unsigned int size = _IOC_SIZE(cmd);

void __user *ubuf = (void __user *)arg;

struct binder_write_read bwr;

//copy the data from user space into the bwr struct

if (copy_from_user(&bwr, ubuf, sizeof(bwr))) {

ret = -EFAULT;

goto out;

}

//perform the write first

if (bwr.write_size > 0) {

//put the data into the target process

ret = binder_thread_write(proc, thread,

bwr.write_buffer,

bwr.write_size,

&bwr.write_consumed);

trace_binder_write_done(ret);

if (ret < 0) {

bwr.read_consumed = 0;

if (copy_to_user(ubuf, &bwr, sizeof(bwr)))

ret = -EFAULT;

goto out;

}

}

//then perform the read

if (bwr.read_size > 0) {

ret = binder_thread_read(proc, thread, bwr.read_buffer,

bwr.read_size,

&bwr.read_consumed,

filp->f_flags & O_NONBLOCK);

trace_binder_read_done(ret);

if (!list_empty(&proc->todo))

wake_up_interruptible(&proc->wait);

if (ret < 0) {

if (copy_to_user(ubuf, &bwr, sizeof(bwr)))

ret = -EFAULT;

goto out;

}

}

binder_debug(BINDER_DEBUG_READ_WRITE,

"%d:%d wrote %lld of %lld, read return %lld of %lld\n",

proc->pid, thread->pid,

(u64)bwr.write_consumed, (u64)bwr.write_size,

(u64)bwr.read_consumed, (u64)bwr.read_size);

if (copy_to_user(ubuf, &bwr, sizeof(bwr))) {

ret = -EFAULT;

goto out;

}

out:

return ret;

}

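The function first copies the data from user space into the bwr struct, then writes the data into the target process, and finally reads.

8.3 binder_thread_write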

static int binder_thread_write(struct binder_proc *proc,

struct binder_thread *thread,

binder_uintptr_t binder_buffer, size_t size,

binder_size_t *consumed)

{

uint32_t cmd;

void __user *buffer = (void __user *)(uintptr_t)binder_buffer;

void __user *ptr = buffer + *consumed;

void __user *end = buffer + size;

while (ptr < end && thread->return_error == BR_OK) {

//copy the cmd from user space; here it is BC_TRANSACTION

if (get_user(cmd, (uint32_t __user *)ptr))

return -EFAULT;

...

switch (cmd) {

...

case BC_TRANSACTION:

case BC_REPLY: {

struct binder_transaction_data tr;

if (copy_from_user(&tr, ptr, sizeof(tr)))

return -EFAULT;

ptr += sizeof(tr);

binder_transaction(proc, thread, &tr, cmd == BC_REPLY);

break;

}

...

}

*consumed = ptr - buffer;

}

return 0;

}

The function keeps fetching cmds from the address binder_buffer points to. For BC_TRANSACTION or BC_REPLY, it calls binder_transaction() to handle the transaction.

8.3.1 binder_transaction

The most central method:

//reply is 0 here
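The command stream in the write buffer is a sequence of `[cmd][argument]` records. Below is a sketch of the parse loop, assuming hypothetical command values and a trimmed `TxModel` argument. Some commands, such as `BC_ENTER_LOOPER`, carry no argument, which is why the loop must know each command's payload size.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical command values and argument struct; the real ones differ.
enum : uint32_t { kBcTransaction = 1, kBcReply = 2, kBcEnterLooper = 3 };
struct TxModel { int32_t handle; uint32_t code; };

struct ParseResult {
    int transactions = 0;      // BC_TRANSACTION records seen
    int replies = 0;           // BC_REPLY records seen
    std::size_t consumed = 0;  // bytes of fully processed commands
    bool error = false;
};

// Sketch of the binder_thread_write() loop: read a cmd, then its argument (if
// any), advance, and repeat until the buffer is exhausted.
ParseResult parseCommands(const std::vector<uint8_t>& buf) {
    ParseResult r;
    std::size_t ptr = 0;
    while (ptr < buf.size()) {
        uint32_t cmd;
        if (buf.size() - ptr < sizeof cmd) { r.error = true; break; }  // like get_user() failing
        std::memcpy(&cmd, buf.data() + ptr, sizeof cmd);
        ptr += sizeof cmd;
        switch (cmd) {
        case kBcTransaction:
        case kBcReply: {
            TxModel tr;
            if (buf.size() - ptr < sizeof tr) { r.error = true; return r; }  // like copy_from_user() failing
            std::memcpy(&tr, buf.data() + ptr, sizeof tr);
            ptr += sizeof tr;
            if (cmd == kBcReply) ++r.replies; else ++r.transactions;  // binder_transaction(..., cmd == BC_REPLY)
            break;
        }
        case kBcEnterLooper:
            break;  // no argument: only the cmd word is consumed
        default:
            r.error = true;  // the real driver rejects unknown commands with -EINVAL
            return r;
        }
        r.consumed = ptr;  // mirrors *consumed = ptr - buffer
    }
    return r;
}

// Helper for building a command buffer: one cmd plus an optional argument.
void appendCommand(std::vector<uint8_t>& buf, uint32_t cmd, const TxModel* tr) {
    const auto* c = reinterpret_cast<const uint8_t*>(&cmd);
    buf.insert(buf.end(), c, c + sizeof cmd);
    if (tr) {
        const auto* t = reinterpret_cast<const uint8_t*>(tr);
        buf.insert(buf.end(), t, t + sizeof *tr);
    }
}
```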

static void binder_transaction(struct binder_proc *proc,

struct binder_thread *thread,

struct binder_transaction_data *tr, int reply)

{

struct binder_transaction *t;

struct binder_work *tcomplete;

binder_size_t *offp, *off_end;

binder_size_t off_min;

struct binder_proc *target_proc;

struct binder_thread *target_thread = NULL;

struct binder_node *target_node = NULL;

struct list_head *target_list;

wait_queue_head_t *target_wait;

struct binder_transaction *in_reply_to = NULL;

struct binder_transaction_log_entry *e;

uint32_t return_error;

e = binder_transaction_log_add(&binder_transaction_log);

e->call_type = reply ? 2 : !!(tr->flags & TF_ONE_WAY);

e->from_proc = proc->pid;

e->from_thread = thread->pid;

e->target_handle = tr->target.handle;

e->data_size = tr->data_size;

e->offsets_size = tr->offsets_size;

if (reply) {

...

} else {

if (tr->target.handle) {

struct binder_ref *ref;

//find the binder_ref from the handle, then the binder_node from the binder_ref

ref = binder_get_ref(proc, tr->target.handle);

target_node = ref->node;

} else {

target_node = binder_context_mgr_node;

}

e->to_node = target_node->debug_id;

// find the binder_proc from the binder_node

target_proc = target_node->proc;

...

}

if (target_thread) {

e->to_thread = target_thread->pid;

target_list = &target_thread->todo;

target_wait = &target_thread->wait;

} else {

//on the first call target_thread is NULL

target_list = &target_proc->todo;

target_wait = &target_proc->wait;

}

e->to_proc = target_proc->pid;

/* TODO: reuse incoming transaction for reply */

//create the binder_transaction

t = kzalloc(sizeof(*t), GFP_KERNEL);

if (t == NULL) {

return_error = BR_FAILED_REPLY;

goto err_alloc_t_failed;

}

binder_stats_created(BINDER_STAT_TRANSACTION);

tcomplete = kzalloc(sizeof(*tcomplete), GFP_KERNEL);

if (tcomplete == NULL) {

return_error = BR_FAILED_REPLY;

goto err_alloc_tcomplete_failed;

}

binder_stats_created(BINDER_STAT_TRANSACTION_COMPLETE);

t->debug_id = ++binder_last_id;

e->debug_id = t->debug_id;

...

//for non-oneway calls, save the current thread in the transaction's from field

if (!reply && !(tr->flags & TF_ONE_WAY))

t->from = thread;

else

t->from = NULL;

t->sender_euid = task_euid(proc->tsk);

t->to_proc = target_proc; //the target process of this call is system_server

t->to_thread = target_thread;

t->code = tr->code;

t->flags = tr->flags;

t->priority = task_nice(current);

trace_binder_transaction(reply, t, target_node);

t->buffer = binder_alloc_buf(target_proc, tr->data_size,

tr->offsets_size, !reply && (t->flags & TF_ONE_WAY));

if (t->buffer == NULL) {

return_error = BR_FAILED_REPLY;

goto err_binder_alloc_buf_failed;

}

t->buffer->allow_user_free = 0;

t->buffer->debug_id = t->debug_id;

t->buffer->transaction = t;

t->buffer->target_node = target_node;

trace_binder_transaction_alloc_buf(t->buffer);

if (target_node)

binder_inc_node(target_node, 1, 0, NULL);

offp = (binder_size_t *)(t->buffer->data +

ALIGN(tr->data_size, sizeof(void *)));

//copy ptr.buffer and ptr.offsets of the user-space binder_transaction_data into the target process's binder_buffer

if (copy_from_user(t->buffer->data, (const void __user *)(uintptr_t)

tr->data.ptr.buffer, tr->data_size)) {

return_error = BR_FAILED_REPLY;

goto err_copy_data_failed;

}

if (copy_from_user(offp, (const void __user *)(uintptr_t)

tr->data.ptr.offsets, tr->offsets_size)) {

return_error = BR_FAILED_REPLY;

goto err_copy_data_failed;

}

...

off_end = (void *)offp + tr->offsets_size;

off_min = 0;

for (; offp < off_end; offp++) {

struct flat_binder_object *fp;

if (*offp > t->buffer->data_size - sizeof(*fp) ||

*offp < off_min ||

t->buffer->data_size < sizeof(*fp) ||

!IS_ALIGNED(*offp, sizeof(u32))) {

return_error = BR_FAILED_REPLY;

goto err_bad_offset;

}

fp = (struct flat_binder_object *)(t->buffer->data + *offp);

off_min = *offp + sizeof(struct flat_binder_object);

switch (fp->type) {

//...

case BINDER_TYPE_HANDLE:

case BINDER_TYPE_WEAK_HANDLE: {

struct binder_ref *ref = binder_get_ref(proc, fp->handle);

if (ref == NULL) {

return_error = BR_FAILED_REPLY;

goto err_binder_get_ref_failed;

}

if (security_binder_transfer_binder(proc->tsk, target_proc->tsk)) {

return_error = BR_FAILED_REPLY;

goto err_binder_get_ref_failed;

}

if (ref->node->proc == target_proc) {

if (fp->type == BINDER_TYPE_HANDLE)

fp->type = BINDER_TYPE_BINDER;

else

fp->type = BINDER_TYPE_WEAK_BINDER;

fp->binder = ref->node->ptr;

fp->cookie = ref->node->cookie;

binder_inc_node(ref->node, fp->type == BINDER_TYPE_BINDER, 0, NULL);

trace_binder_transaction_ref_to_node(t, ref);

} else {

struct binder_ref *new_ref;

new_ref = binder_get_ref_for_node(target_proc, ref->node);

if (new_ref == NULL) {

return_error = BR_FAILED_REPLY;

goto err_binder_get_ref_for_node_failed;

}

fp->handle = new_ref->desc;

binder_inc_ref(new_ref, fp->type == BINDER_TYPE_HANDLE, NULL);

trace_binder_transaction_ref_to_ref(t, ref,

new_ref);

}

} break;

//...

default:

binder_user_error("%d:%d got transaction with invalid object type, %x\n",

proc->pid, thread->pid, fp->type);

return_error = BR_FAILED_REPLY;

goto err_bad_object_type;

}

}

if (reply) {

binder_pop_transaction(target_thread, in_reply_to);

} else if (!(t->flags & TF_ONE_WAY)) {

//BC_TRANSACTION and not oneway: set up the transaction stack

t->need_reply = 1;

t->from_parent = thread->transaction_stack;

thread->transaction_stack = t;

} else {

if (target_node->has_async_transaction) {

target_list = &target_node->async_todo;

target_wait = NULL;

} else

target_node->has_async_transaction = 1;

}

//add BINDER_WORK_TRANSACTION to the target queue, i.e. target_proc->todo

t->work.type = BINDER_WORK_TRANSACTION;

list_add_tail(&t->work.entry, target_list);

//add BINDER_WORK_TRANSACTION_COMPLETE to the current thread's queue, i.e. thread->todo

tcomplete->type = BINDER_WORK_TRANSACTION_COMPLETE;

list_add_tail(&tcomplete->entry, &thread->todo);

//wake up the wait queue; for this call it is target_proc->wait

if (target_wait)

wake_up_interruptible(target_wait);

return;

//...

}

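Main responsibilities:

1. Find the target process: handle -> binder_ref -> binder_node -> binder_proc

2. Add BINDER_WORK_TRANSACTION to the target queue target_list.

3. Copy the data:

Copy ptr.buffer and ptr.offsets of the user-space binder_transaction_data into the target process's binder_buffer->data.

This is where the "only one copy" property comes from. binder_buffer is allocated from memory that the target process has also mapped into its own user space, so this single copy_from_user is the only copy needed.

4. Set up the transaction stack:

For a BC_TRANSACTION that is not oneway, push the current transaction onto thread->transaction_stack.

5. Dispatch the transaction:

Add BINDER_WORK_TRANSACTION to the target queue (here target_proc->todo);

Add BINDER_WORK_TRANSACTION_COMPLETE to the current thread's thread->todo queue;

6. Wake up the target process target_proc so it starts processing the transaction.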
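Steps 1, 2, 4 and 5 can be condensed into a small model. All types here (`ProcModel`, `NodeModel`, `ThreadModel`) are hypothetical simplifications. There is no locking and no buffer allocation, and oneway calls always go to the process todo list rather than to a node's `async_todo`.

```cpp
#include <cassert>
#include <deque>
#include <map>
#include <string>

enum class Work { Transaction, TransactionComplete };

struct ProcModel {
    std::string name;
    std::deque<Work> todo;              // binder_proc.todo
};
struct NodeModel { ProcModel* proc; };  // binder_node: owned by exactly one process
struct ThreadModel {
    ProcModel* proc;
    std::deque<Work> todo;              // binder_thread.todo
    int transactionStackDepth = 0;      // stand-in for transaction_stack
};

// Sketch of the non-reply path of binder_transaction():
// handle -> binder_ref -> binder_node -> binder_proc, then queue the work items.
bool sendTransaction(ThreadModel& sender, int handle, bool oneWay,
                     const std::map<int, NodeModel*>& refsOfSender,
                     NodeModel* contextManager) {
    NodeModel* node = nullptr;
    if (handle == 0) {
        node = contextManager;                       // handle 0: servicemanager
    } else {
        auto it = refsOfSender.find(handle);         // binder_get_ref(proc, handle)
        if (it == refsOfSender.end()) return false;  // the real driver fails with BR_FAILED_REPLY
        node = it->second;                           // ref->node
    }
    ProcModel* target = node->proc;                   // node->proc
    if (!oneWay) ++sender.transactionStackDepth;      // two-way: push onto the sender's stack
    target->todo.push_back(Work::Transaction);        // BINDER_WORK_TRANSACTION -> target todo
    sender.todo.push_back(Work::TransactionComplete); // BINDER_WORK_TRANSACTION_COMPLETE -> own todo
    return true;                                      // the real driver now wakes target_wait
}
```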

Next comes binder_thread_read, which reads the data.

8.4 binder_thread_read

static int binder_thread_read(struct binder_proc *proc,

struct binder_thread *thread,

binder_uintptr_t binder_buffer, size_t size,

binder_size_t *consumed, int non_block)

{

void __user *buffer = (void __user *)(uintptr_t)binder_buffer;

void __user *ptr = buffer + *consumed;

void __user *end = buffer + size;

int ret = 0;

int wait_for_proc_work;

if (*consumed == 0) {

if (put_user(BR_NOOP, (uint32_t __user *)ptr))

return -EFAULT;

ptr += sizeof(uint32_t);

}

retry:

//binder_transaction() has made transaction_stack non-NULL, so wait_for_proc_work is false.

wait_for_proc_work = thread->transaction_stack == NULL &&

list_empty(&thread->todo);

//...

thread->looper |= BINDER_LOOPER_STATE_WAITING;

if (wait_for_proc_work)

proc->ready_threads++;

if (wait_for_proc_work) {

if (non_block) {

...

} else

//when the process todo queue is empty, sleep and wait

ret = wait_event_freezable_exclusive(proc->wait, binder_has_proc_work(proc, thread));

} else {

if (non_block) {

...

} else

//if the thread todo queue has data, continue; otherwise sleep and wait

ret = wait_event_freezable(thread->wait, binder_has_thread_work(thread)); //1

}

if (wait_for_proc_work)

proc->ready_threads--;

thread->looper &= ~BINDER_LOOPER_STATE_WAITING;

if (ret)

return ret;

while (1) {

uint32_t cmd;

struct binder_transaction_data tr;

struct binder_work *w;

struct binder_transaction *t = NULL;

//first take work from the thread's todo queue

if (!list_empty(&thread->todo)) {

w = list_first_entry(&thread->todo, struct binder_work,

entry);

// if the thread todo queue is empty, take work from the process todo queue

} else if (!list_empty(&proc->todo) && wait_for_proc_work) {

w = list_first_entry(&proc->todo, struct binder_work,

entry);

} else {

/* no data added */

//no data: go back to retry

if (ptr - buffer == 4 &&

!(thread->looper & BINDER_LOOPER_STATE_NEED_RETURN))

goto retry;

break;

}

if (end - ptr < sizeof(tr) + 4)

break;

switch (w->type) {

case BINDER_WORK_TRANSACTION: {

//get the transaction data

t = container_of(w, struct binder_transaction, work);

} break;

case BINDER_WORK_TRANSACTION_COMPLETE: {

cmd = BR_TRANSACTION_COMPLETE;

//write BR_TRANSACTION_COMPLETE to *ptr; t stays NULL, so the loop moves on to the next work item

if (put_user(cmd, (uint32_t __user *)ptr))

return -EFAULT;

ptr += sizeof(uint32_t);

binder_stat_br(proc, thread, cmd);

...

list_del(&w->entry);

kfree(w);

binder_stats_deleted(BINDER_STAT_TRANSACTION_COMPLETE);

} break;

case BINDER_WORK_NODE: ... break;

case BINDER_WORK_DEAD_BINDER:

case BINDER_WORK_DEAD_BINDER_AND_CLEAR:

case BINDER_WORK_CLEAR_DEATH_NOTIFICATION: ...break;

}

//only BINDER_WORK_TRANSACTION continues past this point

if (!t)

continue;

if (t->buffer->target_node) {

//get the target node

struct binder_node *target_node = t->buffer->target_node;

tr.target.ptr = target_node->ptr;

tr.cookie = target_node->cookie;

t->saved_priority = task_nice(current);

...

cmd = BR_TRANSACTION;//set the command to BR_TRANSACTION

} else {

tr.target.ptr = 0;

tr.cookie = 0;

cmd = BR_REPLY;//set the command to BR_REPLY

}

tr.code = t->code;

tr.flags = t->flags;

tr.sender_euid = from_kuid(current_user_ns(), t->sender_euid);

if (t->from) {

struct task_struct *sender = t->from->proc->tsk;

tr.sender_pid = task_tgid_nr_ns(sender,

task_active_pid_ns(current));

} else {

tr.sender_pid = 0;

}

tr.data_size = t->buffer->data_size;

tr.offsets_size = t->buffer->offsets_size;

tr.data.ptr.buffer = (binder_uintptr_t)(

(uintptr_t)t->buffer->data +

proc->user_buffer_offset);

tr.data.ptr.offsets = tr.data.ptr.buffer +

ALIGN(t->buffer->data_size,

sizeof(void *));

//write the cmd and data back to user space

if (put_user(cmd, (uint32_t __user *)ptr))

return -EFAULT;

ptr += sizeof(uint32_t);

if (copy_to_user(ptr, &tr, sizeof(tr)))

return -EFAULT;

ptr += sizeof(tr);

trace_binder_transaction_received(t);

binder_stat_br(proc, thread, cmd);

//...

list_del(&t->work.entry);

t->buffer->allow_user_free = 1;

if (cmd == BR_TRANSACTION && !(t->flags & TF_ONE_WAY)) {

t->to_parent = thread->transaction_stack;

t->to_thread = thread;

thread->transaction_stack = t;

} else {

t->buffer->transaction = NULL;

kfree(t);

binder_stats_deleted(BINDER_STAT_TRANSACTION);

}

break;

}

done:

*consumed = ptr - buffer;

if (proc->requested_threads + proc->ready_threads == 0 &&

proc->requested_threads_started < proc->max_threads &&

(thread->looper & (BINDER_LOOPER_STATE_REGISTERED |

BINDER_LOOPER_STATE_ENTERED)) /* the user-space code fails to */

/*spawn a new thread if we leave this out */) {

proc->requested_threads++;

binder_debug(BINDER_DEBUG_THREADS,

"%d:%d BR_SPAWN_LOOPER\n",

proc->pid, thread->pid);

// emit BR_SPAWN_LOOPER so user space creates a new thread

if (put_user(BR_SPAWN_LOOPER, (uint32_t __user *)buffer))

return -EFAULT;

binder_stat_br(proc, thread, BR_SPAWN_LOOPER);

}

return 0;

}

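As shown earlier, while queuing work to the target process, the driver also added BINDER_WORK_TRANSACTION_COMPLETE to the current thread's todo queue. This read therefore returns BR_TRANSACTION_COMPLETE to the calling user process.

Let's review waitForResponse once more: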

status_t IPCThreadState::waitForResponse(Parcel *reply, status_t *acquireResult)

{

uint32_t cmd;

int32_t err;

while (1) {

if ((err=talkWithDriver()) < NO_ERROR) break; //talkWithDriver

//...

cmd = (uint32_t)mIn.readInt32();

switch (cmd) {

case BR_TRANSACTION_COMPLETE:

if (!reply && !acquireResult) goto finish;

break;

case BR_DEAD_REPLY:

err = DEAD_OBJECT;

goto finish;

case BR_FAILED_REPLY:

err = FAILED_TRANSACTION;

goto finish;

case BR_ACQUIRE_RESULT:

...

goto finish;

case BR_REPLY:

{

...

}

goto finish;

default:

err = executeCommand(cmd);

if (err != NO_ERROR) goto finish;

break;

}

}

...

}

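For a non-oneway call, the communication only ends once the server replies. Receiving the BR_TRANSACTION_COMPLETE written back by the driver does not end it; the loop calls talkWithDriver again. This time mOut is empty, so only binder_thread_read runs, and it waits for the server's reply at comment 1 in that method.

9. References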
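The rule that decides which queue `binder_thread_read` serves can be sketched as follows. `WorkItem` and `nextCommand` are hypothetical. A returned `Br::None` stands for the point where the real code sleeps on `thread->wait` (comment 1) until the reply arrives.

```cpp
#include <cassert>
#include <deque>

// Hypothetical work item: a TRANSACTION_COMPLETE marker, or a transaction that
// either targets a node (an incoming call) or has no target node (a reply).
struct WorkItem {
    bool isComplete;     // BINDER_WORK_TRANSACTION_COMPLETE vs BINDER_WORK_TRANSACTION
    bool hasTargetNode;  // for transactions: set for calls, null for replies
};
enum class Br { None, TransactionComplete, Transaction, Reply };

// The thread's own todo list is served first. The process-wide list is used
// only when the thread has no transaction in flight (wait_for_proc_work).
Br nextCommand(std::deque<WorkItem>& threadTodo, std::deque<WorkItem>& procTodo,
               bool hasTransactionStack) {
    const bool waitForProcWork = !hasTransactionStack && threadTodo.empty();
    std::deque<WorkItem>* q = nullptr;
    if (!threadTodo.empty()) q = &threadTodo;
    else if (waitForProcWork && !procTodo.empty()) q = &procTodo;
    if (!q) return Br::None;  // nothing eligible: the real code would block here
    const WorkItem w = q->front();
    q->pop_front();
    if (w.isComplete) return Br::TransactionComplete;
    return w.hasTargetNode ? Br::Transaction : Br::Reply;  // BR_TRANSACTION vs BR_REPLY
}
```

For the client in this article, the first read yields `TransactionComplete`. Each later read blocks until the server's reply is queued on the client thread, and unrelated process-wide work is ignored while the call is in flight.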

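Binder Series: Introduction

A Thorough Understanding of the Android Binder Communication Architecture

The Essence of Android App Startup (Part 2)

The Binder Mechanism: The Story of One Response

Binder: From Initiating Communication to talkWithDriver()

I Hear You Know Binder Well: Let's Interview You on These Questions (Part 1)

ZygoteInit: From C to Java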

That concludes "Framework Series - Binder Communication Flow (Part 1)", collected and compiled by 饱满黑米.
