搜索爬行原理 In this tutorial, I’ll show you how to build a custom SitePoint search engine that far outdoes anything WordPress could ever put out. We’ll be using Diffbot as a service to extract structured data from SitePoint automatically, and this matching API client to do both the searching and crawling. 在本教程中,我将向您展示如何构建自定义SitePoint搜索引擎 ,该引擎远远超过了WordPress可以提供的任何功能。 我们将使用Diffbot作为服务来自动从SitePoint中提取结构化数据,并使用此匹配的API客户端来进行搜索和爬网。 I’ll also be using my trusty Homestead Improved environment for a clean project, so I can experiment in a VM that’s dedicated to this project and this project alone. 我还将在一个干净的项目中使用值得信赖的Homestead Improvementd环境,因此我可以在专门用于该项目和该项目的VM中进行实验。 To make a SitePoint search engine, we need to do the following: 要制作SitePoint搜索引擎,我们需要执行以下操作: Build a GUI for submitting search queries to the saved set produced by this crawljob. Searching is done via the Search API. We’ll do this in a followup post. 构建一个GUI,以将搜索查询提交给此crawljob生成的已保存集。 通过Search API完成搜索 。 我们将在后续帖子中进行介绍。 A Diffbot Crawljob does the following: Diffbot Crawljob执行以下操作: It spiders a URL pattern for URLs. This does not mean processing – it means looking for links to process on all the pages it can find, starting from the domain you originally passed in as seed. For the difference between crawling and processing, see here. 它搜寻URL的URL模式。 这并不意味着处理-这意味着寻找链接过程对所有能找到的网页,从您最初传递的种子域开始。 有关抓取和处理之间的区别,请参见此处 。 Jobs can be created through Diffbot’s GUI, but I find creating them via the crawl API is a more customizable experience. In an empty folder, let’s first install the client library. 可以通过Diffbot的GUI创建作业,但是我发现通过爬网API创建作业是一种更可定制的体验。 在一个空文件夹中,让我们首先安装客户端库。 I now need a 现在我需要一个 The Diffbot instance is used to create access points to API types offered by Diffbot. In our case, a “Crawl” type is needed. Let’s name it “sp_search”. Diffbot实例用于创建对Diffbot提供的API类型的访问点。 在我们的情况下,需要使用“爬网”类型。 我们将其命名为“ sp_search”。 This will create a new crawljob when the Then, we make it notify us when it’s done crawling, just so we know when a crawling round is complete, and we can expect up to date information to be in the dataset. 然后,我们让它在完成爬网时通知我们,以便我们知道爬网回合何时完成,并且我们可以期望最新信息出现在数据集中。 A site can have hundreds of thousands of links to spider, and hundreds of thousands of pages to process – the max limits are a cost-control mechanism, and in this case, I want the most detailed possible set available to me, so I’ll put in one million URLs into both values. 一个站点可以有成千上万的蜘蛛链接,以及成千上万的页面要处理-最大限制是一种成本控制机制,在这种情况下,我希望可以得到最详细的设置,因此将两个值都放入一百万个网址。 We also want this job to refresh every 24 hours, because we know SitePoint publishes several new posts every single day. It’s important to note that repeating means “from the time the last round has finished” – so if it takes a job 24 hours to finish, the new crawling round will actually start 48 hours from the start of the previous round. We’ll set max rounds as 0, to indicate we want this to repeat indefinitely. 我们也希望这项工作每24小时刷新一次,因为我们知道SitePoint每天都会发布多个新帖子。 重要的是要注意,重复的意思是“从上一轮结束时开始 ” –因此,如果需要完成24小时的工作,则新的抓取回合实际上将从上一回合开始48小时开始。 我们将max rounds设置为0,以表示我们希望它无限期地重复。 Finally, there’s the page processing pattern. When Diffbot processes pages during a crawl, only those that are processed – not crawled – are actually charged / counted towards your limit. It is, therefore, in our interest to be as specific as possible with our crawljob’s definition, as to avoid processing pages that aren’t articles – like author bios, ads, or even category listings. Looking for 最后,有页面处理模式。 当Diffbot在抓取过程中处理页面时,只有被处理的页面(而非被抓取的页面)才会实际收取/计入您的限额。 因此,为了我们的利益,请尽可能对爬虫的定义进行具体说明,以避免处理不是文章的页面,例如作者履历,广告或类别列表。 寻找 Before finishing up with the crawljob configuration, there’s just one more important parameter we need to add – the crawl pattern. When passing in a seed URL to the Crawl API, the Crawljob will traverse all subdomains as well. So if we pass in 在完成crawljob配置之前,我们需要添加一个更重要的参数-爬网模式。 当将种子URL传递给Crawl API时,Crawljob也将遍历所有子域。 因此,如果我们传递 Now we need to tell the job which API to use for processing. We could use the default – Analyze API – which would make Diffbot auto-determine the structure of the data we’re trying to obtain, but I prefer specificity and want it to know outright that it should only produce articles. 现在我们需要告诉作业要使用哪个API进行处理。 我们可以使用默认的Analyze API,这将使Diffbot自动确定我们要获取的数据的结构,但是我更喜欢具体性,希望它完全知道它只应生成文章。 Note that with the individual APIs (like Product, Article, Discussion, etc..) you can process individual resources even with the free demo token from Diffbot.com, which lets you test out your links and see what data they’ll return before diving into bulk processing via Crawlbot. For information on how to do this, see the README file. 请注意,使用单独的API(例如产品,文章,讨论等),即使使用Diffbot.com提供的免费演示令牌,您也可以处理单独的资源,该令牌可让您测试链接并查看它们将返回的数据通过Crawlbot进入批量处理。 有关如何执行此操作的信息,请参见README文件 。 The job is now configured, and we can 现在,已经配置了该作业,我们可以 The full code for creating this job is: 创建此作业的完整代码为: Calling this script via command line ( 通过命令行( It’ll take a while to finish (days, actually – SitePoint is a huge place), but all subsequent rounds will be faster because we told the job to only process pages it hasn’t encountered before. 要花一些时间(实际上,几天-SitePoint是一个很大的地方),但是随后的所有回合都将更快,因为我们告诉作业仅处理以前从未遇到过的页面。 To search a dataset, we need to use the Search API. A dataset can be used even before it’s complete – the Search API will simply search through the data it has, ignoring the fact that it doesn’t have everything. 要搜索数据集,我们需要使用Search API。 数据集甚至可以在数据完成之前就可以使用-Search API会简单地搜索其拥有的数据,而忽略了它不具备所有功能的事实。 To use the search API, one needs to create a new search instance with a search query as a constructor parameter: 要使用搜索API,需要使用搜索查询作为构造函数参数创建一个新的搜索实例: The The returned data can be iterated over, and every element will be an instance of Article. Here’s a rudimentary table exposing links and titles: 返回的数据可以进行迭代,并且每个元素都是Article的一个实例。 这是一张显示链接和标题的基本表格: The Search API can return some amazingly fine tuned result sets. The Search API可以返回一些非常精细的结果集。 We can also get the “meta” information about a Search API request by passing 我们还可以通过在进行原始调用后将 The result we get back is a SearchInfo object with the values as shown below (all accessible via getters): 我们返回的结果是一个SearchInfo对象,其值如下所示(均可通过getter访问): With SearchInfo, you get access to the speed of your request, the number of hits (not the returned results, but total number – useful for pagination), etc. 使用SearchInfo,您可以访问请求的速度,命中数(不是返回的结果,而是总数–对分页很有用)等。 To get information about a specific crawljob, like finding out its current status, or how many pages were crawled, processed, etc, we can call the 要获取有关特定爬网作业的信息(例如找出其当前状态或已爬网,处理了多少页等),我们可以再次调用 At this point, we’ve got our collection being populated with crawled data from SitePoint.com. Now all we have to do is build a GUI around the Search functionality of the Diffbot API client, and that’s exactly what we’re going to be doing in the next part. 至此,我们已经从SitePoint.com抓取的数据中填充了集合。 现在,我们要做的就是围绕Diffbot API客户端的“搜索”功能构建一个GUI,这正是我们接下来将要做的事情。 In this tutorial, we looked at Diffbot’s ability to generate collections of structured data from websites of arbitrary format, and its Search API which can be used as the search engine behind a crawled site. While the price might be somewhat over the top for the average solo developer, for teams and companies this tool is a godsend. 在本教程中,我们研究了Diffbot从任意格式的网站生成结构化数据集合的能力,以及其Search API(可以用作已爬网站点背后的搜索引擎)。 对于普通的单独开发人员来说,价格可能会高一些,但对于团队和公司而言,此工具是天赐之物。 Imagine being a media conglomerate with dozens or hundreds of different websites under your belt, and wanting a directory of all your content. Consolidating the efforts of all those backend teams to not only come up with a way to merge the databases but also find the time to do it in their daily efforts (which include keeping their outdated websites alive) would be an impossible and ultra expensive task, but with Diffbot, you unleash Crawlbot on all your domains and just use the Search API to traverse what was returned. What’s more, the data you crawl is downloadable in full as a JSON payload, so even if it gets too expensive, you can always import the data into your own solution later on. 想象一下,您是一家拥有数十个或数百个不同网站的媒体集团,并且想要一个包含所有内容的目录。 整合所有这些后端团队的工作,不仅想出一种方法来合并数据库,而且要花时间在日常工作中(包括让过时的网站保持活动),这将是不可能完成的,也是一项极其昂贵的任务,但是使用Diffbot,您可以在所有域上释放Crawlbot,而只需使用Search API遍历返回的内容。 而且,您抓取的数据可以作为JSON负载完全下载,因此,即使代价太高,您以后也可以随时将数据导入自己的解决方案中。 It’s important to note that not many websites agree with being crawled, so you should probably look at their terms of service before attempting it on a site you don’t own – crawls can rack up people’s server costs rather quickly, and by stealing their content for your personal use without approval, you also rob them of potential ad revenue and other streams of income connected with the site. 重要的是要注意,很少有网站同意进行爬网,因此在不属于自己的网站上尝试使用爬网之前,您可能应该先查看其服务条款-爬网会相当快地增加人们的服务器成本,并会窃取其内容未经批准而用于个人用途,您还会抢劫他们与网站相关的潜在广告收入和其他收入来源。 In part 2, we’ll look at how we can turn everything we’ve got so far into a GUI so that the average Joe can easily use it as an in-depth SitePoint search engine. 在第2部分中,我们将研究如何将到目前为止的所有内容转换为GUI,以便普通的Joe可以轻松地将其用作深入的SitePoint搜索引擎。 If you have any questions or comments, please leave them below! 如果您有任何疑问或意见,请留在下面! 翻译自: https://www.sitepoint.com/crawling-searching-entire-domains-diffbot/ 搜索爬行原理

什么啊 (What’s what?)

创建一个Crawljob (Creating a Crawljob)

composer require swader/diffbot-php-clientjob.php file into which I’ll just dump the job creation procedure, as per the README:job.php文件,按照README的说明 ,我将在其中转储作业创建过程: include 'vendor/autoload.php';

use SwaderDiffbotDiffbot;

$diffbot = new Diffbot('my_token');$job = $diffbot->crawl('sp_search');call() method is called. Next, we’ll need to configure the job. First, we need to give it the seed URL(s) on which to start the spidering process:call()方法时,这将创建一个新的crawljob。 接下来,我们需要配置作业。 首先,我们需要为其提供种子URL,以便在其上开始搜寻过程: $job

->setSeeds(['https://www.sitepoint.com'])$job

->setSeeds(['https://www.sitepoint.com'])

->notify('bruno.skvorc@sitepoint.com')$job

->setSeeds(['https://www.sitepoint.com'])

->notify('bruno.skvorc@sitepoint.com')

->setMaxToCrawl(1000000)

->setMaxToProcess(1000000)$job

->setSeeds(['https://www.sitepoint.com'])

->notify('bruno.skvorc@sitepoint.com')

->setMaxToCrawl(1000000)

->setMaxToProcess(1000000)

->setRepeat(1)

->setMaxRounds(0)<section class="article_body"> should do – every post has this. And of course, we want it to only process the pages it hasn’t encountered before in each new round – no need to extract the same data over and over again, it would just stack up expenses.<section class="article_body">应该可以做–每个帖子都有这个。 当然,我们希望它仅处理在每个新回合中从未遇到过的页面-无需一遍又一遍地提取相同的数据,这只会增加费用。 $job

->setSeeds(['https://www.sitepoint.com'])

->notify('bruno.skvorc@sitepoint.com')

->setMaxToCrawl(1000000)

->setMaxToProcess(1000000)

->setRepeat(1)

->setMaxRounds(0)

->setPageProcessPatterns(['<section class="article_body">'])

->setOnlyProcessIfNew(1)https://www.sitepoint.com, Crawlbot will look through http://community.sitepoint.com, and the now outdated http://reference.sitepoint.com – this is something we want to avoid, as it would slow our crawling process dramatically, and harvest stuff we don’t need (we don’t want the forums indexed right now). To set this up, we use the setUrlCrawlPatterns method, indicating that crawled links must start with sitepoint.com.https://www.sitepoint.com ,则Crawlbot将浏览http://community.sitepoint.com和现在过时的http://reference.sitepoint.com //reference.sitepoint.com-这是我们要避免的事情,因为这会极大地减慢我们的爬网过程,并收获不需要的东西(我们不希望现在对论坛建立索引)。 要进行设置,我们使用setUrlCrawlPatterns方法,该方法指示已爬网的链接必须以sitepoint.com开头。 $job

->setSeeds(['https://www.sitepoint.com'])

->notify('bruno.skvorc@sitepoint.com')

->setMaxToCrawl(1000000)

->setMaxToProcess(1000000)

->setRepeat(1)

->setMaxRounds(0)

->setPageProcessPatterns(['<section class="article_body">'])

->setOnlyProcessIfNew(1)

->setUrlCrawlPatterns(['^http://www.sitepoint.com', '^https://www.sitepoint.com'])$api = $diffbot->createArticleAPI('crawl')->setMeta(true)->setDiscussion(false);

$job->setApi($api);call() Diffbot with instructions on how to create it:call() Diffbot以及有关如何创建该作业的说明: $job->call();$diffbot = new Diffbot('my_token');

$job = $diffbot->crawl('sp_search');

$job

->setSeeds(['https://www.sitepoint.com'])

->notify('bruno.skvorc@sitepoint.com')

->setMaxToCrawl(1000000)

->setMaxToProcess(1000000)

->setRepeat(1)

->setMaxRounds(0)

->setPageProcessPatterns(['<section class="article_body">'])

->setOnlyProcessIfNew(1)

->setApi($diffbot->createArticleAPI('crawl')->setMeta(true)->setDiscussion(false))

->setUrlCrawlPatterns(['^http://www.sitepoint.com', '^https://www.sitepoint.com']);

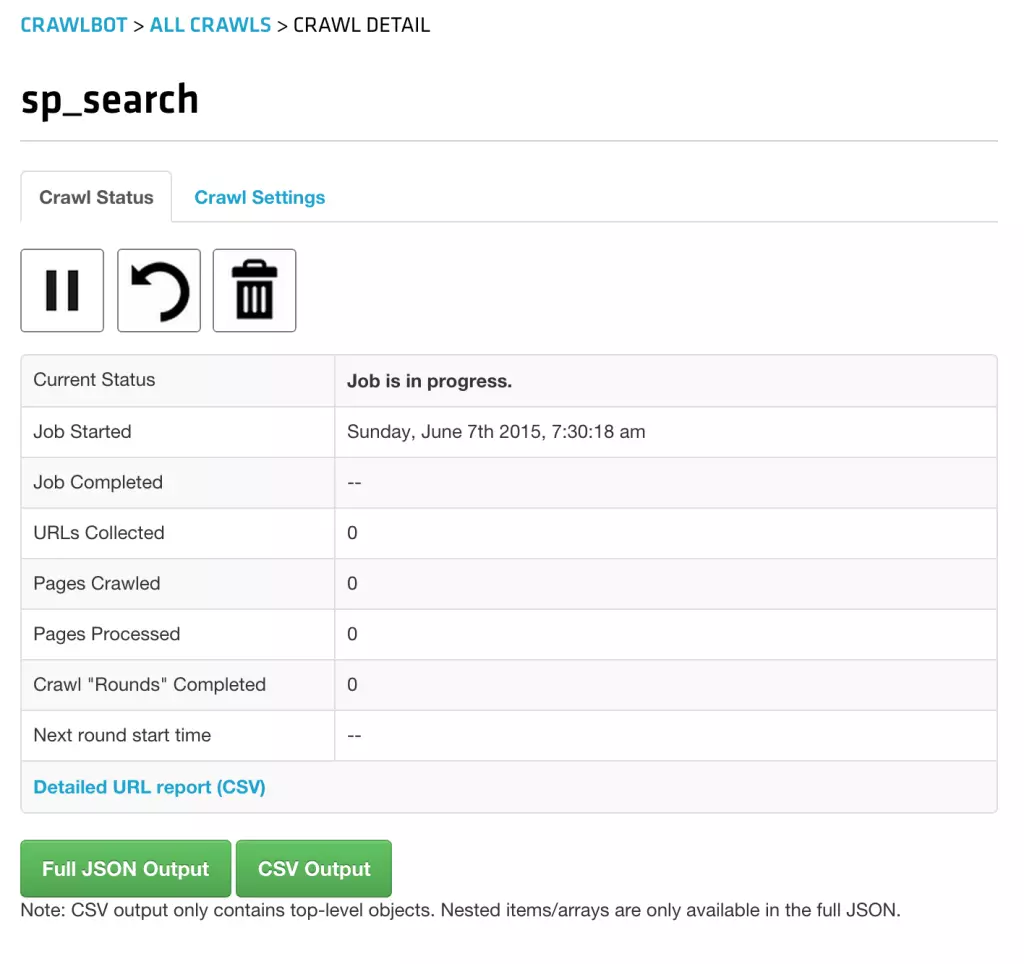

$job->call();php job.php) or opening it in the browser has created the job – it can be seen in the Crawlbot dev screen:php job.php )调用此脚本或在浏览器中打开该脚本即可创建该作业-可以在Crawlbot dev屏幕中看到:

正在搜寻 (Searching)

$search = $diffbot->search('author:"Bruno Skvorc"');

$search->setCol('sp_search');

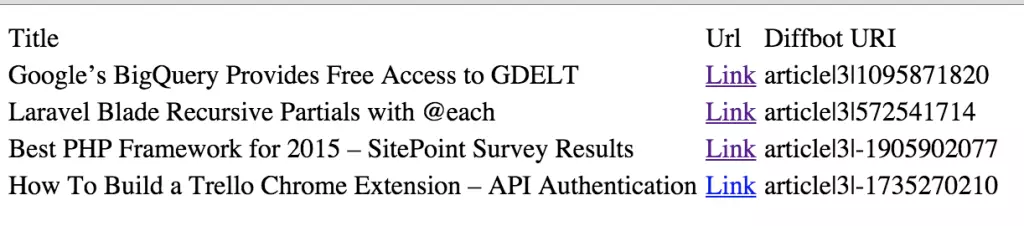

$result = $search->call();setCol method is optional, and if omitted will make the Search API go through all the collections under a single Diffbot token. As I have several collections from my previous experiments, I opted to specify the last one we created: sp_search (collections share names with the jobs that created them).setCol方法是可选的,如果省略,将使Search API在单个Diffbot令牌下遍历所有集合。 由于我以前的实验中有几个集合,因此我选择指定我们创建的最后一个集合: sp_search (集合与创建它们的作业共享名称)。 <table>

<thead>

<tr>

<td>Title</td>

<td>Url</td>

</tr>

</thead>

<tbody>

<?php

foreach ($search as $article) {

echo '<tr>';

echo '<td>' . $article->getTitle() . '</td>';

echo '<td><a href="' . $article->getResolvedPageUrl() . '">Link</a></td>';

echo '</tr>';

}

?>

</tbody>

</table>

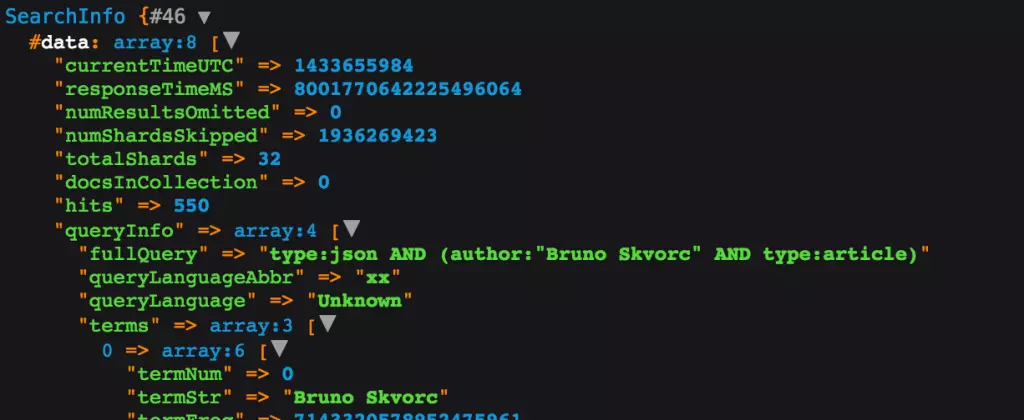

query param will accept everything from common keywords, to date ranges, to targeted specific fields (like title:diffbot) to boolean combinations of various parameters, like type:article AND title:robot AND (overlord OR butler), producing all articles that have the word “robot” in the title and either the word “overlord” or “butler” in any of the fields (title, body, meta tags, etc). We’ll be taking advantage of all this advanced functionality in the next post as we build our search engine’s GUI.query参数将接受所有内容,从通用关键字到日期范围,再到目标特定字段(如title:diffbot )再到各种参数的布尔组合,如type:article AND title:robot AND (overlord OR butler) ,生成所有标题中带有“ robot”一词,并且在任何字段(标题,正文,元标记等)中都有“ overlord”或“ butler”一词。 在构建搜索引擎的GUI时,我们将在下一篇文章中利用所有这些高级功能。 true into the call() after making the original call:true传递给call()获取有关Search API请求的“元”信息: $info = $search->call(true);

dump($info);

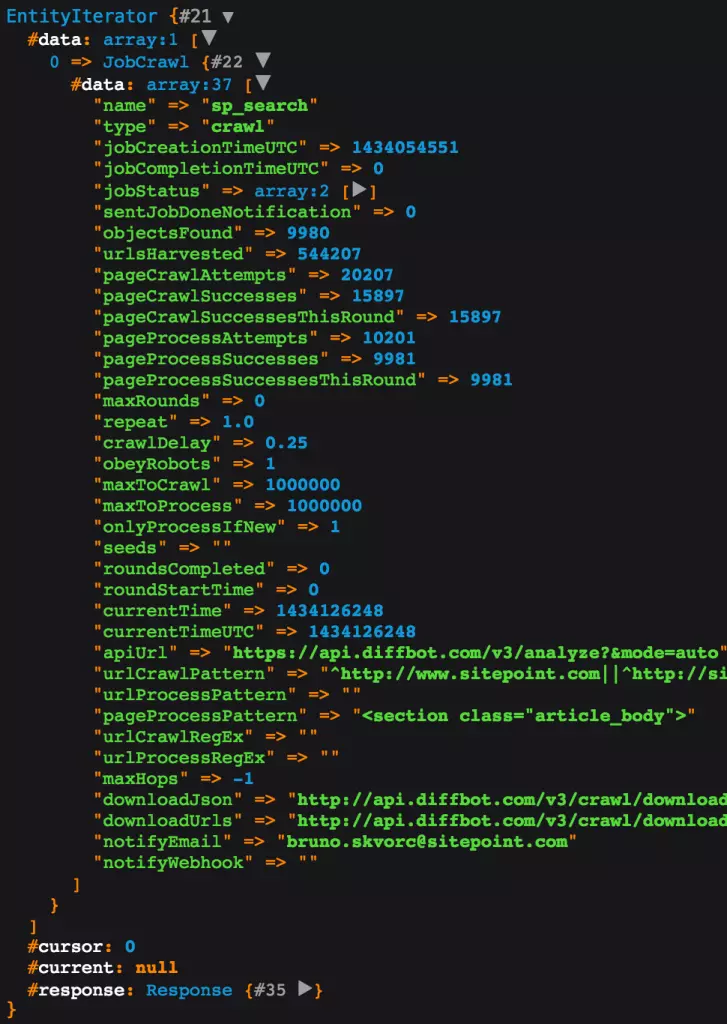

crawl API again and just pass in the same job name. This, then, works as a read only operation, returning all the meta info about our job:crawl API并传入相同的作业名称。 然后,这将作为只读操作,返回有关我们工作的所有元信息: dump($diffbot->crawl('sp_search')->call());

结论 (Conclusion)

最后

以上就是温柔蜡烛最近收集整理的关于搜索爬行原理_使用Diffbot爬行和搜索整个域的全部内容,更多相关搜索爬行原理_使用Diffbot爬行和搜索整个域内容请搜索靠谱客的其他文章。

发表评论 取消回复