Contents

- Preparation

- k-Nearest Neighbor classifier

- Inline Question

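I recently started working through CS231n. As before, I am recording the problems and key points from the programming work as I understand them.

Preparation

Comparing the CS231n offerings year by year, I found that the assignments have not changed since the Spring 2017 course, so these notes should apply to any of those years.

First, follow the setup instructions for the 2019 cs231n course to download the code and data. Then edit the notebooks with Jupyter Notebook.

As the saying goes, sharpening the axe does not delay the woodcutting. Before starting the assignment, it is worth spending a little time reading up on numpy and matplotlib. Link: Python Numpy Tutorial

This assignment has five parts: kNN, SVM, Softmax classifier, a two-layer neural network, and Higher Level Representations: Image Features.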

k-Nearest Neighbor classifier

Reading the knn notebook shows that it calls into k_nearest_neighbor.py. The first part is to compute, with two nested loops, the Euclidean distance from every test point to every training point.

The compute_distances_two_loops(self, X) function in k_nearest_neighbor.py

    for i in range(num_test):
        for j in range(num_train):
            #####################################################################
            # TODO:                                                             #
            # Compute the l2 distance between the ith test point and the jth    #
            # training point, and store the result in dists[i, j]. You should   #
            # not use a loop over dimension, nor use np.linalg.norm().          #
            #####################################################################
            # *****START OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****
            # Euclidean distance between the two row vectors, using numpy
            dists[i, j] = np.sqrt(np.sum(np.square(X[i] - self.X_train[j])))
            # *****END OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****

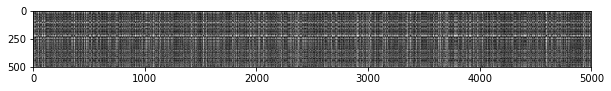

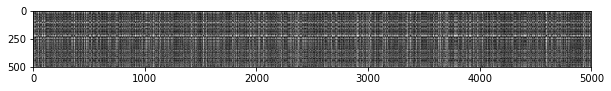

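With the numpy tutorial behind us, this can be written directly in numpy. I was a little lazy and squeezed it into one line, but I think it is still easy to follow: inside the two loops, take one row of the test set and one row of the training set, subtract them, square the differences, sum them, and take the square root. That is the Euclidean distance. The result of running it is shown in the figures.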
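The one-line formula can be tried on two small vectors. This is a minimal sketch; `x` and `y` are made-up stand-ins for `X[i]` and `self.X_train[j]`, and `np.linalg.norm` is used only to cross-check the result, not as part of the assignment solution.

```python
import numpy as np

x = np.array([1.0, 2.0, 3.0])  # stand-in for one test row X[i]
y = np.array([4.0, 6.0, 3.0])  # stand-in for one training row self.X_train[j]

diff = x - y                             # element-wise difference: [-3, -4, 0]
dist = np.sqrt(np.sum(np.square(diff)))  # sqrt(9 + 16 + 0)

print(dist)                                       # 5.0
print(np.isclose(dist, np.linalg.norm(x - y)))    # True
```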

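The inline questions embedded in the knn notebook are answered at the end of this post.

Reading further in the knn notebook, the second part is to implement the prediction function predict_labels().

Inside the for loop, first pick the indices of the k training points closest to the i-th test point and look up their labels in the training set. Then take the label that occurs most often among them as the prediction.

A few new functions are used here; see the comments for how they work.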

The predict_labels() function in k_nearest_neighbor.py [only the for loop is shown]

    for i in range(num_test):
        # A list of length k storing the labels of the k nearest neighbors to
        # the ith test point.
        closest_y = []
        #########################################################################
        # TODO:                                                                 #
        # Use the distance matrix to find the k nearest neighbors of the ith    #
        # testing point, and use self.y_train to find the labels of these       #
        # neighbors. Store these labels in closest_y.                           #
        # Hint: Look up the function numpy.argsort.                             #
        #########################################################################
        # *****START OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****
        # Indices of the k smallest distances in row i
        min_idxs = np.argsort(dists[i])[0:k]
        # Labels of those training points
        closest_y = self.y_train[min_idxs]
        # *****END OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****
        #########################################################################
        # TODO:                                                                 #
        # Now that you have found the labels of the k nearest neighbors, you    #
        # need to find the most common label in the list closest_y of labels.   #
        # Store this label in y_pred[i]. Break ties by choosing the smaller     #
        # label.                                                                #
        #########################################################################
        # *****START OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****
        # np.bincount() returns an array whose i-th entry is the number of
        # times the value i occurs in closest_y.
        # np.argmax() returns the index of the first largest entry, so ties
        # are broken in favor of the smaller label.
        y_pred[i] = np.argmax(np.bincount(closest_y))
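The argsort / bincount / argmax chain used in predict_labels() can be traced on toy data. The sketch below uses made-up distances and labels (`dists_row`, `y_train_toy` and the helper `vote` are illustrative names, not part of the assignment code) and also shows the tie case.

```python
import numpy as np

# Distances from one test point to 5 training points, and their labels
dists_row = np.array([0.9, 0.1, 0.5, 0.3, 0.7])
y_train_toy = np.array([2, 1, 1, 2, 0])

def vote(dists_row, labels, k):
    """Majority label among the k nearest training points."""
    min_idxs = np.argsort(dists_row)[:k]  # indices of the k smallest distances
    closest_y = labels[min_idxs]          # their labels
    counts = np.bincount(closest_y)       # counts[c] = occurrences of label c
    return np.argmax(counts)              # first maximum, i.e. smallest label on ties

print(vote(dists_row, y_train_toy, k=4))  # neighbors have labels [1, 2, 1, 0] -> 1
print(vote(dists_row, y_train_toy, k=2))  # labels [1, 2] tie -> smaller label 1
```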

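Next, compute the distances with a single loop. With the inner loop gone, each iteration has to operate on the whole of self.X_train at once, so the sum must state explicitly which axis it runs over.

For example, np.sum(X, axis=1) sums along each row and returns one value per row; np.sum(X, axis=0) sums down each column and returns one value per column.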
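A tiny made-up matrix makes the difference between the two axes concrete:

```python
import numpy as np

X = np.array([[1, 2, 3],
              [4, 5, 6]])

row_sums = np.sum(X, axis=1)  # one value per row:    6 and 15
col_sums = np.sum(X, axis=0)  # one value per column: 5, 7 and 9

print(row_sums.shape, col_sums.shape)  # (2,) (3,)
```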

The compute_distances_one_loop(self, X) function in k_nearest_neighbor.py [only the for loop is shown]

    for i in range(num_test):
        #######################################################################
        # TODO:                                                               #
        # Compute the l2 distance between the ith test point and all training #
        # points, and store the result in dists[i, :].                        #
        # Do not use np.linalg.norm().                                        #
        #######################################################################
        # *****START OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****
        # X[i, :] is broadcast against every row of self.X_train. Summing with
        # axis=1 collapses each row (one training point) to a single squared
        # distance, which is then square-rooted.
        dists[i, :] = np.sqrt(np.sum((X[i, :] - self.X_train) ** 2, axis=1))

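Next comes the fully vectorized version. Writing it feels different from MATLAB because numpy relies on broadcasting: when the shapes of two operands do not match, numpy virtually stretches the dimensions of size 1 (and any missing leading dimensions) so that the smaller array lines up with the larger one. For details see: https://www.runoob.com/numpy/numpy-broadcast.html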
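As a minimal illustration of broadcasting (the arrays here are made up), adding a column of shape (2, 1) to a row of shape (1, 3) yields a full (2, 3) grid, which is the same mechanism that lets a vector of per-test-point values and a vector of per-training-point values fill a whole distance matrix:

```python
import numpy as np

col = np.array([1.0, 2.0]).reshape(2, 1)          # shape (2, 1)
row = np.array([10.0, 20.0, 30.0]).reshape(1, 3)  # shape (1, 3)

grid = col + row  # each size-1 axis is stretched to match the other operand

print(grid.shape)  # (2, 3)
print(grid)        # rows: 11, 21, 31 and 12, 22, 32
```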

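The compute_distances_no_loops(self, X) function in k_nearest_neighbor.py

    # To get L2 distances without any loop, expand the squared difference
    # (x - y)^2 = x^2 + y^2 - 2xy and add up the three terms.
    # Watch the shapes in the matrix product.
    # reshape turns the two vectors of squared norms into a column and a row
    # so that broadcasting fills the whole (num_test, num_train) matrix.
    dists += np.sum(X ** 2, axis=1).reshape(num_test, 1)
    dists += np.sum(self.X_train ** 2, axis=1).reshape(1, num_train)
    dists -= 2 * np.dot(X, self.X_train.T)
    dists = np.sqrt(dists)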
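The expansion behind these three terms can be written out explicitly. The notation is introduced here for illustration: $x_i$ is the $i$-th test row of `X`, $y_j$ the $j$-th row of `self.X_train`, and $d$ indexes the feature dimension.

```latex
\begin{aligned}
\lVert x_i - y_j \rVert_2^2
  &= \sum_{d} \left( x_{id} - y_{jd} \right)^2 \\
  &= \sum_{d} x_{id}^2 + \sum_{d} y_{jd}^2 - 2 \sum_{d} x_{id}\, y_{jd} \\
  &= \lVert x_i \rVert_2^2 + \lVert y_j \rVert_2^2 - 2\, x_i^{\top} y_j
\end{aligned}
```

The first term depends only on $i$ (a column of shape `(num_test, 1)`), the second only on $j$ (a row of shape `(1, num_train)`), and the third is entry $(i, j)$ of `np.dot(X, self.X_train.T)`. Taking the square root of the sum gives `dists[i, j]`.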

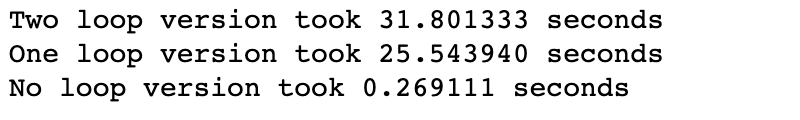

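Last comes the timing comparison of the three versions. The fully vectorized code is the fastest: it expresses the computation as linear algebra, and the libraries underneath are heavily optimized for that and can even run in parallel on multi-core CPUs.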
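A rough way to reproduce such a comparison outside the notebook is sketched below. Everything here is an assumption for illustration: the helper names (`time_function`, `dists_two_loops`, `dists_no_loops`), the random data, and the array sizes. The clamp with `np.maximum` is my addition to guard against tiny negative values from rounding; the actual timings depend on the machine.

```python
import time
import numpy as np

def time_function(f, *args):
    """Wall-clock seconds taken by a single call f(*args)."""
    tic = time.perf_counter()
    f(*args)
    return time.perf_counter() - tic

def dists_two_loops(X, X_train):
    dists = np.zeros((X.shape[0], X_train.shape[0]))
    for i in range(X.shape[0]):
        for j in range(X_train.shape[0]):
            dists[i, j] = np.sqrt(np.sum((X[i] - X_train[j]) ** 2))
    return dists

def dists_no_loops(X, X_train):
    sq = (np.sum(X ** 2, axis=1)[:, None]
          + np.sum(X_train ** 2, axis=1)[None, :]
          - 2 * X @ X_train.T)
    return np.sqrt(np.maximum(sq, 0.0))  # clamp rounding noise below zero

rng = np.random.default_rng(0)
X = rng.standard_normal((50, 64))         # 50 made-up test points
X_train = rng.standard_normal((200, 64))  # 200 made-up training points

t_loops = time_function(dists_two_loops, X, X_train)
t_vec = time_function(dists_no_loops, X, X_train)
same = np.allclose(dists_two_loops(X, X_train), dists_no_loops(X, X_train))
print(f"two loops: {t_loops:.4f}s, no loops: {t_vec:.4f}s, same result: {same}")
```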

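Back in the knn notebook, the next step is to test different values of k and see which works best. This part of the code is a little harder. The outer loop runs over every candidate k; for each k an inner loop runs num_folds times, each time holding out one fold of the data as the cross-validation set and using the remaining folds as the training set.

The code is as follows:

knn.ipynb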

    # *****START OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****
    # Split the training data into num_folds (5) folds
    X_train_folds = np.array_split(X_train, num_folds)
    y_train_folds = np.array_split(y_train, num_folds)
    # *****END OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****

    # (code already provided by the notebook is omitted here)

    # *****START OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****
    # Loop over every candidate k
    for k in k_choices:
        # Accuracies for this k, one entry per fold
        acc = []
        for i in range(num_folds):
            # Leave fold i out as the cv set. array_split returns lists,
            # so + joins the lists of remaining folds.
            X_tr = X_train_folds[0:i] + X_train_folds[i+1:]
            y_tr = y_train_folds[0:i] + y_train_folds[i+1:]
            # Stack the 4 remaining folds into one training set
            # (axis=0 is the default and could be omitted)
            X_tr = np.concatenate(X_tr, axis=0)
            y_tr = np.concatenate(y_tr, axis=0)
            # Fold i is the cv set
            X_cv = X_train_folds[i]
            y_cv = y_train_folds[i]
            classifier = KNearestNeighbor()
            # Store the training data
            classifier.train(X_tr, y_tr)
            # Distance matrix between the cv set and the training set
            dists = classifier.compute_distances_no_loops(X_cv)
            # Predict labels for the cv set
            y_cv_pred = classifier.predict_labels(dists, k=k)
            # Fraction of correct predictions
            accuracy = np.mean(y_cv_pred == y_cv)
            acc.append(accuracy)
        k_to_accuracies[k] = acc
    # *****END OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****
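The fold bookkeeping is easier to see on a toy array. In this sketch the data (`X_toy`, `y_toy`) and the choice of 3 folds are made up; it only demonstrates how `np.array_split` and list slicing hold out one fold.

```python
import numpy as np

X_toy = np.arange(12).reshape(6, 2)  # 6 samples, 2 features
y_toy = np.arange(6)                 # label i for sample i, to make tracking easy
num_folds = 3

X_folds = np.array_split(X_toy, num_folds)  # list of 3 arrays, each of shape (2, 2)
y_folds = np.array_split(y_toy, num_folds)

i = 1  # hold out the middle fold
X_tr = np.concatenate(X_folds[:i] + X_folds[i + 1:])
y_tr = np.concatenate(y_folds[:i] + y_folds[i + 1:])
X_cv, y_cv = X_folds[i], y_folds[i]

print(y_tr)  # samples 0, 1, 4, 5 are used for training
print(y_cv)  # samples 2, 3 are held out
```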

Inline Question

Inline Question 1

Notice the structured patterns in the distance matrix, where some rows or columns are visibly brighter. (Note that with the default color scheme black indicates low distances while white indicates high distances.)

- What in the data is the cause behind the distinctly bright rows?

- What causes the columns?

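My answer:

- A white point means that the corresponding test image and training image are very dissimilar. A white row means that one test image is far from most of the training images; a white column means that one training image is far from most of the test images.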
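The effect can be reproduced with synthetic data. In this sketch the random "images", their sizes, and the offset of 50 are all made up; one test point is pushed far away from everything else, and its row in the distance matrix ends up with by far the largest values.

```python
import numpy as np

rng = np.random.default_rng(0)
X_train = rng.standard_normal((20, 8))  # 20 made-up training "images"
X_test = rng.standard_normal((5, 8))    # 5 made-up test "images"
X_test[2] += 50.0                       # turn test image 2 into an outlier

# dists[i, j] = L2 distance between test image i and training image j
dists = np.sqrt(((X_test[:, None, :] - X_train[None, :, :]) ** 2).sum(axis=2))

row_means = dists.mean(axis=1)
print(np.argmax(row_means))  # 2: the outlier's row is the bright one
```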

Inline Question 2

We can also use other distance metrics such as L1 distance.

For pixel values $p_{ij}^{(k)}$ at location $(i,j)$ of some image $I_k$, the mean $\mu$ across all pixels over all images is

$$\mu=\frac{1}{nhw}\sum_{k=1}^n\sum_{i=1}^{h}\sum_{j=1}^{w}p_{ij}^{(k)}$$

And the pixel-wise mean $\mu_{ij}$ across all images is

$$\mu_{ij}=\frac{1}{n}\sum_{k=1}^np_{ij}^{(k)}.$$

The general standard deviation $\sigma$ and pixel-wise standard deviation $\sigma_{ij}$ are defined similarly.

Which of the following preprocessing steps will not change the performance of a Nearest Neighbor classifier that uses L1 distance? Select all that apply.

- Subtracting the mean $\mu$ ($\tilde{p}_{ij}^{(k)}=p_{ij}^{(k)}-\mu$.)

- Subtracting the per pixel mean $\mu_{ij}$ ($\tilde{p}_{ij}^{(k)}=p_{ij}^{(k)}-\mu_{ij}$.)

- Subtracting the mean $\mu$ and dividing by the standard deviation $\sigma$.

- Subtracting the pixel-wise mean $\mu_{ij}$ and dividing by the pixel-wise standard deviation $\sigma_{ij}$.

- Rotating the coordinate axes of the data.

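Your Answer: (my own understanding; corrections are welcome)

1, 2, 3

Your Explanation:

1: Every pixel of every image has the same mean $\mu$ subtracted, so the difference between any two images, and therefore every L1 distance, is unchanged. Performance does not change.

2: The per-pixel mean $\mu_{ij}$ depends on the pixel location but is the same for every image, so it cancels when two images are subtracted: $|(p_{ij}^{(a)}-\mu_{ij})-(p_{ij}^{(b)}-\mu_{ij})|=|p_{ij}^{(a)}-p_{ij}^{(b)}|$. L1 distances are unchanged, so performance does not change.

3: As in 1, subtracting the same mean changes nothing, and dividing by the same standard deviation $\sigma$ scales every distance by the same factor $1/\sigma$. The ranking of the neighbors is preserved, so L1 performance is not affected.

4: Dividing by the pixel-wise standard deviation $\sigma_{ij}$ rescales each pixel by a different factor, so the ranking of the neighbors, and with it the performance, can change.

5: When the coordinate axes are rotated, L2 distances are preserved but L1 distances generally are not, so performance can change. Reference article: link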
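One way to sanity-check these options is numerically. The sketch below uses a tiny made-up dataset with 2 pixels per "image"; the arrays, the helper `l1_nearest`, and the 45-degree rotation are illustrative assumptions. It compares the L1 nearest neighbor before and after each preprocessing step. A single example can only show that a step may change the result; for the steps that leave it unchanged, the underlying reason is that a shift shared by all images cancels in the difference of two images, and a single global scale factor rescales all distances equally.

```python
import numpy as np

def l1_nearest(train, test):
    """Index of the L1-nearest training row for each test row."""
    dists = np.abs(test[:, None, :] - train[None, :, :]).sum(axis=2)
    return np.argmin(dists, axis=1)

# Made-up data: 4 training "images" with 2 pixels each, and 1 test image
train = np.array([[3.0, 0.0], [2.0, 2.0], [0.0, 100.0], [0.0, -100.0]])
test = np.array([[0.0, 0.0]])

mu, sigma = train.mean(), train.std()                    # global statistics
mu_ij, sigma_ij = train.mean(axis=0), train.std(axis=0)  # per-pixel statistics
theta = np.pi / 4                                        # rotate the axes by 45 degrees
R = np.array([[np.cos(theta), -np.sin(theta)],
              [np.sin(theta),  np.cos(theta)]])

baseline = l1_nearest(train, test)
options = {
    1: (train - mu, test - mu),
    2: (train - mu_ij, test - mu_ij),
    3: ((train - mu) / sigma, (test - mu) / sigma),
    4: ((train - mu_ij) / sigma_ij, (test - mu_ij) / sigma_ij),
    5: (train @ R.T, test @ R.T),
}
unchanged = {opt: bool(np.array_equal(l1_nearest(tr, te), baseline))
             for opt, (tr, te) in options.items()}
print(unchanged)  # {1: True, 2: True, 3: True, 4: False, 5: False}
```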

Inline Question 3

Which of the following statements about $k$-Nearest Neighbor ($k$-NN) are true in a classification setting, and for all $k$? Select all that apply.

- The decision boundary of the k-NN classifier is linear.

- The training error of a 1-NN will always be lower than that of 5-NN.

- The test error of a 1-NN will always be lower than that of a 5-NN.

- The time needed to classify a test example with the k-NN classifier grows with the size of the training set.

- None of the above.

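Your Answer:

4

Your Explanation:

1: Clearly the decision boundary is not linear in general.

2: Not necessarily. With distinct training points, every training point is its own nearest neighbor, so the training error of 1-NN is 0 and can never be higher than that of 5-NN. The two can be equal, though, so "always lower" does not hold.

3: Not necessarily. Which k gives the lower test error depends on the data.

4: True. Classifying a test example means comparing it against the stored training examples, so the larger the training set, the longer it takes.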
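Statements 2 and 4 can be probed with a small brute-force classifier. This is a sketch on made-up 1-D data; `knn_predict` is a hypothetical helper, not the assignment's `KNearestNeighbor` class. Here 1-NN has zero training error while 5-NN does not. If all labels were identical, both errors would be 0, which is the equal case. The helper also computes one distance per training point for each query, which is why prediction time grows with the size of the training set.

```python
import numpy as np

def knn_predict(X_train, y_train, X, k):
    """Brute-force k-NN: L2 distance to every training point, majority vote."""
    dists = np.sqrt(((X[:, None, :] - X_train[None, :, :]) ** 2).sum(axis=2))
    nearest = np.argsort(dists, axis=1)[:, :k]
    return np.array([np.argmax(np.bincount(y_train[idx])) for idx in nearest])

# Six distinct points on a line; only the point at x = 2 has label 1
X_train = np.arange(6, dtype=float).reshape(-1, 1)
y_train = np.array([0, 0, 1, 0, 0, 0])

err_1nn = np.mean(knn_predict(X_train, y_train, X_train, k=1) != y_train)
err_5nn = np.mean(knn_predict(X_train, y_train, X_train, k=5) != y_train)

print(err_1nn)  # 0.0: each point is its own nearest neighbor
print(err_5nn)  # 1/6: the lone label-1 point is outvoted
```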

References

Solutions to Stanford’s CS 231n Assignments 1 Inline Problems: KNN

Reference code article 1

Reference code article 2
