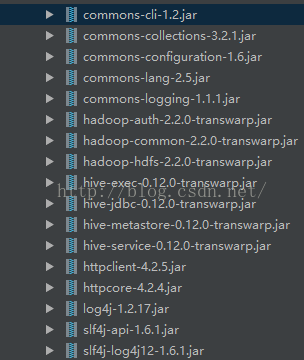

1.相关jar包

2.一些需要的参数定义在message.properties中

jdbcHiveUrl=jdbc:hive2://10.1.9.91:10000 //hive地址

jdbcHiveDriver=org.apache.hive.jdbc.HiveDriver // hive驱动

jdbc_mysql_driver=com.mysql.jdbc.Driver //MySQL驱动

hiveUser=hive //hive用户名

hivePwd=123456 //hive密码

hiveType=star

hiveDbName=default

mapred.reduce.tasks=1

sqoopServerUrl=http://10.1.9.91:12000/sqoop/ //sqoop服务器地址

##polling interval time init(ms)

polling_interval_time = 86400000 //定时的时间间隔,参照上一篇

#Project start day hour:min:second

polling_start_time = 10:54:10 //定时的启动时间

outputFormat=TEXT_FILE

storageType=HDFS

sqoopOutput = /user/outputHive/

HDFSUrl =

export_target_database_url=jdbc:mysql://10.1.35.13:3306/tsinghuadaxue //MySQL数据库地址

export_target_database_username=root //MySQL用户名

export_target_database_password=root //MySQL用户密码

package com.scheduler.service.impl;

import java.io.IOException;

import java.sql.*;

import java.text.ParseException;

import java.text.SimpleDateFormat;

import java.util.*;

import java.util.Date;

import com.scheduler.service.JDBCService;

import com.scheduler.util.*;

import org.apache.sqoop.submission.SubmissionStatus;

import com.scheduler.service.HiveService;

import org.apache.commons.lang3.StringUtils;

import org.apache.log4j.Logger;

import org.apache.sqoop.client.SqoopClient;

import org.apache.sqoop.client.SubmissionCallback;

import org.apache.sqoop.model.*;

import org.apache.sqoop.validation.Status;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.stereotype.Service;

import com.mysql.jdbc.Statement;

import com.scheduler.service.DataSynMysqlAndHive;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.FileSystem;

import org.apache.hadoop.fs.LocatedFileStatus;

import org.apache.hadoop.fs.Path;

import org.apache.sqoop.model.MConnection;

import org.apache.sqoop.model.MConnectionForms;

import org.apache.sqoop.model.MJob;

import org.apache.sqoop.model.MJobForms;

import org.apache.sqoop.model.MSubmission;

import org.apache.sqoop.submission.counter.Counter;

import org.apache.sqoop.submission.counter.CounterGroup;

import org.apache.sqoop.submission.counter.Counters;

@Service("DataSynMysqlAndHiveImpl")

public class DataSynMysqlAndHiveImpl implements DataSynMysqlAndHive {

protected Logger log = Logger.getLogger(DataSynMysqlAndHiveImpl.class);

private static String jdbcHiveDriver = Messages.getString("jdbcHiveDriver");

private static String jdbcHiveUrl = Messages.getString("jdbcHiveUrl");

private static String hiveUser = Messages.getString("hiveUser");

private static String hivePwd = Messages.getString("hivePwd");

private static String exportDatabase = Messages.getString("export_target_database_url");

private static String exportUsername = Messages.getString("export_target_database_username");

private static String exportPassword = Messages.getString("export_target_database_password");

private static String jdbcMysqlDriver = Messages.getString("jdbc_mysql_driver");

private static String pollingStartTime = Messages.getString("polling_start_time");

private static SimpleDateFormat yMd = new SimpleDateFormat("yyyy-MM-dd");

private static SimpleDateFormat yMdHms = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss");

private static Date polling_start_time = null; //轮询开始时间

private static String sqoopServerUrl = Messages.getString("sqoopServerUrl");

private SqoopClient sqoopClient;// sqoop客户端对象

@Autowired

private JDBCService jdbcService; // 增加JDBC服务

@Autowired

private HiveService hfs;

@Override

public String exportHiveData(String tableName) {

String flag = "success";

try {

Class.forName(jdbcHiveDriver);

} catch (ClassNotFoundException e) {

flag = "error";

e.printStackTrace();

log.error("hive链接出错", e);

}

//获取当天时间以及前一天的时间

Date nowDate = new Date();

Calendar calendar = Calendar.getInstance();

calendar.setTime(nowDate);

calendar.add(Calendar.DAY_OF_MONTH, -1);

Date predate = calendar.getTime();

SimpleDateFormat dateFormat = new SimpleDateFormat("yyyy-MM-dd");

String predateString = dateFormat.format(predate) + " " + pollingStartTime;

String nowdateString = dateFormat.format(nowDate) + " " + pollingStartTime;

String sql = "select * from " + tableName + " where resource_flag = 1 and create_time <= '" + nowdateString +"' and create_time >'" + predateString +"'";

log.info("sql:" + sql);

System.out.println("sql:" + sql);

try {

Connection con = DriverManager.getConnection(jdbcHiveUrl, hiveUser,

hivePwd);

java.sql.Statement stmt = con.createStatement();

ResultSet resultSet = stmt.executeQuery(sql);

if (resultSet.next()) {//如果查询hive有数据则进行更新,如果没有数据那么不更新

String exportSql = generateExportSql(sql,tableName);

ResultSet set = stmt.executeQuery(exportSql);

System.out.println("导出sql为:"+exportSql);

if (set.next()) {

int result = set.getInt(1);

if (result == 1) {

flag = "error";

}

}

}

closeConnection(con, stmt, resultSet);

} catch (SQLException e) {

e.printStackTrace();

flag = "error";

}

return flag;

}

/**

* @param sql

* @param tableName

* @return

*/

private String generateExportSql(String selectSql, String tableName) {

//拼接sql,使用udf函数导出

StringBuffer buffer = new StringBuffer();

buffer.append("select dboutput('");

buffer.append(exportDatabase);

buffer.append("','");

buffer.append(exportUsername);

buffer.append("','");

buffer.append(exportPassword);

buffer.append("','");

//定义数据库链接

Connection conn = null;

//定义数据库查询结果劫

ResultSet rs = null;

try {

//设置编码

/*if (exportDatabase.contains("jdbc:mysql") && !exportDatabase.contains("characterEncoding")) {

exportDatabase = exportDatabase + "?characterEncoding=UTF-8";//设置utf-8编码

}*/

//获取数据库链接

conn=getConnection(jdbcMysqlDriver, exportDatabase, exportUsername, exportPassword);

//获取结果

rs=conn.getMetaData().getColumns(null, null, tableName, null);

//循环获取所有结果

String columnNames = "";

String value = "";

while(rs.next()){

if (!StringUtils.equals("id", rs.getString("COLUMN_NAME"))) {

columnNames = columnNames + rs.getString("COLUMN_NAME") + ",";

value = value + "?,";

}

}

columnNames = columnNames.substring(0, columnNames.length()-1);

value = value.substring(0, value.length()-1);

String insertSql = "insert into " + tableName + "(" + columnNames +") values(" +value + ")";

buffer.append(insertSql+"',");

buffer.append(columnNames);

buffer.append(") from ");

buffer.append("("+selectSql.replace("*", columnNames)+")");

} catch (Exception e) {

e.printStackTrace();

}

closeConnection(conn, null, rs);

System.out.println("导出的sql为:"+buffer.toString());

return buffer.toString();

}

public void closeConnection(Connection connection, java.sql.Statement pStatement, ResultSet resultSet){

try {

if (resultSet != null) {

resultSet.close();

}

if (pStatement != null) {

pStatement.close();

}

if (connection != null) {

connection.close();

}

} catch (Exception e) {

e.printStackTrace();

}

}

public Connection getConnection(String driver, String url, String userName,

String password) {

//定义链接

Connection connection = null;

//加载数据库驱动

try {

Class.forName(driver);

} catch (ClassNotFoundException e) {

e.printStackTrace();

System.out.println("The Driver loaded error,please contact to your Software Designer!");

}

//得到数据库链接

try {

Properties props =new Properties();

props.put("remarksReporting","true");

props.put("user", userName);

props.put("password", password);

connection = DriverManager.getConnection(url, props);

//connection = DriverManager.getConnection(url, userName, password);

} catch (SQLException e) {

e.printStackTrace();

}

return connection;

}

/**

* <p>

* Description:[mysql向hive中导入]

*

*/

@Override

public String importHiveData(String sourceTableName) {

//判断有没有数据更新

try {

Date nowTime = yMdHms.parse(yMdHms.format(new Date()));

//前一天时间

String preDate = yMdHms.format(TimeHelper.dateAddDay(nowTime,-1));

//

Timestamp aftTimestamp = getAfterMaxTimestamp(sourceTableName,preDate,"create_time");

if (null == aftTimestamp ){

return "检测没有新数据";

}

} catch (ParseException e) {

e.printStackTrace();

}

//定义全局变量监控抽取过程是否出现错误

boolean hasError = false;

//1.初始化sqoop客户端并且得到sqoop连接

MConnection con =initSqoop();

//如果得到的连接为空,打印日志,结束该任务

if (con == null) {

System.out.print("连接为空");

return "error";

}

//2.创建sqoop任务,任务类型为导入任务

MJob newjob = sqoopClient.newJob(con.getPersistenceId(),org.apache.sqoop.model.MJob.Type.IMPORT);

CallBack callback = new CallBack(sourceTableName);

//获取该表的表信息

List<TableVO> tableVOs = jdbcService.getTables(exportDatabase, exportUsername, exportPassword, null, null, sourceTableName, null);

//获取该表的列信息

List<ColumnVO> columnVOs = jdbcService.getColumns(exportDatabase, exportUsername, exportPassword, sourceTableName);

boolean isFirst = true;

String primaryKey = jdbcService.getPrimaryKey(exportDatabase,exportUsername,exportPassword,null,null,sourceTableName);

String hdfsFilePath= "";

hdfsFilePath=updateIncrementSqoopJob(newjob,sourceTableName,columnVOs);

//启用线程监控sqoop采集时长

Thread thread = monitorRuntime(sqoopClient,3*60*60,newjob);

//定义任务开始时间变量

long startTime = System.currentTimeMillis();

//开始sqoop任务采集,并返回sqoop任务采集状态

MSubmission submission = startSqoopTask(0,newjob,thread,callback);

//将sqoop导入时间字段添加到column中

columnVOs=addSqoopTimeColumn(columnVOs);

if (submission.getStatus().compareTo(SubmissionStatus.SUCCEEDED) == 0) {// 任务执行成功,则把数据写入到hive中

hasError=createOrcHiveAfterSqoop(sourceTableName,columnVOs, hdfsFilePath, startTime, startTime, false);

}

if (submission.getStatus().compareTo(SubmissionStatus.FAILED) == 0|| submission.getExceptionInfo() != null) {// 任务执行出错,打印出错信息,并记录到任务日志中

System.out.println(submission.getExceptionInfo());

//出现错误,记录日志,删除hdfs文件

addLogCaseSqoopFail(submission,newjob,hdfsFilePath,thread);

//标记发生错误

hasError = true;

return "error";

}

//afterFinishTask(hasError);

return "success";

}

/**

* <p>

* Description:[初始化sqoop客户端,得到sqoop链接]

* </p>

* @return MConnection sqoop连接

*/

public MConnection initSqoop(){

//初始化客户端

this.sqoopClient = new SqoopClient(sqoopServerUrl);

//获取该数据源的sqoop链接id

Long conId = createSqoopConnection("zheda",exportDatabase,exportUsername,exportPassword,jdbcMysqlDriver);

//根据sqoop xid 获得链接

MConnection con =sqoopClient.getConnection(conId);

//将该链接返回

return con;

}

public long createSqoopConnection(String resourceName, String jdbcUrl,

String name, String passwd, String driver) {

SqoopClient sqoopClient = new SqoopClient(Messages.getString("sqoopServerUrl"));

MConnection newCon = sqoopClient.newConnection(1);

MConnectionForms conForms = newCon.getConnectorPart();

MConnectionForms frameworkForms = newCon.getFrameworkPart();

newCon.setName(resourceName);

conForms.getStringInput("connection.connectionString").setValue(jdbcUrl);// 数据库连接url字符串

conForms.getStringInput("connection.jdbcDriver").setValue(driver);// 数据库驱动

conForms.getStringInput("connection.username").setValue(name);// 数据库用户名

conForms.getStringInput("connection.password").setValue(passwd);// 数据库密码

frameworkForms.getIntegerInput("security.maxConnections").setValue(0);// sqoop的最大连接数

try {

Status status = sqoopClient.createConnection(newCon);

if (status.canProceed()) {

return newCon.getPersistenceId();

} else {

log.info("Check for status and forms error ");

System.out.println("Check for status and forms error ");

return -1;

}

} catch (Exception e) {

log.error("创建连接出错!:"+e.getMessage());

System.out.println(e.getMessage());

return -1;

}

}

/**

* <p>

* Description:[初始化sqoop客户端,得到sqoop链接]

* </p>

*

*/

// sqoop任务执行回调内部类

class CallBack implements SubmissionCallback {

private String tableName;

public String getTableName() {

return tableName;

}

public void setTableName(String tableName) {

this.tableName = tableName;

}

public CallBack() {

super();

}

public CallBack(String tableName){

super();

this.tableName= tableName;

}

@Override

public void submitted(MSubmission mSubmission) {

}

@Override

public void updated(MSubmission mSubmission) {

}

// sqoop任务完成回调函数

@Override

public void finished(MSubmission arg0) {

}

}

/**

* <p>

* Description:[启用线程监控sqoop任务执行时长,如果超过执行时长,停止执行该任务]

* </p>

*

* @param SqoopClient sqoop客户端

* @param int 任务执行时长

* @param final long sqoop任务Id

* @return Thread 当前的监控线程

*/

public Thread monitorRuntime(SqoopClient sqc,int taskTime,final MJob sJob){

//获取监听时间,如果没有指定监听时间,默认为24小时

final int job_timeout_time = taskTime != 0 ? taskTime :20;

// 启用一个线程,用于监听sqoop执行任务的时间,如果时间超过最大执行时间,则停止掉该任务

Thread thread = new Thread(new Runnable() {

@Override

public void run() {

try {

//监听任务执行时长,如果超过最大时间,停掉sqoop任务

Thread.sleep(job_timeout_time * 60 * 60 * 1000);

sqoopClient.stopSubmission(sJob.getPersistenceId());

} catch (InterruptedException e) {

log.error("sqoop全量任务发生异常!",e);

}

}

});

thread.start();

//将该线程返回

return thread;

}

/**

* <p>

* Description:[任务采集后,根据原表中的字段信息以及hdfs文件地址创建hive表]

* </p>

*

* @param tableName 表名称

* @param columnVOs 表字段

* @param hdfsPath hdfs文件地址

* @return boolean 是否创建成功

*/

public boolean createHiveTable(String tableName,List<ColumnVO> columnVOs,String hdfsPath){

boolean hasError = false;

//组装sql

StringBuffer createSql = new StringBuffer("create table " + tableName + "(");

for (int i = 0; i < columnVOs.size(); i++) {

if (i == 0) {

createSql.append("`" + columnVOs.get(i).getColumnName()+ "` string");

} else {

createSql.append(",`"+ columnVOs.get(i).getColumnName()+ "` string");

}

}

createSql.append(") ROW FORMAT DELIMITED FIELDS TERMINATED BY '�01' LOCATION ");

createSql.append(" '" + hdfsPath + "'");

log.info("createSql:" + createSql);

String sql = createSql.toString().trim();

//创建表

try {

boolean success = hfs.createHiveTable(tableName, sql);

//如果返回的结果有错误,则标记hive创建出现错误

if(!success){

hasError = true;

}

} catch (Exception e) {

e.printStackTrace();

hasError =true;

}

//返回结果

return hasError;

}

/**

* <p>

* Description:[hive表创建失败后,记录日志并且删除对应的hdfs文件]

* </p>

*

* @param tableName 表名称

* @param hdfsPath hdfs文件地址

* @param jobId sqoopJobid

*/

public void addLogCaseCreatehiveTableError(String tableName,String hdfsPath,long jobId){

//记录日志,

//addTaskLog("create hiveTable "+tableName+" failed!", jobId);

//删除hdfs文件

deleteHdfsHiveTable(hdfsPath,tableName);

}

/**

* <p>

* Description:[启动sqoop采集任务]

* </p>

* @param loopTime 任务执行次数标识,用于判断创建或者更新任务

* @param newjob sqoopJob实体

* @param Thread 监控任务执行时长的线程

* @param callback sqoop回调类

* @return MSubmission Sqoop提交结果

*/

public MSubmission startSqoopTask(int loopTime,MJob newjob,Thread thread,CallBack callback){

MSubmission submission= null;

//第一次执行,则创建新的任务,否则,更新任务

if (loopTime == 0) {

sqoopClient.createJob(newjob);

} else {

sqoopClient.updateJob(newjob);

}

//执行sqoop任务

try {

submission = sqoopClient.startSubmission(newjob.getPersistenceId(), callback, 100);

} catch (InterruptedException e1) {

// 发生异常停止掉

if (thread.isAlive()) {

thread.interrupt();

}

log.error("sqoop提交全量任务出错!:",e1);

}

//返回结果

return submission;

}

/**

* <p>

* Description:[sqoop任务失败时,添加日志,删除hdfs文件等]

* </p>

* @param MSubmission Sqoop提交结果

* @param MJob sqoopJob实体

* @param String hdfs文件地址

* @param Thread 监控任务执行时长的线程

* @return void

*/

public void addLogCaseSqoopFail(MSubmission submission,MJob sJob,String hdfsUrl,Thread thread){

//后台打印出错误信息

System.out.println(submission.getExceptionInfo());

// 删除hdfs文件

deleteHdfsFiles(hdfsUrl);

//如果监控线程还在继续,则停止线程

if (thread.isAlive()) {

thread.interrupt();// 发生异常停止掉

}

}

/**

* <p>

* Description:[根据传入的表名和列信息,组装成创建表的sql]

* </p>

* @param tableName 表名称

* @param columnVOs 表字段

* @return String 生成的sql

*/

public String getCreateTableSQL(String tableName,List<ColumnVO> columnVOs,boolean isText){

//组装sql

StringBuffer createSql = new StringBuffer("create table " + tableName + "(");

for (int i = 0; i < columnVOs.size(); i++) {

if (i == 0) {

createSql.append("`" + columnVOs.get(i).getColumnName()+ "` string");

} else {

createSql.append(",`"+ columnVOs.get(i).getColumnName()+ "` string");

}

}

createSql.append(")");

if (isText) {

createSql.append(" ROW FORMAT DELIMITED FIELDS TERMINATED BY '�01' ");

}

log.info("createSql:" + createSql);

String sql = createSql.toString().trim();

//返回结果

return sql;

}

/**

* <p>

* Description:[根据传入列对象,组装列信息]

* </p>

*

* @param columnVOs 表字段

* @return String 生成的sql

*/

public String getColumns(List<ColumnVO> columnVOs){

//组装sql

StringBuffer columns = new StringBuffer("");

for (int i = 0; i < columnVOs.size(); i++) {

if (i == 0) {

columns.append("`" + columnVOs.get(i).getColumnName()+ "` string");

} else {

columns.append(",`"+ columnVOs.get(i).getColumnName()+ "` string");

}

}

log.info("createSql:" + columns);

String column = columns.toString().trim();

//返回结果

return column;

}

/**

* <p>

* Description:[增量sqoop导入完成之后,创建hiveorc表,插入orc数据,实现增量,保存源数据信息]

* </p>

*

* @param tableVOs 源表信息

* @param columnVOs 源表字段信息

* @param hdfsFilePath sqoop导入成功后hdfs文件地址

* @param jobId sqoopJobid 用于保存任务日志信息

* @param startTime 任务开始时间用于保存该任务总共花费时间

* @return boolean 整个过程是否发生错误,true 存在错误, false 正常执行,不存在错误

*/

public boolean createOrcHiveAfterSqoop(String table, List<ColumnVO> columnVOs,String hdfsFilePath,long jobId,long startTime,boolean isFirst){

boolean hasError = false;

// 定义表名

String orcTableName = table;

String sourceTableName= table;

String primaryKey = jdbcService.getPrimaryKey(exportDatabase,exportUsername,exportPassword,null,null,sourceTableName);

try {

if(primaryKey == null || primaryKey.trim().equals("")) {

primaryKey = columnVOs.get(0).getColumnName();

}

//textfileTable在这里表示 增量数据临时表的表名,先将增量数据放在临时表,再将临时表的数据导入目标表

String textfileTable = orcTableName+"_temp";

//获取sql

String sql = getCreateTableSQL(textfileTable,columnVOs,true);

// 创建hive表,并把增量的数据导入到hive表中

hfs.createHiveTempTable(textfileTable, sql,hdfsFilePath);

// 非第一次导入,先将hive中相关的数据删除,再插入相关数据

long incrementInsertTime = System.currentTimeMillis();

hfs.deleteIncrementDataExistInOrcTable(textfileTable, orcTableName, primaryKey,

jdbcHiveUrl);

hfs.insertIntoHiveOrcTable(textfileTable, orcTableName, jdbcHiveUrl);

long incrementInsertTimeEnd = System.currentTimeMillis();

System.out.println("orc增量新增和更新数据到orc表所用时间:" + (incrementInsertTimeEnd - incrementInsertTime));

log.info("orc增量新增和更新数据到orc表所用时间:" + (incrementInsertTimeEnd - incrementInsertTime));

} catch (Exception e) {

hasError = true;

log.error("全量任务创建hive表出错!",e);

}

return hasError;

}

/**

* <p>

* Description:[在获取的源表的字段列表中加入sqoop的loadtime字段,字段名称为“load_bigdata_time”]

* </p>

* @param List<ColumnVO> 源表字段信息

* @return List<ColumnVO>

*/

public List<ColumnVO> addSqoopTimeColumn(List<ColumnVO> cVos){

ColumnVO cVo= new ColumnVO();

cVo.setColumnName("load_bigdata_time");

cVo.setComment("Sqoop导入时间");

cVo.setType("datetime");

cVos.add(cVo);

return cVos;

}

/**

* 在sqoop导入时出现问题,删除已经生成的hdfs文件,hive在创建表时出现问题,删除已经创建的表和hdfs文件

*

* @param HDFSPath

* @param HiveTableName

*/

private void deleteHdfsHiveTable(String HDFSPath, String HiveTableName) {

String HDFSUrl = Messages.getString("HDFSUrl");

String HDFSFilePath = HDFSUrl + HDFSPath;

System.setProperty("HADOOP_USER_NAME", Messages.getString("hiveUser"));

try {

try {

hfs.deleteFdfsByHiveTable(HiveTableName);

hfs.deleteHiveTrueTable(HiveTableName);

} catch (ClassNotFoundException e1) {

e1.printStackTrace();

} // 如果表存在,删除表

// 删除hdfs文件

Path p = new Path(HDFSFilePath);

Configuration conf = new Configuration();

try {

FileSystem fs = p.getFileSystem(conf);

boolean isHad = fs.exists(p);

if (isHad) {

fs.delete(p, true);

}

// boolean b = fs.createNewFile(p);

fs.close();

} catch (IOException e) {

e.printStackTrace();

}

} catch (SQLException e) {

e.printStackTrace();

}

}

public void deleteHdfsFiles(String hdfsPath) {

String HDFSFilePath = jdbcHiveUrl + hdfsPath;

System.setProperty("HADOOP_USER_NAME", hiveUser);

try {

// 删除hdfs文件

Path p = new Path(HDFSFilePath);

Configuration conf = new Configuration();

FileSystem fs = p.getFileSystem(conf);

boolean isHad = fs.exists(p);

if (isHad) {

fs.delete(p, true);

}

fs.close();

} catch (Exception e) {

e.printStackTrace();

}

}

//判断从上一次更新之后数据的最大时间

public Timestamp getAfterMaxTimestamp( String tableName, String preTimestamp, String columnName) {

Timestamp timestamp = null;

Connection connection = JdbcConnection.getConnection(jdbcMysqlDriver, exportDatabase,

exportUsername, exportPassword);

PreparedStatement pStatement = null;

ResultSet resultSet = null;

String sql = "select max(date_format(" + columnName + ",'%Y-%m-%d %H:%i:%S')) from "

+ "(select * from " + tableName + " where date_format(" + columnName + ",'%Y-%m-%d %H:%i:%S') > '" + preTimestamp + "') as increment";

/*如果是Oracle {

sql = "select max(to_char(" + columnName + ",'yyyy-MM-dd hh24:mi:ss')) from ("

+ "select * from " + tableName + " where to_char(" + columnName + ",'yyyy-MM-dd hh24:mi:ss') > '" + preTimestamp + "')";

} 如果是Sybase {

sql = "select * from " + tableName;

} 如果是sql server {

sql = "select max(Convert(varchar," + columnName + ",120)) from ("

+ "select * from " + tableName + " where Convert(varchar," + columnName + ",120) > '" + preTimestamp + "') as increment";

}*/

try {

pStatement = connection.prepareStatement(sql);

resultSet = pStatement.executeQuery();

if (resultSet.next()) {

//timestamp = changeToTimestamp(resultSet.getString(1));

if(resultSet.getString(1) == null) {

return timestamp;

}

timestamp =Timestamp.valueOf(resultSet.getString(1));

}

} catch (SQLException e) {

e.printStackTrace();

} finally {

JdbcConnection.closeConnection(connection, pStatement, resultSet);

}

return timestamp;

}

/**

* 1111更新increment sqoop Job配置

*/

private String updateIncrementSqoopJob(MJob newjob, String tableName, List<ColumnVO> columns) {

MJobForms connectorForm = newjob.getConnectorPart();

MJobForms frameworkForm = newjob.getFrameworkPart();

newjob.setName("ImportJob_zheda");

//获取源表的主键

String primaryKey = jdbcService.getPrimaryKey(exportDatabase,exportUsername,exportPassword,null,null,tableName);

//如果主键不为空,设定“partitionColumn”参数为主键,并且设置任务执行的map数为10

if(primaryKey != null && !primaryKey.trim().equals("")) {

frameworkForm.getIntegerInput("throttling.extractors").setValue(10);// 指定map的个数

connectorForm.getStringInput("table.partitionColumn").setValue(primaryKey);

//如果主键为空,选取不为时间类型的字段为“partitionColumn”参数,并指定map数为1

}else {

//选取不为时间类型的字段

for(int i=0;i<columns.size();i++){

if (!columns.get(i).getType().toUpperCase().contains("TIME")&&!columns.get(i).getType().toUpperCase().contains("DATE")) {

primaryKey = columns.get(i).getColumnName();

break;

}

}

//设定“partitionColumn”参数

connectorForm.getStringInput("table.partitionColumn").setValue(primaryKey);

// 指定map的个数

frameworkForm.getIntegerInput("throttling.extractors").setValue(1);

}

// 控制增量导入

//获取当天时间以及前一天的时间

Date nowDate = new Date();

Calendar calendar = Calendar.getInstance();

calendar.setTime(nowDate);

calendar.add(Calendar.DAY_OF_MONTH, -1);

Date predate = calendar.getTime();

SimpleDateFormat dateFormat = new SimpleDateFormat("yyyy-MM-dd");

String predateString = dateFormat.format(predate) + " " + pollingStartTime;

String nowdateString = dateFormat.format(nowDate) + " " + pollingStartTime;

String sql = "select * FROM " + tableName + " where ";

String charStr = " Convert(varchar,"+"create_time"+",120) ";

charStr = "date_format(" +"create_time" + ",'%Y-%m-%d %H:%i:%S') ";

sql += charStr + " > '" + predateString + "' and " + charStr + " <= '" + nowdateString

+ "' and ${CONDITIONS}";

System.out.println("SQL ::"+sql);

connectorForm.getStringInput("table.sql").setValue(sql);

String hdfdFilePath = Messages.getString("sqoopOutput") + new Date().getTime() + tableName;

frameworkForm.getEnumInput("output.storageType").setValue(Messages.getString("storageType"));

frameworkForm.getEnumInput("output.outputFormat").setValue(Messages.getString("outputFormat"));

frameworkForm.getStringInput("output.outputDirectory").setValue(hdfdFilePath);

frameworkForm.getIntegerInput("throttling.extractors").setValue(1);// 指定map的个数

return hdfdFilePath;

}

}

package com.scheduler.service.impl;

import java.io.IOException;

import org.apache.hadoop.fs.FileSystem;

import java.sql.Connection;

import java.sql.DriverManager;

import java.sql.PreparedStatement;

import java.sql.ResultSet;

import java.sql.ResultSetMetaData;

import java.sql.SQLException;

import java.sql.Statement;

import java.sql.Timestamp;

import java.text.SimpleDateFormat;

import java.util.ArrayList;

import java.util.Date;

import java.util.HashMap;

import java.util.LinkedList;

import java.util.List;

import java.util.Map;

import com.scheduler.util.Constant;

import com.scheduler.util.Messages;

import com.scheduler.util.ColumnVO;

import org.json.JSONArray;

import org.json.JSONObject;

import org.springframework.stereotype.Service;

import com.scheduler.service.HiveService;

import org.apache.sqoop.client.SubmissionCallback;

import org.apache.commons.lang.StringUtils;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.LocatedFileStatus;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.fs.RemoteIterator;

import org.apache.log4j.Logger;

import javax.annotation.Resource;

/**

* <p>

* Title: manageplatform_[Hive]

* </p>

* <p>

* Description: [HiveService实现层]

* </p>

*

* @author GLJ

* @author (latest modification by $Author$)

* @version $Revision$ 2015-03-18

* @since 20130601

*/

@Service("hiveServiceImpl")

public class HiveServiceImpl implements HiveService {

protected Logger log = Logger.getLogger(DataSynMysqlAndHiveImpl.class);

private static String jdbcHiveDriver = Messages.getString("jdbcHiveDriver");

private static String jdbcHiveUrl = Messages.getString("jdbcHiveUrl");

private static String hiveUser = Messages.getString("hiveUser");

private static String hivePwd = Messages.getString("hivePwd");

private static String exportDatabase = Messages.getString("export_target_database_url");

private static String exportUsername = Messages.getString("export_target_database_username");

private static String exportPassword = Messages.getString("export_target_database_password");

private static String jdbcMysqlDriver = Messages.getString("jdbc_mysql_driver");

public HiveServiceImpl() {

}

@Override

public boolean existTable(String table) throws SQLException {

boolean flag = false;

try {

Class.forName(jdbcHiveDriver);

} catch (ClassNotFoundException e) {

e.printStackTrace();

log.error("hive链接出错", e);

}

Connection con = DriverManager.getConnection(jdbcHiveUrl, hiveUser,

hivePwd);

java.sql.Statement stmt = con.createStatement();

String sql = "show tables '" + table + "'";

log.info("sql:" + sql);

ResultSet set = stmt.executeQuery(sql);

while (set.next()) {

String reTableName = set.getString(1);

if ((table.toLowerCase()).equals(reTableName.toLowerCase())) {

flag = true;

break;

}

}

return flag;

}

@Override

public boolean createTableAsSelect(String targetTableName, String select)

throws SQLException {

String create = "CREATE TABLE " + targetTableName;

String option = " row format delimited fields terminated by '�01' "; // you

// can

// change

// it

String as = " AS " + select; // here you can decide which column, table

// to select, join table or more

// comprehension clause

String sql = create + option + as;

log.info("创建数据表sql:" + sql);

System.out.println("Running: " + sql);

try {

Class.forName(jdbcHiveDriver);

} catch (ClassNotFoundException e) {

log.error("hive链接出错", e);

e.printStackTrace();

}

Connection con = DriverManager.getConnection(jdbcHiveUrl, hiveUser,

hivePwd);

java.sql.Statement stmt = con.createStatement();

stmt.execute(sql);

stmt.close();

con.close();

return true;

}

//11111111111111

@Override

public void deleteHiveTrueTable(String tableName) throws SQLException {

String deleteSql = "drop table if exists " + tableName;

System.out.println("Running: " + deleteSql);

log.info("删除数据表sql:" + deleteSql);

try {

Class.forName(jdbcHiveDriver);

} catch (ClassNotFoundException e) {

log.error("hive链接出错", e);

e.printStackTrace();

}

Connection con = DriverManager.getConnection(jdbcHiveUrl, hiveUser,

hivePwd);

java.sql.Statement stmt = con.createStatement();

stmt.execute(deleteSql);

stmt.close();

con.close();

}

@Override

public List<Map<String, String>> getHiveColunmsByTableName(String hiveurl,

String userName, String password, String tableName) {

List<Map<String, String>> colsAndType = new ArrayList<Map<String, String>>();

try {

String jdbcHiveDriver = Messages.getString("jdbcHiveDriver");

Class.forName(jdbcHiveDriver);

} catch (ClassNotFoundException e) {

log.error("hive链接出错", e);

e.printStackTrace();

}

Connection con;

try {

con = DriverManager.getConnection(hiveurl, userName, password);

Statement stmt = con.createStatement();

String sql = "desc " + tableName;

log.info("获取表字段sql" + sql);

ResultSet resultSet = stmt.executeQuery(sql);

while (resultSet.next()) {

Map<String, String> map = new HashMap<String, String>();

String colunm = resultSet.getString(1);

String type = resultSet.getString(2);

map.put("column", colunm);

map.put("type", type);

colsAndType.add(map);

}

stmt.close();

con.close();

} catch (SQLException e) {

e.printStackTrace();

log.error("sql执行出错", e);

}

return colsAndType;

}

@Override

public List<String> getColumnValues(String tableName, String colName) {

String jdbcHiveUrl = Messages.getString("jdbcHiveUrl");

String hiveUser = Messages.getString("hiveUser");

String hivePwd = Messages.getString("hivePwd");

String sql = "select distinct " + colName + " from " + tableName;

try {

final String jdbcHiveDriver = Messages.getString("jdbcHiveDriver");

Class.forName(jdbcHiveDriver);

Connection con;

con = DriverManager.getConnection(jdbcHiveUrl, hiveUser, hivePwd);

final Statement stmt = con.createStatement();

log.info("sql:" + sql);

final ResultSet datSet = stmt.executeQuery(sql);

List<String> values = new ArrayList<String>();

while (datSet.next()) {

values.add(datSet.getString(1));

}

return values;

} catch (final ClassNotFoundException e) {

log.error("hive链接出错", e);

e.printStackTrace();

return null;

} catch (SQLException e) {

log.error("sql执行出错", e);

e.printStackTrace();

return null;

}

}

/*

* 得到所有表

*/

/*private ArrayList<String> getTables() throws SQLException {

try {

Class.forName(jdbcHiveDriver);

} catch (ClassNotFoundException e) {

e.printStackTrace();

log.error("hive链接出错",e);

}

Connection con = DriverManager.getConnection(jdbcHiveUrl, hiveUser,

hivePwd);

java.sql.Statement stmt = con.createStatement();

if (stmt == null)

return null;

String sql = "show tables";

ArrayList<String> result = new ArrayList<String>();

log.info("sql:"+sql);

ResultSet res = stmt.executeQuery(sql);

while (res.next()) {

result.add(res.getString(1));

}

stmt.close();

con.close();

return result;

}*/

@Override

public List<String> getTablesColName(String url, long resourceId,

String userName, String password, String goOnTableName) {

List<String> tableList = new LinkedList<String>();

if (url.contains("jdbc:sybase:Tds")) {

tableList = this.getColNameOfSybase(url, resourceId, userName,

password, goOnTableName);

return tableList;

}

try {

String jdbcMysqlDriver = Messages.getString("jdbc_mysql_driver");

if (url.contains("jdbc:oracle")) {

jdbcMysqlDriver = Messages.getString("jdbc_oracle_driver");

} else if (url.contains("jdbc:sqlserver")) {

jdbcMysqlDriver = Messages.getString("jdbc_sqlserver_driver");

}

Class.forName(jdbcMysqlDriver);

} catch (ClassNotFoundException e) {

log.error("hive链接异常", e);

// TODO Auto-generated catch block

e.printStackTrace();

}

Connection con;

try {

con = DriverManager.getConnection(url, userName, password);

Statement stmt = con.createStatement();

ResultSet tableSet = null;

PreparedStatement pStatement = null;

if (url.contains("jdbc:oracle")) {

String sql1 = Messages.getString("oracle_show_tables");

log.info("sql:" + sql1);

pStatement = con.prepareStatement(sql1);

tableSet = pStatement.executeQuery();

} else if (url.contains("jdbc:sqlserver")) {

String sql2 = Messages.getString("sqlserver_show_tables");

log.info("sql:" + sql2);

pStatement = con.prepareStatement(sql2);

tableSet = pStatement.executeQuery();

} else {

String[] type = {"TABLE"};

tableSet = con.getMetaData().getTables("", "", "", type);

}

Boolean id = false;

while (tableSet.next()) {

String tableName = null;

if (url.contains("jdbc:oracle")) {

tableName = tableSet.getString(1);

} else if (url.contains("jdbc:sqlserver")) {

tableName = tableSet.getString(1);

} else {

tableName = tableSet.getString("TABLE_NAME");

}

if (goOnTableName == null || goOnTableName.equals("")

|| goOnTableName.equals(" ")) {

id = true;

} else {

if (tableName.equals(goOnTableName))

id = true;

}

if (id) {

tableList.add(tableName);

}

}

stmt.close();

con.close();

} catch (SQLException e) {

log.error("SQL执行异常", e);

e.printStackTrace();

}

return tableList;

}

private List<String> getColNameOfSybase(String url, long resourceId,

String userName, String password, String goOnTableName) {

List<String> tableList = new LinkedList<String>();

String jdbcMysqlDriver = Messages.getString("jdbc_sybase_driver");

try {

Class.forName(jdbcMysqlDriver);

String sql = Messages.getString("sybase_show_tables");

Connection con = DriverManager.getConnection(url, userName,

password);

Statement stmt = con.createStatement(

ResultSet.TYPE_SCROLL_INSENSITIVE,

ResultSet.CONCUR_READ_ONLY);

log.info("sql:" + sql);

PreparedStatement pStatement = con.prepareStatement(sql);

ResultSet tableSet = pStatement.executeQuery();

Boolean id = false;

while (tableSet.next()) {

String tableName = tableSet.getString("TABLE_NAME");

if (goOnTableName == null || goOnTableName.equals("")

|| goOnTableName.equals(" ")) {

id = true;

} else {

if (tableName.equals(goOnTableName))

id = true;

}

if (id) {

tableList.add(tableName);

}

}

stmt.close();

con.close();

} catch (ClassNotFoundException e) {

e.printStackTrace();

log.error("hive链接出错", e);

} catch (SQLException e) {

e.printStackTrace();

log.error("SQL执行异常", e);

}

return tableList;

}

@Override

public List<String> getViewsColName(String url, long resourceId,

String userName, String password, String schemaName,

String goOnViewName) {

List<String> viewList = new LinkedList<String>();

if (url.contains("jdbc:sybase:Tds")) {

viewList = getSybaseView(url, resourceId, userName, password,

goOnViewName);

return viewList;

}

try {

String jdbcMysqlDriver = Messages.getString("jdbc_mysql_driver");

if (url.contains("jdbc:oracle")) {

jdbcMysqlDriver = Messages.getString("jdbc_oracle_driver");

} else if (url.contains("jdbc:sqlserver")) {

jdbcMysqlDriver = Messages.getString("jdbc_sqlserver_driver");

}

Class.forName(jdbcMysqlDriver);

} catch (ClassNotFoundException e) {

log.error("jdbc链接异常", e);

e.printStackTrace();

}

Connection con;

try {

con = DriverManager.getConnection(url, userName, password);

Statement stmt = con.createStatement();

ResultSet viewSet = null;

PreparedStatement pStatement = null;

String vn = "name";

if (url.contains("jdbc:oracle")) {

String sql1 = Messages.getString("oracle_show_views");

log.info("sql:" + sql1);

pStatement = con.prepareStatement(sql1);

viewSet = pStatement.executeQuery();

vn = "VIEW_NAME";

} else if (url.contains("jdbc:sqlserver")) {

String sql2 = Messages.getString("sqlserver_show_views");

log.info("sql:" + sql2);

pStatement = con.prepareStatement(sql2);

viewSet = pStatement.executeQuery();

} else {

String sql3 = Messages.getString("mysql_show_views") + "'"

+ schemaName + "'";

log.info("sql:" + sql3);

pStatement = con.prepareStatement(sql3);

viewSet = pStatement.executeQuery();

vn = "table_name";

}

Boolean id = false;

while (viewSet.next()) {

String tableName = viewSet.getString(vn);

if (goOnViewName == null || goOnViewName.equals("")

|| goOnViewName.equals(" ")) {

id = true;

} else {

if (tableName.equals(goOnViewName))

id = true;

}

if (id) {

viewList.add(tableName);

}

}

stmt.close();

con.close();

} catch (SQLException e) {

log.error("SQL执行异常", e);

e.printStackTrace();

}

return viewList;

}

private List<String> getSybaseView(String url, long resourceId,

String userName, String password, String goOnTableName) {

List<String> viewList = new LinkedList<String>();

String jdbcMysqlDriver = Messages.getString("jdbc_sybase_driver");

try {

Class.forName(jdbcMysqlDriver);

String sql = Messages.getString("sybase_show_views")

+ "'sysquerymetrics'";

Connection con = DriverManager.getConnection(url, userName,

password);

Statement stmt = con.createStatement(

ResultSet.TYPE_SCROLL_INSENSITIVE,

ResultSet.CONCUR_READ_ONLY);

log.info("sql:" + sql);

PreparedStatement pStatement = con.prepareStatement(sql);

ResultSet tableSet = pStatement.executeQuery();

Boolean id = false;

while (tableSet.next()) {

String tableName = tableSet.getString("name");

if (goOnTableName == null || goOnTableName.equals("")

|| goOnTableName.equals(" ")) {

id = true;

} else {

if (tableName.equals(goOnTableName))

id = true;

}

if (id) {

viewList.add(tableName);

}

}

stmt.close();

con.close();

} catch (ClassNotFoundException e) {

log.error("hive连接异常", e);

e.printStackTrace();

} catch (SQLException e) {

log.error("SQL执行异常", e);

e.printStackTrace();

}

return viewList;

}

//111111111111111111111

@Override

public boolean createHiveTable(String tableName,String sql) throws SQLException {

boolean success= true;

String hiveUser = Messages.getString("hiveUser");

String hivePwd = Messages.getString("hivePwd");

String hiveUrl=Messages.getString("jdbcHiveUrl");

System.setProperty("HADOOP_USER_NAME", hiveUser);

try {

String jdbcHiveDriver = Messages.getString("jdbcHiveDriver");

Class.forName(jdbcHiveDriver);

} catch (ClassNotFoundException e) {

log.error("hive连接异常", e);

e.printStackTrace();

success = false;

}

Connection con;

con = DriverManager.getConnection(hiveUrl, hiveUser, hivePwd);

Statement stmt = con.createStatement();

try {

deleteFdfsByHiveTable(tableName);

} catch (ClassNotFoundException e) {

e.printStackTrace();

log.error("hive连接异常", e);

} // 同时删除对应的hdfs文件,因为是建外表

long startTime = System.currentTimeMillis();

String dropIfExistsTable = "drop table if exists " + tableName;

long endTime = System.currentTimeMillis();

System.out.println("删除已存在的表所花时间(针对全量导入):" + (endTime - startTime));

stmt.execute(dropIfExistsTable);

log.info("createSql:" + sql);

stmt.execute(sql);

stmt.close();

con.close();

return success;

}

/**

* 根据表名删除该hive表对应的hdfs文件,主要针对hive中的外表

*11111111111111

* @param tableName

* @return

* @throws ClassNotFoundException

* @throws SQLException

*/

public boolean deleteFdfsByHiveTable(String tableName)

throws ClassNotFoundException, SQLException {

boolean b = false;

String jdbcHiveDriver = Messages.getString("jdbcHiveDriver");

String jdbcHiveUrl = Messages.getString("jdbcHiveUrl");

String hiveUser = Messages.getString("hiveUser");

String hivePwd = Messages.getString("hivePwd");

String sqoopOutput = Messages.getString("sqoopOutput");

String HDFSpath = Messages.getString("HDFSpath");

System.setProperty("HADOOP_USER_NAME", Messages.getString("hiveUser"));

String rootPath = Messages.getString("HDFSUrl");

Class.forName(jdbcHiveDriver);

String path = null;

Connection con = DriverManager.getConnection(jdbcHiveUrl, hiveUser,

hivePwd);

java.sql.Statement stmt = con.createStatement();

// 判断该表是否存在

String sqlHad = "show tables '" + tableName + "'";

ResultSet had = stmt.executeQuery(sqlHad);

if (!had.next()) {

return true;

}

String sql = "describe formatted " + tableName;

log.info("sql:" + sql);

ResultSet set = stmt.executeQuery(sql);

while (set.next()) {

String location = set.getString(1);

if (location != null

&& "Location:".equals(location.replace(" ", "")))

path = set.getString(2);

}

set.close();

stmt.close();

con.close();

if (path != null) {

String[] paths = null;

if (path.contains(sqoopOutput)) {

paths = path.split(sqoopOutput);

} else if (path.contains(HDFSpath)) {

paths = path.split(HDFSpath);

}

if (paths != null && paths.length > 0) {

String dfs = paths[0];

path = path.replace(dfs, rootPath);

Path p = new Path(path);

Configuration conf = new Configuration();

try {

FileSystem fs = p.getFileSystem(conf);

boolean isHad = fs.exists(p);

if (isHad) {

b = fs.delete(p, true);

} else {

b = true;

}

// boolean b = fs.createNewFile(p);

fs.close();

} catch (IOException e) {

log.error("HDFS文件读取异常", e);

e.printStackTrace();

}

}

}

return b;

}

@Override

public boolean isExistHiveTable(String tableName) throws SQLException {

String hiveUser = Messages.getString("hiveUser");

String hivePwd = Messages.getString("hivePwd");

String hiveUrl = Messages.getString("jdbcHiveUrl");

System.setProperty("HADOOP_USER_NAME", hiveUser);

boolean exist = false;

if (tableName == null || tableName.trim().equals(""))

return false;

try {

String jdbcHiveDriver = Messages.getString("jdbcHiveDriver");

Class.forName(jdbcHiveDriver);

} catch (ClassNotFoundException e) {

log.error("hive链接异常", e);

e.printStackTrace();

}

Connection con;

con = DriverManager.getConnection(hiveUrl, hiveUser, hivePwd);

Statement stmt = con.createStatement();

String showTablesql = "show tables '" + tableName + "'";

log.info("showTablesql:" + showTablesql);

ResultSet tableSet = stmt.executeQuery(showTablesql);

if (tableSet.next()) {

exist = true;

}

return exist;

}

/**

* 创建Hive textfiled表

*/

public String createHiveTempTable(String tableName,String sql, String HDFSPAth) throws SQLException {

String hiveUser = Messages.getString("hiveUser");

String hivePwd = Messages.getString("hivePwd");

String hiveUrl = Messages.getString("jdbcHiveUrl");

System.setProperty("HADOOP_USER_NAME", hiveUser);

try {

String jdbcHiveDriver = Messages.getString("jdbcHiveDriver");

Class.forName(jdbcHiveDriver);

} catch (ClassNotFoundException e) {

log.error("hive链接异常", e);

e.printStackTrace();

}

Connection con;

con = DriverManager.getConnection(hiveUrl, hiveUser, hivePwd);

Statement stmt = con.createStatement();

String dropIfExistsTable = "drop table if exists " + tableName;

log.info("dropIfExistsTable:" + dropIfExistsTable);

stmt.execute(dropIfExistsTable);

log.info("createSql:" + sql);

stmt.execute(sql);

String loadData = "LOAD DATA INPATH '" + HDFSPAth + "' INTO TABLE " + tableName;

log.info("loadData:" + loadData);

stmt.execute(loadData);

stmt.close();

con.close();

return tableName;

}

/**

* 创建hive表 add by yangqi 2015/10/10

*/

@Override

public String createHiveORCTable(String tableName,String primaryKey, String sql) throws SQLException {

String hiveUser = Messages.getString("hiveUser");

String hivePwd = Messages.getString("hivePwd");

String hiveUrl = Messages.getString("jdbcHiveUrl");

System.setProperty("HADOOP_USER_NAME", hiveUser);

try {

String jdbcHiveDriver = Messages.getString("jdbcHiveDriver");

Class.forName(jdbcHiveDriver);

} catch (ClassNotFoundException e) {

log.error("hive连接异常", e);

e.printStackTrace();

}

Connection con;

con = DriverManager.getConnection(hiveUrl, hiveUser, hivePwd);

Statement stmt = con.createStatement();

try {

deleteFdfsByHiveTable(tableName);

} catch (ClassNotFoundException e) {

log.error("hive连接异常", e);

e.printStackTrace();

} // 同时删除对应的hdfs文件,因为是建外表

String dropIfExistsTable = "drop table if exists " + tableName;

log.info("dropIfExistsTable:" + dropIfExistsTable);

stmt.execute(dropIfExistsTable);

stmt.execute("set ngmr.partition.automerge = true");

String createSql= sql+" CLUSTERED BY ("

+ primaryKey

+ ") INTO "

+ "100"

+ " BUCKETS ROW FORMAT DELIMITED FIELDS TERMINATED BY '�01' STORED AS ORC TBLPROPERTIES "

+ "('transactional'='true')";

System.out.println(createSql);

log.info("createSql:" + createSql);

stmt.execute(createSql.toString().trim());

stmt.close();

con.close();

//

return tableName;

}

/**

* 创建hiveorc表 add by yangqi 2015/10/10

*///1111111111111111

// 将数据从hive的textFile表导入到orc表中

@Override

public void insertIntoHiveOrcTable(String textfileTableName,

String orcTableName, String hiveUrl) throws SQLException {

String hiveUser = Messages.getString("hiveUser");

String hivePwd = Messages.getString("hivePwd");

System.setProperty("HADOOP_USER_NAME", hiveUser);

try {

String jdbcHiveDriver = Messages.getString("jdbcHiveDriver");

Class.forName(jdbcHiveDriver);

} catch (ClassNotFoundException e) {

log.error("hive连接异常", e);

e.printStackTrace();

}

Connection con;

con = DriverManager.getConnection(hiveUrl, hiveUser, hivePwd);

Statement stmt = con.createStatement();

//获取text表的大小,根据这个大小来判断task的量

Map<String,String> map = getCountAndSize(textfileTableName, Messages.getString("jdbcHiveUrl"));

stmt.execute("set ngmr.partition.automerge = true");

long count = Long.parseLong(map.get("count"));

if(count>=50000000){

stmt.execute("set mapred.reduce.tasks=100");

}else if(10000000<=count&&count<=50000000){

stmt.execute("set mapred.reduce.tasks=20");

}else{

stmt.execute("set mapred.reduce.tasks=10");

}

String insertSql = "insert into table " + orcTableName

+ " select * from " + textfileTableName + " where resource_flag = 0 distribute by rand()";

log.info("insertSql:" + insertSql);

stmt.execute(insertSql);

stmt.close();

con.close();

}

/**

* 根据表名统计数据表的记录数和文件大小

*

* @author ZYY

* @since 2015/1/14

*/

@Override

public Map<String, String> getCountAndSize(String tableName, String hiveUrl)

throws SQLException {

Map<String, String> map = new HashMap<String, String>(); //返回结果map

String[] pathAndSize = new String[2]; //存储数据大小,地址数组变量

String count = ""; //数据表记录量变量

/*

* 获取用户名,密码,得到jdbchive链接

* */

String hiveUser = Messages.getString("hiveUser");

String hivePwd = Messages.getString("hivePwd");

System.setProperty("HADOOP_USER_NAME", Messages.getString("hiveUser"));

String rootPath = Messages.getString("HDFSUrl");

try {

String jdbcHiveDriver = Messages.getString("jdbcHiveDriver");

Class.forName(jdbcHiveDriver);

} catch (ClassNotFoundException e) {

log.error("hive链接异常", e);

e.printStackTrace();

}

Connection con = DriverManager

.getConnection(hiveUrl, hiveUser, hivePwd);

Statement stmt = con.createStatement();

//定义获取数据表记录总量的sql

String countSql = "select count(*) from " + tableName;

log.info("获取数据表记录总量的sql" + countSql);

try {

ResultSet rs = stmt.executeQuery(countSql);

if (rs.next()) {

count = rs.getString(1);

}

} catch (Exception e) {

log.error("SQL执行异常", e);

e.printStackTrace();

}

//定义获取hive中数据大小和地址sql

String sizesql = "describe formatted " + tableName;

ResultSet set = stmt.executeQuery(sizesql);

while (set.next()) {

String location = set.getString(1);

if (location != null

&& "Location:".equals(location.replace(" ", "")))

pathAndSize[0] = set.getString(2);

String totalSize = set.getString(2);

if (totalSize != null

&& "totalSize".equals(totalSize.replace(" ", "")))

pathAndSize[1] = set.getString(3);

}

// 由于hive创建的是外表,对path和siz进行处理

// 将path中的节点信息改为port

if (pathAndSize[0] != null && !pathAndSize[0].contains(rootPath)) {

String path = pathAndSize[0];

String[] paths = path.split("://");

if (paths.length > 1) {

String dfs = paths[1];

String[] filPaths = dfs.split("/");

if (filPaths.length > 0) {

String f = filPaths[0];

path = dfs.replace(f, rootPath);

pathAndSize[0] = path;

}

}

}

// hive外表不能获取size的处理

if (pathAndSize[1] == null || pathAndSize[1].equals("") || "0".equals(pathAndSize[1].trim())) {

if (pathAndSize[0] != null) {

String path = pathAndSize[0];

Path p = new Path(path);

long total = 0;

Configuration conf = new Configuration();

try {

FileSystem fs = p.getFileSystem(conf);

boolean isHad = fs.exists(p);

if (isHad) {

RemoteIterator<LocatedFileStatus> fd = fs.listFiles(p,

true);// 获取文件夹下所有文件

while (fd.hasNext()) {

LocatedFileStatus lf = fd.next();// 获取文件

System.out.println(lf.getLen());

total = total + lf.getLen();// 文件大小

}

}

// 将单位由b转换为kb

total =total/1024;

pathAndSize[1] = total + "";

fs.close();

} catch (IOException e) {

log.error("Hive文件读取出错", e);

e.printStackTrace();

}

}

}

//关闭结果集,事务和数据库链接

set.close();

stmt.close();

con.close();

//将结果存入到结果map

map.put("count", count);

map.put("size", pathAndSize[1]);

return map;

}

/**

* 增11111111量导入的数据,在hive中全部删除

*/

public void deleteIncrementDataExistInOrcTable(String textfileTable,

String orcTableName, String primaryKey, String hiveUrl)

throws SQLException {

String hiveUser = Messages.getString("hiveUser");

String hivePwd = Messages.getString("hivePwd");

System.setProperty("HADOOP_USER_NAME", hiveUser);

try {

String jdbcHiveDriver = Messages.getString("jdbcHiveDriver");

Class.forName(jdbcHiveDriver);

} catch (ClassNotFoundException e) {

log.error("hive连接异常", e);

e.printStackTrace();

}

Connection con;

con = DriverManager.getConnection(hiveUrl, hiveUser, hivePwd);

Statement stmt = con.createStatement();

String deleteSql = "delete from " + orcTableName + " where "

+ primaryKey + " in (select " + primaryKey + " from "

+ textfileTable + ")";

log.info("deleteSql:" + deleteSql);

stmt.execute(deleteSql);

stmt.close();

con.close();

}

/**

* merge临时表和orc add by yangqi 2015/10/14

*/

@Override

public void mergeIntoHiveOrcTable(Map<String, String[]> map,

String hiveUrl, String primaryKey) throws SQLException {

String hiveUser = Messages.getString("hiveUser");

String hivePwd = Messages.getString("hivePwd");

System.setProperty("HADOOP_USER_NAME", hiveUser);

try {

String jdbcHiveDriver = Messages.getString("jdbcHiveDriver");

Class.forName(jdbcHiveDriver);

} catch (ClassNotFoundException e) {

log.error("hive连接异常", e);

e.printStackTrace();

}

Connection con;

con = DriverManager.getConnection(hiveUrl, hiveUser, hivePwd);

Statement stmt = con.createStatement();

String resourceId = map.get("resourceId")[0];

String tableName = map.get("tableName")[0];

String orcTableName = resourceId + "_orc_" + tableName;

String tempOrcTable = resourceId + "_" + tableName;

StringBuffer mergeSql = new StringBuffer("MERGE INTO " + orcTableName + " a USING "

+ tempOrcTable + " b ON (a." + primaryKey + " = b."

+ primaryKey + ") WHEN MATCHED THEN UPDATE SET ");

String[] cols = map.get(tableName);

if (cols != null && cols.length > 0) {

for (int i = 0; i < cols.length; i++) {

if (0 == i) {

mergeSql.append(cols[i].split(" ")[0] + " = b."

+ cols[i].split(" ")[0]);

} else {

mergeSql.append(", " + cols[i].split(" ")[0]

+ " = b." + cols[i].split(" ")[0]);

}

}

}

mergeSql.append(" WHEN NOT MATCHED THEN INSERT (");

if (cols != null && cols.length > 0) {

for (int i = 0; i < cols.length; i++) {

if (0 == i) {

mergeSql.append(cols[i].split(" ")[0]);

} else {

mergeSql.append(", " + cols[i].split(" ")[0]);

}

}

}

mergeSql.append(") VALUES(");

if (cols != null && cols.length > 0) {

for (int i = 0; i < cols.length; i++) {

if (0 == i) {

mergeSql.append("b." + cols[i].split(" ")[0]);

} else {

mergeSql.append(", " + "b." + cols[i].split(" ")[0]);

}

}

}

mergeSql.append(");");

log.info("mergeSql" + mergeSql);

stmt.execute(mergeSql.toString().trim());

stmt.close();

con.close();

}

/**

* 创建orc临时表 yangqi 2015/10/23

*/

@Override

public String createTempHiveORCTable(Map<String, String[]> map,

String primaryKey, String hiveUrl) throws SQLException {

String hiveUser = Messages.getString("hiveUser");

String hivePwd = Messages.getString("hivePwd");

System.setProperty("HADOOP_USER_NAME", hiveUser);

if (map == null || map.get("tableName") == null

|| map.get("tableName").length == 0)

return null;

try {

String jdbcHiveDriver = Messages.getString("jdbcHiveDriver");

Class.forName(jdbcHiveDriver);

} catch (ClassNotFoundException e) {

log.error("hive链接异常", e);

e.printStackTrace();

}

Connection con;

String resourceId = map.get("resourceId")[0];

String tableName = map.get("tableName")[0];

con = DriverManager.getConnection(hiveUrl, hiveUser, hivePwd);

Statement stmt = con.createStatement();

String[] cols = map.get(tableName);

String table = resourceId + "_temp_orc_" + tableName;// 标识为orc表

try {

deleteFdfsByHiveTable(table);

} catch (ClassNotFoundException e) {

log.error("hive链接异常", e);

// TODO Auto-generated catch block

e.printStackTrace();

} // 同时删除对应的hdfs文件,因为是建外表

String dropIfExistsTable = "drop table if exists " + table;

stmt.execute(dropIfExistsTable);

StringBuffer createSql = new StringBuffer("create external table " + table + "(");

if (cols != null && cols.length > 0) {

for (int i = 0; i < cols.length; i++) {

if (i == 0) {

createSql.append("`" + cols[i].replace(" ", "` "));

} else {

createSql.append("," + "`" + cols[i].replace(" ", "` "));

}

}

}

createSql.append(

") CLUSTERED BY ("

+ primaryKey

+ ") INTO "

+ "10"

+ " BUCKETS ROW FORMAT DELIMITED FIELDS TERMINATED BY '�01' STORED AS ORC TBLPROPERTIES "

+ "('transactional'='true')"

);

log.info("createSql" + createSql);

stmt.execute(createSql.toString().trim());

stmt.close();

con.close();

return table;

}

/**

* 将hive临时表数据导入到hive orc表中

*/

@Override

public void insertIntoTempOrcTable(String textfileTableName,

String tempOrcTable, String hiveUrl) throws SQLException {

String hiveUser = Messages.getString("hiveUser");

String hivePwd = Messages.getString("hivePwd");

System.setProperty("HADOOP_USER_NAME", hiveUser);

try {

String jdbcHiveDriver = Messages.getString("jdbcHiveDriver");

Class.forName(jdbcHiveDriver);

} catch (ClassNotFoundException e) {

log.error("hive链接异常", e);

e.printStackTrace();

}

Connection con;

con = DriverManager.getConnection(hiveUrl, hiveUser, hivePwd);

Statement stmt = con.createStatement();

String insertSql = "insert overwrite table " + tempOrcTable

+ " select * from " + textfileTableName;

log.info("insertSql" + insertSql);

stmt.execute(insertSql);

stmt.close();

con.close();

}

public String createOrUpdateHiveTable(Map<String, String[]> map,

String pCol, String hiveUrl, String HDFSPAth) throws SQLException {

String hiveUser = Messages.getString("hiveUser");

String hivePwd = Messages.getString("hivePwd");

System.setProperty("HADOOP_USER_NAME", hiveUser);

if (map == null || map.get("tableName") == null

|| map.get("tableName").length == 0)

return null;

try {

String jdbcHiveDriver = Messages.getString("jdbcHiveDriver");

Class.forName(jdbcHiveDriver);

} catch (ClassNotFoundException e) {

log.error("hive链接异常", e);

e.printStackTrace();

}

Connection con;

String resourceId = map.get("resourceId")[0];

String tableName = map.get("tableName")[0];

con = DriverManager.getConnection(hiveUrl, hiveUser, hivePwd);

Statement stmt = con.createStatement();

String[] cols = map.get(tableName);

String table = resourceId + "_" + tableName;

// try {

// deleteFdfsByHiveTable(table);

// } catch (ClassNotFoundException e) {

// // TODO Auto-generated catch block

// e.printStackTrace();

// } // 同时删除对应的hdfs文件,因为是建外表

// String dropIfExistsTable = "drop table if exists " + table;

// stmt.execute(dropIfExistsTable);

// 创建分区表

StringBuffer createSql = new StringBuffer("CREATE TABLE IF NOT EXISTS " + table + "(");

if (cols != null && cols.length > 0)

for (int i = 0; i < cols.length; i++)

createSql.append(cols[i] + ",");

createSql.append(") PARTITIONED BY (p_column String) ROW FORMAT DELIMITED FIELDS TERMINATED BY '�01' ");

createSql = new StringBuffer(createSql.toString().replace(",)", ")"));

log.info("hive建表语句:" + createSql);

stmt.execute(createSql.toString().trim());

// 修改表创建分区

String alterSql = "ALTER TABLE " + table

+ " ADD IF NOT EXISTS PARTITION (p_column='" + pCol + "')";

// + "LOCATION '" + HDFSPAth + "'";

log.info("hive修改分区语句:" + alterSql);

stmt.execute(alterSql);

// load数据

String loadSql = "LOAD DATA INPATH '" + HDFSPAth

+ "' OVERWRITE INTO TABLE " + table + " PARTITION(p_column='"

+ pCol + "')";

log.info("hive分区导入数据:" + loadSql);

stmt.execute(loadSql);

stmt.close();

con.close();

return table;

}

@Override

public void deleteHiveTruePartition(String tableName, String partitionStr)

throws SQLException {

// TODO Auto-generated method stub

String deleteSql = "ALTER TABLE " + tableName

+ " DROP IF EXISTS PARTITION (" + partitionStr + ")";

System.out.println("Running: " + deleteSql);

try {

Class.forName(jdbcHiveDriver);

} catch (ClassNotFoundException e) {

e.printStackTrace();

log.error("hive连接异常", e);

}

log.info("deleteSql" + deleteSql);

Connection con = DriverManager.getConnection(jdbcHiveUrl, hiveUser,

hivePwd);

java.sql.Statement stmt = con.createStatement();

stmt.execute(deleteSql);

stmt.close();

con.close();

}

@Override

public String createHivePartitionTable(String tableName,List<ColumnVO> columnVOs, String HDFSPAth) throws SQLException {

System.out.println("in to createHivePartitionTable");

String hiveUser = Messages.getString("hiveUser");

String hivePwd = Messages.getString("hivePwd");

String hiveUrl = Messages.getString("jdbcHiveUrl");

System.setProperty("HADOOP_USER_NAME", hiveUser);

try {

String jdbcHiveDriver = Messages.getString("jdbcHiveDriver");

Class.forName(jdbcHiveDriver);

} catch (ClassNotFoundException e) {

log.error("hive连接异常", e);

e.printStackTrace();

}

Connection con;

con = DriverManager.getConnection(hiveUrl, hiveUser, hivePwd);

Statement stmt = con.createStatement();

StringBuffer createSql = new StringBuffer("create table IF NOT EXISTS " + tableName + "_tmp (");

StringBuffer columnSql = new StringBuffer();

for (int i = 0; i < columnVOs.size()-1; i++) {

createSql.append(columnVOs.get(i).getColumnName() + " string,");

columnSql.append(columnVOs.get(i).getColumnName()+",");

}

createSql.append("p_column String,");

createSql.append(columnVOs.get(columnVOs.size()-1).getColumnName() + " string");

columnSql.append(columnVOs.get(columnVOs.size()-1).getColumnName()+",");

createSql.append(") ROW FORMAT DELIMITED FIELDS TERMINATED BY '�01' LOCATION ");

createSql.append(" '" + HDFSPAth + "'");

log.info("createSql:" + createSql);

System.out.println(createSql);

stmt.execute(createSql.toString().trim());

String createPartitionSql = createSql.toString().replace("_tmp", "")

.replace(",p_column String", "")

.replace(") ROW", ") partitioned by (p_hive String) ROW")

.split("LOCATION ")[0];

System.out.println(createPartitionSql);

log.info("sql:" + createPartitionSql);

stmt.execute(createPartitionSql);

stmt.execute("set hive.exec.dynamic.partition=true");

stmt.execute("set hive.exec.dynamic.partition.mode=nonstrict");

String insertPartitionSql = "insert overwrite table " + tableName

+ " partition(p_hive) select " + columnSql.toString()

+ "substr(p_column,1,length( p_column )-1) p_hive FROM "

+ tableName + "_tmp";

System.out.println(insertPartitionSql);

log.info("sql:" + insertPartitionSql);

stmt.execute(insertPartitionSql);

String dropIfExistsTable = "drop table if exists " + tableName + "_tmp";

log.info("sql:" + dropIfExistsTable);

stmt.execute(dropIfExistsTable);

stmt.close();

con.close();

//

return tableName;

}

@Override

public List<String> getTablesColName(String tableName) {

List<String> result = null;

if (!StringUtils.isBlank(tableName)) {

tableName = tableName.trim();

boolean tableExist = false;// 标示表是否存在

try {

Class.forName(jdbcHiveDriver);

} catch (ClassNotFoundException e) {

log.error("Hive链接异常", e);

e.printStackTrace();

}

try {

tableExist = existTable(tableName);

} catch (SQLException e1) {

log.error("SQL执行异常", e1);

log.error(e1.getMessage());

}

if (tableExist) {

Connection con = null;

Statement stmt = null;

try {

con = DriverManager.getConnection(jdbcHiveUrl, hiveUser,

hivePwd);

stmt = con.createStatement();

ResultSet resultSet = null;

log.info("sql:" + "select * from " + tableName + " limit 1");

resultSet = stmt.executeQuery("select * from " + tableName

+ " limit 1");

result = new ArrayList<>();

// 获取列名

ResultSetMetaData metaData = resultSet.getMetaData();

for (int i = 0; i < metaData.getColumnCount(); i++) {

// resultSet数据下标从1开始

String columnName = metaData.getColumnName(i + 1);

result.add(columnName);

}

} catch (SQLException e) {

log.error("SQL执行异常", e);

log.error(e.getMessage());

} finally {// 释放资源

try {

if (null != stmt)

stmt.close();

if (null != con)

con.close();

} catch (SQLException e) {

log.error("hive链接关闭异常", e);

e.printStackTrace();

}

}

}

}

return result;

}

@Override

public Map<String, String[]> getTablesCol(String url, long resourceId,

String userName, String password, String goOnTableName,

String tableName) {

Map<String, String[]> map = new HashMap<String, String[]>();

try {

String jdbcMysqlDriver = Messages.getString("jdbc_mysql_driver");

if (url.contains("jdbc:oracle")) {

jdbcMysqlDriver = Messages.getString("jdbc_oracle_driver");

} else if (url.contains("jdbc:sqlserver")) {

jdbcMysqlDriver = Messages.getString("jdbc_sqlserver_driver");

} else if (url.contains("jdbc:sybase:Tds")) {

jdbcMysqlDriver = Messages.getString("jdbc_sybase_driver");

}

Class.forName(jdbcMysqlDriver);

} catch (ClassNotFoundException e) {

log.error("jdbc链接异常", e);

e.printStackTrace();

}

Connection con;

try {

con = DriverManager.getConnection(url, userName, password);

Statement stmt = con.createStatement();

Boolean id = false;

String sql = null;

String sqltableComments = "";//查找表对应的comments字段的SQL语句

String sqlColumnInfo = "";//查找表中所有字段的信息

if (url.contains("jdbc:oracle")) {

sql = "select * from " + tableName + " where rownum<=1";

sqltableComments = "select comments from user_tab_comments WHERE table_name = '"+tableName+"'";

sqlColumnInfo = "select COLUMN_NAME,DATA_TYPE from user_tab_columns where table_name = '"+tableName+"'";

} else if (url.contains("jdbc:sqlserver")) {

sql = "select top 1 * from " + tableName;

sqltableComments = "select * from TABLES where TABLE_SCHEMA='my_db' and table_name='"+tableName+"'";

sqlColumnInfo = "select * from INFORMATION_SCHEMA.columns where table_name = '"+tableName+"'";

} else if (url.contains("jdbc:sybase:Tds")) {

sql = "select top 1 * from " + tableName;

} else {

sql = "select * from " + tableName + " limit 1";

sqltableComments = "SHOW TABLE STATUS LIKE '" + tableName + "'";

sqlColumnInfo = "show full fields from " + tableName;

}

log.info("sql" + sql);

String[] tableRemarkInfo = new String[1];

ResultSet colsSet = stmt.executeQuery(sql);

System.out.println(sql);

ResultSetMetaData data = colsSet.getMetaData();

int count = data.getColumnCount();

String[] resourceIds = {resourceId + ""};

String[] cols = new String[count];

String[] colsNameAndType = new String[count];//存储字段名和字段类型 added by XH 2016-2-16 10:15:58

String[] colsRemarks = new String[count];//存储字段备注

String[] parColumn = {""};

colsSet.close(); //查完表信息先关

if (!sqltableComments.isEmpty() && !sqlColumnInfo.isEmpty()) {

ResultSet tableRemarkSet = stmt.executeQuery(sqltableComments);

while (tableRemarkSet.next()) {

if (url.contains("jdbc:mysql")) {

tableRemarkInfo[0] = tableRemarkSet.getString("Comment");

} else if(url.contains("jdbc:oracle")){

tableRemarkInfo[0] = tableRemarkSet.getString("comments");

}else{

tableRemarkInfo[0] = tableRemarkSet.getString(1);

}

break;

}

tableRemarkSet.close();

ResultSet colSet = stmt.executeQuery(sqlColumnInfo);

int i = 0;

while (colSet.next()) {

String ColumnName = "";

String ColumnType = "";

String ColumnRemark = "";

if(url.contains("jdbc:oracle")){

ColumnName = colSet.getString("COLUMN_NAME");

ColumnType = colSet.getString("DATA_TYPE");

String sqlcolumnComment = "select comments from user_col_comments where table_name='"+tableName+"' and COLUMN_NAME = '"+ColumnName+"'";

ResultSet columnCommentSet = stmt.executeQuery(sqlcolumnComment);

while(columnCommentSet.next()){

ColumnRemark = columnCommentSet.getString("comments");

break;

}

columnCommentSet.close();

}

cols[i] = ColumnName + " " + "String";

colsNameAndType[i] = ColumnName + " " + ColumnType;//设置字段名和字段类型

colsRemarks[i++] = ColumnRemark;

}

colSet.close();

} else {

for (int i = 1; i <= count; i++) {

String cloName = data.getColumnName(i); //字段名 commtens added by XH 2016-2-3 10:44:19

String cloType = data.getColumnTypeName(i);// 字段类型 comments added by XH 2016-2-3 10:44:34

cols[i - 1] = cloName + " " + "String";

colsNameAndType[i - 1] = cloName + " " + cloType;//设置字段名和字段类型

if (parColumn[0].equals("")) {

if (!cloType.equals("DATE")) {

parColumn[0] = cloName;

}

}

}

}

if (goOnTableName == null || goOnTableName.equals("")

|| goOnTableName.equals(" ")) {

id = true;

} else {

if (tableName.equals(goOnTableName))

id = true;

}

if (id) {

//导入hive表新增一个导入平台时间字段

String colsTime = "load_bigdata_time" + " " + "String";//创建hive表字段

String colsNameAndTypeTime = "load_bigdata_time" + " " + "datetime";//保存到元数据字段表字段

if(!isHaveStr(cols, "load_bigdata_time")) {//导入的表中不存在新增字段

// 字段数组中增加新增字段元素

List<String> listCol = new ArrayList<String>();

List<String> listColAndType = new ArrayList<String>();

List<String> listColsRemark = new ArrayList<String>();

for (int j = 0; j < cols.length; j++) {

listCol.add(cols[j]);

listColAndType.add(colsNameAndType[j]);

listColsRemark.add(colsRemarks[j]);

}

listCol.add(colsTime);

listColAndType.add(colsNameAndTypeTime);

listColsRemark.add("导入平台时间");

// 返回String型的数组

cols = listCol.toArray(new String[0]);//创建hive表用

colsNameAndType = listColAndType.toArray(new String[0]);//保存元数据字段表用

colsRemarks = listColsRemark.toArray(new String[0]);//保存元数据字段表用

}

map.put(tableName, cols);

String[] talbelNames = {tableName};

map.put("tableName", talbelNames);

map.put("resourceId", resourceIds);

map.put("partitionColumn", parColumn);

map.put("colsNameAndType", colsNameAndType);

map.put("tableRemark", tableRemarkInfo);

map.put("colsRemark", colsRemarks);

}

//tmt.close();

//ctmt.close();

stmt.close();

con.close();

} catch (SQLException e) {

log.error("SQL执行异常", e);

// TODO Auto-generated catch block

e.printStackTrace();

} finally {

}

return map;

}

/**

* 此方法有两个参数,第一个是要查找的字符串数组,第二个是要查找的字符或字符串

* @param strs

* @param s

* @return true包含,false不包含

*/

public static boolean isHaveStr(String[] strs,String s){

for(int i=0;i<strs.length;i++){

if(strs[i].indexOf(s)!=-1){//循环查找字符串数组中的每个字符串中是否包含所有查找的内容

return true;//查找到了就返回真,不在继续查询

}

}

return false;//没找到返回false

}

@Override

public boolean grantTableSelectToUser(String tableName, String username) {

boolean flag = false;

try {

String sql = "grant select on table " + tableName + " to user " + username;

log.info("数据库授权语句" + sql);

Class.forName(jdbcHiveDriver);

Connection con = DriverManager.getConnection(jdbcHiveUrl, hiveUser, hivePwd);

Statement statement = con.createStatement();

statement.execute(sql);

statement.close();

con.close();

System.out.println(sql);

flag = true;

} catch (Exception e) {

log.error("hive链接出错", e);

e.printStackTrace();

}

return flag;

}

@Override

public boolean revokeTableSelectFromUser(String tableName, String username) {

boolean flag = false;

try {

String sql = "revoke select on table " + tableName + " from user " + username;

log.info("sql" + sql);

Class.forName(jdbcHiveDriver);

Connection con = DriverManager.getConnection(jdbcHiveUrl, hiveUser, hivePwd);

Statement statement = con.createStatement();

statement.execute(sql);

statement.close();

con.close();

System.out.println(sql);

flag = true;

} catch (Exception e) {

log.error("hive链接出错", e);

e.printStackTrace();

}

return flag;

}

@Override

public boolean importMongoDBToHive(List<String> list, String tableName, String mongoUrl) {

Connection con = null; //定义链接并初始化

Statement statement = null; //定义事务并初始化

//得到hive链接,若失败,抛出异常并且返回

try {

Class.forName(jdbcHiveDriver);

con = DriverManager.getConnection(jdbcHiveUrl, hiveUser, hivePwd);

statement = con.createStatement();

} catch (Exception e) {

log.error("hive链接出错", e);

e.printStackTrace();

return false;

}

String dropIfExistsTable = "drop table if exists " + tableName + "_temp";

log.info("dropIfExistsTable" + dropIfExistsTable);

try {

statement.execute(dropIfExistsTable);

} catch (SQLException e1) {

log.error("SQL异常", e1);

e1.printStackTrace();

return false;

}

//根据传入的值,得到创建hive与mongodb关联sql语句

StringBuffer createSql = new StringBuffer();

createSql.append("create external table " + tableName + "_temp (");

for (int i = 0; i < list.size(); i++) {

createSql.append(list.get(i) + " String");

if (i != list.size() - 1) {

createSql.append(",");

}

}