http://docs.python.org/3/library/re.html

Code:

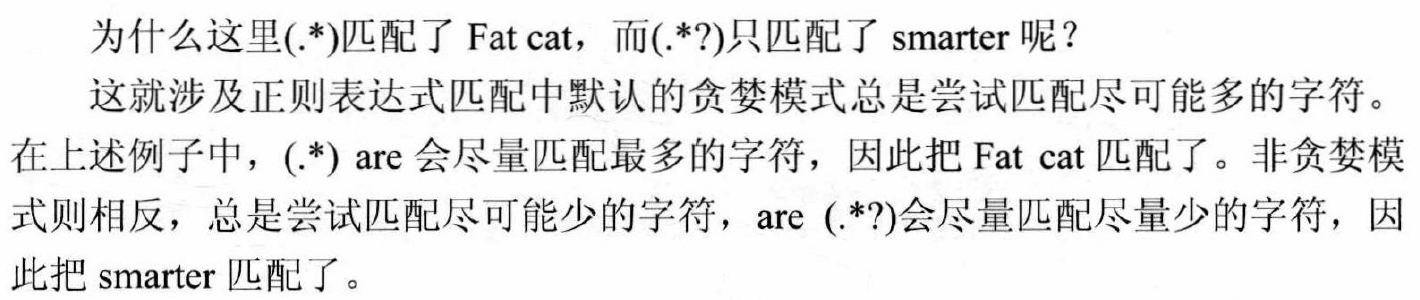

import re

line = "Fat cats are smarter than dogs, is it right?"

m = re.match( r'(.*) are (.*?) ', line)

print("Match result:", m)

print("Start and end of the match:", m.span())

print("Entire match:", m.group(0))

print("First group:", m.group(1))

print("Second group:", m.group(2))

print("All groups:", m.groups())

Result:

C:\ProgramData\Anaconda3\python.exe E:/python/work/ana_test/test.py

Match result: <re.Match object; span=(0, 21), match='Fat cats are smarter '>

Start and end of the match: (0, 21)

Entire match: Fat cats are smarter

First group: Fat cats

Second group: smarter

All groups: ('Fat cats', 'smarter')

Process finished with exit code 0
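The second group in the pattern above uses the lazy quantifier `(.*?)`, which is why it stops at the first space after "smarter". As a minimal sketch (the variable names and the non-matching sentence are illustrative assumptions, not part of the original notes), the same line can be matched with a greedy second group to see the difference, and `re.match` can be checked for `None` before calling `.groups()`:

```python
import re

line = "Fat cats are smarter than dogs, is it right?"

# Lazy second group: stops at the first space after "are ".
lazy = re.match(r'(.*) are (.*?) ', line)
# Greedy second group: extends to the last space in the line.
greedy = re.match(r'(.*) are (.*) ', line)

# re.match returns None on failure, so guard before reading groups.
lazy_groups = lazy.groups() if lazy else ()
greedy_groups = greedy.groups() if greedy else ()

print(lazy_groups)    # ('Fat cats', 'smarter')
print(greedy_groups)  # ('Fat cats', 'smarter than dogs, is it')

# A sentence without " are " does not match at all.
no_match = re.match(r'(.*) are (.*?) ', "No such verb here")
print(no_match)       # None
```

Calling `.group()` or `.span()` directly on a failed match raises `AttributeError`, so the `None` check is worth keeping in any script that handles unknown input.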

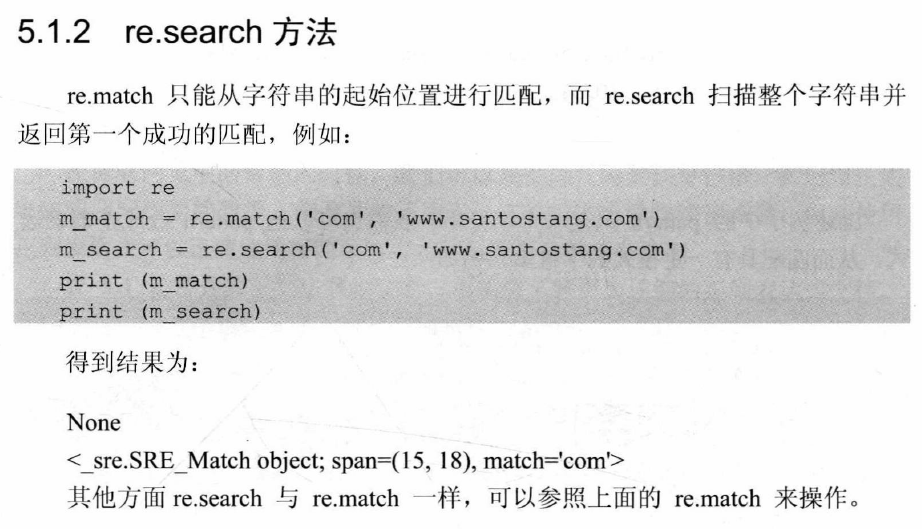

Greedy mode ([0-9]+ matches as many consecutive digits as possible):

import re

m_match = re.match('[0-9]+', '12345 is the first number, 23456 is the second')

m_search = re.search('[0-9]+', 'The first number is 12345, 23456 is the second')

m_findall = re.findall('[0-9]+', '12345 is the first number, 23456 is the second')

print (m_match.group())

print (m_search.group())

print (m_findall)

Result:

C:\ProgramData\Anaconda3\python.exe E:/python/work/ana_test/test.py

12345

12345

['12345', '23456']

Process finished with exit code 0
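The three calls above differ in where they look: `re.match` only tries the start of the string, `re.search` scans for the first match anywhere, and `re.findall` returns every non-overlapping match. The sketch below runs all three on one string that does not start with a digit, and adds a lazy `[0-9]+?` for contrast with the greedy form (the variable names are illustrative, not from the original notes):

```python
import re

text = 'The first number is 12345, 23456 is the second'

at_start = re.match(r'[0-9]+', text)        # None: the text starts with 'T'
first = re.search(r'[0-9]+', text)          # first run of digits anywhere
all_numbers = re.findall(r'[0-9]+', text)   # every non-overlapping run
lazy_first = re.search(r'[0-9]+?', text)    # lazy: as few digits as possible

print(at_start)            # None
print(first.group())       # 12345
print(all_numbers)         # ['12345', '23456']
print(lazy_first.group())  # 1
```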

Code:

import requests

import re

link = "http://www.santostang.com/"

headers = {'User-Agent' : 'Mozilla/5.0 (Windows; U; Windows NT 6.1; en-US; rv:1.9.1.6) Gecko/20091201 Firefox/3.5.6'}

r = requests.get(link, headers= headers)

html = r.text

title_list = re.findall('<h1 class="post-title"><a href=.*?>(.*?)</a></h1>', html)

print (title_list)

Result:

['第四章 – 4.3 通过selenium 模拟浏览器抓取', '第四章 – 4.2 解析真实地址抓取', '第四章- 动态网页抓取 (解析真实地址 + selenium)', 'Hello world!']
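The lazy `.*?` in the title pattern matters as soon as several headings appear in the same stretch of HTML. The following offline sketch uses a made-up HTML string (the markup, links, and titles are assumptions for illustration, not the real page) to compare the lazy pattern with a greedy variant:

```python
import re

# Hypothetical sample markup, two headings with no line break between them.
sample_html = (
    '<h1 class="post-title"><a href="/post/1">First post</a></h1>'
    '<h1 class="post-title"><a href="/post/2">Second post</a></h1>'
)

# Lazy quantifiers stop at the nearest closing tag, one match per heading.
pattern = re.compile(r'<h1 class="post-title"><a href=.*?>(.*?)</a></h1>')
titles = pattern.findall(sample_html)
print(titles)         # ['First post', 'Second post']

# Greedy quantifiers swallow everything up to the last heading.
greedy_pattern = re.compile(r'<h1 class="post-title"><a href=.*>(.*)</a></h1>')
greedy_titles = greedy_pattern.findall(sample_html)
print(greedy_titles)  # ['Second post']
```

Regular expressions of this kind depend on the exact attribute order and spacing of the markup, which is one reason the later examples switch to an HTML parser.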

Code:

import requests

from bs4 import BeautifulSoup

link = "http://www.santostang.com/"

headers = {'User-Agent' : 'Mozilla/5.0 (Windows; U; Windows NT 6.1; en-US; rv:1.9.1.6) Gecko/20091201 Firefox/3.5.6'}

r = requests.get(link, headers=headers)

soup = BeautifulSoup(r.text, "lxml")

first_title = soup.find("h1", class_="post-title").a.text.strip()

print("Title of the first post:", first_title)

title_list = soup.find_all("h1", class_="post-title")

for i, h1 in enumerate(title_list, start=1):

    title = h1.a.text.strip()

    print('Title of post %s: %s' % (i, title))

Result:

C:\ProgramData\Anaconda3\python.exe E:/python/work/ana_test/test.py

Title of the first post: 第四章 – 4.3 通过selenium 模拟浏览器抓取

Title of post 1: 第四章 – 4.3 通过selenium 模拟浏览器抓取

Title of post 2: 第四章 – 4.2 解析真实地址抓取

Title of post 3: 第四章- 动态网页抓取 (解析真实地址 + selenium)

Title of post 4: Hello world!

Process finished with exit code 0
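The script above assumes every `h1.post-title` contains a link; if one does not, `.a` is `None` and `.text` raises `AttributeError`. A minimal offline sketch of a more defensive loop follows. The sample markup is invented for illustration, and the built-in `"html.parser"` is used here only so the snippet does not depend on lxml being installed:

```python
from bs4 import BeautifulSoup

# Hypothetical markup: the second heading has no <a> inside it.
sample_html = """
<h1 class="post-title"><a href="/post/1"> First post </a></h1>
<h1 class="post-title">No link here</h1>
<h1 class="post-title"><a href="/post/2">Second post</a></h1>
"""

soup = BeautifulSoup(sample_html, "html.parser")

titles = []
for h1 in soup.find_all("h1", class_="post-title"):
    if h1.a is None:
        # Skip headings without a link instead of crashing on .text
        continue
    titles.append(h1.a.get_text(strip=True))

for number, title in enumerate(titles, start=1):
    print(f"Post {number}: {title}")
```

`get_text(strip=True)` does the same job as `.text.strip()` in the original script.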

Code:

import re

html = """

<html>

<body>

<header id="header">

<h1 id="name">

唐松Santos

</h1>

<div class="sns">

<a href="http://www.santostang.com/feed/" rel="nofollow" target="_blank" title="RSS">

<i aria-hidden="true" class="fa fa-rss">

</i>

</a>

<a href="http://weibo.com/santostang" rel="nofollow" target="_blank" title="Weibo">

<i aria-hidden="true" class="fa fa-weibo">

</i>

</a>

<a href="https://www.linkedin.com/in/santostang" rel="nofollow" target="_blank" title="Linkedin">

<i aria-hidden="true" class="fa fa-linkedin">

</i>

</a>

<a href="mailto:tangsongsky@gmail.com" rel="nofollow" target="_blank" title="envelope">

<i aria-hidden="true" class="fa fa-envelope">

</i>

</a>

</div>

<div class="nav">

<ul>

<li>

<a href="http://www.santostang.com/">

首页

</a>

</li>

<li>

<a href="http://www.santostang.com/sample-page/">

关于我

</a>

</li>

<li>

<a href="http://www.santostang.com/python%e7%bd%91%e7%bb%9c%e7%88%ac%e8%99%ab%e4%bb%a3%e7%a0%81/">

爬虫书代码

</a>

</li>

<li>

<a href="http://www.santostang.com/%e5%8a%a0%e6%88%91%e5%be%ae%e4%bf%a1/">

加我微信

</a>

</li>

<li>

<a href="https://santostang.github.io/">

EnglishSite

</a>

</li>

</ul>

</div>

</header>

</body>

</html>

"""

from bs4 import BeautifulSoup

soup = BeautifulSoup(html, "lxml")

print(soup.prettify())

print('----------- 1 -----------\n')

print(soup.header.h1)

print('----------- 2 -----------\n')

print(soup.header.div.contents)

print('----------- 3 -----------\n')

print(soup.header.div.contents[1])

print('----------- 4 -----------\n')

for child in soup.header.div.children:

    print(child)

print('----------- 5 -----------\n')

for child in soup.header.div.descendants:

    print(child)

print('----------- 6 -----------\n')

a_tag = soup.header.div.a

a_tag.parent  # bare expression: prints nothing when run as a script

print('----------- 7 -----------\n')

soup.find_all('div', class_='sns')  # result discarded; wrap in print() to display it

print('----------- 8 -----------\n')

for tag in soup.find_all(re.compile("^h")):

    print(tag.name)

print('----------- 9 -----------\n')

soup.select("header h1")  # result discarded; wrap in print() to display it

print('----------- 10 -----------\n')

print(soup.select("header > h1"))

print('----------- 11 -----------\n')

print(soup.select("div > a"))

print('----------- 12 -----------\n')

print(soup.select('a[href^="http://www.santostang.com/"]'))

print('----------- 13 -----------\n')

Result:

C:\ProgramData\Anaconda3\python.exe E:/python/work/ana_test/test.py

<html>

<body>

<header id="header">

<h1 id="name">

唐松Santos

</h1>

<div class="sns">

<a href="http://www.santostang.com/feed/" rel="nofollow" target="_blank" title="RSS">

<i aria-hidden="true" class="fa fa-rss">

</i>

</a>

<a href="http://weibo.com/santostang" rel="nofollow" target="_blank" title="Weibo">

<i aria-hidden="true" class="fa fa-weibo">

</i>

</a>

<a href="https://www.linkedin.com/in/santostang" rel="nofollow" target="_blank" title="Linkedin">

<i aria-hidden="true" class="fa fa-linkedin">

</i>

</a>

<a href="mailto:tangsongsky@gmail.com" rel="nofollow" target="_blank" title="envelope">

<i aria-hidden="true" class="fa fa-envelope">

</i>

</a>

</div>

<div class="nav">

<ul>

<li>

<a href="http://www.santostang.com/">

首页

</a>

</li>

<li>

<a href="http://www.santostang.com/sample-page/">

关于我

</a>

</li>

<li>

<a href="http://www.santostang.com/python%e7%bd%91%e7%bb%9c%e7%88%ac%e8%99%ab%e4%bb%a3%e7%a0%81/">

爬虫书代码

</a>

</li>

<li>

<a href="http://www.santostang.com/%e5%8a%a0%e6%88%91%e5%be%ae%e4%bf%a1/">

加我微信

</a>

</li>

<li>

<a href="https://santostang.github.io/">

EnglishSite

</a>

</li>

</ul>

</div>

</header>

</body>

</html>

----------- 1 -----------

<h1 id="name">

唐松Santos

</h1>

----------- 2 -----------

['\n', <a href="http://www.santostang.com/feed/" rel="nofollow" target="_blank" title="RSS">

<i aria-hidden="true" class="fa fa-rss">

</i>

</a>, '\n', <a href="http://weibo.com/santostang" rel="nofollow" target="_blank" title="Weibo">

<i aria-hidden="true" class="fa fa-weibo">

</i>

</a>, '\n', <a href="https://www.linkedin.com/in/santostang" rel="nofollow" target="_blank" title="Linkedin">

<i aria-hidden="true" class="fa fa-linkedin">

</i>

</a>, '\n', <a href="mailto:tangsongsky@gmail.com" rel="nofollow" target="_blank" title="envelope">

<i aria-hidden="true" class="fa fa-envelope">

</i>

</a>, '\n']

----------- 3 -----------

<a href="http://www.santostang.com/feed/" rel="nofollow" target="_blank" title="RSS">

<i aria-hidden="true" class="fa fa-rss">

</i>

</a>

----------- 4 -----------

<a href="http://www.santostang.com/feed/" rel="nofollow" target="_blank" title="RSS">

<i aria-hidden="true" class="fa fa-rss">

</i>

</a>

<a href="http://weibo.com/santostang" rel="nofollow" target="_blank" title="Weibo">

<i aria-hidden="true" class="fa fa-weibo">

</i>

</a>

<a href="https://www.linkedin.com/in/santostang" rel="nofollow" target="_blank" title="Linkedin">

<i aria-hidden="true" class="fa fa-linkedin">

</i>

</a>

<a href="mailto:tangsongsky@gmail.com" rel="nofollow" target="_blank" title="envelope">

<i aria-hidden="true" class="fa fa-envelope">

</i>

</a>

----------- 5 -----------

<a href="http://www.santostang.com/feed/" rel="nofollow" target="_blank" title="RSS">

<i aria-hidden="true" class="fa fa-rss">

</i>

</a>

<i aria-hidden="true" class="fa fa-rss">

</i>

<a href="http://weibo.com/santostang" rel="nofollow" target="_blank" title="Weibo">

<i aria-hidden="true" class="fa fa-weibo">

</i>

</a>

<i aria-hidden="true" class="fa fa-weibo">

</i>

<a href="https://www.linkedin.com/in/santostang" rel="nofollow" target="_blank" title="Linkedin">

<i aria-hidden="true" class="fa fa-linkedin">

</i>

</a>

<i aria-hidden="true" class="fa fa-linkedin">

</i>

<a href="mailto:tangsongsky@gmail.com" rel="nofollow" target="_blank" title="envelope">

<i aria-hidden="true" class="fa fa-envelope">

</i>

</a>

<i aria-hidden="true" class="fa fa-envelope">

</i>

----------- 6 -----------

----------- 7 -----------

----------- 8 -----------

html

header

h1

----------- 9 -----------

----------- 10 -----------

[<h1 id="name">

唐松Santos

</h1>]

----------- 11 -----------

[<a href="http://www.santostang.com/feed/" rel="nofollow" target="_blank" title="RSS">

<i aria-hidden="true" class="fa fa-rss">

</i>

</a>, <a href="http://weibo.com/santostang" rel="nofollow" target="_blank" title="Weibo">

<i aria-hidden="true" class="fa fa-weibo">

</i>

</a>, <a href="https://www.linkedin.com/in/santostang" rel="nofollow" target="_blank" title="Linkedin">

<i aria-hidden="true" class="fa fa-linkedin">

</i>

</a>, <a href="mailto:tangsongsky@gmail.com" rel="nofollow" target="_blank" title="envelope">

<i aria-hidden="true" class="fa fa-envelope">

</i>

</a>]

----------- 12 -----------

[<a href="http://www.santostang.com/feed/" rel="nofollow" target="_blank" title="RSS">

<i aria-hidden="true" class="fa fa-rss">

</i>

</a>, <a href="http://www.santostang.com/">

首页

</a>, <a href="http://www.santostang.com/sample-page/">

关于我

</a>, <a href="http://www.santostang.com/python%e7%bd%91%e7%bb%9c%e7%88%ac%e8%99%ab%e4%bb%a3%e7%a0%81/">

爬虫书代码

</a>, <a href="http://www.santostang.com/%e5%8a%a0%e6%88%91%e5%be%ae%e4%bf%a1/">

加我微信

</a>]

----------- 13 -----------

Process finished with exit code 0
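The selector calls in sections 9 to 12 return lists of tags; in practice the next step is usually to pull out an attribute or the text. The sketch below does that on a much smaller, made-up document (the `example.com` links and the names `direct_links`, `http_links`, and `parent_classes` are assumptions for illustration), again with the built-in `"html.parser"` so it does not depend on lxml:

```python
from bs4 import BeautifulSoup

# Hypothetical markup with the same shape as the page header above.
sample_html = """
<header id="header">
  <h1 id="name">Sample Name</h1>
  <div class="sns">
    <a href="http://example.com/feed/" title="RSS"></a>
    <a href="mailto:someone@example.com" title="envelope"></a>
  </div>
  <div class="nav">
    <ul><li><a href="http://example.com/about/">About</a></li></ul>
  </div>
</header>
"""

soup = BeautifulSoup(sample_html, "html.parser")

# "header > h1": h1 that is a direct child of header.
name = soup.select_one("header > h1").get_text(strip=True)

# "div > a": only links that are direct children of a div,
# so the link nested inside <li> is not included.
direct_links = [a["href"] for a in soup.select("div > a")]

# [href^="..."]: links whose href starts with the given prefix.
http_links = [a.get("href") for a in soup.select('a[href^="http://example.com/"]')]

# .parent walks one level up; the class attribute comes back as a list.
parent_classes = soup.select_one("div.sns > a").parent.get("class")

print(name)
print(direct_links)
print(http_links)
print(parent_classes)
```

`tag["href"]` raises `KeyError` when the attribute is missing, while `tag.get("href")` returns `None`, so `.get` is the safer choice on pages you do not control.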

Code:

import requests

from lxml import etree

link = "http://www.santostang.com/"

headers = {'User-Agent' : 'Mozilla/5.0 (Windows; U; Windows NT 6.1; en-US; rv:1.9.1.6) Gecko/20091201 Firefox/3.5.6'}

r = requests.get(link, headers= headers)

html = etree.HTML(r.text)

title_list = html.xpath('//h1[@class="post-title"]/a/text()')

print (title_list)
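The XPath `//h1[@class="post-title"]/a/text()` reads as: find every `h1` whose `class` attribute is `post-title`, step into its `a` child, and return the text. As a rough offline illustration of that path structure, the sketch below uses the standard library's `xml.etree.ElementTree` as a stand-in for lxml. This is a swapped-in technique: ElementTree supports only a subset of XPath (no `text()` step) and needs well-formed markup, and the sample document is invented:

```python
import xml.etree.ElementTree as ET

# Hypothetical, well-formed sample document.
sample = """
<html>
  <body>
    <h1 class="post-title"><a href="/post/1">First post</a></h1>
    <h1 class="other"><a href="/x">Not a post title</a></h1>
    <h1 class="post-title"><a href="/post/2">Second post</a></h1>
  </body>
</html>
"""

root = ET.fromstring(sample)

# ".//h1[@class='post-title']/a" mirrors the lxml XPath without text();
# the text is read from each matched element's .text attribute instead.
links = root.findall(".//h1[@class='post-title']/a")
title_list = [a.text for a in links]
href_list = [a.get("href") for a in links]

print(title_list)  # ['First post', 'Second post']
print(href_list)   # ['/post/1', '/post/2']
```

With lxml itself, `etree.HTML` tolerates the malformed markup found on real pages, which ElementTree does not.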

(To be added later.)

The above covers Chapter 5 (Part 1) of python网络爬虫从入门到实践, collected and organized by 幽默萝莉.
