俯视机器学习

数学有多伤人,就有多销魂!

第9章 神经网络

1. 简介

模型术语:

- 神经元

- 输入(训练数据)

- 输出

- 激活函数

- 损失函数(交叉熵、平方误差)

- 权重(训练参数)

优化术语:

- 优化方法(优化器):梯度下降法

- 学习步长

训练术语:

- batch size

- iteration

- epoch

模型特点:多个局部最小值

直观理解为什么 work。

2. 矩阵求导

逐元素函数:

如果

σ

(

X

)

sigma(X)

σ(X) 是一个逐元素函数,则

d

σ

(

X

)

=

σ

′

(

X

)

⊙

d

X

{rm d}sigma(X) = sigma'(X)odot {rm d}X

dσ(X)=σ′(X)⊙dX

其中

X

X

X 可以为向量或矩阵,

σ

′

sigma'

σ′ 同样为逐元素函数,

⊙

odot

⊙ 代表逐元素相乘。例如

σ

(

h

)

=

1

1

+

e

−

h

sigma(h) = frac{1}{1+e^{-h}}

σ(h)=1+e−h1

其中

σ

sigma

σ 是一个逐元素函数,

h

∈

R

d

hin R^d

h∈Rd 是一个

d

d

d 维向量。则返回的

σ

(

h

)

sigma(h)

σ(h) 也是一个

n

n

n 维向量,且有

σ

(

h

)

i

=

1

1

+

e

−

h

i

,

i

=

1

,

⋯

,

d

sigma(h)_i = frac{1}{1+e^{-h_i}}, i=1,cdots, d

σ(h)i=1+e−hi1,i=1,⋯,d 。则

d

σ

(

h

)

=

(

1

1

+

e

−

h

)

′

d

h

=

e

−

h

(

1

+

e

−

h

)

2

d

h

{rm d}sigma(h) = (frac{1}{1+e^{-h}})'{rm d}h = frac{e^{-h}}{(1+e^{-h})^2}{rm d}h

dσ(h)=(1+e−h1)′dh=(1+e−h)2e−hdh

注意区分逐元素函数与普通函数区别。逐元素函数可以把输入向量或矩阵的单独元素输入,返回一个值。而普通函数把向量或矩阵当作一个整体进行运算。

另外,

σ

(

x

)

=

s

i

g

m

o

i

d

(

x

)

sigma(x) = sigmoid(x)

σ(x)=sigmoid(x) 函数有一个很好的性质,即:

σ

′

(

x

)

=

σ

(

x

)

(

1

−

σ

(

x

)

)

sigma'(x) = sigma(x)(1-sigma(x))

σ′(x)=σ(x)(1−σ(x))

3. 全连接神经网络公式推导

推导2层全连接前馈神经网络。输如

x

∈

R

d

xin R^d

x∈Rd ,两个隐藏层权重为

W

1

W_1

W1 、

W

2

W_2

W2 。第1层和第2层输出分别为

h

1

h_1

h1 和

h

2

h_2

h2 。中间用

s

i

g

m

o

i

d

sigmoid

sigmoid 函数,损失函数采用平方误差和,则

h

1

=

W

1

x

s

1

=

σ

(

h

1

)

h

2

=

W

2

s

1

l

=

1

2

∥

h

2

−

y

∥

2

2

=

1

2

(

h

2

−

y

)

T

(

h

2

−

y

)

begin{aligned} h_1 &= W_1 x\ s_1 &= sigma(h_1) \ h_2 &= W_2 s_1 \ l &= frac{1}{2}Vert h_2 - yVert_2^2 = frac{1}{2}(h_2 - y)^T (h_2 - y) end{aligned}

h1s1h2l=W1x=σ(h1)=W2s1=21∥h2−y∥22=21(h2−y)T(h2−y)

整个推导想要的结果为:

∂

l

∂

W

1

,

∂

l

∂

W

2

frac{partial l}{partial W_1}, frac{partial l}{partial W_2}

∂W1∂l,∂W2∂l

(1)

d

l

=

d

h

2

T

(

h

2

−

y

)

+

(

h

2

−

y

)

T

d

h

2

t

r

(

d

l

)

=

t

r

(

(

h

2

−

y

)

T

d

h

2

)

∂

l

∂

h

2

=

h

2

−

y

begin{aligned} dl &= dh_2^T (h_2 - y) + (h_2 - y)^T dh_2 \ tr(dl) &= tr((h_2 - y)^T dh_2)\ frac{partial l}{partial h_2} &= h_2 - y end{aligned}

dltr(dl)∂h2∂l=dh2T(h2−y)+(h2−y)Tdh2=tr((h2−y)Tdh2)=h2−y

(2)

d

l

=

t

r

(

∂

l

∂

h

2

T

d

h

2

)

=

t

r

(

∂

l

∂

h

2

T

d

(

W

2

s

1

)

)

=

t

r

(

∂

l

∂

h

2

T

d

W

2

s

1

)

+

t

r

(

∂

l

∂

h

2

T

W

2

d

s

1

)

∂

l

∂

W

2

=

∂

l

∂

h

2

s

1

T

∂

l

∂

s

1

=

W

2

T

∂

l

∂

h

2

begin{aligned} dl &= tr(frac{partial l}{partial h_2}^T dh_2) \ &= trleft(frac{partial l}{partial h_2}^T d(W_2 s_1)right) \ &= trleft(frac{partial l}{partial h_2}^T dW_2 s_1right) + trleft(frac{partial l}{partial h_2}^T W_2 ds_1right) \ frac{partial l}{partial W_2} &= frac{partial l}{partial h_2} s_1^T \ frac{partial l}{partial s_1} &= W_2^Tfrac{partial l}{partial h_2} \ end{aligned}

dl∂W2∂l∂s1∂l=tr(∂h2∂lTdh2)=tr(∂h2∂lTd(W2s1))=tr(∂h2∂lTdW2s1)+tr(∂h2∂lTW2ds1)=∂h2∂ls1T=W2T∂h2∂l

(3)

t

r

(

∂

l

∂

s

1

T

d

s

1

)

=

t

r

(

∂

l

∂

s

1

T

d

σ

(

h

1

)

)

=

t

r

(

∂

l

∂

s

1

T

[

σ

′

(

h

1

)

⊙

d

h

1

]

)

=

t

r

(

[

∂

l

∂

s

1

⊙

σ

′

(

h

1

)

]

T

d

h

1

)

∂

l

∂

h

1

=

∂

l

∂

s

1

⊙

σ

′

(

h

1

)

=

∂

l

∂

s

1

⊙

[

σ

(

h

1

)

⊙

(

1

−

σ

(

h

1

)

)

]

begin{aligned} trleft(frac{partial l}{partial s_1}^T ds_1right) &= trleft(frac{partial l}{partial s_1}^T dsigma(h_1)right) \ &= trleft(frac{partial l}{partial s_1}^T [sigma'(h_1)odot dh_1]right) \ &= trleft([frac{partial l}{partial s_1}odot sigma'(h_1)]^T dh_1right) \ frac{partial l}{partial h_1} &= frac{partial l}{partial s_1}odot sigma'(h_1) =frac{partial l}{partial s_1}odot [sigma(h_1) odot (1-sigma(h_1))] end{aligned}

tr(∂s1∂lTds1)∂h1∂l=tr(∂s1∂lTdσ(h1))=tr(∂s1∂lT[σ′(h1)⊙dh1])=tr([∂s1∂l⊙σ′(h1)]Tdh1)=∂s1∂l⊙σ′(h1)=∂s1∂l⊙[σ(h1)⊙(1−σ(h1))]

(4)

d

l

=

t

r

(

∂

l

∂

h

1

T

d

h

1

)

=

t

r

(

∂

l

∂

h

1

T

d

(

W

1

x

)

)

=

t

r

(

∂

l

∂

h

1

T

d

W

1

x

)

∂

l

∂

W

1

=

∂

l

∂

h

1

x

T

begin{aligned} dl &= tr(frac{partial l}{partial h_1}^T dh_1) \ &= trleft(frac{partial l}{partial h_1}^T d(W_1 x)right) \ &= trleft(frac{partial l}{partial h_1}^T dW_1 xright)\ frac{partial l}{partial W_1} &= frac{partial l}{partial h_1} x^T \ end{aligned}

dl∂W1∂l=tr(∂h1∂lTdh1)=tr(∂h1∂lTd(W1x))=tr(∂h1∂lTdW1x)=∂h1∂lxT

如果损失函数取交叉熵,参考此处,则

l

=

−

y

T

log

s

o

f

t

m

a

x

(

h

2

)

l = -y^T log {rm softmax}(h_2)

l=−yTlogsoftmax(h2)

其中

s

o

f

t

m

a

x

{rm softmax}

softmax 函数的定义为对向量

z

∈

R

n

zin R^n

z∈Rn

[

s

o

f

t

m

a

x

(

z

)

]

i

=

e

z

i

∑

j

=

1

n

e

z

j

[{rm softmax}(z)]_i = frac{e^{z_i}} {sum_{j=1}^n e^{z_j}}

[softmax(z)]i=∑j=1nezjezi

最终获得的结果也为一个

n

n

n 维向量。

则

∂

l

∂

h

2

=

s

o

f

t

m

a

x

(

h

2

)

−

y

frac{partial l}{partial h_2} = {rm softmax}(h_2) - y

∂h2∂l=softmax(h2)−y

4. 实战:手写数字识别(2层全连接网络)

数据集介绍:

- 数据来源:E. Alpaydin, C. Kaynak

- 5000+图片,每张大小为 8 × 8 8times 8 8×8 ,拉成一维列表,故得 64 个特征。本次实战只采用 100 个数字,0-9 每个数字 10 个。

- 目标:根据输入的图片,对数字进行分类。

import numpy as np

import pandas as pd

from sklearn.datasets import load_digits

import matplotlib.pyplot as plt

def sigmoid(x):

return 1./(1 + np.exp(-x))

def softmax(x):

return np.exp(x) / np.exp(x).sum()

def onehot(y, n):

yy = np.zeros(n)

yy[y] = 1.

return yy.reshape(-1, 1)

X, y = load_digits(return_X_y=True)

X = X[:100] # 只取前面100个数字测试,0-9每个数字10个

y = y[:100]

N = 128

nClass = 10

eta = 1e-3

epoch = 4

W1 = np.random.randn(N, X.shape[1])

W2 = np.random.randn(nClass, N)

loss = []

for e in range(20):

lss = []

for _ in range(1000):

i = np.random.choice(np.arange(len(X)))

x = X[i, :].reshape(-1, 1) # 变成列向量

yi = onehot(y[i], nClass) # 变成列向量

# 正向计算

h1 = (W1 @ x).reshape(-1, 1)

s1 = sigmoid(h1)

h2 = (W2 @ s1).reshape(-1, 1)

ls = -yi.T @ np.log(softmax(h2))

lss.append(ls[0,0])

# 反向传播

plph2 = h2 - yi

plpW2 = plph2 * s1.T

plps1 = W2.T @ plph2

plph1 = plps1 * (sigmoid(h1) * (1 - sigmoid(h1)))

plpW1 = plph1 @ x.T

# 更新权重

W1 -= eta * plpW1

W2 -= eta * plpW2

loss.append(np.mean(lss))

eta *= 0.6

# 准确率

H1 = (W1 @ X.T)

S1 = sigmoid(H1)

H2 = (W2 @ S1)

# acc

acc = np.sum(H2.argmax(axis=0) == y) / len(y)

print(f'Accurancy = {acc}')

# 绘图

plt.plot(loss)

plt.show()

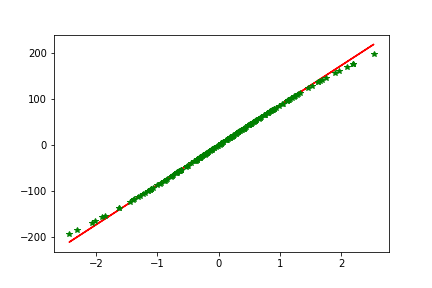

5. 实战:神经网络回归问题

X, y = make_regression(n_samples=200, n_features=1, random_state=1)

N = 128

eta = 0.003

epoch = 200

W1 = np.random.randn(N, X.shape[1])

W2 = np.random.randn(1, N)

loss = []

for e in range(epoch):

lss = []

for i in range(len(X)):

x = X[i, :].reshape(-1, 1) # 变成列向量

yi = np.array([y[i]]) # 变成列向量

# 正向计算

h1 = (W1 @ x).reshape(-1, 1)

s1 = sigmoid(h1)

h2 = (W2 @ s1).reshape(-1, 1)

ls = np.linalg.norm(h2.ravel() - yi)

lss.append(ls)

# 反向传播

plph2 = h2 - yi

plpW2 = plph2 * s1.T #+ 0.0001 * W2

plps1 = W2.T @ plph2

plph1 = plps1 * (sigmoid(h1) * (1 - sigmoid(h1)))

plpW1 = plph1 @ x.T #+ 0.0001 * W1

# 更新权重

W1 -= eta * plpW1

W2 -= eta * plpW2

loss.append(np.mean(lss))

# if e % 10 == 0:

# print(np.linalg.norm(W1, 'fro'))

H1 = (W1 @ X.T)

S1 = sigmoid(H1)

H2 = (W2 @ S1)

# 绘图

plt.plot(X.ravel(), y, 'r', X.ravel(), H2.ravel(), 'g*')

plt.show()

6. Sklearn 中的神经网络

class sklearn.neural_network.MLPRegressor(hidden_layer_sizes=(100, ), activation=’relu’, *, solver=’adam’, alpha=0.0001,batch_size=’auto’, learning_rate=’constant’, learning_rate_init=0.001, power_t=0.5, max_iter=200, shuffle=True, random_state=None, tol=0.0001, verbose=False, warm_start=False, momentum=0.9, nesterovs_momentum=True,early_stopping=False, validation_fraction=0.1, beta_1=0.9, beta_2=0.999, epsilon=1e-08, n_iter_no_change=10, max_fun=15000)

- 参数

hidden_layer_sizes [tuple, length = n_layers - 2, default=(100,)]隐藏层activation [{‘identity’, ‘logistic’, ‘tanh’, ‘relu’}, default=’relu’]激活函数batch_size [int, default=’auto’]批处理数据量learning_rate [{‘constant’, ‘invscaling’, ‘adaptive’}, default=’constant’]学习率learning_rate_init [double, default=0.001]学习率初始值max_iter [int, default=200]最大迭代次数shuffle [bool, default=True]是否打乱每次迭代的样本顺序

- 属性

loss_ [float]损失coefs_ [list, length n_layers - 1]系数intercepts_ [list, length n_layers - 1]截距

- 方法

fit(X, y)predict(X)score(X, y[, sample_weight])

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

from sklearn.datasets import load_digits, make_regression

from sklearn.neural_network import MLPClassifier, MLPRegressor

# 分类

X, y = load_digits(return_X_y=True)

X = X[:100] # 只取前面100个数字测试,0-9每个数字10个

y = y[:100]

clf = MLPClassifier((128,)).fit(X, y)

clf.score(X, y)

# 回归

X, y = make_regression(n_samples=200, n_features=1, random_state=1)

print(X.shape)

clf = MLPRegressor((128,), max_iter=200, learning_rate_init=0.2).fit(X, y)

ypred = clf.predict(X)

plt.plot(X.ravel(), y, 'r', X.ravel(), ypred, 'g*')

plt.show()

版权申明:本教程版权归创作人所有,未经许可,谢绝转载!

交流讨论QQ群:784117704

部分视频观看地址:b站搜索“火力教育”

课件下载地址:QQ群文件(有最新更新) or 百度网盘PDF课件及代码

链接:https://pan.baidu.com/s/1lc8c7yDc30KY1L_ehJAfDg

提取码:u3ls

最后

以上就是聪慧吐司最近收集整理的关于第9章 BP神经网络(算法原理+手编实现+库调用) 俯视机器学习 第9章 神经网络的全部内容,更多相关第9章内容请搜索靠谱客的其他文章。

![BP 神经网络train训练函数[net=train(net,inputn,outputn)]](https://www.shuijiaxian.com/files_image/reation/bcimg4.png)

发表评论 取消回复