Hardware preparation

VirtualBox is installed on Windows 10, using bridged networking over the wireless adapter.

The virtual machine's address is 192.168.1.188.

cat /etc/issue

Ubuntu 16.04.2 LTS \n \l

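Other software already installed (none of it is required except the JDK)

Redis: /usr/bin/redis-server and redis-cli

Java: /usr/bin/java

SonarQube: /usr/local/sonar/sonarqube-5.6.6/bin/linux-x86-64/sonar.sh start, listening on port 9000 by default

MySQL server and client: username and password are both root

PHP 7.0

Apache 2: apachectl -v reports 2.4.10

The rz/sz file transfer tools

Jenkins: service jenkins start, listening on port 8080 by default; username and password are both tongbo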

Software preparation

JDK

root@ubuntu:/usr/bin# /usr/local/java/jdk1.8.0_121/bin/java -version

java version "1.8.0_121"

Java(TM) SE Runtime Environment (build 1.8.0_121-b13)

Java HotSpot(TM) 64-Bit Server VM (build 25.121-b13, mixed mode)

root@ubuntu:/usr/bin#

root@ubuntu:/home/tb# /usr/local/java/jdk1.8.0_121/bin/jps

2050 Jps

1533 jenkins.war

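Hadoop official download page

You can upload the archive to the VM with the rz/sz tools. After extracting it, my Hadoop installation directory is: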

/home/tb/tbdown/hadoop-2.8.2

tar zxf hadoop-2.8.2.tar.gz

root@ubuntu:/home/tb/tbdown# ls

dump.rdb hadoop-2.8.2-src hadoop-2.8.2.tar.gz nginx-1.8.1.tar.gz

hadoop-2.8.2 hadoop-2.8.2-src.tar.gz nginx-1.8.1 spider111

Verify that Hadoop is installed correctly

root@ubuntu:/home/tb/tbdown/hadoop-2.8.2# ./bin/hadoop version

Hadoop 2.8.2

Subversion https://git-wip-us.apache.org/repos/asf/hadoop.git -r 66c47f2a01ad9637879e95f80c41f798373828fb

Compiled by jdu on 2017-10-19T20:39Z

Compiled with protoc 2.5.0

From source with checksum dce55e5afe30c210816b39b631a53b1d

This command was run using /home/tb/tbdown/hadoop-2.8.2/share/hadoop/common/hadoop-common-2.8.2.jar

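Modify the configuration

Note: all operations are performed in the Hadoop installation directory (/home/tb/tbdown/hadoop-2.8.2/).

If you need to change the IP configuration, restart networking afterwards: /etc/init.d/networking restart

vim /etc/hosts

Add one line: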

127.0.0.1 tb001
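If you prefer to script the hosts change rather than edit the file by hand, the following is a minimal sketch. The function name add_host_entry is made up for illustration, and the demo works on a scratch file; for real use you would point it at /etc/hosts as root.

```shell
#!/bin/sh
# Append "IP NAME" to a hosts-style file only if NAME is not already mapped.
# Usage: add_host_entry FILE IP NAME   (hypothetical helper, not part of Hadoop)
add_host_entry() {
    hosts_file=$1; ip=$2; name=$3
    # Look for NAME as a whole field on any non-comment line.
    if awk -v n="$name" '!/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$hosts_file"; then
        echo "$name already present in $hosts_file"
    else
        printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
        echo "added $ip $name to $hosts_file"
    fi
}

# Demo against a scratch copy so nothing on the system is modified.
demo=$(mktemp)
printf '127.0.0.1 localhost\n' > "$demo"
add_host_entry "$demo" 127.0.0.1 tb001
add_host_entry "$demo" 127.0.0.1 tb001   # second call changes nothing
cat "$demo"
rm -f "$demo"
```

Running it twice leaves a single tb001 line, which makes the step safe to repeat.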

Next, edit the configuration files under ./etc/hadoop in the Hadoop installation directory.

root@ubuntu:/home/tb/tbdown/hadoop-2.8.2/etc/hadoop# ls

capacity-scheduler.xml hadoop-policy.xml kms-log4j.properties ssl-client.xml.example

configuration.xsl hdfs-site.xml kms-site.xml ssl-server.xml.example

container-executor.cfg httpfs-env.sh log4j.properties yarn-env.cmd

core-site.xml httpfs-log4j.properties mapred-env.cmd yarn-env.sh

hadoop-env.cmd httpfs-signature.secret mapred-env.sh yarn-site.xml

hadoop-env.sh httpfs-site.xml mapred-queues.xml.template

hadoop-metrics2.properties kms-acls.xml mapred-site.xml.template

hadoop-metrics.properties kms-env.sh slaves

Before changing any configuration file, it is best to back up the original.

vim hadoop-env.sh

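Change the export JAVA_HOME line, which is the first setting in the file. If Java is already configured globally, no change is needed; otherwise set JAVA_HOME to the path of your JDK, for example export JAVA_HOME=/usr/local/java/jdk1.8.0_121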
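The same edit can be made non-interactively. This is only a sketch: set_java_home is a hypothetical helper, it relies on GNU sed (the default on Ubuntu), and it assumes the JDK path contains no | or & characters. The demo runs on a scratch file rather than the real hadoop-env.sh.

```shell
#!/bin/sh
# Set JAVA_HOME in a hadoop-env.sh style file.
# Usage: set_java_home FILE JDK_DIR   (hypothetical helper)
set_java_home() {
    env_file=$1; jdk_dir=$2
    if grep -q '^export JAVA_HOME=' "$env_file"; then
        # Rewrite the existing export line in place (GNU sed -i).
        sed -i "s|^export JAVA_HOME=.*|export JAVA_HOME=${jdk_dir}|" "$env_file"
    else
        # No export line yet, so append one.
        printf 'export JAVA_HOME=%s\n' "$jdk_dir" >> "$env_file"
    fi
}

# Demo on a scratch file that mimics the stock line.
demo=$(mktemp)
printf '# sample hadoop-env.sh\nexport JAVA_HOME=${JAVA_HOME}\n' > "$demo"
set_java_home "$demo" /usr/local/java/jdk1.8.0_121
grep '^export JAVA_HOME=' "$demo"
rm -f "$demo"
```

For the real file, the call would be set_java_home etc/hadoop/hadoop-env.sh /usr/local/java/jdk1.8.0_121 from the Hadoop installation directory.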

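Copy the template configuration file to create your own mapred-site.xml. YARN is Hadoop's resource management system, and the setting below tells MapReduce to run on it.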

root@ubuntu:/home/tb/tbdown/hadoop-2.8.2/etc/hadoop# cp mapred-site.xml.template mapred-site.xml

vim mapred-site.xml

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>

vim core-site.xml

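If you did not add the mapping to /etc/hosts, replace tb001 below with localhost. fs.defaultFS sets Hadoop's default file system, which here is HDFS. Clients connect to the NameNode service on port 8020, and the HDFS daemons also use this property to determine the NameNode's host and port.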

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://tb001:8020</value>

</property>

</configuration>
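A malformed fs.defaultFS value stops the NameNode from starting, so a quick sanity check of the file can save a debugging round. The sketch below is one way to do it with standard tools; get_default_fs and check_default_fs are hypothetical helper names, and the pattern only accepts the simple hdfs://host:port form used in this post.

```shell
#!/bin/sh
# Print the fs.defaultFS value found in a core-site.xml style file.
get_default_fs() {
    # Join all lines first so <name> and <value> may sit on separate lines.
    tr '\n' ' ' < "$1" |
        sed -n 's|.*<name>fs\.defaultFS</name>[[:space:]]*<value>\([^<]*\)</value>.*|\1|p'
}

# Succeed only if the value looks like hdfs://host:port.
check_default_fs() {
    value=$(get_default_fs "$1")
    if printf '%s\n' "$value" | grep -Eq '^hdfs://[A-Za-z0-9._-]+:[0-9]+$'; then
        echo "OK: $value"
    else
        echo "BAD: '$value' is not of the form hdfs://host:port" >&2
        return 1
    fi
}

# Demo on a scratch file with the value from this post.
demo=$(mktemp)
cat > "$demo" <<'EOF'
<configuration>
<property>
<name>fs.defaultFS</name>
<value>hdfs://tb001:8020</value>
</property>
</configuration>
EOF
check_default_fs "$demo"
rm -f "$demo"
```

With Hadoop on the PATH, hdfs getconf -confKey fs.defaultFS prints the value Hadoop itself resolves, which is a useful cross-check.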

vim hdfs-site.xml

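The first property is the replication factor. The default is 3, but this is a single-machine, pseudo-distributed setup, so it is set to 1; with 3, HDFS reports errors when replicating a block because it cannot copy the block to three DataNodes.

The replication count of each file is shown in the output of hadoop fs -ls.

The second and third properties set two directories. They are created automatically once configured and Hadoop is started. By default they live under /tmp; on a virtual machine, be sure to choose a path outside /tmp.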

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>/home/tb/hadoop/dfs/name</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>/home/tb/hadoop/dfs/data</value>

</property>
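Once HDFS is running, the replication factor of each file appears in the second column of hadoop fs -ls (directories show a dash there). A small sketch for pulling that column out; list_replication is a hypothetical helper, and the sample lines are illustrative rather than captured from this machine.

```shell
#!/bin/sh
# Print "replication path" for each file in `hadoop fs -ls` output read from stdin.
# Directories carry "-" in the replication column and are skipped,
# as is the "Found N items" header (too few fields).
list_replication() {
    awk 'NF >= 8 && $2 != "-" { print $2, $NF }'
}

# Illustrative sample input, not real output from the cluster in this post.
printf '%s\n' \
  'Found 2 items' \
  '-rw-r--r--   1 root supergroup       1366 2017-11-04 17:00 /input/README.txt' \
  'drwxr-xr-x   - root supergroup          0 2017-11-04 17:00 /tmp' |
  list_replication

# Real use, from the Hadoop installation directory:
#   ./bin/hadoop fs -ls / | list_replication
```

Paths containing spaces would need more careful parsing; the sketch assumes there are none.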

vim yarn-site.xml

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

vim slaves

On a single machine, either localhost or the host name you mapped in /etc/hosts will work.

The default is localhost.

Start the services

Before starting the NameNode for the first time, format it. Run the format only once: running it again later wipes all existing data.

root@ubuntu:/home/tb/tbdown/hadoop-2.8.2# ./bin/hadoop namenode -format

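After formatting, the directory configured as dfs.namenode.name.dir (the first directory setting in hdfs-site.xml) is populated: a dfs directory and its subdirectories now exist.

(Starting the DataNode creates the second configured directory, dfs.datanode.data.dir; see below.)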

root@ubuntu:/home/tb/hadoop# pwd

/home/tb/hadoop

root@ubuntu:/home/tb/hadoop# tree ./

./
└── dfs
    └── name
        └── current
            ├── fsimage_0000000000000000000
            ├── fsimage_0000000000000000000.md5
            ├── seen_txid
            └── VERSION

3 directories, 4 files
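Because a second format destroys existing metadata, it can help to guard the format step. The sketch below treats the presence of current/VERSION, visible in the tree output, as the sign that formatting already happened. is_formatted is a hypothetical helper, and the path is the dfs.namenode.name.dir value used in this post.

```shell
#!/bin/sh
# Succeed if the NameNode metadata directory has already been formatted,
# judged by the presence of current/VERSION.
is_formatted() {
    [ -f "$1/current/VERSION" ]
}

name_dir=/home/tb/hadoop/dfs/name   # dfs.namenode.name.dir from hdfs-site.xml
if is_formatted "$name_dir"; then
    echo "$name_dir is already formatted; skipping format"
else
    echo "$name_dir is not formatted; run: ./bin/hadoop namenode -format"
fi
```

The script only reports what it finds; whether to run the format automatically is left to you.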

Start the NameNode

root@ubuntu:/home/tb/tbdown/hadoop-2.8.2# ./sbin/hadoop-daemon.sh start namenode

starting namenode, logging to /home/tb/tbdown/hadoop-2.8.2/logs/hadoop-root-namenode-ubuntu.out

root@ubuntu:/home/tb/tbdown/hadoop-2.8.2#

How do we know whether the NameNode started? The jps output below shows no NameNode process, so it did not start. Let's debug the error.

/usr/local/java/jdk1.8.0_121/bin/jps

1533 jenkins.war

3215 Jps
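Checking jps output by eye gets tedious when restarting daemons repeatedly. A minimal sketch of the same check as a function; has_daemon is a hypothetical name, and it matches the class name exactly so that SecondaryNameNode does not count as NameNode.

```shell
#!/bin/sh
# Succeed if jps output on stdin lists a JVM whose name is exactly $1.
has_daemon() {
    awk -v name="$1" '$2 == name { found = 1 } END { exit !found }'
}

# Sample input copied from the jps output shown above, where NameNode is absent.
if printf '1533 jenkins.war\n3215 Jps\n' | has_daemon NameNode; then
    echo "NameNode is running"
else
    echo "NameNode is NOT running"
fi

# Real use:
#   /usr/local/java/jdk1.8.0_121/bin/jps | has_daemon NameNode
```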

Debugging the error

Since startup failed, check the log:

root@ubuntu:/home/tb/tbdown/hadoop-2.8.2# cd logs/

root@ubuntu:/home/tb/tbdown/hadoop-2.8.2/logs# ls

hadoop-root-namenode-ubuntu.log hadoop-root-namenode-ubuntu.out SecurityAuth-root.audit

root@ubuntu:/home/tb/tbdown/hadoop-2.8.2/logs# tail -f hadoop-root-namenode-ubuntu.log

at org.apache.hadoop.hdfs.server.namenode.NameNode.initialize(NameNode.java:682)

at org.apache.hadoop.hdfs.server.namenode.NameNode.<init>(NameNode.java:905)

at org.apache.hadoop.hdfs.server.namenode.NameNode.<init>(NameNode.java:884)

at org.apache.hadoop.hdfs.server.namenode.NameNode.createNameNode(NameNode.java:1610)

at org.apache.hadoop.hdfs.server.namenode.NameNode.main(NameNode.java:1678)

2017-11-04 16:42:22,937 INFO org.apache.hadoop.util.ExitUtil: Exiting with status 1

2017-11-04 16:42:22,939 INFO org.apache.hadoop.hdfs.server.namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at ubuntu/127.0.1.1

************************************************************/

The cause of the problem:

java.lang.IllegalArgumentException: Invalid URI for NameNode address (check fs.defaultFS): hdfs:://tb001:8020 has no authority.
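The tail of the log mostly shows stack frames and shutdown messages, so the line that matters is easy to miss. One way to surface it is to filter for error and exception lines. This is a sketch with a hypothetical helper name, show_log_errors, and the demo log is assembled from lines quoted in this post.

```shell
#!/bin/sh
# Print the last few ERROR/FATAL/exception lines of a log file, with line numbers.
# Usage: show_log_errors LOGFILE [COUNT]   (COUNT defaults to 5)
show_log_errors() {
    grep -nE ' (ERROR|FATAL) |Exception' "$1" | tail -n "${2:-5}"
}

# Demo on a scratch log built from lines shown in this post.
demo=$(mktemp)
cat > "$demo" <<'EOF'
java.lang.IllegalArgumentException: Invalid URI for NameNode address (check fs.defaultFS): hdfs:://tb001:8020 has no authority.
at org.apache.hadoop.hdfs.server.namenode.NameNode.main(NameNode.java:1678)
2017-11-04 16:42:22,937 INFO org.apache.hadoop.util.ExitUtil: Exiting with status 1
EOF
show_log_errors "$demo"
rm -f "$demo"
```

Against the real log the call would be show_log_errors logs/hadoop-root-namenode-ubuntu.log.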

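At first I read this as an authorization problem, but "has no authority" refers to the authority (host:port) part of the URI: the value I had originally typed for fs.defaultFS in core-site.xml was hdfs:://tb001:8020, with a stray extra colon. Correct it to hdfs://tb001:8020. Separately, set up passwordless SSH as follows [see the official documentation at the end of this post]. SSH lets the master node log in to the other nodes and operate them directly, so they do not have to be managed one by one, and sbin/start-dfs.sh relies on it.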

apt-get install ssh

sudo apt-get install pdsh

ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa

cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

chmod 0600 ~/.ssh/authorized_keys
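A quick way to confirm the key setup took effect is to check the file mode and then try a non-interactive login. The sketch below assumes GNU stat (the default on Ubuntu); has_mode is a hypothetical helper.

```shell
#!/bin/sh
# Succeed if FILE exists and has exactly the given octal mode (GNU stat).
has_mode() {
    [ -e "$1" ] && [ "$(stat -c '%a' "$1")" = "$2" ]
}

if has_mode "$HOME/.ssh/authorized_keys" 600; then
    echo "authorized_keys permissions look right"
else
    echo "authorized_keys is missing or not mode 600"
fi

# To confirm key-based login itself without being prompted for a password:
#   ssh -o BatchMode=yes localhost true && echo "passwordless ssh works"
```

BatchMode=yes makes ssh fail instead of prompting, which is the behavior the start scripts need.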

Start the NameNode again, and this time it works:

root@ubuntu:/home/tb/tbdown/hadoop-2.8.2# ./sbin/hadoop-daemon.sh start namenode

starting namenode, logging to /home/tb/tbdown/hadoop-2.8.2/logs/hadoop-root-namenode-ubuntu.out

root@ubuntu:/home/tb/tbdown/hadoop-2.8.2# /usr/local/java/jdk1.8.0_121/bin/jps

6658 NameNode

6731 Jps

1550 jenkins.war

The NameNode has started successfully; now start the rest.

Alternatively, the HDFS NameNode and DataNode can be started together by running sbin/start-dfs.sh:

root@ubuntu:/home/tb/tbdown/hadoop-2.8.2# sbin/start-dfs.sh

Starting namenodes on [tb001]

tb001: namenode running as process 6658. Stop it first.

localhost: starting datanode, logging to /home/tb/tbdown/hadoop-2.8.2/logs/hadoop-root-datanode-ubuntu.out

Starting secondary namenodes [0.0.0.0]

0.0.0.0: starting secondarynamenode, logging to /home/tb/tbdown/hadoop-2.8.2/logs/hadoop-root-secondarynamenode-ubuntu.out

root@ubuntu:/home/tb/tbdown/hadoop-2.8.2# /usr/local/java/jdk1.8.0_121/bin/jps

6658 NameNode

7235 Jps

7124 SecondaryNameNode

6942 DataNode

1550 jenkins.war

root@ubuntu:/home/tb/tbdown/hadoop-2.8.2#

Stop

root@ubuntu:/home/tb/tbdown/hadoop-2.8.2# sbin/stop-dfs.sh

Stopping namenodes on [tb001]

tb001: stopping namenode

localhost: stopping datanode

Stopping secondary namenodes [0.0.0.0]

0.0.0.0: stopping secondarynamenode

root@ubuntu:/home/tb/tbdown/hadoop-2.8.2#

Start Hive

root@ubuntu:/usr/local/apache-hive-2.2.0-bin/bin# ./hive

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/local/apache-hive-2.2.0-bin/lib/log4j-slf4j-impl-2.6.2.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/home/tb/tbdown/hadoop-2.8.2/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Logging initialized using configuration in file:/usr/local/apache-hive-2.2.0-bin/conf/hive-log4j2.properties Async: true

Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.

hive>

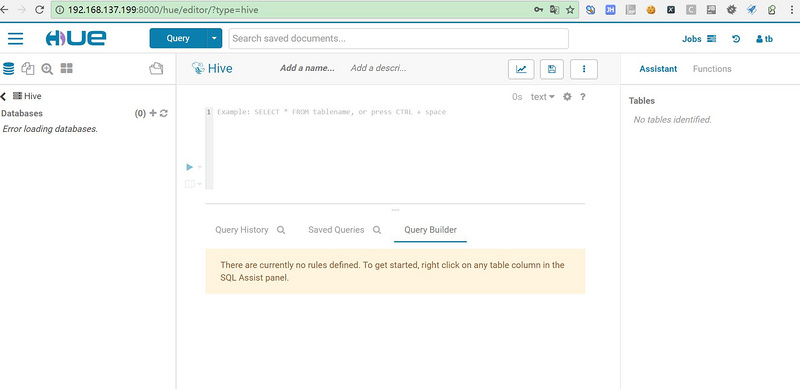

Start HiveServer2 for HUE

root@ubuntu:/home/tb/tbdown/hue# hive --service hiveserver2

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/local/apache-hive-2.2.0-bin/lib/log4j-slf4j-impl-2.6.2.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/home/tb/tbdown/hadoop-2.8.2/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Check that it is listening:

root@ubuntu:/home/tb/tbdown/hadoop-2.8.2/etc/hadoop# netstat -anp | grep 10000

tcp 0 0 0.0.0.0:10000 0.0.0.0:* LISTEN 5030/java

root@ubuntu:/home/tb/tbdown/hadoop-2.8.2/etc/hadoop#
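Grepping for 10000 also matches PIDs or other ports that happen to contain those digits. A slightly stricter check, sketched below with a hypothetical helper name, looks only at the local address column of TCP sockets in the LISTEN state.

```shell
#!/bin/sh
# Succeed if `netstat -an`-style output on stdin shows a TCP listener on port $1.
# Column 4 is the local address and column 6 the socket state.
port_listening() {
    awk -v p="$1" '$1 ~ /^tcp/ && $6 == "LISTEN" && $4 ~ (":" p "$") { found = 1 } END { exit !found }'
}

# Sample line copied from the netstat output shown above.
if printf 'tcp 0 0 0.0.0.0:10000 0.0.0.0:* LISTEN 5030/java\n' | port_listening 10000; then
    echo "port 10000 is listening"
else
    echo "port 10000 is not listening"
fi

# Real use:
#   netstat -an | port_listening 10000
```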

Start YARN

root@ubuntu:/home/tb/tbdown/hadoop-2.8.2# sbin/start-yarn.sh

starting yarn daemons

starting resourcemanager, logging to /home/tb/tbdown/hadoop-2.8.2/logs/yarn-root-resourcemanager-ubuntu.out

localhost: starting nodemanager, logging to /home/tb/tbdown/hadoop-2.8.2/logs/yarn-root-nodemanager-ubuntu.out

root@ubuntu:/home/tb/tbdown/hadoop-2.8.2# /usr/local/java/jdk1.8.0_121/bin/jps

9252 SecondaryNameNode

8918 NameNode

10039 Jps

9066 DataNode

1550 jenkins.war

9631 ResourceManager

root@ubuntu:/home/tb/tbdown/hadoop-2.8.2#

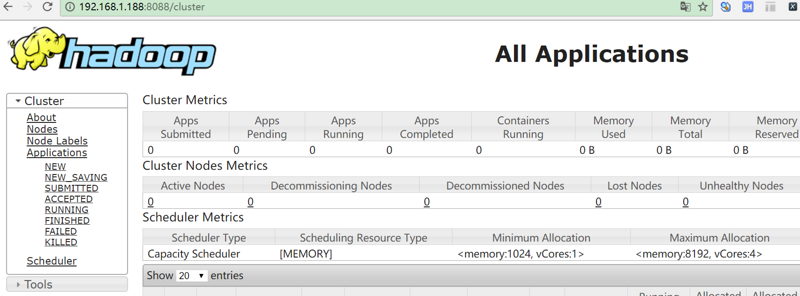

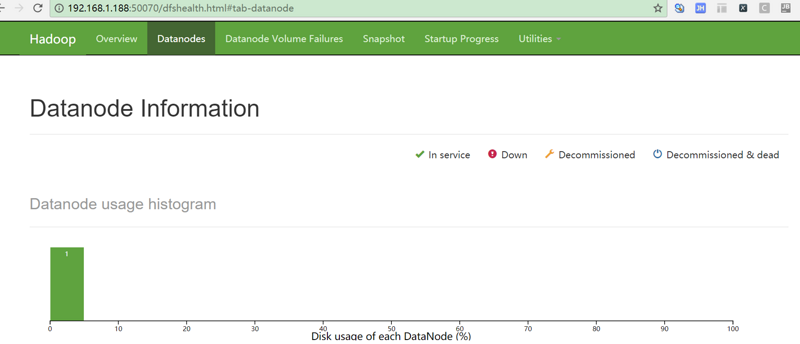

Verify that it works

YARN: http://192.168.1.188:8088/cluster

HDFS: http://192.168.1.188:50070/dfshealth.html#tab-overview
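The two web UIs can also be probed from the command line, assuming curl is installed. This is a rough sketch: http_status and ui_up are hypothetical helpers, and treating any 2xx or 3xx status as "up" is my own simplification.

```shell
#!/bin/sh
# Print the HTTP status code for URL; curl reports 000 when no response arrives.
http_status() {
    curl -s -o /dev/null --max-time 5 -w '%{http_code}' "$1"
}

# Succeed if URL answers with a 2xx or 3xx status.
ui_up() {
    case $(http_status "$1") in
        2??|3??) return 0 ;;
        *)       return 1 ;;
    esac
}

# Check every URL given on the command line; with no arguments nothing is probed.
for url in "$@"; do
    if ui_up "$url"; then
        echo "UP   $url"
    else
        echo "DOWN $url"
    fi
done
```

Saved as, say, check_ui.sh (a made-up file name), it could be run as sh check_ui.sh http://192.168.1.188:8088/cluster http://192.168.1.188:50070/dfshealth.html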

Install HUE

HUE on GitHub

HUE is written in Python, so the Python development package must be installed.

apt-get update

apt-get install python-dev

git clone https://github.com/cloudera/hue.git

cd hue

Run make apps:

cd /home/tb/tbdown/hue/maven && mvn install

/bin/bash: mvn: command not found

Makefile:122: recipe for target 'parent-pom' failed

make: *** [parent-pom] Error 127

root@ubuntu:/home/tb/tbdown/hue#

It looks like Maven (mvn) is not installed:

mvn -v

The program 'mvn' is currently not installed. You can install it by typing:

apt install maven

root@ubuntu:/home/tb/tbdown/hue# apt install maven

Install it:

apt install maven

Run mvn -v again:

Apache Maven 3.3.9

Maven home: /usr/share/maven

Java version: 1.8.0_121, vendor: Oracle Corporation

Java home: /usr/local/java/jdk1.8.0_121/jre

Default locale: en_US, platform encoding: UTF-8

OS name: "linux", version: "4.4.0-62-generic", arch: "amd64", family: "unix"

Run make apps again:

fatal error: libxml/xmlversion.h: No such file or directory

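Fix: run apt-get install libxml2-dev libxslt1-dev python-dev

Then run make apps once more.

Another failure. The relevant part of the error: ...7/src/kerberos.o sh: 1: krb5-conf..

After this much trouble I went back to the documentation and found that it states clearly which packages must be installed before building HUE:

You'll need these library development packages and tools installed on your system:

I should have read the documentation properly in the first place.

https://github.com/cloudera/h...

Then run make apps yet again.

After about 10 minutes it succeeded: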

...

426 static files copied to '/home/tb/tbdown/hue/build/static', 1426 post-processed.

make[1]: Leaving directory '/home/tb/tbdown/hue/apps'

...

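Then run build/env/bin/hue runserver

By default the server is reachable only from the local machine. To allow access from other hosts, run build/env/bin/hue runserver 0.0.0.0:8000

It starts successfully: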

[19/Jan/2018 00:27:26 +0000] __init__ INFO Couldn't import snappy. Support for snappy compression disabled.

0 errors found

January 19, 2018 - 00:27:26

Django version 1.6.10, using settings 'desktop.settings'

Starting development server at http://0.0.0.0:8000/

Quit the server with CONTROL-C.

HUE screenshot

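Further reading and references

Official installation guide

Automated installation and deployment

Ambari

Minos (Xiaomi's open-source Hadoop deployment tool)

Cloudera Manager (commercial)

Packaged distributions

HDP

CDH4 or CDH5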
