Deployment and installation plan

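Deploy ovs + dpdk. To keep the installation smooth and avoid pitfalls, I followed the installation guide on the OVS website strictly. OVS version 2.7 and DPDK version 16.11.1 were used.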

Official OVS installation guide: http://docs.openvswitch.org/en/latest/intro/install/dpdk/

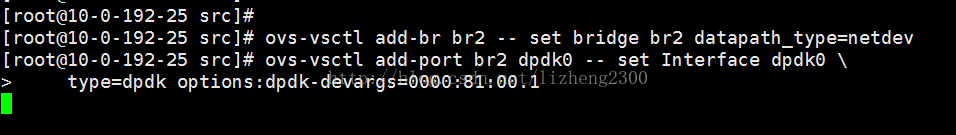

The installation itself went smoothly. Once it finished, an OVS bridge and port had to be added.

The commands are as follows (bridge br2, port dpdk0):

```shell
ovs-vsctl add-br br2 -- set bridge br2 datapath_type=netdev
ovs-vsctl add-port br2 dpdk0 -- set Interface dpdk0 type=dpdk options:dpdk-devargs=0000:81:00.1
```

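After the port was added, the terminal hung. The symptoms are shown below.

Checking the OVS processes showed that the ovs-vswitchd process had died: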

```
[root@10-0-192-25 src]# ps -aux | grep ovs
root 67164 0.0 0.0 17168 1536 ? Ss 11:07 0:00 ovsdb-server --remote=punix:/usr/local/var/run/openvswitch/db.sock --remote=db:Open_vSwitch,Open_vSwitch,manager_options --pidfile --detach
root 67735 0.0 0.0 112648 964 pts/0 S+ 11:10 0:00 grep --color=auto ovs
[root@10-0-192-25 src]#
```

Under normal conditions the output looks like this:

```
[root@10-0-192-25 src]# ps -aux | grep ovs
root 67918 0.0 0.0 17056 1268 ? Ss 11:11 0:00 ovsdb-server --remote=punix:/usr/local/var/run/openvswitch/db.sock --remote=db:Open_vSwitch,Open_vSwitch,manager_options --pidfile --detach
root 67975 16.0 0.0 1312112 2692 ? Ssl 11:11 0:01 ovs-vswitchd unix:/usr/local/var/run/openvswitch/db.sock --pidfile --detach --log-file
root 68015 0.0 0.0 112648 964 pts/0 R+ 11:11 0:00 grep --color=auto ovs
[root@10-0-192-25 src]#
```

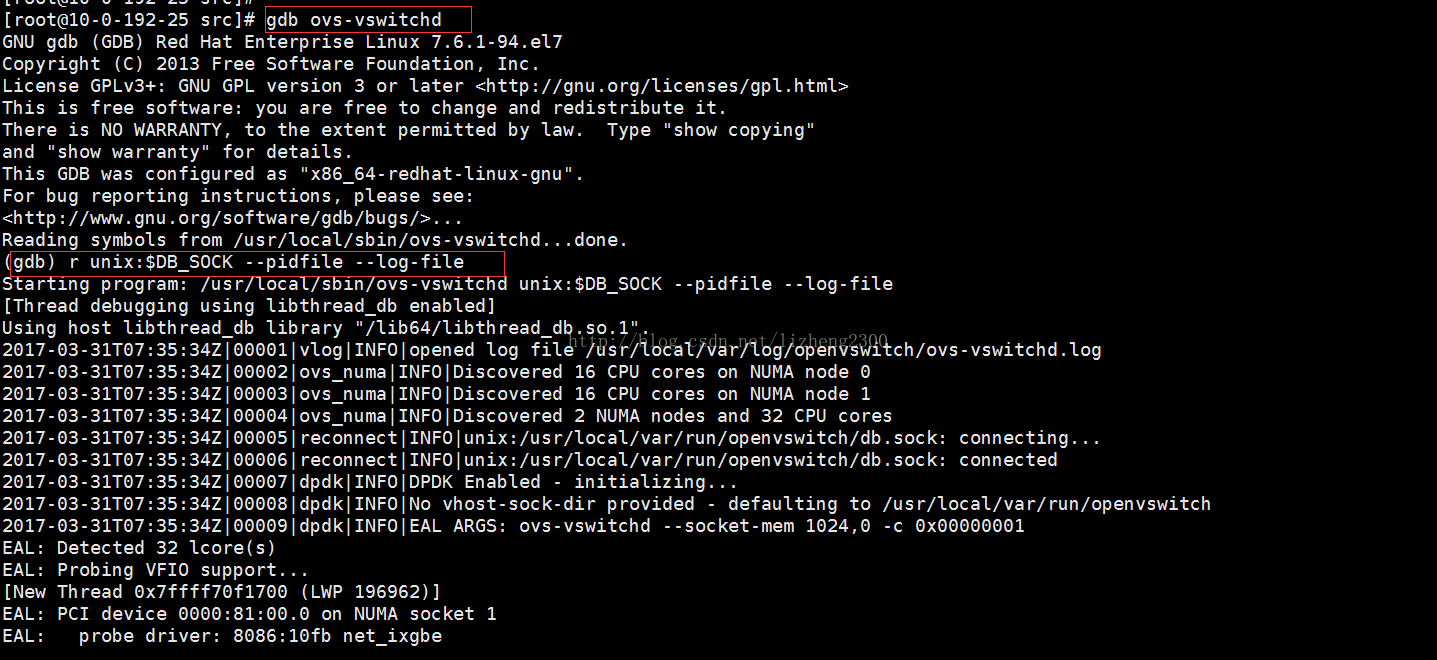

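Next, use gdb to find out where the problem occurs.

With ovs-vswitchd running under gdb, adding the OVS port again reproduced the problem. The crash is in ovs_list_insert, on the line elem->prev = before->prev;. The most likely cause is an invalid memory access through a null pointer: elem is 0x18, which looks like a NULL structure pointer plus the offset of one of its members. The call stack is: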

```
Program received signal SIGSEGV, Segmentation fault.
0x0000000000e78be7 in ovs_list_insert (before=0x1266290 <dpdk_mp_list>, elem=0x18) at ./include/openvswitch/list.h:124
124         elem->prev = before->prev;
Missing separate debuginfos, use: debuginfo-install glibc-2.17-157.el7_3.1.x86_64
(gdb) bt
#0  0x0000000000e78be7 in ovs_list_insert (before=0x1266290 <dpdk_mp_list>, elem=0x18)
    at ./include/openvswitch/list.h:124
#1  0x0000000000e78c35 in ovs_list_push_back (list=0x1266290 <dpdk_mp_list>, elem=0x18)
    at ./include/openvswitch/list.h:164
#2  0x0000000000e79388 in dpdk_mp_get (socket_id=1, mtu=2030) at lib/netdev-dpdk.c:533
#3  0x0000000000e79475 in netdev_dpdk_mempool_configure (dev=0x7fff7ffc55c0) at lib/netdev-dpdk.c:570
#4  0x0000000000e7f294 in netdev_dpdk_reconfigure (netdev=0x7fff7ffc55c0) at lib/netdev-dpdk.c:3134
#5  0x0000000000da7496 in netdev_reconfigure (netdev=0x7fff7ffc55c0) at lib/netdev.c:2001
#6  0x0000000000d7450c in port_reconfigure (port=0x15f7800) at lib/dpif-netdev.c:2952
#7  0x0000000000d7527f in reconfigure_datapath (dp=0x15bdca0) at lib/dpif-netdev.c:3273
#8  0x0000000000d70d87 in do_add_port (dp=0x15bdca0, devname=0x15bc700 "dpdk0", type=0xf55942 "dpdk", port_no=2)
    at lib/dpif-netdev.c:1351
#9  0x0000000000d70e7d in dpif_netdev_port_add (dpif=0x15614d0, netdev=0x7fff7ffc55c0, port_nop=0x7fffffffe1b8)
    at lib/dpif-netdev.c:1377
#10 0x0000000000d7afb4 in dpif_port_add (dpif=0x15614d0, netdev=0x7fff7ffc55c0, port_nop=0x7fffffffe20c)
    at lib/dpif.c:544
#11 0x0000000000d242a0 in port_add (ofproto_=0x15bc940, netdev=0x7fff7ffc55c0) at ofproto/ofproto-dpif.c:3342
#12 0x0000000000d0c726 in ofproto_port_add (ofproto=0x15bc940, netdev=0x7fff7ffc55c0, ofp_portp=0x7fffffffe374)
    at ofproto/ofproto.c:1998
#13 0x0000000000cf94fc in iface_do_create (br=0x15615b0, iface_cfg=0x15f8a80, ofp_portp=0x7fffffffe374,
    netdevp=0x7fffffffe378, errp=0x7fffffffe368) at vswitchd/bridge.c:1763
#14 0x0000000000cf9683 in iface_create (br=0x15615b0, iface_cfg=0x15f8a80, port_cfg=0x1565320) at vswitchd/bridge.c:1801
#15 0x0000000000cf6f2b in bridge_add_ports__ (br=0x15615b0, wanted_ports=0x1561690, with_requested_port=false)
    at vswitchd/bridge.c:912
#16 0x0000000000cf6fbc in bridge_add_ports (br=0x15615b0, wanted_ports=0x1561690) at vswitchd/bridge.c:928
#17 0x0000000000cf6510 in bridge_reconfigure (ovs_cfg=0x1588a80) at vswitchd/bridge.c:644
#18 0x0000000000cfc7a3 in bridge_run () at vswitchd/bridge.c:2961
#19 0x0000000000d01c78 in main (argc=4, argv=0x7fffffffe638) at vswitchd/ovs-vswitchd.c:111
(gdb)
```
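The value elem=0x18 is itself a clue: when a structure pointer is NULL, taking the address of one of its members in practice just yields that member's offset. The sketch below assumes that struct dpdk_mp in OVS 2.7 has the field order mp, mtu, socket_id, refcount, list_node; struct dpdk_mp_demo and struct list_node_demo are hypothetical copies made for illustration, not the real OVS types. It checks at compile time that list_node sits at offset 0x18 on a 64-bit target.

```c
#include <assert.h>
#include <stddef.h>

/* Doubly linked list node shaped like OVS's struct ovs_list. */
struct list_node_demo {
    struct list_node_demo *prev;
    struct list_node_demo *next;
};

/* Hypothetical copy of struct dpdk_mp, assuming the OVS 2.7 field order. */
struct dpdk_mp_demo {
    void *mp;                          /* stands in for struct rte_mempool * */
    int mtu;
    int socket_id;
    int refcount;
    struct list_node_demo list_node;   /* 8 + 4 + 4 + 4 = 20, padded to 24 */
};

/* With dmp == NULL, &dmp->list_node in practice evaluates to this offset. */
static size_t list_node_offset(void)
{
    return offsetof(struct dpdk_mp_demo, list_node);
}

/* On a 64-bit target (8-byte pointers, 4-byte int) the offset is 24 == 0x18,
 * the same value as elem=0x18 in the backtrace. */
static_assert(sizeof(void *) != 8 || offsetof(struct dpdk_mp_demo, list_node) == 0x18,
              "unexpected struct layout");
```

ovs_list_insert then writes through elem->prev, i.e. to address 0x18, which is what raises the SIGSEGV.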

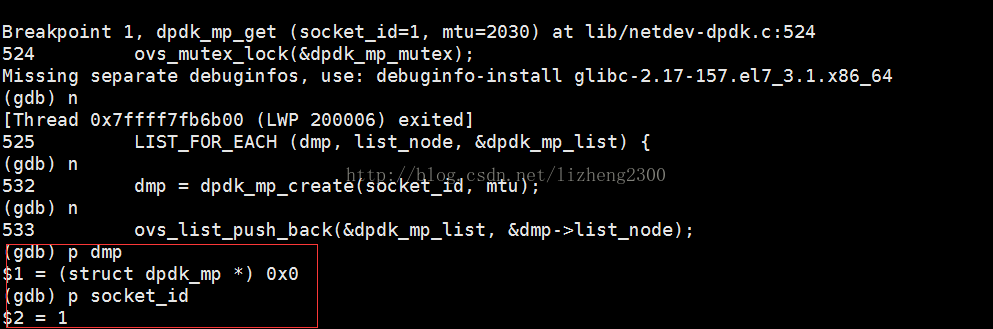

The function in question:

```c
static struct dpdk_mp *
dpdk_mp_get(int socket_id, int mtu)
{
    struct dpdk_mp *dmp;

    ovs_mutex_lock(&dpdk_mp_mutex);
    LIST_FOR_EACH (dmp, list_node, &dpdk_mp_list) {
        if (dmp->socket_id == socket_id && dmp->mtu == mtu) {
            dmp->refcount++;
            goto out;
        }
    }

    dmp = dpdk_mp_create(socket_id, mtu);
    // Problem: dpdk_mp_create() can return NULL, but the result is used
    // via &dmp->list_node without being checked first.
    ovs_list_push_back(&dpdk_mp_list, &dmp->list_node);

out:
    ovs_mutex_unlock(&dpdk_mp_mutex);

    return dmp;
}
```

Printing the return value of dmp = dpdk_mp_create(socket_id, mtu); in gdb confirmed that it was indeed NULL. ovs_list_push_back does no argument checking either; it uses the pointer as is.
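A NULL check before linking the new object into the list would turn this crash into an ordinary allocation failure that the caller can handle. The sketch below is a minimal, self-contained model of the dpdk_mp_get pattern, not a patch to OVS: mp_get, mp_create, socket_has_memory and the list helpers are hypothetical names, the mutex is omitted, and mp_create simply fails for socket 1 to mimic this case.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdlib.h>

/* Circular doubly linked list with a sentinel head, like struct ovs_list. */
struct list_node_demo {
    struct list_node_demo *prev;
    struct list_node_demo *next;
};

static void list_push_back(struct list_node_demo *head, struct list_node_demo *elem)
{
    assert(elem != NULL);   /* ovs_list_push_back() itself does not check this */
    elem->prev = head->prev;
    elem->next = head;
    head->prev->next = elem;
    head->prev = elem;
}

struct mp_demo {
    int socket_id;
    int mtu;
    int refcount;
    struct list_node_demo list_node;
};

static struct list_node_demo mp_list = { &mp_list, &mp_list };   /* empty list */

/* Hypothetical: socket 0 has hugepage memory, socket 1 has none. */
static const bool socket_has_memory[2] = { true, false };

/* Hypothetical stand-in for dpdk_mp_create(): returns NULL on failure. */
static struct mp_demo *mp_create(int socket_id, int mtu)
{
    if (socket_id < 0 || socket_id > 1 || !socket_has_memory[socket_id])
        return NULL;
    struct mp_demo *dmp = calloc(1, sizeof *dmp);
    if (dmp != NULL) {
        dmp->socket_id = socket_id;
        dmp->mtu = mtu;
        dmp->refcount = 1;
    }
    return dmp;
}

static struct mp_demo *mp_get(int socket_id, int mtu)
{
    for (struct list_node_demo *n = mp_list.next; n != &mp_list; n = n->next) {
        /* Recover the containing object from its embedded list node. */
        struct mp_demo *dmp = (struct mp_demo *)
            ((char *)n - offsetof(struct mp_demo, list_node));
        if (dmp->socket_id == socket_id && dmp->mtu == mtu) {
            dmp->refcount++;
            return dmp;
        }
    }

    struct mp_demo *dmp = mp_create(socket_id, mtu);
    if (dmp != NULL)                   /* the check missing in dpdk_mp_get() */
        list_push_back(&mp_list, &dmp->list_node);
    return dmp;                        /* caller must handle NULL */
}
```

With the check in place, a request for socket 1 returns NULL instead of writing to address 0x18, and the caller would then need to fail the port configuration gracefully rather than crash.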

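socket_id printed as 1. This turned out to be a key clue for the rest of the analysis: it means the memory is being requested on NUMA node 1.

Following this clue backwards, the question becomes why no memory could be allocated.

The failure is inside dpdk_mp_create, so first check which code paths in it return NULL.

Tracing with gdb showed that the NULL came from rte_mempool_create. rte_mempool_create is a DPDK library function that OVS calls to create its mempool. Locating the problem any further meant reading DPDK code, so there was no choice but to keep going.

Progress slowed down considerably once the trace entered DPDK, because this path runs through DPDK's memory management, which is hard to digest. Continue tracing into rte_mempool_create.

The call chain is: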

```
rte_mempool_create
  -> rte_mempool_create_empty
    -> rte_memzone_reserve
      -> rte_memzone_reserve_thread_safe
        -> memzone_reserve_aligned_thread_unsafe
          -> malloc_heap_alloc
            -> find_suitable_element
```

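The trace ends in find_suitable_element. When DPDK's malloc allocates heap memory, this function first searches the free lists for a free element large enough to hold the request; if it finds one it returns that element's address, otherwise it returns NULL.

The function: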

```c
static struct malloc_elem *
find_suitable_element(struct malloc_heap *heap, size_t size,
        unsigned flags, size_t align, size_t bound)
{
    size_t idx;
    struct malloc_elem *elem, *alt_elem = NULL;

    for (idx = malloc_elem_free_list_index(size);
            idx < RTE_HEAP_NUM_FREELISTS; idx++) {
        for (elem = LIST_FIRST(&heap->free_head[idx]);
                !!elem; elem = LIST_NEXT(elem, free_list)) {
            if (malloc_elem_can_hold(elem, size, align, bound)) {
                if (check_hugepage_sz(flags, elem->ms->hugepage_sz))
                    return elem;
                if (alt_elem == NULL)
                    alt_elem = elem;
            }
        }
    }

    if ((alt_elem != NULL) && (flags & RTE_MEMZONE_SIZE_HINT_ONLY))
        return alt_elem;

    return NULL;
}
```

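The free lists of this memory allocator are kept separately per socket_id, with one heap per NUMA socket. Since OVS passed socket_id 1 when calling into DPDK, heap->free_head[idx] here is the head of the free lists for socket 1, and those lists were empty.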
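The search can be modelled in a few lines. The sketch below is a simplified stand-in for find_suitable_element, not DPDK's code: it keeps one heap per NUMA socket, buckets free elements by size with a hypothetical power-of-two scheme (NUM_FREELISTS, free_list_index, heap_add and find_suitable are made-up names), and ignores the alignment, boundary and hugepage-size checks. It shows why a request on a socket whose heap was never populated returns NULL no matter how much memory the other socket has.

```c
#include <assert.h>
#include <stddef.h>

#define NUM_SOCKETS   2
#define NUM_FREELISTS 13   /* hypothetical bucket count */

struct elem_demo {
    size_t size;
    struct elem_demo *next_free;
};

/* One heap per NUMA socket, each with its own set of free lists. */
struct heap_demo {
    struct elem_demo *free_head[NUM_FREELISTS];
};

static struct heap_demo heaps[NUM_SOCKETS];

/* Bucket i holds sizes up to (64 << i) bytes, except the last bucket,
 * which takes everything larger than the one before it. */
static size_t free_list_index(size_t size)
{
    size_t idx = 0;
    size_t limit = 64;
    while (size > limit && idx < NUM_FREELISTS - 1) {
        limit <<= 1;
        idx++;
    }
    return idx;
}

static void heap_add(int socket, struct elem_demo *e)
{
    assert(socket >= 0 && socket < NUM_SOCKETS);
    size_t idx = free_list_index(e->size);
    e->next_free = heaps[socket].free_head[idx];
    heaps[socket].free_head[idx] = e;
}

/* Scan from the smallest bucket that could fit upwards; return the first
 * element that is big enough, or NULL if this socket's lists have none. */
static struct elem_demo *find_suitable(int socket, size_t size)
{
    assert(socket >= 0 && socket < NUM_SOCKETS);
    for (size_t idx = free_list_index(size); idx < NUM_FREELISTS; idx++)
        for (struct elem_demo *e = heaps[socket].free_head[idx]; e != NULL; e = e->next_free)
            if (e->size >= size)
                return e;
    return NULL;
}
```

Adding a 1 GB element to socket 0 and then asking socket 1 for any size returns NULL, because each socket's search only ever looks at its own free lists.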

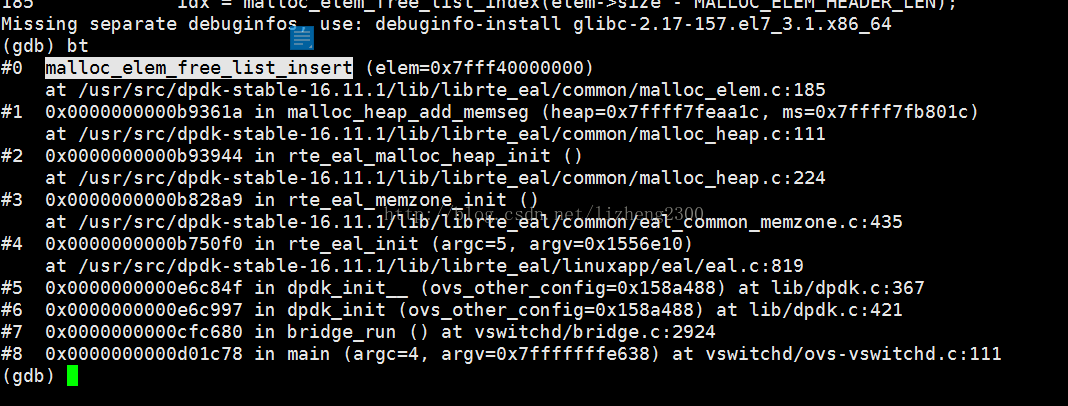

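The next question is why the free list is empty, which means analyzing how the free lists are initialized. Searching the DPDK code turns up the function that inserts elements into a free list: malloc_elem_free_list_insert.

Its call stack, as traced with gdb: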

```
main -> bridge_run -> dpdk_init -> dpdk_init__
  -> rte_eal_init -> rte_eal_memzone_init -> rte_eal_malloc_heap_init
  -> malloc_heap_add_memseg -> malloc_elem_free_list_insert
```

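At rte_eal_init, execution enters the DPDK library; the functions before it belong to the ovs-vswitchd process itself. Work backwards along this call chain.

Analyzing rte_eal_malloc_heap_init: during initialization it reads the memory configuration from rte_eal_get_configuration()->mem_config, and that configuration contained only one memory segment, with ms->socket_id = 0 and ms->len = 1073741824, exactly 1 GB. In other words, 1 GB of memory was set up on NUMA node 0 and none on node 1, yet at run time it was node 1 that needed to create a mempool.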

```c
int
rte_eal_malloc_heap_init(void)
{
    struct rte_mem_config *mcfg = rte_eal_get_configuration()->mem_config;
    unsigned ms_cnt;
    struct rte_memseg *ms;

    if (mcfg == NULL)
        return -1;

    for (ms = &mcfg->memseg[0], ms_cnt = 0;
            (ms_cnt < RTE_MAX_MEMSEG) && (ms->len > 0);
            ms_cnt++, ms++) {
        malloc_heap_add_memseg(&mcfg->malloc_heaps[ms->socket_id], ms);
    }

    return 0;
}
```
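The effect of that loop can be checked with a small model. The sketch below uses hypothetical types and names (memseg_demo, heap_sizes, MAX_MEMSEG), not DPDK's: it walks a segment table the same way, stopping at the first entry with len == 0, and totals the memory each socket's heap would receive. With the single segment observed here, socket 0 gets 1 GB and socket 1 gets nothing.

```c
#include <assert.h>
#include <stdint.h>

#define MAX_MEMSEG  8   /* hypothetical; DPDK uses RTE_MAX_MEMSEG */
#define MAX_SOCKETS 2

struct memseg_demo {
    int socket_id;
    uint64_t len;       /* bytes; 0 marks the end of the table */
};

/* Total bytes each socket's heap receives from the segment table. */
static void heap_sizes(const struct memseg_demo segs[MAX_MEMSEG],
                       uint64_t out[MAX_SOCKETS])
{
    for (int s = 0; s < MAX_SOCKETS; s++)
        out[s] = 0;
    for (int i = 0; i < MAX_MEMSEG && segs[i].len > 0; i++) {
        assert(segs[i].socket_id >= 0 && segs[i].socket_id < MAX_SOCKETS);
        out[segs[i].socket_id] += segs[i].len;
    }
}
```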

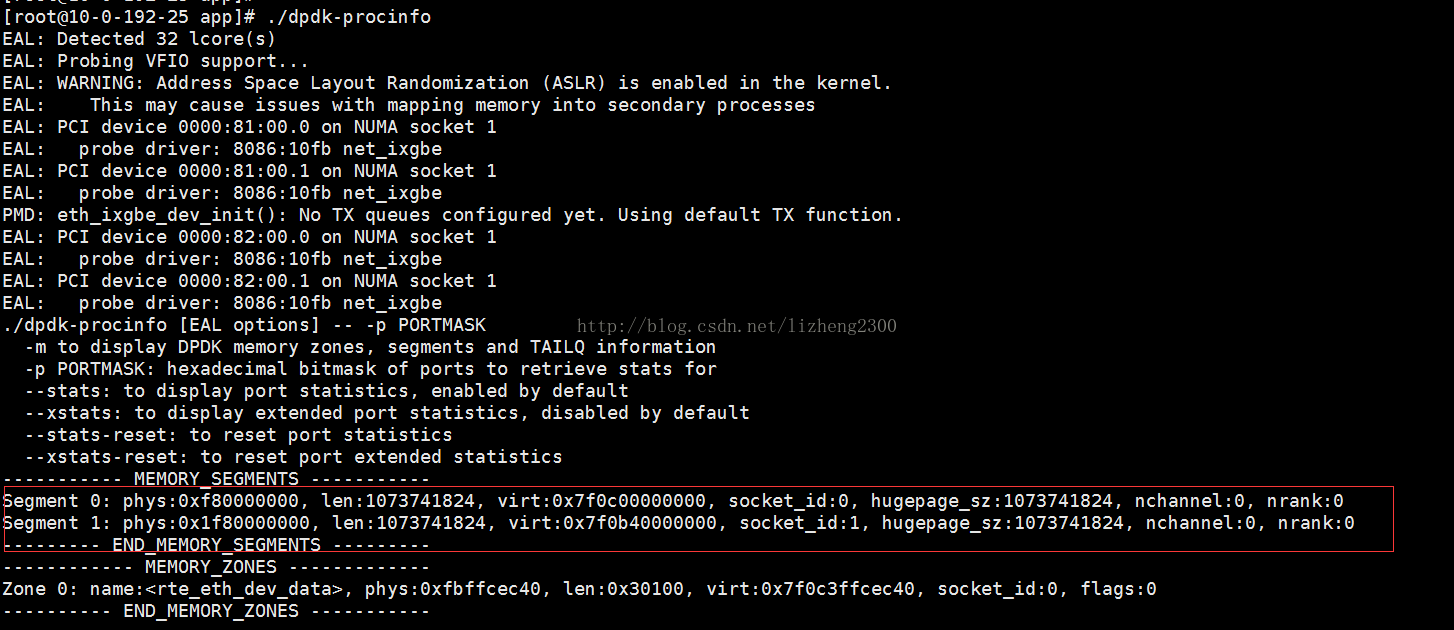

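Open-source projects usually ship helper tools for diagnosis. For example, the ./dpdk-procinfo command can also show how DPDK memory is allocated. In the red box there are two segments, one per socket_id, each 1 GB in size. If only socket_id 0 has a memory segment, the memory allocation is clearly where the problem lies.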

The next step is to find out how DPDK fills in rte_eal_get_configuration()->mem_config.

Searching the code shows that rte_eal_get_configuration()->mem_config is populated in rte_eal_hugepage_init().

Its call stack:

```
main -> bridge_run -> dpdk_init -> dpdk_init__
  -> rte_eal_init -> rte_eal_memory_init -> rte_eal_hugepage_init
```

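rte_eal_hugepage_init mainly creates one rtemap_xx file per configured huge page under the hugetlbfs mount point /mnt/huge, and mmaps each of these files, arranging the mappings so that pages that are contiguous in physical memory are also contiguous in virtual memory.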
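The idea behind that arrangement can be illustrated by sorting. The sketch below is a simplified model, not DPDK's code, and the names hugepage_demo, cmp_physaddr_demo and count_contiguous_runs are hypothetical: it sorts page records by physical address and counts runs of pages that are physically contiguous and on the same socket, each of which could then be mapped as one virtually contiguous region.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

struct hugepage_demo {
    uint64_t physaddr;
    int socket_id;
};

static int cmp_physaddr_demo(const void *a, const void *b)
{
    uint64_t pa = ((const struct hugepage_demo *)a)->physaddr;
    uint64_t pb = ((const struct hugepage_demo *)b)->physaddr;
    return (pa > pb) - (pa < pb);
}

/* Sorts pages by physical address, then counts runs of physically
 * contiguous pages that belong to the same socket. */
static size_t count_contiguous_runs(struct hugepage_demo *pages, size_t n,
                                    uint64_t page_sz)
{
    assert(page_sz > 0);
    if (n == 0)
        return 0;
    qsort(pages, n, sizeof pages[0], cmp_physaddr_demo);
    size_t runs = 1;
    for (size_t i = 1; i < n; i++) {
        if (pages[i].physaddr != pages[i - 1].physaddr + page_sz ||
            pages[i].socket_id != pages[i - 1].socket_id)
            runs++;   /* gap or socket change starts a new region */
    }
    return runs;
}
```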

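It first maps all huge pages in the page table, using two rounds of mmap so that the virtual layout follows the physical layout. It then calls calc_num_pages_per_socket to compute how many pages each NUMA node should use, and finally calls unmap_unneeded_hugepages to unmap the pages that are not needed.

During installation, four 1 GB huge pages were reserved, presumably two each on NUMA nodes 0 and 1. In practice, only one page on node 0 was used: rte_eal_hugepage_init initially mapped all four pages, but unmap_unneeded_hugepages then released all of them except one page on node 0, following the result computed by calc_num_pages_per_socket.

Analyzing calc_num_pages_per_socket shows that it computes the page count for each NUMA node from the total memory configured in internal_config.memory and the memory configured for each node. internal_cfg->memory is the sum of internal_cfg->socket_mem[i], and internal_cfg->socket_mem[i] is set in eal_parse_socket_mem.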
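The per-socket calculation boils down to rounding each socket's requested memory up to whole pages. The sketch below is a simplified model, not DPDK's calc_num_pages_per_socket (pages_per_socket and its arguments are hypothetical): it assumes 1 GB pages and, as supposed above, two free pages on each socket. With the default request of 1024 MB on socket 0 and 0 MB on socket 1 it keeps one page on socket 0 and none on socket 1, so every other mapped page would be unmapped.

```c
#include <assert.h>
#include <stdint.h>

#define MAX_SOCKETS 2

/* Pages to keep on each socket: ceil(socket_mem[i] / page_sz).
 * Returns 0 on success, -1 if a socket has fewer free pages than needed.
 * Pages not counted in out[] are the ones that would be unmapped. */
static int pages_per_socket(const uint64_t socket_mem[MAX_SOCKETS],
                            const int avail[MAX_SOCKETS],
                            uint64_t page_sz, int out[MAX_SOCKETS])
{
    assert(page_sz > 0);
    for (int i = 0; i < MAX_SOCKETS; i++) {
        uint64_t need = (socket_mem[i] + page_sz - 1) / page_sz;  /* round up */
        if (need > (uint64_t)avail[i])
            return -1;
        out[i] = (int)need;
    }
    return 0;
}
```

Calling it with { 1 GB, 1 GB } instead keeps one page on each socket, which is the situation the fix below produces.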

Its call stack:

```
main -> bridge_run -> dpdk_init -> dpdk_init__ -> rte_eal_init -> eal_parse_args -> eal_parse_socket_mem
```
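The --socket-mem value is a comma-separated list of megabyte counts, one per NUMA socket, and the total memory is their sum. The sketch below is a simplified parser written for illustration (parse_socket_mem and MAX_SOCKETS are hypothetical; this is not DPDK's eal_parse_socket_mem): it converts each entry to bytes and returns the total, or -1 for malformed input.

```c
#include <assert.h>
#include <errno.h>
#include <stdint.h>
#include <stdlib.h>

#define MAX_SOCKETS 8

/* Parses e.g. "1024,0" into bytes per socket; returns the total or -1. */
static int64_t parse_socket_mem(const char *arg, uint64_t mem[MAX_SOCKETS])
{
    assert(arg != NULL && mem != NULL);
    for (int k = 0; k < MAX_SOCKETS; k++)
        mem[k] = 0;

    int64_t total = 0;
    const char *p = arg;
    for (int i = 0; ; i++) {
        if (i >= MAX_SOCKETS || *p < '0' || *p > '9')
            return -1;                      /* too many sockets or not a number */
        char *end;
        errno = 0;
        unsigned long long mb = strtoull(p, &end, 10);
        if (errno != 0 || mb > (1ULL << 30))
            return -1;                      /* overflow or implausible size */
        mem[i] = (uint64_t)mb << 20;        /* MB -> bytes */
        total += (int64_t)mem[i];
        if (*end == '\0')
            return total;                   /* last entry */
        if (*end != ',')
            return -1;                      /* unexpected character */
        p = end + 1;                        /* next socket's value */
    }
}
```

With the default "1024,0", socket 1 ends up with 0 bytes, which is exactly what later leaves its heap empty.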

The value of internal_cfg->socket_mem[i] ultimately comes from the OVS function dpdk_init__, which calls get_dpdk_args to collect the configuration parameters; socket_mem itself is set in construct_dpdk_mutex_options.

```c
static int
construct_dpdk_mutex_options(const struct smap *ovs_other_config,
                             char ***argv, const int initial_size,
                             char **extra_args, const size_t extra_argc)
{
    struct dpdk_exclusive_options_map {
        const char *category;
        const char *ovs_dpdk_options[MAX_DPDK_EXCL_OPTS];
        const char *eal_dpdk_options[MAX_DPDK_EXCL_OPTS];
        const char *default_value;
        int default_option;
    } excl_opts[] = {
        {"memory type",
         {"dpdk-alloc-mem", "dpdk-socket-mem", NULL,},
         {"-m",             "--socket-mem",    NULL,},
         "1024,0", 1
        },
    }; // default value: 1024 MB on node 0 and 0 MB on node 1

    /* ... rest of the function omitted ... */
}
```

By default, socket_mem is 1024 MB on NUMA node 0 and 0 MB on node 1.
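The effect of this table is that when neither dpdk-alloc-mem nor dpdk-socket-mem is set in other_config, OVS falls back to default_value for the EAL option selected by default_option (index 1, i.e. --socket-mem), which matches the "EAL ARGS: ovs-vswitchd --socket-mem 1024,0" line in the startup log. The sketch below models that selection for this single option group; struct kv, lookup and pick_memory_option are hypothetical names, and treating both keys being set as an error is this sketch's own choice.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

struct kv {
    const char *key;
    const char *value;
};

/* One exclusive group, copied from the excl_opts[] entry above. */
static const char *const ovs_keys[]  = { "dpdk-alloc-mem", "dpdk-socket-mem" };
static const char *const eal_flags[] = { "-m", "--socket-mem" };
static const char *const default_value = "1024,0";
static const int default_option = 1;   /* index into eal_flags[] */

static const char *lookup(const struct kv *cfg, size_t n, const char *key)
{
    for (size_t i = 0; i < n; i++)
        if (strcmp(cfg[i].key, key) == 0)
            return cfg[i].value;
    return NULL;
}

/* Chooses the EAL flag and value for the memory option.
 * Returns 0, or -1 if more than one key of the group is set. */
static int pick_memory_option(const struct kv *cfg, size_t n,
                              const char **flag, const char **value)
{
    int found = -1;
    for (int i = 0; i < 2; i++) {
        const char *v = lookup(cfg, n, ovs_keys[i]);
        if (v == NULL)
            continue;
        if (found >= 0)
            return -1;             /* the options are mutually exclusive */
        found = i;
        *flag = eal_flags[i];
        *value = v;
    }
    if (found < 0) {               /* nothing configured: use the default */
        *flag = eal_flags[default_option];
        *value = default_value;
    }
    assert(*flag != NULL && *value != NULL);
    return 0;
}
```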

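At this point the cause is clear. The OVS documentation gives the following command for changing the socket_mem value of each node:

```shell
ovs-vsctl --no-wait set Open_vSwitch . other_config:dpdk-socket-mem="1024,1024"
```

With this setting, NUMA nodes 0 and 1 each get 1024 MB. Because DPDK is initialized only once, when ovs-vswitchd starts, the new value takes effect after ovs-vswitchd is restarted. After configuring the OVS bridge and port again, everything ran normally.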

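Useful diagnostic information (printed when the process starts):

1. The socket-mem allocation is shown (--socket-mem 1024,0).

2. The NIC I used for the OVS port has PCI address 0000:81:00.1, and the startup messages below show that this NIC is on NUMA node 1.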

```
[root@10-0-192-25 src]# ovs-vswitchd unix:$DB_SOCK --pidfile --detach --log-file
2017-04-06T03:11:50Z|00001|vlog|INFO|opened log file /usr/local/var/log/openvswitch/ovs-vswitchd.log
2017-04-06T03:11:50Z|00002|ovs_numa|INFO|Discovered 16 CPU cores on NUMA node 0
2017-04-06T03:11:50Z|00003|ovs_numa|INFO|Discovered 16 CPU cores on NUMA node 1
2017-04-06T03:11:50Z|00004|ovs_numa|INFO|Discovered 2 NUMA nodes and 32 CPU cores
2017-04-06T03:11:50Z|00005|reconnect|INFO|unix:/usr/local/var/run/openvswitch/db.sock: connecting...
2017-04-06T03:11:50Z|00006|reconnect|INFO|unix:/usr/local/var/run/openvswitch/db.sock: connected
2017-04-06T03:11:50Z|00007|dpdk|INFO|DPDK Enabled - initializing...
2017-04-06T03:11:50Z|00008|dpdk|INFO|No vhost-sock-dir provided - defaulting to /usr/local/var/run/openvswitch
2017-04-06T03:11:50Z|00009|dpdk|INFO|EAL ARGS: ovs-vswitchd --socket-mem 1024,0 -c 0x00000001
EAL: Detected 32 lcore(s)
EAL: Probing VFIO support...
EAL: PCI device 0000:81:00.0 on NUMA socket 1
EAL: probe driver: 8086:10fb net_ixgbe
EAL: PCI device 0000:81:00.1 on NUMA socket 1
EAL: probe driver: 8086:10fb net_ixgbe
EAL: PCI device 0000:82:00.0 on NUMA socket 1
EAL: probe driver: 8086:10fb net_ixgbe
EAL: PCI device 0000:82:00.1 on NUMA socket 1
EAL: probe driver: 8086:10fb net_ixgbe
Zone 0: name:<rte_eth_dev_data>, phys:0xfbffcec40, len:0x30100, virt:0x7fd77ffcec40, socket_id:0, flags:0
2017-04-06T03:11:52Z|00010|dpdk|INFO|DPDK Enabled - initialized
2017-04-06T03:11:52Z|00011|timeval|WARN|Unreasonably long 1699ms poll interval (146ms user, 1452ms system)
2017-04-06T03:11:52Z|00012|timeval|WARN|faults: 1482 minor, 0 major
2017-04-06T03:11:52Z|00013|timeval|WARN|context switches: 7 voluntary, 38 involuntary
2017-04-06T03:11:52Z|00014|coverage|INFO|Event coverage, avg rate over last: 5 seconds, last minute, last hour, hash=edcf6a06:
```
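The same NUMA information can be read without starting DPDK: on Linux, each PCI device exposes its node in /sys/bus/pci/devices/<PCI address>/numa_node. The sketch below (read_numa_node and pci_numa_node are hypothetical helper names) reads that file; comparing the result with the nodes that have socket-mem configured would flag this kind of mismatch up front.

```c
#include <assert.h>
#include <stdio.h>

/* Reads an integer NUMA node from a sysfs-style file. Returns the node, or -1
 * if the file cannot be read. The kernel itself writes -1 when the platform
 * provides no NUMA information for the device. */
static int read_numa_node(const char *path)
{
    assert(path != NULL);
    FILE *f = fopen(path, "r");
    if (f == NULL)
        return -1;
    int node = -1;
    if (fscanf(f, "%d", &node) != 1)
        node = -1;
    fclose(f);
    return node;
}

/* NUMA node of a PCI device, e.g. pci_numa_node("0000:81:00.1"). */
static int pci_numa_node(const char *pci_addr)
{
    char path[256];
    int n = snprintf(path, sizeof path, "/sys/bus/pci/devices/%s/numa_node", pci_addr);
    if (n < 0 || (size_t)n >= sizeof path)
        return -1;                     /* address too long for the buffer */
    return read_numa_node(path);
}
```

On the machine in this article, pci_numa_node("0000:81:00.1") would be expected to return 1, consistent with the EAL log above.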

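Finally

That concludes 沉默手链's recent write-up on locating the ovs-vswitchd crash that occurs after configuring an OVS port with ovs + dpdk. For more OVS content, search other articles on 靠谱客.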
