Common RNN layers in Keras

1. LSTM

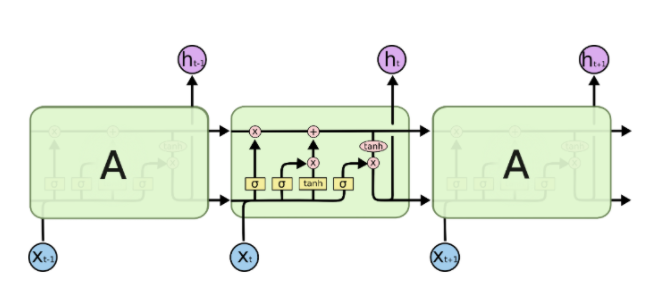

The figure above shows the internal structure of an LSTM cell.

The equations are:

$$
\begin{aligned}
\text{input gate:}\quad & i^t = \sigma\left(W^i x^t + U^i h^{(t-1)}\right) \\
\text{forget gate:}\quad & f^t = \sigma\left(W^f x^t + U^f h^{(t-1)}\right) \\
\text{output gate:}\quad & o^t = \sigma\left(W^o x^t + U^o h^{(t-1)}\right) \\
\text{new memory cell:}\quad & \tilde{c}^t = \tanh\left(W^c x^t + U^c h^{(t-1)}\right) \\
\text{final memory cell:}\quad & c^t = f^t \odot c^{(t-1)} + i^t \odot \tilde{c}^t \\
\text{hidden state:}\quad & h^t = o^t \odot \tanh\left(c^t\right)
\end{aligned}
$$
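To make the equations concrete, here is a minimal sketch of one LSTM time step in plain Python for the scalar case (one unit, one input feature), which is the configuration used in the examples of this post. The names `lstm_step`, `sigmoid`, and the dict layout of `W` and `U` are assumptions made for illustration and are not how Keras stores its weights. The recurrent term of the new memory cell uses the previous hidden state h(t-1), as in the standard LSTM formulation. Biases are left out to match the equations, although the Keras layer adds a bias term by default.

```python
import math


def sigmoid(z):
    """Logistic function, written as sigma in the equations."""
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x_t, h_prev, c_prev, W, U):
    """One LSTM time step for the scalar case (1 unit, 1 input feature).

    W and U are dicts keyed by "i", "f", "o", "c" (a hypothetical layout
    chosen for readability). Biases are omitted, as in the equations.
    """
    i_t = sigmoid(W["i"] * x_t + U["i"] * h_prev)        # input gate
    f_t = sigmoid(W["f"] * x_t + U["f"] * h_prev)        # forget gate
    o_t = sigmoid(W["o"] * x_t + U["o"] * h_prev)        # output gate
    c_tilde = math.tanh(W["c"] * x_t + U["c"] * h_prev)  # new memory cell
    c_t = f_t * c_prev + i_t * c_tilde                   # final memory cell
    h_t = o_t * math.tanh(c_t)                           # hidden state
    return h_t, c_t


# Arbitrary illustrative weights, not taken from any trained model.
W = {"i": 0.5, "f": 0.5, "o": 0.5, "c": 0.5}
U = {"i": 0.1, "f": 0.1, "o": 0.1, "c": 0.1}
h_1, c_1 = lstm_step(x_t=0.1, h_prev=0.0, c_prev=0.0, W=W, U=U)
print(h_1, c_1)
```

The function takes x(t), h(t-1), and c(t-1) and returns h(t) and c(t), so chaining calls over a sequence only requires feeding each step's outputs into the next step.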

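Each time step takes three inputs and produces two outputs.

Inputs: the hidden state h(t-1) and cell state c(t-1) from the previous time step, plus the current input x(t).

Outputs: h(t) and c(t).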

Let's look at an example of using the Keras LSTM layer.

    from keras.models import Model
    from keras.layers import Input
    from keras.layers import LSTM
    import numpy as np

    # One sample with four time steps: t1, t2, t3, t4
    src = np.array([0.1, 0.2, 0.3, 0.4])

    # define model: 4 time steps, 1 feature per step
    inputs1 = Input(shape=(4, 1))
    # 1: Positive integer, dimensionality of the output space
    lstm1 = LSTM(1)(inputs1)
    model = Model(inputs=inputs1, outputs=lstm1)

    # define input data with shape (batch, timesteps, features)
    data = src.reshape((1, 4, 1))
    print("====================")
    print(model.predict(data))

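The result is as follows (the exact value depends on the randomly initialized weights, so it changes from run to run):

    ====================
    [[0.11041524]]  # h(4)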

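A brief note on the parameter:

- The argument 1 passed to LSTM is `units`, the dimensionality of the output space, that is, the size of the hidden state h and the cell state c. With one unit and one input feature, every W and U in the equations above is a single scalar, so the result is a scalar as well. That scalar is h(t), the output of the last time step.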
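As a rough check of how the `units` argument determines the weight shapes, the sketch below counts the trainable weights of one LSTM layer. It assumes the usual layout of four blocks (three gates plus the candidate cell), each with an input matrix W, a recurrent matrix U, and an optional bias vector. The helper name `lstm_param_count` is hypothetical and not part of Keras.

```python
def lstm_param_count(units, input_dim, use_bias=True):
    """Number of trainable weights in one LSTM layer.

    Four blocks (input gate, forget gate, output gate, candidate cell),
    each with W of shape (input_dim, units), U of shape (units, units)
    and, if use_bias is set, a bias vector of length units.
    """
    per_block = input_dim * units + units * units
    if use_bias:
        per_block += units
    return 4 * per_block


print(lstm_param_count(units=1, input_dim=1))    # 12
print(lstm_param_count(units=32, input_dim=8))   # 5248
```

For `LSTM(1)` on inputs of shape `(4, 1)` this gives 12, which should agree with the parameter count that `model.summary()` reports when the layer keeps its default bias.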

Using the return_sequences and return_state parameters

Using return_sequences=True

    from keras.models import Model
    from keras.layers import Input
    from keras.layers import LSTM
    import numpy as np

    # One sample with four time steps: t1, t2, t3, t4
    src = np.array([0.1, 0.2, 0.3, 0.4])

    # define model: return the hidden state of every time step
    inputs1 = Input(shape=(4, 1))
    lstm1 = LSTM(1, return_sequences=True)(inputs1)
    model = Model(inputs=inputs1, outputs=lstm1)

    data = src.reshape((1, 4, 1))
    print("====================")
    print(model.predict(data))

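The result is as follows. The rows are in time order, so the first row is h(1) and the last row is h(4):

    ====================
    [[[-0.02509235]    # h(1)
      [-0.06933878]    # h(2)
      [-0.12798117]    # h(3)
      [-0.19663112]]]  # h(4), the last output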

The layer now outputs the hidden state of every time step. The official docstring reads:

return_sequences: Boolean. Whether to return the last output in the output sequence, or the full sequence.
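The effect of `return_sequences` on the shape of the result can be summarized with a small helper. This is only a sketch of the shape rule for an input of shape `(batch, timesteps, features)`. The function name `lstm_output_shape` is hypothetical and not a Keras API.

```python
def lstm_output_shape(batch, timesteps, units, return_sequences=False):
    """Shape of the main LSTM output for input (batch, timesteps, features)."""
    if return_sequences:
        return (batch, timesteps, units)  # one hidden state per time step
    return (batch, units)                 # only the last hidden state


print(lstm_output_shape(1, 4, 1))                         # (1, 1)
print(lstm_output_shape(1, 4, 1, return_sequences=True))  # (1, 4, 1)
```

These two shapes correspond to the outputs printed in the examples of this post: a single value for `LSTM(1)` and four values, one per time step, for `LSTM(1, return_sequences=True)`.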

Using return_state=True

    from keras.models import Model
    from keras.layers import Input
    from keras.layers import LSTM
    import numpy as np

    # One sample with four time steps: t1, t2, t3, t4
    src = np.array([0.1, 0.2, 0.3, 0.4])

    # define model: lstm1 is a list [output, state_h, state_c]
    inputs1 = Input(shape=(4, 1))
    lstm1 = LSTM(1, return_state=True)(inputs1)
    model = Model(inputs=inputs1, outputs=lstm1)

    data = src.reshape((1, 4, 1))
    print("====================")
    print(model.predict(data))

Result:

    ====================
    [array([[-0.11774988]], dtype=float32),  # output: the last hidden state h(4)
     array([[-0.11774988]], dtype=float32),  # state_h: h(4) again, identical to the output
     array([[-0.24601391]], dtype=float32)]  # state_c: the last cell state c(4)

return_state: Boolean. Whether to return the last state in addition to the output.
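To see why the first two returned values are identical, the following sketch unrolls a scalar LSTM (one unit, one feature, no bias) over the sequence in plain Python and mimics only the return conventions of the two flags. The helper `run_lstm`, the dict layout of the weights, and the weight values are assumptions for illustration. The initial states are assumed to be zero, which is what the Keras layer uses when no initial state is passed.

```python
import math


def _sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def run_lstm(xs, W, U, return_sequences=False, return_state=False):
    """Unroll a scalar LSTM over xs and mimic the Keras return conventions."""
    h, c = 0.0, 0.0  # assumed zero initial hidden and cell state
    hs = []
    for x in xs:
        i = _sigmoid(W["i"] * x + U["i"] * h)         # input gate
        f = _sigmoid(W["f"] * x + U["f"] * h)         # forget gate
        o = _sigmoid(W["o"] * x + U["o"] * h)         # output gate
        c_tilde = math.tanh(W["c"] * x + U["c"] * h)  # new memory cell
        c = f * c + i * c_tilde                       # final memory cell
        h = o * math.tanh(c)                          # hidden state
        hs.append(h)
    output = hs if return_sequences else h
    return [output, h, c] if return_state else output


# Arbitrary illustrative weights, not taken from any trained model.
W = {"i": 0.5, "f": 0.5, "o": 0.5, "c": 0.5}
U = {"i": 0.1, "f": 0.1, "o": 0.1, "c": 0.1}
xs = [0.1, 0.2, 0.3, 0.4]

output, state_h, state_c = run_lstm(xs, W, U, return_state=True)
print(output == state_h)   # True: the output is the last hidden state

seq, state_h, state_c = run_lstm(xs, W, U, return_sequences=True, return_state=True)
print(seq[-1] == state_h)  # True: the last element of the sequence is h(4)
```

With both flags set, the first value is the full sequence h(1) to h(4), while the two state values still describe only the last time step.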
