梯度提升(Gradient Boosting)训练一系列的弱学习器(learners),每个学习器都针对前面的学习器的伪残差(而不是y),以此提升算法的表现(performance)。

维基百科是这样描述梯度提升的

梯度提升(梯度增强)是一种用于回归和分类问题的机器学习技术,其产生的预测模型是弱预测模型的集成,如采用典型的决策树 作为弱预测模型,这时则为梯度提升树(GBT或GBDT)。像其他提升方法一样,它以分阶段的方式构建模型,但它通过允许对任意可微分损失函数进行优化作为对一般提升方法的推广。

必要知识

1. 线性回归

2. 梯度下降

3. 决策树

读完本文以后,您将会学会

1. 梯度提升的概念

2. 梯度提升如何运用于回归

3. 从零开始手写梯度回归

算法

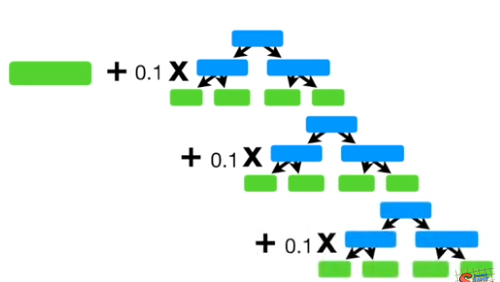

下图很好的表示了梯度提升算法。

(图片来自一个叫 StatQuest with Josh Starmer 的 Youtuber)

上图中,第一部分是一个树桩,它的值是y的平均值。后面分别是第一颗子树,第二颗,第三颗等。每颗子树,都是针对前面的模型的伪残差(pseudo residuals)而训练的(而非y)。在回归问题中,伪残差恰好等于残差,但是他们理论上并不是一回事。

p s e u d o r e s i d u a l = y − p r e v i o u s _ p r e d i c t i o n pseudo residual=y - previous_prediction pseudo residual=y−previous_prediction

流程

Step 1: 计算y的平均值:

y ˉ = 1 n ∑ i = 1 n y i bar{y}=frac{1}{n} sum_{i=1}^{n}y_i yˉ=n1i=1∑nyi

F

0

(

x

)

=

y

ˉ

F_0(x)=bar{y}

F0(x)=yˉ

Step 2 for m in 1 to M:

-

Step 2.1: 计算伪残差:

r i m = y i − F m − 1 ( x i ) r_{im}=y_i-F_{m-1}(x_i) rim=yi−Fm−1(xi) -

Step 2.2: 用伪残差拟合一颗回归树 t m ( x ) t_m(x) tm(x) 并建立终点区域(Terminal Region,其实就是树叶) R j m R_{jm} Rjm for j = 1... J m j=1...Jm j=1...Jm

-

Step 2.3: 针对每一个终点区域,有 p j p_j pj个样本点,计算 γ gamma γ :

γ i m = 1 p j ∑ x i ∈ R j m r i m gamma_{im}=frac{1}{p_j} sum_{x_i in R_{jm}} r_{im} γim=pj1xi∈Rjm∑rim

-

(现实中,上面两步可以合二为一,因为回归树把2.3做了。)

-

Step 2.4: 更新模型(学习率 α alpha α):

F m ( x ) = F m − 1 + α γ m F_m(x)=F_{m-1}+alphagamma_m Fm(x)=Fm−1+αγm

Step 3. 输出模型 F M ( x ) F_M(x) FM(x)

合并2.2和2.3以后有

简化流程

Step 1: 计算y的平均值:

y ˉ = 1 n ∑ i = 1 n y i bar{y}=frac{1}{n} sum_{i=1}^{n}y_i yˉ=n1i=1∑nyi

F

0

(

x

)

=

y

ˉ

F_0(x)=bar{y}

F0(x)=yˉ

Step 2 for m in 1 to M:

-

Step 2.1: 计算伪残差:

r i m = y i − F m − 1 ( x i ) r_{im}=y_i-F_{m-1}(x_i) rim=yi−Fm−1(xi) -

Step 2.2: 用伪残差拟合一颗回归树

-

Step 2.3: 更新模型(学习率 α alpha α):

F m ( x ) = F m − 1 + α γ m F_m(x)=F_{m-1}+alphagamma_m Fm(x)=Fm−1+αγm

Step 3. 输出模型 F M ( x ) F_M(x) FM(x)

(Optional) 从梯度提升算法推理梯度提升回归算法

上面的简化流程的知识,对于手写梯度提升回归算法,已经足够了。如果有余力的,可以和我一起从梯度提升(GB)推理出梯度提升回归(GBR)

首先我们来看GB的步骤

算法步骤

输入: 训练数据 { ( x i , y i ) } i = 1 n {(x_i, y_i)}_{i=1}^{n} {(xi,yi)}i=1n, 一个可微分的损失函数 L ( y , F ( x ) ) L(y, F(x)) L(y,F(x)),循环次数M。

算法:

Step 1: 用一个常量 F 0 ( x ) F_0(x) F0(x)启动算法,这个常量满足以下公式:

F 0 ( x ) = argmin γ ∑ i = 1 n L ( y i , γ ) F_0(x)=underset{gamma}{operatorname{argmin}}sum_{i=1}^{n}L(y_i, gamma) F0(x)=γargmini=1∑nL(yi,γ)

Step 2: for m in 1 to M:

- Step 2.1: 计算伪残差(pseudo-residuals):

r i m = − [ ∂ L ( y i , F ( x i ) ) ∂ F ( x i ) ] F ( x ) = F m − 1 ( x ) r_{im}=-[frac{partial L(y_i, F(x_i))}{partial F(x_i)}]_{F(x)=F_{m-1}(x)} rim=−[∂F(xi)∂L(yi,F(xi))]F(x)=Fm−1(x)

-

Step 2.2: 用伪残差拟合弱学习器 h m ( x ) h_m(x) hm(x) ,建立终点区域 R j m ( j = 1... J m ) R_{jm}(j=1...J_m) Rjm(j=1...Jm)

-

Step 2.3: 针对每个终点区域(也就是每一片树叶),计算 γ gamma γ

γ j m = argmin γ ∑ x i ∈ R j m n L ( y i , F m − 1 ( x i ) + γ ) gamma_{jm}=underset{gamma}{operatorname{argmin}}sum_{x_i in R_{jm}}^{n}L(y_i, F_{m-1}(x_i)+gamma) γjm=γargminxi∈Rjm∑nL(yi,Fm−1(xi)+γ)

- Step 2.4: 更新算法(学习率

α

alpha

α) :

F m ( x ) = F m − 1 + α γ m F_m(x)=F_{m-1}+alphagamma_m Fm(x)=Fm−1+αγm

Step 3. 输出算法 F M ( x ) F_M(x) FM(x)

To deduce the GB to GBR, I simply define a loss function and solve the loss function in step 1, 2.1 and 2.3. We use sum of squared errror(SSE) as the loss function:

为了演绎,我们需要一个损失函数,并带入Step 1, 2.1, 2.3。这里,因为是回归问题,所以,我们可以用方差和(SSE)

L ( y , γ ) = 1 2 ∑ i = 1 n ( y i − γ ) 2 L(y, gamma)=frac{1}{2}sum_{i=1}^{n}(y_i-gamma)^2 L(y,γ)=21i=1∑n(yi−γ)2

对于 step 1:

因为为了找到损失函数的最小值,只要找到其一阶导数为0的地方就行了,所以有

∂ L ( y , F 0 ) ∂ F 0 = ∂ 1 2 ∑ i = 1 n ( y i − F 0 ) 2 ∂ F 0 = ∑ i = 1 n ( y i − F 0 ) = 0 frac{partial L(y, F_0)}{partial F_0}=frac{partial frac{1}{2}sum_{i=1}^{n}(y_i-F_0)^2}{partial F_0} =sum_{i=1}^{n} (y_i-F_0)=0 ∂F0∂L(y,F0)=∂F0∂21∑i=1n(yi−F0)2=i=1∑n(yi−F0)=0

变形后得到:

F 0 = 1 n ∑ i = 1 n y i F_0=frac{1}{n}sum_{i=1}^{n}y_i F0=n1i=1∑nyi

对于 step 2.1:

r i m = − [ ∂ L ( y i , F ( x i ) ) ∂ F ( x i ) ] F ( x ) = F m − 1 ( x ) r_{im}=-[frac{partial L(y_i, F(x_i))}{partial F(x_i)}]_{F(x)=F_{m-1}(x)} rim=−[∂F(xi)∂L(yi,F(xi))]F(x)=Fm−1(x)

= − [ ∂ 1 2 ∑ i = 1 n ( y i − F m − 1 ( x i ) ) 2 ) ∂ F m − 1 ( x i ) ] F ( x ) = F m − 1 ( x ) =-[frac{partial frac{1}{2}sum_{i=1}^{n}(y_i-F_{m-1}(x_i))^2)}{partial F_{m-1}(x_i)}]_{F(x)=F_{m-1}(x)} =−[∂Fm−1(xi)∂21∑i=1n(yi−Fm−1(xi))2)]F(x)=Fm−1(x)

(The chain rule)

= − − 2 ∗ 1 2 ( y i − F m − 1 ( x i ) ) =--2*frac{1}{2}(y_i-F_{m-1}(x_i)) =−−2∗21(yi−Fm−1(xi))

= y i − F m − 1 ( x i ) =y_i-F_{m-1}(x_i) =yi−Fm−1(xi)

对于 step 2.3 也一样:

γ j m = 1 p j ∑ x i ∈ R j r i m gamma_{jm}=frac{1}{p_j}sum_{x_i in R_j}r_{im} γjm=pj1xi∈Rj∑rim

手写代码

引用python库

import pandas as pd

from sklearn.tree import DecisionTreeRegressor

from sklearn.datasets import load_boston

import numpy as np

import matplotlib.pyplot as plt

import graphviz

from sklearn import tree

加载数据

df=pd.DataFrame()

df['name']=['Alex','Brunei','Candy','David','Eric','Felicity']

df['height']=[1.6,1.6,1.5,1.8,1.5,1.4]

df['gender']=['male','female','female','male','male','female']

df['weight']=[88, 76, 56, 73, 77, 57]

display(df)

X=df[['height','gender']].copy()

X.loc[X['gender']=='male','gender']=1

X.loc[X['gender']=='female','gender']=0

y=df['weight']

display(X)

n=df.shape[0]

数据如下:

| name | height | gender | weight |

|---|---|---|---|

| Alex | 1.6 | male | 88 |

| Brunei | 1.6 | female | 76 |

| Candy | 1.5 | female | 56 |

| David | 1.8 | male | 73 |

| Eric | 1.5 | male | 77 |

| Felicity | 1.4 | female | 57 |

X如下:

| height | gender |

|---|---|

| 1.6 | 1 |

| 1.6 | 0 |

| 1.5 | 0 |

| 1.8 | 1 |

| 1.5 | 1 |

| 1.4 | 0 |

Step 1 平均值

#now let's get started

learning_rate=0.2

loss = [0] * 6

residuals = np.zeros([6,n])

predictoin = np.zeros([6,n])

#calculation

average_y=y.mean()

predictoin[0] = [average_y] * n

residuals[0] = y - predictoin[0]

df['$f_0$']=predictoin[0]

df['$r_0$']=residuals[0]

display(df)

loss[0] = np.sum(residuals[0] ** 2)

trees = []

平均值和残差如下:

| name | height | gender | weight | ????0 | ????0 | |

|---|---|---|---|---|---|---|

| 0 | Alex | 1.6 | male | 88 | 71.166667 | 16.833333 |

| 1 | Brunei | 1.6 | female | 76 | 71.166667 | 4.833333 |

| 2 | Candy | 1.5 | female | 56 | 71.166667 | -15.166667 |

| 3 | David | 1.8 | male | 73 | 71.166667 | 1.833333 |

| 4 | Eric | 1.5 | male | 77 | 71.166667 | 5.833333 |

| 5 | Felicity | 1.4 | female | 57 | 71.166667 | -14.166667 |

这里平均值是 71.2,残差是16.8, 4.8, 等.

Step 2 循环

我们定义一个循环函数

def iterate(i):

t = DecisionTreeRegressor(max_depth=1)

t.fit(X,residuals[i])

trees.append(t)

#next prediction, residual

predictoin[i+1]=predictoin[i]+learning_rate * t.predict(X)

residuals[i+1]=y-predictoin[i+1]

loss[i+1] = np.sum(residuals[i+1] ** 2)

df[f'$gamma_{i+1}$']=t.predict(X)

df[f'$f_{i+1}$']=predictoin[i+1]

df[f'$r_{i+1}$']=residuals[i+1]

display(df[['name','height','gender','weight',f'$f_{i}$',f'$r_{i}$',f'$gamma_{i+1}$',f'$f_{i+1}$',f'$r_{i+1}$']])

dot_data = tree.export_graphviz(t, out_file=None, filled=True, rounded=True,feature_names=X.columns)

graph = graphviz.Source(dot_data)

display(graph)

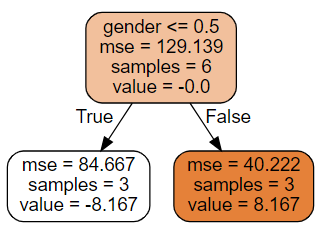

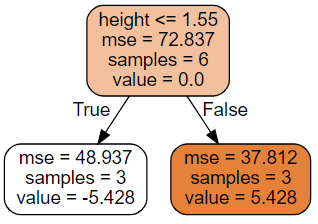

循环0

| name | height | gender | weight | ????0 | ????0 | ????1 | ????1 | ????1 | |

|---|---|---|---|---|---|---|---|---|---|

| 0 | Alex | 1.6 | male | 88 | 71.166667 | 16.833333 | 8.166667 | 72.800000 | 15.200000 |

| 1 | Brunei | 1.6 | female | 76 | 71.166667 | 4.833333 | -8.166667 | 69.533333 | 6.466667 |

| 2 | Candy | 1.5 | female | 56 | 71.166667 | -15.166667 | -8.166667 | 69.533333 | -13.533333 |

| 3 | David | 1.8 | male | 73 | 71.166667 | 1.833333 | 8.166667 | 72.800000 | 0.200000 |

| 4 | Eric | 1.5 | male | 77 | 71.166667 | 5.833333 | 8.166667 | 72.800000 | 4.200000 |

| 5 | Felicity | 1.4 | female | 57 | 71.166667 | -14.166667 | -8.166667 | 69.533333 | -12.533333 |

在循环0,我们用残差0(r0)训练了一颗树。这颗树告诉我们男性比女性重,且男性的体重比平均值高8.167,而女性则少-8.167。所以,我们应该给男性增重,给女性减重。当然,我们还是要一小步一小步的来的,所以,我们有了一个叫学习率的东西,这里,我们取了0.2。这样缩放了以后,男性应该增加1.6334公斤,而女性则应该减少-1.6334公斤。最后,循环0预测男性体重为72.8公斤,女性为69.5公斤。

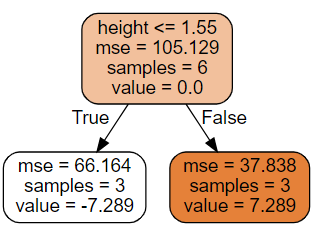

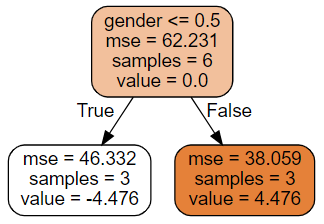

循环 1

| name | height | gender | weight | ????1 | ????1 | ????2 | ????2 | ????2 | |

|---|---|---|---|---|---|---|---|---|---|

| 0 | Alex | 1.6 | male | 88 | 72.800000 | 15.200000 | 7.288889 | 74.257778 | 13.742222 |

| 1 | Brunei | 1.6 | female | 76 | 69.533333 | 6.466667 | 7.288889 | 70.991111 | 5.008889 |

| 2 | Candy | 1.5 | female | 56 | 69.533333 | -13.533333 | -7.288889 | 68.075556 | -12.075556 |

| 3 | David | 1.8 | male | 73 | 72.800000 | 0.200000 | 7.288889 | 74.257778 | -1.257778 |

| 4 | Eric | 1.5 | male | 77 | 72.800000 | 4.200000 | -7.288889 | 71.342222 | 5.657778 |

| 5 | Felicity | 1.4 | female | 57 | 69.533333 | -12.533333 | -7.288889 | 68.075556 | -11.075556 |

在循环1里面,我们用r1训练一颗决策树。这颗新的决策树告诉我们身高也很重要。1.55米是分水岭,高之,则体重亦高1.4578公斤。反之则少-1.4578公斤。我们用这个规则计算出f2

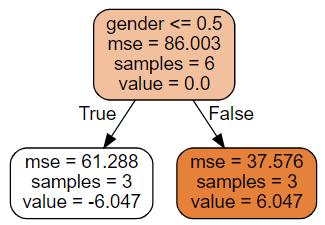

Iteration 2

| name | height | gender | weight | ????2 | ????2 | ????3 | ????3 | ????3 | |

|---|---|---|---|---|---|---|---|---|---|

| 0 | Alex | 1.6 | male | 88 | 74.257778 | 13.742222 | 6.047407 | 75.467259 | 12.532741 |

| 1 | Brunei | 1.6 | female | 76 | 70.991111 | 5.008889 | -6.047407 | 69.781630 | 6.218370 |

| 2 | Candy | 1.5 | female | 56 | 68.075556 | -12.075556 | -6.047407 | 66.866074 | -10.866074 |

| 3 | David | 1.8 | male | 73 | 74.257778 | -1.257778 | 6.047407 | 75.467259 | -2.467259 |

| 4 | Eric | 1.5 | male | 77 | 71.342222 | 5.657778 | 6.047407 | 72.551704 | 4.448296 |

| 5 | Felicity | 1.4 | female | 57 | 68.075556 | -11.075556 | -6.047407 | 66.866074 | -9.866074 |

Iteration 3

| name | height | gender | weight | ????3 | ????3 | ????4 | ????4 | ????4 | |

|---|---|---|---|---|---|---|---|---|---|

| 0 | Alex | 1.6 | male | 88 | 75.467259 | 12.532741 | 5.427951 | 76.552849 | 11.447151 |

| 1 | Brunei | 1.6 | female | 76 | 69.781630 | 6.218370 | 5.427951 | 70.867220 | 5.132780 |

| 2 | Candy | 1.5 | female | 56 | 66.866074 | -10.866074 | -5.427951 | 65.780484 | -9.780484 |

| 3 | David | 1.8 | male | 73 | 75.467259 | -2.467259 | 5.427951 | 76.552849 | -3.552849 |

| 4 | Eric | 1.5 | male | 77 | 72.551704 | 4.448296 | -5.427951 | 71.466114 | 5.533886 |

| 5 | Felicity | 1.4 | female | 57 | 66.866074 | -9.866074 | -5.427951 | 65.780484 | -8.780484 |

Iteration 4

| name | height | gender | weight | ????4 | ????4 | ????5 | ????5 | ????5 | |

|---|---|---|---|---|---|---|---|---|---|

| 0 | Alex | 1.6 | male | 88 | 76.552849 | 11.447151 | 4.476063 | 77.448062 | 10.551938 |

| 1 | Brunei | 1.6 | female | 76 | 70.867220 | 5.132780 | -4.476063 | 69.972007 | 6.027993 |

| 2 | Candy | 1.5 | female | 56 | 65.780484 | -9.780484 | -4.476063 | 64.885271 | -8.885271 |

| 3 | David | 1.8 | male | 73 | 76.552849 | -3.552849 | 4.476063 | 77.448062 | -4.448062 |

| 4 | Eric | 1.5 | male | 77 | 71.466114 | 5.533886 | 4.476063 | 72.361326 | 4.638674 |

| 5 | Felicity | 1.4 | female | 57 | 65.780484 | -8.780484 | -4.476063 | 64.885271 | -7.885271 |

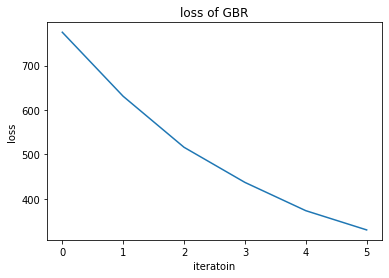

损失随着学习而下降。

希望你看懂了。

代码放在我的github了:

https://github.com/EricWebsmith/machine_learning_from_scrach/blob/master/Gradiant_Boosting_Regression.ipynb

引用:

https://en.wikipedia.org/wiki/Gradient_boosting

https://www.youtube.com/watch?v=3CC4N4z3GJc&list=PLblh5JKOoLUICTaGLRoHQDuF_7q2GfuJF&index=44

https://www.youtube.com/watch?v=2xudPOBz-vs&list=PLblh5JKOoLUICTaGLRoHQDuF_7q2GfuJF&index=45

推荐以上的Youtube频道StatQuest

最后

以上就是精明长颈鹿最近收集整理的关于python机器学习手写算法系列——梯度提升回归算法(Optional) 从梯度提升算法推理梯度提升回归算法手写代码引用:的全部内容,更多相关python机器学习手写算法系列——梯度提升回归算法(Optional)内容请搜索靠谱客的其他文章。

发表评论 取消回复