Preface

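Libevent is a lightweight, open-source, high-performance event notification library written in C. Its main strengths are: it is event-driven and fast; it is lightweight and focused on networking, without the bulk of ACE; its source code is compact and readable; it is cross-platform, supporting Windows, Linux, *BSD, and macOS; it supports several I/O multiplexing mechanisms, including epoll, poll, /dev/poll, select, and kqueue; it handles I/O, timer, and signal events; and it supports event priorities.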

Main Text

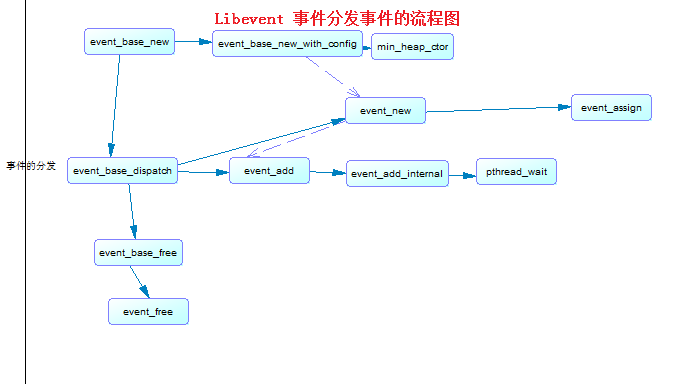

Flowchart of the typical libevent setup and usage sequence

1, event_base_new

event_base_new selects the I/O backend and sets up the event notification mechanism.

struct event_base *event_base_new(void)

{

struct event_base *base = NULL;

struct event_config *cfg = event_config_new();

if (cfg)

{

// Create the event_base from the config (selects the backend and sets up notification)

base = event_base_new_with_config(cfg);

// Free the memory allocated for the config object

event_config_free(cfg);

}

return base;

}

struct event_config *event_config_new(void)

{

struct event_config *cfg = mm_calloc(1, sizeof(*cfg));

if (cfg == NULL)

{

return (NULL);

}

TAILQ_INIT(&cfg->entries);

return (cfg);

}

struct event_base *event_base_new_with_config(const struct event_config *cfg)

{

int i;

struct event_base *base;

int should_check_environment;

#ifndef _EVENT_DISABLE_DEBUG_MODE

event_debug_mode_too_late = 1;

if (_event_debug_mode_on && !_event_debug_map_lock)

{

EVTHREAD_ALLOC_LOCK(_event_debug_map_lock, 0);

}

#endif

if ((base = mm_calloc(1, sizeof(struct event_base))) == NULL)

{

event_warn("%s: calloc", __func__);

return NULL;

}

detect_monotonic();

gettime(base, &base->event_tv);

// 1. Initialize the timer min-heap (empty)

min_heap_ctor(&base->timeheap);

// 2. Initialize the queue of registered events

TAILQ_INIT(&base->eventqueue);

// 3. Mark the signal-handling socket pair as not yet created

base->sig.ev_signal_pair[0] = -1;

base->sig.ev_signal_pair[1] = -1;

// 4. Mark the thread-notification fds as not yet created

base->th_notify_fd[0] = -1;

base->th_notify_fd[1] = -1;

// 5. Initialize the deferred-callback queue

event_deferred_cb_queue_init(&base->defer_queue);

// 6. Set the callback that notifies the base when a deferred callback is queued

base->defer_queue.notify_fn = notify_base_cbq_callback;

base->defer_queue.notify_arg = base;

if (cfg)

{

base->flags = cfg->flags;

}

// 7. Initialize the fd-to-event map for I/O events

evmap_io_initmap(&base->io);

// 8. Initialize the signal-to-event map

evmap_signal_initmap(&base->sigmap);

// 9. Initialize the changelist of pending fd changes

event_changelist_init(&base->changelist);

base->evbase = NULL;

should_check_environment =

!(cfg && (cfg->flags & EVENT_BASE_FLAG_IGNORE_ENV));

// 10. eventops wraps the I/O backends (epoll, poll, select, and so on); pick and initialize the first usable one

for (i = 0; eventops[i] && !base->evbase; i++)

{

if (cfg != NULL)

{

// 10.1 Skip backends that the config asks to avoid (e.g. epoll, poll, select)

/* determine if this backend should be avoided */

if (event_config_is_avoided_method(cfg,

eventops[i]->name))

{

continue;

}

// 10.2 Skip backends lacking a feature the config requires, e.g. edge-triggered (ET) notification (EV_FEATURE_ET)

if ((eventops[i]->features & cfg->require_features)

!= cfg->require_features)

{

continue;

}

}

/* also obey the environment variables */

if (should_check_environment &&

event_is_method_disabled(eventops[i]->name))

{

continue;

}

// 10.3 Record the chosen backend (e.g. epoll or select)

base->evsel = eventops[i];

// 10.4 Initialize the chosen backend

base->evbase = base->evsel->init(base);

}

if (base->evbase == NULL)

{

event_warnx("%s: no event mechanism available",

__func__);

event_base_free(base);

return NULL;

}

if (evutil_getenv("EVENT_SHOW_METHOD"))

{

event_msgx("libevent using: %s", base->evsel->name);

}

/* allocate a single active event queue */

if (event_base_priority_init(base, 1) < 0)

{

event_base_free(base);

return NULL;

}

/* prepare for threading */

#ifndef _EVENT_DISABLE_THREAD_SUPPORT

if (!cfg || !(cfg->flags & EVENT_BASE_FLAG_NOLOCK))

{

int r;

EVTHREAD_ALLOC_LOCK(base->th_base_lock,

EVTHREAD_LOCKTYPE_RECURSIVE);

base->defer_queue.lock = base->th_base_lock;

EVTHREAD_ALLOC_COND(base->current_event_cond);

// 11. Set up the internal event used to wake the loop from other threads

r = evthread_make_base_notifiable(base);

if (r<0)

{

event_base_free(base);

return NULL;

}

}

#endif

#ifdef WIN32

if (cfg && (cfg->flags & EVENT_BASE_FLAG_STARTUP_IOCP))

event_base_start_iocp(base, cfg->n_cpus_hint);

#endif

return (base);

}
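The backend selection loop in step 10 can be sketched in isolation. This is only a minimal illustration: `struct backend`, `pick_backend`, and the `FEAT_*` bits are hypothetical stand-ins for `struct eventop`, the loop above, and libevent's `EV_FEATURE_*` flags, and the table contents are illustrative rather than copied from libevent.

```c
#include <stddef.h>
#include <string.h>

/* Hypothetical feature bits, standing in for libevent's EV_FEATURE_* flags. */
enum { FEAT_ET = 0x01, FEAT_O1 = 0x02, FEAT_FDS = 0x04 };

/* Hypothetical, simplified counterpart of struct eventop. */
struct backend {
    const char *name;
    int features;
};

/* Candidates in preference order, NULL-terminated like eventops[]. */
static const struct backend be_epoll  = { "epoll",  FEAT_ET | FEAT_O1 };
static const struct backend be_poll   = { "poll",   FEAT_FDS };
static const struct backend be_select = { "select", FEAT_FDS };
static const struct backend *backends[] = {
    &be_epoll, &be_poll, &be_select, NULL
};

/* Return the first backend that is not avoided and offers every
 * required feature, or NULL when none qualifies. */
static const struct backend *pick_backend(const char *avoid, int required)
{
    for (size_t i = 0; backends[i] != NULL; i++) {
        if (avoid != NULL && strcmp(avoid, backends[i]->name) == 0)
            continue;   /* explicitly avoided by the config */
        if ((backends[i]->features & required) != required)
            continue;   /* missing at least one required feature */
        return backends[i];
    }
    return NULL;
}
```

With this table, `pick_backend(NULL, 0)` yields the first entry, while requiring `FEAT_FDS` skips it and falls through to the next candidate. The mask test `(features & required) != required` is the same check the original loop applies to `cfg->require_features`.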

int evthread_make_base_notifiable(struct event_base *base)

{

void (*cb)(evutil_socket_t, short, void *) = evthread_notify_drain_default;

int (*notify)(struct event_base *) = evthread_notify_base_default;

/* XXXX grab the lock here? */

if (!base)

{

return -1;

}

if (base->th_notify_fd[0] >= 0)

{

return 0;

}

#if defined(_EVENT_HAVE_EVENTFD) && defined(_EVENT_HAVE_SYS_EVENTFD_H)

#ifndef EFD_CLOEXEC

#define EFD_CLOEXEC 0

#endif

base->th_notify_fd[0] = eventfd(0, EFD_CLOEXEC);

if (base->th_notify_fd[0] >= 0)

{

evutil_make_socket_closeonexec(base->th_notify_fd[0]);

notify = evthread_notify_base_eventfd;

cb = evthread_notify_drain_eventfd;

}

#endif

#if defined(_EVENT_HAVE_PIPE)

if (base->th_notify_fd[0] < 0)

{

if ((base->evsel->features & EV_FEATURE_FDS))

{

if (pipe(base->th_notify_fd) < 0)

{

event_warn("%s: pipe", __func__);

}

else

{

evutil_make_socket_closeonexec(base->th_notify_fd[0]);

evutil_make_socket_closeonexec(base->th_notify_fd[1]);

}

}

}

#endif

#ifdef WIN32

#define LOCAL_SOCKETPAIR_AF AF_INET

#else

#define LOCAL_SOCKETPAIR_AF AF_UNIX

#endif

if (base->th_notify_fd[0] < 0) {

if (evutil_socketpair(LOCAL_SOCKETPAIR_AF, SOCK_STREAM, 0,

base->th_notify_fd) == -1) {

event_sock_warn(-1, "%s: socketpair", __func__);

return (-1);

} else {

evutil_make_socket_closeonexec(base->th_notify_fd[0]);

evutil_make_socket_closeonexec(base->th_notify_fd[1]);

}

}

evutil_make_socket_nonblocking(base->th_notify_fd[0]);

base->th_notify_fn = notify;

/*

Making the second socket nonblocking is a bit subtle, given that we

ignore any EAGAIN returns when writing to it, and you don't usually

do that for a nonblocking socket. But if the kernel gives us EAGAIN,

then there's no need to add any more data to the buffer, since

the main thread is already either about to wake up and drain it,

or woken up and in the process of draining it.

*/

if (base->th_notify_fd[1] > 0)

{

evutil_make_socket_nonblocking(base->th_notify_fd[1]);

}

/* prepare an event that we can use for wakeup */

event_assign(&base->th_notify, base, base->th_notify_fd[0],

EV_READ|EV_PERSIST, cb, base);

/* we need to mark this as internal event */

base->th_notify.ev_flags |= EVLIST_INTERNAL;

event_priority_set(&base->th_notify, 0);

return event_add(&base->th_notify, NULL);

}
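The pipe fallback in evthread_make_base_notifiable is the classic self-pipe wakeup. Below is a minimal POSIX sketch of that idea, not libevent's code: the helper names (`make_nonblocking`, `wakeup_init`, `wakeup_notify`, `wakeup_drain`) are made up for illustration. It shows why ignoring EAGAIN on the write end is safe: a full pipe means a wakeup is already pending.

```c
#include <errno.h>
#include <fcntl.h>
#include <unistd.h>

/* Set O_NONBLOCK on fd; returns 0 on success, -1 on failure. */
static int make_nonblocking(int fd)
{
    int flags = fcntl(fd, F_GETFL, 0);
    if (flags < 0)
        return -1;
    return fcntl(fd, F_SETFL, flags | O_NONBLOCK) < 0 ? -1 : 0;
}

/* Create the wakeup pipe: fds[0] is watched by the loop thread,
 * fds[1] is written by any thread that wants to wake the loop. */
static int wakeup_init(int fds[2])
{
    if (pipe(fds) < 0)
        return -1;
    if (make_nonblocking(fds[0]) < 0 || make_nonblocking(fds[1]) < 0) {
        close(fds[0]);
        close(fds[1]);
        return -1;
    }
    return 0;
}

/* Wake the loop. EAGAIN means the pipe is full, so a wakeup is
 * already pending and nothing more needs to be written. */
static int wakeup_notify(int wfd)
{
    char byte = 0;
    ssize_t n = write(wfd, &byte, 1);
    return (n == 1 || (n < 0 && errno == EAGAIN)) ? 0 : -1;
}

/* Drain all pending wakeup bytes; returns how many were read. */
static int wakeup_drain(int rfd)
{
    char buf[128];
    int total = 0;
    ssize_t n;
    while ((n = read(rfd, buf, sizeof(buf))) > 0)
        total += (int)n;
    return total;
}
```

In a real loop the read end would be registered with the backend for readability, much as the source above does with `event_assign(&base->th_notify, ..., EV_READ|EV_PERSIST, cb, base)`, and the drain function would be the callback.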

2, event_new: create a new event

struct event *event_new(struct event_base *base, evutil_socket_t fd, short events, void (*cb)(evutil_socket_t, short, void *), void *arg)

{

struct event *ev;

ev = mm_malloc(sizeof(struct event));

if (ev == NULL)

{

return (NULL);

}

if (event_assign(ev, base, fd, events, cb, arg) < 0)

{

mm_free(ev);

return (NULL);

}

return (ev);

}

/**

* Initialize a struct event by assigning its fields

**/

int event_assign(struct event *ev, struct event_base *base, evutil_socket_t fd, short events, void (*callback)(evutil_socket_t, short, void *), void *arg)

{

if (!base)

{

base = current_base;

}

_event_debug_assert_not_added(ev);

ev->ev_base = base;

ev->ev_callback = callback;

ev->ev_arg = arg;

ev->ev_fd = fd;

ev->ev_events = events;

ev->ev_res = 0;

ev->ev_flags = EVLIST_INIT;

ev->ev_ncalls = 0;

ev->ev_pncalls = NULL;

if (events & EV_SIGNAL)

{

if ((events & (EV_READ|EV_WRITE)) != 0)

{

event_warnx("%s: EV_SIGNAL is not compatible with "

"EV_READ or EV_WRITE", __func__);

return -1;

}

ev->ev_closure = EV_CLOSURE_SIGNAL;

}

else

{

if (events & EV_PERSIST)

{

evutil_timerclear(&ev->ev_io_timeout);

ev->ev_closure = EV_CLOSURE_PERSIST;

}

else

{

ev->ev_closure = EV_CLOSURE_NONE;

}

}

// Initialize the event's min-heap index (the event is not in the timer heap yet)

min_heap_elem_init(ev);

if (base != NULL)

{

/* by default, we put new events into the middle priority */

ev->ev_pri = base->nactivequeues / 2;

}

_event_debug_note_setup(ev);

return 0;

}
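The flag handling in event_assign boils down to two small decisions: which closure to use, and which default priority to assign. A minimal sketch follows, assuming hypothetical names (`SK_*`, `enum closure`, `choose_closure`, `default_priority`) that only mirror the logic above and are not libevent identifiers.

```c
/* Hypothetical event flags, standing in for EV_READ, EV_WRITE,
 * EV_SIGNAL and EV_PERSIST. */
enum { SK_READ = 0x02, SK_WRITE = 0x04, SK_SIGNAL = 0x08, SK_PERSIST = 0x10 };

/* Hypothetical counterpart of the EV_CLOSURE_* values, plus an error case. */
enum closure { CLOSURE_NONE, CLOSURE_SIGNAL, CLOSURE_PERSIST, CLOSURE_INVALID };

/* Decide how the event's callback will be run, following event_assign. */
static enum closure choose_closure(short events)
{
    if (events & SK_SIGNAL) {
        /* A signal event cannot also be a read/write event. */
        if (events & (SK_READ | SK_WRITE))
            return CLOSURE_INVALID;
        return CLOSURE_SIGNAL;
    }
    return (events & SK_PERSIST) ? CLOSURE_PERSIST : CLOSURE_NONE;
}

/* New events default to the middle priority queue. */
static int default_priority(int nactivequeues)
{
    return nactivequeues / 2;
}
```

Note that a persistent signal event still gets the signal closure, because the signal check comes first. With a single active queue, the default priority is 0.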

3, event_add: add an event to the reactor

- The event is registered with the I/O (or signal) map

int event_add(struct event *ev, const struct timeval *tv)

{

int res;

if (EVUTIL_FAILURE_CHECK(!ev->ev_base))

{

event_warnx("%s: event has no event_base set.", __func__);

return -1;

}

EVBASE_ACQUIRE_LOCK(ev->ev_base, th_base_lock);

res = event_add_internal(ev, tv, 0);

EVBASE_RELEASE_LOCK(ev->ev_base, th_base_lock);

return (res);

}

static inline int event_add_internal(struct event *ev, const struct timeval *tv,

int tv_is_absolute)

{

struct event_base *base = ev->ev_base;

int res = 0;

int notify = 0;

EVENT_BASE_ASSERT_LOCKED(base);

_event_debug_assert_is_setup(ev);

event_debug((

"event_add: event: %p (fd %d), %s%s%scall %p",

ev,

(int)ev->ev_fd,

ev->ev_events & EV_READ ? "EV_READ " : " ",

ev->ev_events & EV_WRITE ? "EV_WRITE " : " ",

tv ? "EV_TIMEOUT " : " ",

ev->ev_callback));

EVUTIL_ASSERT(!(ev->ev_flags & ~EVLIST_ALL));

/*

* prepare for timeout insertion further below, if we get a

* failure on any step, we should not change any state.

*/

// 1. Reserve space in the timer min-heap for a new timeout

if (tv != NULL && !(ev->ev_flags & EVLIST_TIMEOUT))

{

if (min_heap_reserve(&base->timeheap,

1 + min_heap_size(&base->timeheap)) == -1)

{

return (-1); /* ENOMEM == errno */

}

}

/* If the main thread is currently executing a signal event's

* callback, and we are not the main thread, then we want to wait

* until the callback is done before we mess with the event, or else

* we can race on ev_ncalls and ev_pncalls below. */

#ifndef _EVENT_DISABLE_THREAD_SUPPORT

// 2. If another thread is running this signal event's callback, wait until it finishes

if (base->current_event == ev && (ev->ev_events & EV_SIGNAL)

&& !EVBASE_IN_THREAD(base))

{

++base->current_event_waiters;

EVTHREAD_COND_WAIT(base->current_event_cond, base->th_base_lock);

}

#endif

// 3. Add the fd to the I/O map for read/write events, or to the signal map for signal events

if ((ev->ev_events & (EV_READ|EV_WRITE|EV_SIGNAL)) &&

!(ev->ev_flags & (EVLIST_INSERTED|EVLIST_ACTIVE)))

{

if (ev->ev_events & (EV_READ | EV_WRITE))

{

res = evmap_io_add(base, ev->ev_fd, ev);

}

else if (ev->ev_events & EV_SIGNAL)

{

res = evmap_signal_add(base, (int)ev->ev_fd, ev);

}

if (res != -1)

{

// Insert into the base's queue of registered events

event_queue_insert(base, ev, EVLIST_INSERTED);

}

if (res == 1)

{

/* evmap says we need to notify the main thread. */

notify = 1;

res = 0;

}

}

/*

* we should change the timeout state only if the previous event

* addition succeeded.

*/

if (res != -1 && tv != NULL)

{

struct timeval now;

int common_timeout;

/*

* for persistent timeout events, we remember the

* timeout value and re-add the event.

*

* If tv_is_absolute, this was already set.

*/

if (ev->ev_closure == EV_CLOSURE_PERSIST && !tv_is_absolute)

{

ev->ev_io_timeout = *tv;

}

/*

* we already reserved memory above for the case where we

* are not replacing an existing timeout.

*/

if (ev->ev_flags & EVLIST_TIMEOUT)

{

/* XXX I believe this is needless. */

if (min_heap_elt_is_top(ev))

{

notify = 1;

}

event_queue_remove(base, ev, EVLIST_TIMEOUT);

}

/* Check if it is active due to a timeout. Rescheduling

* this timeout before the callback can be executed

* removes it from the active list. */

if ((ev->ev_flags & EVLIST_ACTIVE) &&

(ev->ev_res & EV_TIMEOUT))

{

if (ev->ev_events & EV_SIGNAL)

{

/* See if we are just active executing

* this event in a loop

*/

if (ev->ev_ncalls && ev->ev_pncalls)

{

/* Abort loop */

*ev->ev_pncalls = 0;

}

}

event_queue_remove(base, ev, EVLIST_ACTIVE);

}

gettime(base, &now);

common_timeout = is_common_timeout(tv, base);

if (tv_is_absolute)

{

ev->ev_timeout = *tv;

}

else if (common_timeout)

{

struct timeval tmp = *tv;

tmp.tv_usec &= MICROSECONDS_MASK;

evutil_timeradd(&now, &tmp, &ev->ev_timeout);

ev->ev_timeout.tv_usec |=

(tv->tv_usec & ~MICROSECONDS_MASK);

}

else

{

evutil_timeradd(&now, tv, &ev->ev_timeout);

}

event_debug((

"event_add: timeout in %d seconds, call %p",

(int)tv->tv_sec, ev->ev_callback));

event_queue_insert(base, ev, EVLIST_TIMEOUT);

if (common_timeout)

{

struct common_timeout_list *ctl =

get_common_timeout_list(base, &ev->ev_timeout);

if (ev == TAILQ_FIRST(&ctl->events))

{

common_timeout_schedule(ctl, &now, ev);

}

}

else

{

/* See if the earliest timeout is now earlier than it

* was before: if so, we will need to tell the main

* thread to wake up earlier than it would

* otherwise. */

if (min_heap_elt_is_top(ev))

{

notify = 1;

}

}

}

/* if we are not in the right thread, we need to wake up the loop */

if (res != -1 && notify && EVBASE_NEED_NOTIFY(base))

{

// Worth noting: this wakes the loop thread through the notify fd set up in evthread_make_base_notifiable

evthread_notify_base(base);

}

_event_debug_note_add(ev);

return (res);

}
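For the ordinary (non-common-timeout) case, event_add_internal converts the relative timeout into an absolute deadline by adding it to the current time, and the deadline is later compared against "now". A minimal sketch of that arithmetic, using hypothetical helpers `tv_add` and `tv_expired` that play the role of evutil_timeradd and the evutil_timercmp test:

```c
#include <sys/time.h>

/* out = a + b, keeping tv_usec normalized into [0, 1000000). */
static void tv_add(const struct timeval *a, const struct timeval *b,
                   struct timeval *out)
{
    out->tv_sec = a->tv_sec + b->tv_sec;
    out->tv_usec = a->tv_usec + b->tv_usec;
    if (out->tv_usec >= 1000000) {
        out->tv_sec++;
        out->tv_usec -= 1000000;
    }
}

/* Non-zero when the deadline is at or before now, i.e. the timer is due. */
static int tv_expired(const struct timeval *deadline, const struct timeval *now)
{
    if (deadline->tv_sec != now->tv_sec)
        return deadline->tv_sec < now->tv_sec;
    return deadline->tv_usec <= now->tv_usec;
}
```

For example, adding a relative timeout of 2.5 s to a current time of 10.7 s carries one second out of the microsecond field and gives a deadline of 13.2 s.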

4, event_base_dispatch: dispatch events

- Wait for I/O events to become ready

- Add ready events to the active queues

int event_base_dispatch(struct event_base *event_base)

{

return (event_base_loop(event_base, 0));

}

int event_base_loop(struct event_base *base, int flags)

{

const struct eventop *evsel = base->evsel;

struct timeval tv;

struct timeval *tv_p;

int res, done, retval = 0;

/* Grab the lock. We will release it inside evsel.dispatch, and again

* as we invoke user callbacks. */

EVBASE_ACQUIRE_LOCK(base, th_base_lock);

if (base->running_loop)

{

event_warnx("%s: reentrant invocation. Only one event_base_loop"

" can run on each event_base at once.", __func__);

EVBASE_RELEASE_LOCK(base, th_base_lock);

return -1;

}

base->running_loop = 1;

clear_time_cache(base);

if (base->sig.ev_signal_added && base->sig.ev_n_signals_added)

{

evsig_set_base(base);

}

done = 0;

#ifndef _EVENT_DISABLE_THREAD_SUPPORT

base->th_owner_id = EVTHREAD_GET_ID();

#endif

base->event_gotterm = base->event_break = 0;

while (!done)

{

/* Terminate the loop if we have been asked to */

if (base->event_gotterm)

{

break;

}

if (base->event_break)

{

break;

}

timeout_correct(base, &tv);

tv_p = &tv;

if (!N_ACTIVE_CALLBACKS(base) && !(flags & EVLOOP_NONBLOCK))

{

timeout_next(base, &tv_p);

}

else

{

/*

* if we have active events, we just poll new events

* without waiting.

*/

evutil_timerclear(&tv);

}

/* If we have no events, we just exit */

if (!event_haveevents(base) && !N_ACTIVE_CALLBACKS(base))

{

event_debug(("%s: no events registered.", __func__));

retval = 1;

goto done;

}

/* update last old time */

gettime(base, &base->event_tv);

clear_time_cache(base);

// Wait for ready events in the backend (e.g. epoll_wait) and mark them active

res = evsel->dispatch(base, tv_p);

if (res == -1)

{

event_debug(("%s: dispatch returned unsuccessfully.",

__func__));

retval = -1;

goto done;

}

update_time_cache(base);

// Handle expired timeouts: remove each expired event and move it to the active queue

timeout_process(base);

if (N_ACTIVE_CALLBACKS(base))

{

// Run the callbacks of the events in the active queues

int n = event_process_active(base);

if ((flags & EVLOOP_ONCE)

&& N_ACTIVE_CALLBACKS(base) == 0

&& n != 0)

{

done = 1;

}

}

else if (flags & EVLOOP_NONBLOCK)

{

done = 1;

}

}

event_debug(("%s: asked to terminate loop.", __func__));

done:

clear_time_cache(base);

base->running_loop = 0;

EVBASE_RELEASE_LOCK(base, th_base_lock);

return (retval);

}
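The part of event_base_loop that decides how long the backend may block can be summarized in a few lines. The sketch below is a simplification under stated assumptions: it uses a poll-style millisecond timeout instead of `struct timeval *`, and `next_wait_ms` with its parameters is a hypothetical helper, not a libevent function.

```c
/* Poll-style timeout in milliseconds:
 * -1 blocks indefinitely, 0 returns immediately. */
static long next_wait_ms(int n_active, int nonblock,
                         int have_timer, long earliest_ms, long now_ms)
{
    if (n_active > 0 || nonblock)
        return 0;                 /* callbacks are pending, or caller asked not to block */
    if (!have_timer)
        return -1;                /* no timers: sleep until I/O arrives */
    if (earliest_ms <= now_ms)
        return 0;                 /* the earliest timer is already due */
    return earliest_ms - now_ms;  /* sleep until the earliest timer fires */
}
```

The first branch corresponds to `evutil_timerclear(&tv)` in the loop above, and the remaining branches to what timeout_next computes from the top of the timer heap.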

/* Activate every event whose timeout has elapsed. */

static void timeout_process(struct event_base *base)

{

/* Caller must hold lock. */

struct timeval now;

struct event *ev;

if (min_heap_empty(&base->timeheap))

{

return;

}

gettime(base, &now);

while ((ev = min_heap_top(&base->timeheap)))

{

if (evutil_timercmp(&ev->ev_timeout, &now, > ))

{

break;

}

/* delete this event from the I/O queues */

event_del_internal(ev);

event_debug(("timeout_process: call %p",

ev->ev_callback));

event_active_nolock(ev, EV_TIMEOUT, 1);

}

}
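timeout_process relies on the timer min-heap keeping the earliest deadline at the top, so it can stop at the first timer that is not yet due. Here is a minimal sketch of that pattern with a fixed-capacity heap of integer deadlines. `struct timer_heap` and its helpers are hypothetical; libevent's own min_heap stores `struct event *` and grows dynamically.

```c
#include <assert.h>
#include <stddef.h>

#define HEAP_CAP 16

/* Fixed-capacity binary min-heap of deadlines. */
struct timer_heap {
    long deadline[HEAP_CAP];
    size_t n;
};

static void swap_long(long *a, long *b) { long t = *a; *a = *b; *b = t; }

/* Insert a deadline and sift it up; returns -1 when the heap is full. */
static int heap_push(struct timer_heap *h, long d)
{
    if (h->n == HEAP_CAP)
        return -1;
    size_t i = h->n++;
    h->deadline[i] = d;
    while (i > 0 && h->deadline[(i - 1) / 2] > h->deadline[i]) {
        swap_long(&h->deadline[(i - 1) / 2], &h->deadline[i]);
        i = (i - 1) / 2;
    }
    return 0;
}

/* Remove and return the smallest deadline, sifting the replacement down. */
static long heap_pop(struct timer_heap *h)
{
    assert(h->n > 0);
    long top = h->deadline[0];
    h->deadline[0] = h->deadline[--h->n];
    for (size_t i = 0;;) {
        size_t l = 2 * i + 1, r = l + 1, m = i;
        if (l < h->n && h->deadline[l] < h->deadline[m]) m = l;
        if (r < h->n && h->deadline[r] < h->deadline[m]) m = r;
        if (m == i) break;
        swap_long(&h->deadline[i], &h->deadline[m]);
        i = m;
    }
    return top;
}

/* Pop every deadline <= now and stop at the first one still in the
 * future, as timeout_process does; returns how many timers fired. */
static int fire_expired(struct timer_heap *h, long now)
{
    int fired = 0;
    while (h->n > 0 && h->deadline[0] <= now) {
        heap_pop(h);
        fired++;
    }
    return fired;
}
```

Because only the top needs to be inspected, checking for due timers costs O(1) when nothing has expired, and each expired timer costs O(log n) to remove.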

/*

* Active events are stored in priority queues. Lower priorities are always

processed before higher priorities. Low priority events can starve high

* priority ones.

*/

static int event_process_active(struct event_base *base)

{

/* Caller must hold th_base_lock */

struct event_list *activeq = NULL;

int i, c = 0;

for (i = 0; i < base->nactivequeues; ++i)

{

if (TAILQ_FIRST(&base->activequeues[i]) != NULL)

{

activeq = &base->activequeues[i];

c = event_process_active_single_queue(base, activeq);

if (c < 0)

{

return -1;

}

else if (c > 0)

{

break; /* Processed a real event; do not

* consider lower-priority events */

/* If we get here, all of the events we processed

* were internal. Continue. */

}

}

}

event_process_deferred_callbacks(&base->defer_queue,&base->event_break);

return c;

}
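The priority rule in event_process_active is easy to miss: each pass runs only the first non-empty queue (the lowest index), which is why events in low-numbered queues can starve the rest. A minimal sketch, assuming a hypothetical `struct active_queues` that only counts pending callbacks and ignores the special handling of internal events:

```c
#define NQUEUES 3

/* pending[i] = number of callbacks waiting at priority i (0 runs first). */
struct active_queues {
    int pending[NQUEUES];
};

/* Run everything in the first non-empty queue and stop.
 * Returns the index of the queue that was processed, or -1 if all were empty. */
static int process_one_pass(struct active_queues *q)
{
    for (int i = 0; i < NQUEUES; i++) {
        if (q->pending[i] > 0) {
            q->pending[i] = 0;   /* stands in for invoking each callback */
            return i;
        }
    }
    return -1;
}
```

If priority 0 keeps receiving new work between passes, the queues at priority 1 and 2 are never reached. That is the starvation the source comment warns about.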

/*

Helper for event_process_active to process all the events in a single queue,

releasing the lock as we go. This function requires that the lock be held

when it's invoked. Returns -1 if we get a signal or an event_break that

means we should stop processing any active events now. Otherwise returns

the number of non-internal events that we processed.

*/

static int event_process_active_single_queue(struct event_base *base,

struct event_list *activeq)

{

struct event *ev;

int count = 0;

EVUTIL_ASSERT(activeq != NULL);

for (ev = TAILQ_FIRST(activeq); ev; ev = TAILQ_FIRST(activeq))

{

if (ev->ev_events & EV_PERSIST)

{

event_queue_remove(base, ev, EVLIST_ACTIVE);

}

else

{

event_del_internal(ev);

}

if (!(ev->ev_flags & EVLIST_INTERNAL))

{

++count;

}

event_debug((

"event_process_active: event: %p, %s%scall %p",

ev,

ev->ev_res & EV_READ ? "EV_READ " : " ",

ev->ev_res & EV_WRITE ? "EV_WRITE " : " ",

ev->ev_callback));

#ifndef _EVENT_DISABLE_THREAD_SUPPORT

base->current_event = ev;

base->current_event_waiters = 0;

#endif

switch (ev->ev_closure)

{

case EV_CLOSURE_SIGNAL:

event_signal_closure(base, ev);

break;

case EV_CLOSURE_PERSIST:

event_persist_closure(base, ev);

break;

default:

case EV_CLOSURE_NONE:

EVBASE_RELEASE_LOCK(base, th_base_lock);

(*ev->ev_callback)(

(int)ev->ev_fd, ev->ev_res, ev->ev_arg); // Invoke the user's callback

break;

}

EVBASE_ACQUIRE_LOCK(base, th_base_lock);

#ifndef _EVENT_DISABLE_THREAD_SUPPORT

base->current_event = NULL;

if (base->current_event_waiters)

{

base->current_event_waiters = 0;

EVTHREAD_COND_BROADCAST(base->current_event_cond);

}

#endif

if (base->event_break)

{

return -1;

}

}

return count;

}

5, event_base_free: free the event_base

void event_base_free(struct event_base *base)

{

int i, n_deleted=0;

struct event *ev;

/* XXXX grab the lock? If there is contention when one thread frees

* the base, then the contending thread will be very sad soon. */

if (base == NULL && current_base)

{

base = current_base;

}

if (base == current_base)

{

current_base = NULL;

}

/* XXX(niels) - check for internal events first */

EVUTIL_ASSERT(base);

#ifdef WIN32

event_base_stop_iocp(base);

#endif

/* threading fds if we have them */

if (base->th_notify_fd[0] != -1)

{

event_del(&base->th_notify);

EVUTIL_CLOSESOCKET(base->th_notify_fd[0]);

if (base->th_notify_fd[1] != -1)

{

EVUTIL_CLOSESOCKET(base->th_notify_fd[1]);

}

base->th_notify_fd[0] = -1;

base->th_notify_fd[1] = -1;

event_debug_unassign(&base->th_notify);

}

/* Delete all non-internal events. */

for (ev = TAILQ_FIRST(&base->eventqueue); ev; )

{

struct event *next = TAILQ_NEXT(ev, ev_next);

if (!(ev->ev_flags & EVLIST_INTERNAL))

{

event_del(ev);

++n_deleted;

}

ev = next;

}

while ((ev = min_heap_top(&base->timeheap)) != NULL)

{

event_del(ev);

++n_deleted;

}

for (i = 0; i < base->n_common_timeouts; ++i)

{

struct common_timeout_list *ctl =

base->common_timeout_queues[i];

event_del(&ctl->timeout_event); /* Internal; doesn't count */

event_debug_unassign(&ctl->timeout_event);

for (ev = TAILQ_FIRST(&ctl->events); ev; )

{

struct event *next = TAILQ_NEXT(ev,

ev_timeout_pos.ev_next_with_common_timeout);

if (!(ev->ev_flags & EVLIST_INTERNAL))

{

event_del(ev);

++n_deleted;

}

ev = next;

}

mm_free(ctl);

}

if (base->common_timeout_queues)

{

mm_free(base->common_timeout_queues);

}

for (i = 0; i < base->nactivequeues; ++i)

{

for (ev = TAILQ_FIRST(&base->activequeues[i]); ev; )

{

struct event *next = TAILQ_NEXT(ev, ev_active_next);

if (!(ev->ev_flags & EVLIST_INTERNAL))

{

event_del(ev);

++n_deleted;

}

ev = next;

}

}

if (n_deleted)

{

event_debug(("%s: %d events were still set in base",

__func__, n_deleted));

}

if (base->evsel != NULL && base->evsel->dealloc != NULL)

{

base->evsel->dealloc(base);

}

for (i = 0; i < base->nactivequeues; ++i)

{

EVUTIL_ASSERT(TAILQ_EMPTY(&base->activequeues[i]));

}

EVUTIL_ASSERT(min_heap_empty(&base->timeheap));

min_heap_dtor(&base->timeheap);

mm_free(base->activequeues);

EVUTIL_ASSERT(TAILQ_EMPTY(&base->eventqueue));

evmap_io_clear(&base->io);

evmap_signal_clear(&base->sigmap);

event_changelist_freemem(&base->changelist);

EVTHREAD_FREE_LOCK(base->th_base_lock, EVTHREAD_LOCKTYPE_RECURSIVE);

EVTHREAD_FREE_COND(base->current_event_cond);

mm_free(base);

}
