import torch
import torch.nn as nn
import torchvision.datasets as dsets
import torchvision.transforms as transforms
import matplotlib.pyplot as plt
import numpy as np

batch_size = 50

# MNIST dataset
train_dataset = dsets.MNIST(root='../../data_sets/mnist',  # root directory of the data
                            train=True,  # use the training split
                            transform=transforms.ToTensor(),  # convert images to tensors
                            download=False)  # do not download; the data must already be on disk

test_dataset = dsets.MNIST(root='../../data_sets/mnist',  # root directory of the data
                           train=False,  # use the test split
                           transform=transforms.ToTensor(),  # convert images to tensors
                           download=False)  # do not download; the data must already be on disk

# Load the data
train_loader = torch.utils.data.DataLoader(dataset=train_dataset,
                                           batch_size=batch_size,
                                           shuffle=True)  # shuffle the training data each epoch

test_loader = torch.utils.data.DataLoader(dataset=test_dataset,
                                          batch_size=batch_size,
                                          shuffle=False)  # order does not matter for evaluation

class Net(torch.nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = nn.Sequential(
            # Conv2d(in_channels, out_channels, kernel_size, stride, padding)
            nn.Conv2d(1, 16, 5, 1, 2),  # 1 x 28 x 28 -> 16 x 28 x 28
            nn.ReLU(),
            nn.MaxPool2d(2)  # 16 x 28 x 28 -> 16 x 14 x 14
        )
        self.conv2 = nn.Sequential(
            nn.Conv2d(16, 32, 5, 1, 2),  # 16 x 14 x 14 -> 32 x 14 x 14
            nn.ReLU(),
            nn.MaxPool2d(2)  # 32 x 14 x 14 -> 32 x 7 x 7
        )
        self.out = nn.Linear(32 * 7 * 7, 10)  # 10 output scores, one per digit

    def forward(self, x):
        x = self.conv1(x)
        x = self.conv2(x)
        x = x.view(x.size(0), -1)  # flatten to (batch, 32 * 7 * 7)
        x = self.out(x)
        return x

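# Define the neural net
net = Net()
if torch.cuda.is_available():
    net.cuda()

# Adam is the optimizer actually used; the earlier SGD setting is kept for reference
# optimizer = torch.optim.SGD(net.parameters(), lr=0.0510)  # 0.052
optimizer = torch.optim.Adam(net.parameters(), lr=0.001)
loss_func = torch.nn.CrossEntropyLoss()

loss_numpy = np.array([])  # loss values recorded during training, used for the plot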

for epoch in range(10):
    for i, (images, labels) in enumerate(train_loader):  # enumerate yields the batch index and the batch
        images = images.reshape(-1, 1, 28, 28)  # -1 keeps this valid for any batch size
        if torch.cuda.is_available():
            images = images.cuda()
            labels = labels.cuda()
        # print(images.size())

        out = net(images)
        loss = loss_func(out, labels)

        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        if i % 100 == 0:
            print('current loss = %.5f' % loss.item())
            loss_numpy = np.append(loss_numpy, loss.item())  # record the loss every 100 steps

# Evaluate on the test set; no gradients are needed
net.eval()
with torch.no_grad():
    correct = 0
    total = 0
    for images, labels in test_loader:
        images = images.reshape(-1, 1, 28, 28)
        if torch.cuda.is_available():
            images = images.cuda()
            labels = labels.cuda()
        output_t = net(images)
        _, predicted = torch.max(output_t, 1)  # index of the highest score is the predicted digit
        total += labels.size(0)
        correct += (predicted == labels).sum().item()

print('Accuracy of the network on the 10000 test images: {} %'.format(100 * correct / total))

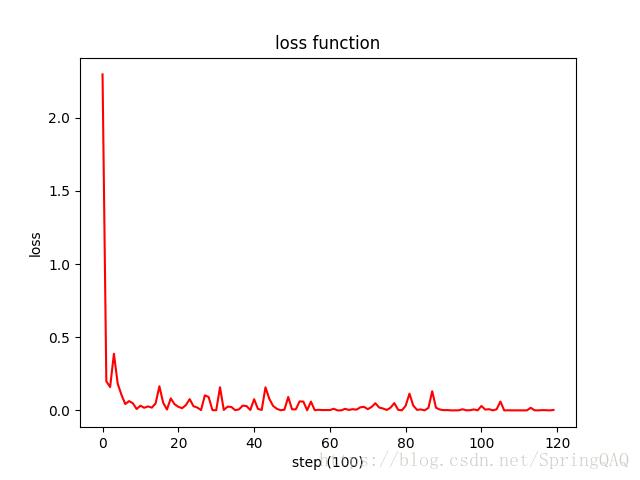

plt.plot(loss_numpy, 'r-')
plt.title('loss function', fontsize='large')
plt.xlabel('step (100)')
plt.ylabel('loss')
plt.show()
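The feature-map sizes that `Net` relies on, and in particular the `32 * 7 * 7` input of the final `nn.Linear`, can be checked by hand with the standard output-size formula for convolution and pooling. The following is a minimal sketch in plain Python rather than part of the original script; the helper names `conv2d_out`, `maxpool2d_out` and `trace_shapes` are made up for illustration, and the sketch assumes square inputs and no dilation.

```python
def conv2d_out(size, kernel, stride=1, padding=0):
    """Spatial output size of a convolution (square input, no dilation)."""
    return (size + 2 * padding - kernel) // stride + 1


def maxpool2d_out(size, kernel, stride=None):
    """Spatial output size of max pooling; stride defaults to the kernel size."""
    stride = kernel if stride is None else stride
    return (size - kernel) // stride + 1


def trace_shapes(in_size=28):
    """Follow one 1 x 28 x 28 MNIST image through conv1 and conv2 of Net."""
    shapes = [(1, in_size, in_size)]
    size = in_size
    for out_channels in (16, 32):
        # Conv2d(..., kernel_size=5, stride=1, padding=2) keeps the spatial size
        size = conv2d_out(size, kernel=5, stride=1, padding=2)
        shapes.append((out_channels, size, size))
        # MaxPool2d(2) halves the spatial size
        size = maxpool2d_out(size, kernel=2)
        shapes.append((out_channels, size, size))
    return shapes


shapes = trace_shapes()
for shape in shapes:
    print(shape)

channels, height, width = shapes[-1]
flat_features = channels * height * width  # input size of the final nn.Linear
print(flat_features)
```

With a 5 x 5 kernel, stride 1 and padding 2, each convolution preserves the 28 x 28 (then 14 x 14) spatial size, so only the two pooling layers shrink the image, which is why the flattened vector has 32 * 7 * 7 entries.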

Accuracy: the script printed `Accuracy of the network on the 10000 test images: 99.1 %`.
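The accuracy figure comes from taking, for each test image, the class with the highest output score and counting how often it matches the label, accumulated over all batches. The sketch below mirrors that bookkeeping in plain Python so it runs without PyTorch; the helper names and the toy scores are invented for illustration and are not outputs of the trained network.

```python
def argmax(values):
    """Index of the largest value (the first one wins on ties)."""
    best = 0
    for i, v in enumerate(values):
        if v > values[best]:
            best = i
    return best


def accuracy_percent(batches):
    """Accumulate correct/total over batches of (scores, labels)."""
    correct = 0
    total = 0
    for scores, labels in batches:
        predicted = [argmax(row) for row in scores]  # plays the role of torch.max(output, 1)
        total += len(labels)
        correct += sum(int(p == y) for p, y in zip(predicted, labels))
    return 100 * correct / total


# Made-up scores for a 3-class problem, split into two batches of two samples
toy_batches = [
    ([[2.0, 0.1, -1.0], [0.3, 0.2, 1.5]], [0, 2]),
    ([[0.0, 3.0, 1.0], [1.2, 0.4, 0.9]], [1, 2]),
]
result = accuracy_percent(toy_batches)
print(result)
```

Accumulating `correct` and `total` across batches, rather than averaging per-batch accuracies, gives the right answer even when the last batch is smaller than the others.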

Loss function:
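The value plotted by the script is what `torch.nn.CrossEntropyLoss` returns for a batch: the negative log-softmax score of the correct class, averaged over the batch (its default reduction). As a rough illustration of that formula, here is a plain-Python sketch; the function names and the example logits are assumptions made for this sketch, not values taken from the training run.

```python
import math


def log_softmax(logits):
    """Numerically stable log-softmax of one row of raw scores."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return [z - log_sum for z in logits]


def cross_entropy(batch_logits, labels):
    """Mean negative log-probability of the correct class over a batch."""
    losses = [-log_softmax(row)[y] for row, y in zip(batch_logits, labels)]
    return sum(losses) / len(losses)


# A network that scores all 10 digits equally has loss ln(10)
uniform = cross_entropy([[0.0] * 10], [3])
# A confident, correct prediction has a loss close to zero
confident = cross_entropy([[0.0, 0.0, 8.0]], [2])
# The same confident scores with a different true label are penalised heavily
wrong = cross_entropy([[0.0, 0.0, 8.0]], [0])

print(uniform, confident, wrong)
```

This is why a 10-class classifier that has not learned anything yet typically starts with a loss near ln(10), about 2.30, and the curve falls toward zero as predictions become confident and correct.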
