This post by 靠谱客 blogger 高高期待 introduces the lightweight-network paper ACNet: Strengthening the Kernel Skeletons for Powerful CNN via Asymmetric Convolution Blocks, shared here for reference.

ACNet: Strengthening the Kernel Skeletons for Powerful CNN via Asymmetric Convolution Blocks

PDF: https://arxiv.org/pdf/1908.03930v1.pdf

PyTorch code: https://github.com/shanglianlm0525/PyTorch-Networks

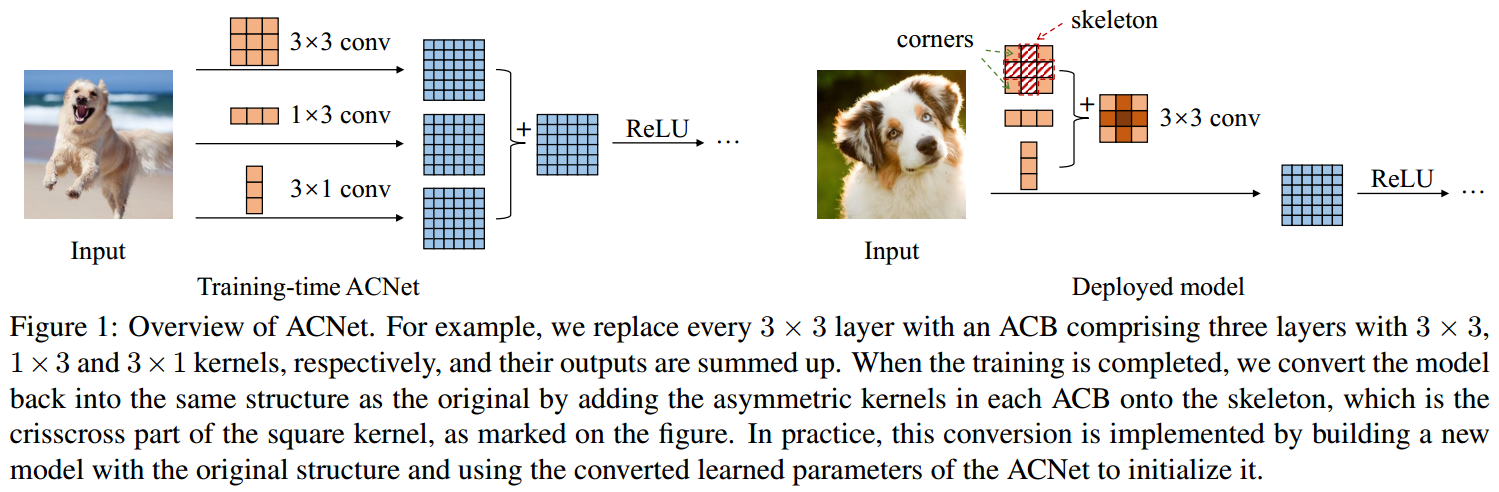

3x3 convolution + 1x3 convolution + 3x1 convolution = improved performance with no additional inference overhead.

    import torch.nn as nn


    class ACBlock(nn.Module):
        """Asymmetric Convolution Block: parallel square, vertical and horizontal branches."""

        def __init__(self, in_channels, out_channels, kernel_size, stride=1, padding=0, dilation=1,
                     groups=1, padding_mode='zeros', deploy=False):
            super(ACBlock, self).__init__()
            self.deploy = deploy
            if deploy:
                # Inference: one fused convolution; its bias holds the folded BN terms.
                self.fused_conv = nn.Conv2d(in_channels=in_channels, out_channels=out_channels,
                                            kernel_size=(kernel_size, kernel_size), stride=stride,
                                            padding=padding, dilation=dilation, groups=groups,
                                            bias=True, padding_mode=padding_mode)
            else:
                # Training: three parallel conv + BN branches.
                self.square_conv = nn.Conv2d(in_channels=in_channels, out_channels=out_channels,
                                             kernel_size=(kernel_size, kernel_size), stride=stride,
                                             padding=padding, dilation=dilation, groups=groups,
                                             bias=False, padding_mode=padding_mode)
                self.square_bn = nn.BatchNorm2d(num_features=out_channels)

                # The (3, 1) branch pads one more row than column so that its output
                # aligns with the output of the square branch.
                self.ver_conv = nn.Conv2d(in_channels=in_channels, out_channels=out_channels,
                                          kernel_size=(3, 1), stride=stride,
                                          padding=(padding - kernel_size // 2 + 1, padding - kernel_size // 2),
                                          dilation=dilation, groups=groups, bias=False,
                                          padding_mode=padding_mode)
                self.ver_bn = nn.BatchNorm2d(num_features=out_channels)

                # The (1, 3) branch pads one more column than row, for the same reason.
                self.hor_conv = nn.Conv2d(in_channels=in_channels, out_channels=out_channels,
                                          kernel_size=(1, 3), stride=stride,
                                          padding=(padding - kernel_size // 2, padding - kernel_size // 2 + 1),
                                          dilation=dilation, groups=groups, bias=False,
                                          padding_mode=padding_mode)
                self.hor_bn = nn.BatchNorm2d(num_features=out_channels)

        def forward(self, input):
            if self.deploy:
                return self.fused_conv(input)
            else:
                square_outputs = self.square_conv(input)
                square_outputs = self.square_bn(square_outputs)
                vertical_outputs = self.ver_conv(input)
                vertical_outputs = self.ver_bn(vertical_outputs)
                horizontal_outputs = self.hor_conv(input)
                horizontal_outputs = self.hor_bn(horizontal_outputs)
                # The three branch outputs have the same shape and are summed element-wise.
                return square_outputs + vertical_outputs + horizontal_outputs
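
The padding arithmetic of the `ver_conv` and `hor_conv` branches is easy to misread, so here is a small sanity check. It is an illustrative sketch, not code from the repository: `conv_out_size` and `branch_output_shapes` are hypothetical helper names, and the check relies only on the standard convolution output-size formula. It shows that, with the paddings used in `ACBlock`, all three branches produce the same spatial size and can therefore be summed.

```python
def conv_out_size(size, kernel, stride, padding, dilation=1):
    """Standard output-size formula for one spatial dimension of a convolution."""
    return (size + 2 * padding - dilation * (kernel - 1) - 1) // stride + 1


def branch_output_shapes(h, w, kernel_size=3, stride=1, padding=1):
    """Return the (H, W) output shapes of the square, (3, 1) and (1, 3) branches."""
    offset = padding - kernel_size // 2   # same expression as in ACBlock
    ver_pad = (offset + 1, offset)        # padding of the (3, 1) kernel
    hor_pad = (offset, offset + 1)        # padding of the (1, 3) kernel
    square = (conv_out_size(h, kernel_size, stride, padding),
              conv_out_size(w, kernel_size, stride, padding))
    ver = (conv_out_size(h, 3, stride, ver_pad[0]),
           conv_out_size(w, 1, stride, ver_pad[1]))
    hor = (conv_out_size(h, 1, stride, hor_pad[0]),
           conv_out_size(w, 3, stride, hor_pad[1]))
    return square, ver, hor


if __name__ == "__main__":
    for stride in (1, 2):
        square, ver, hor = branch_output_shapes(32, 32, kernel_size=3, stride=stride, padding=1)
        print(stride, square, ver, hor)
```

Note that `offset` becomes negative when `padding` is smaller than `kernel_size // 2`, so this check only covers the case `padding >= kernel_size // 2`.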

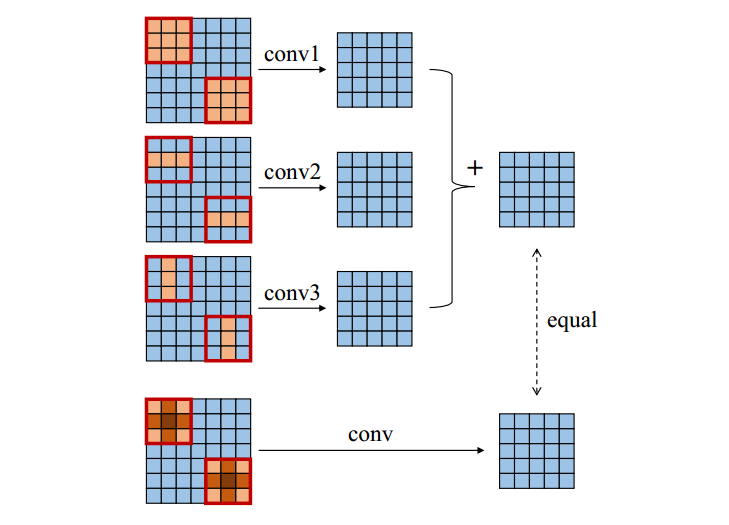

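Concretely, during training the 3x3, 1x3, and 3x1 convolutions run in parallel and their three outputs are summed. At inference time the three kernels are merged into a single new convolution kernel, as shown in the figure below.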

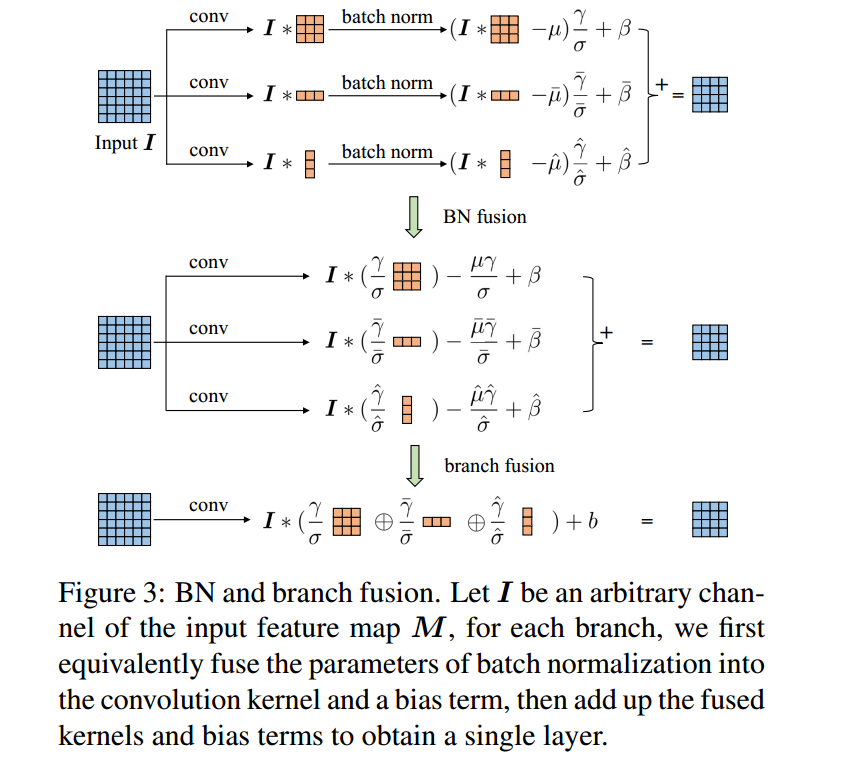
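
The merge works because convolution is linear in its kernel: summing the outputs of several convolutions over the same input equals one convolution with the summed (centre-aligned) kernels. The following NumPy sketch checks this on a single channel. It is a minimal illustration under simplifying assumptions (one channel, stride 1, zero padding, no BN); `conv2d_same` and `fuse_branches` are hypothetical names introduced here, not functions from the paper's code.

```python
import numpy as np


def conv2d_same(x, kernel):
    """Naive single-channel 2D cross-correlation with zero padding that keeps the input size."""
    kh, kw = kernel.shape
    padded = np.pad(x, ((kh // 2, kh // 2), (kw // 2, kw // 2)))
    out = np.zeros_like(x, dtype=np.float64)
    for i in range(x.shape[0]):
        for j in range(x.shape[1]):
            out[i, j] = np.sum(padded[i:i + kh, j:j + kw] * kernel)
    return out


def fuse_branches(square, ver, hor):
    """Add the 3x1 and 1x3 kernels onto the centre column and centre row of the 3x3 kernel."""
    fused = square.copy()
    fused[:, 1:2] += ver   # the (3, 1) kernel lands on the centre column
    fused[1:2, :] += hor   # the (1, 3) kernel lands on the centre row
    return fused


rng = np.random.default_rng(0)
x = rng.standard_normal((6, 6))
square = rng.standard_normal((3, 3))
ver = rng.standard_normal((3, 1))
hor = rng.standard_normal((1, 3))

# Training-time view: three parallel branches whose outputs are summed.
three_branch = conv2d_same(x, square) + conv2d_same(x, ver) + conv2d_same(x, hor)
# Inference-time view: one convolution with the fused kernel.
single_conv = conv2d_same(x, fuse_branches(square, ver, hor))
print(np.allclose(three_branch, single_conv))
```

The centre row and centre column of the fused kernel receive the extra weights, which is the "kernel skeleton" that the paper's title refers to.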

For inference, BN fusion is applied first, followed by branch fusion:

    import numpy as np

    # read_hdf5 and save_hdf5 are HDF5 load/save helpers that this post does not show.

    SQUARE_KERNEL_KEYWORD = 'square_conv.weight'


    def _fuse_kernel(kernel, gamma, std):
        # BN fusion: scale every output channel of the kernel by gamma / std.
        b_gamma = np.reshape(gamma, (kernel.shape[0], 1, 1, 1))
        b_gamma = np.tile(b_gamma, (1, kernel.shape[1], kernel.shape[2], kernel.shape[3]))
        b_std = np.reshape(std, (kernel.shape[0], 1, 1, 1))
        b_std = np.tile(b_std, (1, kernel.shape[1], kernel.shape[2], kernel.shape[3]))
        return kernel * b_gamma / b_std


    def _add_to_square_kernel(square_kernel, asym_kernel):
        # Branch fusion: add the asymmetric kernel, in place, onto the centre of the square kernel.
        asym_h = asym_kernel.shape[2]
        asym_w = asym_kernel.shape[3]
        square_h = square_kernel.shape[2]
        square_w = square_kernel.shape[3]
        square_kernel[:, :, square_h // 2 - asym_h // 2: square_h // 2 - asym_h // 2 + asym_h,
                      square_w // 2 - asym_w // 2: square_w // 2 - asym_w // 2 + asym_w] += asym_kernel


    def convert_acnet_weights(train_weights, deploy_weights, eps):
        train_dict = read_hdf5(train_weights)
        print(train_dict.keys())
        deploy_dict = {}
        square_conv_var_names = [name for name in train_dict.keys() if SQUARE_KERNEL_KEYWORD in name]
        for square_name in square_conv_var_names:
            # Kernel and BN statistics of the square branch.
            square_kernel = train_dict[square_name]
            square_mean = train_dict[square_name.replace(SQUARE_KERNEL_KEYWORD, 'square_bn.running_mean')]
            square_std = np.sqrt(train_dict[square_name.replace(SQUARE_KERNEL_KEYWORD, 'square_bn.running_var')] + eps)
            square_gamma = train_dict[square_name.replace(SQUARE_KERNEL_KEYWORD, 'square_bn.weight')]
            square_beta = train_dict[square_name.replace(SQUARE_KERNEL_KEYWORD, 'square_bn.bias')]

            # Kernel and BN statistics of the vertical (3x1) branch.
            ver_kernel = train_dict[square_name.replace(SQUARE_KERNEL_KEYWORD, 'ver_conv.weight')]
            ver_mean = train_dict[square_name.replace(SQUARE_KERNEL_KEYWORD, 'ver_bn.running_mean')]
            ver_std = np.sqrt(train_dict[square_name.replace(SQUARE_KERNEL_KEYWORD, 'ver_bn.running_var')] + eps)
            ver_gamma = train_dict[square_name.replace(SQUARE_KERNEL_KEYWORD, 'ver_bn.weight')]
            ver_beta = train_dict[square_name.replace(SQUARE_KERNEL_KEYWORD, 'ver_bn.bias')]

            # Kernel and BN statistics of the horizontal (1x3) branch.
            hor_kernel = train_dict[square_name.replace(SQUARE_KERNEL_KEYWORD, 'hor_conv.weight')]
            hor_mean = train_dict[square_name.replace(SQUARE_KERNEL_KEYWORD, 'hor_bn.running_mean')]
            hor_std = np.sqrt(train_dict[square_name.replace(SQUARE_KERNEL_KEYWORD, 'hor_bn.running_var')] + eps)
            hor_gamma = train_dict[square_name.replace(SQUARE_KERNEL_KEYWORD, 'hor_bn.weight')]
            hor_beta = train_dict[square_name.replace(SQUARE_KERNEL_KEYWORD, 'hor_bn.bias')]

            # Fused bias: sum over the three branches of beta - mean * gamma / std.
            fused_bias = (square_beta + ver_beta + hor_beta
                          - square_mean * square_gamma / square_std
                          - ver_mean * ver_gamma / ver_std
                          - hor_mean * hor_gamma / hor_std)

            # Fused kernel: BN-scaled square kernel plus the BN-scaled asymmetric kernels.
            fused_kernel = _fuse_kernel(square_kernel, square_gamma, square_std)
            _add_to_square_kernel(fused_kernel, _fuse_kernel(ver_kernel, ver_gamma, ver_std))
            _add_to_square_kernel(fused_kernel, _fuse_kernel(hor_kernel, hor_gamma, hor_std))

            deploy_dict[square_name.replace(SQUARE_KERNEL_KEYWORD, 'fused_conv.weight')] = fused_kernel
            deploy_dict[square_name.replace(SQUARE_KERNEL_KEYWORD, 'fused_conv.bias')] = fused_bias

        # Copy every parameter that does not belong to an ACBlock branch unchanged.
        for k, v in train_dict.items():
            if 'hor_' not in k and 'ver_' not in k and 'square_' not in k:
                deploy_dict[k] = v

        save_hdf5(deploy_dict, deploy_weights)
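
The BN-fusion step behind `_fuse_kernel` and `fused_bias` can be checked numerically. In inference mode, BN computes `gamma * (z - mean) / std + beta` for a conv response `z`, which is the same as a convolution whose kernel is scaled by `gamma / std` and whose bias is `beta - mean * gamma / std`. The sketch below verifies this for one branch at a single output position. It is an illustration under stated assumptions, not the repository's code: `fuse_conv_bn` is a hypothetical helper, the data are random, and broadcasting is used in place of `np.tile`.

```python
import numpy as np


def fuse_conv_bn(kernel, gamma, beta, mean, var, eps=1e-5):
    """Fold inference-mode BN into the preceding bias-free conv; returns (kernel, bias)."""
    std = np.sqrt(var + eps)
    scale = (gamma / std).reshape(-1, 1, 1, 1)  # one factor per output channel
    return kernel * scale, beta - mean * gamma / std


rng = np.random.default_rng(0)
out_ch, in_ch, k = 4, 3, 3
kernel = rng.standard_normal((out_ch, in_ch, k, k))
gamma, beta, mean = (rng.standard_normal(out_ch) for _ in range(3))
var = rng.uniform(0.5, 2.0, size=out_ch)
patch = rng.standard_normal((in_ch, k, k))  # receptive field at one output position

# Reference: conv response at this position, then BN with the running statistics.
z = np.einsum('oihw,ihw->o', kernel, patch)
bn_out = gamma * (z - mean) / np.sqrt(var + 1e-5) + beta

# Fused: a single convolution with a bias term.
fused_kernel, fused_bias = fuse_conv_bn(kernel, gamma, beta, mean, var)
fused_out = np.einsum('oihw,ihw->o', fused_kernel, patch) + fused_bias
print(np.allclose(bn_out, fused_out))
```

Since a convolution applies the same weights at every position, agreement at one receptive field carries over to the whole feature map. Applying this to each of the three branches and then adding the kernels and biases gives the fused layer built by `convert_acnet_weights`.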
