1 References:

https://www.freesion.com/article/9435149734/

https://blog.csdn.net/Shea1992/article/details/101041244

https://www.jianshu.com/p/9fb9f32e1f0f

https://www.jianshu.com/p/45c95a51a8c2

https://blog.csdn.net/weixin_43941899/article/details/105787688

2 My environment:

Hadoop version: 3.0.0-cdh6.3.2

Linux: CentOS 7.6

JDK: 1.8.0_131

3 Install Maven

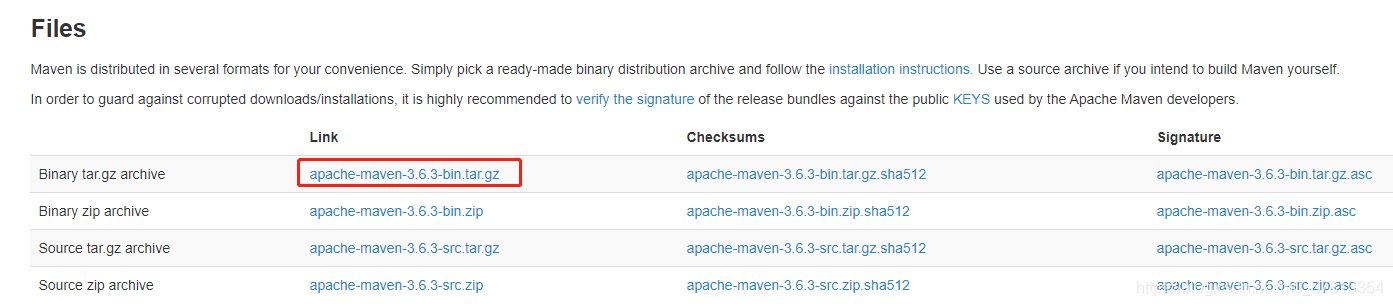

3.1 Download Maven

https://maven.apache.org/download.cgi

3.2 Upload and extract

tar -zxvf apache-maven-3.6.3-bin.tar.gz -C /data/module

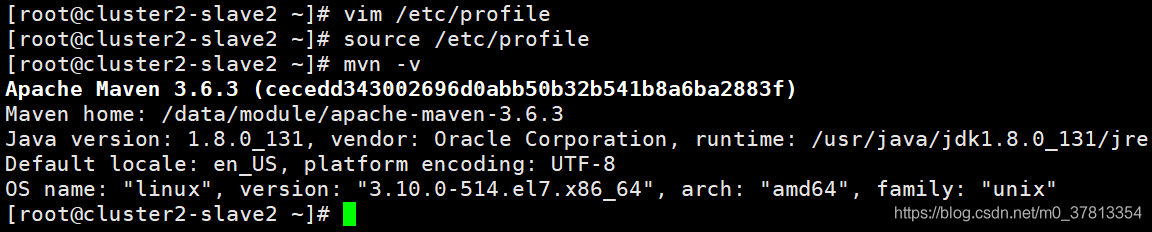

3.3 Configure MVN_HOME

[root@cluster2-slave2 ~]# vim /etc/profile

export MVN_HOME=/data/module/apache-maven-3.6.3

export PATH=$PATH:$MVN_HOME/bin

[root@cluster2-slave2 ~]# source /etc/profile
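Sourcing /etc/profile more than once appends the same Maven directory to PATH each time. A minimal sketch of an idempotent append is shown below; `append_to_path` is a hypothetical helper name, not something Maven or the shell provides.

```shell
#!/bin/sh
# append_to_path CURRENT DIR
# Prints CURRENT with DIR appended, unless DIR is already one of its entries.
# (Hypothetical helper for illustration only.)
append_to_path() {
    current=$1
    dir=$2
    if [ -z "$current" ]; then
        printf '%s\n' "$dir"                            # empty PATH: DIR becomes the only entry
        return 0
    fi
    case ":$current:" in
        *":$dir:"*) printf '%s\n' "$current" ;;         # already present, leave unchanged
        *)          printf '%s\n' "$current:$dir" ;;    # append exactly once
    esac
}

# Possible use in /etc/profile (paths taken from this article):
#   export MVN_HOME=/data/module/apache-maven-3.6.3
#   PATH=$(append_to_path "$PATH" "$MVN_HOME/bin"); export PATH
```

Wrapping the current value in colons before matching avoids false hits on directories that merely share a prefix.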

3.4 Verify Maven

[root@cluster2-slave2 ~]# mvn -v

4 Install protobuf-2.5.0.tar.gz

4.1 Download

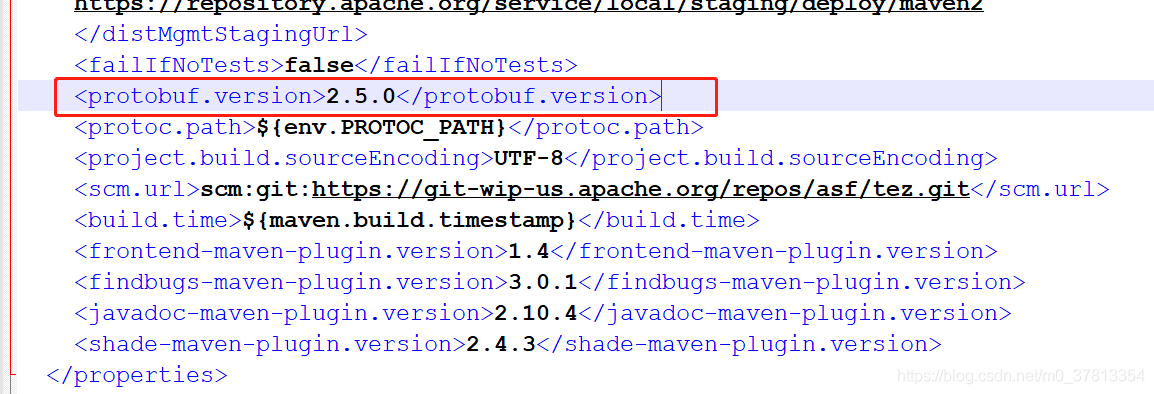

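The version must be exactly 2.5.0,

because the pom.xml in the extracted Tez 0.9.1 source, which is built later, requires 2.5.0.

Hadoop uses Protocol Buffers for communication, so protobuf-2.5.0.tar.gz has to be downloaded and installed.

However, protobuf-2.5.0.tar.gz can no longer be downloaded from the official site https://code.google.com/p/protobuf/downloads/list.

I found a copy on Baidu Netdisk; the download link is:

Link: https://pan.baidu.com/s/1hm7D2_wxIxMKbN9xnlYWuA

Extraction code: haz4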


4.2 Upload and extract

tar -zxvf protobuf-2.5.0.tar.gz

4.3 Run the configure check

cd protobuf-2.5.0/

[root@cluster2-slave2 protobuf-2.5.0]# ./configure

The first run of configure failed:

checking whether to enable maintainer-specific portions of Makefiles... yes

checking build system type... x86_64-unknown-linux-gnu

checking host system type... x86_64-unknown-linux-gnu

checking target system type... x86_64-unknown-linux-gnu

checking for a BSD-compatible install... /usr/bin/install -c

checking whether build environment is sane... yes

checking for a thread-safe mkdir -p... /usr/bin/mkdir -p

checking for gawk... gawk

checking whether make sets $(MAKE)... yes

checking for gcc... gcc

checking whether the C compiler works... yes

checking for C compiler default output file name... a.out

checking for suffix of executables...

checking whether we are cross compiling... no

checking for suffix of object files... o

checking whether we are using the GNU C compiler... yes

checking whether gcc accepts -g... yes

checking for gcc option to accept ISO C89... none needed

checking for style of include used by make... GNU

checking dependency style of gcc... gcc3

checking for g++... no

checking for c++... no

checking for gpp... no

checking for aCC... no

checking for CC... no

checking for cxx... no

checking for cc++... no

checking for cl.exe... no

checking for FCC... no

checking for KCC... no

checking for RCC... no

checking for xlC_r... no

checking for xlC... no

checking whether we are using the GNU C++ compiler... no

checking whether g++ accepts -g... no

checking dependency style of g++... none

checking how to run the C++ preprocessor... /lib/cpp

configure: error: in `/data/software/protobuf-2.5.0':

configure: error: C++ preprocessor "/lib/cpp" fails sanity check

See `config.log' for more details

Error message:

configure: error: in `/data/software/protobuf-2.5.0':

configure: error: C++ preprocessor "/lib/cpp" fails sanity check

The root cause is a missing C++ toolchain: the output above shows that no g++ was found. On CentOS, install it with the following commands:

yum install glibc-headers

yum install gcc-c++

Log at the end of the installation:

... (omitted) ...

Installed:

gcc-c++.x86_64 0:4.8.5-39.el7

Dependency Installed:

libstdc++-devel.x86_64 0:4.8.5-39.el7

Dependency Updated:

libstdc++.x86_64 0:4.8.5-39.el7

Complete!
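The failure above only surfaced partway through configure. As a rough sketch, the required build tools can be checked up front; `missing_tools` is a hypothetical helper, and the list of tools in the usage comment (gcc, g++, make) is an assumption based on the errors seen here.

```shell
#!/bin/sh
# missing_tools NAME...
# Prints every NAME that cannot be found on PATH, one per line.
# Prints nothing when all of them are available.
# (Hypothetical helper for illustration only.)
missing_tools() {
    for tool in "$@"; do
        command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
    done
}

# Possible use before running ./configure:
#   missing=$(missing_tools gcc g++ make)
#   [ -z "$missing" ] || { echo "install these first: $missing" >&2; exit 1; }
```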

The second run of configure passes:

[root@cluster2-slave2 protobuf-2.5.0]# ./configure

checking whether to enable maintainer-specific portions of Makefiles... yes

checking build system type... x86_64-unknown-linux-gnu

checking host system type... x86_64-unknown-linux-gnu

checking target system type... x86_64-unknown-linux-gnu

checking for a BSD-compatible install... /usr/bin/install -c

checking whether build environment is sane... yes

checking for a thread-safe mkdir -p... /usr/bin/mkdir -p

... (omitted) ...

checking whether the g++ linker (/usr/bin/ld -m elf_x86_64) supports shared libraries... yes

checking dynamic linker characteristics... (cached) GNU/Linux ld.so

checking how to hardcode library paths into programs... immediate

checking for python... /usr/bin/python

checking for the pthreads library -lpthreads... no

checking whether pthreads work without any flags... no

checking whether pthreads work with -Kthread... no

checking whether pthreads work with -kthread... no

checking for the pthreads library -llthread... no

checking whether pthreads work with -pthread... yes

checking for joinable pthread attribute... PTHREAD_CREATE_JOINABLE

checking if more special flags are required for pthreads... no

checking whether to check for GCC pthread/shared inconsistencies... yes

checking whether -pthread is sufficient with -shared... yes

configure: creating ./config.status

config.status: creating Makefile

config.status: creating scripts/gtest-config

config.status: creating build-aux/config.h

config.status: executing depfiles commands

config.status: executing libtool commands

[root@cluster2-slave2 protobuf-2.5.0]#

4.4 make

[root@cluster2-slave2 protobuf-2.5.0]# make

... (omitted) ...

libtool: link: ranlib .libs/libprotobuf-lite.a

libtool: link: ( cd ".libs" && rm -f "libprotobuf-lite.la" && ln -s "../libprotobuf-lite.la" "libprotobuf-lite.la" )

make[3]: Leaving directory `/data/software/protobuf-2.5.0/src'

make[2]: Leaving directory `/data/software/protobuf-2.5.0/src'

make[1]: Leaving directory `/data/software/protobuf-2.5.0'

4.5 make install

[root@cluster2-slave2 protobuf-2.5.0]# make install

... (omitted) ...

/usr/bin/mkdir -p '/usr/local/include/google/protobuf/io'

/usr/bin/install -c -m 644 google/protobuf/io/coded_stream.h google/protobuf/io/gzip_stream.h google/protobuf/io/printer.h google/protobuf/io/tokenizer.h google/protobuf/io/zero_copy_stream.h google/protobuf/io/zero_copy_stream_impl.h google/protobuf/io/zero_copy_stream_impl_lite.h '/usr/local/include/google/protobuf/io'

make[3]: Leaving directory `/data/software/protobuf-2.5.0/src'

make[2]: Leaving directory `/data/software/protobuf-2.5.0/src'

make[1]: Leaving directory `/data/software/protobuf-2.5.0/src'

4.6 Verify protobuf

[root@cluster2-slave2 protobuf-2.5.0]# protoc --version

libprotoc 2.5.0

The installation succeeded.
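Because the Tez build needs exactly this protobuf version, the check can be scripted so that a wrong protoc on PATH is caught before Maven starts. A minimal sketch follows; `is_protoc_2_5_0` is a hypothetical helper that simply compares against the output line shown above.

```shell
#!/bin/sh
# is_protoc_2_5_0 VERSION_OUTPUT
# Succeeds only when VERSION_OUTPUT is exactly the line that
# `protoc --version` prints for protobuf 2.5.0.
# (Hypothetical helper for illustration only.)
is_protoc_2_5_0() {
    [ "$1" = "libprotoc 2.5.0" ]
}

# Possible use before building Tez:
#   is_protoc_2_5_0 "$(protoc --version)" || { echo "protobuf 2.5.0 is required" >&2; exit 1; }
```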

5 安装 Tez

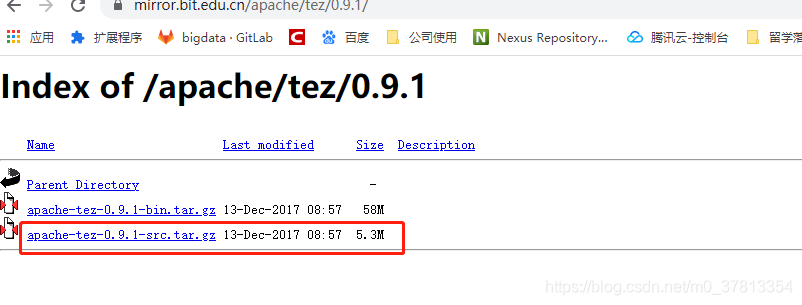

5.1 下载

http://www.apache.org/dyn/closer.lua/tez/0.9.1/

下载源码自己根据自己的环境编译

5.2 上传解压

[root@cluster2-slave2 software]# tar -zxvf apache-tez-0.9.1-src.tar.gz -C …/module/

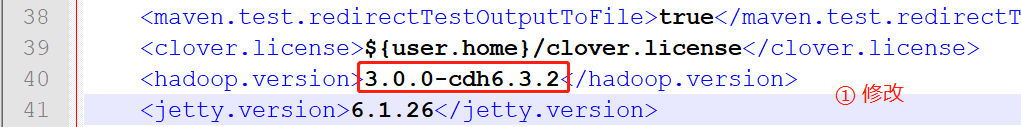

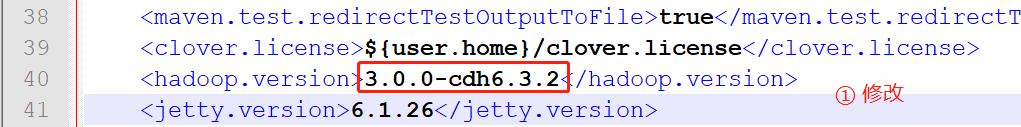

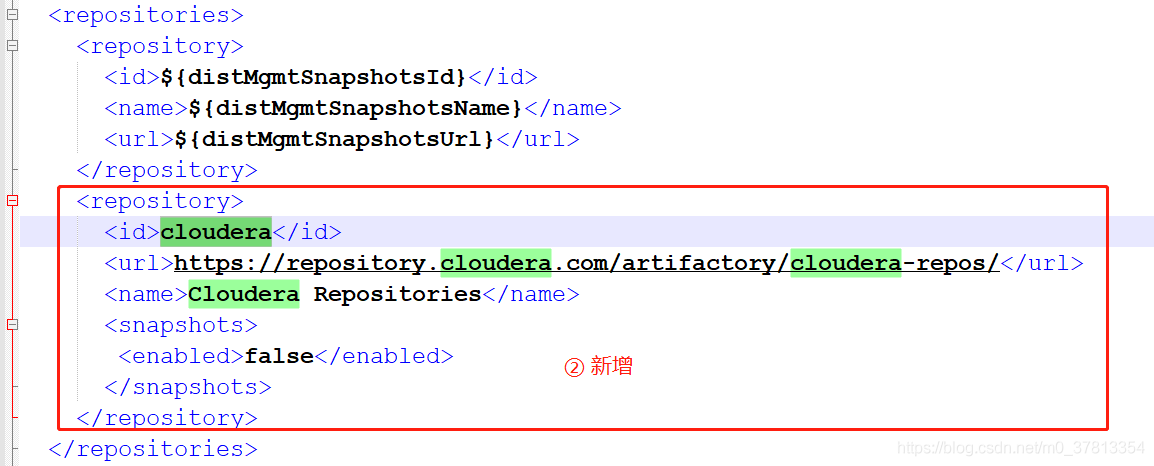

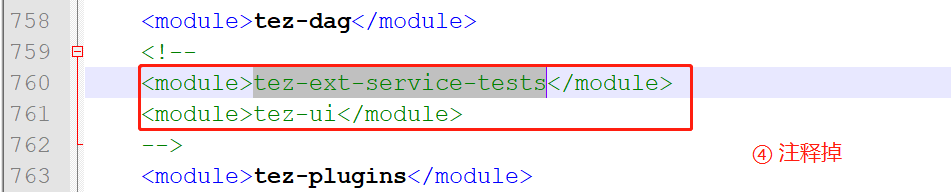

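5.3 Modify pom.xml

Four changes are needed:

(1) Set hadoop.version to match your cluster

Confirm your own Hadoop version first

and change the property accordingly

(2) repository.cloudera

(3) Add pluginRepository.cloudera

(4) Comment out unnecessary parts to reduce downloads and the chance of build errors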
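Change (1) can also be scripted. The sketch below assumes the version is held in a single `<hadoop.version>` element on one line of pom.xml; `set_hadoop_version` is a hypothetical helper, and changes (2) to (4) still have to be made by hand.

```shell
#!/bin/sh
# set_hadoop_version POM VERSION
# Rewrites the contents of the <hadoop.version> element in POM.
# The result is written to a temporary file first, so a failed sed
# never leaves a half-written pom.xml behind.
# VERSION must not contain '|' or '&' (they are special to sed here).
# (Hypothetical helper for illustration only.)
set_hadoop_version() {
    pom=$1
    version=$2
    tmp="$pom.tmp.$$"
    sed "s|<hadoop\.version>[^<]*</hadoop\.version>|<hadoop.version>$version</hadoop.version>|" "$pom" > "$tmp" \
        && mv "$tmp" "$pom"
}

# Possible use, with the version from the environment section of this article:
#   set_hadoop_version pom.xml 3.0.0-cdh6.3.2
```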

The complete pom.xml is as follows:

<?xml version="1.0" encoding="UTF-8"?>

modelVersion: 4.0.0, groupId: org.apache.tez, artifactId: tez, packaging: pom, version: 0.9.1, name: tez

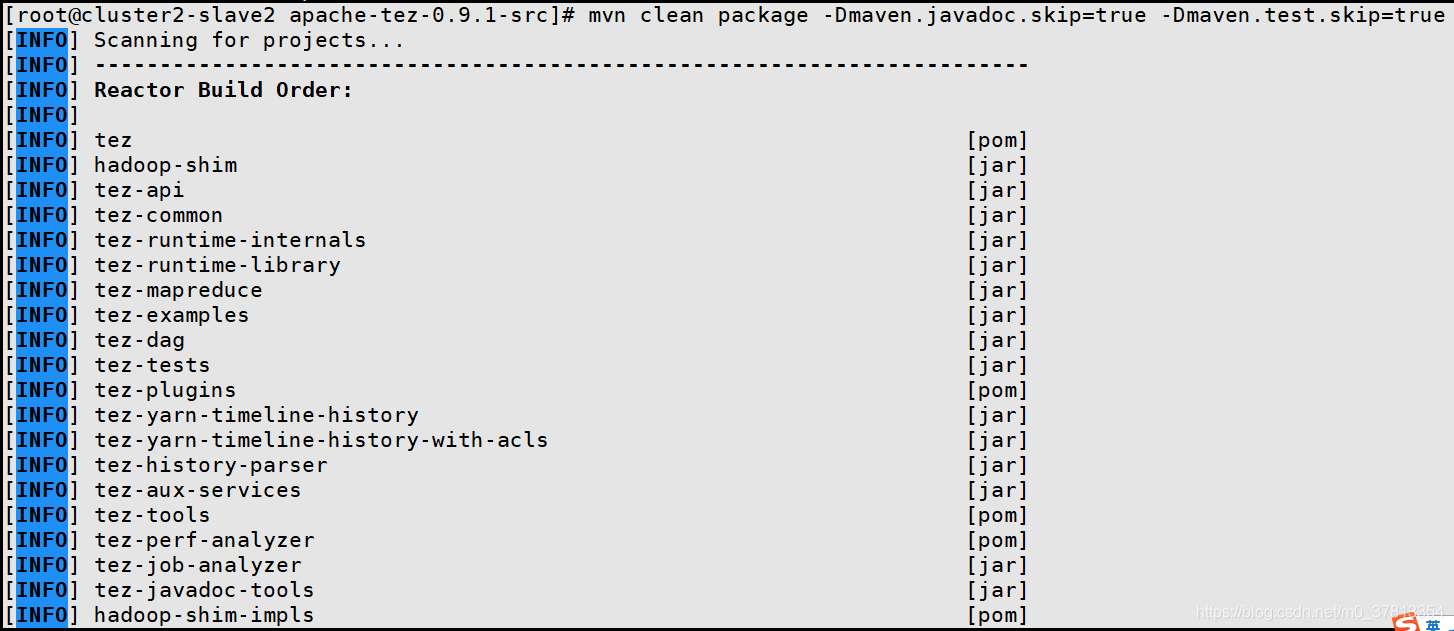

所以用下面领命编译

在pom.xml同级目录下执行

mvn clean package -Dmaven.javadoc.skip=true -Dmaven.test.skip=true

开始编译

5.5 遇到问题

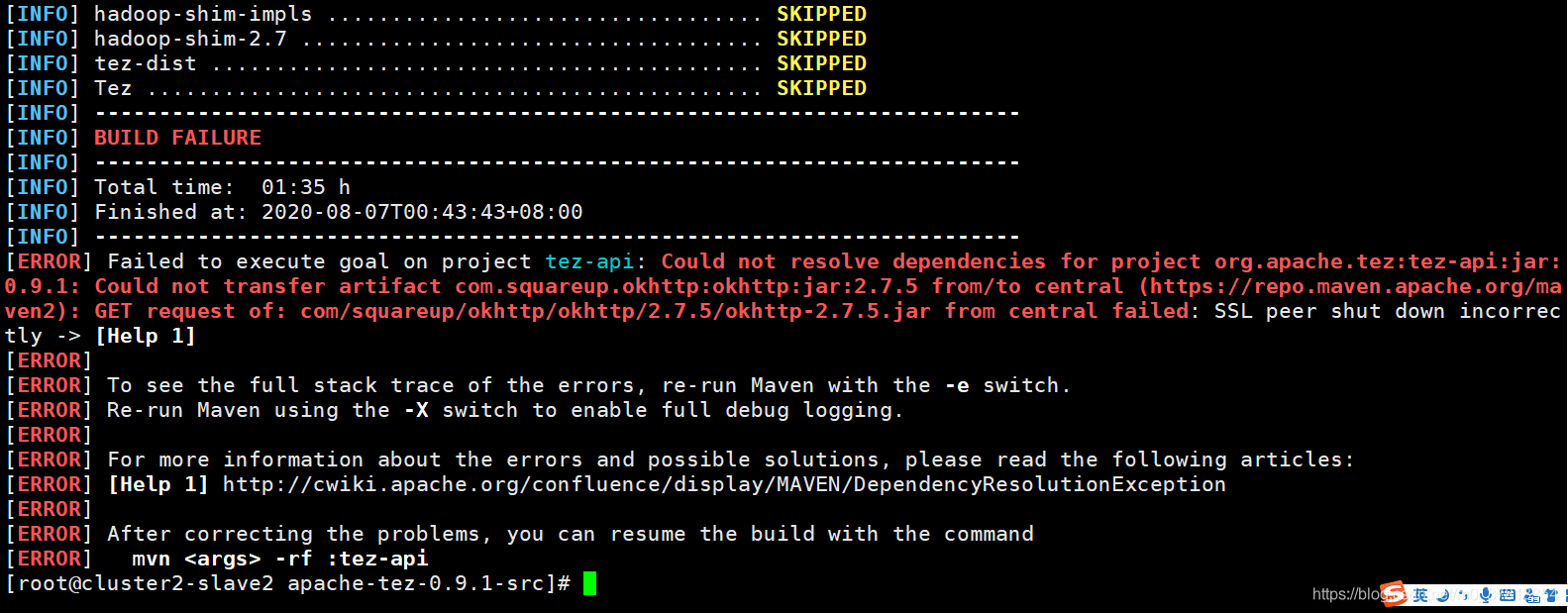

第一次编译要下载很多包有可能中途下载失败

比如下面就是一次包的依赖没有下载成功报错了

重试后所有依赖的jar下载成功
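Since the only remedy for a failed download was to run the build again, the retry can be automated. The following is a small sketch; `retry` is a hypothetical helper, and the attempt count of 3 in the usage comment is an arbitrary choice.

```shell
#!/bin/sh
# retry ATTEMPTS COMMAND [ARGS...]
# Runs COMMAND up to ATTEMPTS times and stops at the first success.
# Returns 0 on success, 1 if every attempt failed.
# (Hypothetical helper for illustration only.)
retry() {
    attempts=$1
    shift
    n=1
    while [ "$n" -le "$attempts" ]; do
        "$@" && return 0
        echo "attempt $n of $attempts failed" >&2
        n=$((n + 1))
    done
    return 1
}

# Possible use from the directory that contains pom.xml:
#   retry 3 mvn clean package -Dmaven.javadoc.skip=true -Dmaven.test.skip=true
```

Maven keeps already downloaded artifacts in its local repository, so each retry only fetches what is still missing. A genuine compile error, like the one described next, fails on every attempt and still has to be fixed by hand.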

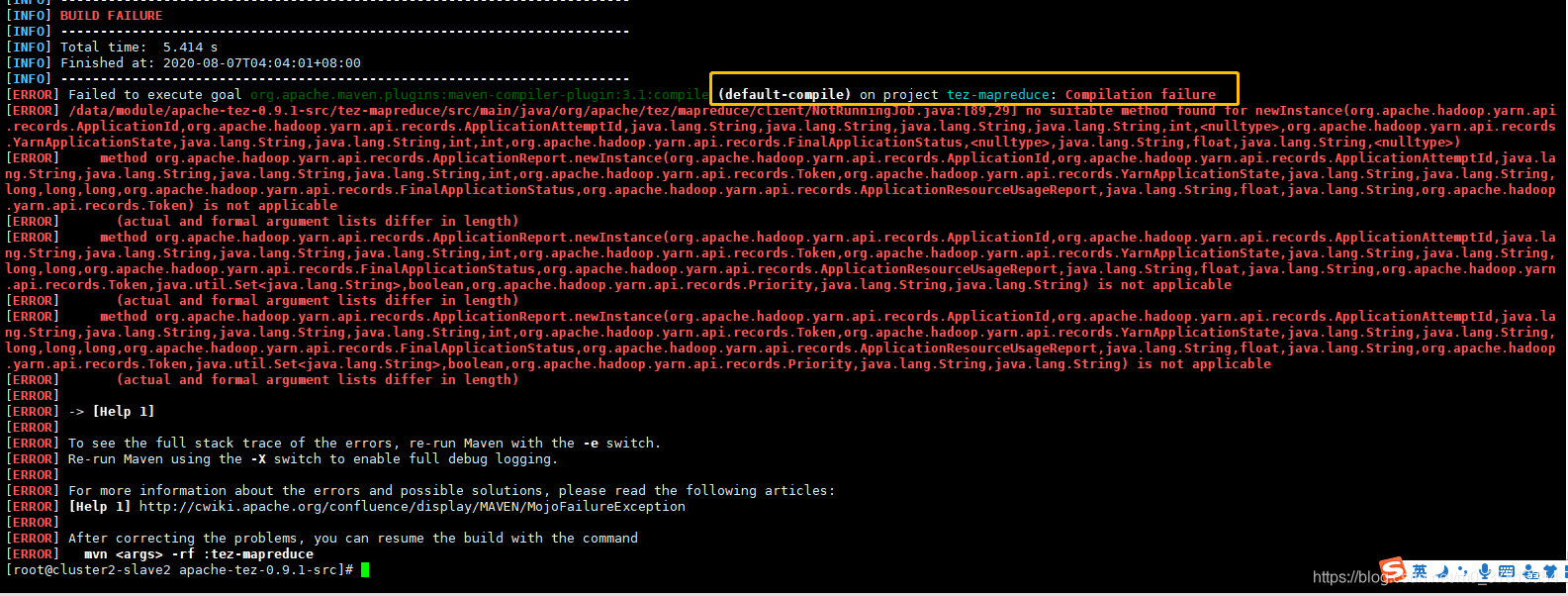

The build then hit another problem:

a compile error at line 89, in the call to ApplicationReport.newInstance():

/data/module/apache-tez-0.9.1-src/tez-mapreduce/src/main/java/org/apache/tez/mapreduce/client/NotRunningJob.java:[89,29] no suitable method found for newInstance(org.apache.hadoop.yarn.api.records.ApplicationId,org.apache.hadoop.yarn.api.records.ApplicationAttemptId,java.lang.String,java.lang.String,java.lang.String,java.lang.String,int,,org.apache.hadoop.yarn.api.records.YarnApplicationState,java.lang.String,java.lang.String,int,int,org.apache.hadoop.yarn.api.records.FinalApplicationStatus,,java.lang.String,float,java.lang.String,)

Fix: switch to another overload of ApplicationReport.newInstance(), one that takes an additional numeric argument.

Original code:

return ApplicationReport.newInstance(unknownAppId, unknownAttemptId, "N/A",

"N/A", "N/A", "N/A", 0, null, YarnApplicationState.NEW, "N/A", "N/A",

0, 0, FinalApplicationStatus.UNDEFINED, null, "N/A", 0.0f, "TEZ_MRR", null);

New code:

return ApplicationReport.newInstance(unknownAppId, unknownAttemptId, "N/A",

"N/A", "N/A", "N/A", 0, null, YarnApplicationState.NEW, "N/A", "N/A",

0, 0, 0, FinalApplicationStatus.UNDEFINED, null, "N/A", 0.0f, "TEZ_MRR", null);

vim tez-mapreduce/src/main/java/org/apache/tez/mapreduce/client/NotRunningJob.java

Then continue the build:

mvn clean package -Dmaven.javadoc.skip=true -Dmaven.test.skip=true

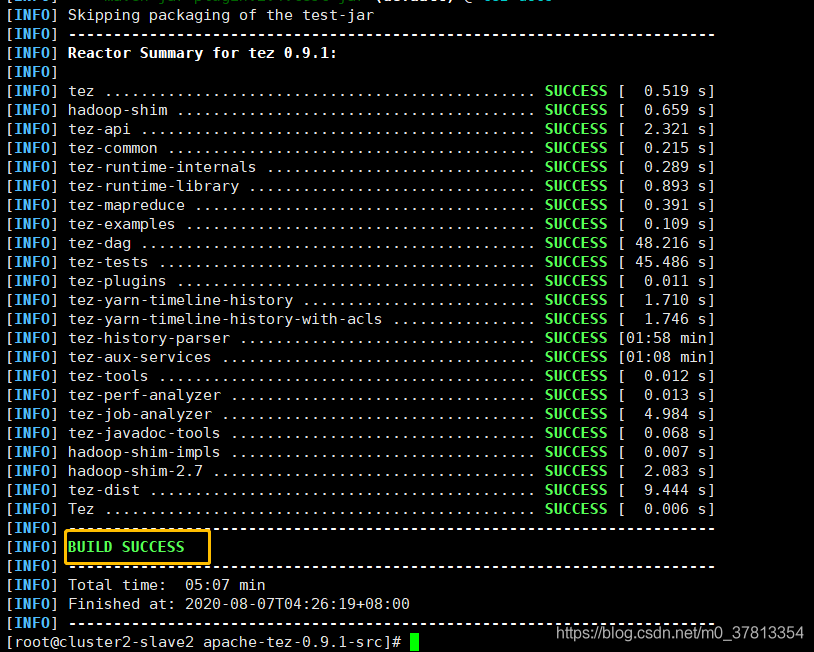

5.6 The build succeeds

The build output is under tez-dist/target.

Mine is in: /data/module/apache-tez-0.9.1-src/tez-dist/target

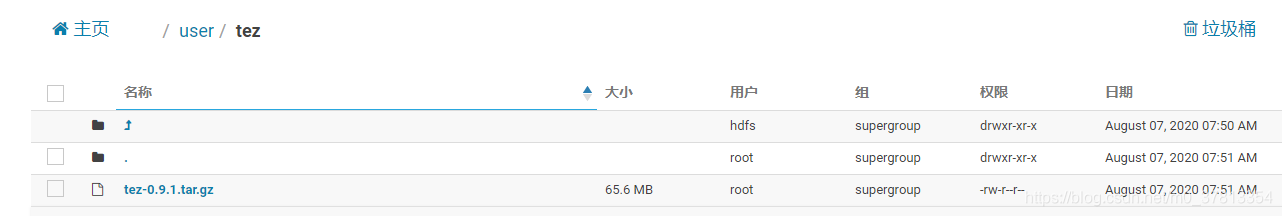

5.7 Integrate Tez with HDFS

Create the Tez directory on HDFS:

[root@cluster2-slave2 target]# hadoop fs -mkdir /user/tez

Upload tez-0.9.1.tar.gz to the /user/tez directory:

[root@cluster2-slave2 target]# hadoop fs -put tez-0.9.1.tar.gz /user/tez
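The two HDFS steps can be wrapped so they are safe to repeat. This is a sketch only: `run` and `upload_tez_tarball` are hypothetical helpers, `-mkdir -p` and `-put -f` are used so a rerun neither fails on an existing directory nor refuses to overwrite an older tarball, and the final `-ls` is added here as a confirmation step.

```shell
#!/bin/sh
# run COMMAND [ARGS...]
# Prints the command when DRY_RUN=1, otherwise executes it.
# (Hypothetical helper for illustration only.)
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        printf '+ %s\n' "$*"
    else
        "$@"
    fi
}

# upload_tez_tarball TARBALL HDFS_DIR
# Creates HDFS_DIR if needed, uploads TARBALL (overwriting any old copy),
# then lists the directory to confirm the upload.
upload_tez_tarball() {
    tarball=$1
    hdfs_dir=$2
    run hadoop fs -mkdir -p "$hdfs_dir" &&
    run hadoop fs -put -f "$tarball" "$hdfs_dir" &&
    run hadoop fs -ls "$hdfs_dir"
}

# Possible use from tez-dist/target:
#   DRY_RUN=1 upload_tez_tarball tez-0.9.1.tar.gz /user/tez   # review the commands
#   upload_tez_tarball tez-0.9.1.tar.gz /user/tez             # execute them
```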

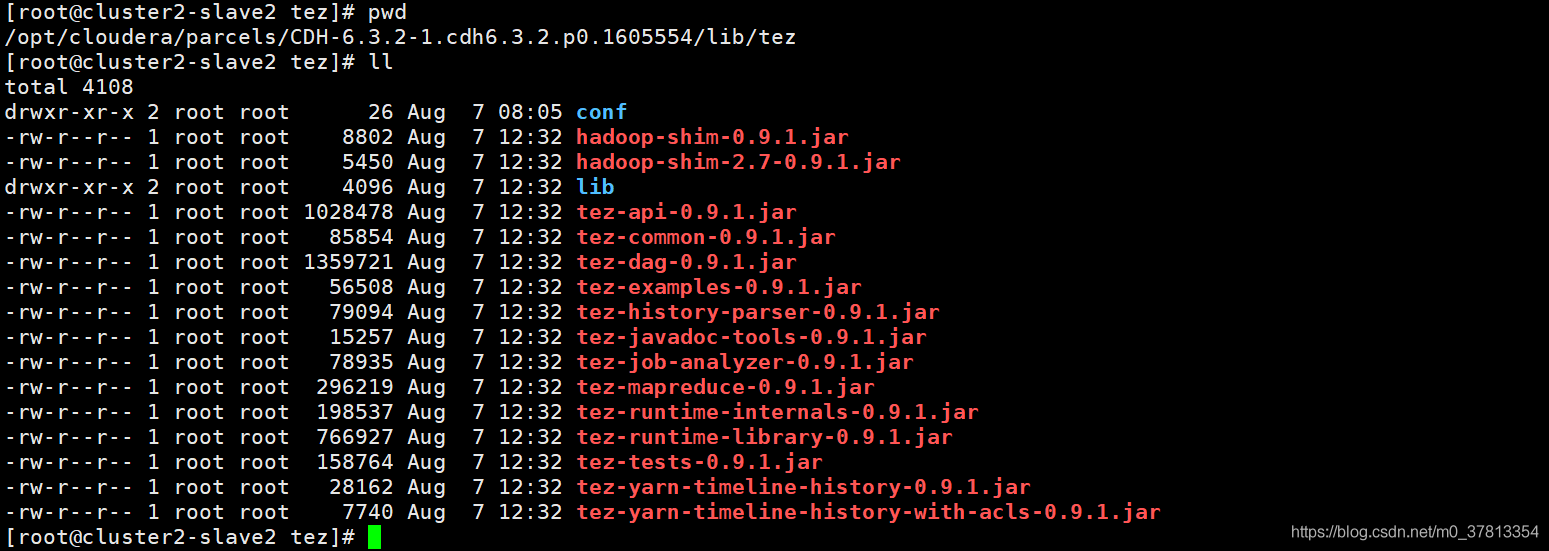

5.8 Integrate Tez with Hive

(1) Go to the CDH lib directory

cd /opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib

(2) Create the Tez directories

[root@cluster2-slave2 lib]# mkdir -p tez/conf

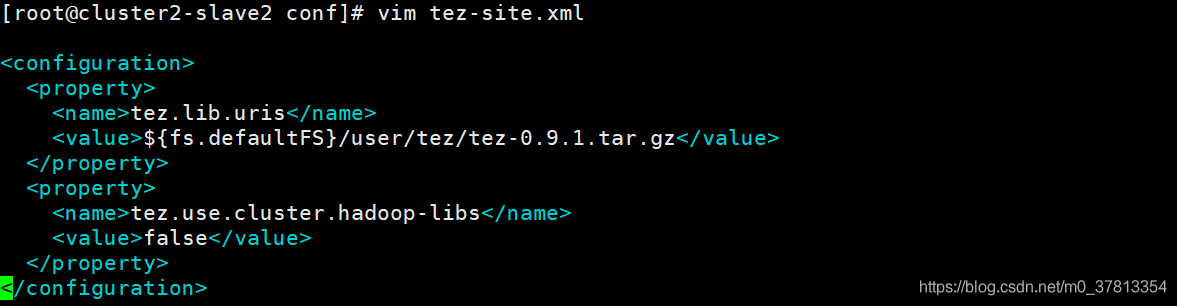

(3) Create the tez-site.xml file

[root@cluster2-slave2 lib]# cd tez/conf/

[root@cluster2-slave2 conf]# vim tez-site.xml

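<configuration>
  <property>
    <name>tez.lib.uris</name>
    <value>${fs.defaultFS}/user/tez/tez-0.9.1.tar.gz</value>
  </property>
  <property>
    <name>tez.use.cluster.hadoop-libs</name>
    <value>false</value>
  </property>
</configuration>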

(4) Copy the contents of tez-0.9.1-minimal into the tez directory,

that is, into the following directory:

/opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib/tez

[root@cluster2-slave2 tez-0.9.1-minimal]# cp ./*.jar /opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib/tez

[root@cluster2-slave2 tez-0.9.1-minimal]# cp -r lib /opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib/tez

(5) Distribute the tez directory to every node

[root@cluster2-slave2 lib]# scp -r tez root@cluster2-slave1:/opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib

[root@cluster2-slave2 lib]# scp -r tez root@cluster2-master:/opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib
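With more than a couple of nodes, typing one scp per host becomes error-prone. One possible sketch generates the commands from a host list so they can be reviewed before running; `print_scp_commands` is a hypothetical helper, and the root login mirrors the commands above.

```shell
#!/bin/sh
# print_scp_commands SRC DEST HOST...
# Prints one "scp -r" command per HOST that copies SRC into DEST on that host.
# Nothing is executed; pipe the output to sh once it looks right.
# (Hypothetical helper for illustration only.)
print_scp_commands() {
    src=$1
    dest=$2
    shift 2
    for host in "$@"; do
        printf 'scp -r %s root@%s:%s\n' "$src" "$host" "$dest"
    done
}

# Possible use from the CDH lib directory:
#   print_scp_commands tez /opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib \
#       cluster2-slave1 cluster2-master | sh
```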

6 Configure the Hive environment

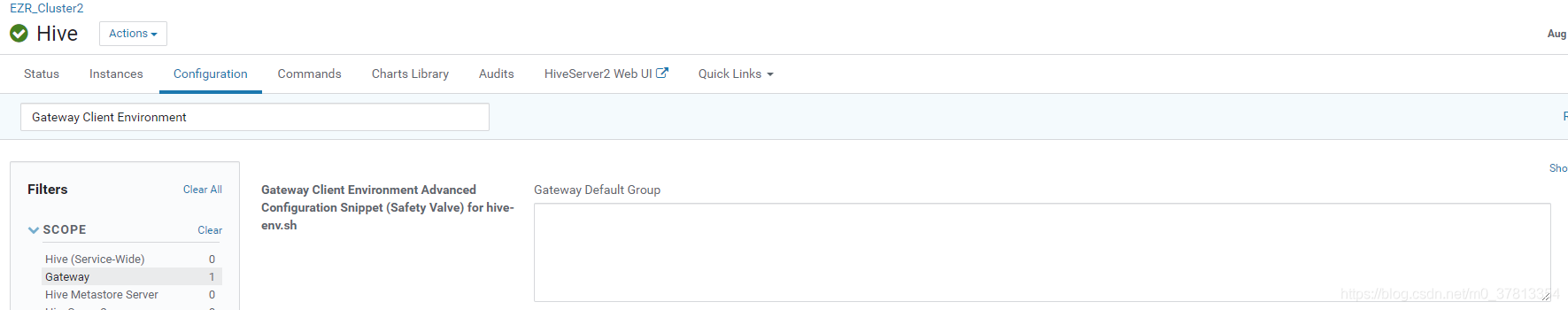

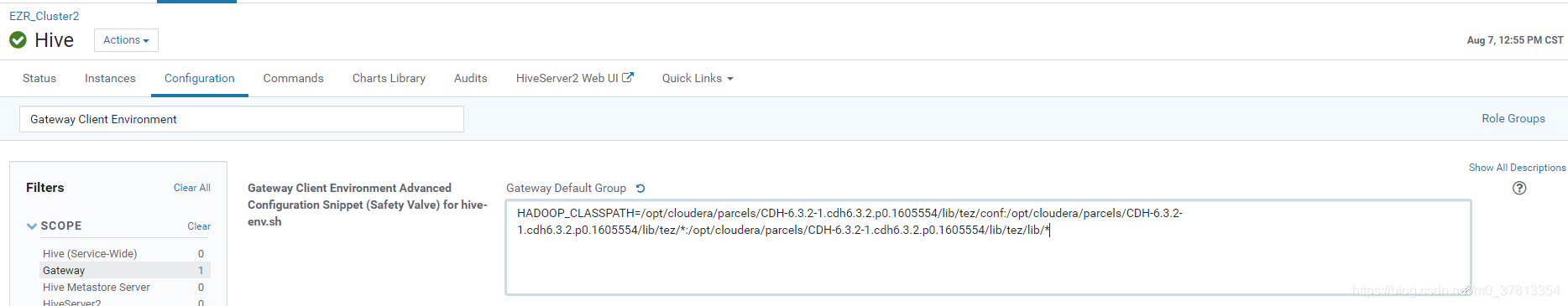

6.1 Configure the environment

In the CDH console, find the Hive client configuration and set:

HADOOP_CLASSPATH=/opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib/tez/conf:/opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib/tez/*:/opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib/tez/lib/*

Then save and deploy the client configuration so that it takes effect.
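The classpath value repeats the same long parcel path three times. As an illustration, it can be derived from a single Tez home directory; `tez_classpath` is a hypothetical helper, and the sketch assumes the two jar directories are meant as Java wildcard entries (`/*`), since a bare directory on a classpath does not pick up the jars inside it.

```shell
#!/bin/sh
# tez_classpath TEZ_HOME
# Prints the three classpath entries Hive needs for Tez:
# the conf directory, the jars in TEZ_HOME, and the jars in TEZ_HOME/lib.
# (Hypothetical helper for illustration only.)
tez_classpath() {
    tez_home=$1
    printf '%s/conf:%s/*:%s/lib/*\n' "$tez_home" "$tez_home" "$tez_home"
}

# Possible use:
#   tez_classpath /opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib/tez
```

The wildcards are expanded by the JVM, not the shell, so the value should be kept quoted wherever it is assigned.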

6.2 Test

The first query failed with an error:

VERTICES MODE STATUS TOTAL COMPLETED RUNNING PENDING FAILED KILLED

Map 1 container FAILED -1 0 0 -1 0 0

Reducer 2 container KILLED 1 0 0 1 0 0

VERTICES: 00/02 [>>--------------------------] 0% ELAPSED TIME: 0.15 s

20/08/07 13:01:23 INFO SessionState: Map 1: -/- Reducer 2: 0/1

Status: Failed

20/08/07 13:01:23 ERROR SessionState: Status: Failed

Vertex failed, vertexName=Map 1, vertexId=vertex_1596710469388_0001_1_00, diagnostics=[Vertex vertex_1596710469388_0001_1_00 [Map 1] killed/failed due to:INIT_FAILURE, Fail to create InputInitializerManager, org.apache.tez.dag.api.TezReflectionException: Unable to instantiate class with 1 arguments: org.apache.hadoop.hive.ql.exec.tez.HiveSplitGenerator

at org.apache.tez.common.ReflectionUtils.getNewInstance(ReflectionUtils.java:71)

at org.apache.tez.common.ReflectionUtils.createClazzInstance(ReflectionUtils.java:89)

at org.apache.tez.dag.app.dag.RootInputInitializerManager$1.run(RootInputInitializerManager.java:152)

at org.apache.tez.dag.app.dag.RootInputInitializerManager$1.run(RootInputInitializerManager.java:148)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1875)

at org.apache.tez.dag.app.dag.RootInputInitializerManager.createInitializer(RootInputInitializerManager.java:148)

at org.apache.tez.dag.app.dag.RootInputInitializerManager.runInputInitializers(RootInputInitializerManager.java:121)

at org.apache.tez.dag.app.dag.impl.VertexImpl.setupInputInitializerManager(VertexImpl.java:4101)

at org.apache.tez.dag.app.dag.impl.VertexImpl.access$3100(VertexImpl.java:205)

at org.apache.tez.dag.app.dag.impl.VertexImpl$InitTransition.handleInitEvent(VertexImpl.java:2912)

at org.apache.tez.dag.app.dag.impl.VertexImpl$InitTransition.transition(VertexImpl.java:2859)

at org.apache.tez.dag.app.dag.impl.VertexImpl$InitTransition.transition(VertexImpl.java:2841)

at org.apache.hadoop.yarn.state.StateMachineFactory$MultipleInternalArc.doTransition(StateMachineFactory.java:385)

at org.apache.hadoop.yarn.state.StateMachineFactory.doTransition(StateMachineFactory.java:302)

at org.apache.hadoop.yarn.state.StateMachineFactory.access$500(StateMachineFactory.java:46)

at org.apache.hadoop.yarn.state.StateMachineFactory$InternalStateMachine.doTransition(StateMachineFactory.java:487)

at org.apache.tez.state.StateMachineTez.doTransition(StateMachineTez.java:59)

at org.apache.tez.dag.app.dag.impl.VertexImpl.handle(VertexImpl.java:1939)

at org.apache.tez.dag.app.dag.impl.VertexImpl.handle(VertexImpl.java:204)

at org.apache.tez.dag.app.DAGAppMaster$VertexEventDispatcher.handle(DAGAppMaster.java:2317)

at org.apache.tez.dag.app.DAGAppMaster$VertexEventDispatcher.handle(DAGAppMaster.java:2303)

at org.apache.tez.common.AsyncDispatcher.dispatch(AsyncDispatcher.java:180)

at org.apache.tez.common.AsyncDispatcher$1.run(AsyncDispatcher.java:115)

at java.lang.Thread.run(Thread.java:748)

Caused by: java.lang.reflect.InvocationTargetException

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at org.apache.tez.common.ReflectionUtils.getNewInstance(ReflectionUtils.java:68)

... 25 more

Caused by: java.lang.NoClassDefFoundError: com/esotericsoftware/kryo/Serializer

at org.apache.hadoop.hive.ql.exec.Utilities.getBaseWork(Utilities.java:404)

at org.apache.hadoop.hive.ql.exec.Utilities.getMapWork(Utilities.java:317)

at org.apache.hadoop.hive.ql.exec.tez.HiveSplitGenerator.<init>(HiveSplitGenerator.java:131)

... 30 more

Caused by: java.lang.ClassNotFoundException: com.esotericsoftware.kryo.Serializer

at java.net.URLClassLoader.findClass(URLClassLoader.java:381)

at java.lang.ClassLoader.loadClass(ClassLoader.java:424)

at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:335)

at java.lang.ClassLoader.loadClass(ClassLoader.java:357)

... 33 more

]

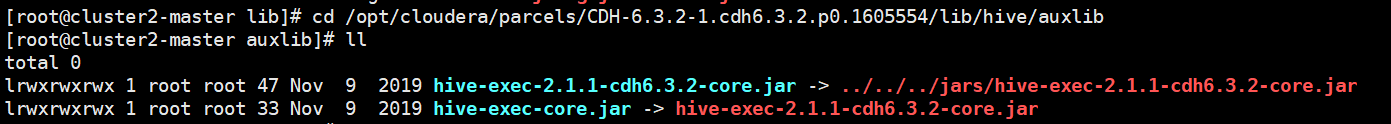

6.3 Fixing the exception

ClassNotFoundException: com.esotericsoftware.kryo.Serializer

Fix:

Delete or rename hive-exec-2.1.1-cdh6.3.2-core.jar and hive-exec-core.jar under hive/auxlib.

I did this on every node.

cd /opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib/hive/auxlib

Originally the directory contained these two symlinks:

hive-exec-2.1.1-cdh6.3.2-core.jar -> ../../../jars/hive-exec-2.1.1-cdh6.3.2-core.jar

hive-exec-core.jar -> hive-exec-2.1.1-cdh6.3.2-core.jar

Change: rename them

mv hive-exec-2.1.1-cdh6.3.2-core.jar hive-exec-2.1.1-cdh6.3.2-core.jar.bck

mv hive-exec-core.jar hive-exec-core.jar.bck

Then restart Hive for the change to take effect.
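Because the rename has to be repeated on every node, a version that can be rerun without errors is convenient. The sketch below is one way to do it; `backup_jars` is a hypothetical helper. It checks for symlinks explicitly, because once the first jar is renamed the second link points at a name that no longer exists.

```shell
#!/bin/sh
# backup_jars DIR NAME...
# Renames each DIR/NAME to DIR/NAME.bck. Entries that are already gone
# (for example on a second run) are skipped, so the call is repeatable.
# (Hypothetical helper for illustration only.)
backup_jars() {
    dir=$1
    shift
    for name in "$@"; do
        # -e is false for a dangling symlink, so test -L as well.
        if [ -e "$dir/$name" ] || [ -L "$dir/$name" ]; then
            mv "$dir/$name" "$dir/$name.bck" || return 1
        fi
    done
}

# Possible use on each node:
#   backup_jars /opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib/hive/auxlib \
#       hive-exec-2.1.1-cdh6.3.2-core.jar hive-exec-core.jar
```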

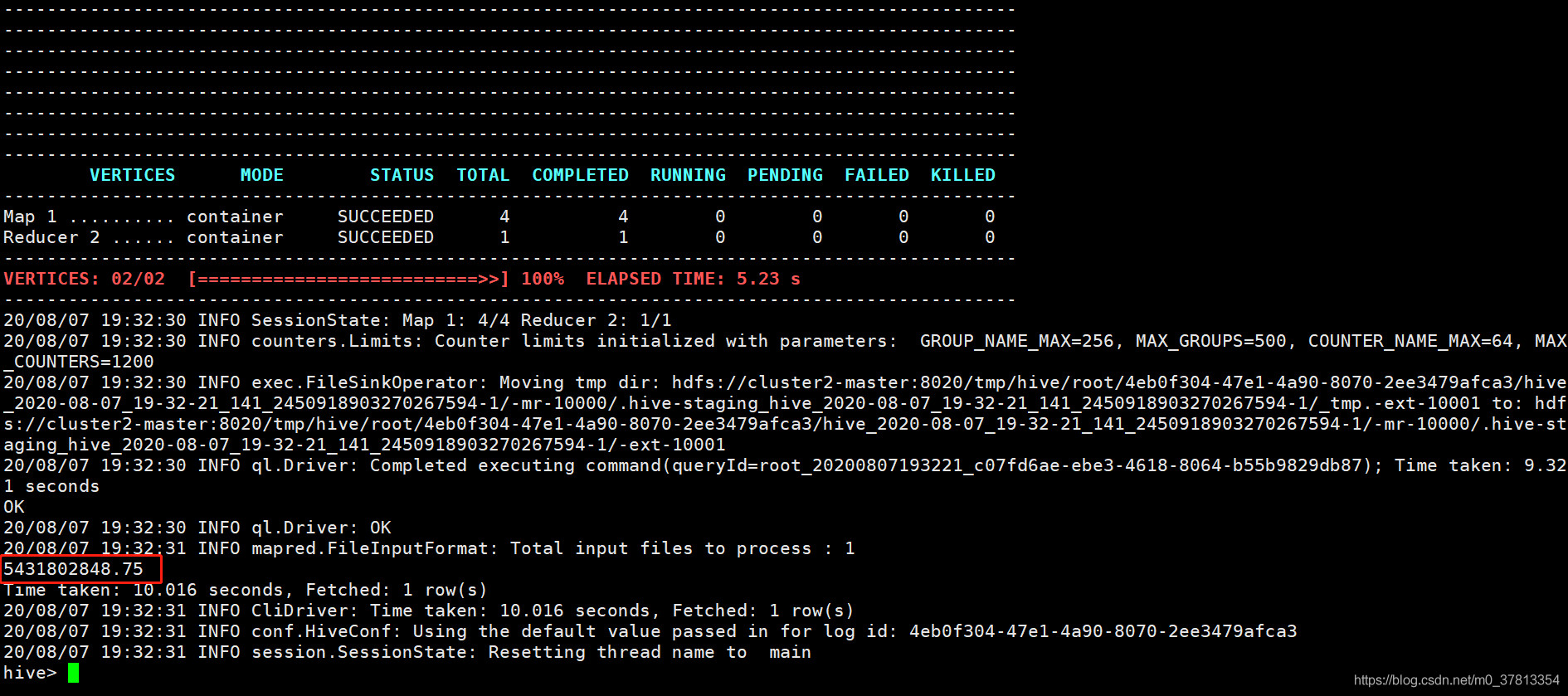

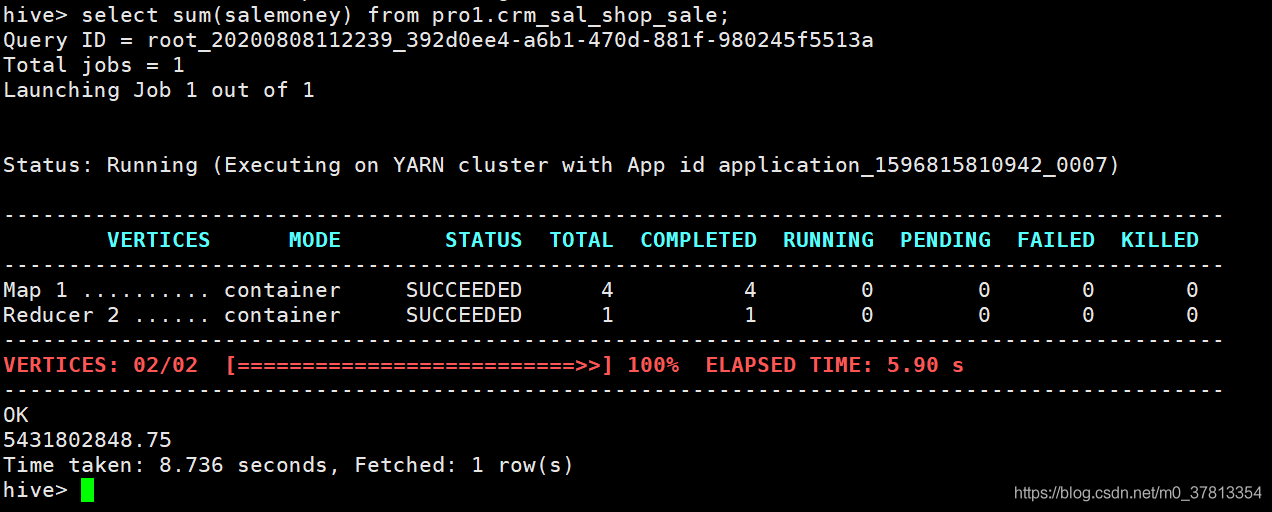

继续测试

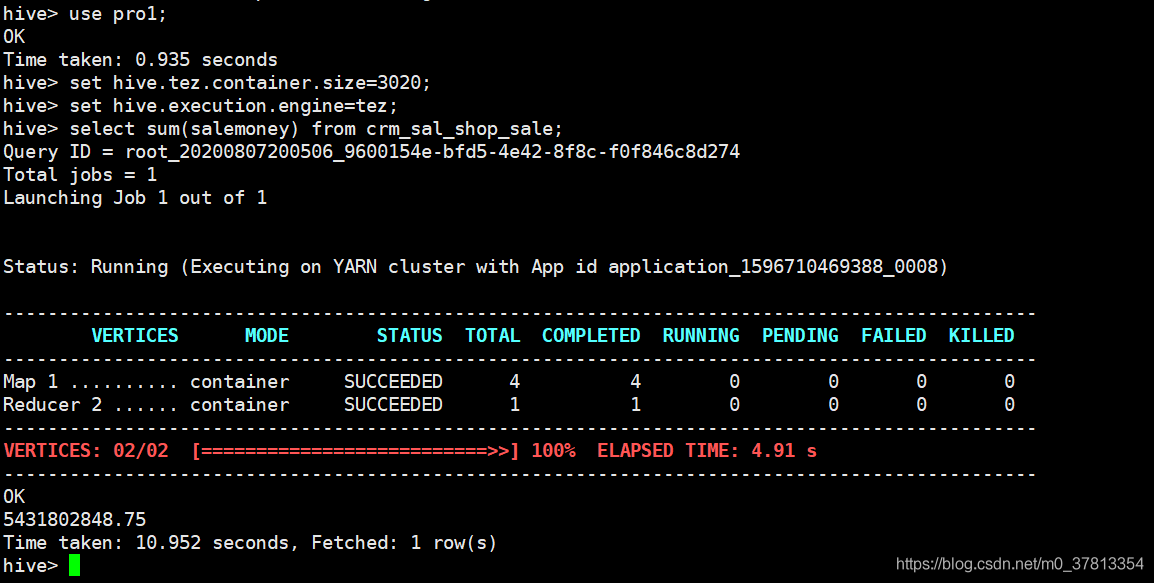

注意必须有set hive.tez.container.size=3020;否则报错

hive> set hive.tez.container.size=3020;

hive> set hive.execution.engine=tez;

Run a query:

hive> select sum(salemoney) from crm_sal_shop_sale;
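The same test can be run non-interactively with `hive -e`. As a sketch, the two session settings are prepended to whatever statement is passed in; `with_tez_settings` is a hypothetical helper.

```shell
#!/bin/sh
# with_tez_settings CONTAINER_SIZE STATEMENT
# Prints STATEMENT prefixed with the two session settings from this article:
# the Tez container size and the switch of the execution engine to Tez.
# (Hypothetical helper for illustration only.)
with_tez_settings() {
    printf 'set hive.tez.container.size=%s; set hive.execution.engine=tez; %s\n' "$1" "$2"
}

# Possible use:
#   hive -e "$(with_tez_settings 3020 'select sum(salemoney) from crm_sal_shop_sale;')"
```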

Output:

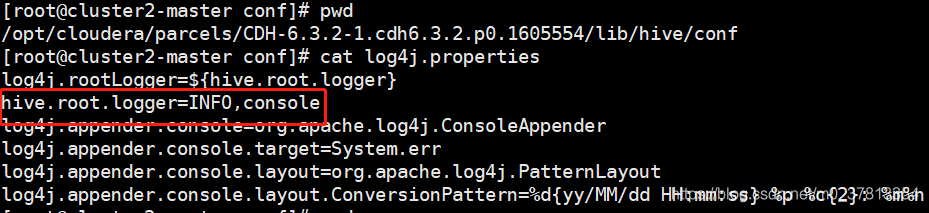

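6.4 Reducing log output

As the run above shows, the process logs far too much and is hard to read. The following steps reduce the log volume.

The cause is that the directory /opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/lib/tez/lib contains the two jars

slf4j-api-1.7.10.jar

slf4j-log4j12-1.7.10.jar

and the Hive log level is currently INFO.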

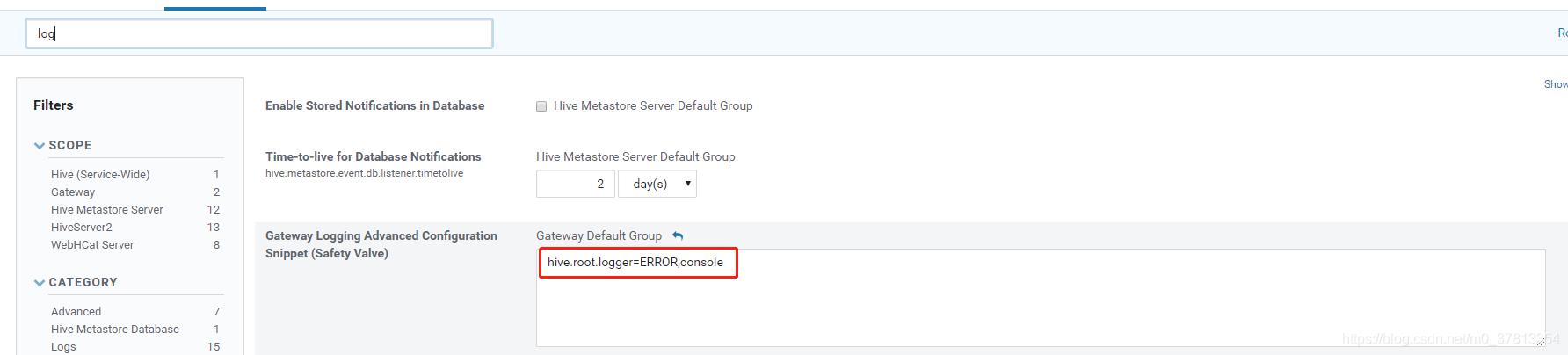

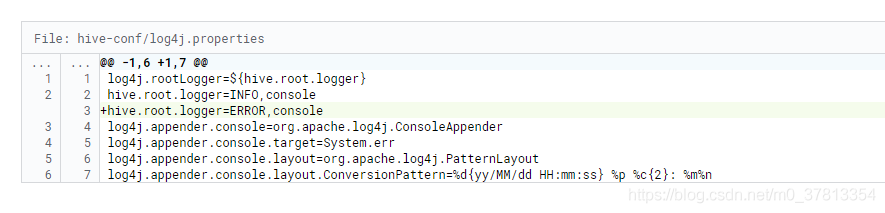

So set the Hive log level to ERROR in the CDH console

and restart Hive for the change to take effect.

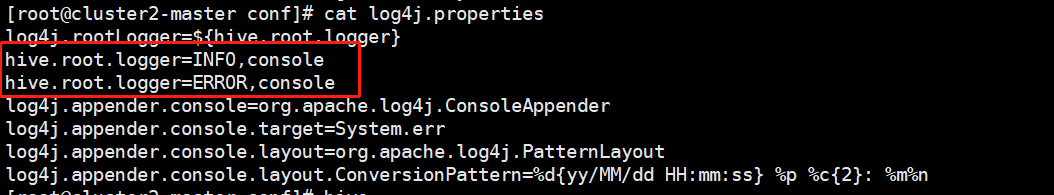

Check the resulting configuration.

Check the Hive query log:

it is much cleaner.

Alternatively, simply delete these two jars:

slf4j-api-1.7.10.jar

slf4j-log4j12-1.7.10.jar

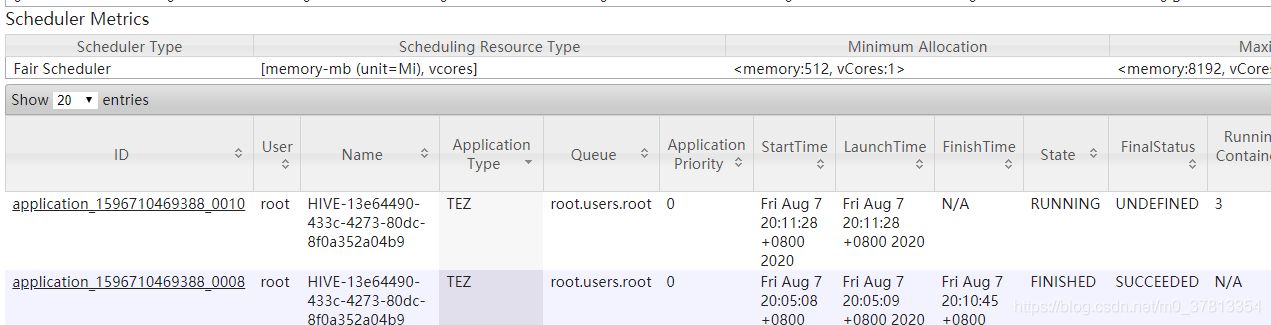

这个时候yarn上也显示 ApplicationType 是 Tez

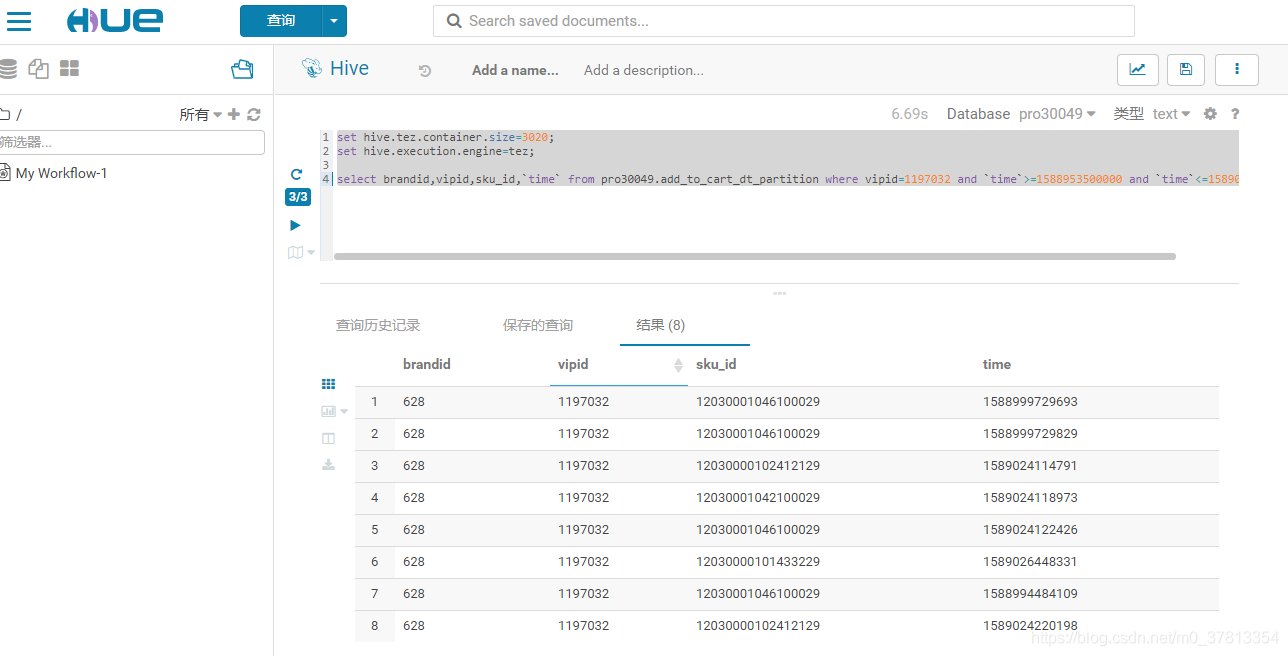

6.5 hiveserver2也配置能启用tez

set hive.tez.container.size=3020;

set hive.execution.engine=tez;

select brandid,vipid,sku_id,time from pro30049.add_to_cart_dt_partition where vipid=1197032 and time>=1588953500000 and time<=1589039999000;

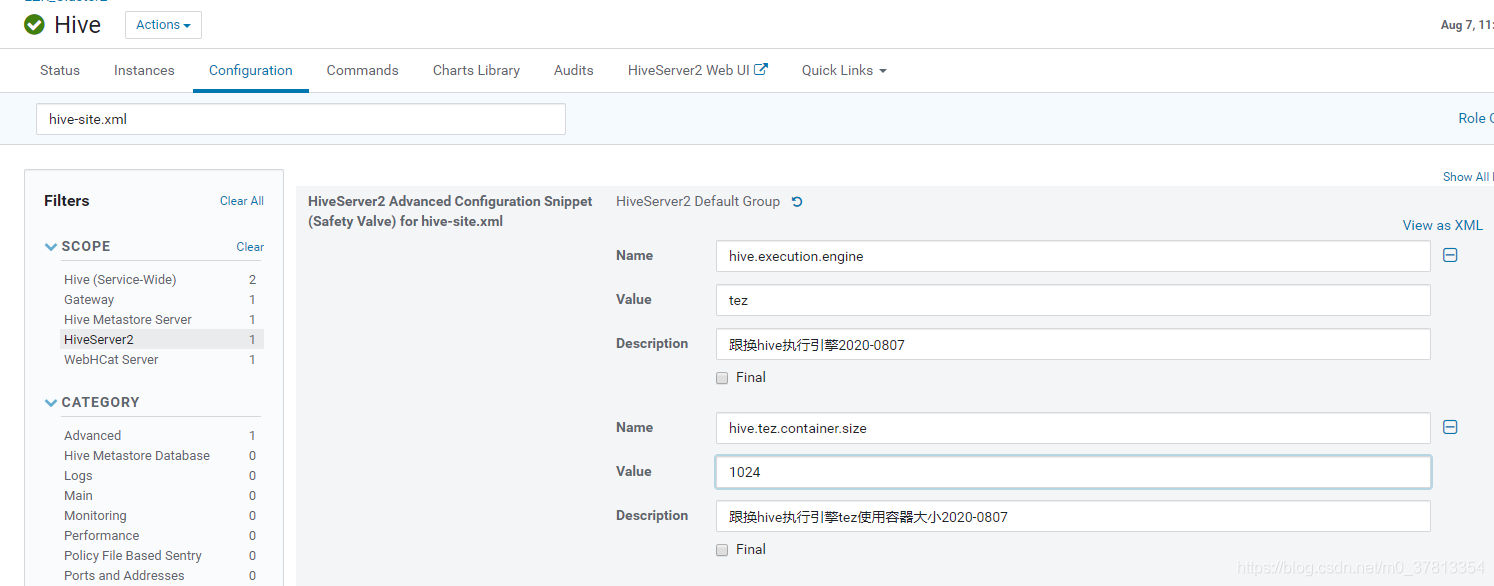

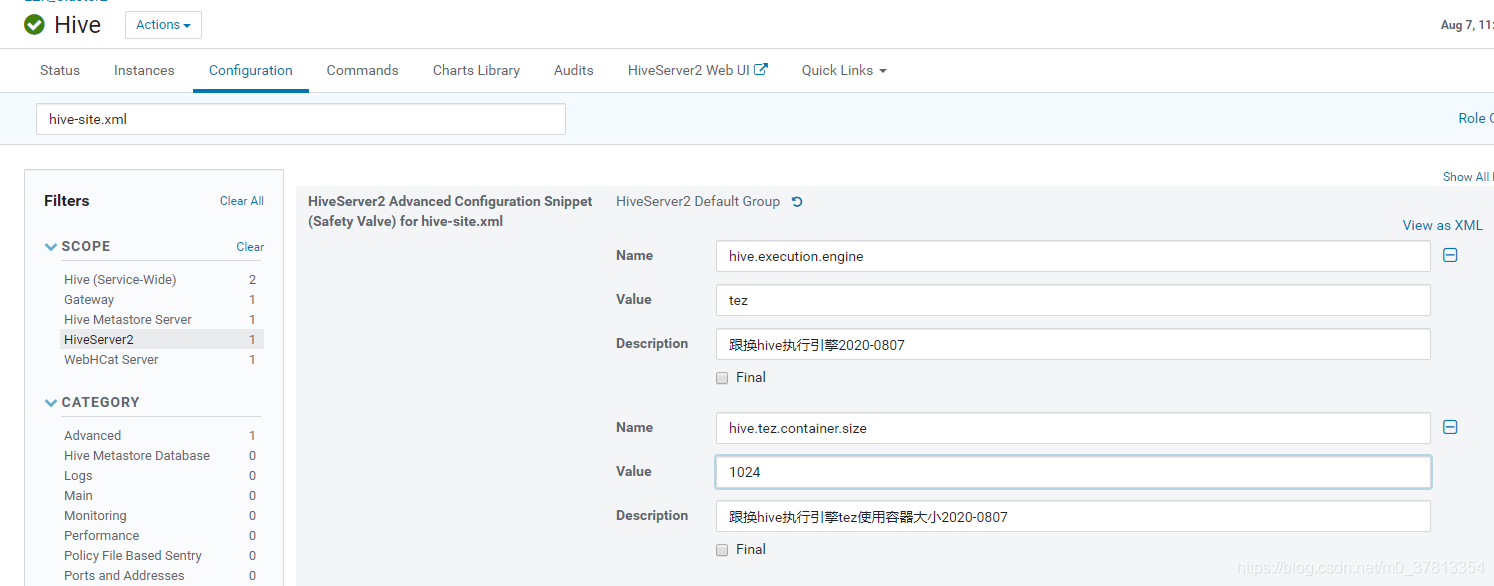

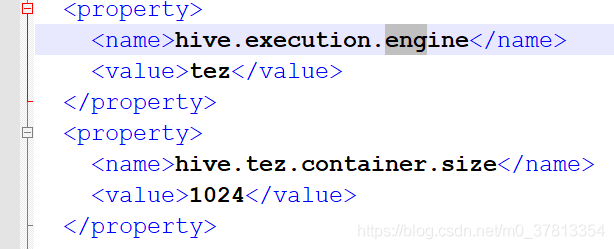

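6.6 Making Tez the default Hive execution engine

As shown above, using Tez requires these session-level settings:

set hive.tez.container.size=3020;

set hive.execution.engine=tez;

I ran into a problem here. Changing the configuration as in the screenshot above should have distributed it to hive-site.xml, but in my case it was not distributed.

In testing, the session-level settings were still required:

set hive.tez.container.size=3020;

set hive.execution.engine=tez;

I then edited hive-site.xml directly on one node, cluster2-slave2,

restarted the Hive service,

and tested again.

The session-level settings were no longer needed to enable the Tez engine.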
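The article does not show what was added to hive-site.xml. Assuming it was the same two settings used throughout section 6, the entries would take the usual Hadoop property form; the sketch below prints them, and `print_property` is a hypothetical helper.

```shell
#!/bin/sh
# print_property NAME VALUE
# Prints one Hadoop-style <property> element for a *-site.xml file.
# (Hypothetical helper for illustration only.)
print_property() {
    printf '<property>\n  <name>%s</name>\n  <value>%s</value>\n</property>\n' "$1" "$2"
}

# Possible use: generate the two entries, then paste them inside the
# <configuration> element of hive-site.xml.
#   print_property hive.execution.engine tez
#   print_property hive.tez.container.size 3020
```

A file edited by hand on one node may be overwritten the next time the cluster manager redeploys the client configuration, so the value is worth setting in the console as well once the distribution problem is resolved.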
