I'm 淡淡世界, a blogger on 靠谱客. This post shows how to train a 34-layer ResNet (ResNet-34) on the MNIST dataset. I'm sharing it in the hope that it serves as a useful reference.

Applying ResNet-34 to the MNIST dataset

The code is split into three parts:

1. Main code: `main`
2. Helper code 1: `_internally_replaced_utils`
3. Helper code 2: `utils`

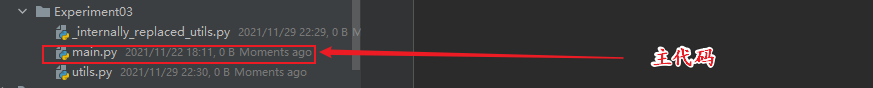

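Put the three files in the same folder, as shown in the figure. The main script imports from `_internally_replaced_utils.py` and `utils.py`, so both must sit next to it.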

Code:

1. Main code: `main`

```python
from typing import Type, Any, Callable, Union, List, Optional
import os

import torch
import torch.nn as nn
from torch import Tensor

from _internally_replaced_utils import load_state_dict_from_url
from utils import _log_api_usage_once

# Work around "OMP: Error #15 ... already initialized", which can occur when several
# OpenMP runtimes get loaded in one process (common with conda on Windows)
os.environ['KMP_DUPLICATE_LIB_OK'] = 'TRUE'

# Only the names actually defined in this file are exported
__all__ = [
    "ResNet",
    "resnet34",
]

model_urls = {
    "resnet18": "https://download.pytorch.org/models/resnet18-f37072fd.pth",
    "resnet34": "https://download.pytorch.org/models/resnet34-b627a593.pth",
    "resnet50": "https://download.pytorch.org/models/resnet50-0676ba61.pth",
    "resnet101": "https://download.pytorch.org/models/resnet101-63fe2227.pth",
    "resnet152": "https://download.pytorch.org/models/resnet152-394f9c45.pth",
    "resnext50_32x4d": "https://download.pytorch.org/models/resnext50_32x4d-7cdf4587.pth",
    "resnext101_32x8d": "https://download.pytorch.org/models/resnext101_32x8d-8ba56ff5.pth",
    "wide_resnet50_2": "https://download.pytorch.org/models/wide_resnet50_2-95faca4d.pth",
    "wide_resnet101_2": "https://download.pytorch.org/models/wide_resnet101_2-32ee1156.pth",
}
```

```python
def conv3x3(in_planes: int, out_planes: int, stride: int = 1, groups: int = 1, dilation: int = 1) -> nn.Conv2d:
    """3x3 convolution with padding"""
    return nn.Conv2d(
        in_planes,
        out_planes,
        kernel_size=3,
        stride=stride,
        padding=dilation,
        groups=groups,
        bias=False,
        dilation=dilation,
    )


def conv1x1(in_planes: int, out_planes: int, stride: int = 1) -> nn.Conv2d:
    """1x1 convolution"""
    return nn.Conv2d(in_planes, out_planes, kernel_size=1, stride=stride, bias=False)


class BasicBlock(nn.Module):
    expansion: int = 1

    def __init__(
        self,
        inplanes: int,
        planes: int,
        stride: int = 1,
        downsample: Optional[nn.Module] = None,
        groups: int = 1,
        base_width: int = 64,
        dilation: int = 1,
        norm_layer: Optional[Callable[..., nn.Module]] = None,
    ) -> None:
        super().__init__()
        if norm_layer is None:
            norm_layer = nn.BatchNorm2d
        if groups != 1 or base_width != 64:
            raise ValueError("BasicBlock only supports groups=1 and base_width=64")
        if dilation > 1:
            raise NotImplementedError("Dilation > 1 not supported in BasicBlock")
        # Both self.conv1 and self.downsample layers downsample the input when stride != 1
        self.conv1 = conv3x3(inplanes, planes, stride)
        self.bn1 = norm_layer(planes)
        self.relu = nn.ReLU(inplace=True)
        self.conv2 = conv3x3(planes, planes)
        self.bn2 = norm_layer(planes)
        self.downsample = downsample
        self.stride = stride

    def forward(self, x: Tensor) -> Tensor:
        identity = x

        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu(out)

        out = self.conv2(out)
        out = self.bn2(out)

        if self.downsample is not None:
            identity = self.downsample(x)

        out += identity
        out = self.relu(out)

        return out


class Bottleneck(nn.Module):
    expansion: int = 4

    def __init__(
        self,
        inplanes: int,
        planes: int,
        stride: int = 1,
        downsample: Optional[nn.Module] = None,
        groups: int = 1,
        base_width: int = 64,
        dilation: int = 1,
        norm_layer: Optional[Callable[..., nn.Module]] = None,
    ) -> None:
        super().__init__()
        if norm_layer is None:
            norm_layer = nn.BatchNorm2d
        width = int(planes * (base_width / 64.0)) * groups
        # Both self.conv2 and self.downsample layers downsample the input when stride != 1
        self.conv1 = conv1x1(inplanes, width)
        self.bn1 = norm_layer(width)
        self.conv2 = conv3x3(width, width, stride, groups, dilation)
        self.bn2 = norm_layer(width)
        self.conv3 = conv1x1(width, planes * self.expansion)
        self.bn3 = norm_layer(planes * self.expansion)
        self.relu = nn.ReLU(inplace=True)
        self.downsample = downsample
        self.stride = stride

    def forward(self, x: Tensor) -> Tensor:
        identity = x

        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu(out)

        out = self.conv2(out)
        out = self.bn2(out)
        out = self.relu(out)

        out = self.conv3(out)
        out = self.bn3(out)

        if self.downsample is not None:
            identity = self.downsample(x)

        out += identity
        out = self.relu(out)

        return out
```
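The helper `conv3x3` sets `padding=dilation`. The output-size formula documented for `nn.Conv2d` shows why: with a 3x3 kernel and `padding=dilation`, a stride-1 convolution keeps the spatial size for any dilation. With stride 2, the 3x3 convolution on the main path and the 1x1 `downsample` convolution produce the same size, so the two branches can be added in `forward`. A minimal pure-Python sketch of that formula (`conv2d_out_size` is a hypothetical helper, not part of PyTorch):

```python
def conv2d_out_size(size: int, kernel: int, stride: int = 1, padding: int = 0, dilation: int = 1) -> int:
    """Output length of nn.Conv2d along one spatial axis (formula from the PyTorch docs)."""
    return (size + 2 * padding - dilation * (kernel - 1) - 1) // stride + 1


# conv3x3 uses padding=dilation, so a stride-1 3x3 conv preserves the size for any dilation
for d in (1, 2, 4):
    assert conv2d_out_size(56, kernel=3, stride=1, padding=d, dilation=d) == 56

# stride 2: the 3x3 main-path conv and the 1x1 downsample conv agree on the output size
print(conv2d_out_size(56, kernel=3, stride=2, padding=1))  # 28
print(conv2d_out_size(56, kernel=1, stride=2))             # 28
print(conv2d_out_size(7, kernel=3, stride=2, padding=1))   # 4
print(conv2d_out_size(7, kernel=1, stride=2))              # 4
```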

```python
class ResNet(nn.Module):
    def __init__(
        self,
        block: Type[Union[BasicBlock, Bottleneck]],
        layers: List[int],
        num_classes: int = 10,  # 10 digit classes for MNIST (torchvision's default is 1000)
        zero_init_residual: bool = False,
        groups: int = 1,
        width_per_group: int = 64,
        replace_stride_with_dilation: Optional[List[bool]] = None,
        norm_layer: Optional[Callable[..., nn.Module]] = None,
    ) -> None:
        super().__init__()
        _log_api_usage_once(self)
        if norm_layer is None:
            norm_layer = nn.BatchNorm2d
        self._norm_layer = norm_layer

        self.inplanes = 64
        self.dilation = 1
        if replace_stride_with_dilation is None:
            # each element in the tuple indicates if we should replace
            # the 2x2 stride with a dilated convolution instead
            replace_stride_with_dilation = [False, False, False]
        if len(replace_stride_with_dilation) != 3:
            raise ValueError(
                "replace_stride_with_dilation should be None "
                f"or a 3-element tuple, got {replace_stride_with_dilation}"
            )
        self.groups = groups
        self.base_width = width_per_group
        # 3 input channels: the MNIST transform replicates the gray channel three times
        self.conv1 = nn.Conv2d(3, self.inplanes, kernel_size=7, stride=2, padding=3, bias=False)
        self.bn1 = norm_layer(self.inplanes)
        self.relu = nn.ReLU(inplace=True)
        self.maxpool = nn.MaxPool2d(kernel_size=3, stride=2, padding=1)
        self.layer1 = self._make_layer(block, 64, layers[0])
        self.layer2 = self._make_layer(block, 128, layers[1], stride=2, dilate=replace_stride_with_dilation[0])
        self.layer3 = self._make_layer(block, 256, layers[2], stride=2, dilate=replace_stride_with_dilation[1])
        self.layer4 = self._make_layer(block, 512, layers[3], stride=2, dilate=replace_stride_with_dilation[2])
        self.avgpool = nn.AdaptiveAvgPool2d((1, 1))
        self.fc = nn.Linear(512 * block.expansion, num_classes)

        for m in self.modules():
            if isinstance(m, nn.Conv2d):
                nn.init.kaiming_normal_(m.weight, mode="fan_out", nonlinearity="relu")
            elif isinstance(m, (nn.BatchNorm2d, nn.GroupNorm)):
                nn.init.constant_(m.weight, 1)
                nn.init.constant_(m.bias, 0)

        # Optionally zero-initialize the last BN in each residual branch,
        # so that each residual block starts out behaving like an identity
        if zero_init_residual:
            for m in self.modules():
                if isinstance(m, Bottleneck):
                    nn.init.constant_(m.bn3.weight, 0)  # type: ignore[arg-type]
                elif isinstance(m, BasicBlock):
                    nn.init.constant_(m.bn2.weight, 0)  # type: ignore[arg-type]

    def _make_layer(
        self,
        block: Type[Union[BasicBlock, Bottleneck]],
        planes: int,
        blocks: int,
        stride: int = 1,
        dilate: bool = False,
    ) -> nn.Sequential:
        norm_layer = self._norm_layer
        downsample = None
        previous_dilation = self.dilation
        if dilate:
            self.dilation *= stride
            stride = 1
        # A 1x1 projection is needed when the shortcut's shape differs from the block output
        if stride != 1 or self.inplanes != planes * block.expansion:
            downsample = nn.Sequential(
                conv1x1(self.inplanes, planes * block.expansion, stride),
                norm_layer(planes * block.expansion),
            )

        layers = []
        layers.append(
            block(
                self.inplanes, planes, stride, downsample, self.groups, self.base_width, previous_dilation, norm_layer
            )
        )
        self.inplanes = planes * block.expansion
        for _ in range(1, blocks):
            layers.append(
                block(
                    self.inplanes,
                    planes,
                    groups=self.groups,
                    base_width=self.base_width,
                    dilation=self.dilation,
                    norm_layer=norm_layer,
                )
            )

        return nn.Sequential(*layers)

    def _forward_impl(self, x: Tensor) -> Tensor:
        # See note [TorchScript super()]
        x = self.conv1(x)
        x = self.bn1(x)
        x = self.relu(x)
        x = self.maxpool(x)

        x = self.layer1(x)
        x = self.layer2(x)
        x = self.layer3(x)
        x = self.layer4(x)

        x = self.avgpool(x)
        x = torch.flatten(x, 1)
        x = self.fc(x)

        return x

    def forward(self, x: Tensor) -> Tensor:
        return self._forward_impl(x)
```
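`_forward_impl` halves the spatial size five times: the stem convolution, the max pooling, and the first block of each of `layer2` to `layer4`. The sketch below traces the feature-map size through those stages. `resnet_feature_sizes` is a hypothetical helper that reuses the `nn.Conv2d` output formula (`nn.MaxPool2d` with its default `ceil_mode=False` follows the same formula). A 224x224 input reaches `avgpool` as a 7x7 map, while an unresized 28x28 MNIST digit would shrink to 1x1. Because `avgpool` is `nn.AdaptiveAvgPool2d((1, 1))`, both sizes would run. Resizing to 224 keeps the feature maps at the scale the architecture was designed for, at a much higher compute cost.

```python
def conv_out(size: int, kernel: int, stride: int = 1, padding: int = 0, dilation: int = 1) -> int:
    # nn.Conv2d / nn.MaxPool2d (ceil_mode=False) output length along one axis
    return (size + 2 * padding - dilation * (kernel - 1) - 1) // stride + 1


def resnet_feature_sizes(size: int) -> dict:
    """Spatial size after each stage of ResNet._forward_impl (no dilation)."""
    sizes = {"input": size}
    size = conv_out(size, kernel=7, stride=2, padding=3)      # self.conv1
    sizes["conv1"] = size
    size = conv_out(size, kernel=3, stride=2, padding=1)      # self.maxpool
    sizes["maxpool"] = size
    sizes["layer1"] = size                                    # layer1 keeps stride 1
    for name in ("layer2", "layer3", "layer4"):
        size = conv_out(size, kernel=3, stride=2, padding=1)  # first block uses stride 2
        sizes[name] = size
    return sizes


print(resnet_feature_sizes(224))
# {'input': 224, 'conv1': 112, 'maxpool': 56, 'layer1': 56, 'layer2': 28, 'layer3': 14, 'layer4': 7}
print(resnet_feature_sizes(28)["layer4"])  # 1
```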

```python
def _resnet(
    arch: str,
    block: Type[Union[BasicBlock, Bottleneck]],
    layers: List[int],
    pretrained: bool,
    progress: bool,
    **kwargs: Any,
) -> ResNet:
    model = ResNet(block, layers, **kwargs)
    if pretrained:
        # The ImageNet checkpoints have a 1000-way fc layer, so loading them into a
        # model with num_classes=10 fails with a size-mismatch error.
        state_dict = load_state_dict_from_url(model_urls[arch], progress=progress)
        model.load_state_dict(state_dict)
    return model


def resnet34(pretrained: bool = False, progress: bool = True, **kwargs: Any) -> ResNet:
    """ResNet-34: four stages of BasicBlocks with 3, 4, 6 and 3 blocks."""
    return _resnet("resnet34", BasicBlock, [3, 4, 6, 3], pretrained, progress, **kwargs)
```
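Since `resnet34` stacks `BasicBlock`s in the layout `[3, 4, 6, 3]`, its trainable parameters can be counted by hand. The sketch below (helper names are hypothetical) follows `_make_layer`'s rule for adding a `downsample` branch. It counts convolution weights (every convolution here uses `bias=False`), BatchNorm weights and biases (running statistics are buffers, not parameters), and the final `fc` layer. With `num_classes=10` it gives 21,289,802 parameters, and with the 1000 ImageNet classes it gives 21,797,672. The result can be compared with `sum(p.numel() for p in model.parameters())` on the model built in the training script.

```python
def conv_params(cin: int, cout: int, k: int) -> int:
    return cin * cout * k * k          # bias=False in every conv of this model


def bn_params(c: int) -> int:
    return 2 * c                       # BatchNorm weight + bias


def resnet34_param_count(num_classes: int = 10) -> int:
    total = conv_params(3, 64, 7) + bn_params(64)          # conv1 + bn1
    inplanes = 64
    for planes, blocks, stride in ((64, 3, 1), (128, 4, 2), (256, 6, 2), (512, 3, 2)):
        for b in range(blocks):
            s = stride if b == 0 else 1
            total += conv_params(inplanes, planes, 3) + bn_params(planes)   # conv1/bn1 of the block
            total += conv_params(planes, planes, 3) + bn_params(planes)     # conv2/bn2 of the block
            if s != 1 or inplanes != planes:               # _make_layer's rule (expansion = 1)
                total += conv_params(inplanes, planes, 1) + bn_params(planes)
            inplanes = planes
    total += 512 * num_classes + num_classes                # fc weight + bias
    return total


print(resnet34_param_count(10))    # 21289802
print(resnet34_param_count(1000))  # 21797672
```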

```python
import torch
import torchvision
import torchvision.transforms as transforms
import matplotlib.pyplot as plt
import torch.nn as nn
import numpy as np

epochs = 3
batch_size = 64
lr = 0.01
momentum = 0.5
log_interval = 10

# dataset init
# ResNet was designed for 3-channel 224x224 ImageNet images, while MNIST digits are
# 1-channel 28x28, so each digit is resized and its gray channel is copied 3 times.
transform = transforms.Compose([
    transforms.Resize(224),                       # 28x28 -> 224x224
    transforms.Grayscale(num_output_channels=3),  # 1 channel -> 3 identical channels
    transforms.ToTensor(),                        # PIL image -> float tensor in [0, 1]
    transforms.Normalize(mean=(0.5, 0.5, 0.5), std=(0.5, 0.5, 0.5)),
])

train_dataset = torchvision.datasets.MNIST('./data/', train=True, download=True, transform=transform)
test_dataset = torchvision.datasets.MNIST('./data/', train=False, download=True, transform=transform)

train_loader = torch.utils.data.DataLoader(train_dataset, batch_size=batch_size, shuffle=True)
test_loader = torch.utils.data.DataLoader(test_dataset, batch_size=batch_size, shuffle=False)
```
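Two pieces of arithmetic sit behind this data pipeline. First, `transforms.Normalize` computes `(x - mean) / std` per channel, so with `mean = std = 0.5` the `[0, 1]` output of `ToTensor()` is mapped to `[-1, 1]`. Second, `len(DataLoader)` is the number of batches, rounded up because `drop_last` defaults to `False`; for MNIST's 60,000 training and 10,000 test images this is the `len(train_loader)` printed in the training log. A plain-Python sketch with hypothetical helper names:

```python
import math


def normalize(x: float, mean: float = 0.5, std: float = 0.5) -> float:
    # what transforms.Normalize does to each value of a channel
    return (x - mean) / std


def num_batches(n_samples: int, batch_size: int, drop_last: bool = False) -> int:
    # len(DataLoader) for a map-style dataset with the default batch sampler
    return n_samples // batch_size if drop_last else math.ceil(n_samples / batch_size)


print(normalize(0.0), normalize(1.0))  # -1.0 1.0
print(num_batches(60000, 64))          # 938 training batches per epoch
print(num_batches(10000, 64))          # 157 test batches
```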

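```python
# data visualization: show the first training batch as an 8x8 grid
sample, label = next(iter(train_loader))
print(sample.shape)  # torch.Size([64, 3, 224, 224])
print(label)

fig, ax = plt.subplots(nrows=8, ncols=8, sharex=True, sharey=True)
ax = ax.flatten()
for i in range(min(len(ax), len(sample))):
    ax[i].imshow(sample[i, 0], cmap='gray')  # the three channels are identical
    ax[i].set_title(int(label[i]), fontsize=8)
    ax[i].axis('off')
plt.tight_layout()
plt.show()
```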

```python
# model init
# pretrained=False: train from scratch. The ImageNet weights cannot be loaded as-is
# because this model's fc layer has 10 outputs instead of 1000.
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
model = resnet34(pretrained=False).to(device)
CELoss = nn.CrossEntropyLoss()
optimizer = torch.optim.SGD(model.parameters(), lr=lr, momentum=momentum)

# training
batch_done = 0
logs = []
model.train()
for i in range(epochs):
    for step, (data, label) in enumerate(train_loader, start=1):
        data, label = data.to(device), label.to(device)
        output = model(data)
        loss = CELoss(output, label)
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
        batch_done += 1
        if batch_done % log_interval == 0:
            logs.append([batch_done, loss.item()])
            print('Epoch {}: {}/{} loss:{}'.format(i, step, len(train_loader), loss.item()))

# loss curve visualization
logs = np.array(logs)
plt.plot(logs[:, 0], logs[:, 1])
plt.xlabel('batch')
plt.ylabel('loss')
plt.show()
```
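`nn.CrossEntropyLoss` applies log-softmax to the raw `fc` outputs and takes the negative log-probability of the correct class, averaged over the batch by default. The plain-Python sketch below (hypothetical helper names, one sample as a list of floats) reproduces that computation. It also gives a rough sanity check for the logged loss: if the untrained network's outputs are close to uniform over the 10 digits, the loss at the very start of training should be near ln(10), about 2.30.

```python
import math


def cross_entropy(logits, target):
    """-log softmax(logits)[target], computed with the log-sum-exp trick."""
    m = max(logits)                                   # subtract the max for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]


def mean_cross_entropy(batch_logits, targets):
    # reduction='mean' (the default) averages the per-sample losses
    return sum(cross_entropy(l, t) for l, t in zip(batch_logits, targets)) / len(targets)


print(round(cross_entropy([0.0] * 10, 3), 4))  # 2.3026
```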

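```python
# evaluation
model.eval()
device = next(model.parameters()).device  # evaluate on whichever device the model is on
correct = 0
with torch.no_grad():  # gradients are not needed at test time
    for data, label in test_loader:
        data, label = data.to(device), label.to(device)
        output = model(data)
        _, pred = torch.max(output, dim=1)
        correct += float(torch.sum(pred == label))
print('test_acc:{}'.format(correct / len(test_dataset)))
```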
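The evaluation loop counts a prediction as correct when the index of the largest output (`torch.max(output, dim=1)`) equals the label, and it divides by the size of the whole test set rather than by the number of batches. A plain-Python sketch of the same metric, with a hypothetical `accuracy` helper:

```python
def accuracy(batch_logits, labels):
    """Fraction of samples whose highest-scoring class equals the label."""
    correct = 0
    for logits, label in zip(batch_logits, labels):
        pred = max(range(len(logits)), key=lambda k: logits[k])  # argmax over classes
        correct += int(pred == label)
    return correct / len(labels)


outputs = [[0.1, 2.0, -1.0], [3.0, 0.0, 0.5]]
print(accuracy(outputs, [1, 2]))  # 0.5
```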

2. Helper code 1: `_internally_replaced_utils`

```python
import importlib.machinery
import os

from torch.hub import _get_torch_home


_HOME = os.path.join(_get_torch_home(), "datasets", "vision")
_USE_SHARDED_DATASETS = False


def _download_file_from_remote_location(fpath: str, url: str) -> None:
    pass


def _is_remote_location_available() -> bool:
    return False


try:
    from torch.hub import load_state_dict_from_url  # noqa: 401
except ImportError:
    from torch.utils.model_zoo import load_url as load_state_dict_from_url  # noqa: 401
```
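`load_state_dict_from_url` (imported from `torch.hub`, with `torch.utils.model_zoo.load_url` as a fallback for older PyTorch versions) downloads a checkpoint once and reuses the cached file afterwards. The sketch below shows where that file is expected to land and which hash prefix its name carries. It assumes the default `torch.hub` layout of recent PyTorch releases (`$TORCH_HOME/hub/checkpoints`, with `TORCH_HOME` falling back to `$XDG_CACHE_HOME/torch` or `~/.cache/torch`) and the filename convention `name-<hex>.pth`; `checkpoint_location` is a hypothetical helper, not a PyTorch function. With `check_hash=True`, `torch.hub` compares that prefix against the SHA-256 digest of the downloaded file.

```python
import os
import re
from urllib.parse import urlparse

# Assumed to match the pattern torch.hub uses to read the hash prefix from a filename
HASH_REGEX = re.compile(r"-([a-f0-9]*)\.")


def checkpoint_location(url: str):
    """Return (expected cache path, hash prefix) for a checkpoint URL under torch.hub's default layout."""
    torch_home = os.path.expanduser(
        os.getenv("TORCH_HOME", os.path.join(os.getenv("XDG_CACHE_HOME", "~/.cache"), "torch"))
    )
    filename = os.path.basename(urlparse(url).path)
    match = HASH_REGEX.search(filename)
    prefix = match.group(1) if match else None
    return os.path.join(torch_home, "hub", "checkpoints", filename), prefix


path, prefix = checkpoint_location("https://download.pytorch.org/models/resnet34-b627a593.pth")
print(prefix)  # b627a593
print(path)    # location depends on TORCH_HOME / XDG_CACHE_HOME
```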

```python
def _get_extension_path(lib_name):

    lib_dir = os.path.dirname(__file__)
    if os.name == "nt":
        # Register the main torchvision library location on the default DLL path
        import ctypes
        import sys

        kernel32 = ctypes.WinDLL("kernel32.dll", use_last_error=True)
        with_load_library_flags = hasattr(kernel32, "AddDllDirectory")
        prev_error_mode = kernel32.SetErrorMode(0x0001)

        if with_load_library_flags:
            kernel32.AddDllDirectory.restype = ctypes.c_void_p

        if sys.version_info >= (3, 8):
            os.add_dll_directory(lib_dir)
        elif with_load_library_flags:
            res = kernel32.AddDllDirectory(lib_dir)
            if res is None:
                err = ctypes.WinError(ctypes.get_last_error())
                err.strerror += f' Error adding "{lib_dir}" to the DLL directories.'
                raise err

        kernel32.SetErrorMode(prev_error_mode)

    loader_details = (importlib.machinery.ExtensionFileLoader, importlib.machinery.EXTENSION_SUFFIXES)

    extfinder = importlib.machinery.FileFinder(lib_dir, loader_details)
    ext_specs = extfinder.find_spec(lib_name)
    if ext_specs is None:
        raise ImportError

    return ext_specs.origin
```

3. Helper code 2: `utils`

```python
import math
import pathlib
import warnings
from typing import Union, Optional, List, Tuple, BinaryIO, no_type_check

import numpy as np
import torch
from PIL import Image, ImageDraw, ImageFont, ImageColor

__all__ = ["make_grid", "save_image", "draw_bounding_boxes", "draw_segmentation_masks", "draw_keypoints"]


@torch.no_grad()
def make_grid(
    tensor: Union[torch.Tensor, List[torch.Tensor]],
    nrow: int = 8,
    padding: int = 2,
    normalize: bool = False,
    value_range: Optional[Tuple[int, int]] = None,
    scale_each: bool = False,
    pad_value: int = 0,
    **kwargs,
) -> torch.Tensor:
    """
    Make a grid of images.

    Args:
        tensor (Tensor or list): 4D mini-batch Tensor of shape (B x C x H x W)
            or a list of images all of the same size.
        nrow (int, optional): Number of images displayed in each row of the grid.
            The final grid size is ``(B / nrow, nrow)``. Default: ``8``.
        padding (int, optional): amount of padding. Default: ``2``.
        normalize (bool, optional): If True, shift the image to the range (0, 1),
            by the min and max values specified by ``value_range``. Default: ``False``.
        value_range (tuple, optional): tuple (min, max) where min and max are numbers,
            then these numbers are used to normalize the image. By default, min and max
            are computed from the tensor.
        scale_each (bool, optional): If ``True``, scale each image in the batch of
            images separately rather than the (min, max) over all images. Default: ``False``.
        pad_value (float, optional): Value for the padded pixels. Default: ``0``.

    Returns:
        grid (Tensor): the tensor containing grid of images.
    """
    if not (torch.is_tensor(tensor) or (isinstance(tensor, list) and all(torch.is_tensor(t) for t in tensor))):
        raise TypeError(f"tensor or list of tensors expected, got {type(tensor)}")

    if "range" in kwargs.keys():
        warning = "range will be deprecated, please use value_range instead."
        warnings.warn(warning)
        value_range = kwargs["range"]

    # if list of tensors, convert to a 4D mini-batch Tensor
    if isinstance(tensor, list):
        tensor = torch.stack(tensor, dim=0)

    if tensor.dim() == 2:  # single image H x W
        tensor = tensor.unsqueeze(0)
    if tensor.dim() == 3:  # single image
        if tensor.size(0) == 1:  # if single-channel, convert to 3-channel
            tensor = torch.cat((tensor, tensor, tensor), 0)
        tensor = tensor.unsqueeze(0)

    if tensor.dim() == 4 and tensor.size(1) == 1:  # single-channel images
        tensor = torch.cat((tensor, tensor, tensor), 1)

    if normalize is True:
        tensor = tensor.clone()  # avoid modifying tensor in-place
        if value_range is not None:
            assert isinstance(
                value_range, tuple
            ), "value_range has to be a tuple (min, max) if specified. min and max are numbers"

        def norm_ip(img, low, high):
            img.clamp_(min=low, max=high)
            img.sub_(low).div_(max(high - low, 1e-5))

        def norm_range(t, value_range):
            if value_range is not None:
                norm_ip(t, value_range[0], value_range[1])
            else:
                norm_ip(t, float(t.min()), float(t.max()))

        if scale_each is True:
            for t in tensor:  # loop over mini-batch dimension
                norm_range(t, value_range)
        else:
            norm_range(tensor, value_range)

    if tensor.size(0) == 1:
        return tensor.squeeze(0)

    # make the mini-batch of images into a grid
    nmaps = tensor.size(0)
    xmaps = min(nrow, nmaps)
    ymaps = int(math.ceil(float(nmaps) / xmaps))
    height, width = int(tensor.size(2) + padding), int(tensor.size(3) + padding)
    num_channels = tensor.size(1)
    grid = tensor.new_full((num_channels, height * ymaps + padding, width * xmaps + padding), pad_value)
    k = 0
    for y in range(ymaps):
        for x in range(xmaps):
            if k >= nmaps:
                break
            # Tensor.copy_() is a valid method but seems to be missing from the stubs
            # https://pytorch.org/docs/stable/tensors.html#torch.Tensor.copy_
            grid.narrow(1, y * height + padding, height - padding).narrow(  # type: ignore[attr-defined]
                2, x * width + padding, width - padding
            ).copy_(tensor[k])
            k = k + 1
    return grid
```
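`make_grid` turns single-channel input into 3 channels, pads every image by `padding` pixels, and lays the batch out `nrow` images per row. The plain-Python sketch below (hypothetical helper `make_grid_shape`) mirrors that arithmetic to predict the returned shape without building the tensor. For example, 64 MNIST digits at their original 1x28x28 size with the defaults `nrow=8, padding=2` give a 3x242x242 grid: each cell is 30 pixels, and one extra `padding` border closes the grid.

```python
import math


def make_grid_shape(n_images: int, channels: int, height: int, width: int, nrow: int = 8, padding: int = 2):
    """Shape of the tensor make_grid returns for a (n_images, channels, height, width) batch."""
    if channels == 1:
        channels = 3                       # single-channel images are replicated to 3 channels
    if n_images == 1:
        return (channels, height, width)   # a single image is returned without padding
    xmaps = min(nrow, n_images)
    ymaps = int(math.ceil(float(n_images) / xmaps))
    cell_h, cell_w = height + padding, width + padding
    return (channels, cell_h * ymaps + padding, cell_w * xmaps + padding)


print(make_grid_shape(64, 1, 28, 28))  # (3, 242, 242)
print(make_grid_shape(10, 3, 28, 28))  # (3, 62, 242)
```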

```python
@torch.no_grad()
def save_image(
    tensor: Union[torch.Tensor, List[torch.Tensor]],
    fp: Union[str, pathlib.Path, BinaryIO],
    format: Optional[str] = None,
    **kwargs,
) -> None:
    """
    Save a given Tensor into an image file.

    Args:
        tensor (Tensor or list): Image to be saved. If given a mini-batch tensor,
            saves the tensor as a grid of images by calling ``make_grid``.
        fp (string or file object): A filename or a file object
        format(Optional): If omitted, the format to use is determined from the filename extension.
            If a file object was used instead of a filename, this parameter should always be used.
        **kwargs: Other arguments are documented in ``make_grid``.
    """

    grid = make_grid(tensor, **kwargs)
    # Add 0.5 after unnormalizing to [0, 255] to round to nearest integer
    ndarr = grid.mul(255).add_(0.5).clamp_(0, 255).permute(1, 2, 0).to("cpu", torch.uint8).numpy()
    im = Image.fromarray(ndarr)
    im.save(fp, format=format)
```

```python
@torch.no_grad()
def draw_bounding_boxes(
    image: torch.Tensor,
    boxes: torch.Tensor,
    labels: Optional[List[str]] = None,
    colors: Optional[Union[List[Union[str, Tuple[int, int, int]]], str, Tuple[int, int, int]]] = None,
    fill: Optional[bool] = False,
    width: int = 1,
    font: Optional[str] = None,
    font_size: int = 10,
) -> torch.Tensor:

    """
    Draws bounding boxes on given image.
    The values of the input image should be uint8 between 0 and 255.
    If fill is True, Resulting Tensor should be saved as PNG image.

    Args:
        image (Tensor): Tensor of shape (C x H x W) and dtype uint8.
        boxes (Tensor): Tensor of size (N, 4) containing bounding boxes in (xmin, ymin, xmax, ymax) format. Note that
            the boxes are absolute coordinates with respect to the image. In other words: `0 <= xmin < xmax < W` and
            `0 <= ymin < ymax < H`.
        labels (List[str]): List containing the labels of bounding boxes.
        colors (color or list of colors, optional): List containing the colors
            of the boxes or single color for all boxes. The color can be represented as
            PIL strings e.g. "red" or "#FF00FF", or as RGB tuples e.g. ``(240, 10, 157)``.
        fill (bool): If `True` fills the bounding box with specified color.
        width (int): Width of bounding box.
        font (str): A filename containing a TrueType font. If the file is not found in this filename, the loader may
            also search in other directories, such as the `fonts/` directory on Windows or `/Library/Fonts/`,
            `/System/Library/Fonts/` and `~/Library/Fonts/` on macOS.
        font_size (int): The requested font size in points.

    Returns:
        img (Tensor[C, H, W]): Image Tensor of dtype uint8 with bounding boxes plotted.
    """

    if not isinstance(image, torch.Tensor):
        raise TypeError(f"Tensor expected, got {type(image)}")
    elif image.dtype != torch.uint8:
        raise ValueError(f"Tensor uint8 expected, got {image.dtype}")
    elif image.dim() != 3:
        raise ValueError("Pass individual images, not batches")
    elif image.size(0) not in {1, 3}:
        raise ValueError("Only grayscale and RGB images are supported")

    if image.size(0) == 1:
        image = torch.tile(image, (3, 1, 1))

    ndarr = image.permute(1, 2, 0).numpy()
    img_to_draw = Image.fromarray(ndarr)

    img_boxes = boxes.to(torch.int64).tolist()

    if fill:
        draw = ImageDraw.Draw(img_to_draw, "RGBA")
    else:
        draw = ImageDraw.Draw(img_to_draw)

    txt_font = ImageFont.load_default() if font is None else ImageFont.truetype(font=font, size=font_size)

    for i, bbox in enumerate(img_boxes):
        if colors is None:
            color = None
        elif isinstance(colors, list):
            color = colors[i]
        else:
            color = colors

        if fill:
            if color is None:
                fill_color = (255, 255, 255, 100)
            elif isinstance(color, str):
                # This will automatically raise Error if rgb cannot be parsed.
                fill_color = ImageColor.getrgb(color) + (100,)
            elif isinstance(color, tuple):
                fill_color = color + (100,)
            draw.rectangle(bbox, width=width, outline=color, fill=fill_color)
        else:
            draw.rectangle(bbox, width=width, outline=color)

        if labels is not None:
            margin = width + 1
            draw.text((bbox[0] + margin, bbox[1] + margin), labels[i], fill=color, font=txt_font)

    return torch.from_numpy(np.array(img_to_draw)).permute(2, 0, 1).to(dtype=torch.uint8)
```

```python
@torch.no_grad()
def draw_segmentation_masks(
    image: torch.Tensor,
    masks: torch.Tensor,
    alpha: float = 0.8,
    colors: Optional[Union[List[Union[str, Tuple[int, int, int]]], str, Tuple[int, int, int]]] = None,
) -> torch.Tensor:

    """
    Draws segmentation masks on given RGB image.
    The values of the input image should be uint8 between 0 and 255.

    Args:
        image (Tensor): Tensor of shape (3, H, W) and dtype uint8.
        masks (Tensor): Tensor of shape (num_masks, H, W) or (H, W) and dtype bool.
        alpha (float): Float number between 0 and 1 denoting the transparency of the masks.
            0 means full transparency, 1 means no transparency.
        colors (color or list of colors, optional): List containing the colors
            of the masks or single color for all masks. The color can be represented as
            PIL strings e.g. "red" or "#FF00FF", or as RGB tuples e.g. ``(240, 10, 157)``.
            By default, random colors are generated for each mask.

    Returns:
        img (Tensor[C, H, W]): Image Tensor, with segmentation masks drawn on top.
    """

    if not isinstance(image, torch.Tensor):
        raise TypeError(f"The image must be a tensor, got {type(image)}")
    elif image.dtype != torch.uint8:
        raise ValueError(f"The image dtype must be uint8, got {image.dtype}")
    elif image.dim() != 3:
        raise ValueError("Pass individual images, not batches")
    elif image.size()[0] != 3:
        raise ValueError("Pass an RGB image. Other Image formats are not supported")
    if masks.ndim == 2:
        masks = masks[None, :, :]
    if masks.ndim != 3:
        raise ValueError("masks must be of shape (H, W) or (batch_size, H, W)")
    if masks.dtype != torch.bool:
        raise ValueError(f"The masks must be of dtype bool. Got {masks.dtype}")
    if masks.shape[-2:] != image.shape[-2:]:
        raise ValueError("The image and the masks must have the same height and width")

    num_masks = masks.size()[0]
    if colors is not None and num_masks > len(colors):
        raise ValueError(f"There are more masks ({num_masks}) than colors ({len(colors)})")

    if colors is None:
        colors = _generate_color_palette(num_masks)

    if not isinstance(colors, list):
        colors = [colors]
    if not isinstance(colors[0], (tuple, str)):
        raise ValueError("colors must be a tuple or a string, or a list thereof")
    if isinstance(colors[0], tuple) and len(colors[0]) != 3:
        raise ValueError("It seems that you passed a tuple of colors instead of a list of colors")

    out_dtype = torch.uint8

    colors_ = []
    for color in colors:
        if isinstance(color, str):
            color = ImageColor.getrgb(color)
        colors_.append(torch.tensor(color, dtype=out_dtype))

    img_to_draw = image.detach().clone()
    # TODO: There might be a way to vectorize this
    for mask, color in zip(masks, colors_):
        img_to_draw[:, mask] = color[:, None]

    out = image * (1 - alpha) + img_to_draw * alpha
    return out.to(out_dtype)
```
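`draw_segmentation_masks` paints each mask's color into a copy of the image, then blends the copy with the original as `image * (1 - alpha) + img_to_draw * alpha` and casts the result back to `uint8`, which truncates. For a masked pixel that is the blend sketched below; `blend_pixel` is a hypothetical helper. With `alpha=1` the mask color replaces the pixel completely, and with `alpha=0` the image is left unchanged.

```python
def blend_pixel(pixel, mask_color, alpha: float = 0.8):
    """Blend one masked RGB pixel with its mask color, truncating to integers as the uint8 cast does."""
    return tuple(int(p * (1 - alpha) + c * alpha) for p, c in zip(pixel, mask_color))


print(blend_pixel((100, 100, 100), (255, 0, 0), alpha=0.5))  # (177, 50, 50)
print(blend_pixel((100, 100, 100), (255, 0, 0), alpha=1.0))  # (255, 0, 0)
```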

```python
@torch.no_grad()
def draw_keypoints(
    image: torch.Tensor,
    keypoints: torch.Tensor,
    connectivity: Optional[List[Tuple[int, int]]] = None,
    colors: Optional[Union[str, Tuple[int, int, int]]] = None,
    radius: int = 2,
    width: int = 3,
) -> torch.Tensor:

    """
    Draws Keypoints on given RGB image.
    The values of the input image should be uint8 between 0 and 255.

    Args:
        image (Tensor): Tensor of shape (3, H, W) and dtype uint8.
        keypoints (Tensor): Tensor of shape (num_instances, K, 2) the K keypoints location for each of the N instances,
            in the format [x, y].
        connectivity (List[Tuple[int, int]]): A List of tuple where,
            each tuple contains pair of keypoints to be connected.
        colors (str, Tuple): The color can be represented as
            PIL strings e.g. "red" or "#FF00FF", or as RGB tuples e.g. ``(240, 10, 157)``.
        radius (int): Integer denoting radius of keypoint.
        width (int): Integer denoting width of line connecting keypoints.

    Returns:
        img (Tensor[C, H, W]): Image Tensor of dtype uint8 with keypoints drawn.
    """

    if not isinstance(image, torch.Tensor):
        raise TypeError(f"The image must be a tensor, got {type(image)}")
    elif image.dtype != torch.uint8:
        raise ValueError(f"The image dtype must be uint8, got {image.dtype}")
    elif image.dim() != 3:
        raise ValueError("Pass individual images, not batches")
    elif image.size()[0] != 3:
        raise ValueError("Pass an RGB image. Other Image formats are not supported")

    if keypoints.ndim != 3:
        raise ValueError("keypoints must be of shape (num_instances, K, 2)")

    ndarr = image.permute(1, 2, 0).numpy()
    img_to_draw = Image.fromarray(ndarr)
    draw = ImageDraw.Draw(img_to_draw)
    img_kpts = keypoints.to(torch.int64).tolist()

    for kpt_id, kpt_inst in enumerate(img_kpts):
        for inst_id, kpt in enumerate(kpt_inst):
            x1 = kpt[0] - radius
            x2 = kpt[0] + radius
            y1 = kpt[1] - radius
            y2 = kpt[1] + radius
            draw.ellipse([x1, y1, x2, y2], fill=colors, outline=None, width=0)

        if connectivity:
            for connection in connectivity:
                start_pt_x = kpt_inst[connection[0]][0]
                start_pt_y = kpt_inst[connection[0]][1]

                end_pt_x = kpt_inst[connection[1]][0]
                end_pt_y = kpt_inst[connection[1]][1]

                draw.line(
                    ((start_pt_x, start_pt_y), (end_pt_x, end_pt_y)),
                    width=width,
                )

    return torch.from_numpy(np.array(img_to_draw)).permute(2, 0, 1).to(dtype=torch.uint8)
```

```python
def _generate_color_palette(num_masks: int):
    palette = torch.tensor([2 ** 25 - 1, 2 ** 15 - 1, 2 ** 21 - 1])
    return [tuple((i * palette) % 255) for i in range(num_masks)]
```
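When no `colors` are passed, `draw_segmentation_masks` calls `_generate_color_palette`, which derives one RGB color per mask from three fixed integers modulo 255. The same computation in plain Python (hypothetical name `generate_color_palette`, returning ints instead of 0-d tensors) shows that the colors are deterministic and that the first mask is always black, `(0, 0, 0)`.

```python
PALETTE = (2 ** 25 - 1, 2 ** 15 - 1, 2 ** 21 - 1)


def generate_color_palette(num_masks: int):
    """One (R, G, B) tuple per mask: (i * palette) % 255, channel by channel."""
    return [tuple((i * p) % 255 for p in PALETTE) for i in range(num_masks)]


print(generate_color_palette(3))  # [(0, 0, 0), (1, 127, 31), (2, 254, 62)]
```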

```python
@no_type_check
def _log_api_usage_once(obj: str) -> None:  # type: ignore
    if torch.jit.is_scripting() or torch.jit.is_tracing():
        return
    # NOTE: obj can be an object as well, but mocking it here to be
    # only a string to appease torchscript
    if isinstance(obj, str):
        torch._C._log_api_usage_once(obj)
    else:
        torch._C._log_api_usage_once(f"{obj.__module__}.{obj.__class__.__name__}")
```


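The text and images in this post were contributed by users or collected from the web for learning and reference purposes; copyright belongs to the original author.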
