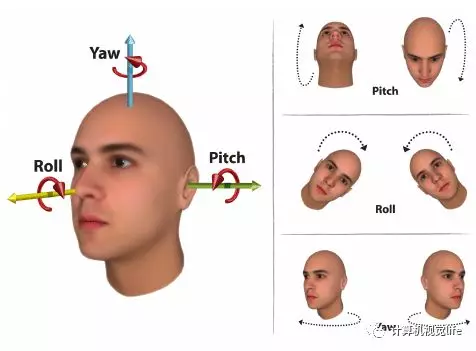

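Face pose estimation is mainly about obtaining the angle of the face's orientation. The pose can be represented by a rotation matrix, a rotation vector, a quaternion, or Euler angles, and these four representations can be converted into one another. Euler angles are generally the most readable and the most widely used. This article expresses the face pose as three Euler angles (pitch, yaw, roll): in plain terms, nodding up and down, turning the head left and right, and tilting it sideways.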
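As a small illustration of converting between two of these representations, the sketch below builds a rotation matrix from three Euler angles and then recovers the angles again. It assumes the composition order R = Rz·Ry·Rx with angles in radians, which is only one of several valid conventions. The function names `euler_to_matrix` and `matrix_to_euler` are made up for this example.

```python
import math
import numpy as np

def euler_to_matrix(x, y, z):
    """Build R = Rz @ Ry @ Rx from angles in radians (assumed convention)."""
    cx, sx = math.cos(x), math.sin(x)
    cy, sy = math.cos(y), math.sin(y)
    cz, sz = math.cos(z), math.sin(z)
    Rx = np.array([[1, 0, 0], [0, cx, -sx], [0, sx, cx]])
    Ry = np.array([[cy, 0, sy], [0, 1, 0], [-sy, 0, cy]])
    Rz = np.array([[cz, -sz, 0], [sz, cz, 0], [0, 0, 1]])
    return Rz @ Ry @ Rx

def matrix_to_euler(R):
    """Recover (x, y, z) in radians, assuming R = Rz @ Ry @ Rx and |y| < 90 degrees."""
    sy = math.hypot(R[0, 0], R[1, 0])
    x = math.atan2(R[2, 1], R[2, 2])
    y = math.atan2(-R[2, 0], sy)
    z = math.atan2(R[1, 0], R[0, 0])
    return x, y, z

# Round trip: angles -> matrix -> angles.
angles = (0.3, -0.5, 1.1)
R_demo = euler_to_matrix(*angles)
recovered = matrix_to_euler(R_demo)
print(recovered)
```

The round trip only returns the original angles away from the singular case where the middle angle approaches ±90 degrees.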

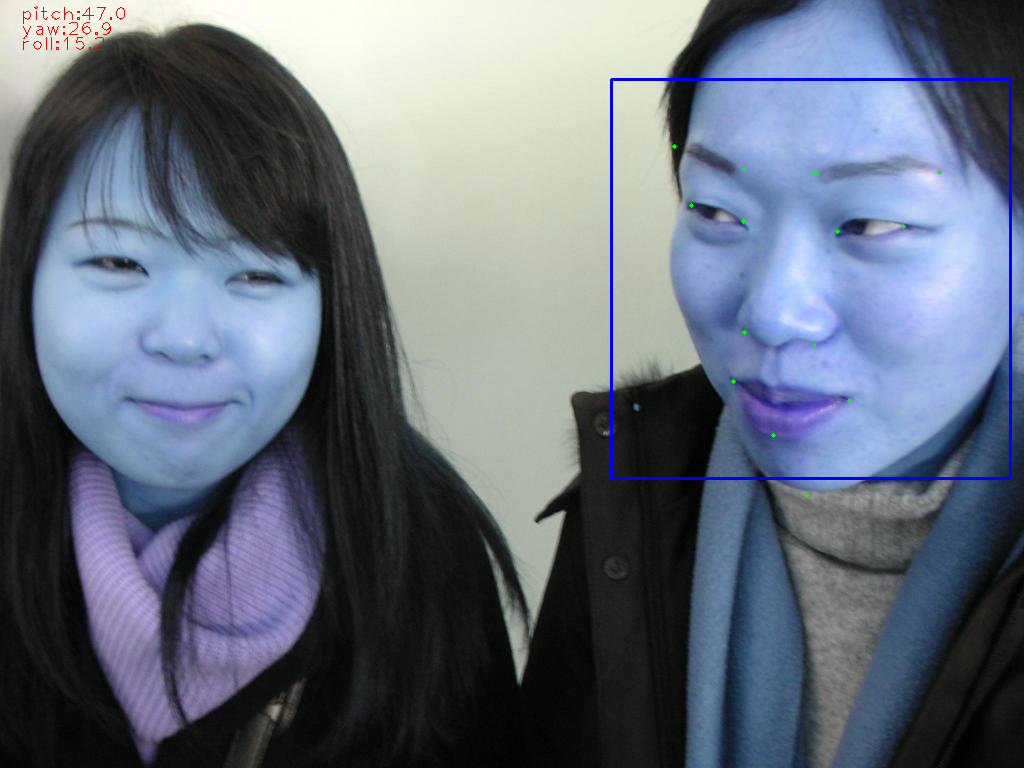

Without further ado, here is the result first:

Notes on the code:

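This example uses only 14 keypoints, mainly because the annotation scheme of the generic 3D face model does not correspond to the points that dlib detects, so only the features common to both were selected. `points_68` holds the selected subset of the detected points, arranged in the same order as `model_points`.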
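The selection of the 14 shared landmarks can also be written as a single index lookup. The sketch below is an illustration only: `LANDMARK_IDS` and `select_keypoints` are hypothetical names, and the input is stand-in data rather than real dlib output.

```python
import numpy as np

# dlib 68-point indices of the 14 shared landmarks, in the same order as model_points.
LANDMARK_IDS = [17, 21, 22, 26, 36, 39, 42, 45, 31, 35, 48, 54, 57, 8]

def select_keypoints(points, ids=LANDMARK_IDS):
    """Pick the rows of a (68, 2) landmark array that pair with model_points."""
    points = np.asarray(points, dtype=np.float64)
    if points.shape != (68, 2):
        raise ValueError("expected a (68, 2) array of landmarks")
    return points[ids]

# Stand-in data: row i holds (i, 10 * i), so the selection is easy to check by eye.
fake_points = np.column_stack([np.arange(68), 10 * np.arange(68)])
points_14 = select_keypoints(fake_points)
print(points_14.shape)
```

Keeping the indices in one list makes it harder for the 2D points and the 3D model rows to drift out of order, which `solvePnP` cannot detect on its own.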

The code is as follows:

import math
import os

import cv2
import dlib
import numpy as np

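# 3D coordinates of the 14 keypoints on the generic face model.
# The number in each comment is the matching dlib landmark index.
model_points = np.array([
    [6.825897, 6.760612, 4.402142],    # 17 left brow left corner
    [1.330353, 7.122144, 6.903745],    # 21 left brow right corner
    [-1.330353, 7.122144, 6.903745],   # 22 right brow left corner
    [-6.825897, 6.760612, 4.402142],   # 26 right brow right corner
    [5.311432, 5.485328, 3.987654],    # 36 left eye left corner
    [1.789930, 5.393625, 4.413414],    # 39 left eye right corner
    [-1.789930, 5.393625, 4.413414],   # 42 right eye left corner
    [-5.311432, 5.485328, 3.987654],   # 45 right eye right corner
    [2.005628, 1.409845, 6.165652],    # 31 nose left corner
    [-2.005628, 1.409845, 6.165652],   # 35 nose right corner
    [2.774015, -2.080775, 5.048531],   # 48 mouth left corner
    [-2.774015, -2.080775, 5.048531],  # 54 mouth right corner
    [0.000000, -3.116408, 6.097667],   # 57 mouth central bottom corner
    [0.000000, -7.415691, 4.070434],   # 8 chin corner
])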

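detector = dlib.get_frontal_face_detector()
pic_path = "/media/xxxxx/5874eb32-3573-4145-9cce-b105957df272/data_process/data_pro/solov2/5007442_2.jpg"
img = cv2.imread(pic_path)  # OpenCV loads images in BGR order
if img is None:
    raise FileNotFoundError(pic_path)
# dlib expects RGB input; img stays in BGR so that cv2.imshow displays correct colors.
rgb = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)
faces = detector(rgb, 2)  # upsample the image twice to find smaller faces
if len(faces) == 0:
    raise RuntimeError("no face detected in " + pic_path)
face = faces[0]  # use the first detected face
x0, y0, x1, y1 = face.left(), face.top(), face.right(), face.bottom()
cv2.rectangle(img, (x0, y0), (x1, y1), (255, 0, 0), 2)  # draw the face bounding box
predictor = dlib.shape_predictor('/media/zhoukx/5874eb32-3573-4145-9cce-b105957df272/data_process/data_pro/solov2/shape_predictor_68_face_landmarks.dat')
ldmk = predictor(rgb, face)
# All 68 landmarks as an array of (x, y) pixel coordinates.
points = np.array([(p.x, p.y) for p in ldmk.parts()], dtype="double")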

# The 14 detected landmarks, listed in the same order as model_points.
points_68 = np.array([
    points[17],  # left brow left corner
    points[21],  # left brow right corner
    points[22],  # right brow left corner
    points[26],  # right brow right corner
    points[36],  # left eye left corner
    points[39],  # left eye right corner
    points[42],  # right eye left corner
    points[45],  # right eye right corner
    points[31],  # nose left corner
    points[35],  # nose right corner
    points[48],  # mouth left corner
    points[54],  # mouth right corner
    points[57],  # mouth central bottom corner
    points[8],   # chin corner
], dtype="double")

size = img.shape  # (height, width, channels)
focal_length = size[1]  # approximate the focal length by the image width in pixels
center = (size[1] / 2, size[0] / 2)  # assume the principal point is the image center
camera_matrix = np.array(
    [[focal_length, 0, center[0]],
     [0, focal_length, center[1]],
     [0, 0, 1]], dtype="double"
)
print("Camera Matrix:\n {0}".format(camera_matrix))
dist_coeffs = np.zeros((4, 1))  # Assuming no lens distortion
(success, rotation_vector, translation_vector) = cv2.solvePnP(model_points, points_68, camera_matrix, dist_coeffs)
print("Rotation Vector:\n {0}".format(rotation_vector))
print("Translation Vector:\n {0}".format(translation_vector))

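# Rodrigues' formula: convert the rotation vector (axis * angle) into a rotation matrix.
theta = np.linalg.norm(rotation_vector)  # rotation angle in radians
r = rotation_vector / theta  # unit rotation axis, shape (3, 1)
# Skew-symmetric (cross-product) matrix of r.
R_ = np.array([[0, -r[2][0], r[1][0]],
               [r[2][0], 0, -r[0][0]],
               [-r[1][0], r[0][0], 0]])
# r * r.T broadcasts (3, 1) against (1, 3), which yields the 3x3 outer product.
R = np.cos(theta) * np.eye(3) + (1 - np.cos(theta)) * r * r.T + np.sin(theta) * R_
print('Rotation matrix')
print(R)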

def isRotationMatrix(R):
    # A rotation matrix is orthogonal, so R^T @ R should equal the identity.
    Rt = np.transpose(R)
    shouldBeIdentity = np.dot(Rt, R)
    I = np.identity(3, dtype=R.dtype)
    n = np.linalg.norm(I - shouldBeIdentity)
    return n < 1e-6

def rotationMatrixToAngles(R):
    # Decompose R = Rz(z) @ Ry(y) @ Rx(x), then draw the result on the global img.
    assert isRotationMatrix(R)
    sy = math.sqrt(R[0, 0] * R[0, 0] + R[1, 0] * R[1, 0])
    singular = sy < 1e-6  # gimbal lock: y is close to +/-90 degrees
    if not singular:
        x = math.atan2(R[2, 1], R[2, 2])
        y = math.atan2(-R[2, 0], sy)
        z = math.atan2(R[1, 0], R[0, 0])
    else:
        x = math.atan2(-R[1, 2], R[1, 1])
        y = math.atan2(-R[2, 0], sy)
        z = 0
    # Convert radians to degrees.
    x = math.degrees(x)
    y = math.degrees(y)
    z = math.degrees(z)
    # x, y, z are rotations about the camera X, Y and Z axes: pitch, yaw and roll.
    line = 'pitch:{:.1f}\nyaw:{:.1f}\nroll:{:.1f}'.format(x, y, z)
    print('{},{}'.format(os.path.basename(pic_path), line.replace('\n', ',')))
    # Overlay the angles; text_y is a separate variable so the angle y is not overwritten.
    text_y = 20
    for txt in line.split('\n'):
        cv2.putText(img, txt, (20, text_y), cv2.FONT_HERSHEY_PLAIN, 1.3, (0, 0, 255), 1)
        text_y += 15
    # Mark the 14 landmarks used for the pose estimate.
    for p in points_68:
        cv2.circle(img, (int(p[0]), int(p[1])), 2, (0, 255, 0), -1, 0)
    cv2.imshow('img', img)
    cv2.waitKey(0)  # wait for any key press before returning
    return np.array([x, y, z])

n = isRotationMatrix(R)

x_y_z = rotationMatrixToAngles(R)

print(x_y_z)
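One way to sanity-check a pose returned by `cv2.solvePnP` is to project the 3D model points back into the image and compare them with the detected landmarks. The NumPy-only sketch below does this under the same assumptions as the script (focal length equal to the image width, principal point at the image center, no lens distortion). The names `approx_camera_matrix`, `rodrigues`, `project_points` and `reprojection_error` are invented for this illustration; when OpenCV is available, `cv2.Rodrigues` and `cv2.projectPoints` provide the same operations.

```python
import numpy as np

def approx_camera_matrix(width, height):
    """Pinhole intrinsics with f = image width and the principal point at the center."""
    return np.array([[width, 0.0, width / 2.0],
                     [0.0, width, height / 2.0],
                     [0.0, 0.0, 1.0]])

def rodrigues(rvec, eps=1e-12):
    """Convert a rotation vector (axis * angle, radians) into a 3x3 rotation matrix."""
    rvec = np.asarray(rvec, dtype=np.float64).reshape(3)
    theta = np.linalg.norm(rvec)
    if theta < eps:
        return np.eye(3)  # zero rotation; avoids dividing by zero
    r = rvec / theta
    K = np.array([[0.0, -r[2], r[1]],
                  [r[2], 0.0, -r[0]],
                  [-r[1], r[0], 0.0]])
    return (np.cos(theta) * np.eye(3)
            + (1.0 - np.cos(theta)) * np.outer(r, r)
            + np.sin(theta) * K)

def project_points(model_pts, rvec, tvec, cam_mat):
    """Project (N, 3) model points to (N, 2) pixel coordinates, ignoring distortion."""
    R = rodrigues(rvec)
    t = np.asarray(tvec, dtype=np.float64).reshape(1, 3)
    cam = np.asarray(model_pts, dtype=np.float64) @ R.T + t  # points in the camera frame
    uvw = cam @ cam_mat.T                                     # homogeneous pixel coordinates
    return uvw[:, :2] / uvw[:, 2:3]

def reprojection_error(model_pts, image_pts, rvec, tvec, cam_mat):
    """Mean pixel distance between detected and reprojected points."""
    diff = project_points(model_pts, rvec, tvec, cam_mat) - np.asarray(image_pts, dtype=np.float64)
    return float(np.mean(np.linalg.norm(diff, axis=1)))

# Toy check with a known pose: no rotation, object 10 units in front of the camera.
cam_mat = approx_camera_matrix(640, 480)
pts3d = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
rvec = np.zeros(3)
tvec = np.array([0.0, 0.0, 10.0])
pts2d = project_points(pts3d, rvec, tvec, cam_mat)
print(pts2d)
print(reprojection_error(pts3d, pts2d, rvec, tvec, cam_mat))
```

With the variables from the script, the call would be `reprojection_error(model_points, points_68, rotation_vector, translation_vector, camera_matrix)`. A large value hints that the landmark correspondence or the camera assumptions are off.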

