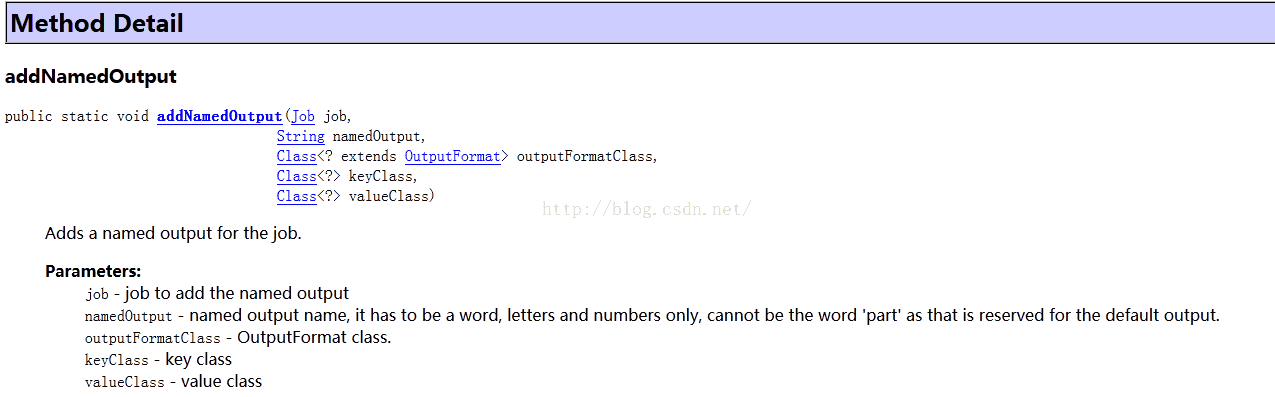

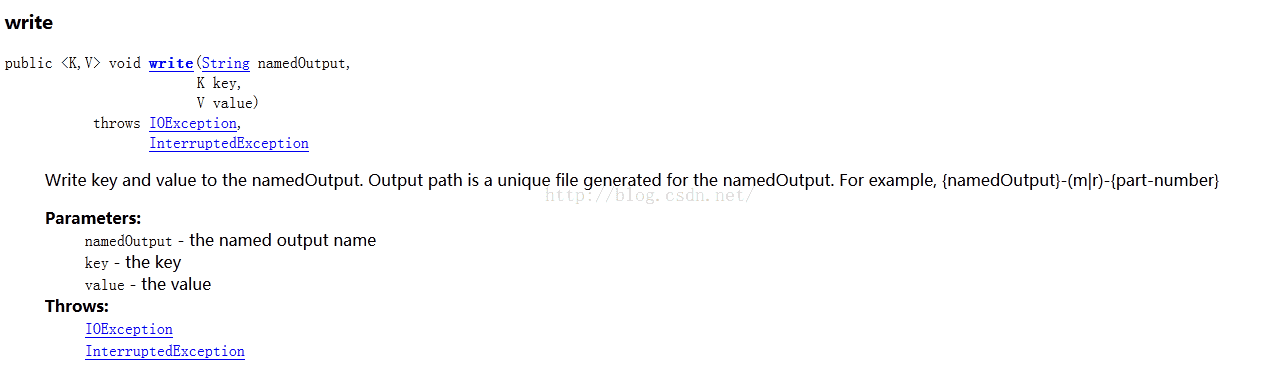

The two main methods:

Code:

    package mapreduce.baozi;

    import java.io.IOException;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.conf.Configured;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.Mapper;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
    import org.apache.hadoop.mapreduce.lib.output.MultipleOutputs;
    import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat;

    public class TestwithMultipleOutputs extends Configured {

        // Map-only job: every record is written through MultipleOutputs as (Text, Text).
        public static class MapClass extends Mapper<LongWritable, Text, Text, Text> {

            private MultipleOutputs<Text, Text> mos;

            @Override
            protected void setup(Context context) throws IOException, InterruptedException {
                mos = new MultipleOutputs<>(context);
            }

            @Override
            public void map(LongWritable key, Text value, Context context)
                    throws IOException, InterruptedException {
                // Each input line is "<name>\t<value>".
                String[] tokens = value.toString().split("\t");
                if (tokens.length < 2) {
                    return; // skip lines that have no value field
                }
                switch (tokens[0]) {
                    case "hadoop":
                    case "hive":
                    case "hbase":
                    case "spark":
                        // The named output is the same as the first field.
                        mos.write(tokens[0], new Text(tokens[0]), new Text(tokens[1]));
                        break;
                    default:
                        break; // lines with any other first field are dropped
                }
            }

            @Override
            protected void cleanup(Context context) throws IOException, InterruptedException {
                // Must be closed, otherwise the named output files may be incomplete.
                mos.close();
            }
        }

        public static void main(String[] args) throws Exception {
            Configuration conf = new Configuration();
            Job job = Job.getInstance(conf, "MultipleOutput");
            job.setJarByClass(TestwithMultipleOutputs.class);

            Path in = new Path(args[0]);
            Path out = new Path(args[1]);
            FileInputFormat.setInputPaths(job, in);
            FileOutputFormat.setOutputPath(job, out);

            job.setMapperClass(MapClass.class);
            job.setNumReduceTasks(0); // no reduce phase

            // Register one named output per first-field value.
            MultipleOutputs.addNamedOutput(job, "hadoop", TextOutputFormat.class, Text.class, Text.class);
            MultipleOutputs.addNamedOutput(job, "hive", TextOutputFormat.class, Text.class, Text.class);
            MultipleOutputs.addNamedOutput(job, "hbase", TextOutputFormat.class, Text.class, Text.class);
            MultipleOutputs.addNamedOutput(job, "spark", TextOutputFormat.class, Text.class, Text.class);

            System.exit(job.waitForCompletion(true) ? 0 : 1);
        }
    }
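The routing rule in `map` can be tried without a cluster. The following is a minimal plain-Java sketch, not Hadoop code: the class `RoutingSketch` and its `route` method are hypothetical names, an in-memory map stands in for the named outputs, and the check for lines without a second field is an added assumption about how malformed input should be handled.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;

// Hypothetical stand-in for the mapper; no Hadoop classes are involved.
class RoutingSketch {
    // The same four names that the driver registers with addNamedOutput.
    static final Set<String> NAMED_OUTPUTS =
            new LinkedHashSet<>(Arrays.asList("hadoop", "hive", "hbase", "spark"));

    // Groups tab-separated lines by their first field, mirroring what map() does with mos.write.
    static Map<String, List<String>> route(List<String> lines) {
        Map<String, List<String>> outputs = new TreeMap<>();
        for (String line : lines) {
            String[] tokens = line.split("\t");
            // Assumption: lines without a value, or with an unregistered name, are skipped.
            if (tokens.length < 2 || !NAMED_OUTPUTS.contains(tokens[0])) {
                continue;
            }
            // A text output record is the key, a tab, then the value.
            outputs.computeIfAbsent(tokens[0], k -> new ArrayList<>())
                   .add(tokens[0] + "\t" + tokens[1]);
        }
        return outputs;
    }

    public static void main(String[] args) {
        List<String> input = Arrays.asList(
                "hadoop\thadoops", "hive\t21312q", "hbase\tdwfsdf", "spark\tsdfsdf");
        route(input).forEach((name, records) -> System.out.println(name + " -> " + records));
    }
}
```

Each key of the returned map corresponds to one `<name>-m-00000` file in the real job.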

Input data (tab-separated fields):

    more aa.txt
    hadoop hadoops
    hive 21312q
    hbase dwfsdf
    spark sdfsdf
    hbase werwer
    spark wefg
    hive thhdf
    hive jtyj
    hadoop trjuh
    hbase sdfsf

Output directory listing:

    -rw-r--r-- 2 jiuqian supergroup 123 2015-10-13 09:21 libin/input/aa.txt
    Found 6 items
    -rw-r--r-- 2 jiuqian supergroup 0 2015-10-13 09:25 libin/input/mul1/_SUCCESS
    -rw-r--r-- 2 jiuqian supergroup 28 2015-10-13 09:25 libin/input/mul1/hadoop-m-00000
    -rw-r--r-- 2 jiuqian supergroup 38 2015-10-13 09:25 libin/input/mul1/hbase-m-00000
    -rw-r--r-- 2 jiuqian supergroup 33 2015-10-13 09:25 libin/input/mul1/hive-m-00000
    -rw-r--r-- 2 jiuqian supergroup 0 2015-10-13 09:25 libin/input/mul1/part-m-00000
    -rw-r--r-- 2 jiuqian supergroup 24 2015-10-13 09:25 libin/input/mul1/spark-m-00000

Contents of `hadoop-m-00000`:

    hdfs dfs -text libin/input/mul1/hadoop-m-00000
    hadoop hadoops
    hadoop trjuh
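The file sizes in the listing can be checked by hand, since each record is written as the key, a tab, the value, and a newline. The sketch below recomputes them from the ten input lines. It is plain Java with a hypothetical class name (`SizeCheck`), and it assumes single-byte characters and a one-byte newline.

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical helper: recomputes the size of each named output file.
class SizeCheck {
    // The ten records of aa.txt, with the tab separators written out.
    static final List<String> INPUT = Arrays.asList(
            "hadoop\thadoops", "hive\t21312q", "hbase\tdwfsdf", "spark\tsdfsdf",
            "hbase\twerwer", "spark\twefg", "hive\tthhdf", "hive\tjtyj",
            "hadoop\ttrjuh", "hbase\tsdfsf");

    // Sums the bytes of "<key>\t<value>\n" per key.
    static Map<String, Integer> bytesPerOutput(List<String> lines) {
        Map<String, Integer> sizes = new TreeMap<>();
        for (String line : lines) {
            String key = line.split("\t")[0];
            int recordBytes = (line + "\n").getBytes(StandardCharsets.UTF_8).length;
            sizes.merge(key, recordBytes, Integer::sum);
        }
        return sizes;
    }

    public static void main(String[] args) {
        // prints {hadoop=28, hbase=38, hive=33, spark=24}
        System.out.println(bytesPerOutput(INPUT));
    }
}
```

The four sizes match the listing and add up to 123 bytes, the size of `aa.txt`, so every input record ended up in exactly one named output and `part-m-00000` stayed empty.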

Console log of the job run:

    15/10/13 09:25:01 INFO client.RMProxy: Connecting to ResourceManager at sh-rslog1/27.115.29.102:8032
    15/10/13 09:25:02 WARN mapreduce.JobSubmitter: Hadoop command-line option parsing not performed. Implement the Tool interface and execute your application with ToolRunner to remedy this.
    15/10/13 09:25:03 INFO input.FileInputFormat: Total input paths to process : 1
    15/10/13 09:25:03 INFO mapreduce.JobSubmitter: number of splits:1
    15/10/13 09:25:03 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1435558921826_10644
    15/10/13 09:25:03 INFO impl.YarnClientImpl: Submitted application application_1435558921826_10644
    15/10/13 09:25:03 INFO mapreduce.Job: The url to track the job: http://sh-rslog1:8088/proxy/application_1435558921826_10644/
    15/10/13 09:25:03 INFO mapreduce.Job: Running job: job_1435558921826_10644
    15/10/13 09:25:11 INFO mapreduce.Job: Job job_1435558921826_10644 running in uber mode : false
    15/10/13 09:25:11 INFO mapreduce.Job: map 0% reduce 0%
    15/10/13 09:25:18 INFO mapreduce.Job: map 100% reduce 0%
    15/10/13 09:25:18 INFO mapreduce.Job: Job job_1435558921826_10644 completed successfully
    15/10/13 09:25:18 INFO mapreduce.Job: Counters: 30
        File System Counters
            FILE: Number of bytes read=0
            FILE: Number of bytes written=107447
            FILE: Number of read operations=0
            FILE: Number of large read operations=0
            FILE: Number of write operations=0
            HDFS: Number of bytes read=241
            HDFS: Number of bytes written=123
            HDFS: Number of read operations=5
            HDFS: Number of large read operations=0
            HDFS: Number of write operations=6
        Job Counters
            Launched map tasks=1
            Data-local map tasks=1
            Total time spent by all maps in occupied slots (ms)=4262
            Total time spent by all reduces in occupied slots (ms)=0
            Total time spent by all map tasks (ms)=4262
            Total vcore-seconds taken by all map tasks=4262
            Total megabyte-seconds taken by all map tasks=6546432
        Map-Reduce Framework
            Map input records=10
            Map output records=0
            Input split bytes=118
            Spilled Records=0
            Failed Shuffles=0
            Merged Map outputs=0
            GC time elapsed (ms)=41
            CPU time spent (ms)=1350
            Physical memory (bytes) snapshot=307478528
            Virtual memory (bytes) snapshot=1981685760
            Total committed heap usage (bytes)=1011351552
        File Input Format Counters
            Bytes Read=123
        File Output Format Counters
            Bytes Written=0
