[root@node1 ~]# ssh-keygen -t rsa -P ""

[root@node1 ~]# ssh-copy-id -i .ssh/id_rsa.pub root@node2

[root@node2 ~]# ssh-keygen -t rsa -P ""

[root@node2 ~]# ssh-copy-id -i .ssh/id_rsa.pub root@node1

[root@node1 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

172.16.0.1 server.magelinux.com server

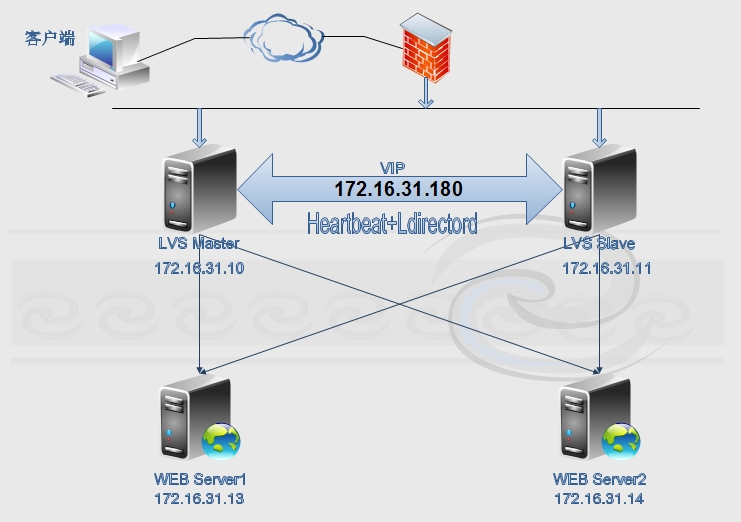

172.16.31.10 node1.stu31.com node1

172.16.31.11 node2.stu31.com node2

172.16.31.13 rs1.stu31.com rs1

172.16.31.14 rs2.stu31.com rs2

[root@node1 ~]# date;ssh node2 'date'

Sun Jan 4 17:57:51 CST 2015

Sun Jan 4 17:57:50 CST 2015

[root@node1 heartbeat2]# ls

heartbeat-2.1.4-12.el6.x86_64.rpm

heartbeat-gui-2.1.4-12.el6.x86_64.rpm

heartbeat-stonith-2.1.4-12.el6.x86_64.rpm

heartbeat-ldirectord-2.1.4-12.el6.x86_64.rpm

heartbeat-pils-2.1.4-12.el6.x86_64.rpm

[root@node1 heartbeat2]# yum install -y net-snmp-libs libnet PyXML

[root@node1 heartbeat2]# rpm -ivh heartbeat-2.1.4-12.el6.x86_64.rpm heartbeat-stonith-2.1.4-12.el6.x86_64.rpm heartbeat-pils-2.1.4-12.el6.x86_64.rpm heartbeat-gui-2.1.4-12.el6.x86_64.rpm heartbeat-ldirectord-2.1.4-12.el6.x86_64.rpm

error: Failed dependencies:

ipvsadm is needed by heartbeat-ldirectord-2.1.4-12.el6.x86_64

perl(Mail::Send) is needed by heartbeat-ldirectord-2.1.4-12.el6.x86_64

# yum -y install ipvsadm perl-MailTools perl-TimeDate

# rpm -ivh heartbeat-2.1.4-12.el6.x86_64.rpm heartbeat-stonith-2.1.4-12.el6.x86_64.rpm heartbeat-pils-2.1.4-12.el6.x86_64.rpm heartbeat-gui-2.1.4-12.el6.x86_64.rpm heartbeat-ldirectord-2.1.4-12.el6.x86_64.rpm

Preparing... ###########################################[100%]

1:heartbeat-pils ########################################### [ 20%]

2:heartbeat-stonith ########################################### [ 40%]

3:heartbeat ########################################### [ 60%]

4:heartbeat-gui ########################################### [ 80%]

5:heartbeat-ldirectord ########################################### [100%]

[root@node1 heartbeat2]# rpm -ql heartbeat-ldirectord

/etc/ha.d/resource.d/ldirectord

/etc/init.d/ldirectord

/etc/logrotate.d/ldirectord

/usr/sbin/ldirectord

/usr/share/doc/heartbeat-ldirectord-2.1.4

/usr/share/doc/heartbeat-ldirectord-2.1.4/COPYING

/usr/share/doc/heartbeat-ldirectord-2.1.4/README

/usr/share/doc/heartbeat-ldirectord-2.1.4/ldirectord.cf

/usr/share/man/man8/ldirectord.8.gz

[root@node1 ha.d]# vim /etc/rsyslog.conf

# Add the following line:

local0.* /var/log/heartbeat.log

Copy it to node2:

[root@node1 ha.d]# scp /etc/rsyslog.conf node2:/etc/rsyslog.conf

[root@node1 ha.d]# cd /usr/share/doc/heartbeat-2.1.4/

[root@node1 heartbeat-2.1.4]# cp authkeys ha.cf /etc/ha.d/

[root@node1 ha.d]# grep -v ^# /etc/ha.d/ha.cf

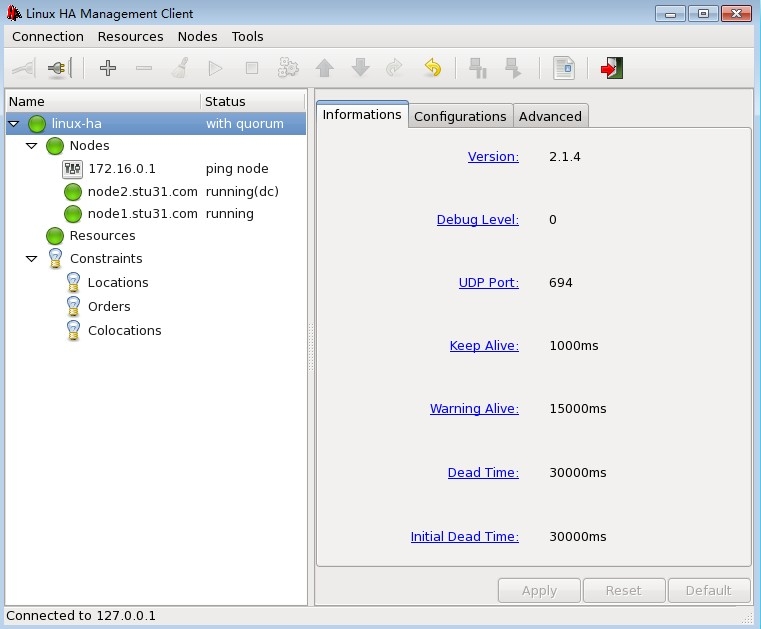

logfacility local0

mcast eth0 225.231.123.31 694 1 0

auto_failback on

node node1.stu31.com

node node2.stu31.com

ping 172.16.0.1

crm on

[root@node1 ha.d]# vim authkeys

auth 2

2 sha1 password

[root@node1 ha.d]# scp authkeys ha.cf node2:/etc/ha.d/

authkeys 100% 675 0.7KB/s 00:00

ha.cf 100% 10KB 10.4KB/s 00:00
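
The `authkeys` file above uses the literal secret `password`, and heartbeat expects the file to be readable by root only (mode 600). A minimal sketch of generating a random key with safe permissions, assuming `sha1sum` and `/dev/urandom` are available; the function name `make_authkeys` and its path argument are hypothetical and not part of heartbeat:

```shell
#!/bin/bash
# Hypothetical helper: write an authkeys file with a random sha1 key.
# Usage: make_authkeys /path/to/authkeys
make_authkeys() {
    local target=$1
    local secret
    # Hash 512 random bytes into a 40-character hex string
    secret=$(dd if=/dev/urandom bs=512 count=1 2>/dev/null | sha1sum | awk '{print $1}')
    # Create the file under a restrictive umask so it is never world-readable
    ( umask 077; printf 'auth 2\n2 sha1 %s\n' "$secret" > "$target" )
    # Enforce mode 600 even if the file already existed with looser permissions
    chmod 600 "$target"
}
```

Running `make_authkeys /etc/ha.d/authkeys` on node1 and then copying the file with `scp -p` would keep both the key and the mode identical on node2.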

[root@rs1 ~]# cat rs.sh

#!/bin/bash

vip=172.16.31.180

interface="lo:0"

case $1 in

start)

echo 2 > /proc/sys/net/ipv4/conf/all/arp_announce

echo 2 > /proc/sys/net/ipv4/conf/lo/arp_announce

echo 1 > /proc/sys/net/ipv4/conf/lo/arp_ignore

echo 1 > /proc/sys/net/ipv4/conf/all/arp_ignore

ifconfig $interface $vip broadcast $vip netmask 255.255.255.255 up

route add -host $vip dev $interface

;;

stop)

echo 0 > /proc/sys/net/ipv4/conf/all/arp_announce

echo 0 > /proc/sys/net/ipv4/conf/lo/arp_announce

echo 0 > /proc/sys/net/ipv4/conf/lo/arp_ignore

echo 0 > /proc/sys/net/ipv4/conf/all/arp_ignore

ifconfig $interface down

;;

status)

if ifconfig $interface | grep $vip &>/dev/null; then

echo "ipvs is running."

else

echo "ipvs is stopped."

fi

;;

*)

echo "Usage: $(basename $0) start|stop|status"

exit 1

;;

esac

[root@rs1 ~]# echo "rs1.stu31.com" > /var/www/html/index.html

[root@rs2 ~]# echo "rs2.stu31.com" > /var/www/html/index.html

[root@rs1 ~]# service httpd start

Starting httpd: [ OK ]

[root@rs1 ~]# curl http://172.16.31.13

rs1.stu31.com

[root@rs2 ~]# service httpd start

Starting httpd: [ OK ]

[root@rs2 ~]# curl http://172.16.31.14

rs2.stu31.com

# service httpd stop

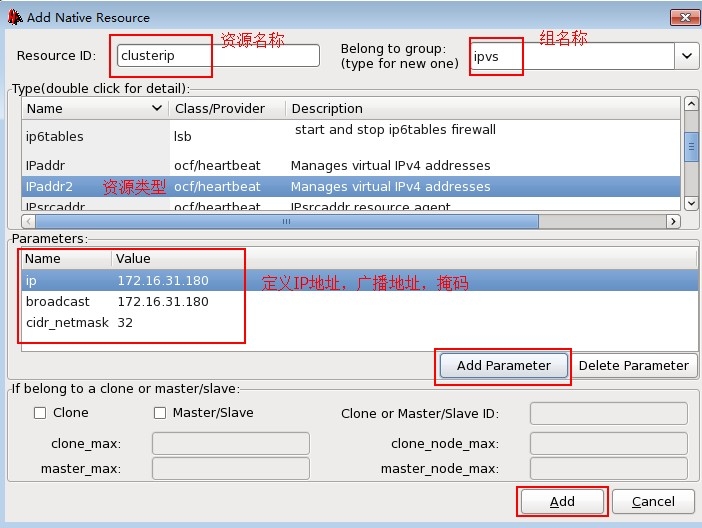

# chkconfig httpd off

[root@node1 ~]# ifconfig eth0:0 172.16.31.180 broadcast 172.16.31.180 netmask 255.255.255.255 up

[root@node1 ~]# route add -host 172.16.31.180 dev eth0:0

[root@node1 ~]# ipvsadm -C

[root@node1 ~]# ipvsadm -A -t 172.16.31.180:80 -s rr

[root@node1 ~]# ipvsadm -a -t 172.16.31.180:80 -r 172.16.31.13 -g

[root@node1 ~]# ipvsadm -a -t 172.16.31.180:80 -r 172.16.31.14 -g
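
The rule commands above follow one pattern: a single `-A` line defines the virtual service, then one `-a ... -g` line adds each real server in direct-routing mode. A small sketch that prints that rule set for review before it is applied; the function name `lvs_dr_rules` is hypothetical, and it only echoes the commands rather than running `ipvsadm`:

```shell
#!/bin/bash
# Hypothetical helper: print the ipvsadm commands for a DR (-g) virtual service.
# Usage: lvs_dr_rules VIP:PORT SCHEDULER RIP [RIP...]
lvs_dr_rules() {
    local vip=$1 sched=$2
    shift 2
    # One virtual service with the chosen scheduler
    echo "ipvsadm -A -t $vip -s $sched"
    # One real-server entry per remaining argument, in gateway (DR) mode
    local rip
    for rip in "$@"; do
        echo "ipvsadm -a -t $vip -r $rip -g"
    done
}

lvs_dr_rules 172.16.31.180:80 rr 172.16.31.13 172.16.31.14
```

Printing instead of executing keeps the helper safe to run on any machine; the output can be compared against the commands typed by hand.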

[root@node1 ~]# ipvsadm -L -n

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 172.16.31.180:80 rr

-> 172.16.31.13:80 Route 1 0 0

-> 172.16.31.14:80 Route 1 0 0

[root@nfs ~]# curl http://172.16.31.180

rs2.stu31.com

[root@nfs ~]# curl http://172.16.31.180

rs1.stu31.com

[root@node1 ~]# ipvsadm -L -n

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 172.16.31.180:80 rr

-> 172.16.31.13:80 Route 1 0 1

-> 172.16.31.14:80 Route 1 0 1

[root@node1 ~]# ipvsadm -C

[root@node1 ~]# route del -host 172.16.31.180

[root@node1 ~]# ifconfig eth0:0 down

[root@node1 ~]# echo "sorry page from lvs1" > /var/www/html/index.html

[root@node2 ~]# echo "sorry page from lvs2" > /var/www/html/index.html

[root@node1 ha.d]# service httpd start

Starting httpd: [ OK ]

[root@node1 ha.d]# curl http://172.16.31.10

sorry page from lvs1

[root@node2 ~]# service httpd start

Starting httpd: [ OK ]

[root@node2 ~]# curl http://172.16.31.11

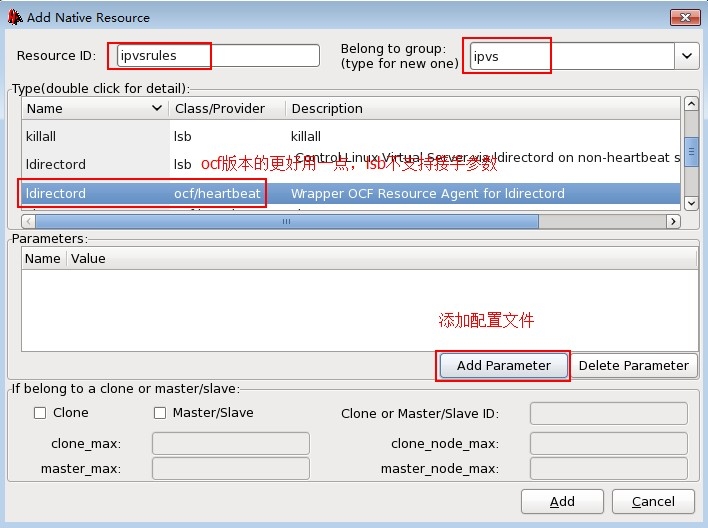

sorry page from lvs2

[root@node1 ~]# cd /usr/share/doc/heartbeat-ldirectord-2.1.4/

[root@node1 heartbeat-ldirectord-2.1.4]# ls

COPYING ldirectord.cf README

[root@node1 heartbeat-ldirectord-2.1.4]# cp ldirectord.cf /etc/ha.d

[root@node1 ha.d]# grep -v ^# /etc/ha.d/ldirectord.cf

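# Health-check timeout, in seconds

checktimeout=3

# Health-check interval, in seconds

checkinterval=1

# Reload this configuration file automatically when it changes

autoreload=yes

# When a real server fails, set its weight to 0 instead of removing it from the LVS table; the weight is restored when it recovers

quiescent=yes

# Virtual service: the VIP on port 80

virtual=172.16.31.180:80

    # Real server IP addresses and ports, direct-routing (gate) mode

    real=172.16.31.13:80 gate

    real=172.16.31.14:80 gate

    # If every RS is down, fall back to the local loopback address

    fallback=127.0.0.1:80 gate

    # The service is HTTP

    service=http

    # Page stored in the web root of each RS and reachable over HTTP; used to decide whether the RS is alive

    request=".health.html"

    # Content expected in the response

    receive="OK"

    # Scheduling algorithm

    scheduler=rr

    #persistent=600

    #netmask=255.255.255.255

    # Protocol of the virtual service

    protocol=tcp

    # Check type

    checktype=negotiate

    # Check port

    checkport=80

[root@rs1 ~]# echo "OK" > /var/www/html/.health.html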

[root@rs2 ~]# echo "OK" > /var/www/html/.health.html

[root@node1 ha.d]# scp ldirectord.cf node2:/etc/ha.d/

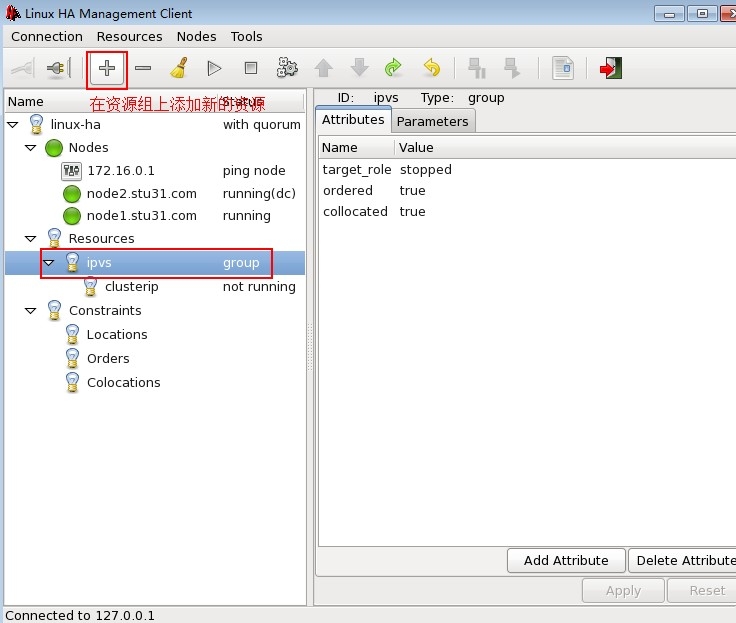

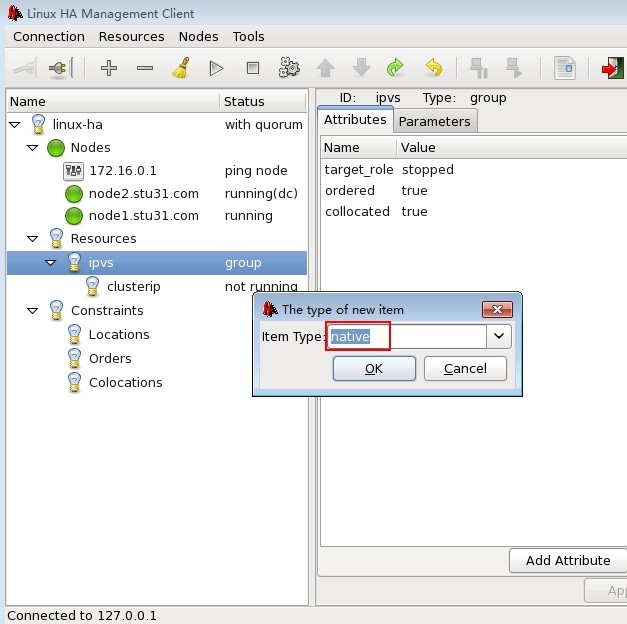

ldirectord.cf 100% 7553 7.4KB/s 00:00

[root@node1 ha.d]# service heartbeat start;ssh node2 'service heartbeat start'

Starting High-Availability services:

Done.

Starting High-Availability services:

Done.

[root@node1 ha.d]# ss -tunl |grep 5560

tcp LISTEN 0 10 *:5560 *:*

[root@node1 ~]# echo oracle |passwd --stdin hacluster

Changing password for user hacluster.

passwd: all authentication tokens updated successfully.

[root@nfs ~]# curl http://172.16.31.180

rs2.stu31.com

[root@nfs ~]# curl http://172.16.31.180

rs1.stu31.com

[root@node2 ~]# ipvsadm -L -n

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 172.16.31.180:80 rr

-> 172.16.31.13:80 Route 1 0 1

-> 172.16.31.14:80 Route 1 0 1

[root@rs1 ~]# cd /var/www/html/

[root@rs1 html]# mv .health.html a.html

[root@rs2 ~]# cd /var/www/html/

[root@rs2 html]# mv .health.html a.html

[root@nfs ~]# curl http://172.16.31.180

sorry page from lvs2

[root@node2 ~]# ipvsadm -L -n

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 172.16.31.180:80 rr

-> 127.0.0.1:80 Local 1 0 1

-> 172.16.31.13:80 Route 0 0 0

-> 172.16.31.14:80 Route 0 0 0

[root@rs1 html]# mv a.html .health.html

[root@rs2 html]# mv a.html .health.html

[root@nfs ~]# curl http://172.16.31.180

rs2.stu31.com

[root@nfs ~]# curl http://172.16.31.180

rs1.stu31.com

[root@node2 ~]# ipvsadm -L -n

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 172.16.31.180:80 rr

-> 172.16.31.13:80 Route 1 0 1

-> 172.16.31.14:80 Route 1 0 1

[root@nfs ~]# curl http://172.16.31.180

rs2.stu31.com

[root@nfs ~]# curl http://172.16.31.180

rs1.stu31.com

[root@node1 ~]# ipvsadm -L -n

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 172.16.31.180:80 rr

-> 172.16.31.13:80 Route 1 0 1

-> 172.16.31.14:80 Route 1 0 1
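
The failover test works because the `negotiate` check in ldirectord.cf requests the `request` page (`.health.html`) from each real server and looks for the `receive` text (`OK`) in the response. A rough shell approximation of that check, assuming `curl` is installed; `check_rs` and the `FETCH` override are hypothetical names used for illustration and do not reflect how ldirectord is implemented internally:

```shell
#!/bin/bash
# Hypothetical re-creation of the negotiate check: fetch a page, match the text.
# FETCH may be overridden (for example in tests); by default it is curl with a
# 3 second limit, mirroring checktimeout=3.
FETCH=${FETCH:-"curl -s --max-time 3"}

# Usage: check_rs HOST:PORT PAGE EXPECTED_TEXT
check_rs() {
    local rs=$1 page=$2 expect=$3
    # Returns 0 only when the response body contains the expected text;
    # a failed fetch yields an empty body, so grep fails as well
    $FETCH "http://$rs/$page" 2>/dev/null | grep -q -- "$expect"
}

# Example (run from a director node):
#   check_rs 172.16.31.13:80 .health.html OK && echo up || echo down
```

With `.health.html` renamed to `a.html`, this check would fail for both real servers, which corresponds to the state where only the 127.0.0.1:80 fallback carries weight.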
