Introduction to GANs

Generative Adversarial Networks, commonly referred to as GANs, are used to generate images with little or no input. GANs let us generate images created entirely by our neural networks, removing a human (yes, you) from the loop. Before we dive into the theory, I like to show what GANs can do, to build your excitement. For example, a GAN can turn horses into zebras, and vice versa.

Generative adversarial networks were introduced by Ian Goodfellow (the "GANfather") et al. in 2014, in a paper appropriately titled "Generative Adversarial Networks". They were proposed as an alternative to Variational Autoencoders (VAEs), which learn the latent spaces of images in order to generate synthetic images. GANs aim to create realistic artificial images that are almost indistinguishable from real ones.

Imagine an ambitious young criminal who wants to counterfeit money and sell it to a mobster who specializes in handling counterfeit money. At first, the young counterfeiter is not good, and our expert mobster tells him his money is way off from looking real. Slowly he gets better and makes a good "copy" every so often. The mobster tells him when it is good. After some time, both the forger (our counterfeiter) and the expert mobster get better at their jobs, and between them they have created almost real-looking but fake money.

As we just saw, this is effectively what GANs are: two antagonistic networks contesting against each other. The two components are called the Generator and the Discriminator:

● The purpose of the Generator Network is to take a random noise input and decode it into a synthetic image.
● The purpose of the Discriminator Network is to take an image as input and predict whether it came from the real dataset or is synthetic.

● Training GANs is notoriously difficult. In CNNs, we used gradient descent to change our weights to reduce our loss.
● However, in a GAN, every weight change changes the entire balance of our dynamic system.
● In GANs, we are not seeking to minimize a loss but to find an equilibrium between our two opposing networks.

The GAN training process works as follows:

1. Feed randomly generated noise into our Generator network to produce sample images.
2. Take some sample images from our real data and mix them with some of our generated images.
3. Input these mixed images to our Discriminator, which is trained on this mixed set and updates its weights accordingly.
4. Make some more fake images and input them into the Discriminator, but label them all as real. This is done to train the Generator. The weights of the Discriminator are frozen at this stage (Discriminator learning stops), and the Discriminator's feedback is used to update the weights of the Generator.

This is how we teach both our Generator (to make better synthetic images) and our Discriminator (to get better at spotting fakes).

For this article, we will be generating handwritten digits using the MNIST dataset. The entire code for the project can be found here. The implementation proceeds in this order: first, we load all the necessary libraries. Then we load our dataset; MNIST ships with Keras, so no dataset needs to be downloaded separately. Next, we define the architecture of our generator and discriminator, and combine them so that they train together. Three functions plot the losses, plot the generated images, and save the models; generated images and models are written at epoch 1 and then every 20 epochs. Last comes the train function.

Translated from: https://medium.com/analytics-vidhya/introduction-to-gans-38a7a990a538

Table of contents:

INTRODUCTION

HISTORY OF GANs

INTUITIVE EXPLANATION OF GAN

The Generator & Discriminator Networks:

TRAINING GANs

THE GAN TRAINING PROCESS

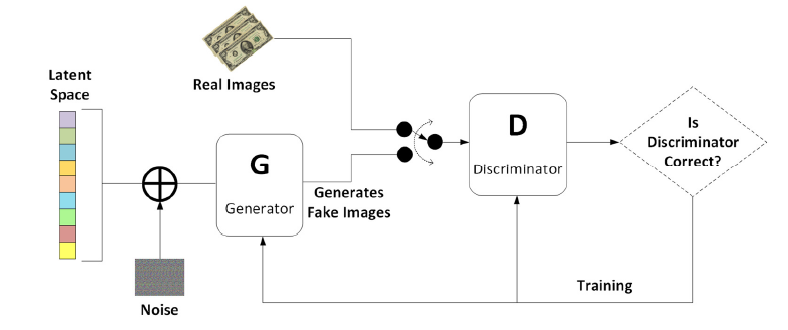
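The four training steps described in this article can be sketched in a few lines of plain Python. This is only an illustration of how the batches and labels for the two phases are assembled, not the Keras implementation: the helper names `make_noise`, `discriminator_batch`, and `generator_targets` are hypothetical, and plain lists stand in for image arrays.

```python
import random


def make_noise(batch_size, noise_dim, rng):
    """Step 1: draw Gaussian noise vectors to feed the generator."""
    return [[rng.gauss(0.0, 1.0) for _ in range(noise_dim)]
            for _ in range(batch_size)]


def discriminator_batch(real_images, fake_images):
    """Steps 2-3: mix real and generated samples; real -> 1, fake -> 0."""
    samples = list(real_images) + list(fake_images)
    labels = [1.0] * len(real_images) + [0.0] * len(fake_images)
    return samples, labels


def generator_targets(batch_size):
    """Step 4: generated images are labelled as real while the
    discriminator's weights stay frozen."""
    return [1.0] * batch_size


rng = random.Random(0)
noise = make_noise(batch_size=4, noise_dim=3, rng=rng)
real = [[0.5, 0.5]] * 4    # stand-in for a batch of real images
fake = [[-0.1, 0.2]] * 4   # stand-in for generator output
samples, labels = discriminator_batch(real, fake)
targets = generator_targets(4)
print(labels)   # [1.0, 1.0, 1.0, 1.0, 0.0, 0.0, 0.0, 0.0]
print(targets)  # [1.0, 1.0, 1.0, 1.0]
```

The only difference between the two phases is which network is allowed to learn and which labels it sees: the discriminator sees honest labels, while the generator is scored against "real" labels for its fakes.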

GAN Block Diagram

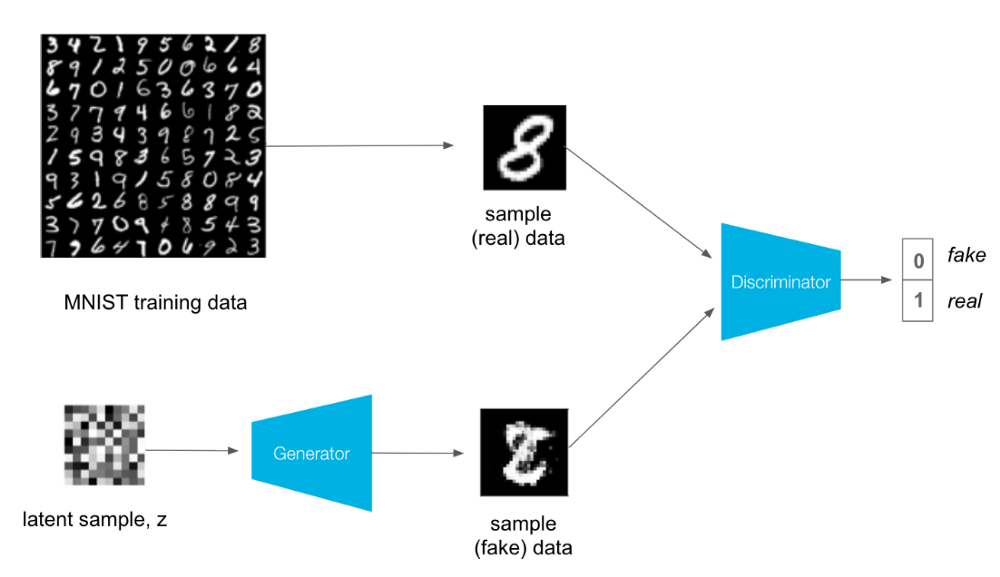
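In equations, the two-network setup is usually written as the minimax value function from the 2014 paper. The symbols are the paper's notation rather than this article's: $D$ is the discriminator, $G$ the generator, $p_{\text{data}}$ the distribution of real images, and $p_z$ the noise distribution.

```latex
\min_G \max_D V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z(z)}\big[\log\big(1 - D(G(z))\big)\big]
```

The discriminator tries to maximize $V$ and the generator tries to minimize it, which is why training looks for an equilibrium rather than a single minimum. At the equilibrium described in the paper, the discriminator can do no better than output $D(x) = 1/2$ for every input.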

KERAS IMPLEMENTATION OF GAN ON MNIST DATASET

import os

os.environ["KERAS_BACKEND"] = "tensorflow"

import numpy as np
from tqdm import tqdm
import matplotlib.pyplot as plt

# Import paths follow recent Keras releases; the original post used
# Keras 1.x/2.0-era module paths such as keras.layers.core.
from keras.layers import Input, Dense, Dropout, LeakyReLU
from keras.models import Model, Sequential
from keras.datasets import mnist
from keras.optimizers import Adam
from keras import initializers

# The networks below use only dense layers on flattened images, so the
# original K.set_image_dim_ordering('th') call (removed from later Keras
# versions) and the unused convolutional imports are omitted.

# Deterministic output.

# Tired of seeing the same results every time? Remove the line below.

np.random.seed(1000)

# The results are a little better when the dimensionality of the random vector is only 10.

# The dimensionality has been left at 100 for consistency with other GAN implementations.

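randomDim = 100

# Load MNIST (Keras downloads it automatically on first use)
(X_train, y_train), (X_test, y_test) = mnist.load_data()

# Scale pixel values from [0, 255] to [-1, 1] to match the generator's tanh output
X_train = (X_train.astype(np.float32) - 127.5) / 127.5

# Flatten each 28x28 image into a 784-dimensional vector
X_train = X_train.reshape(60000, 784)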
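The normalization `(x - 127.5) / 127.5` is worth a quick worked example, since it is what lets real images share a value range with the generator's `tanh` output. The helper names `scale_pixel` and `unscale_pixel` below are illustrative only and are not part of the original code.

```python
def scale_pixel(value):
    """Map an 8-bit pixel value in [0, 255] to the range [-1, 1]."""
    return (value - 127.5) / 127.5


def unscale_pixel(value):
    """Inverse mapping, e.g. for viewing generator output as 8-bit pixels."""
    return value * 127.5 + 127.5


for pixel in (0, 127.5, 255):
    print(pixel, "->", scale_pixel(pixel))
# 0 -> -1.0
# 127.5 -> 0.0
# 255 -> 1.0
```

Black maps to -1, mid-gray to 0, and white to 1, which is exactly the range `tanh` can produce.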

# Optimizer (recent Keras releases name the argument learning_rate instead of lr)
adam = Adam(learning_rate=0.0002, beta_1=0.5)

# Generator

generator = Sequential()

generator.add(Dense(256, input_dim=randomDim, kernel_initializer=initializers.RandomNormal(stddev=0.02)))

generator.add(LeakyReLU(0.2))

generator.add(Dense(512))

generator.add(LeakyReLU(0.2))

generator.add(Dense(1024))

generator.add(LeakyReLU(0.2))

generator.add(Dense(784, activation='tanh'))

generator.compile(loss='binary_crossentropy', optimizer=adam)

# Discriminator

discriminator = Sequential()

discriminator.add(Dense(1024, input_dim=784, kernel_initializer=initializers.RandomNormal(stddev=0.02)))

discriminator.add(LeakyReLU(0.2))

discriminator.add(Dropout(0.3))

discriminator.add(Dense(512))

discriminator.add(LeakyReLU(0.2))

discriminator.add(Dropout(0.3))

discriminator.add(Dense(256))

discriminator.add(LeakyReLU(0.2))

discriminator.add(Dropout(0.3))

discriminator.add(Dense(1, activation='sigmoid'))

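# The discriminator gets its own optimizer instance: recent Keras releases
# may raise an error when one optimizer instance updates two different models.
discriminator.compile(loss='binary_crossentropy', optimizer=Adam(learning_rate=0.0002, beta_1=0.5))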
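To get a feel for the size of the two networks, the number of trainable parameters can be worked out by hand: a dense layer with `n_in` inputs and `n_out` units has `n_in * n_out` weights plus `n_out` biases, and the LeakyReLU and Dropout layers add none. The sketch below does that arithmetic for the layer widths used in this article; the function names are illustrative.

```python
def dense_params(n_in, n_out):
    """Weights plus biases of one fully connected layer."""
    return n_in * n_out + n_out


def count_params(layer_sizes):
    """Total parameters of a stack of dense layers given consecutive widths."""
    return sum(dense_params(a, b) for a, b in zip(layer_sizes, layer_sizes[1:]))


generator_sizes = [100, 256, 512, 1024, 784]    # noise -> flattened image
discriminator_sizes = [784, 1024, 512, 256, 1]  # flattened image -> probability

print(count_params(generator_sizes))      # 1486352
print(count_params(discriminator_sizes))  # 1460225
```

The two networks are deliberately close in capacity, and the discriminator mirrors the generator's layer widths in reverse.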

# Combined network: the discriminator is frozen so that training the
# stacked model updates only the generator's weights
discriminator.trainable = False
ganInput = Input(shape=(randomDim,))
x = generator(ganInput)
ganOutput = discriminator(x)
gan = Model(inputs=ganInput, outputs=ganOutput)
gan.compile(loss='binary_crossentropy', optimizer=adam)

dLosses = []
gLosses = []
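Since the combined model is compiled with binary cross-entropy and the generator step feeds it labels of 1, the generator's loss for one image reduces to `-log(D(G(z)))`. The sketch below computes that by hand; `binary_crossentropy` here is a plain-Python stand-in written for illustration, not the Keras function, and the clipping constant `eps` is an assumption.

```python
import math


def binary_crossentropy(label, prediction, eps=1e-7):
    """Binary cross-entropy for one label/prediction pair."""
    p = min(max(prediction, eps), 1.0 - eps)  # clip to avoid log(0)
    return -(label * math.log(p) + (1.0 - label) * math.log(1.0 - p))


# Generator step: the target is 1 ("real") for every generated image.
for d_out in (0.1, 0.5, 0.9):
    print(d_out, round(binary_crossentropy(1.0, d_out), 4))
# 0.1 2.3026
# 0.5 0.6931
# 0.9 0.1054
```

The more confidently the discriminator is fooled (output close to 1), the smaller the generator's loss. A discriminator output of 0.5, where it cannot tell real from fake, gives a loss of ln 2 ≈ 0.693.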

# Plot the loss recorded at the end of each epoch
def plotLoss(epoch):
    os.makedirs('images', exist_ok=True)  # savefig fails if the folder is missing
    plt.figure(figsize=(10, 8))
    plt.plot(dLosses, label='Discriminative loss')
    plt.plot(gLosses, label='Generative loss')
    plt.xlabel('Epoch')
    plt.ylabel('Loss')
    plt.legend()
    plt.savefig('images/gan_loss_epoch_%d.png' % epoch)

# Create a wall of generated MNIST images
def plotGeneratedImages(epoch, examples=100, dim=(10, 10), figsize=(10, 10)):
    os.makedirs('images', exist_ok=True)
    noise = np.random.normal(0, 1, size=[examples, randomDim])
    generatedImages = generator.predict(noise)
    generatedImages = generatedImages.reshape(examples, 28, 28)

    plt.figure(figsize=figsize)
    for i in range(generatedImages.shape[0]):
        plt.subplot(dim[0], dim[1], i+1)
        plt.imshow(generatedImages[i], interpolation='nearest', cmap='gray_r')
        plt.axis('off')
    plt.tight_layout()
    plt.savefig('images/gan_generated_image_epoch_%d.png' % epoch)

# Save the generator and discriminator networks (and weights) for later use
def saveModels(epoch):
    os.makedirs('models', exist_ok=True)
    generator.save('models/gan_generator_epoch_%d.h5' % epoch)
    discriminator.save('models/gan_discriminator_epoch_%d.h5' % epoch)

def train(epochs=1, batchSize=128):
    # Integer division: range() needs an int in Python 3
    batchCount = X_train.shape[0] // batchSize
    print('Epochs:', epochs)
    print('Batch size:', batchSize)
    print('Batches per epoch:', batchCount)

    for e in range(1, epochs+1):
        print('-'*15, 'Epoch %d' % e, '-'*15)
        for _ in tqdm(range(batchCount)):
            # Get a random set of input noise and images
            noise = np.random.normal(0, 1, size=[batchSize, randomDim])
            imageBatch = X_train[np.random.randint(0, X_train.shape[0], size=batchSize)]

            # Generate fake MNIST images
            generatedImages = generator.predict(noise)
            X = np.concatenate([imageBatch, generatedImages])

            # Labels for generated and real data: real images come first
            yDis = np.zeros(2*batchSize)
            # One-sided label smoothing: real images are labelled 0.9 instead of 1
            yDis[:batchSize] = 0.9

            # Train discriminator
            discriminator.trainable = True
            dloss = discriminator.train_on_batch(X, yDis)

            # Train generator: fakes are labelled as real, discriminator frozen
            noise = np.random.normal(0, 1, size=[batchSize, randomDim])
            yGen = np.ones(batchSize)
            discriminator.trainable = False
            gloss = gan.train_on_batch(noise, yGen)

        # Store loss of most recent batch from this epoch
        dLosses.append(dloss)
        gLosses.append(gloss)

        if e == 1 or e % 20 == 0:
            plotGeneratedImages(e)
            saveModels(e)

    # Plot losses from every epoch
    plotLoss(e)

train(200, 128)
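Two small details of the train function can be checked by hand: the number of batches per epoch, and the smoothed label vector handed to the discriminator. The sketch below reproduces both with plain lists; the helper name `discriminator_labels` is illustrative, and the real code builds the same labels with NumPy.

```python
def discriminator_labels(batch_size, real_label=0.9):
    """Labels for `batch_size` real images followed by as many fakes.

    Real images get 0.9 rather than 1.0 (one-sided label smoothing);
    generated images keep the label 0.
    """
    return [real_label] * batch_size + [0.0] * batch_size


batch_size = 128
batches_per_epoch = 60000 // batch_size  # range() requires an integer in Python 3
labels = discriminator_labels(batch_size)

print(batches_per_epoch)      # 468
print(len(labels))            # 256
print(labels[0], labels[-1])  # 0.9 0.0
```

Note that each batch of real images is drawn with `np.random.randint`, i.e. sampled with replacement, so an "epoch" here means 468 random batches rather than one exact pass over all 60,000 images.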
