Oracle RAC Cluster Environment Deployment

I. Pre-installation Preparation

(1) Linux system version

SUSE Linux Enterprise Server 11 (x86_64)

(2) Oracle Database and Grid installation packages

linux.x64_11gR2_database_1of2.zip

linux.x64_11gR2_database_2of2.zip

linux.x64_11gR2_grid.zip

(3) ASMLib installation packages

oracleasm-support-2.1.8-1.SLE11.x86_64.rpm

oracleasmlib-2.0.4-1.sle11.x86_64.rpm

oracleasm-2.0.5-7.37.3.x86_64.rpm

oracleasm-kmp-default-2.0.5_3.0.76_0.11-7.37.3.x86_64.rpm

(4) Dependency packages

gcc gcc-c++ gcc-32bit glibc-devel glibc-devel-32bit libaio libaio-devel libaio-devel-32bit libstdc++43-devel-32bit libstdc++43-devel sysstat libstdc++-devel libcap1 libcap1-32bit libcap2 libcap2-32bit compat* libgomp unixODBC unixODBC-devel

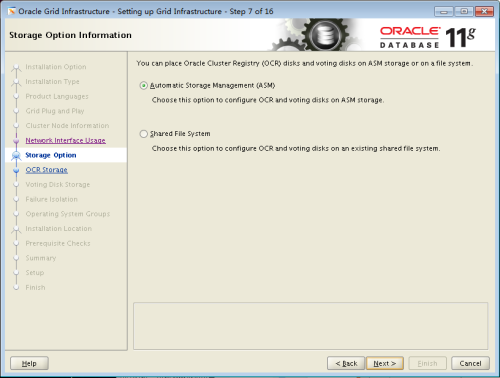

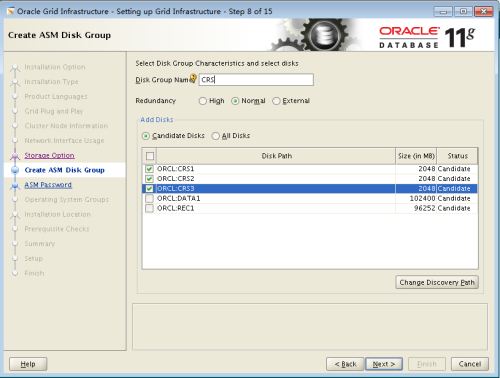

(5) Disk partitions

| Name | Partition | Capacity |
| --- | --- | --- |
| / | /dev/sda | 100G |
| Swap | Swap | 4G |
| CRS1 | /dev/sdb1 | 2G |
| CRS2 | /dev/sdb2 | 2G |
| CRS3 | /dev/sdb3 | 2G |
| DATA1 | /dev/sdb5 | 100G |
| REC1 | /dev/sdb6 | 100G |

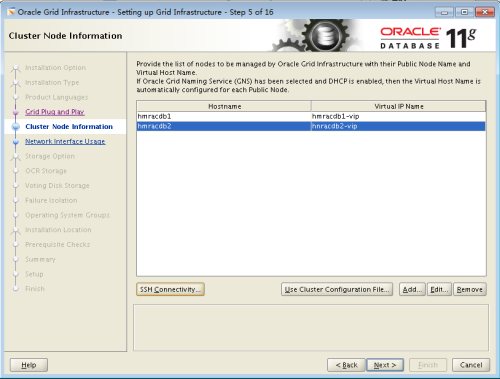

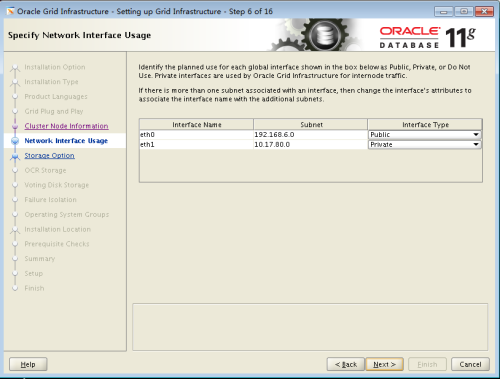

(6) IP地址划分

Hostname | Node name | IP | Device | Type |

hmracdb1 | hmracdb1 | 192.168.6.154 | eth1 | Public |

hmracdb1-vip | 192.168.6.54 | eth1 | VIP | |

hmracdb1-priv | 10.17.81.154 | eth0 | Private | |

hmracdb2 | hmracdb2 | 192.168.6.155 | eth1 | Public |

hmracdb2-vip | 192.168.6.55 | eth1 | VIP | |

hmracdb2-priv | 10.17.81.155 | eth0 | Private | |

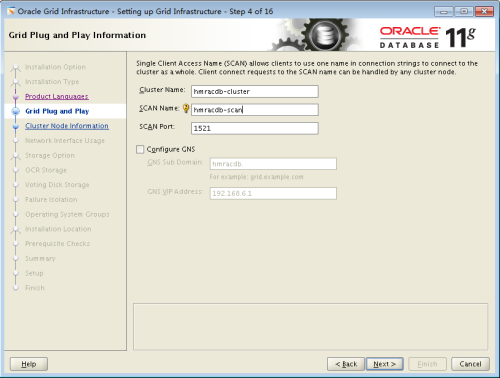

Rac-scan | hmracdb-scan | 192.168.6.66 |

II. System Environment Configuration

(1) Configure name resolution in /etc/hosts; both hosts use the same configuration

# public ip
192.168.6.154 hmracdb1
192.168.6.155 hmracdb2

# private ip
10.17.81.154 hmracdb1-priv
10.17.81.155 hmracdb2-priv

# vip
192.168.6.54 hmracdb1-vip
192.168.6.55 hmracdb2-vip

# scan ip
192.168.6.66 hmracdb-scan
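Before moving on, it can help to confirm that every name in the address plan resolves to the intended IP. The following is a minimal sketch, not part of the original procedure: `lookup_host` is a hypothetical helper that reads a hosts-format file and prints the IP mapped to a name. It ignores inline comments after an entry.

```shell
# lookup_host FILE NAME: print the IP that a hosts-format FILE maps NAME to.
# Hypothetical helper for illustration; prints nothing if NAME is absent.
lookup_host() {
  awk -v name="$2" '
    /^[ \t]*#/ { next }    # skip comment lines
    { for (i = 2; i <= NF; i++) if ($i == name) { print $1; exit } }
  ' "$1"
}
```

On each node you could then run, for example, `lookup_host /etc/hosts hmracdb-scan` and compare the result with the plan above.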

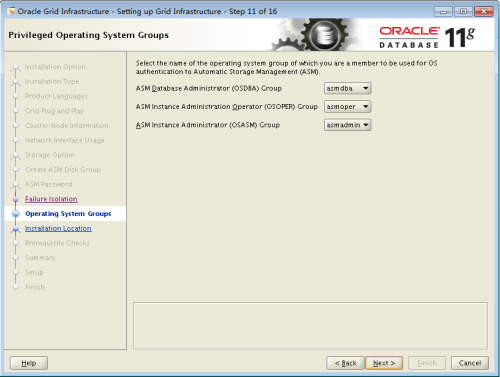

(2) Create the oracle and grid users and groups

1. Create the groups and users
/usr/sbin/groupadd -g 501 oinstall
/usr/sbin/groupadd -g 502 dba
/usr/sbin/groupadd -g 503 oper
/usr/sbin/groupadd -g 504 asmadmin
/usr/sbin/groupadd -g 505 asmdba
/usr/sbin/groupadd -g 506 asmoper
/usr/sbin/useradd -u 501 -g oinstall -G dba,oper,asmdba,asmadmin oracle -m
/usr/sbin/useradd -u 502 -g oinstall -G dba,asmadmin,asmdba,asmoper,oper grid -m

2. Set the passwords
echo oracle | passwd --stdin oracle
echo oracle | passwd --stdin grid

# id oracle
uid=501(oracle) gid=501(oinstall) groups=502(dba),503(oper),504(asmadmin),505(asmdba),501(oinstall)
# id grid
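Group membership is easy to get wrong when the `-G` list is long, so a scripted check of the `id` output can be useful. This is a small sketch under the assumption that `id` prints the usual `groups=gid(name),...` list; `has_group` is a hypothetical helper name.

```shell
# has_group ID_OUTPUT GROUP: succeed if GROUP appears in the groups= list
# of the given `id` output. Hypothetical helper for illustration.
has_group() {
  printf '%s\n' "$1" | sed 's/.*groups=//' | tr ',' '\n' | grep -qF "($2)"
}
```

For example, `has_group "$(id oracle)" asmdba && echo ok` could be run on both nodes for each group the user is expected to belong to.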

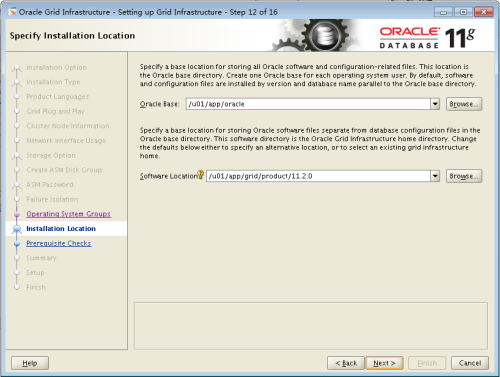

(3) Create the installation directories

mkdir -p /u01/app/{grid,oracle}
chown -R grid:oinstall /u01/
chown -R grid:oinstall /u01/app/grid/
chown -R oracle:oinstall /u01/app/oracle/
chmod -R 775 /u01/

ls -l /u01/app/
total 8
drwxrwxr-x 2 grid   oinstall 4096 Nov 16 19:09 grid
drwxrwxr-x 2 oracle oinstall 4096 Nov 16 19:09 oracle

(4) Configure environment variables for the grid and oracle users

1. Configure the grid user's profile on hmracdb1; ORACLE_SID is +ASM1 (use +ASM2 on hmracdb2)

export ORACLE_SID=+ASM1
export ORACLE_BASE=/u01/app/oracle
export ORACLE_HOME=/u01/app/grid/product/11.2.0
export PATH=$PATH:$ORACLE_HOME/bin
export TMP=/tmp
export TMPDIR=$TMP
export NLS_DATE_FORMAT="yyyy-mm-dd HH24:MI:SS"
export THREADS_FLAG=native
export CLASSPATH=$ORACLE_HOME/JRE:$ORACLE_HOME/jlib:$ORACLE_HOME/rdbms/jlib
export CVUQDISK_GRP=oinstall
if [ "$USER" = "oracle" ] || [ "$USER" = "grid" ]; then
  if [ "$SHELL" = "/bin/ksh" ]; then
    ulimit -p 16384
    ulimit -n 65536
  else
    ulimit -u 16384 -n 65536
  fi
  umask 022
fi

2. Configure the oracle user's profile on hmracdb1; ORACLE_SID is rac1 (use rac2 on hmracdb2)

export ORACLE_SID=rac1
export ORACLE_BASE=/u01/app/oracle
export ORACLE_HOME=$ORACLE_BASE/product/11.2.0
export PATH=$ORACLE_HOME/bin:/usr/sbin:$PATH
export TMP=/tmp
export TMPDIR=$TMP
export ORACLE_TERM=xterm
export LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib:/usr/lib64
export CLASSPATH=$ORACLE_HOME/JRE:$ORACLE_HOME/jlib:$ORACLE_HOME/rdbms/jlib
export NLS_DATE_FORMAT="yyyy-mm-dd HH24:MI:SS"
export NLS_LANG=AMERICAN_AMERICA.ZHS16GBK
if [ "$USER" = "oracle" ] || [ "$USER" = "grid" ]; then
  if [ "$SHELL" = "/bin/ksh" ]; then
    ulimit -p 16384
    ulimit -n 65536
  else
    ulimit -u 16384 -n 65536
  fi
  umask 022
fi
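The only per-node difference in the two profiles is the numeric suffix of ORACLE_SID. As a convenience, the suffix could be derived from the hostname so that the same profile file works on both nodes. This is only a sketch and assumes hostnames end in the node number, as hmracdb1 and hmracdb2 do; `node_sid` is a hypothetical helper, not something the Oracle installer provides.

```shell
# node_sid PREFIX HOSTNAME: append the trailing digits of HOSTNAME to PREFIX.
# Assumes HOSTNAME ends in digits; otherwise the whole name is appended.
node_sid() {
  n=$(printf '%s\n' "$2" | sed 's/^.*[^0-9]\([0-9][0-9]*\)$/\1/')
  printf '%s%s\n' "$1" "$n"
}
```

In the grid profile this might be used as `export ORACLE_SID=$(node_sid +ASM "$(hostname -s)")`, and with the prefix `rac` in the oracle profile.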

(5) Modify kernel parameters

1. Edit /etc/sysctl.conf
# vim /etc/sysctl.conf
kernel.shmmax = 68719476736
kernel.shmall = 4294967296
net.core.rmem_default = 262144
net.core.rmem_max = 4194304
net.core.wmem_default = 262144
net.core.wmem_max = 1048576
net.ipv4.ip_local_port_range = 9000 65500
fs.aio-max-nr = 1048576
fs.file-max = 6815744
kernel.sem = 250 32000 100 128
kernel.shmmni = 4096
# sysctl -p

2. Edit /etc/security/limits.conf
# vim /etc/security/limits.conf
oracle soft nproc 2047
oracle hard nproc 16384
oracle soft nofile 1024
oracle hard nofile 65536
grid soft nproc 2047
grid hard nproc 16384
grid soft nofile 1024
grid hard nofile 65536
# vim /etc/pam.d/login
session required pam_limits.so
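After `sysctl -p`, it is worth confirming that the running kernel really uses the values written to the file. The sketch below only covers the file side: `conf_get` is a hypothetical helper that extracts one key from a `key = value` style file so it can be compared with the live value.

```shell
# conf_get FILE KEY: print the value assigned to KEY in a sysctl.conf-style FILE.
# Hypothetical helper; surrounding whitespace is trimmed from key and value.
conf_get() {
  awk -F= -v key="$2" '
    /^[ \t]*#/ { next }
    {
      k = $1; v = $2
      gsub(/^[ \t]+|[ \t]+$/, "", k)
      gsub(/^[ \t]+|[ \t]+$/, "", v)
      if (k == key) print v
    }
  ' "$1"
}
```

A comparison might look like `[ "$(conf_get /etc/sysctl.conf kernel.shmmax)" = "$(sysctl -n kernel.shmmax)" ]`. Multi-value keys such as kernel.sem may be printed by `sysctl -n` with tabs, so their whitespace would need normalizing first.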

(6) Configure NTP time synchronization

1. Synchronize the time
# sntp -P no -r 10.10.0.2 && hwclock -w

2. Configure hmracdb1 as the upstream NTP server
hmracdb1:~ # vim /etc/ntp.conf
# Add the following settings
restrict hmracdb1 mask 255.255.255.0 nomodify notrap noquery
server hmracdb1
server 127.127.1.0

3. Configure the NTP service on hmracdb2
hmracdb2:~ # vim /etc/ntp.conf
server hmracdb1

4. On both nodes, change the following setting and restart the ntp service
# vim /etc/sysconfig/ntp
# Change the following setting
NTPD_OPTIONS="-x -g -u ntp:ntp"
# service ntp restart

(7) Configure SSH keys for the oracle and grid users

su - grid
grid@hmracdb1:~> ssh-keygen -t rsa
grid@hmracdb2:~> ssh-keygen -t rsa
grid@hmracdb1:~> ssh-copy-id -i ~/.ssh/id_rsa.pub grid@hmracdb1
grid@hmracdb1:~> ssh-copy-id -i ~/.ssh/id_rsa.pub grid@hmracdb2
grid@hmracdb2:~> ssh-copy-id -i ~/.ssh/id_rsa.pub grid@hmracdb1
grid@hmracdb2:~> ssh-copy-id -i ~/.ssh/id_rsa.pub grid@hmracdb2

su - oracle
oracle@hmracdb1:~> ssh-keygen -t rsa
oracle@hmracdb2:~> ssh-keygen -t rsa
oracle@hmracdb1:~> ssh-copy-id -i ~/.ssh/id_rsa.pub oracle@hmracdb1
oracle@hmracdb1:~> ssh-copy-id -i ~/.ssh/id_rsa.pub oracle@hmracdb2
oracle@hmracdb2:~> ssh-copy-id -i ~/.ssh/id_rsa.pub oracle@hmracdb1
oracle@hmracdb2:~> ssh-copy-id -i ~/.ssh/id_rsa.pub oracle@hmracdb2
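The installer needs password-less SSH from each node to every node, including itself. One way to verify this is to attempt a non-interactive login to each host. The following is a sketch only: `check_ssh` is a hypothetical helper, and the `SSH` variable exists purely so the command can be swapped out for testing. `BatchMode=yes` makes ssh fail instead of prompting for a password.

```shell
# check_ssh USER HOST...: report whether USER can log in to each HOST
# without a password prompt. Hypothetical helper for illustration.
check_ssh() {
  user=$1
  shift
  for host in "$@"; do
    if "${SSH:-ssh}" -o BatchMode=yes -o ConnectTimeout=5 "$user@$host" true 2>/dev/null; then
      echo "$user@$host ok"
    else
      echo "$user@$host FAILED"
    fi
  done
}
```

Run as the grid user and again as the oracle user on both nodes, for example `check_ssh grid hmracdb1 hmracdb2`. A host whose key has not yet been accepted into known_hosts may also show as FAILED until the first interactive login.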

III. Configure ASM Disks

(1) Install the ASM software (on both nodes)

# zypper in -y oracleasm oracleasm-kmp-default
# zypper in oracleasmlib-2.0.4-1.sle11.x86_64.rpm
# zypper in oracleasm-support-2.1.8-1.SLE11.x86_64.rpm

(2) Create the ASM partitions (only on node 1)

hmracdb1:~ # fdisk /dev/sdb
Device contains neither a valid DOS partition table, nor Sun, SGI or OSF disklabel
Building a new DOS disklabel with disk identifier 0xd60a0f97.
Changes will remain in memory only, until you decide to write them.
After that, of course, the previous content won't be recoverable.

Warning: invalid flag 0x0000 of partition table 4 will be corrected by w(rite)

Command (m for help): n
Command action
   e   extended
   p   primary partition (1-4)
p
Partition number (1-4, default 1):
Using default value 1
First sector (2048-419430399, default 2048):
Using default value 2048
Last sector, +sectors or +size{K,M,G} (2048-419430399, default 419430399): +2G

Command (m for help): n
Command action
   e   extended
   p   primary partition (1-4)
p
Partition number (1-4, default 2):
Using default value 2
First sector (4196352-419430399, default 4196352):
Using default value 4196352
Last sector, +sectors or +size{K,M,G} (4196352-419430399, default 419430399): +2G

Command (m for help): n
Command action
   e   extended
   p   primary partition (1-4)
p
Partition number (1-4, default 3):
Using default value 3
First sector (8390656-419430399, default 8390656):
Using default value 8390656
Last sector, +sectors or +size{K,M,G} (8390656-419430399, default 419430399): +2G

Command (m for help): n
Command action
   e   extended
   p   primary partition (1-4)
e
Selected partition 4
First sector (12584960-419430399, default 12584960):
Using default value 12584960
Last sector, +sectors or +size{K,M,G} (12584960-419430399, default 419430399):
Using default value 419430399

Command (m for help): n
First sector (12587008-419430399, default 12587008):
Using default value 12587008
Last sector, +sectors or +size{K,M,G} (12587008-419430399, default 419430399): +100G

Command (m for help): n
First sector (222304256-419430399, default 222304256):
Using default value 222304256
Last sector, +sectors or +size{K,M,G} (222304256-419430399, default 419430399):
Using default value 419430399

Command (m for help): p

Disk /dev/sdb: 214.7 GB, 214748364800 bytes
255 heads, 63 sectors/track, 26108 cylinders, total 419430400 sectors
Units = sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disk identifier: 0xd60a0f97

   Device Boot      Start         End      Blocks   Id  System
/dev/sdb1            2048     4196351     2097152   83  Linux
/dev/sdb2         4196352     8390655     2097152   83  Linux
/dev/sdb3         8390656    12584959     2097152   83  Linux
/dev/sdb4        12584960   419430399   203422720    5  Extended
/dev/sdb5        12587008   222302207   104857600   83  Linux
/dev/sdb6       222304256   419430398    98563071+  83  Linux

Command (m for help): w
The partition table has been altered!

Calling ioctl() to re-read partition table.
Syncing disks.

Check on node 2:

hmracdb2:/usr/local/src # fdisk -l /dev/sdb

Disk /dev/sdb: 214.7 GB, 214748364800 bytes
255 heads, 63 sectors/track, 26108 cylinders, total 419430400 sectors
Units = sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disk identifier: 0xd60a0f97

   Device Boot      Start         End      Blocks   Id  System
/dev/sdb1            2048     4196351     2097152   83  Linux
/dev/sdb2         4196352     8390655     2097152   83  Linux
/dev/sdb3         8390656    12584959     2097152   83  Linux
/dev/sdb4        12584960   419430399   203422720    5  Extended
/dev/sdb5        12587008   222302207   104857600   83  Linux
/dev/sdb6       222304256   419430398    98563071+  83  Linux
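The Start and End columns in the fdisk listing follow directly from the sizes that were typed in: with 512-byte sectors, a size of N GiB spans N * 1024^3 / 512 sectors, and the last sector is start + count - 1. The small sketch below reproduces that arithmetic; `end_sector` is a hypothetical helper, and it assumes 512-byte sectors as reported for this disk.

```shell
# end_sector START SIZE_GIB: last sector of a SIZE_GIB partition that begins
# at sector START, assuming 512-byte sectors.
end_sector() {
  echo $(( $1 + $2 * 1024 * 1024 * 1024 / 512 - 1 ))
}
```

For instance, `end_sector 2048 2` gives 4196351 (the End of /dev/sdb1) and `end_sector 12587008 100` gives 222302207 (the End of /dev/sdb5). Other fdisk versions or disks with different alignment may round differently.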

(3) Initialize the ASM configuration (run on both nodes)

1. Load the ASM kernel module

hmracdb1:~ # oracleasm init
Creating /dev/oracleasm mount point: /dev/oracleasm
Loading module "oracleasm": oracleasm
Configuring "oracleasm" to use device physical block size
Mounting ASMlib driver filesystem: /dev/oracleasm

2. Initial configuration

hmracdb1:~ # /etc/init.d/oracleasm configure
Configuring the Oracle ASM library driver.

This will configure the on-boot properties of the Oracle ASM library
driver. The following questions will determine whether the driver is
loaded on boot and what permissions it will have. The current values
will be shown in brackets ('[]'). Hitting <ENTER> without typing an
answer will keep that current value. Ctrl-C will abort.

Default user to own the driver interface []: grid
Default group to own the driver interface []: asmadmin
Start Oracle ASM library driver on boot (y/n) [n]: y
Scan for Oracle ASM disks on boot (y/n) [y]: y
Writing Oracle ASM library driver configuration: done
Initializing the Oracle ASMLib driver: done
Scanning the system for Oracle ASMLib disks: done

(4) Create the ASM disks (only on node 1)

1. Create the ASM disks on node 1
hmracdb1:~ # oracleasm listdisks
hmracdb1:~ # oracleasm createdisk CRS1 /dev/sdb1
Writing disk header: done
Instantiating disk: done
hmracdb1:~ # oracleasm createdisk CRS2 /dev/sdb2
Writing disk header: done
Instantiating disk: done
hmracdb1:~ # oracleasm createdisk CRS3 /dev/sdb3
Writing disk header: done
Instantiating disk: done
hmracdb1:~ # oracleasm createdisk DATA1 /dev/sdb5
Writing disk header: done
Instantiating disk: done
hmracdb1:~ # oracleasm createdisk REC1 /dev/sdb6
Writing disk header: done
Instantiating disk: done
hmracdb1:~ # oracleasm listdisks
CRS1
CRS2
CRS3
DATA1
REC1

2. Scan for the shared ASM disks on node 2
hmracdb2:~ # oracleasm scandisks
Reloading disk partitions: done
Cleaning any stale ASM disks...
Scanning system for ASM disks...
Instantiating disk "CRS1"
Instantiating disk "CRS2"
Instantiating disk "CRS3"
Instantiating disk "DATA1"
Instantiating disk "REC1"
hmracdb2:~ # oracleasm listdisks
CRS1
CRS2
CRS3
DATA1
REC1

3. Check the ASM disk paths
hmracdb2:~ # oracleasm querydisk /dev/sdb*
Device "/dev/sdb" is not marked as an ASM disk
Device "/dev/sdb1" is marked an ASM disk with the label "CRS1"
Device "/dev/sdb2" is marked an ASM disk with the label "CRS2"
Device "/dev/sdb3" is marked an ASM disk with the label "CRS3"
Device "/dev/sdb4" is not marked as an ASM disk
Device "/dev/sdb5" is marked an ASM disk with the label "DATA1"
Device "/dev/sdb6" is marked an ASM disk with the label "REC1"
hmracdb2:~ # ll /dev/oracleasm/disks/
total 0
brw-rw---- 1 grid asmadmin 8, 17 Nov 21 14:19 CRS1
brw-rw---- 1 grid asmadmin 8, 18 Nov 21 14:19 CRS2
brw-rw---- 1 grid asmadmin 8, 19 Nov 21 14:19 CRS3
brw-rw---- 1 grid asmadmin 8, 21 Nov 21 14:19 DATA1
brw-rw---- 1 grid asmadmin 8, 22 Nov 21 14:19 REC1
hmracdb2:~ # ll -ltr /dev | grep "8, *17"
brw-rw---- 1 root disk 8, 17 Nov 21 14:19 sdb1
hmracdb2:~ # ll -ltr /dev | grep "8, *18"
brw-rw---- 1 root disk 8, 18 Nov 21 14:19 sdb2
hmracdb2:~ # ll -ltr /dev | grep "8, *19"
brw-rw---- 1 root disk 8, 19 Nov 21 14:19 sdb3
hmracdb2:~ # ll -ltr /dev | grep "8, *21"
brw-rw---- 1 root disk 8, 21 Nov 21 14:19 sdb5
hmracdb2:~ # ll -ltr /dev | grep "8, *22"
brw-rw---- 1 root disk 8, 22 Nov 21 14:19 sdb6
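Matching major and minor numbers by eye works, but the device-to-label mapping can also be read straight from the `oracleasm querydisk` output. This is a minimal sketch that assumes the message format shown in the session above; `asm_labels` is a hypothetical helper.

```shell
# asm_labels: read `oracleasm querydisk` output on stdin and print one
# "DEVICE LABEL" pair per device that is marked as an ASM disk.
asm_labels() {
  awk -F'"' '/with the label/ { print $2, $4 }'
}
```

Used as `oracleasm querydisk /dev/sdb* | asm_labels`, it would list pairs such as `/dev/sdb1 CRS1`, which can be compared between the two nodes.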

(5) Install the Oracle dependency packages

hmracdb1:~ # for i in "gcc gcc-c++ gcc-32bit glibc-devel glibc-devel-32bit libaio libaio-devel libaio-devel-32bit libstdc++43-devel-32bit libstdc++43-devel sysstat libstdc++-devel libcap1 libcap1-32bit libcap2 libcap2-32bit compat* libgomp unixODBC unixODBC-devel"; do rpm -q $i; done
hmracdb1:~ # zypper in gcc gcc-c++ gcc-32bit glibc-devel glibc-devel-32bit libaio libaio-devel libaio-devel-32bit libstdc++43-devel-32bit libstdc++43-devel sysstat libstdc++-devel libcap1 libcap1-32bit libcap2 libcap2-32bit compat* libgomp unixODBC unixODBC-devel
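With this many packages, the `rpm -q` output is easier to act on if it is reduced to just the missing names. The sketch below relies on the `package NAME is not installed` message that `rpm -q` prints for absent packages; `missing_pkgs` is a hypothetical helper name.

```shell
# missing_pkgs: read `rpm -q` output on stdin and print only the names
# that rpm reports as not installed.
missing_pkgs() {
  sed -n 's/^package \(.*\) is not installed$/\1/p'
}
```

For example, `rpm -q gcc libaio-devel unixODBC | missing_pkgs` prints nothing when everything is present, and the remaining names could be passed to `zypper in`.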

(6) Install the cvuqdisk package

Unpack the grid software archive and install cvuqdisk-1.0.7-1.rpm from the grid directory
hmracdb1:/usr/local/src # cd grid/rpm/
hmracdb1:/usr/local/src/grid/rpm # ls
cvuqdisk-1.0.7-1.rpm
hmracdb1:/usr/local/src/grid/rpm # rpm -ivh cvuqdisk-1.0.7-1.rpm

(7) Configure nslookup

1. On node 1

hmracdb1:~ # mv /usr/bin/nslookup /usr/bin/nslookup.original
hmracdb1:~ # vim /usr/bin/nslookup
#!/bin/bash
HOSTNAME=${1}
if [[ $HOSTNAME = "hmracdb-scan" ]]; then
    echo "Server: 192.168.6.54 "
    echo "Address: 192.168.6.54 #53"
    echo "Non-authoritative answer:"
    echo "Name: hmracdb-scan"
    echo "Address: 192.168.6.66 "
else
    /usr/bin/nslookup.original $HOSTNAME
fi
hmracdb1:~ # chmod 755 /usr/bin/nslookup

2. On node 2

hmracdb2:~ # mv /usr/bin/nslookup /usr/bin/nslookup.original
hmracdb2:~ # vim /usr/bin/nslookup
#!/bin/bash
HOSTNAME=${1}
if [[ $HOSTNAME = "hmracdb-scan" ]]; then
    echo "Server: 192.168.6.55 "
    echo "Address: 192.168.6.55 #53"
    echo "Non-authoritative answer:"
    echo "Name: hmracdb-scan"
    echo "Address: 192.168.6.66 "
else
    /usr/bin/nslookup.original $HOSTNAME
fi
hmracdb2:~ # chmod 755 /usr/bin/nslookup

3. Test

hmracdb1:~ # nslookup hmracdb-scan

Server: 192.168.6.54

Address: 192.168.6.54 #53

Non-authoritative answer:

Name: hmracdb-scan

Address: 192.168.6.66

(8) Grid installation prerequisite check

1. Change the owner to the grid user
hmracdb1:/usr/local/src # chown -R grid.grid
2. Run the installation prerequisite check as the grid user
grid@hmracdb1:/usr/local/src/grid> ./runcluvfy.sh stage -pre crsinst -n hmracdb1,hmracdb2 -fixup -verbose

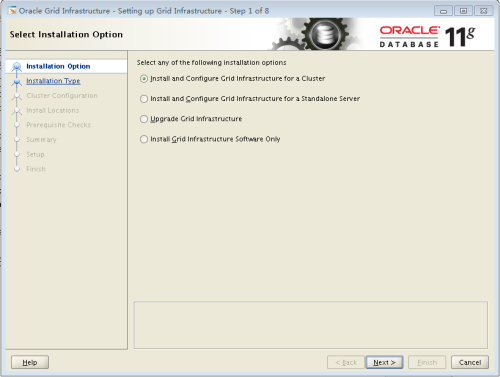

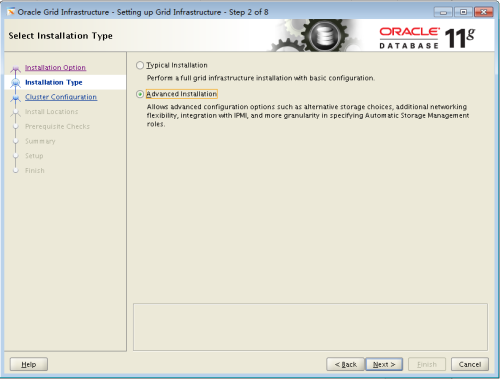

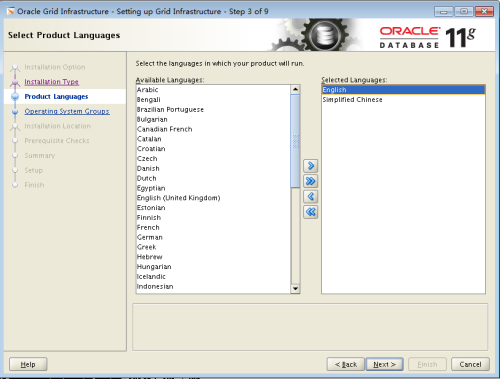

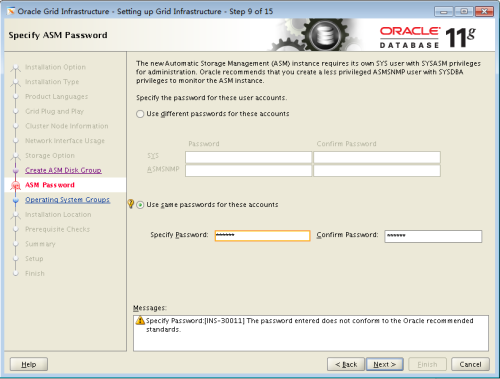

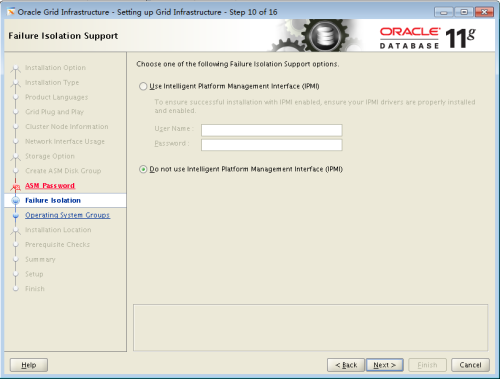

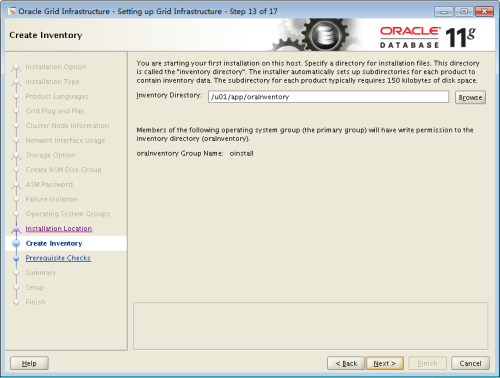

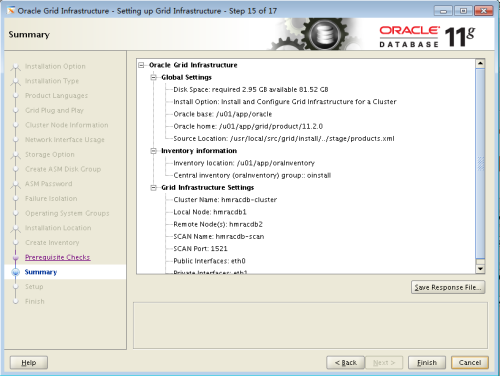

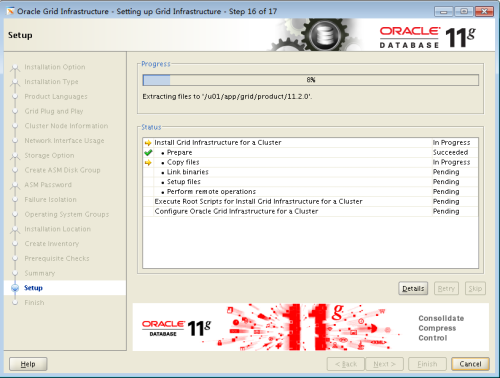

IV. Install Grid Infrastructure Using the GUI

Installation methods:

Method 1: Log in to the server's graphical desktop with VNC and install from there

Method 2: Use Xmanager to display the installer GUI

ln -s /usr/bin/env /bin/
grid@hmracdb1:/usr/local/src/grid> export DISPLAY=10.18.221.155:0.0
grid@hmracdb1:/usr/local/src/grid> xhost +
access control disabled, clients can connect from any host
grid@hmracdb1:/usr/local/src/grid> ./runInstaller
Starting Oracle Universal Installer...
Checking Temp space: must be greater than 120 MB.   Actual 83913 MB    Passed
Checking swap space: must be greater than 150 MB.   Actual 4094 MB    Passed
Checking monitor: must be configured to display at least 256 colors.    Actual 16777216    Passed
Preparing to launch Oracle Universal Installer from /tmp/OraInstall2016-11-22_09-16-06AM. Please wait ...
grid@hmracdb1:/usr/local/src/grid>

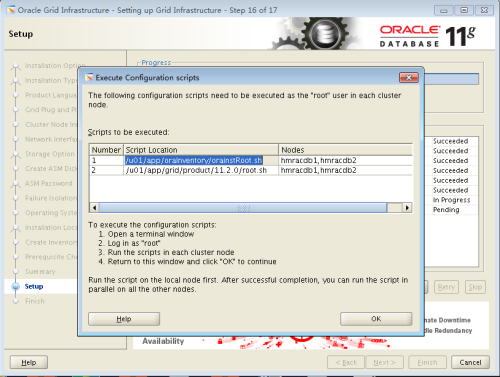

Installation steps:

Run the scripts

Run the first script first (once it succeeds on node 1, run it on node 2), then run the second script
hmracdb1:~ # /u01/app/grid/product/11.2.0/root.sh
Running Oracle 11g root.sh script...
The following environment variables are set as:
    ORACLE_OWNER= grid
    ORACLE_HOME=  /u01/app/grid/product/11.2.0
Enter the full pathname of the local bin directory: [/usr/local/bin]:
   Copying dbhome to /usr/local/bin ...
   Copying oraenv to /usr/local/bin ...
   Copying coraenv to /usr/local/bin ...
Creating /etc/oratab file...
Entries will be added to the /etc/oratab file as needed by
Database Configuration Assistant when a database is created
Finished running generic part of root.sh script.
Now product-specific root actions will be performed.
2016-11-22 14:18:59: Parsing the host name
2016-11-22 14:18:59: Checking for super user privileges
2016-11-22 14:18:59: User has super user privileges
Using configuration parameter file: /u01/app/grid/product/11.2.0/crs/install/crsconfig_params
Creating trace directory
LOCAL ADD MODE
Creating OCR keys for user 'root', privgrp 'root'..
Operation successful.
  root wallet
  root wallet cert
  root cert export
  peer wallet
  profile reader wallet
  pa wallet
  peer wallet keys
  pa wallet keys
  peer cert request
  pa cert request
  peer cert
  pa cert
  peer root cert TP
  profile reader root cert TP
  pa root cert TP
  peer pa cert TP
  pa peer cert TP
  profile reader pa cert TP
  profile reader peer cert TP
  peer user cert
  pa user cert
Adding daemon to inittab
CRS-4123: Oracle High Availability Services has been started.
ohasd is starting
ADVM/ACFS is not supported on lsb-release-2.0-1.2.18
CRS-2672: Attempting to start 'ora.gipcd' on 'hmracdb1'
CRS-2672: Attempting to start 'ora.mdnsd' on 'hmracdb1'
CRS-2676: Start of 'ora.mdnsd' on 'hmracdb1' succeeded
CRS-2676: Start of 'ora.gipcd' on 'hmracdb1' succeeded
CRS-2672: Attempting to start 'ora.gpnpd' on 'hmracdb1'
CRS-2676: Start of 'ora.gpnpd' on 'hmracdb1' succeeded
CRS-2672: Attempting to start 'ora.cssdmonitor' on 'hmracdb1'
CRS-2676: Start of 'ora.cssdmonitor' on 'hmracdb1' succeeded
CRS-2672: Attempting to start 'ora.cssd' on 'hmracdb1'
CRS-2672: Attempting to start 'ora.diskmon' on 'hmracdb1'
CRS-2676: Start of 'ora.diskmon' on 'hmracdb1' succeeded
CRS-2676: Start of 'ora.cssd' on 'hmracdb1' succeeded
CRS-2672: Attempting to start 'ora.ctssd' on 'hmracdb1'
CRS-2676: Start of 'ora.ctssd' on 'hmracdb1' succeeded
ASM created and started successfully.
DiskGroup CRS created successfully.
clscfg: -install mode specified
Successfully accumulated necessary OCR keys.
Creating OCR keys for user 'root', privgrp 'root'..
Operation successful.
CRS-2672: Attempting to start 'ora.crsd' on 'hmracdb1'
CRS-2676: Start of 'ora.crsd' on 'hmracdb1' succeeded
CRS-4256: Updating the profile
Successful addition of voting disk 0cabca1797104f01bfc740460efd9665.
Successful addition of voting disk 66791219f9f14fc4bf1bb8c8b6bdda6a.
Successful addition of voting disk 22e396e1b1594ff3bf6f516303e17f28.
Successfully replaced voting disk group with +CRS.
CRS-4256: Updating the profile
CRS-4266: Voting file(s) successfully replaced
##  STATE    File Universal Id                File Name Disk group
--  -----    -----------------                --------- ---------
 1. ONLINE   0cabca1797104f01bfc740460efd9665 (ORCL:CRS1) [CRS]
 2. ONLINE   66791219f9f14fc4bf1bb8c8b6bdda6a (ORCL:CRS2) [CRS]
 3. ONLINE   22e396e1b1594ff3bf6f516303e17f28 (ORCL:CRS3) [CRS]
Located 3 voting disk(s).
CRS-2673: Attempting to stop 'ora.crsd' on 'hmracdb1'
CRS-2677: Stop of 'ora.crsd' on 'hmracdb1' succeeded
CRS-2673: Attempting to stop 'ora.asm' on 'hmracdb1'
CRS-2677: Stop of 'ora.asm' on 'hmracdb1' succeeded
CRS-2673: Attempting to stop 'ora.ctssd' on 'hmracdb1'
CRS-2677: Stop of 'ora.ctssd' on 'hmracdb1' succeeded
CRS-2673: Attempting to stop 'ora.cssdmonitor' on 'hmracdb1'
CRS-2677: Stop of 'ora.cssdmonitor' on 'hmracdb1' succeeded
CRS-2673: Attempting to stop 'ora.cssd' on 'hmracdb1'
CRS-2677: Stop of 'ora.cssd' on 'hmracdb1' succeeded
CRS-2673: Attempting to stop 'ora.gpnpd' on 'hmracdb1'
CRS-2677: Stop of 'ora.gpnpd' on 'hmracdb1' succeeded
CRS-2673: Attempting to stop 'ora.gipcd' on 'hmracdb1'
CRS-2677: Stop of 'ora.gipcd' on 'hmracdb1' succeeded
CRS-2673: Attempting to stop 'ora.mdnsd' on 'hmracdb1'
CRS-2677: Stop of 'ora.mdnsd' on 'hmracdb1' succeeded
CRS-2672: Attempting to start 'ora.mdnsd' on 'hmracdb1'
CRS-2676: Start of 'ora.mdnsd' on 'hmracdb1' succeeded
CRS-2672: Attempting to start 'ora.gipcd' on 'hmracdb1'
CRS-2676: Start of 'ora.gipcd' on 'hmracdb1' succeeded
CRS-2672: Attempting to start 'ora.gpnpd' on 'hmracdb1'
CRS-2676: Start of 'ora.gpnpd' on 'hmracdb1' succeeded
CRS-2672: Attempting to start 'ora.cssdmonitor' on 'hmracdb1'
CRS-2676: Start of 'ora.cssdmonitor' on 'hmracdb1' succeeded
CRS-2672: Attempting to start 'ora.cssd' on 'hmracdb1'
CRS-2672: Attempting to start 'ora.diskmon' on 'hmracdb1'
CRS-2676: Start of 'ora.diskmon' on 'hmracdb1' succeeded
CRS-2676: Start of 'ora.cssd' on 'hmracdb1' succeeded
CRS-2672: Attempting to start 'ora.ctssd' on 'hmracdb1'
CRS-2676: Start of 'ora.ctssd' on 'hmracdb1' succeeded
CRS-2672: Attempting to start 'ora.asm' on 'hmracdb1'
CRS-2676: Start of 'ora.asm' on 'hmracdb1' succeeded
CRS-2672: Attempting to start 'ora.crsd' on 'hmracdb1'
CRS-2676: Start of 'ora.crsd' on 'hmracdb1' succeeded
CRS-2672: Attempting to start 'ora.evmd' on 'hmracdb1'
CRS-2676: Start of 'ora.evmd' on 'hmracdb1' succeeded
CRS-2672: Attempting to start 'ora.asm' on 'hmracdb1'
CRS-2676: Start of 'ora.asm' on 'hmracdb1' succeeded
CRS-2672: Attempting to start 'ora.CRS.dg' on 'hmracdb1'
CRS-2676: Start of 'ora.CRS.dg' on 'hmracdb1' succeeded
hmracdb1 2016/11/22 14:25:57 /u01/app/grid/product/11.2.0/cdata/hmracdb1/backup_20161122_142557.olr
Configure Oracle Grid Infrastructure for a Cluster ... succeeded
Updating inventory properties for clusterware
Starting Oracle Universal Installer...
Checking swap space: must be greater than 500 MB.   Actual 4094 MB    Passed
The inventory pointer is located at /etc/oraInst.loc
The inventory is located at /u01/app/oraInventory
'UpdateNodeList' was successful.

V. Post-installation Checks

(1) Check CRS resource status

[Node 1] crs_stat -t -v or crsctl status resource -t
hmracdb1:~ # su - grid
grid@hmracdb1:~> crsctl status resource -t
--------------------------------------------------------------------------------
NAME           TARGET  STATE        SERVER                   STATE_DETAILS
--------------------------------------------------------------------------------
Local Resources
--------------------------------------------------------------------------------
ora.CRS.dg
               ONLINE  ONLINE       hmracdb1
               ONLINE  ONLINE       hmracdb2
ora.LISTENER.lsnr
               ONLINE  ONLINE       hmracdb1
               ONLINE  ONLINE       hmracdb2
ora.asm
               ONLINE  ONLINE       hmracdb1                 Started
               ONLINE  ONLINE       hmracdb2                 Started
ora.eons
               ONLINE  ONLINE       hmracdb1
               ONLINE  ONLINE       hmracdb2
ora.gsd
               OFFLINE OFFLINE      hmracdb1
               OFFLINE OFFLINE      hmracdb2
ora.net1.network
               ONLINE  ONLINE       hmracdb1
               ONLINE  ONLINE       hmracdb2
ora.ons
               ONLINE  ONLINE       hmracdb1
               ONLINE  ONLINE       hmracdb2
--------------------------------------------------------------------------------
Cluster Resources
--------------------------------------------------------------------------------
ora.LISTENER_SCAN1.lsnr
      1        ONLINE  ONLINE       hmracdb1
ora.hmracdb1.vip
      1        ONLINE  ONLINE       hmracdb1
ora.hmracdb2.vip
      1        ONLINE  ONLINE       hmracdb2
ora.oc4j
      1        OFFLINE OFFLINE
ora.scan1.vip
      1        ONLINE  ONLINE       hmracdb1

[Node 2]
hmracdb2:~ # su - grid
grid@hmracdb2:~> crsctl status resource -t
--------------------------------------------------------------------------------
NAME           TARGET  STATE        SERVER                   STATE_DETAILS
--------------------------------------------------------------------------------
Local Resources
--------------------------------------------------------------------------------
ora.CRS.dg
               ONLINE  ONLINE       hmracdb1
               ONLINE  ONLINE       hmracdb2
ora.LISTENER.lsnr
               ONLINE  ONLINE       hmracdb1
               ONLINE  ONLINE       hmracdb2
ora.asm
               ONLINE  ONLINE       hmracdb1                 Started
               ONLINE  ONLINE       hmracdb2                 Started
ora.eons
               ONLINE  ONLINE       hmracdb1
               ONLINE  ONLINE       hmracdb2
ora.gsd
               OFFLINE OFFLINE      hmracdb1
               OFFLINE OFFLINE      hmracdb2
ora.net1.network
               ONLINE  ONLINE       hmracdb1
               ONLINE  ONLINE       hmracdb2
ora.ons
               ONLINE  ONLINE       hmracdb1
               ONLINE  ONLINE       hmracdb2
--------------------------------------------------------------------------------
Cluster Resources
--------------------------------------------------------------------------------
ora.LISTENER_SCAN1.lsnr
      1        ONLINE  ONLINE       hmracdb1
ora.hmracdb1.vip
      1        ONLINE  ONLINE       hmracdb1
ora.hmracdb2.vip
      1        ONLINE  ONLINE       hmracdb2
ora.oc4j
      1        OFFLINE OFFLINE
ora.scan1.vip
      1        ONLINE  ONLINE       hmracdb1
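In a listing this long, the interesting rows are the OFFLINE ones. The following sketch filters the `crsctl status resource -t` output down to the names of resources that have at least one OFFLINE row. It assumes the tabular layout shown above, where each resource name starts a line with `ora.` and its state rows follow; `offline_resources` is a hypothetical helper.

```shell
# offline_resources: read `crsctl status resource -t` output on stdin and
# print each resource name that has at least one OFFLINE row.
offline_resources() {
  awk '
    /^ora\./ { res = $1; next }    # remember the current resource name
    /OFFLINE/ && res != "" && !seen[res]++ { print res }
  '
}
```

With the output above, `crsctl status resource -t | offline_resources` would be expected to list only ora.gsd and ora.oc4j, which matches the note later in this article that those two do not need to run by default.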

(2) Check listener status

grid@hmracdb1:~> lsnrctl status

LSNRCTL for Linux: Version 11.2.0.1.0 - Production on 22-NOV-2016 17:47:35

Copyright (c) 1991, 2009, Oracle. All rights reserved.

Connecting to (DESCRIPTION=(ADDRESS=(PROTOCOL=IPC)(KEY=LISTENER)))
STATUS of the LISTENER
------------------------
Alias                     LISTENER
Version                   TNSLSNR for Linux: Version 11.2.0.1.0 - Production
Start Date                22-NOV-2016 17:31:00
Uptime                    0 days 0 hr. 16 min. 35 sec
Trace Level               off
Security                  ON: Local OS Authentication
SNMP                      OFF
Listener Parameter File   /u01/app/grid/product/11.2.0/network/admin/listener.ora
Listener Log File         /u01/app/oracle/diag/tnslsnr/hmracdb1/listener/alert/log.xml
Listening Endpoints Summary...
  (DESCRIPTION=(ADDRESS=(PROTOCOL=ipc)(KEY=LISTENER)))
  (DESCRIPTION=(ADDRESS=(PROTOCOL=tcp)(HOST=192.168.6.154)(PORT=1521)))
  (DESCRIPTION=(ADDRESS=(PROTOCOL=tcp)(HOST=192.168.6.54)(PORT=1521)))
Services Summary...
Service "+ASM" has 1 instance(s).
  Instance "+ASM1", status READY, has 1 handler(s) for this service...
The command completed successfully

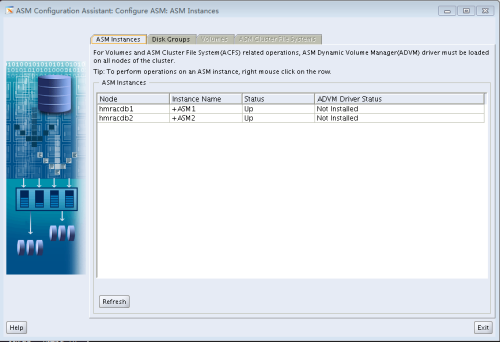

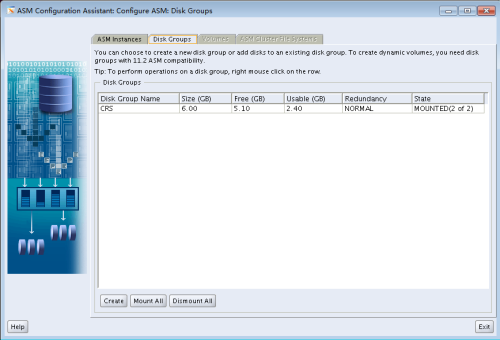

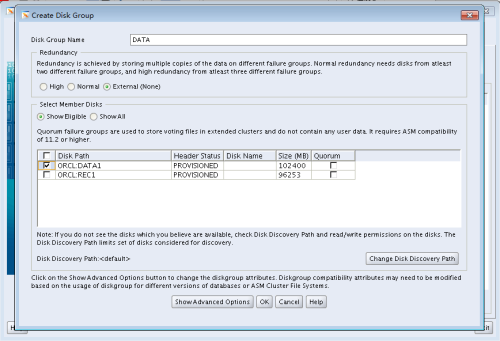

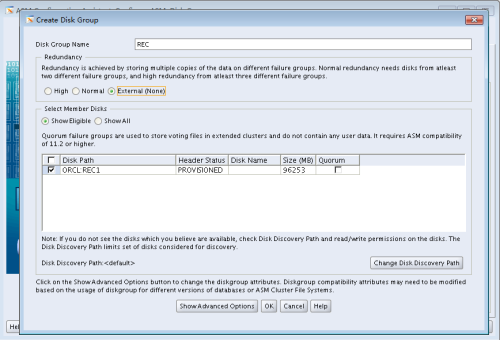

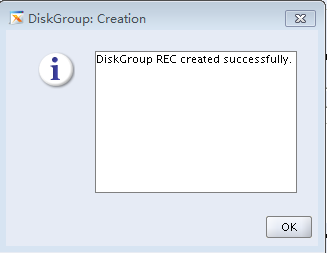

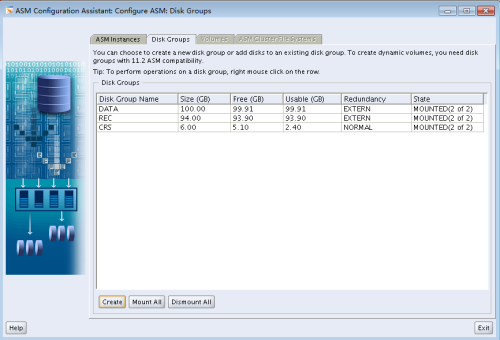

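VI. Create ASM Disk Groups (asmca)

Create ASM disk groups for the data area and the fast recovery area

DATA --- > /dev/oracleasm/disks/DATA1

REC --- > /dev/oracleasm/disks/REC1

Run asmca as the grid user

Create the REC disk group in the same way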

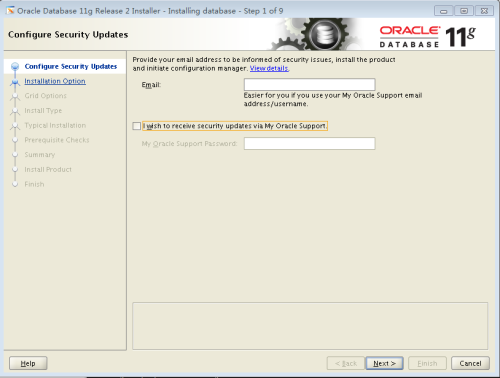

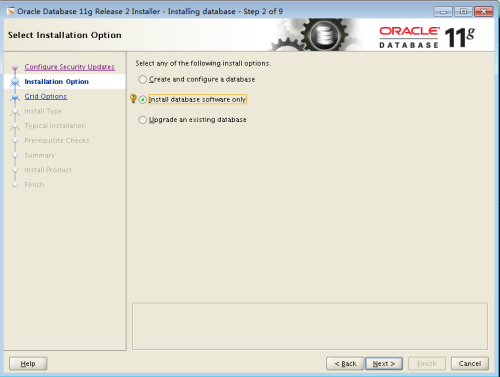

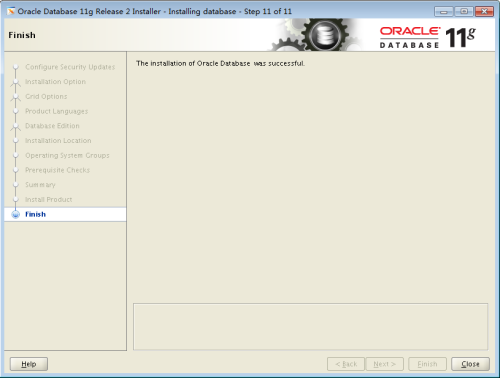

VII. Oracle Database Software Installation

(1) Unpack the database software

hmracdb1:/usr/local/src # unzip linux.x64_11gR2_database_1of2.zip
hmracdb1:/usr/local/src # unzip linux.x64_11gR2_database_2of2.zip
hmracdb1:/usr/local/src # chown -R oracle. database

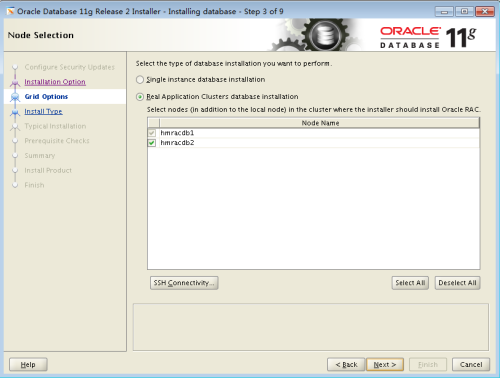

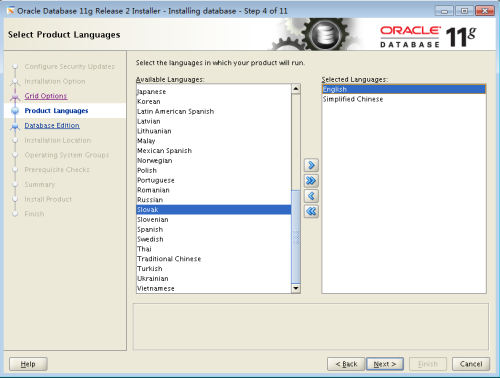

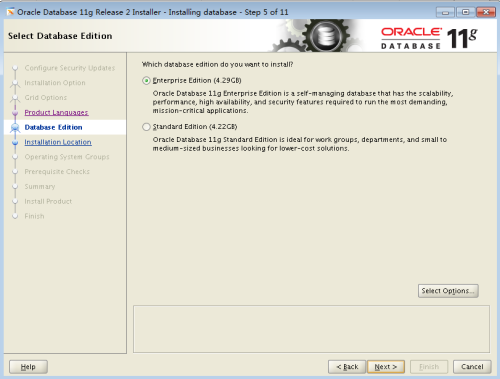

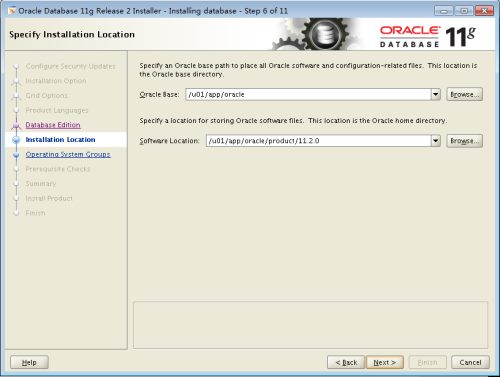

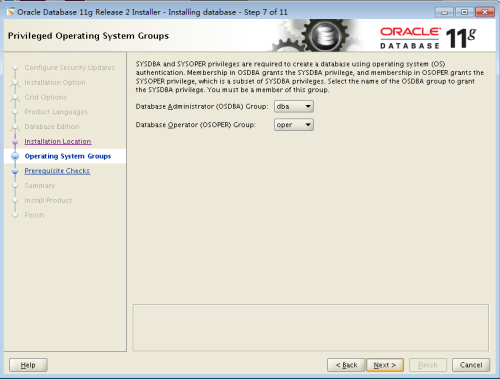

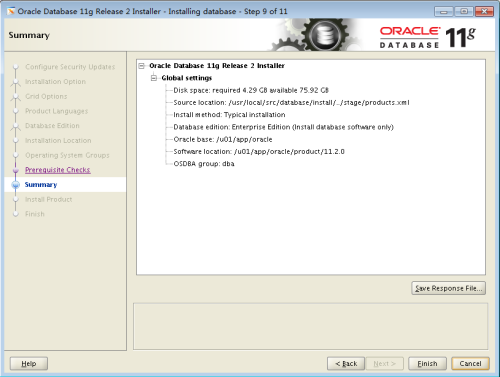

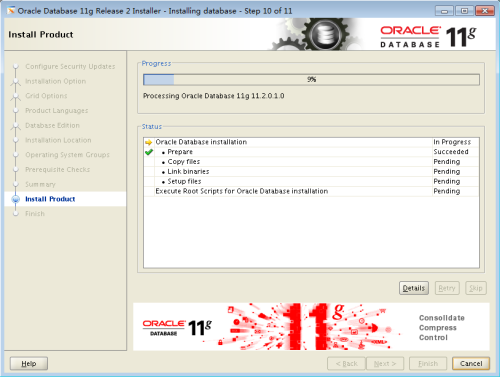

(2) Graphical installation

oracle@hmracdb1:/usr/local/src/database> ./runInstaller

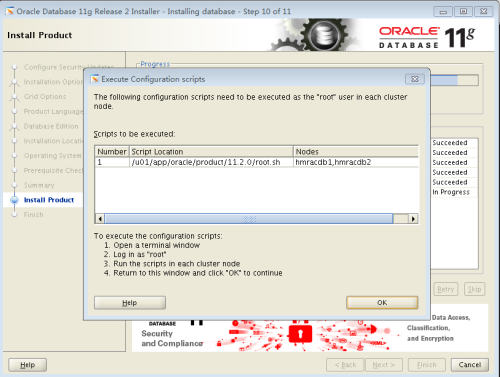

Run the script

hmracdb1:~ # /u01/app/oracle/product/11.2.0/root.sh
Running Oracle 11g root.sh script...
The following environment variables are set as:
    ORACLE_OWNER= oracle
    ORACLE_HOME=  /u01/app/oracle/product/11.2.0
Enter the full pathname of the local bin directory: [/usr/local/bin]:
The file "dbhome" already exists in /usr/local/bin. Overwrite it? (y/n) [n]: n
The file "oraenv" already exists in /usr/local/bin. Overwrite it? (y/n) [n]: n
The file "coraenv" already exists in /usr/local/bin. Overwrite it? (y/n) [n]: n
Entries will be added to the /etc/oratab file as needed by
Database Configuration Assistant when a database is created
Finished running generic part of root.sh script.
Now product-specific root actions will be performed.
Finished product-specific root actions.

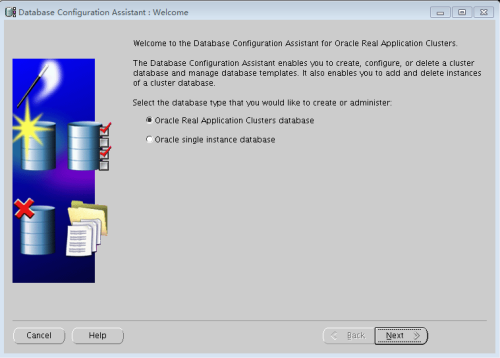

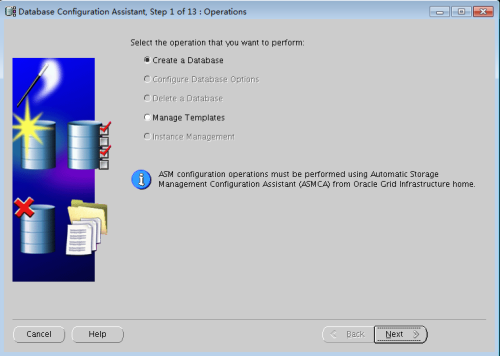

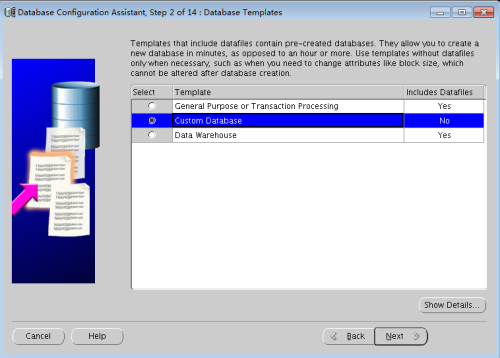

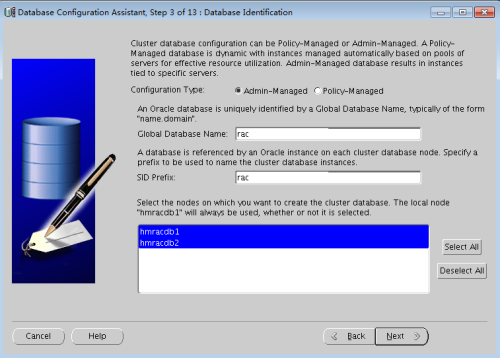

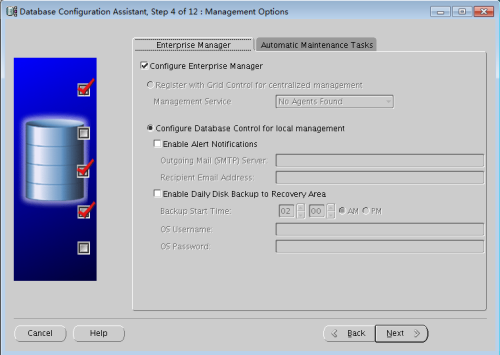

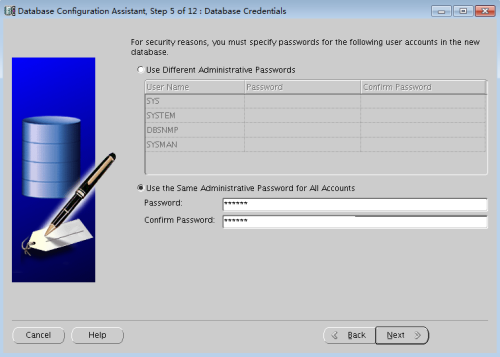

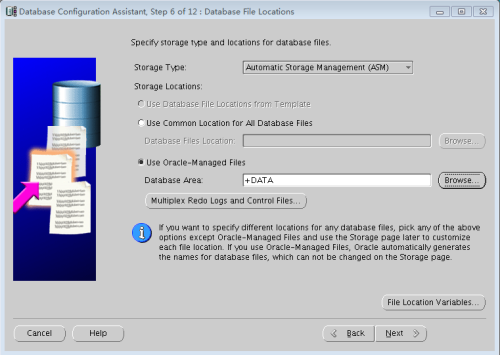

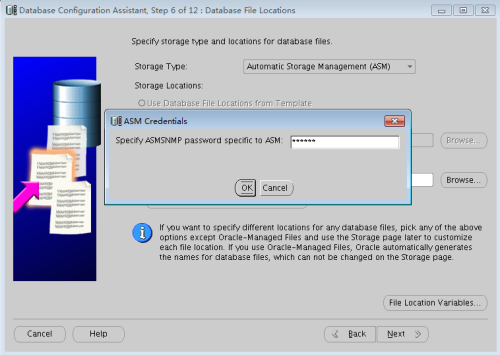

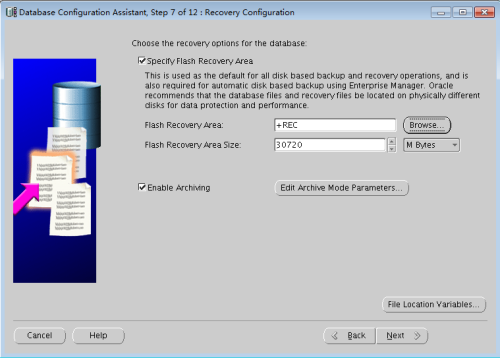

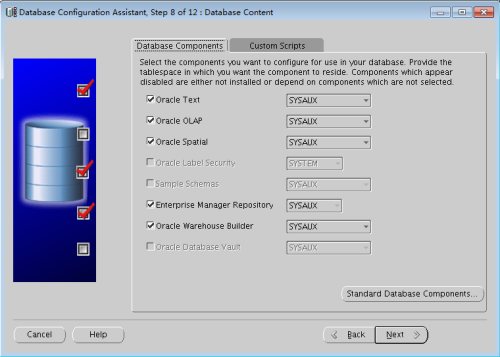

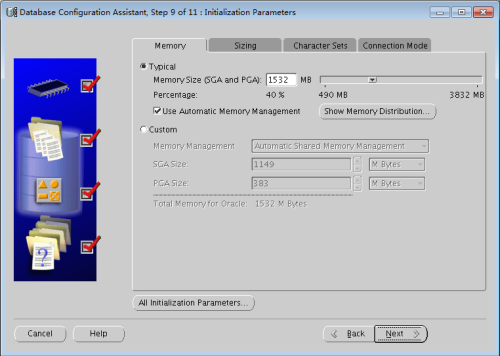

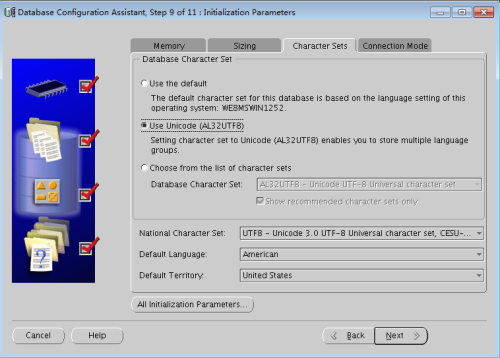

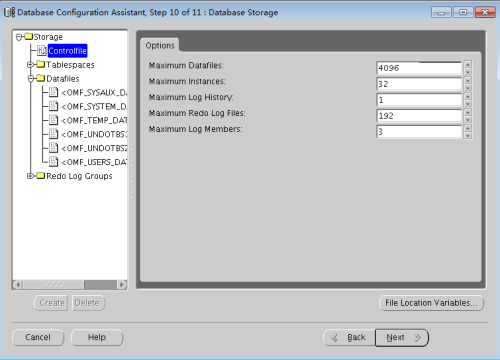

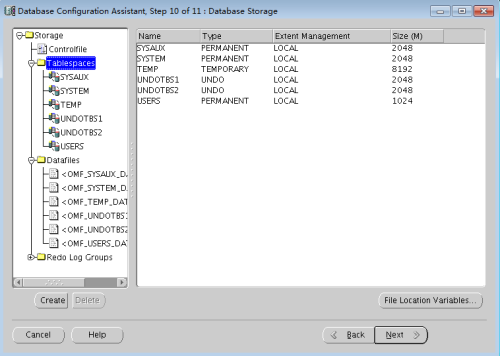

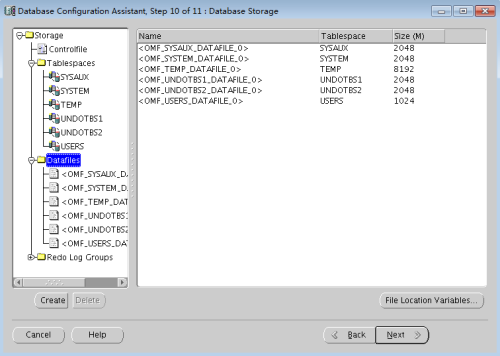

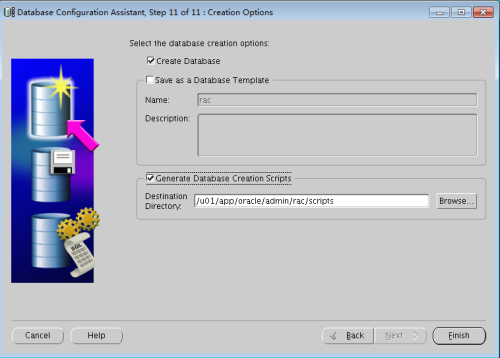

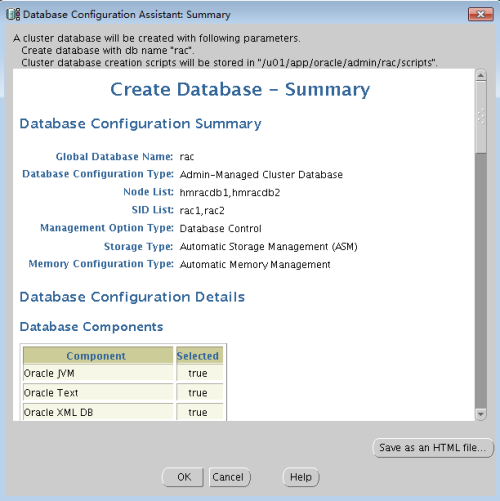

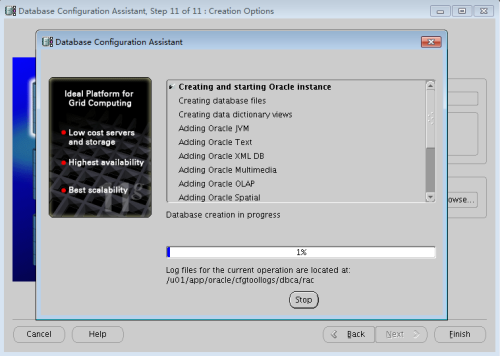

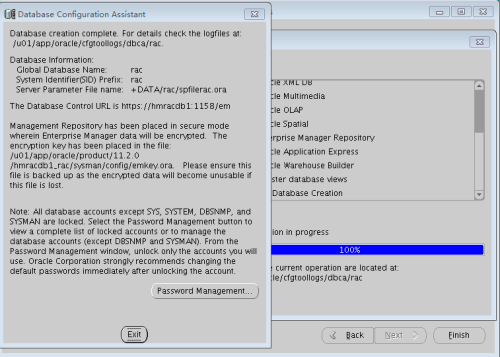

VIII. Create the Database Instance (dbca)

oracle@hmracdb1:~> dbca

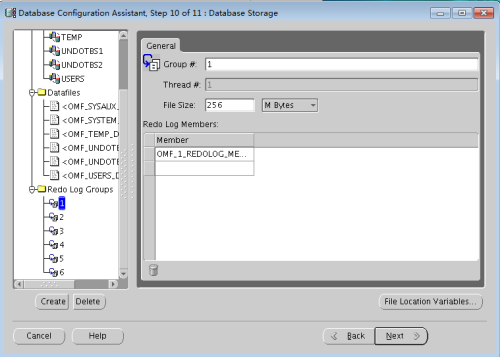

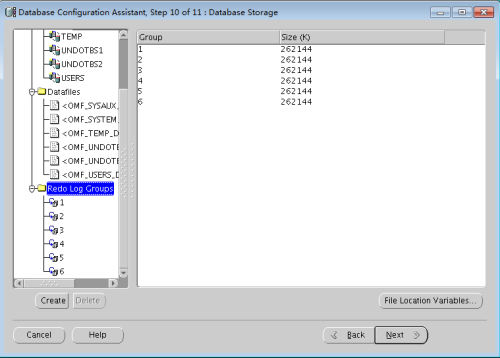

Size the tablespaces according to your actual requirements

Redo logs

IX. Cluster Operation Commands

(1) Starting and stopping the cluster

Shutdown steps:
1. Stop the database instances first
hmracdb1:~ # su - oracle
oracle@hmracdb1:~> srvctl stop database -d rac
2. Stop the Oracle cluster
hmracdb1:~ # /u01/app/grid/product/11.2.0/bin/crsctl stop cluster -all

Startup steps (the Oracle cluster starts automatically at boot by default):
1. Start the Oracle cluster
hmracdb1:~ # /u01/app/grid/product/11.2.0/bin/crsctl start cluster -all
2. Start the database instances
hmracdb1:~ # su - oracle
oracle@hmracdb1:~> srvctl start database -d rac
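The order matters in both directions: database before cluster on shutdown, cluster before database on startup. A small wrapper can encode that order so it is not left to memory. This is a sketch, not a supported Oracle tool: the function names and the `RUN` variable are assumptions for illustration, and by default the functions only print the commands (dry run).

```shell
# Grid home and database name as used in this article.
GRID_HOME=/u01/app/grid/product/11.2.0
# RUN defaults to "echo" (dry run). Set RUN to an empty string to execute.
RUN=${RUN-echo}

# rac_stop: stop the database first, then the cluster on all nodes.
rac_stop() {
  $RUN su - oracle -c "srvctl stop database -d rac"
  $RUN "$GRID_HOME/bin/crsctl" stop cluster -all
}

# rac_start: start the cluster on all nodes, then the database.
rac_start() {
  $RUN "$GRID_HOME/bin/crsctl" start cluster -all
  $RUN su - oracle -c "srvctl start database -d rac"
}
```

Calling `rac_stop` as root prints the two commands in order. Running the script with `RUN=` set to an empty value would execute them for real, so it is worth reviewing the dry-run output first.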

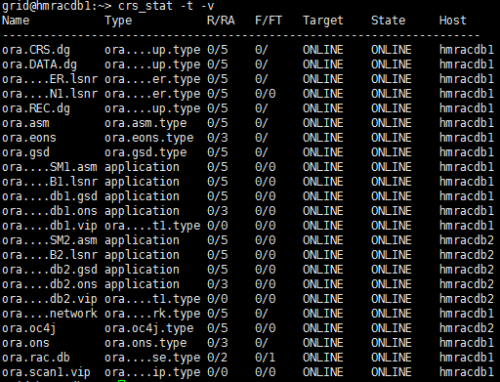

(2) Check RAC resource status

1. Node 1
grid@hmracdb1:~> crs_stat -t -v
2. Node 2
grid@hmracdb2:~> crs_stat -t -v
3. gsd and oc4j do not need to run by default; to start them manually and enable autostart:
grid@hmracdb1:~> srvctl enable nodeapps -g
grid@hmracdb1:~> srvctl start nodeapps
grid@hmracdb1:~> srvctl enable oc4j
grid@hmracdb1:~> srvctl start oc4j

(3) Check RAC runtime status

1. Check the RAC nodes
grid@hmracdb1:~> olsnodes -n
hmracdb1 1
hmracdb2 2

2. Check the CRS cluster status
grid@hmracdb1:~> crsctl check cluster
CRS-4537: Cluster Ready Services is online
CRS-4529: Cluster Synchronization Services is online
CRS-4533: Event Manager is online
grid@hmracdb1:~> crsctl check crs
CRS-4638: Oracle High Availability Services is online
CRS-4537: Cluster Ready Services is online
CRS-4529: Cluster Synchronization Services is online
CRS-4533: Event Manager is online

3. Check the database instance status
grid@hmracdb1:~> srvctl config database -d rac -a
Database unique name: rac
Database name: rac
Oracle home: /u01/app/oracle/product/11.2.0
Oracle user: oracle
Spfile: +DATA/rac/spfilerac.ora
Domain:
Start options: open
Stop options: immediate
Database role: PRIMARY
Management policy: AUTOMATIC
Server pools: rac
Database instances: rac1,rac2
Disk Groups: DATA,REC
Services:
Database is enabled
Database is administrator managed
grid@hmracdb1:~> srvctl status database -d rac
Instance rac1 is running on node hmracdb1
Instance rac2 is running on node hmracdb2

4. Check ASM
grid@hmracdb1:~> srvctl config asm
ASM home: /u01/app/grid/product/11.2.0
ASM listener: LISTENER
grid@hmracdb1:~> srvctl status asm
ASM is running on hmracdb1,hmracdb2

5. Check the listener status
grid@hmracdb1:~> srvctl status asm
ASM is running on hmracdb1,hmracdb2
grid@hmracdb1:~> srvctl status listener
Listener LISTENER is enabled
Listener LISTENER is running on node(s): hmracdb1,hmracdb2
grid@hmracdb1:~> srvctl config listener
Name: LISTENER
Network: 1, Owner: grid
Home: <CRS home>
End points: TCP:1521

6. Check the VIP status
grid@hmracdb1:~> srvctl status vip -n hmracdb1
VIP hmracdb1-vip is enabled
VIP hmracdb1-vip is running on node: hmracdb1
grid@hmracdb1:~> srvctl status vip -n hmracdb2
VIP hmracdb2-vip is enabled
VIP hmracdb2-vip is running on node: hmracdb2
grid@hmracdb1:~> srvctl config vip -n hmracdb1
VIP exists.:hmracdb1
VIP exists.: /hmracdb1-vip/192.168.6.54/255.255.255.0/eth0
grid@hmracdb1:~> srvctl config vip -n hmracdb2
VIP exists.:hmracdb2
VIP exists.: /hmracdb2-vip/192.168.6.55/255.255.255.0/eth0

7. Check SCAN
grid@hmracdb1:~> srvctl status scan
SCAN VIP scan1 is enabled
SCAN VIP scan1 is running on node hmracdb1
grid@hmracdb1:~> srvctl config scan
SCAN name: hmracdb-scan, Network: 1/192.168.6.0/255.255.255.0/eth0
SCAN VIP name: scan1, IP: /hmracdb-scan/192.168.6.66

8. Check the voting disks
grid@hmracdb1:~> crsctl query css votedisk
##  STATE    File Universal Id                File Name Disk group
--  -----    -----------------                --------- ---------
 1. ONLINE   0cabca1797104f01bfc740460efd9665 (ORCL:CRS1) [CRS]
 2. ONLINE   66791219f9f14fc4bf1bb8c8b6bdda6a (ORCL:CRS2) [CRS]
 3. ONLINE   22e396e1b1594ff3bf6f516303e17f28 (ORCL:CRS3) [CRS]
Located 3 voting disk(s).

9. Check the cluster registry
grid@hmracdb1:~> ocrcheck
Status of Oracle Cluster Registry is as follows :
         Version                  :          3
         Total space (kbytes)     :     262120
         Used space (kbytes)      :       2568
         Available space (kbytes) :     259552
         ID                       :  858387628
         Device/File Name         :       +CRS
                                    Device/File integrity check succeeded
                                    Device/File not configured
                                    Device/File not configured
                                    Device/File not configured
                                    Device/File not configured
         Cluster registry integrity check succeeded
         Logical corruption check bypassed due to non-privileged user

10. Check the ASM disk groups
grid@hmracdb1:~> asmcmd lsdg
State    Type    Rebal  Sector  Block       AU  Total_MB  Free_MB  Req_mir_free_MB  Usable_file_MB  Offline_disks  Voting_files  Name
MOUNTED  NORMAL  N         512   4096  1048576      6144     5218              309            2454              0             N  CRS/
MOUNTED  EXTERN  N         512   4096  1048576    102400    83297                0           83297              0             N  DATA/
MOUNTED  EXTERN  N         512   4096  1048576     96253    94471                0           94471              0             N  REC/
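Some of these checks lend themselves to a simple pass or fail test. As one example, the number of ONLINE voting disks can be counted from the `crsctl query css votedisk` output. This is a sketch that assumes the column layout shown above, where the second whitespace-separated field of each disk row is the state; `online_votedisks` is a hypothetical helper.

```shell
# online_votedisks: read `crsctl query css votedisk` output on stdin and
# print how many voting disks are reported ONLINE.
online_votedisks() {
  awk '$2 == "ONLINE" { n++ } END { print n + 0 }'
}
```

In this environment `crsctl query css votedisk | online_votedisks` would be expected to print 3, one for each CRS disk.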

(4) Oracle Database instance checks (SQL queries)

SQL> select instance_name,status from gv$instance;

INSTANCE_NAME    STATUS
---------------- ------------
rac1             OPEN
rac2             OPEN

1. Check the ASM disks
SQL> set linesize 100;
SQL> select path from v$asm_disk;

PATH
----------------------------------------------------------------------------------------------------
ORCL:CRS1
ORCL:CRS2
ORCL:CRS3
ORCL:DATA1
ORCL:REC1

SQL> show parameter spfile;

NAME                                 TYPE        VALUE
------------------------------------ ----------- ------------------------------
spfile                               string      +DATA/rac/spfilerac.ora

2. ASM disk group space
SQL> set linesize 500;
SQL> select group_number,name,state,type,total_mb,free_mb from v$asm_diskgroup;
SQL> select disk_number,path,name,total_mb,free_mb from v$asm_disk where group_number=1;

3. List the tablespace names
SQL> select tablespace_name from dba_tablespaces;

TABLESPACE_NAME
------------------------------------------------------------
SYSTEM
SYSAUX
UNDOTBS1
TEMP
UNDOTBS2
USERS

6 rows selected.

4. Query the default tablespaces of a given user
SQL> select default_tablespace,temporary_tablespace from dba_users where username='SYSTEM';

DEFAULT_TABLESPACE             TEMPORARY_TABLESPACE
------------------------------ ------------------------------
SYSTEM                         TEMP

5. Query tablespace usage
select ff.s tablespace_name,
       ff.b total,
       (ff.b - fr.b) usage,
       fr.b free,
       round((ff.b - fr.b) / ff.b * 100) || '% ' usagep
  from (select tablespace_name s, sum(bytes) / 1024 / 1024 b
          from dba_data_files
         group by tablespace_name) ff,
       (select tablespace_name s, sum(bytes) / 1024 / 1024 b
          from dba_free_space
         group by tablespace_name) fr
 where ff.s = fr.s;

6. List the tablespace data files
SQL> select name from v$datafile;

NAME
----------------------------------------------------------------------------------------------------
+DATA/rac/datafile/system.261.928753797
+DATA/rac/datafile/sysaux.262.928753819
+DATA/rac/datafile/undotbs1.263.928753839
+DATA/rac/datafile/undotbs2.265.928753875
+DATA/rac/datafile/users.266.928753895

7. List the log files
SQL> select member from v$logfile;

MEMBER
----------------------------------------------------------------------------------------------------
+DATA/rac/onlinelog/group_1.257.928753771
+REC/rac/onlinelog/group_1.257.928753775
+DATA/rac/onlinelog/group_2.258.928753777
+REC/rac/onlinelog/group_2.258.928753781
+DATA/rac/onlinelog/group_5.259.928753783
+REC/rac/onlinelog/group_5.259.928753787
+DATA/rac/onlinelog/group_6.260.928753791
+REC/rac/onlinelog/group_6.260.928753793
+DATA/rac/onlinelog/group_3.267.928756451
+REC/rac/onlinelog/group_3.261.928756455
+DATA/rac/onlinelog/group_4.268.928756459
+REC/rac/onlinelog/group_4.262.928756461

12 rows selected.

8. Check the recovery area size used for archived logs
SQL> show parameter db_recovery_file_dest_size;

NAME                                 TYPE        VALUE
------------------------------------ ----------- ------------------------------
db_recovery_file_dest_size           big integer 30G
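The usage percentage in the tablespace query is simply (total - free) / total * 100, rounded to a whole number. The same arithmetic can be applied outside SQL, for example to the Total_MB and Free_MB columns that `asmcmd lsdg` reports. This is a sketch: `usage_pct` is a hypothetical helper, and awk's rounding may differ from Oracle's ROUND on exact .5 values.

```shell
# usage_pct TOTAL FREE: print the used share of TOTAL as a whole percentage.
usage_pct() {
  awk -v total="$1" -v free="$2" 'BEGIN { printf "%.0f%%\n", (total - free) / total * 100 }'
}
```

With the DATA disk group figures shown earlier, `usage_pct 102400 83297` prints 19%, since 19103 of 102400 MB is about 18.66 percent.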

Reposted from: https://blog.51cto.com/7424593/1876401
