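Basic idea: I have a K210 development board (Maix Bit) on hand, and this post records the process of getting object detection running on it.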

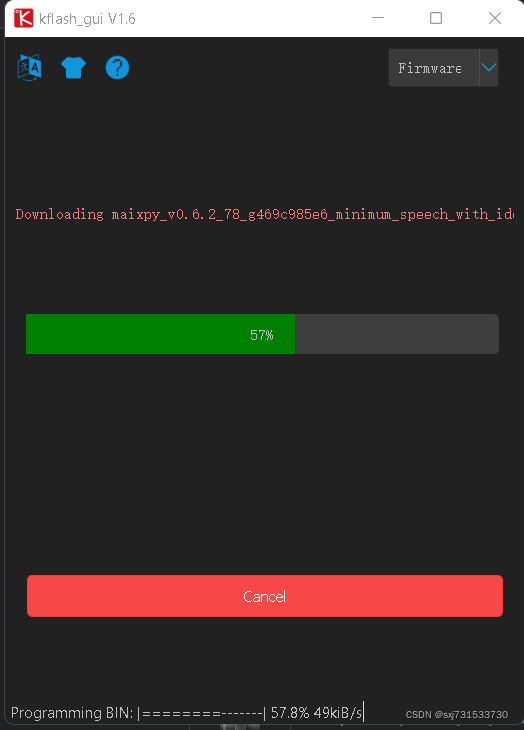

Step 1: Download the flashing tool

https://github.com/sipeed/kflash_gui/releases/download/v1.6.7/kflash_gui_v1.6.7_windows.7z

Download the minimum firmware image:

https://dl.sipeed.com/fileList/MAIX/MaixPy/release/master/maixpy_v0.6.2_78_g469c985e6/maixpy_v0.6.2_78_g469c985e6_minimum_speech_with_ide_support.bin

Flash the firmware:

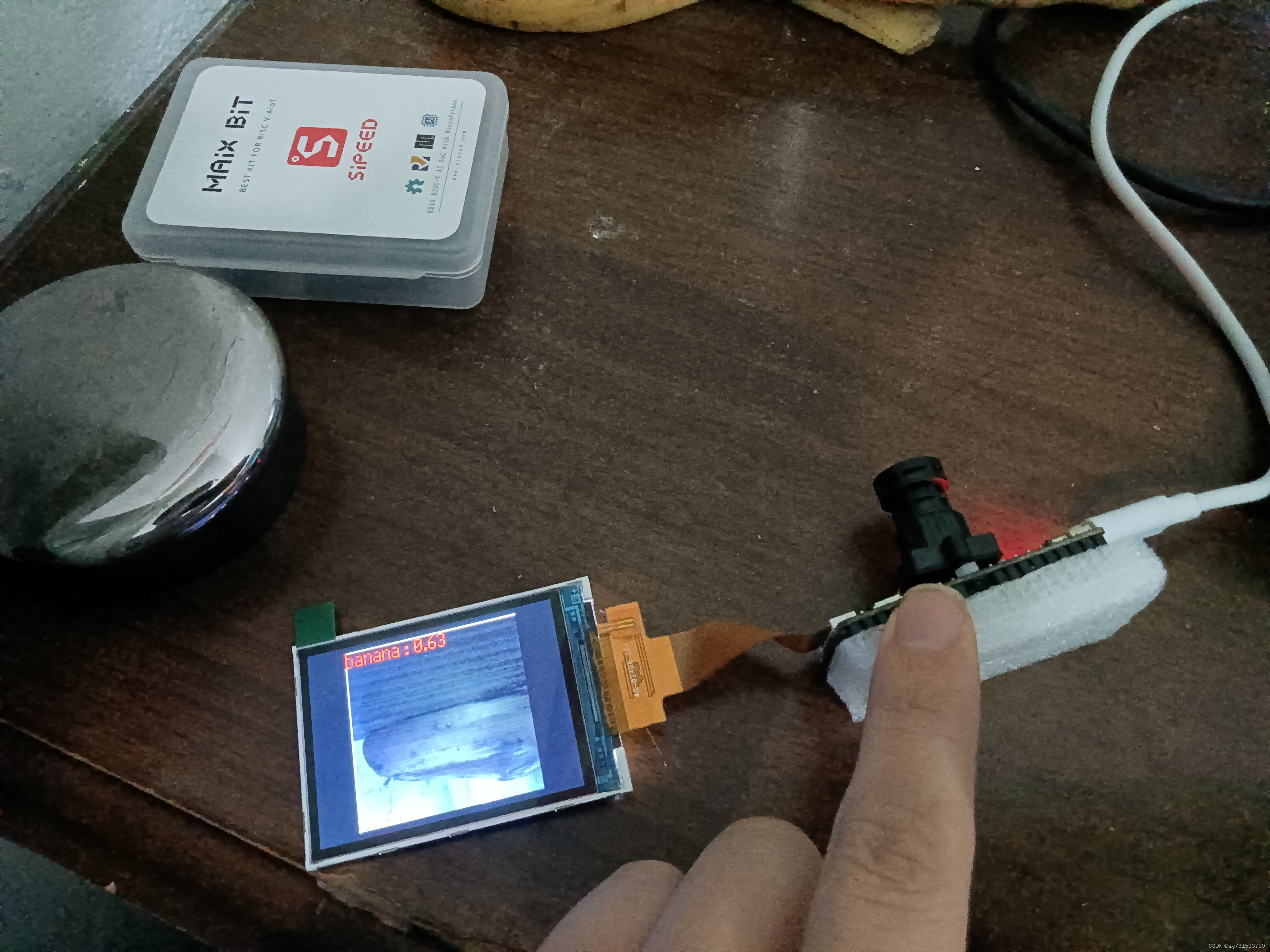

Once flashing completes, the board's screen shows the following:

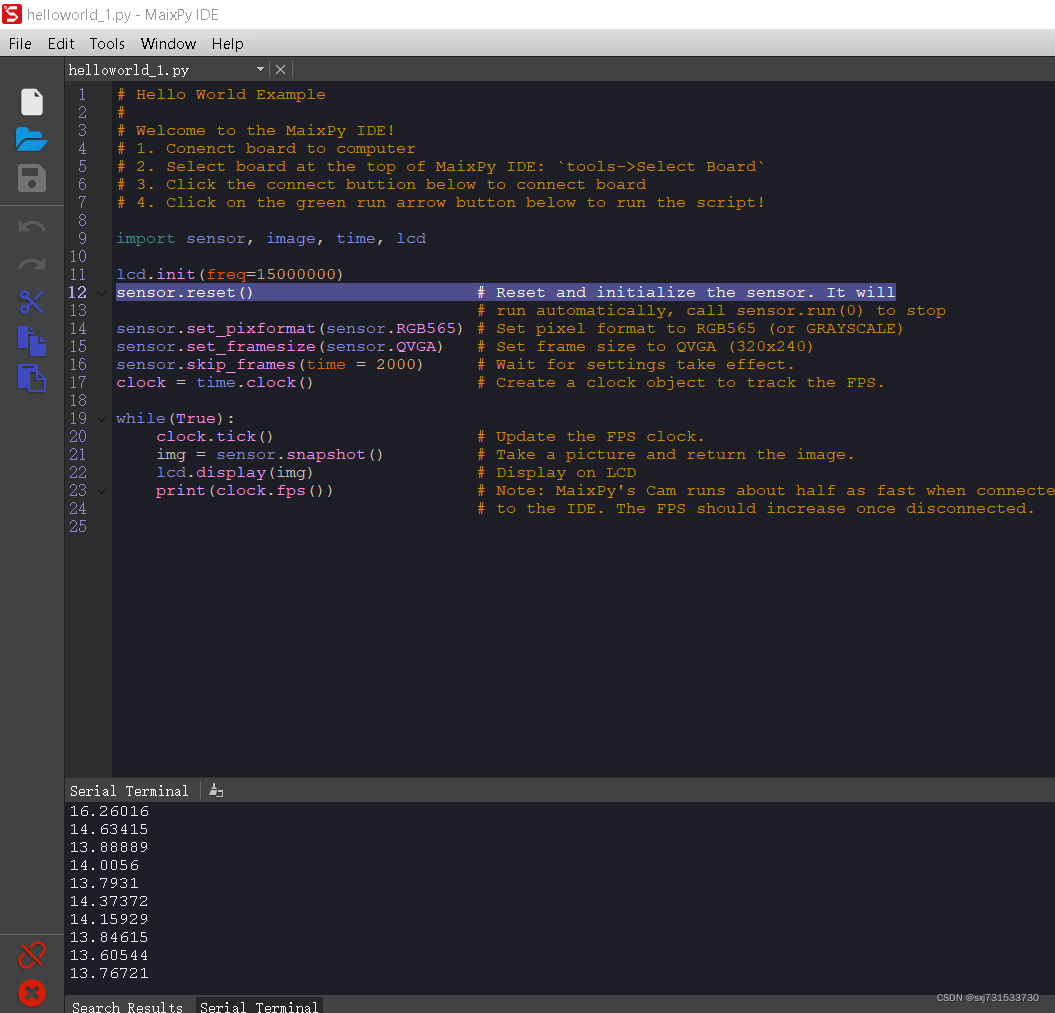

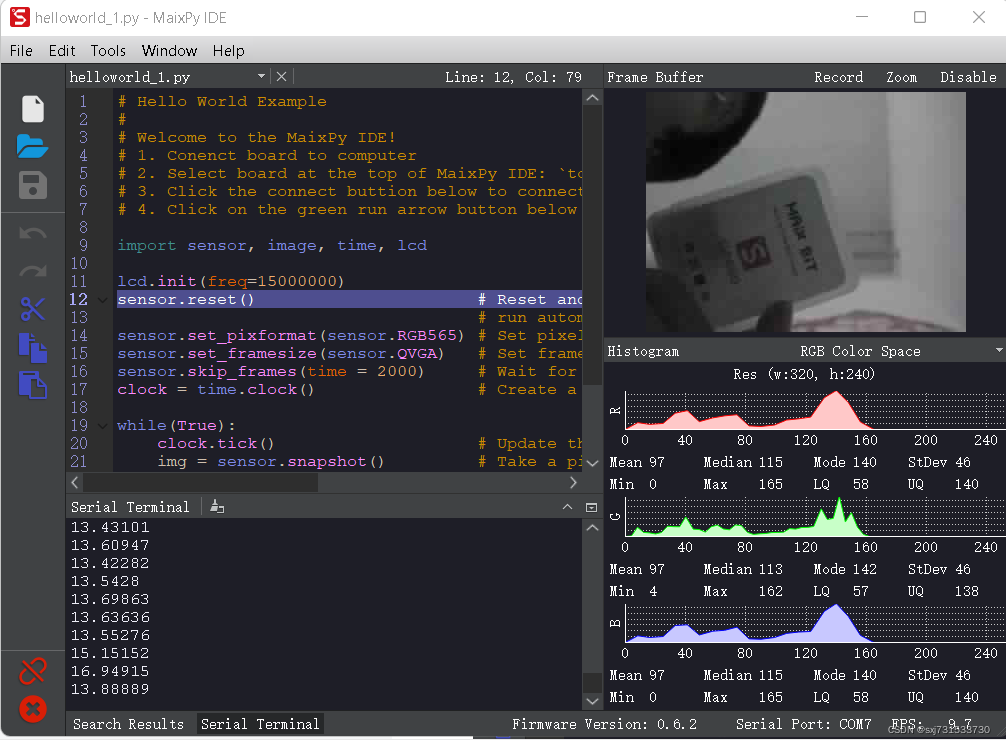

Step 2: Download the IDE for Windows and run the example tests

https://dl.sipeed.com/fileDownload?verify_code=okhb&file_url=MAIX/MaixPy/ide/v0.2.5/maixpy-ide-windows-0.2.5.exe

The interface looks almost identical to the OpenMV IDE.

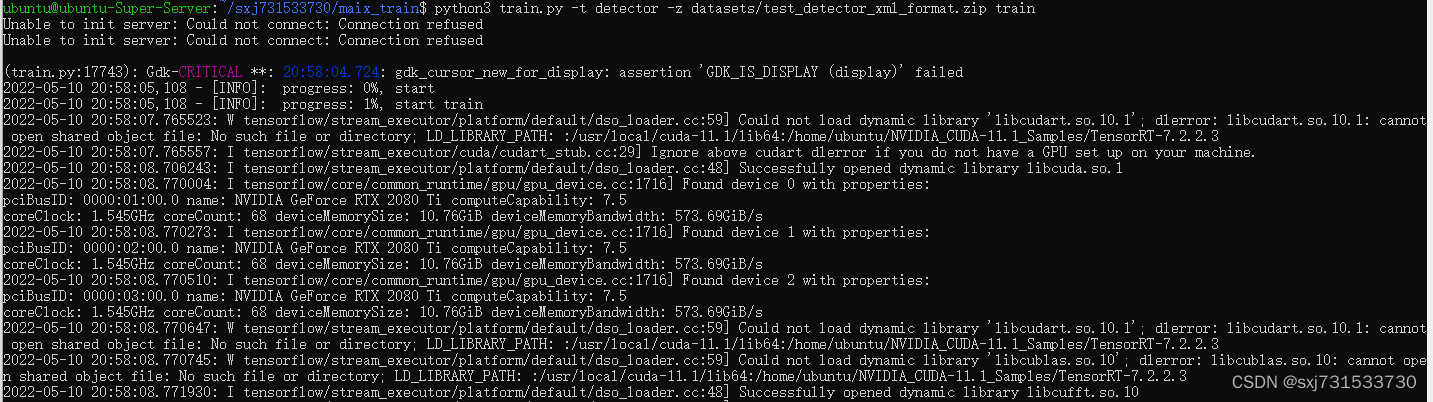

Step 3: Set up CUDA, cuDNN, and TensorFlow, then download the training code

ubuntu@ubuntu:~$ pip install tensorflow-gpu==2.3.0 -i https://pypi.mirrors.ustc.edu.cn/simple
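After the install, it is worth confirming that TensorFlow can actually see the GPU before starting a long training run. The following is a minimal sketch, not part of maix_train; the helper name `visible_gpus` is made up for illustration, and it only relies on `tf.config.list_physical_devices`, which is available in TensorFlow 2.x.

```python
def visible_gpus():
    """Return the names of the GPUs TensorFlow can see.

    Returns an empty list when TensorFlow is not installed or no GPU is found.
    """
    try:
        import tensorflow as tf
    except ImportError:
        return []
    return [device.name for device in tf.config.list_physical_devices("GPU")]


if __name__ == "__main__":
    gpus = visible_gpus()
    print("GPUs visible to TensorFlow:", gpus if gpus else "none")
```

An empty result usually points at a CUDA or cuDNN version that does not match the installed TensorFlow build.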

Download the code, add the ncc tool as described in the GitHub README, and give it chmod 777 permissions.

ubuntu@ubuntu:~$ git clone https://github.com/sipeed/maix_train.git

Then train by following the official instructions.

ubuntu@ubuntu:~$ python3 train.py -t detector -z datasets/test_detector_xml_format.zip train

This test run trains the YOLOv2 detector:

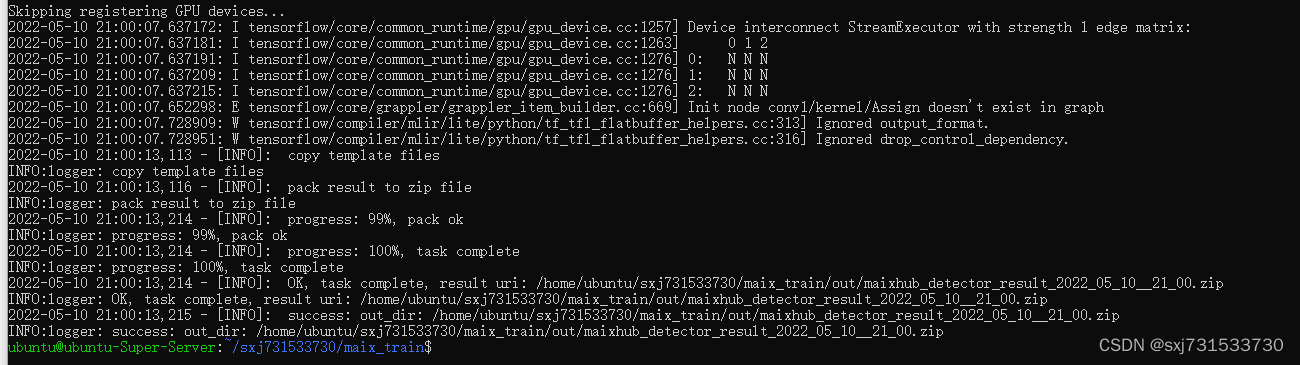

Training finished and the output files were generated.

The generated model.

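Step 4: Train on your own data. Convert the VOC dataset into the required layout, where each class subfolder holds that class's images and XML files. Note the required image shape: (224, 224, 3) or (240, 240, 3).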

Link: https://pan.baidu.com/s/1QBTNboPYBzOekwSk73C0ZA

Extraction code: gi0l
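Before zipping the dataset, a quick consistency check can catch missing annotations or wrongly sized images. The sketch below is an illustration only and is not part of maix_train: the function name `check_dataset` is hypothetical, it assumes the `images/<class>/` and `xml/<class>/` layout shown in the tree listing, and it trusts the `<size>` element of each VOC XML file instead of decoding the image.

```python
import os
import xml.etree.ElementTree as ET

# (width, height) pairs allowed by the image-shape note in Step 4
ALLOWED_SIZES = {(224, 224), (240, 240)}


def check_dataset(root):
    """Return a list of human-readable problems found under root."""
    problems = []
    images_dir = os.path.join(root, "images")
    xml_dir = os.path.join(root, "xml")
    for label in sorted(os.listdir(images_dir)):
        class_dir = os.path.join(images_dir, label)
        if not os.path.isdir(class_dir):
            continue
        for name in sorted(os.listdir(class_dir)):
            stem = os.path.splitext(name)[0]
            xml_path = os.path.join(xml_dir, label, stem + ".xml")
            # Every image needs an annotation file with the same stem
            if not os.path.isfile(xml_path):
                problems.append("missing xml for %s/%s" % (label, name))
                continue
            size = ET.parse(xml_path).getroot().find("size")
            if size is None:
                problems.append("no <size> in %s/%s.xml" % (label, stem))
                continue
            dims = (int(size.findtext("width", "0")),
                    int(size.findtext("height", "0")))
            if dims not in ALLOWED_SIZES:
                problems.append("%s/%s is %dx%d" % (label, name, dims[0], dims[1]))
    return problems
```

Calling `check_dataset("datasets/sxj_test_detector_xml_format")` and getting an empty list back means every image has an annotation and a declared size the trainer should accept.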

ubuntu@ubuntu-Super-Server:~/sxj731533730/maix_train$ tree -L 2 datasets/sxj_test_detector_xml_format

datasets/sxj_test_detector_xml_format

├── images

│ ├── apple

│ ├── banana

│ ├── battery

│ ├── bottle

│ ├── drug

│ ├── smoke

│ └── ylg

├── labels.txt

└── xml

├── apple

├── banana

├── battery

├── bottle

├── drug

├── smoke

└── ylg

16 directories, 1 file

Compress it into a zip archive:

ubuntu@ubuntu-Super-Server:~/sxj731533730/maix_train$ zip -r datasets/sxj_test_detector_xml_format.zip datasets/sxj_test_detector_xml_format/

ubuntu@ubuntu-Super-Server:~/sxj731533730/maix_train$ python3 train.py -t detector -z datasets/sxj_test_detector_xml_format.zip train

2022-05-11 03:18:48,202 - [INFO]: load datasets complete, check pass, images num:1692, bboxes num:1692

2022-05-11 03:18:48,409 - [INFO]: train, labels:['drug', 'battery', 'apple', 'bottle', 'banana', 'smoke', 'ylg']

2022-05-11 03:18:48,409 - [DEBUG]: train, datasets dir:/home/ubuntu/sxj731533730/maix_train/out/datasets/sxj_test_detector_xml_format

2022-05-11 03:18:48,411 - [INFO]: bboxes num: 1692, first bbox: [0.58482143 0.62053571]

2022-05-11 03:18:48,815 - [INFO]: bbox accuracy(IOU): 77.11%

2022-05-11 03:18:48,815 - [INFO]: bound boxes: (83.000000,116.50),(58.000000,53.00),(135.000000,130.00),(185.000000,143.00),(117.000000,184.00)

2022-05-11 03:18:48,815 - [INFO]: anchors: [2.59375, 3.640625000000001, 1.8125000000000002, 1.65625, 4.21875, 4.0625, 5.78125, 4.46875, 3.65625, 5.75]

2022-05-11 03:18:48,815 - [INFO]: w/h ratios: [0.64, 0.71, 1.04, 1.09, 1.29]

2022-05-11 03:18:48.869593: I tensorflow/core/platform/cpu_feature_guard.cc:151] This TensorFlow binary is optimized with oneAPI Deep Neural Network Library (oneDNN) to use the following CPU instructions in performance-critical operations: AVX2 FMA

To enable them in other operations, rebuild TensorFlow with the appropriate compiler flags.

2022-05-11 03:18:50.355142: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1525] Created device /job:localhost/replica:0/task:0/device:GPU:0 with 9340 MB memory: -> device: 0, name: NVIDIA GeForce RTX 2080 Ti, pci bus id: 0000:01:00.0, compute capability: 7.5

2022-05-11 03:18:50.355834: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1525] Created device /job:localhost/replica:0/task:0/device:GPU:1 with 9478 MB memory: -> device: 1, name: NVIDIA GeForce RTX 2080 Ti, pci bus id: 0000:02:00.0, compute capability: 7.5

2022-05-11 03:18:50.356382: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1525] Created device /job:localhost/replica:0/task:0/device:GPU:2 with 9502 MB memory: -> device: 2, name: NVIDIA GeForce RTX 2080 Ti, pci bus id: 0000:03:00.0, compute capability: 7.5

load local weight file: /home/ubuntu/sxj731533730/maix_train/train/detector/weights/mobilenet_7_5_224_tf_no_top.h5

Model: "model"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

input_1 (InputLayer) [(None, 224, 224, 3)] 0

mobilenet_0.75_224 (Functio (None, 7, 7, 768) 1832976

nal)
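The `bound boxes`, `anchors`, and `w/h ratios` lines in the log above are related by simple arithmetic. Each anchor value is the corresponding box dimension divided by 32, which appears to be the stride of the 7x7 output grid on a 224-pixel input (224 / 7 = 32); the stride interpretation is my reading of the numbers, not something the log states. A short sketch reproducing the logged values:

```python
# Assumption: one grid cell covers 32 pixels (224-pixel input, 7x7 output grid)
GRID_STRIDE = 32

# (width, height) in pixels, copied from the "bound boxes" log line
bound_boxes = [(83.0, 116.5), (58.0, 53.0), (135.0, 130.0),
               (185.0, 143.0), (117.0, 184.0)]


def boxes_to_anchors(boxes, stride=GRID_STRIDE):
    """Convert pixel box sizes to a flat anchor list in grid-cell units."""
    anchors = []
    for width, height in boxes:
        anchors.extend([width / stride, height / stride])
    return anchors


def wh_ratios(boxes):
    """Return the sorted width/height ratios, rounded to two decimals."""
    return sorted(round(width / height, 2) for width, height in boxes)


if __name__ == "__main__":
    print(boxes_to_anchors(bound_boxes))
    print(wh_ratios(bound_boxes))
```

The first call gives the same ten numbers as the `anchors` line (up to floating-point noise), and the second gives the `w/h ratios` line.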

Step 5: Test

Step 6: You can also train with PyTorch. Follow the official guide to train on your own dataset: maix_train/pytorch at master · sipeed/maix_train · GitHub

ubuntu@ubuntu-Super-Server:~/sxj731533730/maix_train$ cd pytorch/

ubuntu@ubuntu-Super-Server:~/sxj731533730/maix_train/pytorch$ ls

classifier detector README.md

ubuntu@ubuntu-Super-Server:~/sxj731533730/maix_train/pytorch$ cd detector/

Once the VOC dataset is ready:

ubuntu@ubuntu-Super-Server:~/sxj731533730/maix_train$ python3 split_train_val.py --xml_path=/home/ubuntu/sxj731533730/maix_train/trainData/Annotations --txt_path=/home/ubuntu/sxj731533730/maix_train/trainData/ImageSets/Main

ubuntu@ubuntu-Super-Server:~/sxj731533730/maix_train$ python3 voc.py
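The source of `split_train_val.py` is not shown in this post, so the following is only a sketch of what such a script typically does: list the annotation files, shuffle them, and write `train.txt` and `val.txt` into `ImageSets/Main`. The function name, the fixed seed, and the 0.9 train fraction are assumptions; the fraction was chosen because the training log later reports 1522 training and 170 validation samples out of 1692.

```python
import os
import random


def split_train_val(xml_dir, txt_dir, train_fraction=0.9, seed=0):
    """Write train.txt and val.txt (one file stem per line) and return both lists."""
    stems = sorted(os.path.splitext(name)[0]
                   for name in os.listdir(xml_dir) if name.endswith(".xml"))
    # Shuffle with a fixed seed so the split is reproducible
    random.Random(seed).shuffle(stems)
    n_train = int(len(stems) * train_fraction)
    splits = {"train": sorted(stems[:n_train]), "val": sorted(stems[n_train:])}
    os.makedirs(txt_dir, exist_ok=True)
    for split_name, items in splits.items():
        with open(os.path.join(txt_dir, split_name + ".txt"), "w") as f:
            f.write("\n".join(items) + "\n")
    return splits
```

With 1692 annotations and a 0.9 fraction this yields 1522 training and 170 validation entries, which matches the counts in the training log.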

Edit the main block of /home/ubuntu/sxj731533730/maix_train/pytorch/detector/train.py:

if __name__ == "__main__":
    # classes = ["0", "1", "2", "3", "4", "5", "6", "7", "8", "9", "A", "B", "C", "D", "E", "mouse", "microbit", "ruler", "cat", "peer", "ship", "apple", "car", "pan", "dog", "umbrella", "airplane", "clock", "grape", "cup", "left", "right", "front", "stop", "back"]
    # anchors = [[2.44, 2.25], [5.03, 4.91], [3.5, 3.53], [4.16, 3.94], [2.97, 2.84]]
    # dataset_name = "cards2"
    classes = ['drug', 'ylg', 'smoke', 'battery', 'bottle', 'apple', 'banana']
    anchors = [[1.87, 5.32], [1.62, 3.28], [1.75, 3.78], [1.33, 3.66], [1.5, 4.51]]
    dataset_name = "/home/ubuntu/sxj731533730/maix_train/trainData"
    train = Train(classes,
                  "yolov2_slim",
                  dataset_name,
                  batch_size=32,
                  anchors=anchors,
                  input_shape=(3, 224, 224))
    # dataset = Cards_Generator(classes, "datasets/cards/card_in", "datasets/cards/bg", 300,
    #     transform = SSDAugmentation(size=(224, 224), mean=(0.5, 0.5, 0.5), std=(128/255.0, 128/255.0, 128/255.0))
    # )
    # train.load_dataset("datasets/cards", load_num_workers = 16, dataset=dataset)
    train.load_dataset(f"{dataset_name}", load_num_workers = 16)
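Note that anchors appear in two shapes in this post: the `Train(...)` call above takes a list of pairs, while the TensorFlow training log and the `kpu.init_yolo2` call in the MaixPy script use one flat list. The helpers below are a small sketch for moving between the two; the function names are made up, and the assumption that both forms are ordered as width then height is mine.

```python
def flat_to_pairs(flat):
    """[w0, h0, w1, h1, ...] -> [[w0, h0], [w1, h1], ...]"""
    if len(flat) % 2:
        raise ValueError("flat anchor list needs an even number of values")
    return [[flat[i], flat[i + 1]] for i in range(0, len(flat), 2)]


def pairs_to_flat(pairs):
    """[[w0, h0], [w1, h1], ...] -> [w0, h0, w1, h1, ...]"""
    return [value for pair in pairs for value in pair]


if __name__ == "__main__":
    pairs = [[1.87, 5.32], [1.62, 3.28], [1.75, 3.78], [1.33, 3.66], [1.5, 4.51]]
    print(pairs_to_flat(pairs))
```

Whichever form is used, the anchors passed to the board should be the ones produced by the same training run as the model being deployed.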

Training command:

ubuntu@ubuntu-Super-Server:~/sxj731533730/maix_train/pytorch/detector$ python3 train.py

/usr/local/lib/python3.8/dist-packages/torch/functional.py:445: UserWarning: torch.meshgrid: in an upcoming release, it will be required to pass the indexing argument. (Triggered internally at ../aten/src/ATen/native/TensorShape.cpp:2157.)

return _VF.meshgrid(tensors, **kwargs) # type: ignore[attr-defined]

Loading the pretrained model ...

Loading the darknet_tiny ...

2022-05-10 22:47:18.640542: W tensorflow/stream_executor/platform/default/dso_loader.cc:59] Could not load dynamic library 'libcudart.so.10.1'; dlerror: libcudart.so.10.1: cannot open shared object file: No such file or directory; LD_LIBRARY_PATH: /home/ubuntu/.local/lib/python3.8/site-packages/cv2/../../lib64::/usr/local/cuda-11.1/lib64:/home/ubuntu/NVIDIA_CUDA-11.1_Samples/TensorRT-7.2.2.3

2022-05-10 22:47:18.640578: I tensorflow/stream_executor/cuda/cudart_stub.cc:29] Ignore above cudart dlerror if you do not have a GPU set up on your machine.

2022-05-10 22:47:18 - [DEBUG] - [MainProcess - MainThread] Falling back to TensorFlow client; we recommended you install the Cloud TPU client directly with pip install cloud-tpu-client.

2022-05-10 22:47:19 - [INFO] - [MainProcess - MainThread] check dataset in train

0/1522(0.0%), 76/1522(5.0%), 152/1522(10.0%), 228/1522(15.0%), 304/1522(20.0%), 380/1522(25.0%), 456/1522(30.0%), 532/1522(35.0%), 608/1522(39.9%), 684/1522(44.9%), 760/1522(49.9%), 836/1522(54.9%), 912/1522(59.9%), 988/1522(64.9%), 1064/1522(69.9%), 1140/1522(74.9%), 1216/1522(79.9%), 1292/1522(84.9%), 1368/1522(89.9%), 1444/1522(94.9%), 1520/1522(99.9%),

2022-05-10 22:47:19 - [INFO] - [MainProcess - MainThread] check dataset in val

0/170(0.0%), 8/170(4.7%), 16/170(9.4%), 24/170(14.1%), 32/170(18.8%), 40/170(23.5%), 48/170(28.2%), 56/170(32.9%), 64/170(37.6%), 72/170(42.4%), 80/170(47.1%), 88/170(51.8%), 96/170(56.5%), 104/170(61.2%), 112/170(65.9%), 120/170(70.6%), 128/170(75.3%), 136/170(80.0%), 144/170(84.7%), 152/170(89.4%), 160/170(94.1%), 168/170(98.8%),

2022-05-10 22:47:19 - [INFO] - [MainProcess - MainThread] dataset length: 1522

2022-05-10 22:47:19 - [INFO] - [MainProcess - MainThread] start train

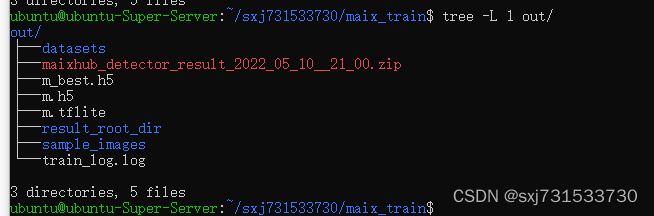

2022-05-10 22:47:19 - [INFO] - [MainProcess - MainThread] train epoch 0, lr: 0.001

Training progress:

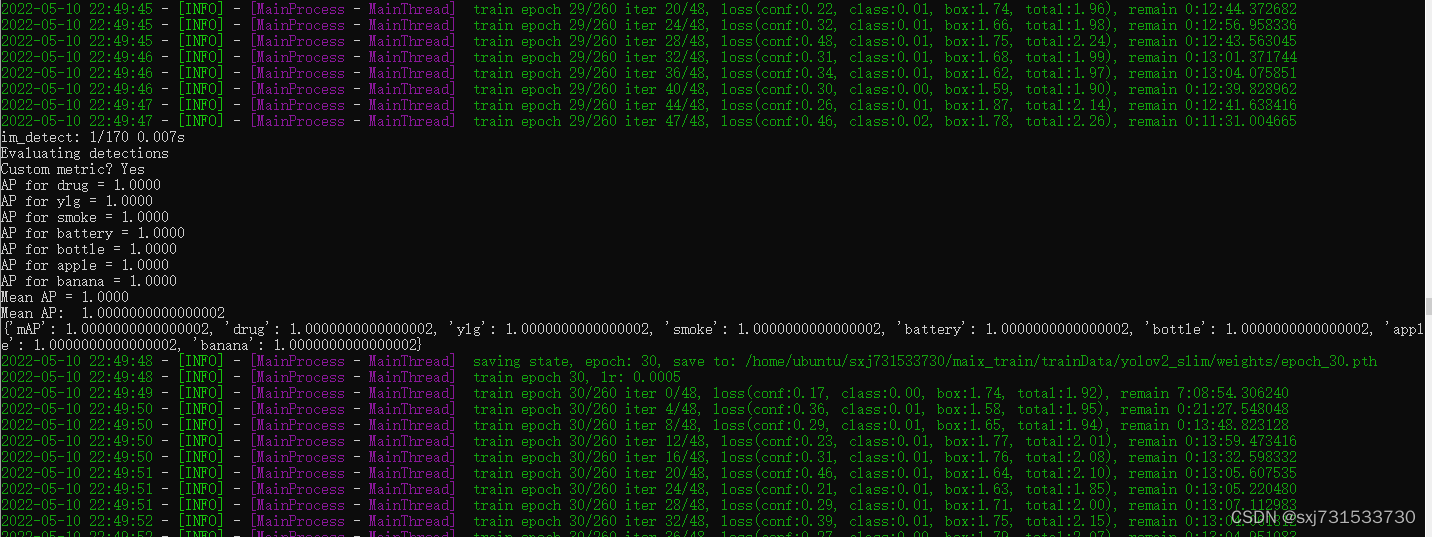

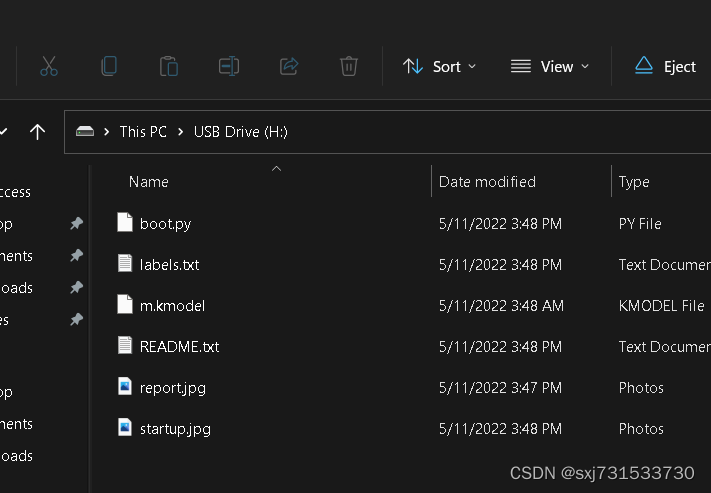

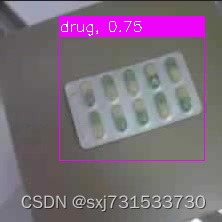

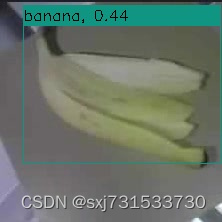

Test with the official IDE. The code below is the example script included in the trained-model archive: outresult_root_dirmaixhub_detector_result_2022_05_11__03_48*

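Note 1: For how to modify the SD card, see MaixPy 常见问题 - Sipeed Wiki (the MaixPy FAQ).

Note 2: Copy the files to the SD card.

Note 3: Modify the code so that it matches your model's input size.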
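For Note 3, the values that have to agree with the trained model are the label list, the anchor list, and the sensor window. The check below is a hypothetical desk-side helper meant to run on the PC, not on the board, and is not part of MaixPy. It assumes five anchors, because the example script passes 5 to `kpu.init_yolo2`, and it assumes the window must be one of the two sizes listed in Step 4.

```python
ANCHOR_COUNT = 5  # assumption: matches the anchor count passed to kpu.init_yolo2
ALLOWED_WINDOWS = {(224, 224), (240, 240)}  # sizes from the Step 4 note


def check_boot_config(labels, anchors, sensor_window):
    """Return a list of problems with the values that will go into boot.py."""
    problems = []
    if not labels:
        problems.append("labels is empty")
    if len(set(labels)) != len(labels):
        problems.append("labels contains duplicates")
    # The flat anchor list holds one (width, height) pair per anchor
    if len(anchors) != 2 * ANCHOR_COUNT:
        problems.append("expected %d anchor values, got %d"
                        % (2 * ANCHOR_COUNT, len(anchors)))
    if tuple(sensor_window) not in ALLOWED_WINDOWS:
        problems.append("unexpected sensor window %r" % (tuple(sensor_window),))
    return problems
```

An empty list means the three values are at least mutually consistent; it does not prove that they belong to the model file being loaded.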

# object detector boot.py
# generated by maixhub.com

import sensor, image, lcd, time
import KPU as kpu
import gc, sys

def lcd_show_except(e):
    # Render the traceback on the LCD so errors are visible without a serial console
    import uio
    err_str = uio.StringIO()
    sys.print_exception(e, err_str)
    err_str = err_str.getvalue()
    img = image.Image(size=(224,224))
    img.draw_string(0, 10, err_str, scale=1, color=(0xff,0x00,0x00))
    lcd.display(img)

def main(anchors, labels = None, model_addr="/sd/m.kmodel", sensor_window=(224, 224), lcd_rotation=0, sensor_hmirror=False, sensor_vflip=False):
    # Camera setup: the window must match the model's input size
    sensor.reset()
    sensor.set_pixformat(sensor.RGB565)
    sensor.set_framesize(sensor.QVGA)
    sensor.set_windowing(sensor_window)
    sensor.set_hmirror(sensor_hmirror)
    sensor.set_vflip(sensor_vflip)
    sensor.run(1)

    lcd.init(type=1)
    lcd.rotation(lcd_rotation)
    lcd.clear(lcd.WHITE)

    if not labels:
        with open('/sd/labels.txt','r') as f:
            exec(f.read())
    if not labels:
        print("no labels.txt")
        img = image.Image(size=(224, 224))
        img.draw_string(90, 110, "no labels.txt", color=(255, 0, 0), scale=2)
        lcd.display(img)
        return 1
    try:
        img = image.Image("/sd/startup.jpg")
        lcd.display(img)
    except Exception:
        img = image.Image(size=(224, 224))
        img.draw_string(90, 110, "loading model...", color=(255, 255, 255), scale=2)
        lcd.display(img)

    # model_addr is either a flash address or a path on the SD card
    task = kpu.load(model_addr)
    kpu.init_yolo2(task, 0.5, 0.3, 5, anchors) # threshold:[0,1], nms_value: [0, 1]
    try:
        while 1:
            img = sensor.snapshot()
            t = time.ticks_ms()
            objects = kpu.run_yolo2(task, img)
            t = time.ticks_ms() - t
            if objects:
                for obj in objects:
                    pos = obj.rect()
                    img.draw_rectangle(pos)
                    img.draw_string(pos[0], pos[1], "%s : %.2f" %(labels[obj.classid()], obj.value()), scale=2, color=(255, 0, 0))
            # Show the inference time for this frame
            img.draw_string(0, 200, "t:%dms" %(t), scale=2, color=(255, 0, 0))
            lcd.display(img)
    except Exception as e:
        raise e
    finally:
        kpu.deinit(task)

if __name__ == "__main__":
    try:
        labels = ["drug", "battery", "apple", "bottle", "banana", "smoke", "ylg"]
        anchors = [3.8125, 3.8125, 5.375, 5.375, 7.1875, 7.1875, 11.25, 11.3125, 9.125, 9.125]
        main(anchors = anchors, labels=labels, model_addr=0x300000, lcd_rotation=2, sensor_window=(224, 224))
        # main(anchors = anchors, labels=labels, model_addr="/sd/m.kmodel")
    except Exception as e:
        sys.print_exception(e)
        lcd_show_except(e)
    finally:
        gc.collect()

Result

Test the model. Modify test.py in the same way as train.py.

ubuntu@ubuntu-Super-Server:~/sxj731533730/maix_train/pytorch/detector$ python3 test.py

test.py:3: DeprecationWarning: Using or importing the ABCs from 'collections' instead of from 'collections.abc' is deprecated since Python 3.3, and in 3.10 it will stop working

from collections import Iterable

2022-05-10 23:17:27 - [INFO] - [MainProcess - MainThread] check dataset in val

0/170(0.0%), 8/170(4.7%), 16/170(9.4%), 24/170(14.1%), 32/170(18.8%), 40/170(23.5%), 48/170(28.2%), 56/170(32.9%), 64/170(37.6%), 72/170(42.4%), 80/170(47.1%), 88/170(51.8%), 96/170(56.5%), 104/170(61.2%), 112/170(65.9%), 120/170(70.6%), 128/170(75.3%), 136/170(80.0%), 144/170(84.7%), 152/170(89.4%), 160/170(94.1%), 168/170(98.8%),

/usr/local/lib/python3.8/dist-packages/torch/functional.py:445: UserWarning: torch.meshgrid: in an upcoming release, it will be required to pass the indexing argument. (Triggered internally at ../aten/src/ATen/native/TensorShape.cpp:2157.)

return _VF.meshgrid(tensors, **kwargs) # type: ignore[attr-defined]

[0] see out/test.jpg, press any key to continue

[1] see out/test.jpg, press any key to continue

[2] see out/test.jpg, press any key to continue

[3] see out/test.jpg, press any key to continue

[4] see out/test.jpg, press any key to continue

[5] see out/test.jpg, press any key to continue

[6] see out/test.jpg, press any key to continue

[7] see out/test.jpg, press any key to continue

[8] see out/test.jpg, press any key to continue

[9] see out/test.jpg, press any key to continue

Test results

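References:

32. Training your own YOLO5 model and converting it to an ncnn model (sxj731533730's blog, CSDN)

31. Converting a TensorFlow-trained model to tflite for vehicle detection, tracking, and deployment on Android (sxj731533730's blog, CSDN)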

maix_train/pytorch at master · sipeed/maix_train · GitHub

Maix Bit AIoT Developer Kit - Waveshare Wiki

https://github.com/sipeed/MaixPy

MaixPy documentation introduction - Sipeed Wiki
