Installation environment: CentOS 6.4

I installed Ganglia following the method in reference [2]. The installation succeeded, but there were a few minor issues.

1. The entire installation is done as the root user.

2. After installation, when Ganglia is opened in a browser, the table for each node shows "ganglia no matching metrics detected".

Cause: the per-node directories under /var/lib/ganglia/rrds are created in lowercase, so if a node's hostname contains uppercase letters, its data cannot be found.
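A quick way to check whether a node is affected is to look for RRD directories whose name matches the hostname only when case is ignored. The sketch below assumes the usual layout `/var/lib/ganglia/rrds/<cluster>/<host>/`; the function name `find_case_mismatch` is hypothetical and not part of Ganglia.

```sh
#!/bin/sh
# Hypothetical diagnostic helper (not part of Ganglia).
# Prints every RRD host directory whose name equals HOST only when case is
# ignored, i.e. the mismatch behind "no matching metrics detected".
# Assumed layout: RRD_ROOT/<cluster>/<host>/
find_case_mismatch() {
    rrd_root=$1
    host=$2
    lower_host=$(printf '%s' "$host" | tr '[:upper:]' '[:lower:]')
    for dir in "$rrd_root"/*/*/; do
        [ -d "$dir" ] || continue          # the glob matched nothing
        name=$(basename "$dir")
        lower_name=$(printf '%s' "$name" | tr '[:upper:]' '[:lower:]')
        # same name when case is ignored, but not the exact hostname spelling
        if [ "$lower_name" = "$lower_host" ] && [ "$name" != "$host" ]; then
            printf '%s\n' "$dir"
        fi
    done
}
```

On a node, `find_case_mismatch /var/lib/ganglia/rrds "$(hostname)"` printing anything means gmetad stored that host's data under a lowercased directory name.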

Solution: edit gmetad.conf and set case_sensitive_hostnames to 1.
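The edit can also be scripted. A minimal sketch, assuming GNU sed (as shipped with CentOS) and the config path `/etc/ganglia/gmetad.conf`, which follows from `--sysconfdir=/etc/ganglia` in the installation script of step 3; the function name `enable_case_sensitive_hostnames` is hypothetical. It rewrites an existing uncommented `case_sensitive_hostnames` line, or appends one if there is none.

```sh
#!/bin/sh
# Hypothetical helper: force "case_sensitive_hostnames 1" in a gmetad.conf file.
# Assumes GNU sed for the in-place -i option.
enable_case_sensitive_hostnames() {
    conf=${1:-/etc/ganglia/gmetad.conf}
    if grep -q '^[[:space:]]*case_sensitive_hostnames' "$conf"; then
        # replace the existing (uncommented) setting, whatever its value
        sed -i 's/^[[:space:]]*case_sensitive_hostnames.*/case_sensitive_hostnames 1/' "$conf"
    else
        # no active setting yet: append one
        printf '%s\n' 'case_sensitive_hostnames 1' >> "$conf"
    fi
}
```

Run it on the node running gmetad, then restart gmetad so the setting takes effect (for example `service gmetad restart`, assuming gmetad was registered as an init service the same way gmond is in step 3).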

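```
# In earlier versions of gmetad, hostnames were handled in a case
# sensitive manner
# If your hostname directories have been renamed to lower case,
# set this option to 0 to disable backward compatibility.
# From version 3.2, backwards compatibility will be disabled by default.
# default: 1 (for gmetad < 3.2)
# default: 0 (for gmetad >= 3.2)
# Set to 1 so that uppercase letters in hostnames are not converted to lowercase
case_sensitive_hostnames 1
```

3. Following the installation steps in reference [2], I wrote an installation script for the client nodes. It covers steps 1 through 7 of that reference, excluding the configuration-file edits in step 7, and it leaves out step 6 entirely. Configuration files are edited on the master and then synchronized to the other nodes with scp. The installation script is as follows: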

#!/bin/sh

yum install -y gcc gcc-c++ libpng freetype zlib libdbi apr* libxml2-devel pkg-config glib pixman pango pango-devel freetye-devel fontconfig cairo cairo-devel libart_lgpl libart_lgpl-devel pcre* rrdtool*

#install expat

cd /home/hadoop

wget http://jaist.dl.sourceforge.net/project/expat/expat/2.1.0/expat-2.1.0.tar.gz

tar -xf expat-2.1.0.tar.gz

cd expat-2.1.0

./configure --prefix=/usr/local/expat

make

make install

mkdir /usr/local/expat/lib64

cp -a /usr/local/expat/lib/* /usr/local/expat/lib64/

#install confuse

cd /home/hadoop

wget http://ftp.twaren.net/Unix/NonGNU//confuse/confuse-2.7.tar.gz

tar -xf confuse-2.7.tar.gz

cd confuse-2.7

./configure CFLAGS=-fPIC --disable-nls --prefix=/usr/local/confuse

make

make install

mkdir -p /usr/local/confuse/lib64

cp -a -f /usr/local/confuse/lib/* /usr/local/confuse/lib64/

#install ganglia

cd /home/hadoop

wget http://jaist.dl.sourceforge.net/project/ganglia/ganglia%20monitoring%20core/3.6.0/ganglia-3.6.0.tar.gz

tar -xf ganglia-3.6.0.tar.gz

cd ganglia-3.6.0

./configure --with-gmetad --enable-gexec --with-libconfuse=/usr/local/confuse --with-libexpat=/usr/local/expat --prefix=/usr/local/ganglia --sysconfdir=/etc/ganglia

make

make install

#gmond configuration

cd /home/hadoop/ganglia-3.6.0

cp -f gmond/gmond.init /etc/init.d/gmond

cp -f /usr/local/ganglia/sbin/gmond /usr/sbin/gmond

chkconfig --add gmond

gmond --default_config > /etc/ganglia/gmond.conf

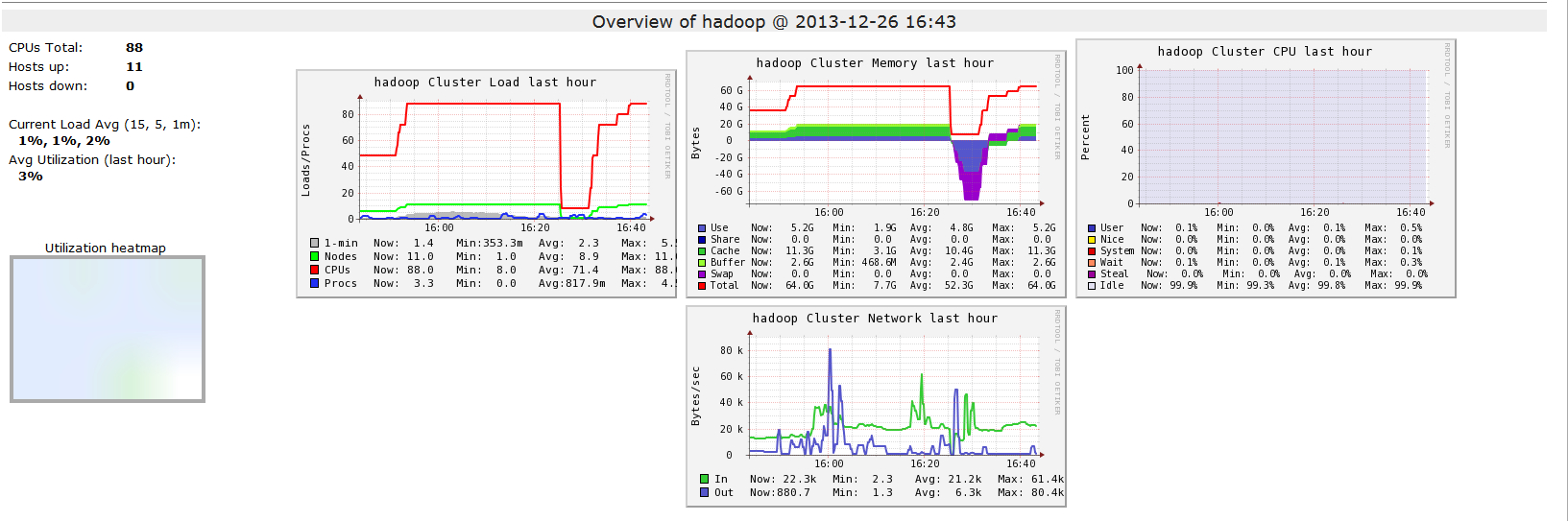

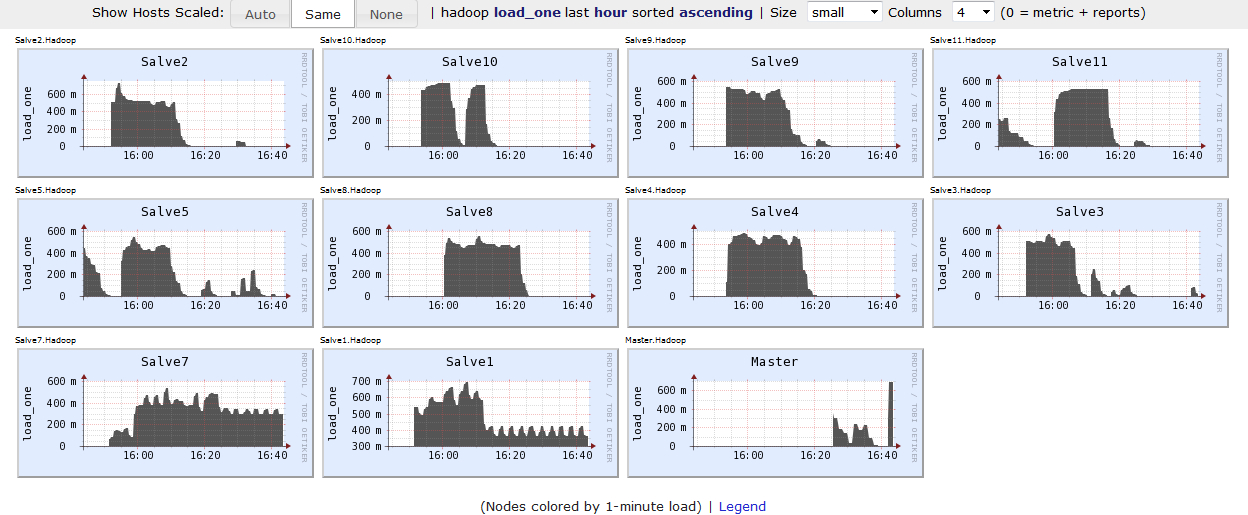

exit 04.网页显示参考

5. Monitoring Hadoop with Ganglia.

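The setup above does not yet collect Hadoop's metrics; to monitor Hadoop with Ganglia you also have to edit the hadoop-metrics2.properties configuration file. Note that the master and slave nodes need different hadoop-metrics2.properties files: the master runs the NameNode and JobTracker, while the slaves run the DataNode and TaskTracker (plus the map and reduce tasks), so each file enables the Ganglia sink only for the daemons on that node. When I first configured Ganglia following instructions found online, no Hadoop metrics appeared; only later did I notice that the files apparently need to differ, and after changing them the metrics were collected successfully. The master and slave configuration files are as follows: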
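Since only the `*.sink.ganglia.servers` lines differ between the two files below, one way to keep them consistent is to generate those lines per role. A minimal sketch: the function name `ganglia_server_lines` and the role names `master`/`slave` are hypothetical, while the prefixes and the gmond address 192.168.178.92:8649 are the ones used in the files below.

```sh
#!/bin/sh
# Hypothetical helper: print the role-specific sink.ganglia.servers lines
# for hadoop-metrics2.properties. All other lines are shared by both roles.
# $1 = role (master|slave), $2 = gmond host:port
ganglia_server_lines() {
    role=$1
    server=$2
    case "$role" in
        master) prefixes="namenode jobtracker" ;;                      # daemons on the master
        slave)  prefixes="datanode tasktracker maptask reducetask" ;;  # daemons and tasks on the slaves
        *) echo "unknown role: $role" >&2; return 1 ;;
    esac
    for p in $prefixes; do
        printf '%s.sink.ganglia.servers=%s\n' "$p" "$server"
    done
}
```

For example, with the shared lines kept in a file `common.properties` (a hypothetical name), `{ cat common.properties; ganglia_server_lines slave 192.168.178.92:8649; } > hadoop-metrics2.properties` builds the slave version, which can then be pushed to each slave with scp as in step 3. The Hadoop daemons read this file when they start, so they need to be restarted for the change to take effect.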

hadoop-metrics2.properties on the master:

```properties
# syntax: [prefix].[source|sink|jmx].[instance].[options]
# See package.html for org.apache.hadoop.metrics2 for details
*.sink.file.class=org.apache.hadoop.metrics2.sink.FileSink
#namenode.sink.file.filename=namenode-metrics.out
#datanode.sink.file.filename=datanode-metrics.out
#jobtracker.sink.file.filename=jobtracker-metrics.out
#tasktracker.sink.file.filename=tasktracker-metrics.out
#maptask.sink.file.filename=maptask-metrics.out
#reducetask.sink.file.filename=reducetask-metrics.out
#
# Below are for sending metrics to Ganglia
#
# for Ganglia 3.0 support
# *.sink.ganglia.class=org.apache.hadoop.metrics2.sink.ganglia.GangliaSink30
#
# for Ganglia 3.1 support
*.sink.ganglia.class=org.apache.hadoop.metrics2.sink.ganglia.GangliaSink31
*.sink.ganglia.period=10
# default for supportsparse is false
*.sink.ganglia.supportsparse=true
*.sink.ganglia.slope=jvm.metrics.gcCount=zero,jvm.metrics.memHeapUsedM=both
*.sink.ganglia.dmax=jvm.metrics.threadsBlocked=70,jvm.metrics.memHeapUsedM=40
namenode.sink.ganglia.servers=192.168.178.92:8649
#datanode.sink.ganglia.servers=yourgangliahost_1:8649,yourgangliahost_2:8649
jobtracker.sink.ganglia.servers=192.168.178.92:8649
#tasktracker.sink.ganglia.servers=yourgangliahost_1:8649,yourgangliahost_2:8649
#maptask.sink.ganglia.servers=yourgangliahost_1:8649,yourgangliahost_2:8649
#reducetask.sink.ganglia.servers=yourgangliahost_1:8649,yourgangliahost_2:8649
```

hadoop-metrics2.properties on the slaves:

```properties
# syntax: [prefix].[source|sink|jmx].[instance].[options]
# See package.html for org.apache.hadoop.metrics2 for details
*.sink.file.class=org.apache.hadoop.metrics2.sink.FileSink
#namenode.sink.file.filename=namenode-metrics.out
#datanode.sink.file.filename=datanode-metrics.out
#jobtracker.sink.file.filename=jobtracker-metrics.out
#tasktracker.sink.file.filename=tasktracker-metrics.out
#maptask.sink.file.filename=maptask-metrics.out
#reducetask.sink.file.filename=reducetask-metrics.out
#
# Below are for sending metrics to Ganglia
#
# for Ganglia 3.0 support
# *.sink.ganglia.class=org.apache.hadoop.metrics2.sink.ganglia.GangliaSink30
#
# for Ganglia 3.1 support
*.sink.ganglia.class=org.apache.hadoop.metrics2.sink.ganglia.GangliaSink31
*.sink.ganglia.period=10
# default for supportsparse is false
*.sink.ganglia.supportsparse=true
*.sink.ganglia.slope=jvm.metrics.gcCount=zero,jvm.metrics.memHeapUsedM=both
*.sink.ganglia.dmax=jvm.metrics.threadsBlocked=70,jvm.metrics.memHeapUsedM=40
#namenode.sink.ganglia.servers=yourgangliahost_1:8649,yourgangliahost_2:8649
datanode.sink.ganglia.servers=192.168.178.92:8649
#jobtracker.sink.ganglia.servers=yourgangliahost_1:8649,yourgangliahost_2:8649
tasktracker.sink.ganglia.servers=192.168.178.92:8649
maptask.sink.ganglia.servers=192.168.178.92:8649
reducetask.sink.ganglia.servers=192.168.178.92:8649
```

References

[1] Ganglia configuration for a small Hadoop cluster: http://hakunamapdata.com/ganglia-configuration-for-a-small-hadoop-cluster-and-some-troubleshooting/

[2] Ganglia installation: http://blog.csdn.net/iam333/article/details/16358509


The above is 雪白黑猫's compilation of notes on monitoring Hadoop with Ganglia.
