https://gameinstitute.qq.com/community/detail/123183

protected override void Render(ScriptableRenderContext renderContext, Camera[] cameras)

{

BeginFrameRendering(renderContext, cameras);

GraphicsSettings.lightsUseLinearIntensity = (QualitySettings.activeColorSpace == ColorSpace.Linear);

GraphicsSettings.useScriptableRenderPipelineBatching = asset.useSRPBatcher;

SetupPerFrameShaderConstants();

#if ENABLE_VR && ENABLE_XR_MODULE

SetupXRStates();

#endif

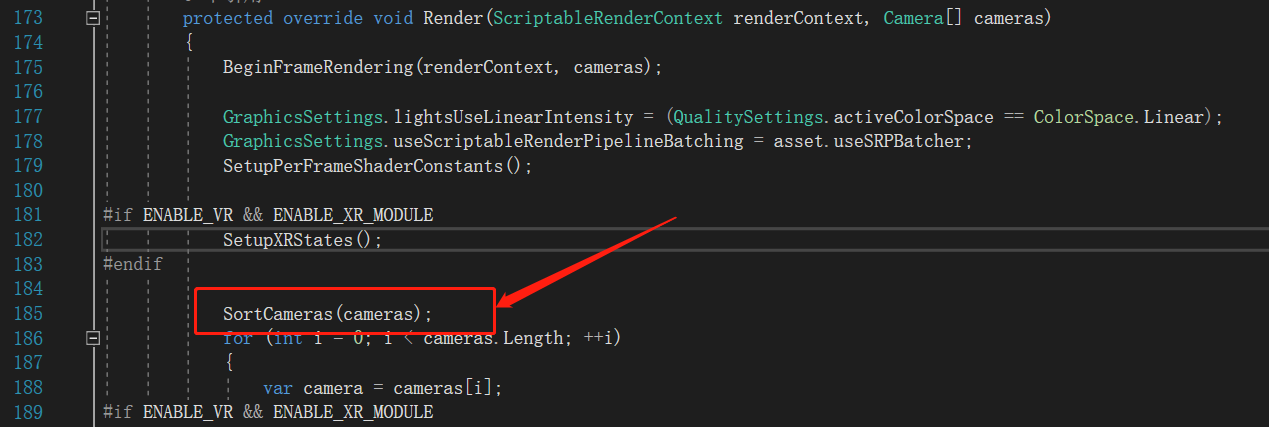

SortCameras(cameras);

for (int i = 0; i < cameras.Length; ++i)

{

var camera = cameras[i];

#if ENABLE_VR && ENABLE_XR_MODULE

if (IsStereoEnabled(camera) && xrSkipRender)

continue;

#endif

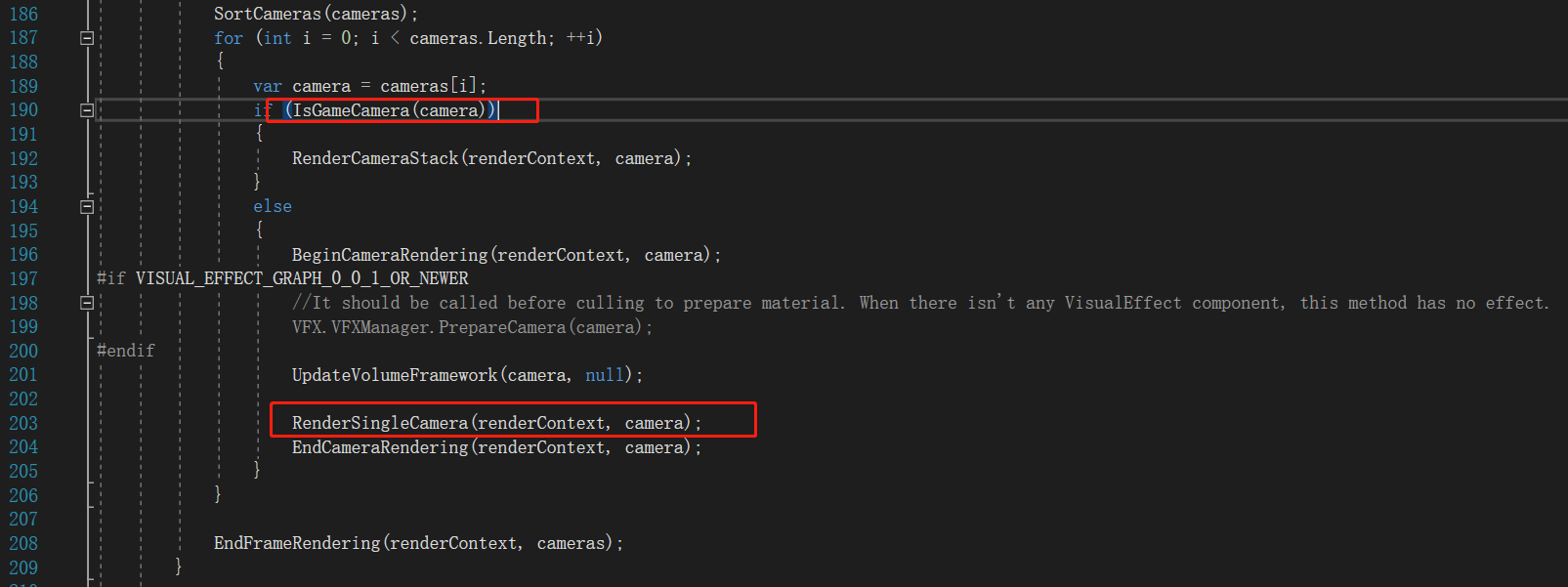

if (IsGameCamera(camera))

{

RenderCameraStack(renderContext, camera);

}

else

{

BeginCameraRendering(renderContext, camera);

#if VISUAL_EFFECT_GRAPH_0_0_1_OR_NEWER

//It should be called before culling to prepare material. When there isn't any VisualEffect component, this method has no effect.

VFX.VFXManager.PrepareCamera(camera);

#endif

UpdateVolumeFramework(camera, null);

RenderSingleCamera(renderContext, camera);

EndCameraRendering(renderContext, camera);

}

}

EndFrameRendering(renderContext, cameras);

}

static void SetupPerFrameShaderConstants()

{

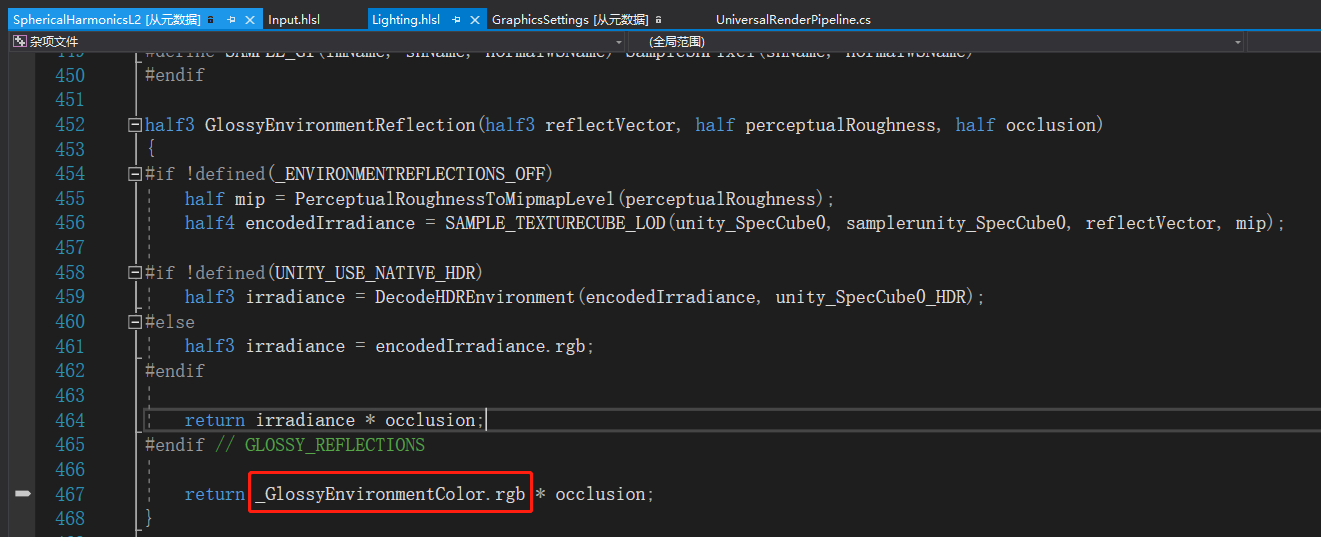

// When glossy reflections are OFF in the shader we set a constant color to use as indirect specular

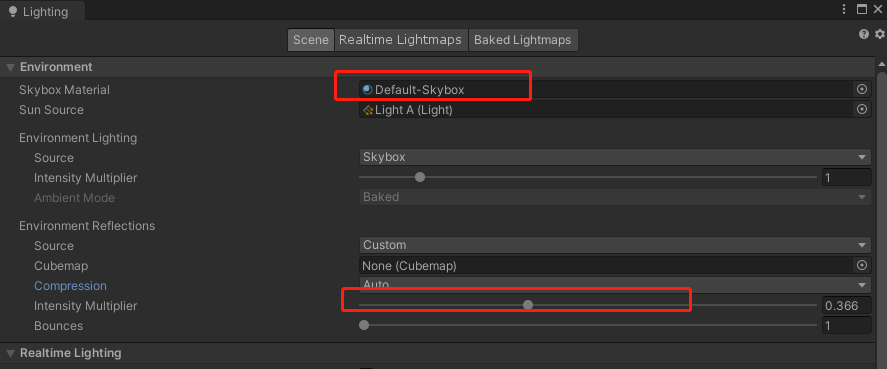

SphericalHarmonicsL2 ambientSH = RenderSettings.ambientProbe;

Color linearGlossyEnvColor = new Color(ambientSH[0, 0], ambientSH[1, 0], ambientSH[2, 0]) * RenderSettings.reflectionIntensity;

Color glossyEnvColor = CoreUtils.ConvertLinearToActiveColorSpace(linearGlossyEnvColor);

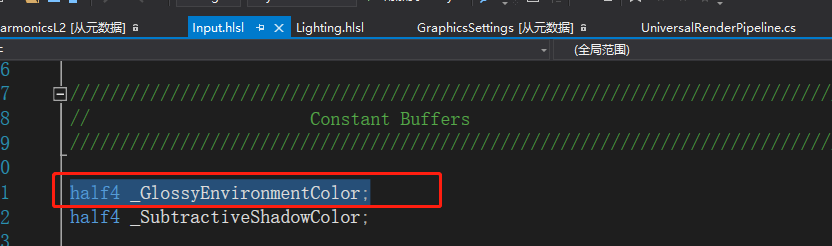

Shader.SetGlobalVector(PerFrameBuffer._GlossyEnvironmentColor, glossyEnvColor);

// Used when subtractive mode is selected

Shader.SetGlobalVector(PerFrameBuffer._SubtractiveShadowColor, CoreUtils.ConvertSRGBToActiveColorSpace(RenderSettings.subtractiveShadowColor));

}

https://www.cnblogs.com/murongxiaopifu/p/8997720.html

SphericalHarmonicsL2 ambientSH = RenderSettings.ambientProbe;

Color linearGlossyEnvColor = new Color(ambientSH[0, 0], ambientSH[1, 0], ambientSH[2, 0]) * RenderSettings.reflectionIntensity;

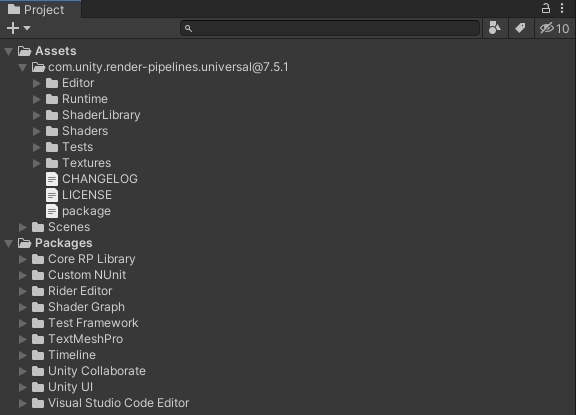

A skybox must be present; only then does RenderSettings.ambientProbe return the correct coefficients.

Color glossyEnvColor = CoreUtils.ConvertLinearToActiveColorSpace(linearGlossyEnvColor);

Shader.SetGlobalVector(PerFrameBuffer._GlossyEnvironmentColor, glossyEnvColor);

The color is then set as a global shader variable.

PerFrameBuffer._GlossyEnvironmentColor = Shader.PropertyToID("_GlossyEnvironmentColor");
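To make the indexing above concrete, here is a minimal standalone sketch of how the constant glossy environment color is built from the ambient probe. It uses no Unity types: a `float[3, 9]` table stands in for `SphericalHarmonicsL2` (3 color channels, 9 coefficients each), and `GlossyEnv.FromAmbientProbe` is a hypothetical helper name, not a URP API. The color space conversion step is left out.

```csharp
using System;

// Standalone sketch; float[3, 9] is an assumed stand-in for SphericalHarmonicsL2.
public static class GlossyEnv
{
    // Reads coefficient 0 (the constant L0 band) of each color channel and
    // scales it by the reflection intensity, like the linearGlossyEnvColor line.
    public static float[] FromAmbientProbe(float[,] sh, float reflectionIntensity)
    {
        if (sh.GetLength(0) != 3 || sh.GetLength(1) != 9)
            throw new ArgumentException("expected a 3x9 coefficient table");

        return new[]
        {
            sh[0, 0] * reflectionIntensity, // red
            sh[1, 0] * reflectionIntensity, // green
            sh[2, 0] * reflectionIntensity, // blue
        };
    }
}
```

Only the first coefficient of each channel is read, so the higher bands (the directional part of the probe) do not affect this color.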

unity_SpecCube0

reference: https://catlikecoding.com/unity/tutorials/rendering/part-8/

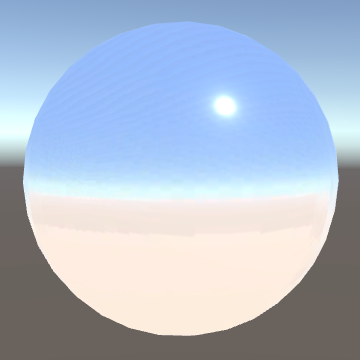

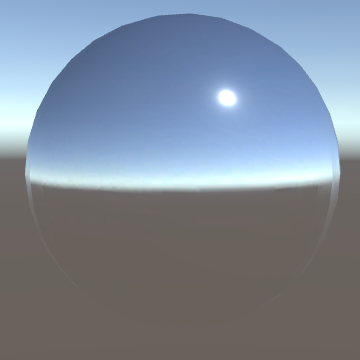

1.2 Sampling the environment

To reflect the actual environment, we have to sample the skybox cube map.

It is defined as unity_SpecCube0 in UnityShaderVariables.

A cube map is sampled with a 3D vector, which specifies a sample direction.

We can use the UNITY_SAMPLE_TEXCUBE macro for that,

which takes care of the type differences for us.

Let us begin by just using the normal vector as the sample direction.

#if defined(FORWARD_BASE_PASS)

indirectLight.diffuse += max(0, ShadeSH9(float4(i.normal, 1)));

float3 envSample = UNITY_SAMPLE_TEXCUBE(unity_SpecCube0, i.normal);

indirectLight.specular = envSample;

#endif

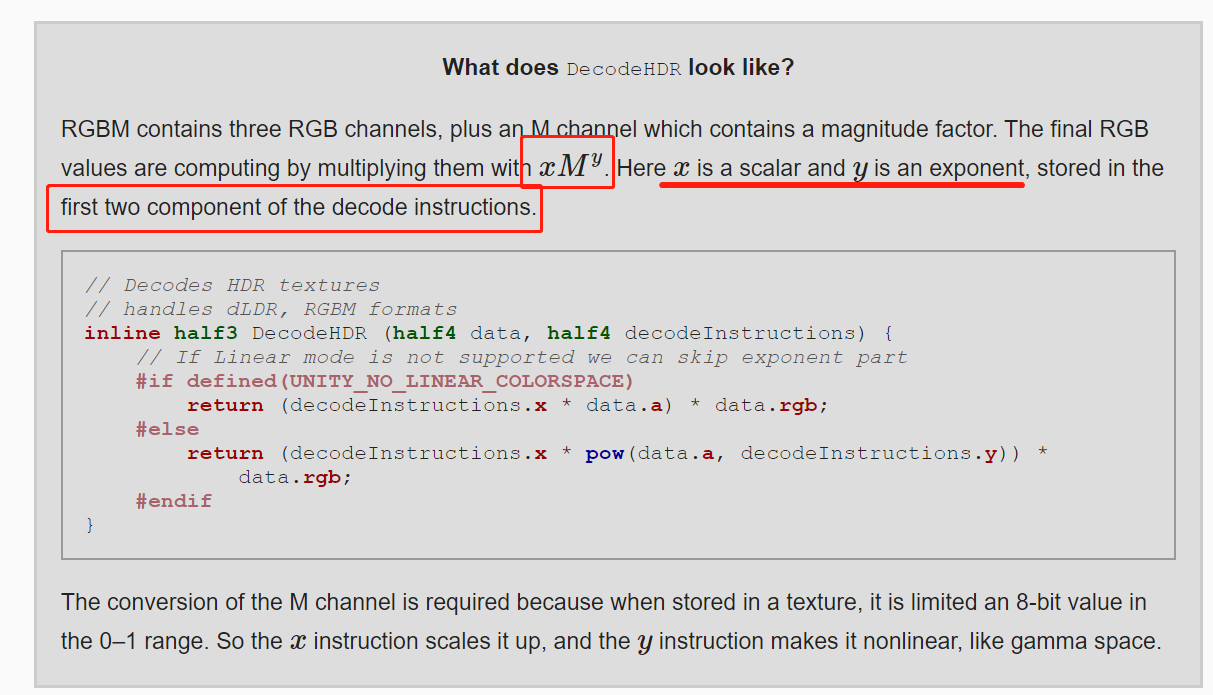

The skybox shows up, but it is far too bright.

That’s because the cube map contains HDR colors, which allows it to contain brightness values larger than one.

We have to convert the samples from HDR format to RGB.

UnityCG contains the DecodeHDR function, which we can use.

The HDR data is stored in four channels, using the RGBM format, so we have to sample a float4 value, then convert.

indirectLight.diffuse += max(0, ShadeSH9(float4(i.normal, 1)));

float4 envSample = UNITY_SAMPLE_TEXCUBE(unity_SpecCube0, i.normal);

indirectLight.specular = DecodeHDR(envSample, unity_SpecCube0_HDR);
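For intuition about what the decode step does, here is a rough C# sketch of an RGBM-style decode. It is modeled on my reading of the linear color space branch of `DecodeHDR` in UnityCG.cginc; the formula and the meaning of the decode parameters (x = multiplier, y = exponent, w = whether alpha is used) should be treated as assumptions, and `HdrDecode` is a hypothetical name.

```csharp
using System;

// Standalone sketch of an RGBM-style HDR decode; not Unity's actual code.
public static class HdrDecode
{
    // data:   sampled RGBA value, the multiplier is stored in alpha.
    // decode: { x = multiplier, y = exponent, z = unused, w = 1 if alpha is used }.
    public static float[] Decode(float[] data, float[] decode)
    {
        // When w is 0, alpha collapses to 1 and the stored alpha is ignored.
        float alpha = decode[3] * (data[3] - 1f) + 1f;
        float scale = decode[0] * (float)Math.Pow(alpha, decode[1]);
        return new[] { data[0] * scale, data[1] * scale, data[2] * scale };
    }
}
```

With a multiplier of 5, an exponent of 1 and a stored alpha of 0.4, each RGB channel is scaled by 2, which is how a value stored in the 0..1 range can represent brightness above one.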

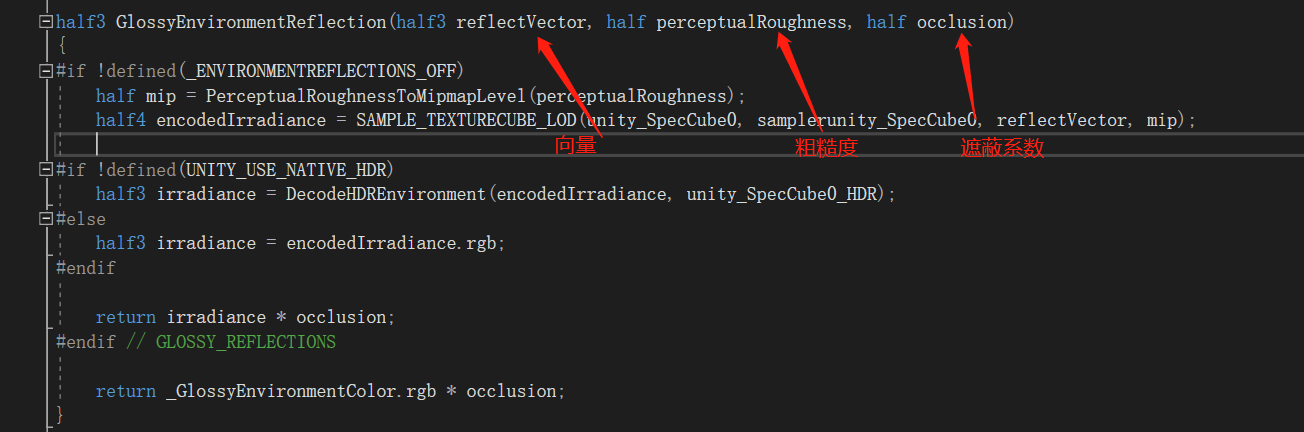

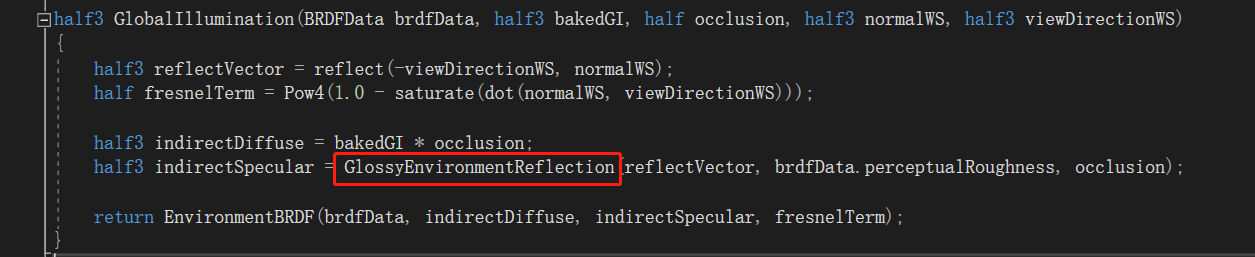

Call site:

half3 reflectVector = reflect(-viewDirectionWS, normalWS); // reflection vector
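The `reflect` intrinsic used here follows the standard formula reflect(i, n) = i - 2 * dot(n, i) * n, with n normalized. A minimal C# sketch of that formula, using plain float arrays instead of shader vector types (`ReflectSketch` is a hypothetical name):

```csharp
// Standalone sketch of the reflect intrinsic; vectors are float[3].
public static class ReflectSketch
{
    // i is the incident direction (pointing toward the surface),
    // n is the surface normal and is assumed to be normalized.
    public static float[] Reflect(float[] i, float[] n)
    {
        float d = n[0] * i[0] + n[1] * i[1] + n[2] * i[2];
        return new[]
        {
            i[0] - 2f * d * n[0],
            i[1] - 2f * d * n[1],
            i[2] - 2f * d * n[2],
        };
    }
}
```

Because the view direction points from the surface toward the camera, it is negated before the call so that it acts as the incident direction.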

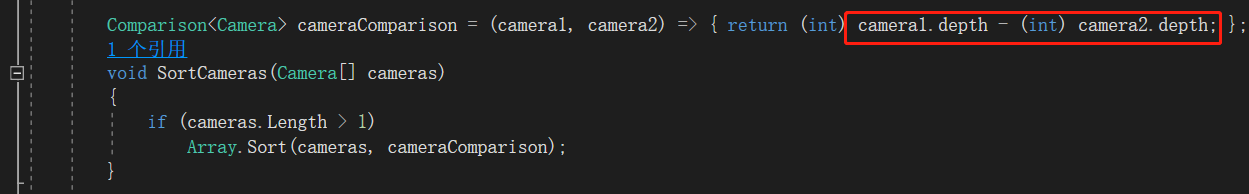

Sorted in ascending order:

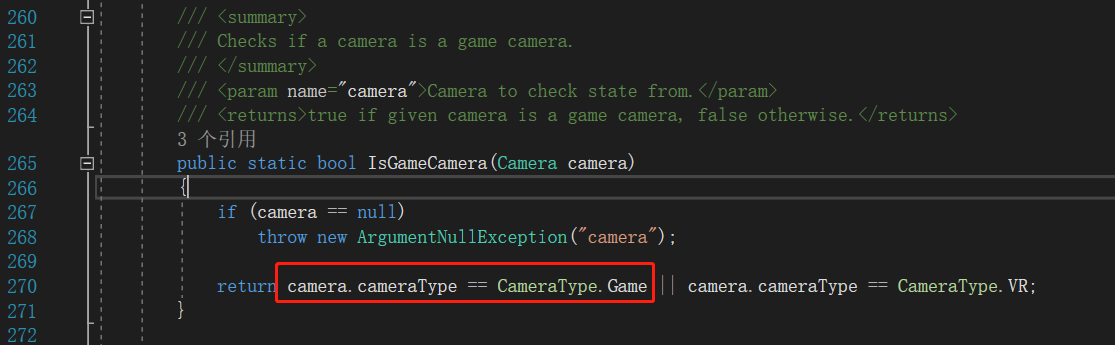
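A minimal sketch of an ascending camera sort, assuming the sort key is the camera depth value (the screenshot shows the real comparison; this is only an illustration). The `(name, depth)` tuples stand in for `UnityEngine.Camera`, and `CameraSortSketch` is a hypothetical name.

```csharp
using System;

// Standalone sketch; (name, depth) tuples are assumed stand-ins for cameras.
public static class CameraSortSketch
{
    // Returns a copy sorted by depth, lowest first, so cameras with a lower
    // depth are rendered earlier.
    public static (string name, float depth)[] SortByDepth((string name, float depth)[] cameras)
    {
        var sorted = ((string name, float depth)[])cameras.Clone();
        Array.Sort(sorted, (a, b) => a.depth.CompareTo(b.depth));
        return sorted;
    }
}
```

Note that `Array.Sort` is not a stable sort, so cameras with equal depth may end up in either order in this sketch.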

Game camera:

/// <summary>

/// Renders a camera stack. This method calls RenderSingleCamera for each valid camera in the stack.

/// The last camera resolves the final target to screen.

/// </summary>

/// <param name="context">Render context used to record commands during execution.</param>

/// <param name="camera">Camera to render.</param>

static void RenderCameraStack(ScriptableRenderContext context, Camera baseCamera)

{

baseCamera.TryGetComponent<UniversalAdditionalCameraData>(out var baseCameraAdditionalData);

// Overlay cameras will be rendered stacked while rendering base cameras

if (baseCameraAdditionalData != null && baseCameraAdditionalData.renderType == CameraRenderType.Overlay)

return;

// renderer contains a stack if it has additional data and the renderer supports stacking

var renderer = baseCameraAdditionalData?.scriptableRenderer;

bool supportsCameraStacking = renderer != null && renderer.supportedRenderingFeatures.cameraStacking;

List<Camera> cameraStack = (supportsCameraStacking) ? baseCameraAdditionalData?.cameraStack : null;

bool anyPostProcessingEnabled = baseCameraAdditionalData != null && baseCameraAdditionalData.renderPostProcessing;

anyPostProcessingEnabled &= SystemInfo.graphicsDeviceType != GraphicsDeviceType.OpenGLES2;

// We need to know the last active camera in the stack to be able to resolve

// rendering to screen when rendering it. The last camera in the stack is not

// necessarily the last active one, as users might disable it.

int lastActiveOverlayCameraIndex = -1;

if (cameraStack != null && cameraStack.Count > 0)

{

#if POST_PROCESSING_STACK_2_0_0_OR_NEWER

if (asset.postProcessingFeatureSet != PostProcessingFeatureSet.PostProcessingV2)

{

#endif

// TODO: Add support to camera stack in VR multi pass mode

if (!IsMultiPassStereoEnabled(baseCamera))

{

var baseCameraRendererType = baseCameraAdditionalData?.scriptableRenderer.GetType();

for (int i = 0; i < cameraStack.Count; ++i)

{

Camera currCamera = cameraStack[i];

if (currCamera != null && currCamera.isActiveAndEnabled)

{

currCamera.TryGetComponent<UniversalAdditionalCameraData>(out var data);

if (data == null || data.renderType != CameraRenderType.Overlay)

{

Debug.LogWarning(string.Format("Stack can only contain Overlay cameras. {0} will skip rendering.", currCamera.name));

continue;

}

var currCameraRendererType = data?.scriptableRenderer.GetType();

if (currCameraRendererType != baseCameraRendererType)

{

var renderer2DType = typeof(Experimental.Rendering.Universal.Renderer2D);

if (currCameraRendererType != renderer2DType && baseCameraRendererType != renderer2DType)

{

Debug.LogWarning(string.Format("Only cameras with compatible renderer types can be stacked. {0} will skip rendering", currCamera.name));

continue;

}

}

anyPostProcessingEnabled |= data.renderPostProcessing;

lastActiveOverlayCameraIndex = i;

}

}

}

else

{

Debug.LogWarning("Multi pass stereo mode doesn't support Camera Stacking. Overlay cameras will skip rendering.");

}

#if POST_PROCESSING_STACK_2_0_0_OR_NEWER

}

else

{

Debug.LogWarning("Post-processing V2 doesn't support Camera Stacking. Overlay cameras will skip rendering.");

}

#endif

}

bool isStackedRendering = lastActiveOverlayCameraIndex != -1;

BeginCameraRendering(context, baseCamera);

#if VISUAL_EFFECT_GRAPH_0_0_1_OR_NEWER

//It should be called before culling to prepare material. When there isn't any VisualEffect component, this method has no effect.

VFX.VFXManager.PrepareCamera(baseCamera);

#endif

UpdateVolumeFramework(baseCamera, baseCameraAdditionalData);

InitializeCameraData(baseCamera, baseCameraAdditionalData, !isStackedRendering, out var baseCameraData);

RenderSingleCamera(context, baseCameraData, anyPostProcessingEnabled);

EndCameraRendering(context, baseCamera);

if (!isStackedRendering)

return;

for (int i = 0; i < cameraStack.Count; ++i)

{

var currCamera = cameraStack[i];

if (!currCamera.isActiveAndEnabled)

continue;

currCamera.TryGetComponent<UniversalAdditionalCameraData>(out var currCameraData);

// Camera is overlay and enabled

if (currCameraData != null)

{

// Copy base settings from base camera data and initialize remaining specific settings for this camera type.

CameraData overlayCameraData = baseCameraData;

bool lastCamera = i == lastActiveOverlayCameraIndex;

BeginCameraRendering(context, currCamera);

#if VISUAL_EFFECT_GRAPH_0_0_1_OR_NEWER

//It should be called before culling to prepare material. When there isn't any VisualEffect component, this method has no effect.

VFX.VFXManager.PrepareCamera(currCamera);

#endif

UpdateVolumeFramework(currCamera, currCameraData);

InitializeAdditionalCameraData(currCamera, currCameraData, lastCamera, ref overlayCameraData);

RenderSingleCamera(context, overlayCameraData, anyPostProcessingEnabled);

EndCameraRendering(context, currCamera);

}

}

}
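The key bookkeeping in RenderCameraStack is finding the last overlay camera that will actually render, since that camera resolves the final target. A simplified sketch of that scan, where each list entry says whether the overlay camera at that index passed all checks (`null` models a missing camera reference); `CameraStackSketch` and the `bool?` encoding are assumptions made for illustration:

```csharp
using System.Collections.Generic;

// Standalone sketch of the "last active overlay camera" scan.
public static class CameraStackSketch
{
    // Returns the index of the last entry that is true, or -1 if no overlay
    // camera will render. -1 means the base camera is not stacked.
    public static int LastActiveOverlayIndex(IReadOnlyList<bool?> stack)
    {
        int last = -1;
        for (int i = 0; i < stack.Count; ++i)
        {
            if (stack[i] == true)
                last = i;
        }
        return last;
    }
}
```

When the result is -1, the base camera is initialized with `!isStackedRendering` equal to true, so it resolves to the final target itself; otherwise only the overlay camera at the returned index does.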

Rendering a single camera

/// <summary>

/// Standalone camera rendering. Use this to render procedural cameras.

/// This method doesn't call <c>BeginCameraRendering</c> and <c>EndCameraRendering</c> callbacks.

/// </summary>

/// <param name="context">Render context used to record commands during execution.</param>

/// <param name="camera">Camera to render.</param>

/// <seealso cref="ScriptableRenderContext"/>

public static void RenderSingleCamera(ScriptableRenderContext context, Camera camera)

{

UniversalAdditionalCameraData additionalCameraData = null;

if (IsGameCamera(camera))

camera.gameObject.TryGetComponent(out additionalCameraData);

if (additionalCameraData != null && additionalCameraData.renderType != CameraRenderType.Base)

{

Debug.LogWarning("Only Base cameras can be rendered with standalone RenderSingleCamera. Camera will be skipped.");

return;

}

InitializeCameraData(camera, additionalCameraData, true, out var cameraData);

RenderSingleCamera(context, cameraData, cameraData.postProcessEnabled);

}

The method that renders a single camera:

/// <summary>

/// Renders a single camera. This method will do culling, setup and execution of the renderer.

/// </summary>

/// <param name="context">Render context used to record commands during execution.</param>

/// <param name="cameraData">Camera rendering data. This might contain data inherited from a base camera.</param>

/// <param name="anyPostProcessingEnabled">True if at least one camera has post-processing enabled in the stack, false otherwise.</param>

static void RenderSingleCamera(ScriptableRenderContext context, CameraData cameraData, bool anyPostProcessingEnabled)

{

Camera camera = cameraData.camera;

var renderer = cameraData.renderer;

if (renderer == null)

{

Debug.LogWarning(string.Format("Trying to render {0} with an invalid renderer. Camera rendering will be skipped.", camera.name));

return;

}

if (!camera.TryGetCullingParameters(IsStereoEnabled(camera), out var cullingParameters))

return;

ScriptableRenderer.current = renderer;

bool isSceneViewCamera = cameraData.isSceneViewCamera;

ProfilingSampler sampler = (asset.debugLevel >= PipelineDebugLevel.Profiling) ? new ProfilingSampler(camera.name): _CameraProfilingSampler;

CommandBuffer cmd = CommandBufferPool.Get(sampler.name);

using (new ProfilingScope(cmd, sampler))

{

renderer.Clear(cameraData.renderType);

renderer.SetupCullingParameters(ref cullingParameters, ref cameraData);

context.ExecuteCommandBuffer(cmd);

cmd.Clear();

#if UNITY_EDITOR

// Emit scene view UI

if (isSceneViewCamera)

{

ScriptableRenderContext.EmitWorldGeometryForSceneView(camera);

}

#endif

var cullResults = context.Cull(ref cullingParameters);

InitializeRenderingData(asset, ref cameraData, ref cullResults, anyPostProcessingEnabled, out var renderingData);

#if ADAPTIVE_PERFORMANCE_2_0_0_OR_NEWER

if (asset.useAdaptivePerformance)

ApplyAdaptivePerformance(ref renderingData);

#endif

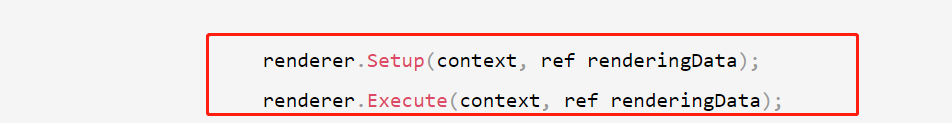

renderer.Setup(context, ref renderingData);

renderer.Execute(context, ref renderingData);

}

context.ExecuteCommandBuffer(cmd);

CommandBufferPool.Release(cmd);

context.Submit();

ScriptableRenderer.current = null;

}

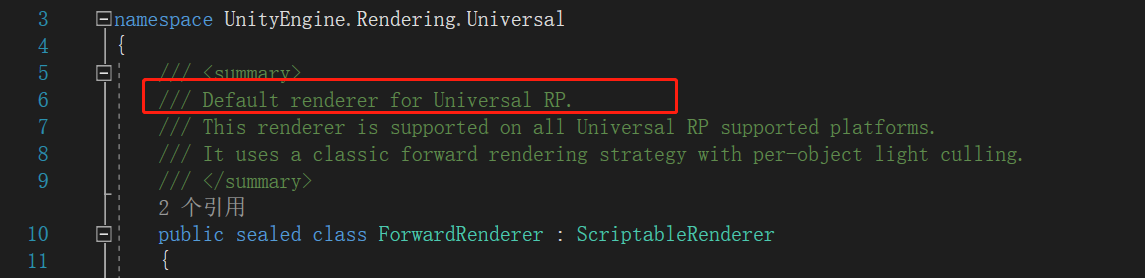

public override void Setup(ScriptableRenderContext context, ref RenderingData renderingData)

{

#if ADAPTIVE_PERFORMANCE_2_1_0_OR_NEWER

bool needTransparencyPass = !UniversalRenderPipeline.asset.useAdaptivePerformance || !AdaptivePerformance.AdaptivePerformanceRenderSettings.SkipTransparentObjects;

#endif

Camera camera = renderingData.cameraData.camera;

ref CameraData cameraData = ref renderingData.cameraData;

RenderTextureDescriptor cameraTargetDescriptor = renderingData.cameraData.cameraTargetDescriptor;

// Special path for depth only offscreen cameras. Only write opaques + transparents.

bool isOffscreenDepthTexture = cameraData.targetTexture != null && cameraData.targetTexture.format == RenderTextureFormat.Depth;

if (isOffscreenDepthTexture)

{

ConfigureCameraTarget(BuiltinRenderTextureType.CameraTarget, BuiltinRenderTextureType.CameraTarget);

for (int i = 0; i < rendererFeatures.Count; ++i)

{

if(rendererFeatures[i].isActive)

rendererFeatures[i].AddRenderPasses(this, ref renderingData);

}

EnqueuePass(m_RenderOpaqueForwardPass);

EnqueuePass(m_DrawSkyboxPass);

#if ADAPTIVE_PERFORMANCE_2_1_0_OR_NEWER

if (!needTransparencyPass)

return;

#endif

EnqueuePass(m_RenderTransparentForwardPass);

return;

}

// Should apply post-processing after rendering this camera?

bool applyPostProcessing = cameraData.postProcessEnabled;

// There's at least a camera in the camera stack that applies post-processing

bool anyPostProcessing = renderingData.postProcessingEnabled;

var postProcessFeatureSet = UniversalRenderPipeline.asset.postProcessingFeatureSet;

// We generate color LUT in the base camera only. This allows us to not break render pass execution for overlay cameras.

bool generateColorGradingLUT = cameraData.postProcessEnabled;

#if POST_PROCESSING_STACK_2_0_0_OR_NEWER

// PPv2 doesn't need to generate color grading LUT.

if (postProcessFeatureSet == PostProcessingFeatureSet.PostProcessingV2)

generateColorGradingLUT = false;

#endif

bool isSceneViewCamera = cameraData.isSceneViewCamera;

bool isPreviewCamera = cameraData.isPreviewCamera;

bool requiresDepthTexture = cameraData.requiresDepthTexture;

bool isStereoEnabled = cameraData.isStereoEnabled;

bool mainLightShadows = m_MainLightShadowCasterPass.Setup(ref renderingData);

bool additionalLightShadows = m_AdditionalLightsShadowCasterPass.Setup(ref renderingData);

bool transparentsNeedSettingsPass = m_TransparentSettingsPass.Setup(ref renderingData);

// Depth prepass is generated in the following cases:

// - If game or offscreen camera requires it we check if we can copy the depth from the rendering opaques pass and use that instead.

// - Scene or preview cameras always require a depth texture. We do a depth pre-pass to simplify it and it shouldn't matter much for editor.

bool requiresDepthPrepass = requiresDepthTexture && !CanCopyDepth(ref renderingData.cameraData);

requiresDepthPrepass |= isSceneViewCamera;

requiresDepthPrepass |= isPreviewCamera;

// The copying of depth should normally happen after rendering opaques.

// But if we only require it for post processing or the scene camera then we do it after rendering transparent objects

m_CopyDepthPass.renderPassEvent = (!requiresDepthTexture && (applyPostProcessing || isSceneViewCamera)) ? RenderPassEvent.AfterRenderingTransparents : RenderPassEvent.AfterRenderingOpaques;

// TODO: CopyDepth pass is disabled in XR due to required work to handle camera matrices in URP.

// IF this condition is removed make sure the CopyDepthPass.cs is working properly on all XR modes. This requires PureXR SDK integration.

if (isStereoEnabled && requiresDepthTexture)

requiresDepthPrepass = true;

bool isRunningHololens = false;

#if ENABLE_VR && ENABLE_VR_MODULE

isRunningHololens = UniversalRenderPipeline.IsRunningHololens(camera);

#endif

bool createColorTexture = RequiresIntermediateColorTexture(ref cameraData);

createColorTexture |= (rendererFeatures.Count != 0 && !isRunningHololens);

createColorTexture &= !isPreviewCamera;

// If camera requires depth and there's no depth pre-pass we create a depth texture that can be read later by effect requiring it.

bool createDepthTexture = cameraData.requiresDepthTexture && !requiresDepthPrepass;

createDepthTexture |= (cameraData.renderType == CameraRenderType.Base && !cameraData.resolveFinalTarget);

#if UNITY_ANDROID || UNITY_WEBGL

if (SystemInfo.graphicsDeviceType != GraphicsDeviceType.Vulkan)

{

// GLES can not use render texture's depth buffer with the color buffer of the backbuffer

// in such case we create a color texture for it too.

createColorTexture |= createDepthTexture;

}

#endif

// Configure all settings require to start a new camera stack (base camera only)

if (cameraData.renderType == CameraRenderType.Base)

{

m_ActiveCameraColorAttachment = (createColorTexture) ? m_CameraColorAttachment : RenderTargetHandle.CameraTarget;

m_ActiveCameraDepthAttachment = (createDepthTexture) ? m_CameraDepthAttachment : RenderTargetHandle.CameraTarget;

bool intermediateRenderTexture = createColorTexture || createDepthTexture;

// Doesn't create texture for Overlay cameras as they are already overlaying on top of created textures.

bool createTextures = intermediateRenderTexture;

if (createTextures)

CreateCameraRenderTarget(context, ref renderingData.cameraData);

// if rendering to intermediate render texture we don't have to create msaa backbuffer

int backbufferMsaaSamples = (intermediateRenderTexture) ? 1 : cameraTargetDescriptor.msaaSamples;

if (Camera.main == camera && camera.cameraType == CameraType.Game && cameraData.targetTexture == null)

SetupBackbufferFormat(backbufferMsaaSamples, isStereoEnabled);

}

else

{

m_ActiveCameraColorAttachment = m_CameraColorAttachment;

m_ActiveCameraDepthAttachment = m_CameraDepthAttachment;

}

ConfigureCameraTarget(m_ActiveCameraColorAttachment.Identifier(), m_ActiveCameraDepthAttachment.Identifier());

for (int i = 0; i < rendererFeatures.Count; ++i)

{

if(rendererFeatures[i].isActive)

rendererFeatures[i].AddRenderPasses(this, ref renderingData);

}

int count = activeRenderPassQueue.Count;

for (int i = count - 1; i >= 0; i--)

{

if(activeRenderPassQueue[i] == null)

activeRenderPassQueue.RemoveAt(i);

}

bool hasPassesAfterPostProcessing = activeRenderPassQueue.Find(x => x.renderPassEvent == RenderPassEvent.AfterRendering) != null;

if (mainLightShadows)

EnqueuePass(m_MainLightShadowCasterPass);

if (additionalLightShadows)

EnqueuePass(m_AdditionalLightsShadowCasterPass);

if (requiresDepthPrepass)

{

m_DepthPrepass.Setup(cameraTargetDescriptor, m_DepthTexture);

EnqueuePass(m_DepthPrepass);

}

if (generateColorGradingLUT)

{

m_ColorGradingLutPass.Setup(m_ColorGradingLut);

EnqueuePass(m_ColorGradingLutPass);

}

EnqueuePass(m_RenderOpaqueForwardPass);

#if POST_PROCESSING_STACK_2_0_0_OR_NEWER

#pragma warning disable 0618 // Obsolete

bool hasOpaquePostProcessCompat = applyPostProcessing &&

postProcessFeatureSet == PostProcessingFeatureSet.PostProcessingV2 &&

renderingData.cameraData.postProcessLayer.HasOpaqueOnlyEffects(RenderingUtils.postProcessRenderContext);

if (hasOpaquePostProcessCompat)

{

m_OpaquePostProcessPassCompat.Setup(cameraTargetDescriptor, m_ActiveCameraColorAttachment, m_ActiveCameraColorAttachment);

EnqueuePass(m_OpaquePostProcessPassCompat);

}

#pragma warning restore 0618

#endif

bool isOverlayCamera = cameraData.renderType == CameraRenderType.Overlay;

if (camera.clearFlags == CameraClearFlags.Skybox && RenderSettings.skybox != null && !isOverlayCamera)

EnqueuePass(m_DrawSkyboxPass);

// If a depth texture was created we necessarily need to copy it, otherwise we could have render it to a renderbuffer

if (!requiresDepthPrepass && renderingData.cameraData.requiresDepthTexture && createDepthTexture)

{

m_CopyDepthPass.Setup(m_ActiveCameraDepthAttachment, m_DepthTexture);

EnqueuePass(m_CopyDepthPass);

}

if (renderingData.cameraData.requiresOpaqueTexture)

{

// TODO: Downsampling method should be store in the renderer instead of in the asset.

// We need to migrate this data to renderer. For now, we query the method in the active asset.

Downsampling downsamplingMethod = UniversalRenderPipeline.asset.opaqueDownsampling;

m_CopyColorPass.Setup(m_ActiveCameraColorAttachment.Identifier(), m_OpaqueColor, downsamplingMethod);

EnqueuePass(m_CopyColorPass);

}

#if ADAPTIVE_PERFORMANCE_2_1_0_OR_NEWER

if (needTransparencyPass)

#endif

{

if (transparentsNeedSettingsPass)

{

EnqueuePass(m_TransparentSettingsPass);

}

EnqueuePass(m_RenderTransparentForwardPass);

}

EnqueuePass(m_OnRenderObjectCallbackPass);

bool lastCameraInTheStack = cameraData.resolveFinalTarget;

bool hasCaptureActions = renderingData.cameraData.captureActions != null && lastCameraInTheStack;

bool applyFinalPostProcessing = anyPostProcessing && lastCameraInTheStack &&

renderingData.cameraData.antialiasing == AntialiasingMode.FastApproximateAntialiasing;

// When post-processing is enabled we can use the stack to resolve rendering to camera target (screen or RT).

// However when there are render passes executing after post we avoid resolving to screen so rendering continues (before sRGBConvertion etc)

bool resolvePostProcessingToCameraTarget = !hasCaptureActions && !hasPassesAfterPostProcessing && !applyFinalPostProcessing;

#region Post-processing v2 support

#if POST_PROCESSING_STACK_2_0_0_OR_NEWER

// To keep things clean we'll separate the logic from builtin PP and PPv2 - expect some copy/pasting

if (postProcessFeatureSet == PostProcessingFeatureSet.PostProcessingV2)

{

// if we have additional filters

// we need to stay in a RT

if (hasPassesAfterPostProcessing)

{

// perform post with src / dest the same

if (applyPostProcessing)

{

m_PostProcessPassCompat.Setup(cameraTargetDescriptor, m_ActiveCameraColorAttachment, m_ActiveCameraColorAttachment);

EnqueuePass(m_PostProcessPassCompat);

}

//now blit into the final target

if (m_ActiveCameraColorAttachment != RenderTargetHandle.CameraTarget)

{

if (renderingData.cameraData.captureActions != null)

{

m_CapturePass.Setup(m_ActiveCameraColorAttachment);

EnqueuePass(m_CapturePass);

}

m_FinalBlitPass.Setup(cameraTargetDescriptor, m_ActiveCameraColorAttachment);

EnqueuePass(m_FinalBlitPass);

}

}

else

{

if (applyPostProcessing)

{

m_PostProcessPassCompat.Setup(cameraTargetDescriptor, m_ActiveCameraColorAttachment, RenderTargetHandle.CameraTarget);

EnqueuePass(m_PostProcessPassCompat);

}

else if (m_ActiveCameraColorAttachment != RenderTargetHandle.CameraTarget)

{

m_FinalBlitPass.Setup(cameraTargetDescriptor, m_ActiveCameraColorAttachment);

EnqueuePass(m_FinalBlitPass);

}

}

}

else

#endif

#endregion

{

if (lastCameraInTheStack)

{

// Post-processing will resolve to final target. No need for final blit pass.

if (applyPostProcessing)

{

var destination = resolvePostProcessingToCameraTarget ? RenderTargetHandle.CameraTarget : m_AfterPostProcessColor;

// if resolving to screen we need to be able to perform sRGBConvertion in post-processing if necessary

bool doSRGBConvertion = resolvePostProcessingToCameraTarget;

m_PostProcessPass.Setup(cameraTargetDescriptor, m_ActiveCameraColorAttachment, destination, m_ActiveCameraDepthAttachment, m_ColorGradingLut, applyFinalPostProcessing, doSRGBConvertion);

EnqueuePass(m_PostProcessPass);

}

if (renderingData.cameraData.captureActions != null)

{

m_CapturePass.Setup(m_ActiveCameraColorAttachment);

EnqueuePass(m_CapturePass);

}

// if we applied post-processing for this camera it means current active texture is m_AfterPostProcessColor

var sourceForFinalPass = (applyPostProcessing) ? m_AfterPostProcessColor : m_ActiveCameraColorAttachment;

// Do FXAA or any other final post-processing effect that might need to run after AA.

if (applyFinalPostProcessing)

{

m_FinalPostProcessPass.SetupFinalPass(sourceForFinalPass);

EnqueuePass(m_FinalPostProcessPass);

}

// if post-processing then we already resolved to camera target while doing post.

// Also only do final blit if camera is not rendering to RT.

bool cameraTargetResolved =

// final PP always blit to camera target

applyFinalPostProcessing ||

// no final PP but we have PP stack. In that case it blit unless there are render pass after PP

(applyPostProcessing && !hasPassesAfterPostProcessing) ||

// offscreen camera rendering to a texture, we don't need a blit pass to resolve to screen

m_ActiveCameraColorAttachment == RenderTargetHandle.CameraTarget;

// We need final blit to resolve to screen

if (!cameraTargetResolved)

{

m_FinalBlitPass.Setup(cameraTargetDescriptor, sourceForFinalPass);

EnqueuePass(m_FinalBlitPass);

}

}

// stay in RT so we resume rendering on stack after post-processing

else if (applyPostProcessing)

{

m_PostProcessPass.Setup(cameraTargetDescriptor, m_ActiveCameraColorAttachment, m_AfterPostProcessColor, m_ActiveCameraDepthAttachment, m_ColorGradingLut, false, false);

EnqueuePass(m_PostProcessPass);

}

}

#if UNITY_EDITOR

if (isSceneViewCamera)

{

// Scene view camera should always resolve target (not stacked)

Assertions.Assert.IsTrue(lastCameraInTheStack, "Editor camera must resolve target upon finish rendering.");

m_SceneViewDepthCopyPass.Setup(m_DepthTexture);

EnqueuePass(m_SceneViewDepthCopyPass);

}

#endif

}
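The depth-related flags in Setup interact in a way that is easy to lose track of. The sketch below restates just that part of the logic as a pure function over booleans, following the conditions in the code above. `DepthDecisionSketch` and its parameter names are hypothetical, and `canCopyDepth` stands for the result of `CanCopyDepth`.

```csharp
// Standalone sketch of the depth prepass / copy depth decision in Setup.
public static class DepthDecisionSketch
{
    public static (bool prepass, bool createDepthTexture, bool copyDepth) Decide(
        bool requiresDepthTexture, bool canCopyDepth, bool isSceneView, bool isPreview,
        bool isStereo, bool isBaseCamera, bool resolveFinalTarget)
    {
        // A prepass is needed when depth is required but cannot be copied,
        // and always for scene view and preview cameras.
        bool prepass = requiresDepthTexture && !canCopyDepth;
        prepass |= isSceneView || isPreview;

        // The copy depth pass is disabled in XR, so stereo falls back to a prepass.
        if (isStereo && requiresDepthTexture)
            prepass = true;

        // Without a prepass, depth is rendered into a texture that can be read later.
        bool createDepthTexture = requiresDepthTexture && !prepass;
        createDepthTexture |= isBaseCamera && !resolveFinalTarget;

        // The copy pass is only enqueued when the created texture is actually needed.
        bool copyDepth = !prepass && requiresDepthTexture && createDepthTexture;
        return (prepass, createDepthTexture, copyDepth);
    }
}
```

For example, a base camera that needs depth and can copy it gets no prepass but does get a depth texture and a copy pass, while a base camera with overlays stacked on top creates a depth texture even if it does not need depth itself.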

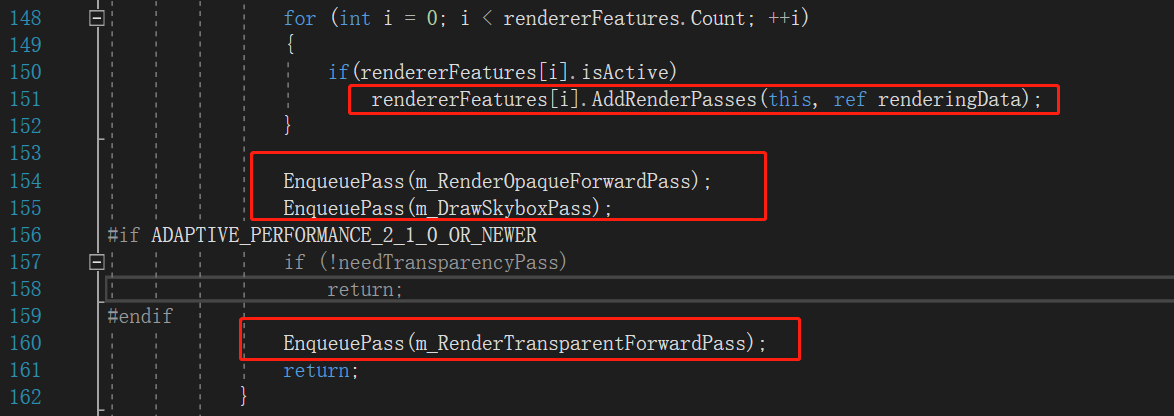

Enqueue the renderer feature passes (custom ones, for example water).

Enqueue the opaque object pass.

Enqueue the skybox pass.

Enqueue the transparent pass.
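The order in which passes are enqueued is not the order in which they run: the queue is sorted by render pass event before execution, and passes with the same event keep their enqueue order. A minimal sketch of such a stable insertion sort, with `(name, evt)` tuples standing in for `ScriptableRenderPass`. The integer event values in the usage note are illustrative assumptions, and `PassQueueSketch` is a hypothetical name.

```csharp
using System.Collections.Generic;

// Standalone sketch of a stable sort over an enqueued pass list.
public static class PassQueueSketch
{
    // Insertion sort: an element only moves past entries with a strictly
    // greater event, so passes with equal events keep their enqueue order.
    public static void SortStable(List<(string name, int evt)> queue)
    {
        for (int i = 1; i < queue.Count; ++i)
        {
            var curr = queue[i];
            int j = i - 1;
            for (; j >= 0 && curr.evt < queue[j].evt; --j)
                queue[j + 1] = queue[j];
            queue[j + 1] = curr;
        }
    }
}
```

If a custom water pass (event 450) is enqueued first, followed by opaque (250), skybox (350) and transparent (450), the sorted order is opaque, skybox, water, transparent: the water pass stays ahead of the transparent pass because it was enqueued earlier with the same event.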

The Execute method of ScriptableRenderer:

Full code:

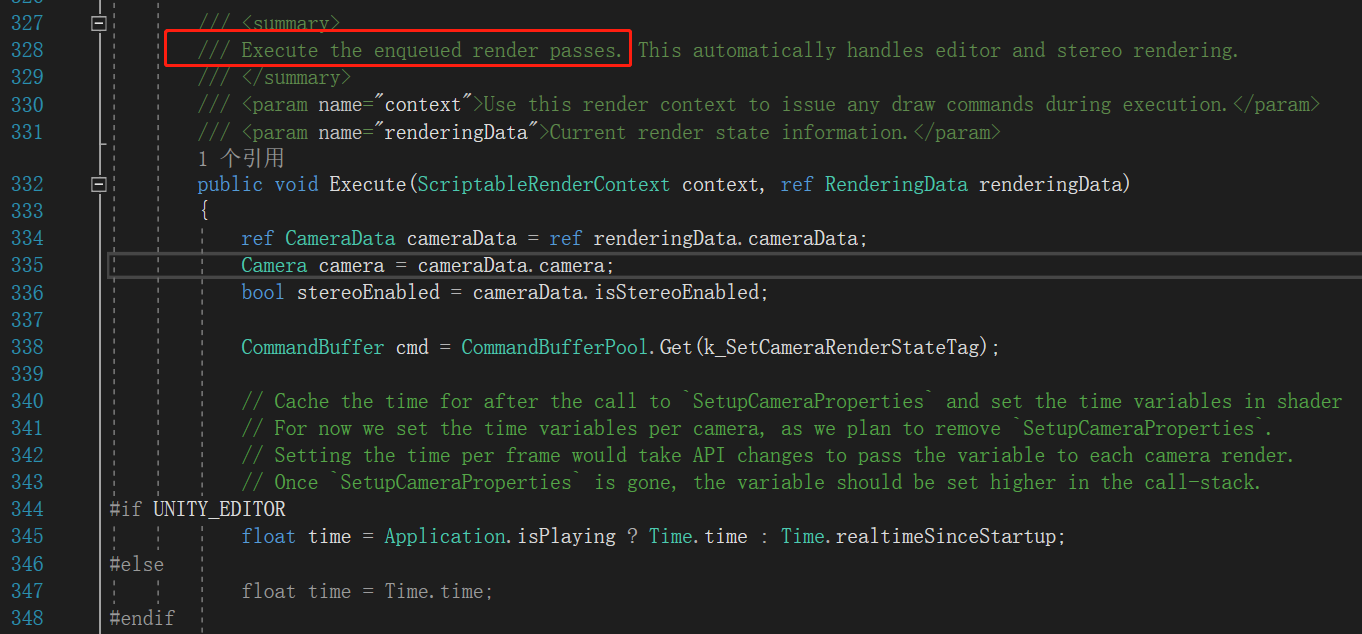

/// <summary>

/// Execute the enqueued render passes. This automatically handles editor and stereo rendering.

/// </summary>

/// <param name="context">Use this render context to issue any draw commands during execution.</param>

/// <param name="renderingData">Current render state information.</param>

public void Execute(ScriptableRenderContext context, ref RenderingData renderingData)

{

ref CameraData cameraData = ref renderingData.cameraData;

Camera camera = cameraData.camera;

bool stereoEnabled = cameraData.isStereoEnabled;

CommandBuffer cmd = CommandBufferPool.Get(k_SetCameraRenderStateTag);

// Cache the time for after the call to `SetupCameraProperties` and set the time variables in shader

// For now we set the time variables per camera, as we plan to remove `SetupCameraProperties`.

// Setting the time per frame would take API changes to pass the variable to each camera render.

// Once `SetupCameraProperties` is gone, the variable should be set higher in the call-stack.

#if UNITY_EDITOR

float time = Application.isPlaying ? Time.time : Time.realtimeSinceStartup;

#else

float time = Time.time;

#endif

float deltaTime = Time.deltaTime;

float smoothDeltaTime = Time.smoothDeltaTime;

// Initialize Camera Render State

ClearRenderingState(cmd);

SetPerCameraShaderVariables(cmd, ref cameraData);

SetShaderTimeValues(cmd, time, deltaTime, smoothDeltaTime);

context.ExecuteCommandBuffer(cmd);

cmd.Clear();

// Sort the render pass queue

SortStable(m_ActiveRenderPassQueue);

// Upper limits for each block. Each block will contain render passes with events below the limit.

NativeArray<RenderPassEvent> blockEventLimits = new NativeArray<RenderPassEvent>(k_RenderPassBlockCount, Allocator.Temp);

blockEventLimits[RenderPassBlock.BeforeRendering] = RenderPassEvent.BeforeRenderingPrepasses;

blockEventLimits[RenderPassBlock.MainRenderingOpaque] = RenderPassEvent.AfterRenderingOpaques;

blockEventLimits[RenderPassBlock.MainRenderingTransparent] = RenderPassEvent.AfterRenderingPostProcessing;

blockEventLimits[RenderPassBlock.AfterRendering] = (RenderPassEvent)Int32.MaxValue;

NativeArray<int> blockRanges = new NativeArray<int>(blockEventLimits.Length + 1, Allocator.Temp);

// blockRanges[0] is always 0

// blockRanges[i] is the index of the first RenderPass found in m_ActiveRenderPassQueue that has a ScriptableRenderPass.renderPassEvent higher than blockEventLimits[i] (i.e, should be executed after blockEventLimits[i])

// blockRanges[blockEventLimits.Length] is m_ActiveRenderPassQueue.Count

FillBlockRanges(blockEventLimits, blockRanges);

blockEventLimits.Dispose();

SetupLights(context, ref renderingData);

// Before Render Block. This render blocks always execute in mono rendering.

// Camera is not setup. Lights are not setup.

// Used to render input textures like shadowmaps.

ExecuteBlock(RenderPassBlock.BeforeRendering, blockRanges, context, ref renderingData);

for (int eyeIndex = 0; eyeIndex < renderingData.cameraData.numberOfXRPasses; ++eyeIndex)

{

// This is still required because of the following reasons:

// - XR Camera Matrices. This condition should be lifted when Pure XR SDK lands.

// - Camera billboard properties.

// - Camera frustum planes: unity_CameraWorldClipPlanes[6]

// - _ProjectionParams.x logic is deep inside GfxDevice

// NOTE: The only reason we have to call this here and not at the beginning (before shadows)

// is because this need to be called for each eye in multi pass VR.

// The side effect is that this will override some shader properties we already setup and we will have to

// reset them.

context.SetupCameraProperties(camera, stereoEnabled, eyeIndex);

SetCameraMatrices(cmd, ref cameraData, true);

// Reset shader time variables as they were overridden in SetupCameraProperties. If we don't do it we might have a mismatch between shadows and main rendering

SetShaderTimeValues(cmd, time, deltaTime, smoothDeltaTime);

#if VISUAL_EFFECT_GRAPH_0_0_1_OR_NEWER

//Triggers dispatch per camera, all global parameters should have been setup at this stage.

VFX.VFXManager.ProcessCameraCommand(camera, cmd);

#endif

context.ExecuteCommandBuffer(cmd);

cmd.Clear();

if (stereoEnabled)

BeginXRRendering(context, camera, eyeIndex);

// In the opaque and transparent blocks the main rendering executes.

// Opaque blocks...

ExecuteBlock(RenderPassBlock.MainRenderingOpaque, blockRanges, context, ref renderingData, eyeIndex);

// Transparent blocks...

ExecuteBlock(RenderPassBlock.MainRenderingTransparent, blockRanges, context, ref renderingData, eyeIndex);

// Draw Gizmos...

DrawGizmos(context, camera, GizmoSubset.PreImageEffects);

// In this block after rendering drawing happens, e.g, post processing, video player capture.

ExecuteBlock(RenderPassBlock.AfterRendering, blockRanges, context, ref renderingData, eyeIndex);

if (stereoEnabled)

EndXRRendering(context, renderingData, eyeIndex);

}

DrawGizmos(context, camera, GizmoSubset.PostImageEffects);

InternalFinishRendering(context, cameraData.resolveFinalTarget);

blockRanges.Dispose();

CommandBufferPool.Release(cmd);

}
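Execute splits the sorted pass queue into blocks using `blockEventLimits` and `blockRanges`. The body of `FillBlockRanges` is not shown in these notes, so the following is only a sketch of how such a partition can work, with plain integers in place of `RenderPassEvent`. The strict `<` comparison and the numeric values in the usage note are assumptions; `BlockRangeSketch` is a hypothetical name.

```csharp
using System.Collections.Generic;

// Standalone sketch of partitioning a sorted pass queue into blocks.
public static class BlockRangeSketch
{
    // sortedEvents: pass events in ascending order.
    // limits:       upper event limit of each block.
    // Returns limits.Count + 1 indices; block b covers [ranges[b], ranges[b + 1]).
    public static int[] Fill(IReadOnlyList<int> sortedEvents, IReadOnlyList<int> limits)
    {
        var ranges = new int[limits.Count + 1];
        int pass = 0;
        ranges[0] = 0;

        for (int i = 0; i < limits.Count - 1; ++i)
        {
            // Advance past every pass whose event is below this block's limit.
            while (pass < sortedEvents.Count && sortedEvents[pass] < limits[i])
                ++pass;
            ranges[i + 1] = pass;
        }

        // The last block always ends at the end of the queue.
        ranges[limits.Count] = sortedEvents.Count;
        return ranges;
    }
}
```

With events `[50, 250, 350, 450, 1000]` and limits `[150, 300, 600, int.MaxValue]`, the sketch yields `[0, 1, 2, 4, 5]`: one pass before rendering, one in the opaque block, two in the transparent block, and one after rendering.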

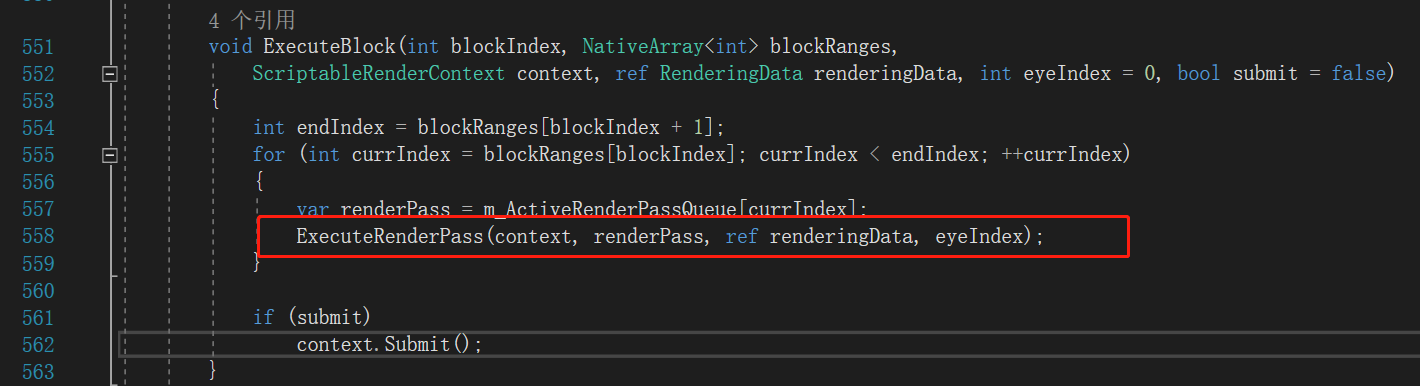

void ExecuteRenderPass(ScriptableRenderContext context, ScriptableRenderPass renderPass, ref RenderingData renderingData, int eyeIndex)

{

ref CameraData cameraData = ref renderingData.cameraData;

Camera camera = cameraData.camera;

bool firstTimeStereo = false;

CommandBuffer cmd = CommandBufferPool.Get(k_SetRenderTarget);

renderPass.Configure(cmd, cameraData.cameraTargetDescriptor);

renderPass.eyeIndex = eyeIndex;

SetRenderPassAttachments(cmd, renderPass, ref cameraData, ref firstTimeStereo);

// We must execute the commands recorded at this point because potential call to context.StartMultiEye(cameraData.camera) below will alter internal renderer states

// Also, we execute the commands recorded at this point to ensure SetRenderTarget is called before RenderPass.Execute

context.ExecuteCommandBuffer(cmd);

CommandBufferPool.Release(cmd);

if (firstTimeStereo && cameraData.isStereoEnabled )

{

// The following call alters internal renderer states (we can think of some of the states as global states).

// So any cmd recorded before must be executed before calling into that built-in call.

context.StartMultiEye(camera, eyeIndex);

XRUtils.DrawOcclusionMesh(cmd, camera);

}

renderPass.Execute(context, ref renderingData);

}

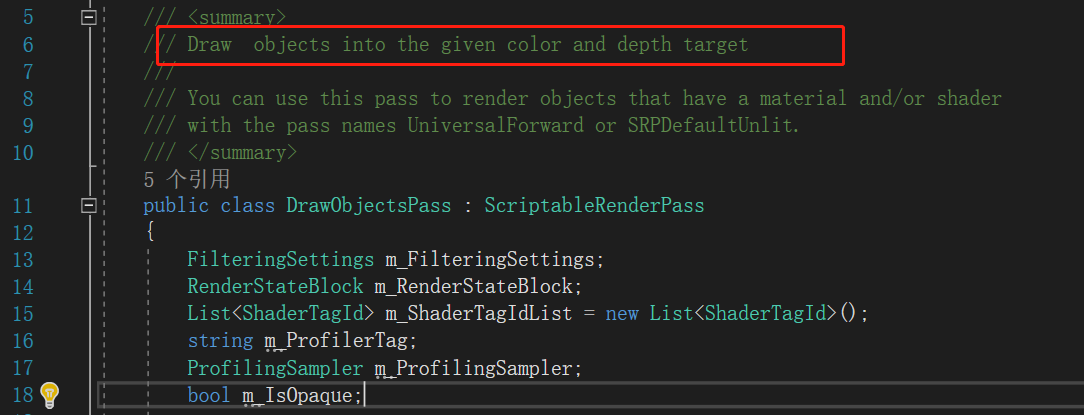

Where to draw:

void SetRenderPassAttachments(CommandBuffer cmd, ScriptableRenderPass renderPass, ref CameraData cameraData, ref bool firstTimeStereo)

{

Camera camera = cameraData.camera;

ClearFlag cameraClearFlag = GetCameraClearFlag(ref cameraData);

// Invalid configuration - use current attachment setup

// Note: we only check color buffers. This is only technically correct because for shadowmaps and depth only passes

// we bind depth as color and Unity handles it underneath. so we never have a situation that all color buffers are null and depth is bound.

uint validColorBuffersCount = RenderingUtils.GetValidColorBufferCount(renderPass.colorAttachments);

if (validColorBuffersCount == 0)

return;

// We use a different code path for MRT since it calls a different version of API SetRenderTarget

if (RenderingUtils.IsMRT(renderPass.colorAttachments))

{

// In the MRT path we assume that all color attachments are REAL color attachments,

// and that the depth attachment is a REAL depth attachment too.

// Determine what attachments need to be cleared. ----------------

bool needCustomCameraColorClear = false;

bool needCustomCameraDepthClear = false;

int cameraColorTargetIndex = RenderingUtils.IndexOf(renderPass.colorAttachments, m_CameraColorTarget);

if (cameraColorTargetIndex != -1 && (m_FirstTimeCameraColorTargetIsBound || (cameraData.isXRMultipass && m_XRRenderTargetNeedsClear) ))

{

m_FirstTimeCameraColorTargetIsBound = false; // register that we did clear the camera target the first time it was bound

firstTimeStereo = true;

// Overlay cameras composite on top of previous ones. They don't clear.

// MTT: Commented out because it is not implemented yet

// if (renderingData.cameraData.renderType == CameraRenderType.Overlay)

// clearFlag = ClearFlag.None;

// We need to specifically clear the camera color target.

// But there is still a chance we don't need to issue individual clear() on each render-targets if they all have the same clear parameters.

needCustomCameraColorClear = (cameraClearFlag & ClearFlag.Color) != (renderPass.clearFlag & ClearFlag.Color)

|| CoreUtils.ConvertSRGBToActiveColorSpace(camera.backgroundColor) != renderPass.clearColor;

if(cameraData.isXRMultipass && m_XRRenderTargetNeedsClear)

// For multipass mode, if m_XRRenderTargetNeedsClear == true, then both color and depth buffer need clearing (not just color)

needCustomCameraDepthClear = (cameraClearFlag & ClearFlag.Depth) != (renderPass.clearFlag & ClearFlag.Depth);

m_XRRenderTargetNeedsClear = false; // register that the XR camera multi-pass target does not need clear any more (until next call to BeginXRRendering)

}

// Note: if we have to give up the assumption that no depthTarget can be included in the MRT colorAttachments, we might need something like this:

// int cameraTargetDepthIndex = IndexOf(renderPass.colorAttachments, m_CameraDepthTarget);

// if( !renderTargetAlreadySet && cameraTargetDepthIndex != -1 && m_FirstTimeCameraDepthTargetIsBound)

// { ...

// }

if (renderPass.depthAttachment == m_CameraDepthTarget && m_FirstTimeCameraDepthTargetIsBound)

// note: should m_XRRenderTargetNeedsClear be split into m_XRColorTargetNeedsClear and m_XRDepthTargetNeedsClear, with m_XRDepthTargetNeedsClear used here?

{

m_FirstTimeCameraDepthTargetIsBound = false;

//firstTimeStereo = true; // <- we do not call this here as the first render pass might be a shadow pass (non-stereo)

needCustomCameraDepthClear = (cameraClearFlag & ClearFlag.Depth) != (renderPass.clearFlag & ClearFlag.Depth);

//m_XRRenderTargetNeedsClear = false; // note: is it possible that XR camera multi-pass target gets clear first when bound as depth target?

// in this case we might need to register that it does not need clearing any more (until the next call to BeginXRRendering)

}

// Perform all clear operations needed. ----------------

// We try to minimize calls to SetRenderTarget().

// We get here only if cameraColorTarget needs to be handled separately from the rest of the color attachments.

if (needCustomCameraColorClear)

{

// Clear camera color render-target separately from the rest of the render-targets.

if ((cameraClearFlag & ClearFlag.Color) != 0)

SetRenderTarget(cmd, renderPass.colorAttachments[cameraColorTargetIndex], renderPass.depthAttachment, ClearFlag.Color, CoreUtils.ConvertSRGBToActiveColorSpace(camera.backgroundColor));

if ((renderPass.clearFlag & ClearFlag.Color) != 0)

{

uint otherTargetsCount = RenderingUtils.CountDistinct(renderPass.colorAttachments, m_CameraColorTarget);

var nonCameraAttachments = m_TrimmedColorAttachmentCopies[otherTargetsCount];

int writeIndex = 0;

for (int readIndex = 0; readIndex < renderPass.colorAttachments.Length; ++readIndex)

{

if (renderPass.colorAttachments[readIndex] != m_CameraColorTarget && renderPass.colorAttachments[readIndex] != 0)

{

nonCameraAttachments[writeIndex] = renderPass.colorAttachments[readIndex];

++writeIndex;

}

}

if (writeIndex != otherTargetsCount)

Debug.LogError("writeIndex and otherTargetsCount values differed. writeIndex:" + writeIndex + " otherTargetsCount:" + otherTargetsCount);

SetRenderTarget(cmd, nonCameraAttachments, m_CameraDepthTarget, ClearFlag.Color, renderPass.clearColor);

}

}

// Bind all attachments, clear color only if there was no custom behaviour for cameraColorTarget, clear depth as needed.

ClearFlag finalClearFlag = ClearFlag.None;

finalClearFlag |= needCustomCameraDepthClear ? (cameraClearFlag & ClearFlag.Depth) : (renderPass.clearFlag & ClearFlag.Depth);

finalClearFlag |= needCustomCameraColorClear ? 0 : (renderPass.clearFlag & ClearFlag.Color);

// Only setup render target if current render pass attachments are different from the active ones.

if (!RenderingUtils.SequenceEqual(renderPass.colorAttachments, m_ActiveColorAttachments) || renderPass.depthAttachment != m_ActiveDepthAttachment || finalClearFlag != ClearFlag.None)

{

int lastValidRTindex = RenderingUtils.LastValid(renderPass.colorAttachments);

if (lastValidRTindex >= 0)

{

int rtCount = lastValidRTindex + 1;

var trimmedAttachments = m_TrimmedColorAttachmentCopies[rtCount];

for (int i = 0; i < rtCount; ++i)

trimmedAttachments[i] = renderPass.colorAttachments[i];

SetRenderTarget(cmd, trimmedAttachments, renderPass.depthAttachment, finalClearFlag, renderPass.clearColor);

}

}

}

else

{

// Currently in non-MRT case, color attachment can actually be a depth attachment.

RenderTargetIdentifier passColorAttachment = renderPass.colorAttachment;

RenderTargetIdentifier passDepthAttachment = renderPass.depthAttachment;

// When render pass doesn't call ConfigureTarget we assume it's expected to render to camera target

// which might be backbuffer or the framebuffer render textures.

if (!renderPass.overrideCameraTarget)

{

// For passes that run before main rendering, the default attachment is whatever is currently active,

// so return early and leave the current render target setup unchanged.

if (renderPass.renderPassEvent < RenderPassEvent.BeforeRenderingOpaques)

return;

passColorAttachment = m_CameraColorTarget;

passDepthAttachment = m_CameraDepthTarget;

}

ClearFlag finalClearFlag = ClearFlag.None;

Color finalClearColor;

if (passColorAttachment == m_CameraColorTarget && (m_FirstTimeCameraColorTargetIsBound || (cameraData.isXRMultipass && m_XRRenderTargetNeedsClear)))

{

m_FirstTimeCameraColorTargetIsBound = false; // register that we did clear the camera target the first time it was bound

finalClearFlag |= (cameraClearFlag & ClearFlag.Color);

finalClearColor = CoreUtils.ConvertSRGBToActiveColorSpace(camera.backgroundColor);

firstTimeStereo = true;

if (m_FirstTimeCameraDepthTargetIsBound || (cameraData.isXRMultipass && m_XRRenderTargetNeedsClear))

{

// m_CameraColorTarget can be an opaque pointer to a RenderTexture with depth-surface.

// We cannot infer this information here, so we must assume both camera color and depth are first-time bound here (this is the legacy behaviour).

m_FirstTimeCameraDepthTargetIsBound = false;

finalClearFlag |= (cameraClearFlag & ClearFlag.Depth);

}

m_XRRenderTargetNeedsClear = false; // register that the XR camera multi-pass target does not need clear any more (until next call to BeginXRRendering)

}

else

{

finalClearFlag |= (renderPass.clearFlag & ClearFlag.Color);

finalClearColor = renderPass.clearColor;

}

// Condition (m_CameraDepthTarget!=BuiltinRenderTextureType.CameraTarget) below prevents m_FirstTimeCameraDepthTargetIsBound flag from being reset during non-camera passes (such as Color Grading LUT). This ensures that in those cases, cameraDepth will actually be cleared during the later camera pass.

if ( (m_CameraDepthTarget!=BuiltinRenderTextureType.CameraTarget ) && (passDepthAttachment == m_CameraDepthTarget || passColorAttachment == m_CameraDepthTarget) && m_FirstTimeCameraDepthTargetIsBound )

// note: should m_XRRenderTargetNeedsClear be split into m_XRColorTargetNeedsClear and m_XRDepthTargetNeedsClear, with m_XRDepthTargetNeedsClear used here?

{

m_FirstTimeCameraDepthTargetIsBound = false;

finalClearFlag |= (cameraClearFlag & ClearFlag.Depth);

//firstTimeStereo = true; // <- we do not call this here as the first render pass might be a shadow pass (non-stereo)

// finalClearFlag |= (cameraClearFlag & ClearFlag.Color); // <- m_CameraDepthTarget is never a color-surface, so no need to add this here.

//m_XRRenderTargetNeedsClear = false; // note: is it possible that XR camera multi-pass target gets clear first when bound as depth target?

// in this case we might need to register that it does not need clearing any more (until the next call to BeginXRRendering)

}

else

finalClearFlag |= (renderPass.clearFlag & ClearFlag.Depth);

// Only setup render target if current render pass attachments are different from the active ones

if (passColorAttachment != m_ActiveColorAttachments[0] || passDepthAttachment != m_ActiveDepthAttachment || finalClearFlag != ClearFlag.None)

SetRenderTarget(cmd, passColorAttachment, passDepthAttachment, finalClearFlag, finalClearColor);

}

}
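The non-MRT branch above boils down to a small decision: the first time the camera color or depth target is bound, the camera's clear flags are used, and on later binds the pass's own clear flags apply. Below is a minimal sketch of that decision only, assuming a stand-in `ClearFlag` enum so it compiles without Unity. `ClearFlagSketch`, `Resolve` and its parameters are hypothetical names, and the XR multipass and `BuiltinRenderTextureType.CameraTarget` conditions from the real code are deliberately left out.

```csharp
using System;

// Hypothetical stand-in for UnityEngine.Rendering.ClearFlag so the sketch compiles without Unity.
[Flags]
enum ClearFlag { None = 0, Color = 1, Depth = 2, All = Color | Depth }

static class ClearFlagSketch
{
    // Simplified non-MRT decision; XR multipass and the CameraTarget special case are omitted.
    public static ClearFlag Resolve(
        ClearFlag cameraFlags, ClearFlag passFlags,
        bool bindsCameraColor, bool bindsCameraDepth,
        ref bool firstColorBind, ref bool firstDepthBind)
    {
        ClearFlag result = ClearFlag.None;

        if (bindsCameraColor && firstColorBind)
        {
            // First bind of the camera color target: take the camera's color clear bit.
            firstColorBind = false;
            result |= cameraFlags & ClearFlag.Color;

            // The color target may carry a depth surface, so depth is treated as first-bound too.
            if (firstDepthBind)
            {
                firstDepthBind = false;
                result |= cameraFlags & ClearFlag.Depth;
            }
        }
        else
        {
            result |= passFlags & ClearFlag.Color;
        }

        if (bindsCameraDepth && firstDepthBind)
        {
            // First bind of the camera depth target: take the camera's depth clear bit.
            firstDepthBind = false;
            result |= cameraFlags & ClearFlag.Depth;
        }
        else
        {
            result |= passFlags & ClearFlag.Depth;
        }

        return result;
    }

    static void Main()
    {
        bool firstColor = true, firstDepth = true;

        // First pass that binds the camera targets: the camera's flags decide the clear.
        ClearFlag first = Resolve(ClearFlag.All, ClearFlag.None, true, true, ref firstColor, ref firstDepth);

        // A later pass on the same targets: only the pass's own flags are considered.
        ClearFlag second = Resolve(ClearFlag.All, ClearFlag.None, true, true, ref firstColor, ref firstDepth);

        Console.WriteLine(first);
        Console.WriteLine(second);
    }
}
```

With these inputs the first call yields both the color and depth bits, and the second yields `ClearFlag.None`, which is why a later pass that requests no clear leaves the camera target's contents intact.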

Setting the target:

internal static void SetRenderTarget(CommandBuffer cmd, RenderTargetIdentifier colorAttachment, RenderTargetIdentifier depthAttachment, ClearFlag clearFlag, Color clearColor)

{

m_ActiveColorAttachments[0] = colorAttachment;

for (int i = 1; i < m_ActiveColorAttachments.Length; ++i)

m_ActiveColorAttachments[i] = 0;

m_ActiveDepthAttachment = depthAttachment;

RenderBufferLoadAction colorLoadAction = ((uint)clearFlag & (uint)ClearFlag.Color) != 0 ?

RenderBufferLoadAction.DontCare : RenderBufferLoadAction.Load;

RenderBufferLoadAction depthLoadAction = ((uint)clearFlag & (uint)ClearFlag.Depth) != 0 ?

RenderBufferLoadAction.DontCare : RenderBufferLoadAction.Load;

TextureDimension dimension = (m_InsideStereoRenderBlock) ? XRGraphics.eyeTextureDesc.dimension : TextureDimension.Tex2D;

SetRenderTarget(cmd, colorAttachment, colorLoadAction, RenderBufferStoreAction.Store,

depthAttachment, depthLoadAction, RenderBufferStoreAction.Store, clearFlag, clearColor, dimension);

}

static void SetRenderTarget(

CommandBuffer cmd,

RenderTargetIdentifier colorAttachment,

RenderBufferLoadAction colorLoadAction,

RenderBufferStoreAction colorStoreAction,

ClearFlag clearFlags,

Color clearColor,

TextureDimension dimension)

{

if (dimension == TextureDimension.Tex2DArray)

CoreUtils.SetRenderTarget(cmd, colorAttachment, clearFlags, clearColor, 0, CubemapFace.Unknown, -1);

else

CoreUtils.SetRenderTarget(cmd, colorAttachment, colorLoadAction, colorStoreAction, clearFlags, clearColor);

}

static void SetRenderTarget(

CommandBuffer cmd,

RenderTargetIdentifier colorAttachment,

RenderBufferLoadAction colorLoadAction,

RenderBufferStoreAction colorStoreAction,

RenderTargetIdentifier depthAttachment,

RenderBufferLoadAction depthLoadAction,

RenderBufferStoreAction depthStoreAction,

ClearFlag clearFlags,

Color clearColor,

TextureDimension dimension)

{

if (depthAttachment == BuiltinRenderTextureType.CameraTarget)

{

SetRenderTarget(cmd, colorAttachment, colorLoadAction, colorStoreAction, clearFlags, clearColor,

dimension);

}

else

{

if (dimension == TextureDimension.Tex2DArray)

CoreUtils.SetRenderTarget(cmd, colorAttachment, depthAttachment,

clearFlags, clearColor, 0, CubemapFace.Unknown, -1);

else

CoreUtils.SetRenderTarget(cmd, colorAttachment, colorLoadAction, colorStoreAction,

depthAttachment, depthLoadAction, depthStoreAction, clearFlags, clearColor);

}

}
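The first `SetRenderTarget` overload above picks a load action per buffer from the clear flags: if a buffer is about to be cleared, its previous contents do not need to be loaded, otherwise they must be. The following is a rough sketch of just that mapping, assuming stand-in enums so it runs outside Unity. `LoadAction`, `LoadActionSketch` and `For` are hypothetical names and not part of the URP API.

```csharp
using System;

// Hypothetical stand-in for UnityEngine.Rendering.ClearFlag.
[Flags]
enum ClearFlag { None = 0, Color = 1, Depth = 2, All = Color | Depth }

// Hypothetical stand-in for UnityEngine.Rendering.RenderBufferLoadAction (only the two values used above).
enum LoadAction { Load, DontCare }

static class LoadActionSketch
{
    // A buffer that will be cleared does not need its old contents loaded.
    public static LoadAction For(ClearFlag clearFlag, ClearFlag buffer) =>
        (clearFlag & buffer) != 0 ? LoadAction.DontCare : LoadAction.Load;

    static void Main()
    {
        // Clearing color only: color contents are discarded, depth contents are kept.
        Console.WriteLine(For(ClearFlag.Color, ClearFlag.Color));
        Console.WriteLine(For(ClearFlag.Color, ClearFlag.Depth));
    }
}
```

Skipping the load for a buffer that is cleared anyway is mainly relevant on tile-based GPUs, where loading a render target back into tile memory costs bandwidth.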
