Installing TensorFlow

# List all environments in Anaconda

conda env list

# Activate the environment in which TensorFlow should be installed

conda activate <environment_name>

# Install TensorFlow

pip install tensorflow -i https://pypi.douban.com/simple

Installing with pip is recommended here; other installation methods sometimes fail with errors.

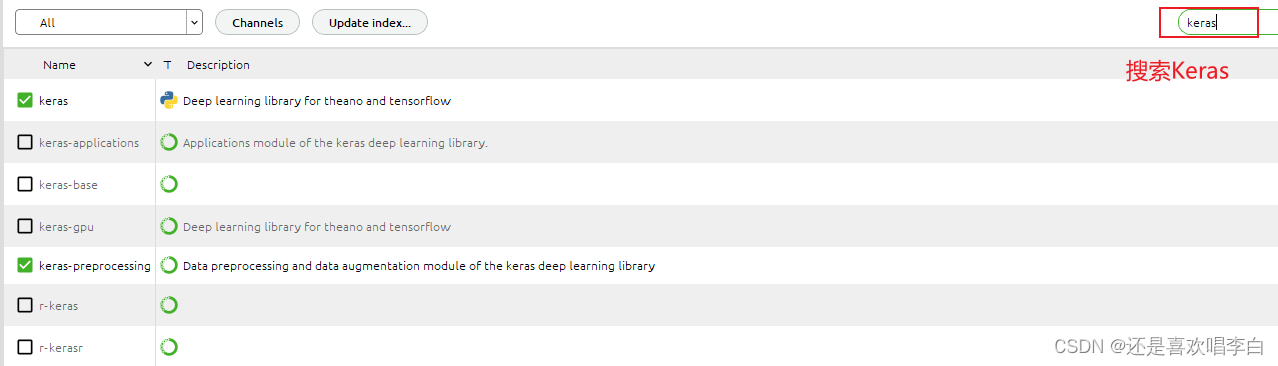

Installing Keras

Install Keras directly from the package list in Anaconda.
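Before running the test code, it can help to confirm that both packages are visible to the active environment. The following is a minimal sketch using only the Python standard library; the helper name `installed_version` is made up for this example, and it assumes the packages are registered under the distribution names `tensorflow` and `keras` (some platform-specific builds use other names, and a package installed without metadata would be reported as missing).

```python
from importlib import metadata


def installed_version(package_name):
    """Return the installed version of a distribution, or None if it is missing.

    Hypothetical helper for this post, not part of TensorFlow or Keras.
    """
    try:
        return metadata.version(package_name)
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    # Assumed distribution names; adjust them if your build uses a different one.
    for name in ("tensorflow", "keras"):
        version = installed_version(name)
        status = version if version is not None else "not found in this environment"
        print(f"{name}: {status}")
```

Run it with the Python interpreter of the environment activated above (for example from Spyder started in that environment), otherwise it reports on a different environment.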

Test code

Run the following in Spyder:

# -*- coding: utf-8 -*-
"""
Created on Wed Feb 8 17:26:25 2023

@author: hczhu
"""

# mnist attention

import numpy as np

np.random.seed(1337)  # seed NumPy's random generator

from keras.datasets import mnist
from keras.utils import to_categorical  # keras.utils.np_utils is not available in newer Keras releases
from keras.layers import *
from keras.models import *
from keras.optimizers import Adam

TIME_STEPS = 28  # each image row is treated as one time step
INPUT_DIM = 28   # each row contains 28 pixels
lstm_units = 64

# data pre-processing
(X_train, y_train), (X_test, y_test) = mnist.load_data('mnist.npz')

# scale pixel values to [0, 1]
X_train = X_train.reshape(-1, 28, 28) / 255.
X_test = X_test.reshape(-1, 28, 28) / 255.

# one-hot encode the labels
y_train = to_categorical(y_train, num_classes=10)
y_test = to_categorical(y_test, num_classes=10)

print('X_train shape:', X_train.shape)
print('X_test shape:', X_test.shape)

# first way attention
def attention_3d_block(inputs):
    # inputs has shape (batch, TIME_STEPS, features)
    #input_dim = int(inputs.shape[2])
    a = Permute((2, 1))(inputs)  # -> (batch, features, TIME_STEPS)
    a = Dense(TIME_STEPS, activation='softmax')(a)  # softmax over the time axis
    a_probs = Permute((2, 1), name='attention_vec')(a)  # back to (batch, TIME_STEPS, features)
    #output_attention_mul = merge([inputs, a_probs], name='attention_mul', mode='mul')
    output_attention_mul = multiply([inputs, a_probs], name='attention_mul')
    return output_attention_mul

# build RNN model with attention
inputs = Input(shape=(TIME_STEPS, INPUT_DIM))
drop1 = Dropout(0.3)(inputs)
lstm_out = Bidirectional(LSTM(lstm_units, return_sequences=True), name='bilstm')(drop1)
attention_mul = attention_3d_block(lstm_out)
attention_flatten = Flatten()(attention_mul)
drop2 = Dropout(0.3)(attention_flatten)
# softmax gives a probability distribution over the 10 classes, which categorical_crossentropy expects
output = Dense(10, activation='softmax')(drop2)
model = Model(inputs=inputs, outputs=output)

# second way attention (alternative, left commented out)
# inputs = Input(shape=(TIME_STEPS, INPUT_DIM))
# units = 32
# activations = LSTM(units, return_sequences=True, name='lstm_layer')(inputs)
#
# attention = Dense(1, activation='tanh')(activations)
# attention = Flatten()(attention)
# attention = Activation('softmax')(attention)
# attention = RepeatVector(units)(attention)
# attention = Permute([2, 1], name='attention_vec')(attention)
# attention_mul = multiply([activations, attention], name='attention_mul')  # replaces the old merge(..., mode='mul')
# out_attention_mul = Flatten()(attention_mul)
# output = Dense(10, activation='softmax')(out_attention_mul)
# model = Model(inputs=inputs, outputs=output)

model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])
model.summary()  # summary() prints the table itself and returns None

print('Training------------')
model.fit(X_train, y_train, epochs=10, batch_size=16)

print('Testing--------------')
loss, accuracy = model.evaluate(X_test, y_test)
print('test loss:', loss)
print('test accuracy:', accuracy)
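To see what `attention_3d_block` computes without building a Keras model, the same sequence of operations can be sketched in plain NumPy. This is only an illustration: the function name `attention_3d_block_numpy`, the random `kernel` and `bias` arrays (standing in for the weights the `Dense` layer would learn), and the batch size of 2 are assumptions made for this example. The feature size of 128 corresponds to the bidirectional LSTM output (2 × `lstm_units`).

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    shifted = x - x.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)


def attention_3d_block_numpy(inputs, kernel, bias):
    """NumPy illustration of the Permute -> Dense(softmax) -> Permute -> multiply steps.

    inputs: (batch, time_steps, features)
    kernel: (time_steps, time_steps), stand-in for the Dense layer's weights
    bias:   (time_steps,), stand-in for the Dense layer's bias
    """
    a = np.transpose(inputs, (0, 2, 1))           # (batch, features, time_steps)
    a = softmax(a @ kernel + bias, axis=-1)       # weights over time for each feature
    a_probs = np.transpose(a, (0, 2, 1))          # (batch, time_steps, features)
    return inputs * a_probs, a_probs              # element-wise re-weighting


rng = np.random.default_rng(0)
batch, time_steps, features = 2, 28, 128          # 128 = 2 * lstm_units
x = rng.normal(size=(batch, time_steps, features))
kernel = rng.normal(scale=0.1, size=(time_steps, time_steps))
bias = np.zeros(time_steps)

out, probs = attention_3d_block_numpy(x, kernel, bias)
print(out.shape)                                  # (2, 28, 128)
print(np.allclose(probs.sum(axis=1), 1.0))        # True
```

The output keeps the shape of the input, and for every feature channel the attention weights sum to 1 across the 28 time steps, which is why the model can flatten the result and pass it to the final `Dense` layer.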
