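Contents

- I. K8S Multi-Node Deployment

- 1.1 Topology Diagram and Host Allocation

- 1.2 master02 Deployment

- 1.2.1 Copy the three component unit files from master01 (kube-apiserver.service, kube-controller-manager.service, kube-scheduler.service)

- 1.2.2 Change the IP addresses in the kube-apiserver configuration file

- 1.2.3 Copy the etcd certificates for master02 to use

- 1.2.4 Start the three component services on master02

- 1.2.5 Add the environment variable

- 1.2.6 View the nodes

- 1.3 nginx Load-Balancing Cluster Deployment

- 1.3.1 Install nginx and enable layer-4 forwarding (same on nginx02)

- 1.3.1 Start the nginx service

- 1.3.2 Deploy keepalived on both nginx hosts (steps shown for nginx01; nginx02 differs slightly)

- 1.3.2 Create the monitoring script, start keepalived, and check the VIP

- 1.3.3 Verify VIP failover and recovery

- 1.3.4 Modify the configuration files on both nodes (bootstrap.kubeconfig, kubelet.kubeconfig, kube-proxy.kubeconfig)

- 1.3.5 Restart the services on both nodes

- 1.3.6 View the k8s access log on nginx01

- 1.4 Create a test pod from the master node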

I. K8S Multi-Node Deployment

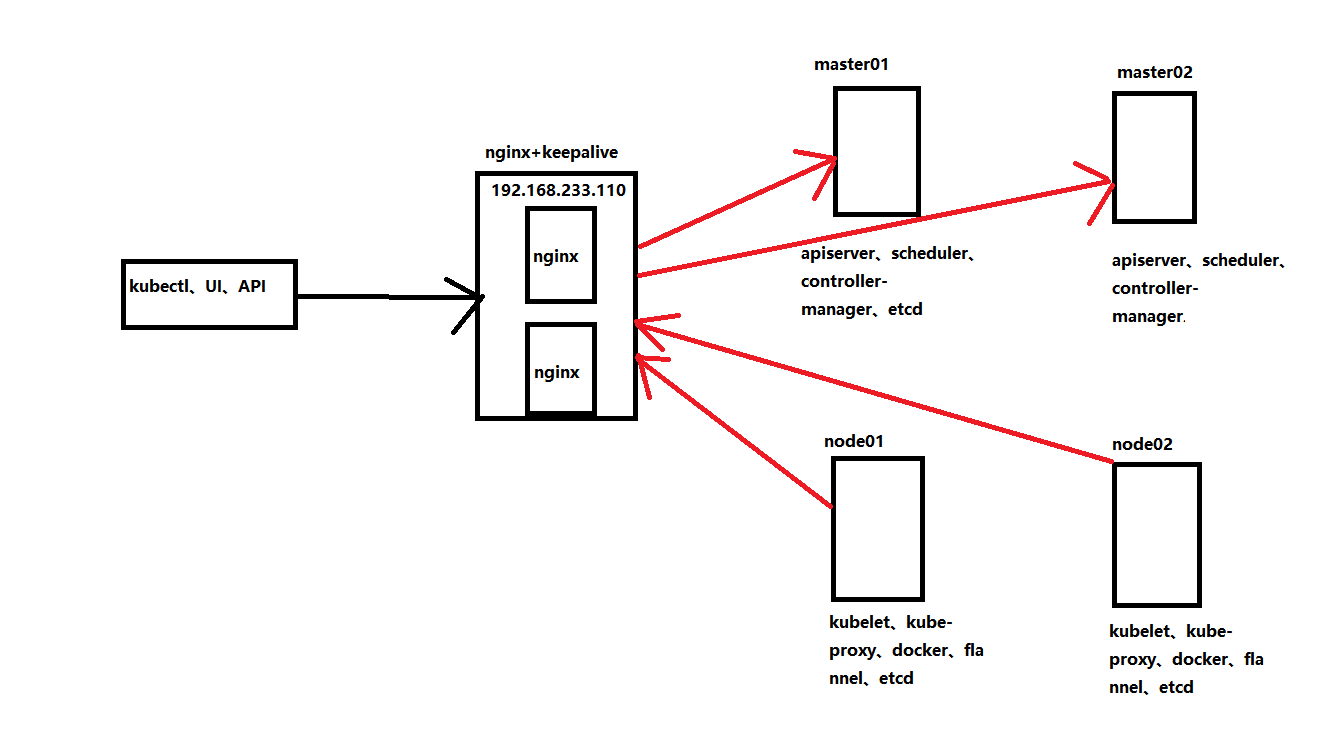

1.1 Topology Diagram and Host Allocation

| Hostname | IP address | Components to deploy |
|---|---|---|
| master01 | 192.168.233.100 | kube-apiserver, kube-controller-manager, kube-scheduler, etcd |
| node01 | 192.168.233.200 | kubelet, kube-proxy, docker, flannel, etcd |
| node02 | 192.168.233.180 | kubelet, kube-proxy, docker, flannel, etcd |
| VIP | 192.168.233.110 | |
| master02 | 192.168.233.50 | kube-apiserver, kube-scheduler, kube-controller-manager |
| nginx01 | 192.168.233.30 | nginx, keepalived |
| nginx02 | 192.168.233.127 | nginx, keepalived |

1.2 master02 Deployment

// Run on master01

// Copy the kubernetes directory to master02

[root@master01 k8s]# scp -r /opt/kubernetes/ root@192.168.233.50:/opt

1.2.1 Copy the three component unit files from master01 (kube-apiserver.service, kube-controller-manager.service, kube-scheduler.service)

[root@master01 k8s]# scp /usr/lib/systemd/system/{kube-apiserver,kube-controller-manager,kube-scheduler}.service root@192.168.233.50:/usr/lib/systemd/system/

1.2.2 Change the IP addresses in the kube-apiserver configuration file

[root@master02 ~]# cd /opt/kubernetes/cfg/

[root@master02 cfg]# vim kube-apiserver

--bind-address=192.168.233.50

--advertise-address=192.168.233.50
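The two flags above are the per-host values that have to change after the copy from master01. The same edit can be sketched non-interactively with sed. The function name `set_apiserver_ip` is hypothetical, and the sketch assumes GNU sed and that both flags appear in the file as `--bind-address=<IPv4>` and `--advertise-address=<IPv4>`.

```shell
#!/bin/bash
# Hypothetical helper: rewrite the IPv4 value of --bind-address and
# --advertise-address in a kube-apiserver options file, in place.
# Other flags (for example the etcd endpoints) are left untouched.
# Usage: set_apiserver_ip <config-file> <new-ip>
set_apiserver_ip() {
  local file=$1 new_ip=$2
  sed -i -E "s/(--(bind|advertise)-address=)[0-9.]+/\1${new_ip}/g" "$file"
}

# Example (on master02):
# set_apiserver_ip /opt/kubernetes/cfg/kube-apiserver 192.168.233.50
```

Running `grep address /opt/kubernetes/cfg/kube-apiserver` afterwards is a quick way to confirm that only the two intended flags changed.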

1.2.3 Copy the etcd certificates for master02 to use

## master02 must have the etcd certificates (kube-apiserver uses them to connect to etcd)

[root@master01 k8s]# scp -r /opt/etcd/ root@192.168.233.50:/opt/

1.2.4 Start the three component services on master02

[root@master02 cfg]# systemctl start kube-apiserver.service

[root@master02 cfg]# systemctl start kube-controller-manager.service

[root@master02 cfg]# systemctl start kube-scheduler.service
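The three start commands above can be folded into one loop that also enables the services at boot, which the original steps do not do. This is a sketch only: the function name and the `SYSTEMCTL` override variable are hypothetical, added so the loop can be dry-run with `SYSTEMCTL=echo`.

```shell
#!/bin/bash
# Start and enable the three control-plane services, stopping at the
# first failure. SYSTEMCTL is a hypothetical override for dry runs;
# it defaults to the real systemctl.
start_master_services() {
  local svc
  for svc in kube-apiserver kube-controller-manager kube-scheduler; do
    "${SYSTEMCTL:-systemctl}" start "${svc}.service" || return 1
    "${SYSTEMCTL:-systemctl}" enable "${svc}.service" || return 1
  done
}

# Dry run:  SYSTEMCTL=echo start_master_services
# Real run: start_master_services
```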

1.2.5 Add the environment variable

[root@master02 cfg]# vim /etc/profile

# Append at the end of the file

export PATH=$PATH:/opt/kubernetes/bin/

[root@master02 cfg]# source /etc/profile
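If the profile edit is scripted rather than done in an editor, it helps to make it idempotent so that repeated runs do not append the same line twice. A minimal sketch, assuming a hypothetical helper named `append_once`:

```shell
#!/bin/bash
# Hypothetical helper: append a line to a file only if that exact line
# is not already present.
# Usage: append_once <line> <file>
append_once() {
  local line=$1 file=$2
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Example (run as root, then re-source the profile):
# append_once 'export PATH=$PATH:/opt/kubernetes/bin/' /etc/profile
# source /etc/profile
```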

1.2.6 View the nodes

[root@master02 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

192.168.233.200 Ready <none> 23h v1.12.3

192.168.233.180 Ready <none> 23h v1.12.3
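Seeing both nodes listed as Ready from master02 is the signal that the second control plane works. For a scripted check, the same output can be parsed with awk. The helper name `all_nodes_ready` is hypothetical, and the sketch assumes the column layout shown above (header line first, STATUS in the second column).

```shell
#!/bin/bash
# Hypothetical helper: read `kubectl get node` output on stdin and
# succeed only if at least one node is listed and every node is Ready.
all_nodes_ready() {
  awk 'NR > 1 { total++; if ($2 == "Ready") ready++ }
       END { exit !(total > 0 && ready == total) }'
}

# Example:
# kubectl get node | all_nodes_ready && echo "all nodes Ready"
```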

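1.3 nginx Load-Balancing Cluster Deployment

- Disable the firewall and SELinux, then add the nginx yum repository

[root@nginx01 ~]# systemctl stop firewalld && systemctl disable firewalld   # Stop and disable the firewall

[root@nginx01 ~]# setenforce 0 && sed -i "s/SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config   # Switch SELinux to permissive now and disable it from the next boot

[root@nginx01 ~]# vi /etc/yum.repos.d/nginx.repo   # Create the nginx yum repository file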

[nginx]

name=nginx.repo

baseurl=http://nginx.org/packages/centos/7/$basearch/

enabled=1

gpgcheck=0

[root@nginx01 ~]# yum clean all

1.3.1 Install nginx and enable layer-4 forwarding (same on nginx02)

[root@nginx01 ~]# yum -y install nginx   # Install nginx

[root@nginx01 ~]# vi /etc/nginx/nginx.conf

... (content omitted)

stream {

log_format main '$remote_addr $upstream_addr - [$time_local] $status $upstream_bytes_sent';

access_log /var/log/nginx/k8s-access.log main; # Access log path

upstream k8s-apiserver {

# IP address and port of master01 (6443 is the kube-apiserver port)

server 192.168.233.100:6443;

# IP address and port of master02

server 192.168.233.50:6443;

}

server {

listen 6443;

proxy_pass k8s-apiserver;

}

}

... (content omitted)
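The `stream` block forwards raw TCP on port 6443 to whichever masters are listed in the `k8s-apiserver` upstream, so it is worth confirming that the list contains both masters before starting nginx. A minimal sketch that prints the upstream members from a config file; the function name `list_upstreams` is hypothetical, and it assumes the upstream is named `k8s-apiserver` as above with one `server` directive per line.

```shell
#!/bin/bash
# Hypothetical helper: print the host:port of every `server` entry
# inside the `upstream k8s-apiserver { ... }` block of an nginx config.
# Usage: list_upstreams <nginx.conf>
list_upstreams() {
  awk '
    /upstream[ \t]+k8s-apiserver[ \t]*\{/ { in_block = 1; next }
    in_block && /\}/                      { in_block = 0 }
    in_block && $1 == "server"            { sub(/;.*/, "", $2); print $2 }
  ' "$1"
}

# Example:
# list_upstreams /etc/nginx/nginx.conf
```

Each printed address can then be probed from the nginx host, for instance with `timeout 2 bash -c '</dev/tcp/192.168.233.50/6443'`, to check that the apiserver port is reachable.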

1.3.1 Start the nginx service

[root@nginx01 ~]# nginx -t   # Check the nginx configuration syntax

nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

nginx: configuration file /etc/nginx/nginx.conf test is successful

[root@nginx01 ~]# systemctl start nginx   # Start the service

[root@nginx01 ~]# systemctl status nginx

[root@nginx01 ~]# netstat -ntap | grep nginx   # Port 6443 should appear in the output

tcp 0 0 0.0.0.0:6443 0.0.0.0:* LISTEN 1849/nginx: master

tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN 1849/nginx: master

1.3.2 Deploy keepalived on both nginx hosts (steps shown for nginx01; nginx02 differs slightly)

[root@nginx01 ~]# yum -y install keepalived

[root@nginx01 ~]# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

# Notification recipient addresses

notification_email {

acassen@firewall.loc

failover@firewall.loc

sysadmin@firewall.loc

}

# Notification sender address

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id NGINX_MASTER

}

vrrp_script check_nginx {

script "/usr/local/nginx/sbin/check_nginx.sh" # Path of the health-check script run by keepalived

}

vrrp_instance VI_1 {

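state MASTER # Set to BACKUP on nginx02

interface ens33

virtual_router_id 51 # VRRP router ID; unique per VRRP instance, and nginx02 must use the same value as nginx01

priority 100 # Priority; set to 90 on nginx02

advert_int 1 # VRRP advertisement (heartbeat) interval in seconds; the default is 1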

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.233.110/24 # VIP address (must match the VIP in the host table, not master01's address)

}

track_script {

check_nginx

}

}
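According to the notes in this configuration, nginx02 uses the same file except for `state` (BACKUP) and `priority` (90). A minimal sketch of deriving the nginx02 file from the nginx01 one with sed; the function name `make_backup_conf` is hypothetical, and `router_id` is deliberately left alone because the original does not say whether it changes.

```shell
#!/bin/bash
# Hypothetical helper: derive the BACKUP node's keepalived.conf from the
# MASTER node's file by changing only `state` and `priority`.
# Usage: make_backup_conf <master.conf> <backup.conf>
make_backup_conf() {
  local src=$1 dst=$2
  sed -E \
    -e 's/^([[:space:]]*)state[[:space:]]+MASTER/\1state BACKUP/' \
    -e 's/^([[:space:]]*)priority[[:space:]]+[0-9]+/\1priority 90/' \
    "$src" > "$dst"
}

# Example:
# make_backup_conf /etc/keepalived/keepalived.conf /tmp/keepalived.nginx02.conf
```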

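1.3.2 Create the monitoring script, start keepalived, and check the VIP

[root@nginx01 ~]# mkdir -p /usr/local/nginx/sbin/   # Create the directory for the monitoring script (do the same on nginx02)

[root@nginx01 ~]# vim /usr/local/nginx/sbin/check_nginx.sh   # Write the monitoring script

#!/bin/bash

# Count nginx processes, excluding the grep itself and this script's own PID

count=$(ps -ef |grep nginx |egrep -cv "grep|$$")

# If nginx is not running, stop keepalived so the VIP moves to the other host

if [ "$count" -eq 0 ];then

systemctl stop keepalived

fi

[root@nginx01 ~]# chmod +x /usr/local/nginx/sbin/check_nginx.sh   # Make the script executable

[root@nginx01 ~]# systemctl start keepalived   # Start the service

[root@nginx01 ~]# systemctl status keepalived

[root@nginx01 ~]# ip a   # Check the IP addresses on both nginx servers

The VIP is on nginx01.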

[root@nginx02 ~]# ip a
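The monitoring script decides whether nginx is alive by counting matching lines in `ps -ef` and filtering out `grep` and its own PID. The same count can be sketched by matching on the exact command name instead, which avoids the self-match problem. The function name `count_nginx` is hypothetical; it reads one command name per line on stdin.

```shell
#!/bin/bash
# Hypothetical helper: count lines on stdin that are exactly "nginx".
# Intended input is `ps -eo comm=`, which prints one command name per
# process. grep -c prints 0 but exits non-zero when nothing matches,
# so `|| true` keeps the function's exit status at 0.
count_nginx() {
  grep -cx 'nginx' || true
}

# Example:
# if [ "$(ps -eo comm= | count_nginx)" -eq 0 ]; then
#   systemctl stop keepalived
# fi
```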

1.3.3 Verify VIP failover and recovery

[root@nginx01 ~]# pkill nginx   # Kill the nginx processes

[root@nginx01 ~]# systemctl status keepalived   # keepalived has been stopped by the monitoring script

[root@nginx02 ~]# ip a   # The VIP has moved to nginx02

[root@nginx01 ~]# systemctl start nginx

[root@nginx01 ~]# systemctl start keepalived   # Start nginx first, then keepalived

[root@nginx01 ~]# ip a   # Check again: the VIP is back on nginx01
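Reading `ip a` by eye works for a one-off test. To script the failover check, the presence of the VIP from the host table (192.168.233.110) can be tested directly. A minimal sketch with a hypothetical helper `has_vip` that reads `ip` output on stdin:

```shell
#!/bin/bash
# Hypothetical helper: succeed if the given IPv4 address appears as an
# `inet <addr>/<prefix>` entry in `ip addr` output read from stdin.
# The trailing "/" keeps 192.168.233.11 from matching 192.168.233.110.
# Usage: ip -4 addr show | has_vip <address>
has_vip() {
  local vip=$1
  grep -qF "inet ${vip}/"
}

# Example (run on each nginx host):
# ip -4 addr show | has_vip 192.168.233.110 && echo "VIP is on $(hostname)"
```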

1.3.4 Modify the configuration files on both nodes (bootstrap.kubeconfig, kubelet.kubeconfig, kube-proxy.kubeconfig)

[root@node01 ~]# vi /opt/k8s/cfg/bootstrap.kubeconfig

server: https://192.168.233.110:6443   # Change this address to the VIP

[root@node01 ~]# vi /opt/k8s/cfg/kubelet.kubeconfig

server: https://192.168.233.110:6443   # Change this address to the VIP

[root@node01 ~]# vi /opt/k8s/cfg/kube-proxy.kubeconfig

server: https://192.168.233.110:6443   # Change this address to the VIP
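The same one-line change is made in three files on each node, so it lends itself to a loop. A minimal sketch, assuming GNU sed and a `server: https://<host>:<port>` line in each file; the function name `point_to_vip` is hypothetical, and the port is preserved as found.

```shell
#!/bin/bash
# Hypothetical helper: in the three kubeconfig files under <dir>, replace
# the host part of the `server: https://<host>:<port>` line with <vip>.
# Usage: point_to_vip <dir> <vip>
point_to_vip() {
  local dir=$1 vip=$2 f
  for f in bootstrap.kubeconfig kubelet.kubeconfig kube-proxy.kubeconfig; do
    sed -i -E "s#(server: https://)[^:/]+(:[0-9]+)#\1${vip}\2#" "${dir}/${f}" || return 1
  done
}

# Example (on node01 and node02):
# point_to_vip /opt/k8s/cfg 192.168.233.110
```

The kubelet and kube-proxy services still need a restart afterwards for the new address to take effect.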

1.3.5 Restart the services on both nodes

[root@node01 ~]# systemctl restart kubelet

[root@node01 ~]# systemctl restart kube-proxy

[root@node01 ~]# cd /opt/k8s/cfg/

[root@node01 cfg]# grep 110 *   # Confirm that all three files now point to the VIP

bootstrap.kubeconfig: server: https://192.168.233.110:6443

kubelet.kubeconfig: server: https://192.168.233.110:6443

kube-proxy.kubeconfig: server: https://192.168.233.110:6443

1.3.6 View the k8s access log on nginx01

[root@nginx01 ~]# tail /var/log/nginx/k8s-access.log   # The entries below were produced when the node services restarted

192.168.233.200 192.168.233.100:6443 - [01/May/2020:01:25:59 +0800] 200 1121

192.168.233.200 192.168.233.100:6443 - [01/May/2020:01:25:59 +0800] 200 1121
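With the `log_format` defined in the `stream` block, the second field of each log line is `$upstream_addr`, that is, the master that served the connection. Counting lines per upstream gives a rough view of how traffic is spread across master01 and master02. A minimal sketch with a hypothetical helper `count_by_upstream`:

```shell
#!/bin/bash
# Hypothetical helper: read the stream access log on stdin and print
# "<upstream_addr> <count>" for each upstream (second log field).
count_by_upstream() {
  awk '{ hits[$2]++ } END { for (u in hits) print u, hits[u] }' | sort
}

# Example:
# count_by_upstream < /var/log/nginx/k8s-access.log
```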

1.4 Create a test pod from the master node

[root@master01 ~]# kubectl run nginx --image=nginx   # Create an nginx test pod

kubectl run --generator=deployment/apps.v1beta1 is DEPRECATED and will be removed in a future version. Use kubectl create instead.

deployment.apps/nginx created

[root@master01 ~]# kubectl get pods   # Check the status: the container is still being created

NAME READY STATUS RESTARTS AGE

nginx-dbddb74b8-5s6h7 0/1 ContainerCreating 0 13s

[root@master02 ~]# kubectl get pods   # Check again after a moment: the pod is now running, and it is also visible from master02

NAME READY STATUS RESTARTS AGE

nginx-dbddb74b8-5s6h7 1/1 Running 0 23s
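Instead of re-running `kubectl get pods` by hand until the status changes, the wait can be sketched as a polling loop. The function name and the `KUBECTL` and `SLEEP_SECONDS` override variables are hypothetical (they exist so the loop can be exercised without a cluster); the sketch assumes the default `kubectl get pods` column order with STATUS in the third column.

```shell
#!/bin/bash
# Hypothetical helper: poll `kubectl get pods` until a pod whose name
# starts with <prefix> reports STATUS Running, or give up after <tries>
# attempts. Returns 0 on success, 1 on timeout.
# Usage: wait_for_running <prefix> [tries]
wait_for_running() {
  local prefix=$1 tries=${2:-30} i
  for ((i = 0; i < tries; i++)); do
    if "${KUBECTL:-kubectl}" get pods --no-headers |
      awk -v p="$prefix" 'index($1, p) == 1 && $3 == "Running" { found = 1 }
                          END { exit !found }'; then
      return 0
    fi
    sleep "${SLEEP_SECONDS:-2}"
  done
  return 1
}

# Example:
# kubectl run nginx --image=nginx
# wait_for_running nginx 30 && echo "pod is running"
```

Running the same check on master02 confirms that both apiservers read the same cluster state.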
