一、回顾

1.数据倾斜

数据倾斜/数据热点

数据倾斜产生的原因

![[外链图片转存失败,源站可能有防盗链机制,建议将图片保存下来直接上传(img-RyLR8FIy-1596276415302)(0726_随堂笔记.assets/image-20200726081058329.png)]](https://www.shuijiaxian.com/files_image/2023060923/20200801180758911.png)

![[外链图片转存失败,源站可能有防盗链机制,建议将图片保存下来直接上传(img-pLwXsrpZ-1596276415304)(0726_随堂笔记.assets/image-20200726082518125.png)]](https://www.shuijiaxian.com/files_image/2023060923/20200801180810603.png)

数据倾斜大部分情况下是不可避免的。

数据倾斜解决方案

![[外链图片转存失败,源站可能有防盗链机制,建议将图片保存下来直接上传(img-IxakTA3Z-1596276415307)(0726_随堂笔记.assets/image-20200726084713034.png)]](https://www.shuijiaxian.com/files_image/2023060923/20200801180823831.png)

2.自定义函数

Hive不是MySQL

Hive的底层是Java

MySQL中的count()是MySQL提供的功能 , MySQL底层是C

Hive中的count()其实就是Java中的一个方法!

我们就自己写一个简单的方法 , 导入Hive中完成一个简单的需求。

统计字符串长度

select word,charcount(word) from wc;

hadoop 6

java 4

world 5

…

实现代码详见源代码

(1)将jar包添加至Hive库

hive (default)> add jar /opt/datas/charcount.jar;

Added [/opt/datas/charcount.jar] to class path

Added resources: [/opt/datas/charcount.jar]

(2)在Hive中创建函数声明

其实就是将jar包和函数名称“绑”在一起

hive (default)> create function charcount as 'com.myhive.test.CharCount';

OK

Time taken: 0.136 seconds

(3)重启Hive测试函数

如果调用失败,Hive重启几次试试

hive (default)> add jar /opt/datas/charcount.jar;

Added [/opt/datas/charcount.jar] to class path

Added resources: [/opt/datas/charcount.jar]

hive (default)> create function charcount as 'com.myhive.test.CharCount';

FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.FunctionTask. AlreadyExistsE

xception(message:Function charcount already exists)hive (default)> select word,charcount(word) from wc;

OK

word _c1

hadoop 6

spark 5

mysql 5

hadoop 6

hadoop 6

mysql 5

Time taken: 0.364 seconds, Fetched: 6 row(s)

二、SQOOP

1.SQOOP介绍

![[外链图片转存失败,源站可能有防盗链机制,建议将图片保存下来直接上传(img-3jvfYabm-1596276415310)(0726_随堂笔记.assets/image-20200726103507568.png)]](https://www.shuijiaxian.com/files_image/2023060923/20200801180833654.png)

Hive是数据仓库,所有的数据其实都是存放在hdfs上的文件。

Hive分析的结果我也保存成文件了。

hdfs上的文件很难被共享读取,将结果展示。都没有MySQL方便!

![[外链图片转存失败,源站可能有防盗链机制,建议将图片保存下来直接上传(img-a3zFDEMb-1596276415313)(0726_随堂笔记.assets/image-20200726104111388.png)]](https://www.shuijiaxian.com/files_image/2023060923/20200801180844367.png)

针对单个需求比较简单 我们自己可以写mr程序来完成。

需求环境比较复杂,那么是不是有个统一的框架可以来完成这件事情呢?

SQOOP诞生了!!!!

sqoop和hive一样一样的也是Java写的

![[外链图片转存失败,源站可能有防盗链机制,建议将图片保存下来直接上传(img-tPmZ1dHg-1596276415314)(0726_随堂笔记.assets/image-20200726105003817.png)]](https://www.shuijiaxian.com/files_image/2023060923/20200801180853531.png)

2.让SQOOP跑起来

- 解压sqoop软件

[hadoop@hadoop212 tools]$ tar -zxf sqoop-1.4.6-cdh5.7.6.tar.gz -C /opt/modules/

![[外链图片转存失败,源站可能有防盗链机制,建议将图片保存下来直接上传(img-oaVWyB8T-1596276415316)(0726_随堂笔记.assets/image-20200726105850781.png)]](https://www.shuijiaxian.com/files_image/2023060923/2020080118090531.png)

- 将2个jar包存放至lib目录

[hadoop@hadoop212 sqoop-1.4.6-cdh5.7.6]$ cp /opt/tools/mysql-connector-java-5.1.27-bin.jar ./lib/

[hadoop@hadoop212 sqoop-1.4.6-cdh5.7.6]$ cp /opt/tools/java-json.jar ./lib/

- 修改配置文件

[hadoop@hadoop212 conf]$ mv sqoop-env-template.sh ./sqoop-env.sh

[hadoop@hadoop212 conf]$ vim sqoop-env.sh

![[外链图片转存失败,源站可能有防盗链机制,建议将图片保存下来直接上传(img-lCkQLNCO-1596276415318)(0726_随堂笔记.assets/image-20200726110443607.png)]](https://www.shuijiaxian.com/files_image/2023060923/20200801180914667.png)

- 第一个测试案例

[hadoop@hadoop212 sqoop-1.4.6-cdh5.7.6]$ bin/sqoop list-tables

> --connect jdbc:mysql://hadoop212:3306/metastore

> --username root

> --password Mysql_1234

![[外链图片转存失败,源站可能有防盗链机制,建议将图片保存下来直接上传(img-f7kUklPo-1596276415320)(0726_随堂笔记.assets/image-20200726111335061.png)]](https://www.shuijiaxian.com/files_image/2023060923/20200801180924148.png)

![[外链图片转存失败,源站可能有防盗链机制,建议将图片保存下来直接上传(img-KmLQC4nt-1596276415321)(0726_随堂笔记.assets/image-20200726111356425.png)]](https://www.shuijiaxian.com/files_image/2023060923/20200801180933249.png)

![[外链图片转存失败,源站可能有防盗链机制,建议将图片保存下来直接上传(img-FkF479UD-1596276415322)(0726_随堂笔记.assets/image-20200726111455603.png)]](https://www.shuijiaxian.com/files_image/2023060923/20200801180944849.png)

3.使用SQOOP将MySQL转换位hfile

sqoop本质上,使用Java写的,连接数据库和hdfs的工具

sqoop将数据库的数据转换位hdfs上的文件,

mysql数据 《==》 hdfs上的结构化文件

Hive是不是对hdfs上的文件进行分析,映射关系存在元数据当中,

sqoop能够访问数据库内容,也能读取元数据信息,

将sqoop扩展至将数据库和hive之间进行沟通。其实还不完美。

mysql> create database db_user;

Query OK, 1 row affected (0.00 sec)

mysql> use db_user;

Database changed

mysql> create table tb_user(

-> id int(11),

-> name varchar(50)

-> );

Query OK, 0 rows affected (0.02 sec)

mysql> show tables;

+-------------------+

| Tables_in_db_user |

+-------------------+

| tb_user |

+-------------------+

1 row in set (0.00 sec)

mysql> insert into tb_user (id,name) values (1,'jack'),(2,'tom'),(3,'rose');

Query OK, 3 rows affected (0.00 sec)

Records: 3 Duplicates: 0 Warnings: 0

mysql> select * from tb_user;

+------+------+

| id | name |

+------+------+

| 1 | jack |

| 2 | tom |

| 3 | rose |

+------+------+

3 rows in set (0.00 sec)

确认下,集群是否已经开启?

确认下,java-json.jar包是否已经在lib目录下?

[hadoop@hadoop212 sqoop-1.4.6-cdh5.7.6]$ bin/sqoop import

> --connect jdbc:mysql://hadoop212:3306/db_user

> --username root

> --password Mysql_1234

> --table tb_user

> --delete-target-dir

> --target-dir /datas/sqoop/tb_user

> --fields-terminated-by 't'

> -m 1

Warning: /opt/modules/sqoop-1.4.6-cdh5.7.6/bin/../../hbase does not exist! HBase imports will fail.

Please set $HBASE_HOME to the root of your HBase installation.

Warning: /opt/modules/sqoop-1.4.6-cdh5.7.6/bin/../../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /opt/modules/sqoop-1.4.6-cdh5.7.6/bin/../../accumulo does not exist! Accumulo imports will fai

l.Please set $ACCUMULO_HOME to the root of your Accumulo installation.

Warning: /opt/modules/sqoop-1.4.6-cdh5.7.6/bin/../../zookeeper does not exist! Accumulo imports will fa

il.Please set $ZOOKEEPER_HOME to the root of your Zookeeper installation.

20/07/26 14:29:59 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6-cdh5.7.6

20/07/26 14:29:59 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consi

der using -P instead.20/07/26 14:29:59 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

20/07/26 14:29:59 INFO tool.CodeGenTool: Beginning code generation

20/07/26 14:30:00 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `tb_user` AS t LIMI

T 120/07/26 14:30:00 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `tb_user` AS t LIMI

T 120/07/26 14:30:00 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /opt/modules/hadoop-2.6.0-cdh5.7.6

Note: /tmp/sqoop-hadoop/compile/f427e16aa8c3dcf1963cc1ac570d3a6e/tb_user.java uses or overrides a depre

cated API.Note: Recompile with -Xlint:deprecation for details.

20/07/26 14:30:07 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-hadoop/compile/f427e16aa8c3

dcf1963cc1ac570d3a6e/tb_user.jar20/07/26 14:30:09 INFO tool.ImportTool: Destination directory /datas/sqoop/tb_user is not present, henc

e not deleting.20/07/26 14:30:09 WARN manager.MySQLManager: It looks like you are importing from mysql.

20/07/26 14:30:09 WARN manager.MySQLManager: This transfer can be faster! Use the --direct

20/07/26 14:30:09 WARN manager.MySQLManager: option to exercise a MySQL-specific fast path.

20/07/26 14:30:09 INFO manager.MySQLManager: Setting zero DATETIME behavior to convertToNull (mysql)

20/07/26 14:30:09 INFO mapreduce.ImportJobBase: Beginning import of tb_user

20/07/26 14:30:09 INFO Configuration.deprecation: mapred.jar is deprecated. Instead, use mapreduce.job.

jar20/07/26 14:30:09 INFO Configuration.deprecation: mapred.map.tasks is deprecated. Instead, use mapreduc

e.job.maps20/07/26 14:30:10 INFO client.RMProxy: Connecting to ResourceManager at hadoop212/192.168.17.212:8032

20/07/26 14:30:14 INFO db.DBInputFormat: Using read commited transaction isolation

20/07/26 14:30:14 INFO mapreduce.JobSubmitter: number of splits:1

20/07/26 14:30:14 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1595727782509_0001

20/07/26 14:30:15 INFO impl.YarnClientImpl: Submitted application application_1595727782509_0001

20/07/26 14:30:15 INFO mapreduce.Job: The url to track the job: http://hadoop212:8088/proxy/application

_1595727782509_0001/20/07/26 14:30:15 INFO mapreduce.Job: Running job: job_1595727782509_0001

20/07/26 14:30:42 INFO mapreduce.Job: Job job_1595727782509_0001 running in uber mode : false

20/07/26 14:30:42 INFO mapreduce.Job: map 0% reduce 0%

20/07/26 14:30:52 INFO mapreduce.Job: map 100% reduce 0%

20/07/26 14:30:52 INFO mapreduce.Job: Job job_1595727782509_0001 completed successfully

20/07/26 14:30:52 INFO mapreduce.Job: Counters: 30

File System Counters

FILE: Number of bytes read=0

FILE: Number of bytes written=141483

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=87

HDFS: Number of bytes written=20

HDFS: Number of read operations=4

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters

Launched map tasks=1

Other local map tasks=1

Total time spent by all maps in occupied slots (ms)=7292

Total time spent by all reduces in occupied slots (ms)=0

Total time spent by all map tasks (ms)=7292

Total vcore-seconds taken by all map tasks=7292

Total megabyte-seconds taken by all map tasks=7467008

Map-Reduce Framework

Map input records=3

Map output records=3

Input split bytes=87

Spilled Records=0

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=615

CPU time spent (ms)=1950

Physical memory (bytes) snapshot=210378752

Virtual memory (bytes) snapshot=2766528512

Total committed heap usage (bytes)=184549376

File Input Format Counters

Bytes Read=0

File Output Format Counters

Bytes Written=20

20/07/26 14:30:52 INFO mapreduce.ImportJobBase: Transferred 20 bytes in 42.8102 seconds (0.4672 bytes/s

ec)20/07/26 14:30:52 INFO mapreduce.ImportJobBase: Retrieved 3 records.

[hadoop@hadoop212 sqoop-1.4.6-cdh5.7.6]$

![[外链图片转存失败,源站可能有防盗链机制,建议将图片保存下来直接上传(img-9i9jLwOd-1596276415325)(0726_随堂笔记.assets/image-20200726143237133.png)]](https://www.shuijiaxian.com/files_image/2023060923/20200801181007699.png)

注意,参数的顺序是有要求的,不能随意调换。

bin/sqoop import

# 定义连接数据库

> --connect jdbc:mysql://hadoop212:3306/db_user

# 定义连接的用户名称

> --username root

# 定义连接的数据库密码

> --password Mysql_1234

# 定义需要导入的数据表名称

> --table tb_user

# 可选的,如果目标文件夹存在则删除

> --delete-target-dir

# 定义导出的目标文件夹

> --target-dir /datas/sqoop/tb_user

# 定义字段分隔字符

> --fields-terminated-by 't'

# 可选,定义reduceTask为1

> -m 1

4.将MySQL数据导入到Hive表中

本质上和第3步是一样的,但是多了一个需要读取Hive的配置参数,以能够读取Hive的元数据。

就能指定,hfile放在哪里。

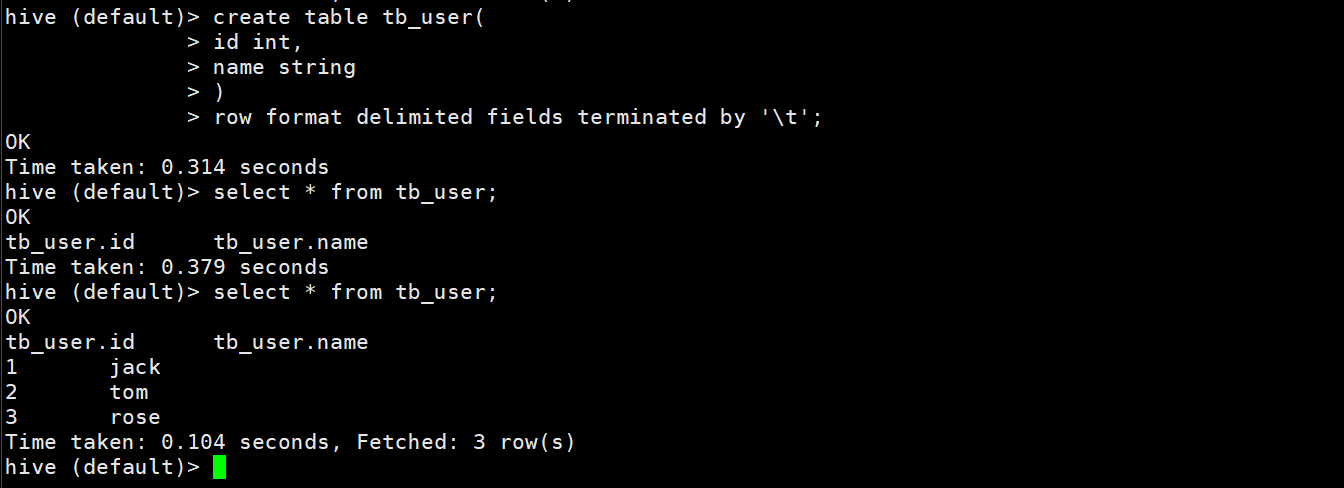

- 事先先准备好Hive的数据表(先创建映射关系)

悲伤的故事:目前的版本 只支持导出到default数据库。

个人猜想,只支持导入到warehouse根目录下。

想导出到自定义数据库,语法上支持,但会抛异常!

hive (default)> create table tb_user(

> id int,

> name string

> )

> row format delimited fields terminated by 't';

OK

Time taken: 0.314 seconds

hive (default)> select * from tb_user;

OK

tb_user.id tb_user.name

Time taken: 0.379 seconds

- 需要将hive的jar包目录导入到当前会话的环境变量中

[hadoop@hadoop212 sqoop-1.4.6-cdh5.7.6]$ export HADOOP_CLASSPATH=$HADOOP_CLASSPATH:/opt/modules/hive-1.1.0-cdh5.7.6/lib/*

[hadoop@hadoop212 sqoop-1.4.6-cdh5.7.6]$ echo $HADOOP_CLASSPATH

:/opt/modules/hive-1.1.0-cdh5.7.6/lib/*

- 编写sqoop代码完成导入

[hadoop@hadoop212 sqoop-1.4.6-cdh5.7.6]$ bin/sqoop import

> --connect jdbc:mysql://hadoop212:3306/db_user

> --username root

> --password Mysql_1234

> --table tb_user

> --delete-target-dir

> --hive-import

> --hive-database default

> --hive-table tb_user

> --fields-terminated-by 't'

> -m 1

Warning: /opt/modules/sqoop-1.4.6-cdh5.7.6/bin/../../hbase does not exist! HBase imports will fail.

Please set $HBASE_HOME to the root of your HBase installation.

Warning: /opt/modules/sqoop-1.4.6-cdh5.7.6/bin/../../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /opt/modules/sqoop-1.4.6-cdh5.7.6/bin/../../accumulo does not exist! Accumulo imports will fai

l.Please set $ACCUMULO_HOME to the root of your Accumulo installation.

Warning: /opt/modules/sqoop-1.4.6-cdh5.7.6/bin/../../zookeeper does not exist! Accumulo imports will fa

il.Please set $ZOOKEEPER_HOME to the root of your Zookeeper installation.

20/07/26 14:49:55 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6-cdh5.7.6

20/07/26 14:49:55 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consi

der using -P instead.20/07/26 14:49:55 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

20/07/26 14:49:55 INFO tool.CodeGenTool: Beginning code generation

20/07/26 14:49:56 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `tb_user` AS t LIMI

T 120/07/26 14:49:56 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `tb_user` AS t LIMI

T 120/07/26 14:49:56 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /opt/modules/hadoop-2.6.0-cdh5.7.6

Note: /tmp/sqoop-hadoop/compile/92ec9d58d2c7b0dff7a77605c54f3b2c/tb_user.java uses or overrides a depre

cated API.Note: Recompile with -Xlint:deprecation for details.

20/07/26 14:49:58 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-hadoop/compile/92ec9d58d2c7

b0dff7a77605c54f3b2c/tb_user.jar20/07/26 14:49:59 INFO tool.ImportTool: Destination directory tb_user is not present, hence not deletin

g.20/07/26 14:49:59 WARN manager.MySQLManager: It looks like you are importing from mysql.

20/07/26 14:49:59 WARN manager.MySQLManager: This transfer can be faster! Use the --direct

20/07/26 14:49:59 WARN manager.MySQLManager: option to exercise a MySQL-specific fast path.

20/07/26 14:49:59 INFO manager.MySQLManager: Setting zero DATETIME behavior to convertToNull (mysql)

20/07/26 14:49:59 INFO mapreduce.ImportJobBase: Beginning import of tb_user

20/07/26 14:49:59 INFO Configuration.deprecation: mapred.jar is deprecated. Instead, use mapreduce.job.

jar20/07/26 14:49:59 INFO Configuration.deprecation: mapred.map.tasks is deprecated. Instead, use mapreduc

e.job.maps20/07/26 14:49:59 INFO client.RMProxy: Connecting to ResourceManager at hadoop212/192.168.17.212:8032

20/07/26 14:50:02 INFO db.DBInputFormat: Using read commited transaction isolation

20/07/26 14:50:02 INFO mapreduce.JobSubmitter: number of splits:1

20/07/26 14:50:03 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1595727782509_0002

20/07/26 14:50:03 INFO impl.YarnClientImpl: Submitted application application_1595727782509_0002

20/07/26 14:50:03 INFO mapreduce.Job: The url to track the job: http://hadoop212:8088/proxy/application

_1595727782509_0002/20/07/26 14:50:03 INFO mapreduce.Job: Running job: job_1595727782509_0002

20/07/26 14:50:15 INFO mapreduce.Job: Job job_1595727782509_0002 running in uber mode : false

20/07/26 14:50:15 INFO mapreduce.Job: map 0% reduce 0%

20/07/26 14:50:26 INFO mapreduce.Job: map 100% reduce 0%

20/07/26 14:50:27 INFO mapreduce.Job: Job job_1595727782509_0002 completed successfully

20/07/26 14:50:27 INFO mapreduce.Job: Counters: 30

File System Counters

FILE: Number of bytes read=0

FILE: Number of bytes written=141610

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=87

HDFS: Number of bytes written=20

HDFS: Number of read operations=4

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters

Launched map tasks=1

Other local map tasks=1

Total time spent by all maps in occupied slots (ms)=8535

Total time spent by all reduces in occupied slots (ms)=0

Total time spent by all map tasks (ms)=8535

Total vcore-seconds taken by all map tasks=8535

Total megabyte-seconds taken by all map tasks=8739840

Map-Reduce Framework

Map input records=3

Map output records=3

Input split bytes=87

Spilled Records=0

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=609

CPU time spent (ms)=2200

Physical memory (bytes) snapshot=208531456

Virtual memory (bytes) snapshot=2767486976

Total committed heap usage (bytes)=182976512

File Input Format Counters

Bytes Read=0

File Output Format Counters

Bytes Written=20

20/07/26 14:50:27 INFO mapreduce.ImportJobBase: Transferred 20 bytes in 28.1052 seconds (0.7116 bytes/s

ec)20/07/26 14:50:27 INFO mapreduce.ImportJobBase: Retrieved 3 records.

20/07/26 14:50:27 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `tb_user` AS t LIMI

T 120/07/26 14:50:27 INFO hive.HiveImport: Loading uploaded data into Hive

Logging initialized using configuration in jar:file:/opt/modules/hive-1.1.0-cdh5.7.6/lib/hive-common-1.

1.0-cdh5.7.6.jar!/hive-log4j.propertiesOK

Time taken: 10.002 seconds

Loading data to table default.tb_user

Table default.tb_user stats: [numFiles=1, numRows=0, totalSize=20, rawDataSize=0]

OK

Time taken: 0.741 seconds

[hadoop@hadoop212 sqoop-1.4.6-cdh5.7.6]$

5.增量导入

当我导入完成后,MySQL数据库中又新增了一条或多条数据,

如果再执行上面的代码,那么会产生重复导入。

hfile没有主键约束。

企业中一般一个时间段一起导入,例如凌晨1点将昨天的所有数据一起导入hive进行分析。

所有这个功能使用场景比较窄。

![[外链图片转存失败,源站可能有防盗链机制,建议将图片保存下来直接上传(img-QPZDqkEK-1596276415328)(0726_随堂笔记.assets/image-20200726145922744.png)]](https://www.shuijiaxian.com/files_image/2023060923/20200801181032172.png)

![[外链图片转存失败,源站可能有防盗链机制,建议将图片保存下来直接上传(img-9wsoOWsG-1596276415330)(0726_随堂笔记.assets/image-20200726150354111.png)]](https://www.shuijiaxian.com/files_image/2023060923/20200801181043361.png)

[hadoop@hadoop212 sqoop-1.4.6-cdh5.7.6]$ bin/sqoop import

> --connect jdbc:mysql://hadoop212:3306/db_user

> --username root

> --password Mysql_1234

> --table tb_user

> --incremental append

> --check-column id

> --last-value 3

> --hive-import

> --hive-database default

> --hive-table tb_user

> --fields-terminated-by 't'

> -m 1

Warning: /opt/modules/sqoop-1.4.6-cdh5.7.6/bin/../../hbase does not exist! HBase imports will fail.

Please set $HBASE_HOME to the root of your HBase installation.

Warning: /opt/modules/sqoop-1.4.6-cdh5.7.6/bin/../../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /opt/modules/sqoop-1.4.6-cdh5.7.6/bin/../../accumulo does not exist! Accumulo imports will fai

l.Please set $ACCUMULO_HOME to the root of your Accumulo installation.

Warning: /opt/modules/sqoop-1.4.6-cdh5.7.6/bin/../../zookeeper does not exist! Accumulo imports will fa

il.Please set $ZOOKEEPER_HOME to the root of your Zookeeper installation.

20/07/26 15:04:10 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6-cdh5.7.6

20/07/26 15:04:10 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consi

der using -P instead.20/07/26 15:04:10 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

20/07/26 15:04:10 INFO tool.CodeGenTool: Beginning code generation

20/07/26 15:04:11 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `tb_user` AS t LIMI

T 120/07/26 15:04:11 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `tb_user` AS t LIMI

T 120/07/26 15:04:11 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /opt/modules/hadoop-2.6.0-cdh5.7.6

Note: /tmp/sqoop-hadoop/compile/4a15a97bdbdf0b345490e6afffec6531/tb_user.java uses or overrides a depre

cated API.Note: Recompile with -Xlint:deprecation for details.

20/07/26 15:04:13 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-hadoop/compile/4a15a97bdbdf

0b345490e6afffec6531/tb_user.jar20/07/26 15:04:14 INFO tool.ImportTool: Maximal id query for free form incremental import: SELECT MAX(`

id`) FROM `tb_user`20/07/26 15:04:14 INFO tool.ImportTool: Incremental import based on column `id`

20/07/26 15:04:14 INFO tool.ImportTool: Lower bound value: 3

20/07/26 15:04:14 INFO tool.ImportTool: Upper bound value: 5

20/07/26 15:04:14 WARN manager.MySQLManager: It looks like you are importing from mysql.

20/07/26 15:04:14 WARN manager.MySQLManager: This transfer can be faster! Use the --direct

20/07/26 15:04:14 WARN manager.MySQLManager: option to exercise a MySQL-specific fast path.

20/07/26 15:04:14 INFO manager.MySQLManager: Setting zero DATETIME behavior to convertToNull (mysql)

20/07/26 15:04:14 INFO mapreduce.ImportJobBase: Beginning import of tb_user

20/07/26 15:04:14 INFO Configuration.deprecation: mapred.jar is deprecated. Instead, use mapreduce.job.

jar20/07/26 15:04:14 INFO Configuration.deprecation: mapred.map.tasks is deprecated. Instead, use mapreduc

e.job.maps20/07/26 15:04:15 INFO client.RMProxy: Connecting to ResourceManager at hadoop212/192.168.17.212:8032

20/07/26 15:04:18 INFO db.DBInputFormat: Using read commited transaction isolation

20/07/26 15:04:18 INFO mapreduce.JobSubmitter: number of splits:1

20/07/26 15:04:19 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1595727782509_0003

20/07/26 15:04:19 INFO impl.YarnClientImpl: Submitted application application_1595727782509_0003

20/07/26 15:04:19 INFO mapreduce.Job: The url to track the job: http://hadoop212:8088/proxy/application

_1595727782509_0003/20/07/26 15:04:19 INFO mapreduce.Job: Running job: job_1595727782509_0003

20/07/26 15:04:28 INFO mapreduce.Job: Job job_1595727782509_0003 running in uber mode : false

20/07/26 15:04:28 INFO mapreduce.Job: map 0% reduce 0%

20/07/26 15:04:34 INFO mapreduce.Job: map 100% reduce 0%

20/07/26 15:04:34 INFO mapreduce.Job: Job job_1595727782509_0003 completed successfully

20/07/26 15:04:34 INFO mapreduce.Job: Counters: 30

File System Counters

FILE: Number of bytes read=0

FILE: Number of bytes written=142256

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=87

HDFS: Number of bytes written=15

HDFS: Number of read operations=4

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters

Launched map tasks=1

Other local map tasks=1

Total time spent by all maps in occupied slots (ms)=4218

Total time spent by all reduces in occupied slots (ms)=0

Total time spent by all map tasks (ms)=4218

Total vcore-seconds taken by all map tasks=4218

Total megabyte-seconds taken by all map tasks=4319232

Map-Reduce Framework

Map input records=2

Map output records=2

Input split bytes=87

Spilled Records=0

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=268

CPU time spent (ms)=2000

Physical memory (bytes) snapshot=185819136

Virtual memory (bytes) snapshot=2766028800

Total committed heap usage (bytes)=175636480

File Input Format Counters

Bytes Read=0

File Output Format Counters

Bytes Written=15

20/07/26 15:04:34 INFO mapreduce.ImportJobBase: Transferred 15 bytes in 19.9317 seconds (0.7526 bytes/s

ec)20/07/26 15:04:34 INFO mapreduce.ImportJobBase: Retrieved 2 records.

20/07/26 15:04:34 INFO util.AppendUtils: Creating missing output directory - tb_user

20/07/26 15:04:34 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `tb_user` AS t LIMI

T 120/07/26 15:04:34 INFO hive.HiveImport: Loading uploaded data into Hive

Logging initialized using configuration in jar:file:/opt/modules/hive-1.1.0-cdh5.7.6/lib/hive-common-1.

1.0-cdh5.7.6.jar!/hive-log4j.propertiesOK

Time taken: 9.427 seconds

Loading data to table default.tb_user

Table default.tb_user stats: [numFiles=2, numRows=0, totalSize=35, rawDataSize=0]

OK

Time taken: 0.755 seconds

[hadoop@hadoop212 sqoop-1.4.6-cdh5.7.6]$

![[外链图片转存失败,源站可能有防盗链机制,建议将图片保存下来直接上传(img-GZOX5x8v-1596276415331)(0726_随堂笔记.assets/image-20200726150630569.png)]](https://www.shuijiaxian.com/files_image/2023060923/20200801181052811.png)

![[外链图片转存失败,源站可能有防盗链机制,建议将图片保存下来直接上传(img-aYhHGDOD-1596276415332)(0726_随堂笔记.assets/image-20200726150738821.png)]](https://www.shuijiaxian.com/files_image/2023060923/20200801181108944.png)

![[外链图片转存失败,源站可能有防盗链机制,建议将图片保存下来直接上传(img-jQSGkKzm-1596276415334)(0726_随堂笔记.assets/image-20200726150801655.png)]](https://www.shuijiaxian.com/files_image/2023060923/20200801181118999.png)

6.从hive导出到MySQL数据库中

![[外链图片转存失败,源站可能有防盗链机制,建议将图片保存下来直接上传(img-y6BdwrCB-1596276415336)(0726_随堂笔记.assets/image-20200726151523377.png)]](https://www.shuijiaxian.com/files_image/2023060923/20200801181133592.png)

[hadoop@hadoop212 sqoop-1.4.6-cdh5.7.6]$ bin/sqoop export

> --connect jdbc:mysql://hadoop212:3306/db_user

> --username root

> --password Mysql_1234

> --table wc

> --export-dir /user/hive/warehouse/wc/wc.txt

> --fields-terminated-by ','

> -m 1

Warning: /opt/modules/sqoop-1.4.6-cdh5.7.6/bin/../../hbase does not exist! HBase imports will fail.

Please set $HBASE_HOME to the root of your HBase installation.

Warning: /opt/modules/sqoop-1.4.6-cdh5.7.6/bin/../../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /opt/modules/sqoop-1.4.6-cdh5.7.6/bin/../../accumulo does not exist! Accumulo imports will fai

l.Please set $ACCUMULO_HOME to the root of your Accumulo installation.

Warning: /opt/modules/sqoop-1.4.6-cdh5.7.6/bin/../../zookeeper does not exist! Accumulo imports will fa

il.Please set $ZOOKEEPER_HOME to the root of your Zookeeper installation.

20/07/26 15:15:07 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6-cdh5.7.6

20/07/26 15:15:07 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consi

der using -P instead.20/07/26 15:15:07 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

20/07/26 15:15:07 INFO tool.CodeGenTool: Beginning code generation

20/07/26 15:15:08 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `wc` AS t LIMIT 1

20/07/26 15:15:08 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `wc` AS t LIMIT 1

20/07/26 15:15:08 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /opt/modules/hadoop-2.6.0-cdh5.7.6

Note: /tmp/sqoop-hadoop/compile/e23e3d007b037b4d4f28d8b46b4d8c73/wc.java uses or overrides a deprecated

API.Note: Recompile with -Xlint:deprecation for details.

20/07/26 15:15:10 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-hadoop/compile/e23e3d007b03

7b4d4f28d8b46b4d8c73/wc.jar20/07/26 15:15:10 INFO mapreduce.ExportJobBase: Beginning export of wc

20/07/26 15:15:10 INFO Configuration.deprecation: mapred.jar is deprecated. Instead, use mapreduce.job.

jar20/07/26 15:15:10 INFO Configuration.deprecation: mapred.map.max.attempts is deprecated. Instead, use m

apreduce.map.maxattempts20/07/26 15:15:11 INFO Configuration.deprecation: mapred.reduce.tasks.speculative.execution is deprecat

ed. Instead, use mapreduce.reduce.speculative20/07/26 15:15:11 INFO Configuration.deprecation: mapred.map.tasks.speculative.execution is deprecated.

Instead, use mapreduce.map.speculative20/07/26 15:15:11 INFO Configuration.deprecation: mapred.map.tasks is deprecated. Instead, use mapreduc

e.job.maps20/07/26 15:15:11 INFO client.RMProxy: Connecting to ResourceManager at hadoop212/192.168.17.212:8032

20/07/26 15:15:14 INFO input.FileInputFormat: Total input paths to process : 1

20/07/26 15:15:14 INFO input.FileInputFormat: Total input paths to process : 1

20/07/26 15:15:15 INFO mapreduce.JobSubmitter: number of splits:1

20/07/26 15:15:15 INFO Configuration.deprecation: mapred.map.tasks.speculative.execution is deprecated.

Instead, use mapreduce.map.speculative20/07/26 15:15:15 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1595727782509_0004

20/07/26 15:15:15 INFO impl.YarnClientImpl: Submitted application application_1595727782509_0004

20/07/26 15:15:15 INFO mapreduce.Job: The url to track the job: http://hadoop212:8088/proxy/application

_1595727782509_0004/20/07/26 15:15:15 INFO mapreduce.Job: Running job: job_1595727782509_0004

20/07/26 15:15:27 INFO mapreduce.Job: Job job_1595727782509_0004 running in uber mode : false

20/07/26 15:15:27 INFO mapreduce.Job: map 0% reduce 0%

20/07/26 15:15:35 INFO mapreduce.Job: map 100% reduce 0%

20/07/26 15:15:35 INFO mapreduce.Job: Job job_1595727782509_0004 completed successfully

20/07/26 15:15:35 INFO mapreduce.Job: Counters: 30

File System Counters

FILE: Number of bytes read=0

FILE: Number of bytes written=141242

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=186

HDFS: Number of bytes written=0

HDFS: Number of read operations=4

HDFS: Number of large read operations=0

HDFS: Number of write operations=0

Job Counters

Launched map tasks=1

Data-local map tasks=1

Total time spent by all maps in occupied slots (ms)=6068

Total time spent by all reduces in occupied slots (ms)=0

Total time spent by all map tasks (ms)=6068

Total vcore-seconds taken by all map tasks=6068

Total megabyte-seconds taken by all map tasks=6213632

Map-Reduce Framework

Map input records=6

Map output records=6

Input split bytes=132

Spilled Records=0

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=471

CPU time spent (ms)=1100

Physical memory (bytes) snapshot=192774144

Virtual memory (bytes) snapshot=2761129984

Total committed heap usage (bytes)=183500800

File Input Format Counters

Bytes Read=0

File Output Format Counters

Bytes Written=0

20/07/26 15:15:35 INFO mapreduce.ExportJobBase: Transferred 186 bytes in 23.6237 seconds (7.8735 bytes/

sec)20/07/26 15:15:35 INFO mapreduce.ExportJobBase: Exported 6 records.

[hadoop@hadoop212 sqoop-1.4.6-cdh5.7.6]$

![[外链图片转存失败,源站可能有防盗链机制,建议将图片保存下来直接上传(img-tfVIkNK2-1596276415337)(0726_随堂笔记.assets/image-20200726151604485.png)]](https://www.shuijiaxian.com/files_image/2023060923/20200801181146661.png)

7.使用手册使用指南

![[外链图片转存失败,源站可能有防盗链机制,建议将图片保存下来直接上传(img-Dsz8OA2c-1596276415338)(0726_随堂笔记.assets/image-20200726151951286.png)]](https://www.shuijiaxian.com/files_image/2023060923/20200801181157574.png)

很少有人主动去看,都是反过来,知道一个命令参数,去 查这个参数是干什么的?

8.SQOOP执行文件

sqoop的执行文件不允许有空格!!

![[外链图片转存失败,源站可能有防盗链机制,建议将图片保存下来直接上传(img-0v85STP3-1596276415339)(0726_随堂笔记.assets/image-20200726152847605.png)]](https://www.shuijiaxian.com/files_image/2023060923/20200801181207971.png)

[hadoop@hadoop212 sqoop-1.4.6-cdh5.7.6]$ bin/sqoop --options-file /opt/datas/wc.sqoop

![[外链图片转存失败,源站可能有防盗链机制,建议将图片保存下来直接上传(img-YkJ747S5-1596276415340)(0726_随堂笔记.assets/image-20200726152943606.png)]](https://www.shuijiaxian.com/files_image/2023060923/20200801181224442.png)

课程安排

![[外链图片转存失败,源站可能有防盗链机制,建议将图片保存下来直接上传(img-Gm1Mxc4s-1596276415341)(0726_随堂笔记.assets/image-20200726140604368.png)]](https://www.shuijiaxian.com/files_image/2023060923/20200801180955494.png)

最后

以上就是忧伤酒窝最近收集整理的关于Day14[20200726]一、回顾二、SQOOP课程安排的全部内容,更多相关Day14[20200726]一、回顾二、SQOOP课程安排内容请搜索靠谱客的其他文章。

![Day14[20200726]一、回顾二、SQOOP课程安排](https://www.shuijiaxian.com/files_image/reation/bcimg4.png)

发表评论 取消回复