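Installing OpenStack Rocky on CentOS 7.5

The Rocky release had just come out and I couldn't resist installing it. Time was limited, so I only got as far as the dashboard.

I followed the official guide through the whole process and ran into almost no problems.

I recommend building it once by following the official guide; it makes the whole process much smoother: https://docs.openstack.org/install-guide/

Since this is a setup for my own experiments, every password is set to 000000 for convenience.

Environment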

| Host | OS | NIC 1: eth0 | NIC 2: eth1 |
|------------|------------|----------------|----------------|
| controller | CentOS 7.5 | 192.168.100.10 | 192.168.200.10 |
| compute | CentOS 7.5 | 192.168.100.20 | 192.168.200.20 |

Restart networking and disable the firewall and SELinux (on both nodes)

# systemctl restart network

# systemctl stop firewalld

# systemctl disable firewalld

# setenforce 0

# sed -i 's/=enforcing/=disabled/' /etc/selinux/config
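The sed expression above only rewrites values that end in `=enforcing`. Here is a slightly more robust sketch that anchors on the `SELINUX=` key instead. It switches a `permissive` setting to `disabled` as well and leaves `SELINUXTYPE=` alone. The function name `disable_selinux_config` is my own, hypothetical name:

```shell
# Hypothetical helper: force SELINUX=disabled in an SELinux config file.
# Defaults to /etc/selinux/config; pass another path to try it on a copy first.
disable_selinux_config() {
  local conf="${1:-/etc/selinux/config}"
  # Anchor on the key so both SELINUX=enforcing and SELINUX=permissive are
  # rewritten; SELINUXTYPE=... does not match because "SELINUX=" needs the "=".
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$conf"
}
```

Run `disable_selinux_config` as root in place of the sed line. The config change takes effect at the next boot, while `setenforce 0` covers the current one.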

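Update all packages

# yum upgrade -y

Reboot once the update finishes

# reboot

Set the hostnames

# hostnamectl set-hostname controller    ## on the controller node

# hostnamectl set-hostname compute    ## on the compute node

Add hostname mappings (on both nodes)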

# cat << EOF >> /etc/hosts

192.168.100.10 controller

192.168.100.20 compute

EOF
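The heredoc appends with `>>`, so running it twice leaves duplicate lines in /etc/hosts. A small idempotent alternative only appends a mapping when that exact line is missing. It is a sketch using a hypothetical helper name, `add_host_entry`:

```shell
# Hypothetical helper: append "IP NAME" to a hosts file unless that exact line exists.
add_host_entry() {
  local ip="$1" name="$2" hosts="${3:-/etc/hosts}"
  # -x: match the whole line, -F: treat the pattern as a fixed string
  grep -qxF "$ip $name" "$hosts" || echo "$ip $name" >> "$hosts"
}
```

On both nodes, run `add_host_entry 192.168.100.10 controller` and `add_host_entry 192.168.100.20 compute`. Re-running either call leaves the file unchanged.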

Configure time synchronization

On the controller node

Install the package

[root@controller ~]# yum install -y chrony

Edit /etc/chrony.conf and add the following

server controller iburst

allow 192.168.0.0/16

Start the service and enable it at boot

[root@controller ~]# systemctl start chronyd

[root@controller ~]# systemctl enable chronyd

On the compute node

Install the package

[root@compute ~]# yum install -y chrony

Edit /etc/chrony.conf and add the following

server controller iburst

Start the service and enable it at boot

[root@compute ~]# systemctl start chronyd

[root@compute ~]# systemctl enable chronyd
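On the compute node, the stock CentOS 7 /etc/chrony.conf already contains `server ...centos.pool.ntp.org iburst` lines. The sketch below uses a hypothetical helper, `use_controller_ntp`, and assumes that default file layout. It comments out those lines and adds `server controller iburst` exactly once, so compute follows only the controller.

The controller itself also needs a usable time source. If it has no upstream server besides itself, chrony's `local stratum 10` directive is one way to let it keep serving time to compute. Treat that as a suggestion to verify, not a step from the original setup.

```shell
# Hypothetical helper for the compute node: make the controller the only NTP server.
use_controller_ntp() {
  local conf="${1:-/etc/chrony.conf}"
  # Comment out every other active "server"/"pool" line (GNU sed: \| is alternation).
  sed -i '/^server controller iburst$/!s/^\(server\|pool\) /#&/' "$conf"
  # Add the controller entry once; running the function again changes nothing.
  grep -qx 'server controller iburst' "$conf" || echo 'server controller iburst' >> "$conf"
}
```

After running it, restart chronyd with `systemctl restart chronyd`. `chronyc sources` should then list `controller` as the only source.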

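Configure the OpenStack Rocky yum repositories (on both nodes)

The official guide installs the centos-release-openstack-rocky package, which points to mirrors outside China and can be slow. Instead, I configured the Alibaba Cloud (Aliyun) mirrors by hand: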

# cat << EOF > /etc/yum.repos.d/openstack.repo
[openstack-rocky]
name=openstack-rocky
baseurl=https://mirrors.aliyun.com/centos/7/cloud/x86_64/openstack-rocky/
enabled=1
gpgcheck=0

[qemu-kvm]
name=qemu-kvm
baseurl=https://mirrors.aliyun.com/centos/7/virt/x86_64/kvm-common/
enabled=1
gpgcheck=0
EOF

Install the OpenStack client and the openstack-selinux package

# yum install -y python-openstackclient openstack-selinux

Install the database service

Install MariaDB on the controller node

[root@controller ~]# yum install -y mariadb mariadb-server python2-PyMySQL

Configure the database

Create /etc/my.cnf.d/openstack.cnf with the following content

[mysqld]

bind-address = 192.168.100.10

default-storage-engine = innodb

innodb_file_per_table = on

max_connections = 4096

collation-server = utf8_general_ci

character-set-server = utf8
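The same file can be written non-interactively with a heredoc. In this sketch the bind address is a parameter, so it is easy to reuse; `write_openstack_cnf` is a hypothetical helper name:

```shell
# Hypothetical helper: write the MariaDB settings used by this guide.
# $1 = address MariaDB should listen on, $2 = output file (default shown below).
write_openstack_cnf() {
  local bind_ip="$1" out="${2:-/etc/my.cnf.d/openstack.cnf}"
  cat > "$out" <<EOF
[mysqld]
bind-address = $bind_ip
default-storage-engine = innodb
innodb_file_per_table = on
max_connections = 4096
collation-server = utf8_general_ci
character-set-server = utf8
EOF
}
```

On the controller, run `write_openstack_cnf 192.168.100.10` before starting MariaDB.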

Start the database service and enable it at boot

[root@controller ~]# systemctl enable mariadb.service

[root@controller ~]# systemctl start mariadb.service

Set the database root password

Run mysql_secure_installation to set the MariaDB root password and remove the insecure defaults

[root@controller ~]# mysql_secure_installation

NOTE: RUNNING ALL PARTS OF THIS SCRIPT IS RECOMMENDED FOR ALL MariaDB

SERVERS IN PRODUCTION USE! PLEASE READ EACH STEP CAREFULLY!

In order to log into MariaDB to secure it, we'll need the current

password for the root user. If you've just installed MariaDB, and

you haven't set the root password yet, the password will be blank,

so you should just press enter here.

Enter current password for root (enter for none):

OK, successfully used password, moving on...

Setting the root password ensures that nobody can log into the MariaDB

root user without the proper authorisation.

Set root password? [Y/n] y

New password: ## enter the root password here; this guide uses 000000

Re-enter new password:

Password updated successfully!

Reloading privilege tables..

... Success!

By default, a MariaDB installation has an anonymous user, allowing anyone

to log into MariaDB without having to have a user account created for

them. This is intended only for testing, and to make the installation

go a bit smoother. You should remove them before moving into a

production environment.

Remove anonymous users? [Y/n] y

... Success!

Normally, root should only be allowed to connect from 'localhost'. This

ensures that someone cannot guess at the root password from the network.

Disallow root login remotely? [Y/n] n

... skipping.

By default, MariaDB comes with a database named 'test' that anyone can

access. This is also intended only for testing, and should be removed

before moving into a production environment.

Remove test database and access to it? [Y/n] y

Dropping test database...

... Success!

Removing privileges on test database...

... Success!

Reloading the privilege tables will ensure that all changes made so far

will take effect immediately.

Reload privilege tables now? [Y/n] y

... Success!

Cleaning up...

All done! If you've completed all of the above steps, your MariaDB

installation should now be secure.

Thanks for using MariaDB!
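For a scripted install, the answers given above can be approximated with plain SQL. Those answers are: set a root password, remove anonymous users, keep remote root login, drop the test database, and reload privileges. This sketch assumes the MariaDB 10.1 server shown in this guide, where mysql.user is still an ordinary table. The helper name `secure_install_sql` is hypothetical.

```shell
# Hypothetical helper: print SQL roughly equivalent to the interactive answers above.
# $1 = new root password (this guide uses 000000). Remote root login is left enabled.
secure_install_sql() {
  local pass="$1"
  cat <<EOF
UPDATE mysql.user SET Password = PASSWORD('$pass') WHERE User = 'root';
DELETE FROM mysql.user WHERE User = '';
DROP DATABASE IF EXISTS test;
DELETE FROM mysql.db WHERE Db = 'test' OR Db = 'test\\_%';
FLUSH PRIVILEGES;
EOF
}
```

On a fresh install, where root has no password yet, this could be applied with `secure_install_sql 000000 | mysql -uroot`.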

Install the message queue service

Install rabbitmq-server on the controller node

[root@controller ~]# yum install -y rabbitmq-server

Start the message queue service and enable it at boot

[root@controller ~]# systemctl start rabbitmq-server.service

[root@controller ~]# systemctl enable rabbitmq-server.service

Created symlink from /etc/systemd/system/multi-user.target.wants/rabbitmq-server.service to /usr/lib/systemd/system/rabbitmq-server.service.

Add the openstack user

[root@controller ~]# rabbitmqctl add_user openstack 000000

Creating user "openstack" ...

Grant the openstack user full permissions (configure, write, and read on all resources)

[root@controller ~]# rabbitmqctl set_permissions openstack ".*" ".*" ".*"

Setting permissions for user "openstack" in vhost "/" ...

Install the memcached service

Install memcached on the controller node

[root@controller ~]# yum install -y memcached

Configure memcached

Edit /etc/sysconfig/memcached and change OPTIONS="-l 127.0.0.1,::1" to:

OPTIONS="-l 127.0.0.1,::1,controller"
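The same edit can be scripted with sed. This sketch assumes the file still contains the packaged default `OPTIONS="-l 127.0.0.1,::1"`. The helper `set_memcached_listen` is hypothetical; it rewrites only that exact line, so running it twice is harmless:

```shell
# Hypothetical helper: add a host name to memcached's listen list.
# $1 = host to add (default: controller), $2 = config file.
set_memcached_listen() {
  local host="${1:-controller}" conf="${2:-/etc/sysconfig/memcached}"
  # Replace only the packaged default; once edited, the pattern no longer matches.
  sed -i "s/^OPTIONS=\"-l 127.0.0.1,::1\"\$/OPTIONS=\"-l 127.0.0.1,::1,${host}\"/" "$conf"
}
```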

Start the memcached service and enable it at boot

[root@controller ~]# systemctl start memcached.service

[root@controller ~]# systemctl enable memcached.service

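Install the etcd service

Install etcd on the controller node

[root@controller ~]# yum install etcd -y

Configure etcd so that other nodes can reach it

Edit /etc/etcd/etcd.conf and set the following options in their respective sections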

#[Member]

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="http://192.168.100.10:2380"

ETCD_LISTEN_CLIENT_URLS="http://192.168.100.10:2379"

ETCD_NAME="controller"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="http://192.168.100.10:2380"

ETCD_ADVERTISE_CLIENT_URLS="http://192.168.100.10:2379"

ETCD_INITIAL_CLUSTER="controller=http://192.168.100.10:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster-01"

ETCD_INITIAL_CLUSTER_STATE="new"

Start the etcd service and enable it at boot

[root@controller ~]# systemctl start etcd

[root@controller ~]# systemctl enable etcd

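(When I tried to check the cluster health, I got the error below. It does not affect the rest of the installation, so I skipped it for now. The likely cause: as the error shows, etcdctl connects to 127.0.0.1:2379 and 127.0.0.1:4001 by default, but this etcd instance listens only on 192.168.100.10.)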

[root@controller ~]# etcdctl cluster-health

cluster may be unhealthy: failed to list members

Error: client: etcd cluster is unavailable or misconfigured; error #0: dial tcp 127.0.0.1:4001: getsockopt: connection refused

; error #1: dial tcp 127.0.0.1:2379: getsockopt: connection refused

error #0: dial tcp 127.0.0.1:4001: getsockopt: connection refused

error #1: dial tcp 127.0.0.1:2379: getsockopt: connection refused
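This sketch of the health check reads the advertised client URL from etcd.conf instead of relying on the 127.0.0.1 default. The helper name `etcd_client_url` is hypothetical. `--endpoints` is the etcdctl (v2 API) global option that selects which members to contact:

```shell
# Hypothetical helper: print the client URL(s) etcd advertises in its config file.
etcd_client_url() {
  local conf="${1:-/etc/etcd/etcd.conf}"
  # Keep everything after the first "=", then drop the surrounding double quotes.
  grep '^ETCD_ADVERTISE_CLIENT_URLS=' "$conf" | cut -d= -f2- | tr -d '"'
}
```

Usage: `etcdctl --endpoints="$(etcd_client_url)" cluster-health`.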

Install the Keystone service

Create the database

[root@controller ~]# mysql -uroot -p000000

Welcome to the MariaDB monitor.  Commands end with ; or \g.

Your MariaDB connection id is 9

Server version: 10.1.20-MariaDB MariaDB Server

Copyright (c) 2000, 2016, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MariaDB [(none)]> CREATE DATABASE keystone;

Query OK, 1 row affected (0.00 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'localhost'

IDENTIFIED BY '000000';

Query OK, 0 rows affected (0.00 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'%' IDENTIFIED BY '000000';

Query OK, 0 rows affected (0.00 sec)

Install the packages

[root@controller ~]# yum install openstack-keystone httpd mod_wsgi -y

Edit /etc/keystone/keystone.conf

[database]

connection = mysql+pymysql://keystone:000000@controller/keystone

[token]

provider = fernet
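Several steps in this guide edit INI-style files by hand, so a read-only check helps catch typos. This minimal awk sketch prints the value of one key in one section. It uses a hypothetical helper, `ini_get`, and has limits: it ignores INI features such as multi-line values and assumes section headers have no trailing spaces.

```shell
# Hypothetical helper: print the value of KEY in [SECTION] of an INI file.
# Usage: ini_get SECTION KEY FILE   (the first matching key in the section wins)
ini_get() {
  local section="$1" key="$2" file="$3"
  awk -v s="[$section]" -v k="$key" '
    # A header line starts a new section; remember whether it is the wanted one.
    /^[[:space:]]*\[/ { in_sec = ($0 == s); next }
    in_sec && $0 ~ ("^[[:space:]]*" k "[[:space:]]*=") {
      sub(/^[^=]*=[[:space:]]*/, "")   # strip the key, the equals sign and spaces
      print
      exit
    }' "$file"
}
```

For example, `ini_get token provider /etc/keystone/keystone.conf` should print `fernet`. Commented-out lines such as `#provider = ...` are skipped, and the same call works for glance-api.conf and nova.conf later on.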

Populate the Identity service database

[root@controller ~]# su -s /bin/sh -c "keystone-manage db_sync" keystone

Initialize the Fernet key repositories

[root@controller ~]# keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone

[root@controller ~]# keystone-manage credential_setup --keystone-user keystone --keystone-group keystone

Bootstrap the Identity service

[root@controller ~]# keystone-manage bootstrap --bootstrap-password 000000 \
  --bootstrap-admin-url http://controller:5000/v3/ \
  --bootstrap-internal-url http://controller:5000/v3/ \
  --bootstrap-public-url http://controller:5000/v3/ \
  --bootstrap-region-id RegionOne

Edit the httpd configuration file /etc/httpd/conf/httpd.conf and set

ServerName controller

Link the Keystone WSGI configuration into httpd

[root@controller ~]# ln -s /usr/share/keystone/wsgi-keystone.conf /etc/httpd/conf.d/

Start httpd and enable it at boot

[root@controller ~]# systemctl start httpd

[root@controller ~]# systemctl enable httpd

Created symlink from /etc/systemd/system/multi-user.target.wants/httpd.service to /usr/lib/systemd/system/httpd.service.

Create the environment script admin-openrc with the following content

export OS_USERNAME=admin

export OS_PASSWORD=000000

export OS_PROJECT_NAME=admin

export OS_USER_DOMAIN_NAME=Default

export OS_PROJECT_DOMAIN_NAME=Default

export OS_AUTH_URL=http://controller:5000/v3

export OS_IDENTITY_API_VERSION=3

export OS_IMAGE_API_VERSION=2
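This sketch creates the script in one step. It uses a hypothetical helper, `write_admin_openrc`, and assumes the path ~/admin-openrc, which matches the `source admin-openrc` calls run from root's home directory later. The quoted heredoc delimiter keeps the shell from expanding anything inside:

```shell
# Hypothetical helper: write the admin credentials file used throughout this guide.
write_admin_openrc() {
  local out="${1:-$HOME/admin-openrc}"
  cat > "$out" <<'EOF'
export OS_USERNAME=admin
export OS_PASSWORD=000000
export OS_PROJECT_NAME=admin
export OS_USER_DOMAIN_NAME=Default
export OS_PROJECT_DOMAIN_NAME=Default
export OS_AUTH_URL=http://controller:5000/v3
export OS_IDENTITY_API_VERSION=3
export OS_IMAGE_API_VERSION=2
EOF
}
```

Run `write_admin_openrc && . ~/admin-openrc` before using the `openstack` client. `openstack token issue` then confirms that the credentials work.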

Create the service project

[root@controller ~]# openstack project create --domain default \
  --description "Service Project" service

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | Service Project |

| domain_id | default |

| enabled | True |

| id | 617e64ff415b45ef975b8faf3d5207dd |

| is_domain | False |

| name | service |

| parent_id | default |

| tags | [] |

+-------------+----------------------------------+

Verify

[root@controller ~]# openstack user list

+----------------------------------+-------+

| ID | Name |

+----------------------------------+-------+

| 5238d646322346be9e3f9750422bcf4d | admin |

+----------------------------------+-------+

[root@controller ~]# openstack token issue

+------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| Field | Value |

+------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| expires | 2018-09-03T14:30:02+0000 |

| id | gAAAAABbjTdauHEUmA_PQ1deLrPsMXiITgOyGu325OkqBYxhwYK5pS5A217gFJcnt_T50T6vfVXDTPR1HJ-HM7_Dlmm5GbPBAe_4KuWygSebGPAU7_NQoZT5gH0gjtyW5aF0mw-dyqvVykcXQWeeZ_q15HOjUZ2ujn_O2GYfjFhUmhaagrUvYys |

| project_id | 1a74d2a87e734feea8577477955e0b06 |

| user_id | 5238d646322346be9e3f9750422bcf4d |

+------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

Install Glance

Create the database

[root@controller ~]# mysql -uroot -p000000

Welcome to the MariaDB monitor.  Commands end with ; or \g.

Your MariaDB connection id is 17

Server version: 10.1.20-MariaDB MariaDB Server

Copyright (c) 2000, 2016, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MariaDB [(none)]> CREATE DATABASE glance;

Query OK, 1 row affected (0.00 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'localhost' IDENTIFIED BY '000000';

Query OK, 0 rows affected (0.00 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'%' IDENTIFIED BY '000000';

Query OK, 0 rows affected (0.00 sec)

Create the glance user, service, and endpoints

[root@controller ~]# source admin-openrc

[root@controller ~]# openstack user create --domain default --password-prompt glance

User Password:000000

Repeat User Password:

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | default |

| enabled | True |

| id | 73e040b3ca46485dad6ce8c49bfbd8e2 |

| name | glance |

| options | {} |

| password_expires_at | None |

+---------------------+----------------------------------+

[root@controller ~]# openstack role add --project service --user glance admin

[root@controller ~]# openstack service create --name glance \
  --description "OpenStack Image" image

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | OpenStack Image |

| enabled | True |

| id | e61eb0929ae842e48c2b1f029e67578b |

| name | glance |

| type | image |

+-------------+----------------------------------+

[root@controller ~]# openstack endpoint create --region RegionOne \
  image public http://controller:9292

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | ee8719ec7a5547fbaa1ca685fca1d8e0 |

| interface | public |

| region | RegionOne |

| region_id | RegionOne |

| service_id | e61eb0929ae842e48c2b1f029e67578b |

| service_name | glance |

| service_type | image |

| url | http://controller:9292 |

+--------------+----------------------------------+

[root@controller ~]# openstack endpoint create --region RegionOne \
  image internal http://controller:9292

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 27379aa551644711b2f3568a5387e003 |

| interface | internal |

| region | RegionOne |

| region_id | RegionOne |

| service_id | e61eb0929ae842e48c2b1f029e67578b |

| service_name | glance |

| service_type | image |

| url | http://controller:9292 |

+--------------+----------------------------------+

[root@controller ~]# openstack endpoint create --region RegionOne \
  image admin http://controller:9292

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | b9f6c2bfee5f46bf8d654336094c4360 |

| interface | admin |

| region | RegionOne |

| region_id | RegionOne |

| service_id | e61eb0929ae842e48c2b1f029e67578b |

| service_name | glance |

| service_type | image |

| url | http://controller:9292 |

+--------------+----------------------------------+
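The three `endpoint create` calls differ only in the interface name, so they can be looped. This sketch assumes admin-openrc has been sourced and uses a hypothetical helper, `create_endpoints`. The `OPENSTACK_CMD` override is my own addition, so the loop can be dry-run with `echo`:

```shell
# Hypothetical helper: create public, internal and admin endpoints for one service.
# $1 = service type or name, $2 = endpoint URL. Set OPENSTACK_CMD=echo for a dry run.
create_endpoints() {
  local service="$1" url="$2" iface
  for iface in public internal admin; do
    ${OPENSTACK_CMD:-openstack} endpoint create --region RegionOne \
      "$service" "$iface" "$url"
  done
}
```

For Glance this is `create_endpoints image http://controller:9292`. The same pattern covers the compute (`http://controller:8774/v2.1`) and placement (`http://controller:8778`) endpoints created later.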

Install the package

[root@controller ~]# yum install -y openstack-glance

Edit /etc/glance/glance-api.conf

[database]

connection = mysql+pymysql://glance:000000@controller/glance

[keystone_authtoken]

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = glance

password = 000000

[paste_deploy]

flavor = keystone

[glance_store]

stores = file,http

default_store = file

filesystem_store_datadir = /var/lib/glance/images/

Edit /etc/glance/glance-registry.conf

[database]

connection = mysql+pymysql://glance:000000@controller/glance

[keystone_authtoken]

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = glance

password = 000000

[paste_deploy]

flavor = keystone

Populate the Image service database

[root@controller ~]# su -s /bin/sh -c "glance-manage db_sync" glance

/usr/lib/python2.7/site-packages/oslo_db/sqlalchemy/enginefacade.py:1352: OsloDBDeprecationWarning: EngineFacade is deprecated; please use oslo_db.sqlalchemy.enginefacade

expire_on_commit=expire_on_commit, _conf=conf)

INFO [alembic.runtime.migration] Context impl MySQLImpl.

INFO [alembic.runtime.migration] Will assume non-transactional DDL.

INFO [alembic.runtime.migration] Running upgrade -> liberty, liberty initial

INFO [alembic.runtime.migration] Running upgrade liberty -> mitaka01, add index on created_at and updated_at columns of 'images' table

INFO [alembic.runtime.migration] Running upgrade mitaka01 -> mitaka02, update metadef os_nova_server

INFO [alembic.runtime.migration] Running upgrade mitaka02 -> ocata_expand01, add visibility to images

INFO [alembic.runtime.migration] Running upgrade ocata_expand01 -> pike_expand01, empty expand for symmetry with pike_contract01

INFO [alembic.runtime.migration] Running upgrade pike_expand01 -> queens_expand01

INFO [alembic.runtime.migration] Running upgrade queens_expand01 -> rocky_expand01, add os_hidden column to images table

INFO [alembic.runtime.migration] Running upgrade rocky_expand01 -> rocky_expand02, add os_hash_algo and os_hash_value columns to images table

INFO [alembic.runtime.migration] Context impl MySQLImpl.

INFO [alembic.runtime.migration] Will assume non-transactional DDL.

Upgraded database to: rocky_expand02, current revision(s): rocky_expand02

INFO [alembic.runtime.migration] Context impl MySQLImpl.

INFO [alembic.runtime.migration] Will assume non-transactional DDL.

INFO [alembic.runtime.migration] Context impl MySQLImpl.

INFO [alembic.runtime.migration] Will assume non-transactional DDL.

Database migration is up to date. No migration needed.

INFO [alembic.runtime.migration] Context impl MySQLImpl.

INFO [alembic.runtime.migration] Will assume non-transactional DDL.

INFO [alembic.runtime.migration] Context impl MySQLImpl.

INFO [alembic.runtime.migration] Will assume non-transactional DDL.

INFO [alembic.runtime.migration] Running upgrade mitaka02 -> ocata_contract01, remove is_public from images

INFO [alembic.runtime.migration] Running upgrade ocata_contract01 -> pike_contract01, drop glare artifacts tables

INFO [alembic.runtime.migration] Running upgrade pike_contract01 -> queens_contract01

INFO [alembic.runtime.migration] Running upgrade queens_contract01 -> rocky_contract01

INFO [alembic.runtime.migration] Running upgrade rocky_contract01 -> rocky_contract02

INFO [alembic.runtime.migration] Context impl MySQLImpl.

INFO [alembic.runtime.migration] Will assume non-transactional DDL.

Upgraded database to: rocky_contract02, current revision(s): rocky_contract02

INFO [alembic.runtime.migration] Context impl MySQLImpl.

INFO [alembic.runtime.migration] Will assume non-transactional DDL.

Database is synced successfully.

Start the services and enable them at boot

[root@controller ~]# systemctl start openstack-glance-api.service openstack-glance-registry.service

[root@controller ~]# systemctl enable openstack-glance-api.service openstack-glance-registry.service

Created symlink from /etc/systemd/system/multi-user.target.wants/openstack-glance-api.service to /usr/lib/systemd/system/openstack-glance-api.service.

Created symlink from /etc/systemd/system/multi-user.target.wants/openstack-glance-registry.service to /usr/lib/systemd/system/openstack-glance-registry.service.

Verify

[root@controller ~]# . admin-openrc

[root@controller ~]# wget http://download.cirros-cloud.net/0.3.4/cirros-0.3.4-x86_64-disk.img

[root@controller ~]# openstack image create "cirros" --file cirros-0.3.4-x86_64-disk.img --disk-format qcow2 --container-format bare --public

+------------------+--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| Field | Value |

+------------------+--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| checksum | ee1eca47dc88f4879d8a229cc70a07c6 |

| container_format | bare |

| created_at | 2018-09-03T13:49:12Z |

| disk_format | qcow2 |

| file | /v2/images/8faa9dc9-7f29-4570-ae87-9bab0d01aa63/file |

| id | 8faa9dc9-7f29-4570-ae87-9bab0d01aa63 |

| min_disk | 0 |

| min_ram | 0 |

| name | cirros |

| owner | 1a74d2a87e734feea8577477955e0b06 |

| properties | os_hash_algo='sha512', os_hash_value='1b03ca1bc3fafe448b90583c12f367949f8b0e665685979d95b004e48574b953316799e23240f4f739d1b5eb4c4ca24d38fdc6f4f9d8247a2bc64db25d6bbdb2', os_hidden='False' |

| protected | False |

| schema | /v2/schemas/image |

| size | 13287936 |

| status | active |

| tags | |

| updated_at | 2018-09-03T13:49:13Z |

| virtual_size | None |

| visibility | public |

+------------------+--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

[root@controller ~]# openstack image list

+--------------------------------------+--------+--------+

| ID | Name | Status |

+--------------------------------------+--------+--------+

| 8faa9dc9-7f29-4570-ae87-9bab0d01aa63 | cirros | active |

+--------------------------------------+--------+--------+
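Glance's `checksum` field is the MD5 digest of the uploaded data. Comparing it with the local file is a quick way to confirm that the download was not truncated. A sketch using a hypothetical helper, `verify_md5`:

```shell
# Hypothetical helper: succeed only if FILE's MD5 digest equals EXPECTED.
verify_md5() {
  local file="$1" expected="$2" actual
  actual=$(md5sum "$file" | cut -d' ' -f1)   # md5sum prints "<digest>  <file>"
  [ "$actual" = "$expected" ]
}
```

Usage: `verify_md5 cirros-0.3.4-x86_64-disk.img ee1eca47dc88f4879d8a229cc70a07c6 && echo OK`. The digest comes from the `checksum` row of the `image create` output above.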

Install the Nova service

On the controller node

Create the databases

# mysql -u root -p000000

MariaDB [(none)]> CREATE DATABASE nova_api;

MariaDB [(none)]> CREATE DATABASE nova;

MariaDB [(none)]> CREATE DATABASE nova_cell0;

MariaDB [(none)]> CREATE DATABASE placement;

Grant proper access to the databases:

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'localhost'

IDENTIFIED BY '000000';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'%'

IDENTIFIED BY '000000';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'localhost'

IDENTIFIED BY '000000';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%'

IDENTIFIED BY '000000';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'localhost'

IDENTIFIED BY '000000';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'%'

IDENTIFIED BY '000000';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON placement.* TO 'placement'@'localhost'

IDENTIFIED BY '000000';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON placement.* TO 'placement'@'%'

IDENTIFIED BY '000000';
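The eight GRANT statements follow one pattern: each database gets a localhost grant and a `'%'` grant. The `placement` database belongs to the `placement` user, and the other three databases belong to `nova`. This sketch uses a hypothetical helper, `nova_db_sql`, that prints the whole batch. `IF NOT EXISTS` makes re-running it harmless:

```shell
# Hypothetical helper: print the CREATE/GRANT statements for nova's four databases.
# $1 = password for the nova and placement database users (this guide uses 000000).
nova_db_sql() {
  local pass="${1:-000000}" db user host
  for db in nova_api nova nova_cell0 placement; do
    # The placement database gets its own user; the other three belong to nova.
    if [ "$db" = placement ]; then user=placement; else user=nova; fi
    echo "CREATE DATABASE IF NOT EXISTS $db;"
    for host in localhost '%'; do
      echo "GRANT ALL PRIVILEGES ON $db.* TO '$user'@'$host' IDENTIFIED BY '$pass';"
    done
  done
}
```

Usage: `nova_db_sql 000000 | mysql -uroot -p000000`.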

Create the nova and placement users, services, and endpoints

[root@controller ~]# openstack user create --domain default --password-prompt nova

User Password:

Repeat User Password:

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | default |

| enabled | True |

| id | ea181b4b1de3430e8646795f133ad8fe |

| name | nova |

| options | {} |

| password_expires_at | None |

+---------------------+----------------------------------+

[root@controller ~]# openstack role add --project service --user nova admin

[root@controller ~]# openstack service create --name nova \
  --description "OpenStack Compute" compute

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | OpenStack Compute |

| enabled | True |

| id | 52a1c2cd42fb45df9ab5ac0782faae4e |

| name | nova |

| type | compute |

+-------------+----------------------------------+

[root@controller ~]# openstack endpoint create --region RegionOne \
  compute public http://controller:8774/v2.1

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 4f009d7ff354428ab5dafadf0ed0095d |

| interface | public |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 52a1c2cd42fb45df9ab5ac0782faae4e |

| service_name | nova |

| service_type | compute |

| url | http://controller:8774/v2.1 |

+--------------+----------------------------------+

[root@controller ~]# openstack endpoint create --region RegionOne \
  compute internal http://controller:8774/v2.1

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 5191feea83ba4a17b79a4a7d83f85651 |

| interface | internal |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 52a1c2cd42fb45df9ab5ac0782faae4e |

| service_name | nova |

| service_type | compute |

| url | http://controller:8774/v2.1 |

+--------------+----------------------------------+

[root@controller ~]# openstack endpoint create --region RegionOne \
  compute admin http://controller:8774/v2.1

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 2fa5622c3f134f0ba8215baab1bad899 |

| interface | admin |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 52a1c2cd42fb45df9ab5ac0782faae4e |

| service_name | nova |

| service_type | compute |

| url | http://controller:8774/v2.1 |

+--------------+----------------------------------+

[root@controller ~]# openstack user create --domain default --password-prompt placement

User Password:

Repeat User Password:

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | default |

| enabled | True |

| id | ab7f16a5e08c4140b396f27f8fc75f69 |

| name | placement |

| options | {} |

| password_expires_at | None |

+---------------------+----------------------------------+

[root@controller ~]# openstack role add --project service --user placement admin

[root@controller ~]# openstack service create --name placement \
  --description "Placement API" placement

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | Placement API |

| enabled | True |

| id | be7f6d35fbd448c79b04d816df68e2d1 |

| name | placement |

| type | placement |

+-------------+----------------------------------+

[root@controller ~]# openstack endpoint create --region RegionOne \
  placement public http://controller:8778

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 443ad9ccf38c4930be407e6c755c37fd |

| interface | public |

| region | RegionOne |

| region_id | RegionOne |

| service_id | be7f6d35fbd448c79b04d816df68e2d1 |

| service_name | placement |

| service_type | placement |

| url | http://controller:8778 |

+--------------+----------------------------------+

[root@controller ~]# openstack endpoint create --region RegionOne \
  placement internal http://controller:8778

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 8827a5950f1a49fbb77267812daae462 |

| interface | internal |

| region | RegionOne |

| region_id | RegionOne |

| service_id | be7f6d35fbd448c79b04d816df68e2d1 |

| service_name | placement |

| service_type | placement |

| url | http://controller:8778 |

+--------------+----------------------------------+

[root@controller ~]# openstack endpoint create --region RegionOne \
  placement admin http://controller:8778

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 2e5bb38b860643f1b2bf7c2cd6ff6447 |

| interface | admin |

| region | RegionOne |

| region_id | RegionOne |

| service_id | be7f6d35fbd448c79b04d816df68e2d1 |

| service_name | placement |

| service_type | placement |

| url | http://controller:8778 |

+--------------+----------------------------------+

Install the packages

[root@controller ~]# yum install -y openstack-nova-api openstack-nova-conductor \
  openstack-nova-console openstack-nova-novncproxy \
  openstack-nova-scheduler openstack-nova-placement-api

Edit /etc/nova/nova.conf

[DEFAULT]
enabled_apis = osapi_compute,metadata
transport_url = rabbit://openstack:000000@controller
my_ip = 192.168.100.10
use_neutron = true
firewall_driver = nova.virt.firewall.NoopFirewallDriver

[api_database]
connection = mysql+pymysql://nova:000000@controller/nova_api

[database]
connection = mysql+pymysql://nova:000000@controller/nova

[placement_database]
connection = mysql+pymysql://placement:000000@controller/placement

[api]
auth_strategy = keystone

[keystone_authtoken]
auth_url = http://controller:5000/v3
memcached_servers = controller:11211
auth_type = password
project_domain_name = default
user_domain_name = default
project_name = service
username = nova
password = 000000

[vnc]
enabled = true
server_listen = $my_ip
server_proxyclient_address = $my_ip

[glance]
api_servers = http://controller:9292

[oslo_concurrency]
lock_path = /var/lib/nova/tmp

[placement]
region_name = RegionOne
project_domain_name = Default
project_name = service
auth_type = password
user_domain_name = Default
auth_url = http://controller:5000/v3
username = placement
password = 000000

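Edit /etc/httpd/conf.d/00-nova-placement-api.conf and add the following. Because of a packaging bug, access to the Placement API is otherwise denied; this is the workaround given in the official guide.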

<Directory /usr/bin>

<IfVersion >= 2.4>

Require all granted

</IfVersion>

<IfVersion < 2.4>

Order allow,deny

Allow from all

</IfVersion>

</Directory>

Restart httpd

[root@controller ~]# systemctl restart httpd

Populate the nova_api database

[root@controller ~]# su -s /bin/sh -c "nova-manage api_db sync" nova

Register the cell0 database

[root@controller ~]# su -s /bin/sh -c "nova-manage cell_v2 map_cell0" nova

Create the cell1 cell

[root@controller ~]# su -s /bin/sh -c "nova-manage cell_v2 create_cell --name=cell1 --verbose" nova

54e6c270-7390-4390-8702-02b72874c5a7

Populate the nova database

[root@controller ~]# su -s /bin/sh -c "nova-manage db sync" nova

/usr/lib/python2.7/site-packages/pymysql/cursors.py:166: Warning: (1831, u'Duplicate index `block_device_mapping_instance_uuid_virtual_name_device_name_idx`. This is deprecated and will be disallowed in a future release.')

result = self._query(query)

/usr/lib/python2.7/site-packages/pymysql/cursors.py:166: Warning: (1831, u'Duplicate index `uniq_instances0uuid`. This is deprecated and will be disallowed in a future release.')

result = self._query(query)

Verify that cell0 and cell1 are registered correctly

[root@controller ~]# su -s /bin/sh -c "nova-manage cell_v2 list_cells" nova

+-------+--------------------------------------+------------------------------------+-------------------------------------------------+----------+

| Name | UUID | Transport URL | Database Connection | Disabled |

+-------+--------------------------------------+------------------------------------+-------------------------------------------------+----------+

| cell0 | 00000000-0000-0000-0000-000000000000 | none:/ | mysql+pymysql://nova:****@controller/nova_cell0 | False |

| cell1 | 54e6c270-7390-4390-8702-02b72874c5a7 | rabbit://openstack:****@controller | mysql+pymysql://nova:****@controller/nova | False |

+-------+--------------------------------------+------------------------------------+-------------------------------------------------+----------+

Start the services and enable them at boot

[root@controller ~]# systemctl start openstack-nova-api.service \
  openstack-nova-scheduler.service openstack-nova-conductor.service \
  openstack-nova-novncproxy.service

[root@controller ~]# systemctl enable openstack-nova-api.service \
  openstack-nova-scheduler.service openstack-nova-conductor.service \
  openstack-nova-novncproxy.service

Created symlink from /etc/systemd/system/multi-user.target.wants/openstack-nova-api.service to /usr/lib/systemd/system/openstack-nova-api.service.

Created symlink from /etc/systemd/system/multi-user.target.wants/openstack-nova-scheduler.service to /usr/lib/systemd/system/openstack-nova-scheduler.service.

Created symlink from /etc/systemd/system/multi-user.target.wants/openstack-nova-conductor.service to /usr/lib/systemd/system/openstack-nova-conductor.service.

Created symlink from /etc/systemd/system/multi-user.target.wants/openstack-nova-novncproxy.service to /usr/lib/systemd/system/openstack-nova-novncproxy.service.

The official guide does not start the nova-conductor service. This service handles nova's database access, and if it is not running, instances cannot be created.

On the compute node

Install the package

[root@compute ~]# yum install openstack-nova-compute -y

Edit /etc/nova/nova.conf

[DEFAULT]

enabled_apis = osapi_compute,metadata

[DEFAULT]

transport_url = rabbit://openstack:000000@controller

[api]

auth_strategy = keystone

[keystone_authtoken]

auth_url = http://controller:5000/v3

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = nova

password = 000000

[DEFAULT]

my_ip = 192.168.100.20

[DEFAULT]

use_neutron = true

firewall_driver = nova.virt.firewall.NoopFirewallDriver

[vnc]

enabled = true

server_listen = 0.0.0.0

server_proxyclient_address = $my_ip

novncproxy_base_url = http:// 192.168.100.10:6080/vnc_auto.html

[glance]

api_servers = http://controller:9292

[oslo_concurrency]

lock_path = /var/lib/nova/tmp

[placement]

region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://controller:5000/v3

username = placement

password = 000000
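The snippets above repeat the same section header (for example [DEFAULT]) several times, so it is easy to paste a key under the wrong section. Below is a minimal sketch of a hypothetical helper, ini_get, written in plain bash and awk so it needs no extra packages. It prints the value of a key inside a given section, which lets you spot-check the edited file. It scans every occurrence of the section and prints the last value it finds. It does not attempt to replicate oslo.config's parser exactly.

```shell
#!/usr/bin/env bash
# ini_get FILE SECTION KEY  (hypothetical helper)
# Prints the value of KEY inside [SECTION] of an INI-style file.
# Repeated section headers are all scanned; the last matching value wins.
# Returns 1 if the key is not found in that section.
ini_get() {
  local file=$1 section=$2 key=$3
  awk -v sec="[$section]" -v key="$key" '
    # Section header: strip blanks and remember whether it is the one we want.
    /^[ \t]*\[/ { gsub(/[ \t]/, ""); in_sec = ($0 == sec); next }
    # Skip blank lines and comments (# or ;), then parse "key = value".
    in_sec && /^[ \t]*[^#; \t]/ {
      k = $0; sub(/=.*/, "", k); gsub(/^[ \t]+|[ \t]+$/, "", k)
      if (k == key && index($0, "=") > 0) {
        v = substr($0, index($0, "=") + 1)
        gsub(/^[ \t]+|[ \t]+$/, "", v)
        val = v; found = 1
      }
    }
    END { if (found) print val; else exit 1 }
  ' "$file"
}

# Example on the compute node:
#   ini_get /etc/nova/nova.conf vnc novncproxy_base_url
```

If the key is missing, or sits under a different section, the helper prints nothing and returns a non-zero status.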

Check whether the CPU supports hardware virtualization

# egrep -c '(vmx|svm)' /proc/cpuinfo

If the result is 0, the node does not support hardware acceleration. In that case, add the following under [libvirt] in /etc/nova/nova.conf

[libvirt]

virt_type = qemu
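The check above can be folded into a small function that picks the value for you. This is a sketch with a hypothetical name, choose_virt_type. It assumes, as the steps above imply, that the libvirt driver's default of kvm is fine when vmx or svm appears in the CPU flags, and that qemu is needed otherwise.

```shell
#!/usr/bin/env bash
# choose_virt_type [CPUINFO]  (hypothetical helper)
# Prints "kvm" if the CPU flags include vmx (Intel VT-x) or svm (AMD-V),
# otherwise "qemu" (software emulation). Mirrors the egrep check above.
choose_virt_type() {
  local cpuinfo=${1:-/proc/cpuinfo} count
  # grep -c prints 0 and exits 1 when nothing matches; "|| true" keeps set -e happy.
  count=$(grep -Ec '(vmx|svm)' "$cpuinfo" || true)
  if [ "${count:-0}" -gt 0 ]; then
    echo kvm
  else
    echo qemu
  fi
}

# Example on the compute node:
#   echo "virt_type = $(choose_virt_type)"
```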

Start the services

[root@compute ~]# systemctl start libvirtd.service openstack-nova-compute.service

[root@compute ~]# systemctl enable libvirtd.service openstack-nova-compute.service

Created symlink from /etc/systemd/system/multi-user.target.wants/openstack-nova-compute.service to /usr/lib/systemd/system/openstack-nova-compute.service.

Controller node

Confirm that the compute node is registered in the database

# . admin-openrc

[root@controller ~]# openstack compute service list --service nova-compute

+----+--------------+---------+------+---------+-------+----------------------------+

| ID | Binary | Host | Zone | Status | State | Updated At |

+----+--------------+---------+------+---------+-------+----------------------------+

| 6 | nova-compute | compute | nova | enabled | up | 2018-09-03T14:16:10.000000 |

+----+--------------+---------+------+---------+-------+----------------------------+

Discover the compute host

[root@controller ~]# su -s /bin/sh -c "nova-manage cell_v2 discover_hosts --verbose" nova

Found 2 cell mappings.

Skipping cell0 since it does not contain hosts.

Getting computes from cell 'cell1': 54e6c270-7390-4390-8702-02b72874c5a7

Checking host mapping for compute host 'compute': 39d80423-6001-4036-a546-5287c1e93ec5

Creating host mapping for compute host 'compute': 39d80423-6001-4036-a546-5287c1e93ec5

Found 1 unmapped computes in cell: 54e6c270-7390-4390-8702-02b72874c5a7

To have new compute nodes discovered automatically, add the following under [scheduler] in /etc/nova/nova.conf on the controller. The interval is in seconds.

[scheduler]

discover_hosts_in_cells_interval = 300

Install the Neutron service

Controller node

Create the database

[root@controller ~]# mysql -uroot -p000000

MariaDB [(none)]> CREATE DATABASE neutron;

MariaDB [(none)]> GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'localhost' IDENTIFIED BY '000000';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'%' IDENTIFIED BY '000000';
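Each OpenStack service in this guide gets the same three SQL statements, with only the name changing. Below is a minimal sketch of a hypothetical helper, gen_db_sql, that prints them for a given service name and password. It adds IF NOT EXISTS, which is not part of the original steps, so that re-running it does not fail when the database already exists.

```shell
#!/usr/bin/env bash
# gen_db_sql NAME PASSWORD  (hypothetical helper)
# Prints the CREATE DATABASE / GRANT statements for one service.
gen_db_sql() {
  local name=$1 pass=$2
  cat <<EOF
CREATE DATABASE IF NOT EXISTS ${name};
GRANT ALL PRIVILEGES ON ${name}.* TO '${name}'@'localhost' IDENTIFIED BY '${pass}';
GRANT ALL PRIVILEGES ON ${name}.* TO '${name}'@'%' IDENTIFIED BY '${pass}';
EOF
}

# Example on the controller:
#   gen_db_sql neutron 000000 | mysql -uroot -p000000
```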

Create the user and the service

[root@controller ~]# openstack user create --domain default --password-prompt neutron

User Password:

Repeat User Password:

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | default |

| enabled | True |

| id | d5b2036ead024ac0b09d3cf4c1b00e7c |

| name | neutron |

| options | {} |

| password_expires_at | None |

+---------------------+----------------------------------+

[root@controller ~]# openstack role add --project service --user neutron admin

[root@controller ~]# openstack service create --name neutron --description "OpenStack Networking" network

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | OpenStack Networking |

| enabled | True |

| id | bfad907188c74a6f99120124b36b5113 |

| name | neutron |

| type | network |

+-------------+----------------------------------+

[root@controller ~]# openstack endpoint create --region RegionOne network public http://controller:9696

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | bcd2134aab2d4202aa8ca0ca0de32d5a |

| interface | public |

| region | RegionOne |

| region_id | RegionOne |

| service_id | bfad907188c74a6f99120124b36b5113 |

| service_name | neutron |

| service_type | network |

| url | http://controller:9696 |

+--------------+----------------------------------+

[root@controller ~]# openstack endpoint create --region RegionOne network internal http://controller:9696

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 3ca0c46da89749cfba9b0f117e3ac201 |

| interface | internal |

| region | RegionOne |

| region_id | RegionOne |

| service_id | bfad907188c74a6f99120124b36b5113 |

| service_name | neutron |

| service_type | network |

| url | http://controller:9696 |

+--------------+----------------------------------+

[root@controller ~]# openstack endpoint create --region RegionOne network admin http://controller:9696

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | cf69a76a963b41e0a0dd327072c3b5e4 |

| interface | admin |

| region | RegionOne |

| region_id | RegionOne |

| service_id | bfad907188c74a6f99120124b36b5113 |

| service_name | neutron |

| service_type | network |

| url | http://controller:9696 |

+--------------+----------------------------------+
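The three endpoint create calls above differ only in the interface name. Below is a sketch of a hypothetical helper, create_endpoints, that loops over public, internal and admin. The DRY_RUN switch is an addition for illustration. When it is set, the commands are printed instead of run, so you can review them before touching the Keystone catalog.

```shell
#!/usr/bin/env bash
# create_endpoints TYPE URL  (hypothetical helper)
# Creates the public, internal and admin endpoints for a service type in RegionOne.
# With DRY_RUN=1 the commands are only printed.
create_endpoints() {
  local type=$1 url=$2 iface
  for iface in public internal admin; do
    if [ "${DRY_RUN:-0}" = 1 ]; then
      echo "openstack endpoint create --region RegionOne $type $iface $url"
    else
      openstack endpoint create --region RegionOne "$type" "$iface" "$url" || return 1
    fi
  done
}

# Example on the controller, with admin-openrc sourced:
#   DRY_RUN=1 create_endpoints network http://controller:9696
#   create_endpoints network http://controller:9696
```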

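Networking option 1: provider networks (configure either this option or the self-service option below; the self-service option also covers provider networks)

Install the packages

[root@controller ~]# yum install openstack-neutron openstack-neutron-ml2 openstack-neutron-linuxbridge ebtables -y

Edit /etc/neutron/neutron.conf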

[database]

connection = mysql+pymysql://neutron:000000@controller/neutron

[DEFAULT]

core_plugin = ml2

service_plugins =

[DEFAULT]

transport_url = rabbit://openstack:000000@controller

[DEFAULT]

auth_strategy = keystone

[keystone_authtoken]

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = 000000

[DEFAULT]

notify_nova_on_port_status_changes = true

notify_nova_on_port_data_changes = true

[nova]

auth_url = http://controller:5000

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = nova

password = 000000

[oslo_concurrency]

lock_path = /var/lib/neutron/tmp

Edit /etc/neutron/plugins/ml2/ml2_conf.ini

[ml2]

type_drivers = flat,vlan

[ml2]

tenant_network_types =

[ml2]

mechanism_drivers = linuxbridge

[ml2]

extension_drivers = port_security

[ml2_type_flat]

flat_networks = provider

[securitygroup]

enable_ipset = true

Edit /etc/neutron/plugins/ml2/linuxbridge_agent.ini (the provider network is mapped to eth1, the 192.168.200.0/24 interface)

[linux_bridge]

physical_interface_mappings = provider:eth1

[vxlan]

enable_vxlan = false

[securitygroup]

enable_security_group = true

firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

Edit /etc/neutron/dhcp_agent.ini

[DEFAULT]

interface_driver = linuxbridge

dhcp_driver = neutron.agent.linux.dhcp.Dnsmasq

enable_isolated_metadata = true

Networking option 2: self-service networks

Install the packages

[root@controller ~]# yum install openstack-neutron openstack-neutron-ml2 openstack-neutron-linuxbridge ebtables -y

Edit /etc/neutron/neutron.conf

[database]

connection = mysql+pymysql://neutron:000000@controller/neutron

[DEFAULT]

core_plugin = ml2

service_plugins = router

allow_overlapping_ips = true

[DEFAULT]

transport_url = rabbit://openstack:000000@controller

[DEFAULT]

auth_strategy = keystone

[keystone_authtoken]

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = 000000

[DEFAULT]

notify_nova_on_port_status_changes = true

notify_nova_on_port_data_changes = true

[nova]

auth_url = http://controller:5000

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = nova

password = 000000

[oslo_concurrency]

lock_path = /var/lib/neutron/tmp

Edit /etc/neutron/plugins/ml2/ml2_conf.ini

[ml2]

type_drivers = flat,vlan,vxlan

[ml2]

tenant_network_types = vxlan

[ml2]

mechanism_drivers = linuxbridge,l2population

[ml2]

extension_drivers = port_security

[ml2_type_flat]

flat_networks = provider

[ml2_type_vxlan]

vni_ranges = 1:1000

[securitygroup]

enable_ipset = true

Edit /etc/neutron/plugins/ml2/linuxbridge_agent.ini (local_ip is this node's address on the eth1 network)

[linux_bridge]

physical_interface_mappings = provider:eth1

[vxlan]

enable_vxlan = true

local_ip = 192.168.200.10

l2_population = true

[securitygroup]

enable_security_group = true

firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

Edit /etc/neutron/l3_agent.ini

[DEFAULT]

interface_driver = linuxbridge

Edit /etc/neutron/dhcp_agent.ini

[DEFAULT]

interface_driver = linuxbridge

dhcp_driver = neutron.agent.linux.dhcp.Dnsmasq

enable_isolated_metadata = true

Edit /etc/neutron/metadata_agent.ini

[DEFAULT]

nova_metadata_host = controller

metadata_proxy_shared_secret = METADATA_SECRET

Edit /etc/nova/nova.conf on the controller

[neutron]

url = http://controller:9696

auth_url = http://controller:5000

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = neutron

password = 000000

service_metadata_proxy = true

metadata_proxy_shared_secret = METADATA_SECRET
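METADATA_SECRET is the placeholder used in the official guide. Any string works, as long as metadata_proxy_shared_secret is identical in /etc/neutron/metadata_agent.ini and in the [neutron] section of /etc/nova/nova.conf. Below is a sketch using two hypothetical helpers. The first generates a random hex secret from /dev/urandom. The second writes that secret into both files with GNU sed -i, which is assumed to be available, as it is on CentOS 7.

```shell
#!/usr/bin/env bash
# gen_secret [BYTES]  (hypothetical helper)
# Prints 2*BYTES lowercase hex characters read from /dev/urandom.
gen_secret() {
  od -An -N"${1:-16}" -tx1 /dev/urandom | tr -d ' \n'
}

# set_shared_secret SECRET FILE...  (hypothetical helper)
# Rewrites every "metadata_proxy_shared_secret = ..." line in the given files
# in place. The secret is hex, so it needs no sed escaping.
set_shared_secret() {
  local secret=$1
  shift
  sed -i "s/^\([[:blank:]]*metadata_proxy_shared_secret[[:blank:]]*=\).*/\1 ${secret}/" "$@"
}

# Example on the controller:
#   SECRET=$(gen_secret)
#   set_shared_secret "$SECRET" /etc/neutron/metadata_agent.ini /etc/nova/nova.conf
```

After changing the secret, restart openstack-nova-api and neutron-metadata-agent so both sides pick up the new value.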

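Create the symlink (the Networking service expects /etc/neutron/plugin.ini to point to the ML2 plug-in configuration)

[root@controller ~]# ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini

Populate the database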

[root@controller ~]# su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf --config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron

INFO [alembic.runtime.migration] Context impl MySQLImpl.

INFO [alembic.runtime.migration] Will assume non-transactional DDL.

Running upgrade for neutron ...

INFO [alembic.runtime.migration] Context impl MySQLImpl.

INFO [alembic.runtime.migration] Will assume non-transactional DDL.

INFO [alembic.runtime.migration] Running upgrade -> kilo

INFO [alembic.runtime.migration] Running upgrade kilo -> 354db87e3225

INFO [alembic.runtime.migration] Running upgrade 354db87e3225 -> 599c6a226151

INFO [alembic.runtime.migration] Running upgrade 599c6a226151 -> 52c5312f6baf

INFO [alembic.runtime.migration] Running upgrade 52c5312f6baf -> 313373c0ffee

INFO [alembic.runtime.migration] Running upgrade 313373c0ffee -> 8675309a5c4f

INFO [alembic.runtime.migration] Running upgrade 8675309a5c4f -> 45f955889773

INFO [alembic.runtime.migration] Running upgrade 45f955889773 -> 26c371498592

INFO [alembic.runtime.migration] Running upgrade 26c371498592 -> 1c844d1677f7

INFO [alembic.runtime.migration] Running upgrade 1c844d1677f7 -> 1b4c6e320f79

INFO [alembic.runtime.migration] Running upgrade 1b4c6e320f79 -> 48153cb5f051

INFO [alembic.runtime.migration] Running upgrade 48153cb5f051 -> 9859ac9c136

INFO [alembic.runtime.migration] Running upgrade 9859ac9c136 -> 34af2b5c5a59

INFO [alembic.runtime.migration] Running upgrade 34af2b5c5a59 -> 59cb5b6cf4d

INFO [alembic.runtime.migration] Running upgrade 59cb5b6cf4d -> 13cfb89f881a

INFO [alembic.runtime.migration] Running upgrade 13cfb89f881a -> 32e5974ada25

INFO [alembic.runtime.migration] Running upgrade 32e5974ada25 -> ec7fcfbf72ee

INFO [alembic.runtime.migration] Running upgrade ec7fcfbf72ee -> dce3ec7a25c9

INFO [alembic.runtime.migration] Running upgrade dce3ec7a25c9 -> c3a73f615e4

INFO [alembic.runtime.migration] Running upgrade c3a73f615e4 -> 659bf3d90664

INFO [alembic.runtime.migration] Running upgrade 659bf3d90664 -> 1df244e556f5

INFO [alembic.runtime.migration] Running upgrade 1df244e556f5 -> 19f26505c74f

INFO [alembic.runtime.migration] Running upgrade 19f26505c74f -> 15be73214821

INFO [alembic.runtime.migration] Running upgrade 15be73214821 -> b4caf27aae4

INFO [alembic.runtime.migration] Running upgrade b4caf27aae4 -> 15e43b934f81

INFO [alembic.runtime.migration] Running upgrade 15e43b934f81 -> 31ed664953e6

INFO [alembic.runtime.migration] Running upgrade 31ed664953e6 -> 2f9e956e7532

INFO [alembic.runtime.migration] Running upgrade 2f9e956e7532 -> 3894bccad37f

INFO [alembic.runtime.migration] Running upgrade 3894bccad37f -> 0e66c5227a8a

INFO [alembic.runtime.migration] Running upgrade 0e66c5227a8a -> 45f8dd33480b

INFO [alembic.runtime.migration] Running upgrade 45f8dd33480b -> 5abc0278ca73

INFO [alembic.runtime.migration] Running upgrade 5abc0278ca73 -> d3435b514502

INFO [alembic.runtime.migration] Running upgrade d3435b514502 -> 30107ab6a3ee

INFO [alembic.runtime.migration] Running upgrade 30107ab6a3ee -> c415aab1c048

INFO [alembic.runtime.migration] Running upgrade c415aab1c048 -> a963b38d82f4

INFO [alembic.runtime.migration] Running upgrade kilo -> 30018084ec99

INFO [alembic.runtime.migration] Running upgrade 30018084ec99 -> 4ffceebfada

INFO [alembic.runtime.migration] Running upgrade 4ffceebfada -> 5498d17be016

INFO [alembic.runtime.migration] Running upgrade 5498d17be016 -> 2a16083502f3

INFO [alembic.runtime.migration] Running upgrade 2a16083502f3 -> 2e5352a0ad4d

INFO [alembic.runtime.migration] Running upgrade 2e5352a0ad4d -> 11926bcfe72d

INFO [alembic.runtime.migration] Running upgrade 11926bcfe72d -> 4af11ca47297

INFO [alembic.runtime.migration] Running upgrade 4af11ca47297 -> 1b294093239c

INFO [alembic.runtime.migration] Running upgrade 1b294093239c -> 8a6d8bdae39

INFO [alembic.runtime.migration] Running upgrade 8a6d8bdae39 -> 2b4c2465d44b

INFO [alembic.runtime.migration] Running upgrade 2b4c2465d44b -> e3278ee65050

INFO [alembic.runtime.migration] Running upgrade e3278ee65050 -> c6c112992c9

INFO [alembic.runtime.migration] Running upgrade c6c112992c9 -> 5ffceebfada

INFO [alembic.runtime.migration] Running upgrade 5ffceebfada -> 4ffceebfcdc

INFO [alembic.runtime.migration] Running upgrade 4ffceebfcdc -> 7bbb25278f53

INFO [alembic.runtime.migration] Running upgrade 7bbb25278f53 -> 89ab9a816d70

INFO [alembic.runtime.migration] Running upgrade a963b38d82f4 -> 3d0e74aa7d37

INFO [alembic.runtime.migration] Running upgrade 3d0e74aa7d37 -> 030a959ceafa

INFO [alembic.runtime.migration] Running upgrade 030a959ceafa -> a5648cfeeadf

INFO [alembic.runtime.migration] Running upgrade a5648cfeeadf -> 0f5bef0f87d4

INFO [alembic.runtime.migration] Running upgrade 0f5bef0f87d4 -> 67daae611b6e

INFO [alembic.runtime.migration] Running upgrade 89ab9a816d70 -> c879c5e1ee90

INFO [alembic.runtime.migration] Running upgrade c879c5e1ee90 -> 8fd3918ef6f4

INFO [alembic.runtime.migration] Running upgrade 8fd3918ef6f4 -> 4bcd4df1f426

INFO [alembic.runtime.migration] Running upgrade 4bcd4df1f426 -> b67e765a3524

INFO [alembic.runtime.migration] Running upgrade 67daae611b6e -> 6b461a21bcfc

INFO [alembic.runtime.migration] Running upgrade 6b461a21bcfc -> 5cd92597d11d

INFO [alembic.runtime.migration] Running upgrade 5cd92597d11d -> 929c968efe70

INFO [alembic.runtime.migration] Running upgrade 929c968efe70 -> a9c43481023c

INFO [alembic.runtime.migration] Running upgrade a9c43481023c -> 804a3c76314c

INFO [alembic.runtime.migration] Running upgrade 804a3c76314c -> 2b42d90729da

INFO [alembic.runtime.migration] Running upgrade 2b42d90729da -> 62c781cb6192

INFO [alembic.runtime.migration] Running upgrade 62c781cb6192 -> c8c222d42aa9

INFO [alembic.runtime.migration] Running upgrade c8c222d42aa9 -> 349b6fd605a6

INFO [alembic.runtime.migration] Running upgrade 349b6fd605a6 -> 7d32f979895f

INFO [alembic.runtime.migration] Running upgrade 7d32f979895f -> 594422d373ee

INFO [alembic.runtime.migration] Running upgrade 594422d373ee -> 61663558142c

INFO [alembic.runtime.migration] Running upgrade 61663558142c -> 867d39095bf4, port forwarding

INFO [alembic.runtime.migration] Running upgrade b67e765a3524 -> a84ccf28f06a

INFO [alembic.runtime.migration] Running upgrade a84ccf28f06a -> 7d9d8eeec6ad

INFO [alembic.runtime.migration] Running upgrade 7d9d8eeec6ad -> a8b517cff8ab

INFO [alembic.runtime.migration] Running upgrade a8b517cff8ab -> 3b935b28e7a0

INFO [alembic.runtime.migration] Running upgrade 3b935b28e7a0 -> b12a3ef66e62

INFO [alembic.runtime.migration] Running upgrade b12a3ef66e62 -> 97c25b0d2353

INFO [alembic.runtime.migration] Running upgrade 97c25b0d2353 -> 2e0d7a8a1586

INFO [alembic.runtime.migration] Running upgrade 2e0d7a8a1586 -> 5c85685d616d

OK

Start the services (nova-api is restarted so that it picks up the new [neutron] settings)

[root@controller ~]# systemctl restart openstack-nova-api

[root@controller ~]# systemctl start neutron-server.service neutron-linuxbridge-agent.service neutron-dhcp-agent.service neutron-metadata-agent.service

[root@controller ~]# systemctl enable neutron-server.service neutron-linuxbridge-agent.service neutron-dhcp-agent.service neutron-metadata-agent.service

Created symlink from /etc/systemd/system/multi-user.target.wants/neutron-server.service to /usr/lib/systemd/system/neutron-server.service.

Created symlink from /etc/systemd/system/multi-user.target.wants/neutron-linuxbridge-agent.service to /usr/lib/systemd/system/neutron-linuxbridge-agent.service.

Created symlink from /etc/systemd/system/multi-user.target.wants/neutron-dhcp-agent.service to /usr/lib/systemd/system/neutron-dhcp-agent.service.

Created symlink from /etc/systemd/system/multi-user.target.wants/neutron-metadata-agent.service to /usr/lib/systemd/system/neutron-metadata-agent.service.

If you chose self-service networks, also start the L3 agent

[root@controller ~]# systemctl start neutron-l3-agent.service

[root@controller ~]# systemctl enable neutron-l3-agent.service

Created symlink from /etc/systemd/system/multi-user.target.wants/neutron-l3-agent.service to /usr/lib/systemd/system/neutron-l3-agent.service.

Compute node

Install the packages

[root@compute ~]# yum install openstack-neutron-linuxbridge ebtables ipset -y

Edit /etc/neutron/neutron.conf

[DEFAULT]

transport_url = rabbit://openstack:000000@controller

[DEFAULT]

auth_strategy = keystone

[keystone_authtoken]

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = 000000

[oslo_concurrency]

lock_path = /var/lib/neutron/tmp

Networking option 1: provider networks (use the same option you chose on the controller)

Edit /etc/neutron/plugins/ml2/linuxbridge_agent.ini

[linux_bridge]

physical_interface_mappings = provider:eth1

[vxlan]

enable_vxlan = false

[securitygroup]

enable_security_group = true

firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

Networking option 2: self-service networks

Edit /etc/neutron/plugins/ml2/linuxbridge_agent.ini

[linux_bridge]

physical_interface_mappings = provider:eth1

[vxlan]

enable_vxlan = true

local_ip = 192.168.200.20

l2_population = true

[securitygroup]

enable_security_group = true

firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

Configure Nova to use Neutron: edit /etc/nova/nova.conf

[neutron]

url = http://controller:9696

auth_url = http://controller:5000

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = neutron

password = 000000

Start the services

[root@compute ~]# systemctl restart openstack-nova-compute

[root@compute ~]# systemctl start neutron-linuxbridge-agent.service

[root@compute ~]# systemctl enable neutron-linuxbridge-agent.service

Created symlink from /etc/systemd/system/multi-user.target.wants/neutron-linuxbridge-agent.service to /usr/lib/systemd/system/neutron-linuxbridge-agent.service.

Verify

[root@controller ~]# openstack network agent list

+--------------------------------------+--------------------+------------+-------------------+-------+-------+---------------------------+

| ID | Agent Type | Host | Availability Zone | Alive | State | Binary |

+--------------------------------------+--------------------+------------+-------------------+-------+-------+---------------------------+

| 06323fbc-0b13-4c14-a05d-d414678177bf | Linux bridge agent | controller | None | :-) | UP | neutron-linuxbridge-agent |

| 4bd1d3eb-d178-4ff5-8d3f-7307a4415209 | Linux bridge agent | compute | None | :-) | UP | neutron-linuxbridge-agent |

| 74ba6229-1449-40c7-a0de-53688fbb560a | Metadata agent | controller | None | :-) | UP | neutron-metadata-agent |

| d43e223f-c23d-4e60-88b6-ffe12243853f | DHCP agent | controller | nova | :-) | UP | neutron-dhcp-agent |

| da0e8763-8082-4a5e-8188-7161d7ad8a05 | L3 agent | controller | nova | :-) | UP | neutron-l3-agent |

+--------------------------------------+--------------------+------------+-------------------+-------+-------+---------------------------+
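With more agents, reading the Alive column by eye gets tedious. Below is a sketch of a hypothetical filter, check_agents. It reads the table exactly as printed above and reports any agent whose Alive column is not ":-)". It only parses the pipe-separated layout shown here, so a different client output format would need a different parser.

```shell
#!/usr/bin/env bash
# check_agents  (hypothetical helper)
# Reads the table printed by "openstack network agent list" on stdin and
# prints "<Agent Type> on <Host>" for every row whose Alive column is not ":-)".
# Exit status is 1 if any agent looks dead, 0 otherwise.
check_agents() {
  awk -F'|' '
    # Data rows start with "|"; skip the header row (its ID column reads "ID").
    /^[|]/ {
      id = $2; type = $3; host = $4; alive = $6
      gsub(/^ +| +$/, "", id)
      if (id == "ID") next
      gsub(/^ +| +$/, "", type); gsub(/^ +| +$/, "", host); gsub(/^ +| +$/, "", alive)
      if (alive != ":-)") { print type " on " host; bad = 1 }
    }
    END { exit (bad ? 1 : 0) }
  '
}

# Example on the controller:
#   openstack network agent list | check_agents && echo "all agents alive"
```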

Install the dashboard

Controller node

Install the packages

[root@controller ~]# yum install -y openstack-dashboard

Edit the configuration file /etc/openstack-dashboard/local_settings

OPENSTACK_HOST = "controller"

ALLOWED_HOSTS = ['*', 'localhost']

SESSION_ENGINE = 'django.contrib.sessions.backends.cache'

CACHES = {
    'default': {
        'BACKEND': 'django.core.cache.backends.memcached.MemcachedCache',
        'LOCATION': 'controller:11211',
    }
}

OPENSTACK_KEYSTONE_URL = "http://%s:5000/v3" % OPENSTACK_HOST

OPENSTACK_KEYSTONE_MULTIDOMAIN_SUPPORT = True

OPENSTACK_API_VERSIONS = {
    "identity": 3,
    "image": 2,
    "volume": 2,
}

OPENSTACK_KEYSTONE_DEFAULT_DOMAIN = "Default"

OPENSTACK_KEYSTONE_DEFAULT_ROLE = "user"

OPENSTACK_NEUTRON_NETWORK = {
    ...
    'enable_router': False,
    'enable_quotas': False,
    'enable_distributed_router': False,
    'enable_ha_router': False,
    'enable_lb': False,
    'enable_firewall': False,
    'enable_vpn': False,
    'enable_fip_topology_check': False,
}

Add the following line to /etc/httpd/conf.d/openstack-dashboard.conf if it is not already there

WSGIApplicationGroup %{GLOBAL}
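Because this line should only be added when it is missing, a tiny idempotent helper avoids duplicating it if the step is repeated. Below is a sketch with the hypothetical name ensure_line. It compares whole lines literally, so a copy of the line that differs only in whitespace would not be detected.

```shell
#!/usr/bin/env bash
# ensure_line LINE FILE  (hypothetical helper)
# Appends LINE to FILE only if an identical line is not already present.
ensure_line() {
  grep -qxF -- "$1" "$2" 2>/dev/null || printf '%s\n' "$1" >> "$2"
}

# Example on the controller:
#   ensure_line 'WSGIApplicationGroup %{GLOBAL}' /etc/httpd/conf.d/openstack-dashboard.conf
```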

Restart the services

[root@controller ~]# systemctl restart httpd.service memcached.service

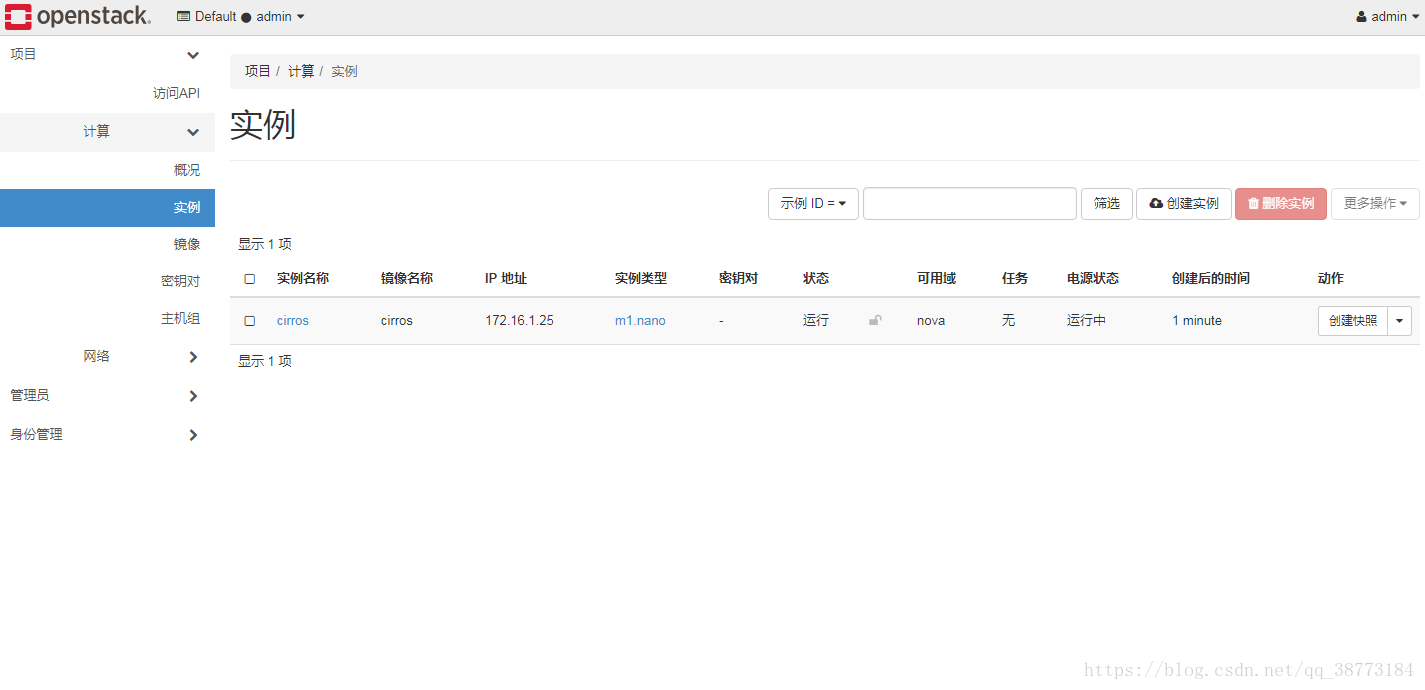

Verify

Open http://192.168.100.10/dashboard in a browser.

Create an instance

Create the provider network

[root@controller ~]# . admin-openrc

[root@controller ~]# openstack network create --share --external --provider-physical-network provider --provider-network-type flat provider

+---------------------------+--------------------------------------+

| Field | Value |

+---------------------------+--------------------------------------+

| admin_state_up | UP |

| availability_zone_hints | |

| availability_zones | |

| created_at | 2018-09-03T15:02:08Z |

| description | |

| dns_domain | None |

| id | 2aa01a54-8f0b-4d13-a831-24c752fd0487 |

| ipv4_address_scope | None |

| ipv6_address_scope | None |

| is_default | False |

| is_vlan_transparent | None |

| mtu | 1500 |

| name | provider |

| port_security_enabled | True |

| project_id | 1a74d2a87e734feea8577477955e0b06 |

| provider:network_type | flat |

| provider:physical_network | provider |

| provider:segmentation_id | None |

| qos_policy_id | None |

| revision_number | 0 |

| router:external | External |

| segments | None |

| shared | True |

| status | ACTIVE |

| subnets | |

| tags | |

| updated_at | 2018-09-03T15:02:08Z |

+---------------------------+--------------------------------------+

Create the subnet

[root@controller ~]# openstack subnet create --network provider --allocation-pool start=192.168.200.100,end=192.168.200.200 --dns-nameserver 114.114.114.114 --gateway 192.168.200.1 --subnet-range 192.168.200.0/24 provider

+-------------------+--------------------------------------+

| Field | Value |

+-------------------+--------------------------------------+

| allocation_pools | 192.168.200.100-192.168.200.200 |

| cidr | 192.168.200.0/24 |

| created_at | 2018-09-03T15:03:51Z |

| description | |

| dns_nameservers | 114.114.114.114 |

| enable_dhcp | True |

| gateway_ip | 192.168.200.1 |

| host_routes | |

| id | 4d67937d-43ef-4a7f-941c-5dbef19732be |

| ip_version | 4 |

| ipv6_address_mode | None |

| ipv6_ra_mode | None |

| name | provider |

| network_id | 2aa01a54-8f0b-4d13-a831-24c752fd0487 |

| project_id | 1a74d2a87e734feea8577477955e0b06 |

| revision_number | 0 |

| segment_id | None |

| service_types | |

| subnetpool_id | None |

| tags | |

| updated_at | 2018-09-03T15:03:51Z |

+-------------------+--------------------------------------+
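The allocation pool 192.168.200.100-192.168.200.200 must lie inside 192.168.200.0/24 and must not contain the gateway 192.168.200.1. It also stays clear of the node addresses 192.168.200.10 and 192.168.200.20 used on eth1. Below is a sketch of that arithmetic in bash using two hypothetical helpers, ip2int and check_pool. It checks only the pool and gateway conditions, not overlap with the node addresses.

```shell
#!/usr/bin/env bash
# ip2int A.B.C.D  (hypothetical helper): prints the address as a 32-bit integer.
ip2int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# check_pool CIDR POOL_START POOL_END GATEWAY  (hypothetical helper)
# Succeeds if pool start, pool end and gateway all fall inside CIDR,
# start <= end, and the gateway is outside the allocation pool.
check_pool() {
  local net=${1%/*} len=${1#*/}
  local mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  local base=$(( $(ip2int "$net") & mask ))
  local s e g
  s=$(ip2int "$2"); e=$(ip2int "$3"); g=$(ip2int "$4")
  [ $(( s & mask )) -eq "$base" ] && [ $(( e & mask )) -eq "$base" ] &&
    [ $(( g & mask )) -eq "$base" ] && [ "$s" -le "$e" ] &&
    { [ "$g" -lt "$s" ] || [ "$g" -gt "$e" ]; }
}

# The values used for the provider subnet above:
#   check_pool 192.168.200.0/24 192.168.200.100 192.168.200.200 192.168.200.1 && echo ok
```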

Create the self-service network

[root@controller ~]# openstack network create selfservice

+---------------------------+--------------------------------------+

| Field | Value |

+---------------------------+--------------------------------------+

| admin_state_up | UP |

| availability_zone_hints | |

| availability_zones | |

| created_at | 2018-09-03T15:04:12Z |

| description | |

| dns_domain | None |

| id | 1c5078e9-8dbb-47d7-976d-5ac1d8b35181 |

| ipv4_address_scope | None |

| ipv6_address_scope | None |

| is_default | False |

| is_vlan_transparent | None |

| mtu | 1450 |

| name | selfservice |

| port_security_enabled | True |

| project_id | 1a74d2a87e734feea8577477955e0b06 |

| provider:network_type | vxlan |

| provider:physical_network | None |

| provider:segmentation_id | 89 |

| qos_policy_id | None |

| revision_number | 1 |

| router:external | Internal |

| segments | None |

| shared | False |

| status | ACTIVE |

| subnets | |

| tags | |

| updated_at | 2018-09-03T15:04:12Z |

+---------------------------+--------------------------------------+

[root@controller ~]# openstack subnet create --network selfservice --dns-nameserver 8.8.4.4 --gateway 172.16.1.1 --subnet-range 172.16.1.0/24 selfservice

+-------------------+--------------------------------------+

| Field | Value |

+-------------------+--------------------------------------+

| allocation_pools | 172.16.1.2-172.16.1.254 |

| cidr | 172.16.1.0/24 |

| created_at | 2018-09-03T15:04:19Z |

| description | |

| dns_nameservers | 8.8.4.4 |

| enable_dhcp | True |

| gateway_ip | 172.16.1.1 |

| host_routes | |

| id | fd6791d8-7a53-43fe-bc35-45168dbd13f0 |

| ip_version | 4 |

| ipv6_address_mode | None |

| ipv6_ra_mode | None |

| name | selfservice |

| network_id | 1c5078e9-8dbb-47d7-976d-5ac1d8b35181 |

| project_id | 1a74d2a87e734feea8577477955e0b06 |

| revision_number | 0 |

| segment_id | None |

| service_types | |

| subnetpool_id | None |

| tags | |

| updated_at | 2018-09-03T15:04:19Z |

+-------------------+--------------------------------------+

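Create a router

[root@controller ~]# openstack router create router

Add the self-service subnet to the router as an interface

[root@controller ~]# openstack router add subnet router selfservice

Set the provider network as the router's external gateway

[root@controller ~]# openstack router set router --external-gateway provider

Create a flavor

[root@controller ~]# openstack flavor create --id 0 --vcpus 1 --ram 64 --disk 1 m1.nano

Launch an instance on the self-service network

The net-id below is the ID of the selfservice network, as shown by openstack network list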

[root@controller ~]# openstack server create --flavor m1.nano --image cirros --nic net-id=1c5078e9-8dbb-47d7-976d-5ac1d8b35181 cirros

+-------------------------------------+-----------------------------------------------+

| Field | Value |

+-------------------------------------+-----------------------------------------------+

| OS-DCF:diskConfig | MANUAL |

| OS-EXT-AZ:availability_zone | |

| OS-EXT-SRV-ATTR:host | None |

| OS-EXT-SRV-ATTR:hypervisor_hostname | None |

| OS-EXT-SRV-ATTR:instance_name | |

| OS-EXT-STS:power_state | NOSTATE |

| OS-EXT-STS:task_state | scheduling |

| OS-EXT-STS:vm_state | building |

| OS-SRV-USG:launched_at | None |

| OS-SRV-USG:terminated_at | None |

| accessIPv4 | |

| accessIPv6 | |

| addresses | |

| adminPass | Y3Vh6RnFq4C7 |

| config_drive | |

| created | 2018-09-03T15:08:50Z |

| flavor | m1.nano (0) |

| hostId | |

| id | 38339165-fb68-4657-8ca6-457370a2202e |

| image | cirros (8faa9dc9-7f29-4570-ae87-9bab0d01aa63) |

| key_name | None |

| name | cirros |

| progress | 0 |

| project_id | 1a74d2a87e734feea8577477955e0b06 |

| properties | |

| security_groups | name='default' |

| status | BUILD |

| updated | 2018-09-03T15:08:50Z |

| user_id | 5238d646322346be9e3f9750422bcf4d |

| volumes_attached | |

+-------------------------------------+-----------------------------------------------+
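Right after server create, the instance is still in BUILD, which is why its status is checked again below. Below is a minimal sketch of a hypothetical polling helper, wait_for_status. It re-runs any command that prints a single status word until that command prints the expected value, and it stops early on ERROR. The usage comment relies on the openstack client's -f value -c output options. It also looks up the network ID by name instead of copying it from openstack network list.

```shell
#!/usr/bin/env bash
# wait_for_status WANT TRIES DELAY CMD [ARGS...]  (hypothetical helper)
# Runs CMD (which should print one status word) up to TRIES times,
# DELAY seconds apart, until it prints WANT. Fails early on ERROR.
wait_for_status() {
  local want=$1 tries=$2 delay=$3 i status
  shift 3
  for ((i = 1; i <= tries; i++)); do
    status=$("$@")
    [ "$status" = "$want" ] && return 0
    [ "$status" = ERROR ] && return 1
    sleep "$delay"
  done
  return 1
}

# Example on the controller:
#   NET_ID=$(openstack network show selfservice -f value -c id)
#   openstack server create --flavor m1.nano --image cirros --nic net-id="$NET_ID" cirros
#   wait_for_status ACTIVE 30 2 openstack server show cirros -f value -c status
```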

Check that the instance is running

[root@controller ~]# openstack server list

+--------------------------------------+--------+--------+-------------------------+--------+---------+

| ID | Name | Status | Networks | Image | Flavor |

+--------------------------------------+--------+--------+-------------------------+--------+---------+

| 38339165-fb68-4657-8ca6-457370a2202e | cirros | ACTIVE | selfservice=172.16.1.25 | cirros | m1.nano |

+--------------------------------------+--------+--------+-------------------------+--------+---------+

That is as far as I have gone for now; I will dig into the rest when I have time.

Finally

The above is the complete walkthrough of installing OpenStack Rocky on CentOS 7.5, collected and compiled by 勤恳身影.
