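Contents

High-Availability Topology

Pre-installation Configuration

Install kubeadm (ALL NODES)

Deploy the External etcd Nodes

Install the HAProxy Proxy Service

Initialize the Control Plane (MASTER NODE)

Initialize Master-01

Install the Network Plugin: Flannel

Join the Other Master Nodes to the Control Plane

Join Worker Nodes to the Cluster (WORKER NODE)

Schedule a Container with Kubernetes (Verify the Cluster Works)

References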

High-Availability Topology

| Hostname/IP address | Role 1 | Role 2 | K8S version | etcd version |
| --- | --- | --- | --- | --- |
| master-01/192.168.35.7 | Master node | etcd node | v1.20.4 | v3.4.14 |
| master-02/192.168.35.8 | Master node | etcd node | v1.20.4 | v3.4.14 |
| master-03/192.168.35.9 | Master node | etcd node | v1.20.4 | v3.4.14 |
| node-01/192.168.35.10 | Worker node | HAProxy load balancer | v1.19.3 | - |

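Pre-installation Configuration

See “[K8S 1] Quickly Deploying a Kubernetes Cluster with kubeadm (Single Master)”.

Install kubeadm (ALL NODES)

See “[K8S 1] Quickly Deploying a Kubernetes Cluster with kubeadm (Single Master)”.

Deploy the External etcd Nodes

Note: with Kubernetes 1.20.4, after Master-01 was initialized, creating the etcd Pod failed whenever another Master node joined the cluster, so I deployed an external etcd cluster by hand. I am not sure whether this is a bug in 1.20.4 or a mistake on my part. If you have successfully built the cluster with local (stacked) etcd, please leave me a comment. Thanks!

See “[K8S etcd] Deploying an etcd 3.4.14 Cluster”.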
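Before kubeadm is pointed at the external etcd cluster, it can help to confirm that every member answers over TLS with the same client certificate kube-apiserver will later use. The Python sketch below is one way to do that. It assumes each member serves GET /health on its client URL and replies with JSON such as {"health":"true"}, and it reuses the endpoint list and certificate paths from the kubeadm-config.yaml shown later in this article; `parse_health` and `check_member` are hypothetical helper names, not part of any etcd tooling.

```python
import json
import ssl
import urllib.request

# Endpoints and certificate paths copied from kubeadm-config.yaml in this article.
ETCD_ENDPOINTS = [
    "https://192.168.35.7:2379",
    "https://192.168.35.8:2379",
    "https://192.168.35.9:2379",
]
CA_FILE = "/opt/etcd/tls-certs/ca.pem"
CERT_FILE = "/opt/etcd/tls-certs/etcd.pem"
KEY_FILE = "/opt/etcd/tls-certs/etcd-key.pem"


def parse_health(body: bytes) -> bool:
    """Return True if a /health response body reports a healthy member.

    Assumption: the body is JSON like {"health": "true"}.
    """
    try:
        data = json.loads(body)
    except ValueError:  # covers JSONDecodeError and undecodable bytes
        return False
    return isinstance(data, dict) and data.get("health") == "true"


def check_member(endpoint: str, timeout: float = 3.0) -> bool:
    """GET <endpoint>/health with mutual TLS; any network/TLS/file error counts as unhealthy."""
    try:
        ctx = ssl.create_default_context(cafile=CA_FILE)
        ctx.load_cert_chain(certfile=CERT_FILE, keyfile=KEY_FILE)
        with urllib.request.urlopen(endpoint + "/health", context=ctx, timeout=timeout) as resp:
            return parse_health(resp.read())
    except OSError:  # URLError, HTTPError, ssl.SSLError, missing cert files
        return False
```

For example, `print({ep: check_member(ep) for ep in ETCD_ENDPOINTS})` run on a Master node should show True for all three members once the cluster is up. If etcdctl is installed, `etcdctl endpoint health` with the matching `--endpoints`, `--cacert`, `--cert` and `--key` flags does the same job.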

Install the HAProxy Proxy Service

Install HAProxy:

```
# yum -y install haproxy
```

Configure HAProxy:

```
# vi /etc/haproxy/haproxy.cfg
```

```
global
    log         127.0.0.1 local2
    chroot      /var/lib/haproxy
    pidfile     /var/run/haproxy.pid
    maxconn     4000
    user        haproxy
    group       haproxy
    daemon

defaults
    mode                    tcp
    log                     global
    retries                 3
    timeout connect         10s
    timeout client          1m
    timeout server          1m

frontend kube-apiserver
    bind *:6443             # frontend listening port
    mode tcp
    default_backend master

backend master              # backend servers and ports; load balancing is round robin
    balance roundrobin
    server master-01 192.168.35.7:6443 check maxconn 2000
    server master-02 192.168.35.8:6443 check maxconn 2000
    server master-03 192.168.35.9:6443 check maxconn 2000
```

Start HAProxy and enable it at boot:

```
# systemctl start haproxy
# systemctl enable haproxy
```
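Once HAProxy is running, a quick TCP probe shows whether the frontend on node-01 and each kube-apiserver backend are listening. The sketch below assumes Python 3 is available on the machine you test from and takes its addresses from the topology table; `tcp_reachable` and `check_all` are hypothetical names. A successful connect only proves that something is listening: before kubeadm init the backends will report False, and the HAProxy frontend can accept a connection even when no backend is up.

```python
import socket

# Addresses taken from the topology table and haproxy.cfg in this article.
HAPROXY_FRONTEND = ("192.168.35.10", 6443)
APISERVER_BACKENDS = {
    "master-01": ("192.168.35.7", 6443),
    "master-02": ("192.168.35.8", 6443),
    "master-03": ("192.168.35.9", 6443),
}


def tcp_reachable(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, or timed out
        return False


def check_all(timeout: float = 2.0) -> dict:
    """Probe the HAProxy frontend and every apiserver backend; returns {name: reachable}."""
    targets = {"haproxy-frontend": HAPROXY_FRONTEND, **APISERVER_BACKENDS}
    return {name: tcp_reachable(host, port, timeout) for name, (host, port) in targets.items()}
```

Running `print(check_all())` after all Masters are initialized should report True for every entry.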

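Initialize the Control Plane (MASTER NODE)

Generate a default configuration file that also includes the kube-proxy component configuration, then edit it:

```
kubeadm config print init-defaults --component-configs KubeProxyConfiguration > kubeadm-config.yaml
```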

```yaml
apiVersion: kubeadm.k8s.io/v1beta2
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: liuyll.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.35.7
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  name: master-01
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controlPlaneEndpoint: 192.168.35.10:6443  # required when deploying an HA Master cluster
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  external:
    endpoints:
    - "https://192.168.35.7:2379"
    - "https://192.168.35.8:2379"
    - "https://192.168.35.9:2379"
    caFile: /opt/etcd/tls-certs/ca.pem
    certFile: /opt/etcd/tls-certs/etcd.pem
    keyFile: /opt/etcd/tls-certs/etcd-key.pem
imageRepository: registry.aliyuncs.com/google_containers
kind: ClusterConfiguration
kubernetesVersion: v1.20.0
networking:
  dnsDomain: cluster.local
  podSubnet: 172.254.0.0/16
  serviceSubnet: 10.254.0.0/16
scheduler: {}
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
iptables:
  masqueradeAll: false
ipvs:
  minSyncPeriod: 0s
  scheduler: "rr"
kind: KubeProxyConfiguration
mode: "ipvs"
```
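A few values in this file are easy to get wrong, so a small check before kubeadm init can save a failed run. The Python sketch below checks the bootstrap token against kubeadm's documented format ([a-z0-9]{6}.[a-z0-9]{16}), checks that podSubnet, serviceSubnet and the node network do not overlap, and derives the first address of serviceSubnet, which becomes the ClusterIP of the kubernetes Service and shows up as 10.254.0.1 in the apiserver certificate printed by kubeadm init. The node network 192.168.35.0/24 is an assumption inferred from the host IPs, and all helper names are hypothetical.

```python
import ipaddress
import re

# Bootstrap token format: 6 chars, a dot, 16 chars, all from [a-z0-9].
TOKEN_RE = re.compile(r"[a-z0-9]{6}\.[a-z0-9]{16}")

# podSubnet/serviceSubnet come from kubeadm-config.yaml; nodeNetwork is an
# assumption inferred from the 192.168.35.x host addresses.
NETWORKS = {
    "podSubnet": "172.254.0.0/16",
    "serviceSubnet": "10.254.0.0/16",
    "nodeNetwork": "192.168.35.0/24",
}


def valid_bootstrap_token(token: str) -> bool:
    """True if the whole string matches the bootstrap token format."""
    return TOKEN_RE.fullmatch(token) is not None


def overlapping_pairs(networks: dict) -> list:
    """Return (name_a, name_b) pairs, in sorted name order, whose CIDR ranges overlap."""
    nets = {name: ipaddress.ip_network(cidr) for name, cidr in networks.items()}
    names = sorted(nets)
    return [
        (a, b)
        for i, a in enumerate(names)
        for b in names[i + 1:]
        if nets[a].overlaps(nets[b])
    ]


def apiserver_service_ip(service_subnet: str) -> str:
    """First usable address of serviceSubnet (the kubernetes Service ClusterIP)."""
    return str(next(ipaddress.ip_network(service_subnet).hosts()))
```

With the values above, `valid_bootstrap_token("liuyll.0123456789abcdef")` holds, `overlapping_pairs(NETWORKS)` is empty, and `apiserver_service_ip("10.254.0.0/16")` matches the SAN seen in the init output.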

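Copy kubeadm-config.yaml to every Master node, then run the following command on each to pull the Kubernetes images (kube-apiserver, kube-controller-manager, kube-scheduler, kube-proxy, coredns, pause) in advance:

```
kubeadm config images pull --config=kubeadm-config.yaml
```

Initialize Master-01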

```
# kubeadm init --config=kubeadm-config.yaml --upload-certs | tee kubeadm-init.log
[init] Using Kubernetes version: v1.20.0
[preflight] Running pre-flight checks
[preflight] Pulling images required for setting up a Kubernetes cluster
[preflight] This might take a minute or two, depending on the speed of your internet connection
[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'
[certs] Using certificateDir folder "/etc/kubernetes/pki"
[certs] Generating "ca" certificate and key
[certs] Generating "apiserver" certificate and key
[certs] apiserver serving cert is signed for DNS names [kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local master-01] and IPs [10.254.0.1 192.168.35.7 192.168.35.10]
[certs] Generating "apiserver-kubelet-client" certificate and key
[certs] Generating "front-proxy-ca" certificate and key
[certs] Generating "front-proxy-client" certificate and key
[certs] External etcd mode: Skipping etcd/ca certificate authority generation
[certs] External etcd mode: Skipping etcd/server certificate generation
[certs] External etcd mode: Skipping etcd/peer certificate generation
[certs] External etcd mode: Skipping etcd/healthcheck-client certificate generation
[certs] External etcd mode: Skipping apiserver-etcd-client certificate generation
[certs] Generating "sa" key and public key
[kubeconfig] Using kubeconfig folder "/etc/kubernetes"
[kubeconfig] Writing "admin.conf" kubeconfig file
[kubeconfig] Writing "kubelet.conf" kubeconfig file
[kubeconfig] Writing "controller-manager.conf" kubeconfig file
[kubeconfig] Writing "scheduler.conf" kubeconfig file
[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
[kubelet-start] Starting the kubelet
[control-plane] Using manifest folder "/etc/kubernetes/manifests"
[control-plane] Creating static Pod manifest for "kube-apiserver"
[control-plane] Creating static Pod manifest for "kube-controller-manager"
[control-plane] Creating static Pod manifest for "kube-scheduler"
[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s
[kubelet-check] Initial timeout of 40s passed.
[apiclient] All control plane components are healthy after 62.010428 seconds
[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace
[kubelet] Creating a ConfigMap "kubelet-config-1.20" in namespace kube-system with the configuration for the kubelets in the cluster
[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace
[upload-certs] Using certificate key:
684fca0d14f339aa71c85b9830ea8610db8ec58900bd84678b03e643091d9b89
[mark-control-plane] Marking the node master-01 as control-plane by adding the labels "node-role.kubernetes.io/master=''" and "node-role.kubernetes.io/control-plane='' (deprecated)"
[mark-control-plane] Marking the node master-01 as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]
[bootstrap-token] Using token: liuyll.0123456789abcdef
[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles
[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to get nodes
[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials
[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token
[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster
[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace
[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key
[addons] Applied essential addon: CoreDNS
[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

  export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of the control-plane node running the following command on each as root:

  kubeadm join 192.168.35.10:6443 --token liuyll.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:49df97b238db81a6a9cbdfce9e22945c49677b71868038ee4829e850bb5411fc \
    --control-plane --certificate-key 684fca0d14f339aa71c85b9830ea8610db8ec58900bd84678b03e643091d9b89

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!
As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use
"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.35.10:6443 --token liuyll.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:49df97b238db81a6a9cbdfce9e22945c49677b71868038ee4829e850bb5411fc
```
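Because the output was saved with tee, the join parameters can be pulled back out of kubeadm-init.log when scripting the remaining joins. The Python sketch below is one way to do it, assuming the log layout shown above; `parse_join_commands` is a hypothetical helper. Keep in mind that, as the output itself says, the uploaded certificates are deleted after two hours (rerun `kubeadm init phase upload-certs --upload-certs` for a fresh certificate key), and the bootstrap token in this configuration has a ttl of 24h.

```python
import re

# Matches both join commands printed by kubeadm init; the control-plane
# part is optional, so worker joins match too. Line continuations ("\")
# and the whitespace around them are tolerated.
JOIN_RE = re.compile(
    r"kubeadm join (?P<endpoint>\S+)\s+--token (?P<token>\S+)\s*\\?\s*"
    r"--discovery-token-ca-cert-hash (?P<ca_hash>sha256:[0-9a-f]{64})"
    r"(?:\s*\\?\s*--control-plane --certificate-key (?P<cert_key>[0-9a-f]{64}))?"
)


def parse_join_commands(log_text: str) -> list:
    """Return one dict per 'kubeadm join' command found in the log text."""
    commands = []
    for match in JOIN_RE.finditer(log_text):
        info = match.groupdict()
        # cert_key is None for the worker join command.
        info["control_plane"] = info["cert_key"] is not None
        commands.append(info)
    return commands
```

Typical use is `parse_join_commands(open("kubeadm-init.log").read())`, then building the command line for each remaining Master from the first (control-plane) entry.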

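Install the Network Plugin: Flannel

Note: after Master-01 has been initialized, install the network plugin first and only then join the other Master nodes to the control plane. Otherwise, when Flannel is installed, the Flannel Pod is created and starts successfully on only one Master node; on all other nodes it fails and keeps restarting.

See “[K8S 1] Quickly Deploying a Kubernetes Cluster with kubeadm (Single Master)”.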
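One thing to double-check when applying Flannel here: to my knowledge the upstream kube-flannel.yml sets "Network": "10.244.0.0/16" in its net-conf.json, whereas this cluster's podSubnet is 172.254.0.0/16, and Flannel's Network is generally expected to match the Pod CIDR that kubeadm configures. The sketch below reads that value out of a manifest and compares it with podSubnet. It is a hypothetical helper based on a simple regular expression, not a real YAML parser, and assumes the manifest contains a single "Network" entry.

```python
import ipaddress
import re

# Finds the "Network" value inside the net-conf.json block of the manifest.
NETWORK_RE = re.compile(r'"Network"\s*:\s*"([^"]+)"')


def flannel_network(manifest_text: str) -> str:
    """Return the first "Network" CIDR found in a Flannel manifest."""
    match = NETWORK_RE.search(manifest_text)
    if match is None:
        raise ValueError('no "Network" entry found in the manifest')
    return match.group(1)


def matches_pod_subnet(manifest_text: str, pod_subnet: str) -> bool:
    """True if Flannel's Network is the same CIDR as kubeadm's podSubnet."""
    return ipaddress.ip_network(flannel_network(manifest_text)) == ipaddress.ip_network(pod_subnet)
```

For example, `matches_pod_subnet(open("kube-flannel.yml").read(), "172.254.0.0/16")` returning False means the Network value should be edited before `kubectl apply`.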

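Join the Other Master Nodes to the Control Plane

After the network plugin is installed, run kubectl get po -n kube-system and wait until every Pod is Running. Then, following the hints printed when Master-01 was initialized, run the command below on each of the other Master nodes to join them to the cluster.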

```
# kubeadm join 192.168.35.10:6443 --token liuyll.0123456789abcdef \
> --discovery-token-ca-cert-hash sha256:49df97b238db81a6a9cbdfce9e22945c49677b71868038ee4829e850bb5411fc \
> --control-plane --certificate-key 684fca0d14f339aa71c85b9830ea8610db8ec58900bd84678b03e643091d9b89
[preflight] Running pre-flight checks
[preflight] Reading configuration from the cluster...
[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'
[preflight] Running pre-flight checks before initializing the new control plane instance
[preflight] Pulling images required for setting up a Kubernetes cluster
[preflight] This might take a minute or two, depending on the speed of your internet connection
[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'
[download-certs] Downloading the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace
[certs] Using certificateDir folder "/etc/kubernetes/pki"
[certs] Generating "apiserver" certificate and key
[certs] apiserver serving cert is signed for DNS names [kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local master-02] and IPs [10.254.0.1 192.168.35.8 192.168.35.10]
[certs] Generating "apiserver-kubelet-client" certificate and key
[certs] Generating "front-proxy-client" certificate and key
[certs] Valid certificates and keys now exist in "/etc/kubernetes/pki"
[certs] Using the existing "sa" key
[kubeconfig] Generating kubeconfig files
[kubeconfig] Using kubeconfig folder "/etc/kubernetes"
[kubeconfig] Writing "admin.conf" kubeconfig file
[kubeconfig] Writing "controller-manager.conf" kubeconfig file
[kubeconfig] Writing "scheduler.conf" kubeconfig file
[control-plane] Using manifest folder "/etc/kubernetes/manifests"
[control-plane] Creating static Pod manifest for "kube-apiserver"
[control-plane] Creating static Pod manifest for "kube-controller-manager"
[control-plane] Creating static Pod manifest for "kube-scheduler"
[check-etcd] Skipping etcd check in external mode
[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
[kubelet-start] Starting the kubelet
[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...
[control-plane-join] using external etcd - no local stacked instance added
[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace
[mark-control-plane] Marking the node master-02 as control-plane by adding the labels "node-role.kubernetes.io/master=''" and "node-role.kubernetes.io/control-plane='' (deprecated)"
[mark-control-plane] Marking the node master-02 as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

This node has joined the cluster and a new control plane instance was created:

* Certificate signing request was sent to apiserver and approval was received.
* The Kubelet was informed of the new secure connection details.
* Control plane (master) label and taint were applied to the new node.
* The Kubernetes control plane instances scaled up.

To start administering your cluster from this node, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

Run 'kubectl get nodes' to see this node join the cluster.
```

Once all the other Master nodes have joined the cluster, run kubectl get nodes to check:

```
# kubectl get node
NAME        STATUS   ROLES                  AGE     VERSION
master-01   Ready    control-plane,master   6h15m   v1.20.4
master-02   Ready    control-plane,master   6h11m   v1.20.4
master-03   Ready    control-plane,master   6h10m   v1.20.4
```
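When more nodes are added later, or the check has to run unattended, the same output can be verified in a script. The Python sketch below parses the plain-text table printed by kubectl get nodes and lists every expected node that is missing or whose STATUS is not exactly Ready. The helper names are hypothetical, and the sketch assumes the default column layout shown above with its header row.

```python
def parse_nodes(output: str) -> dict:
    """Map NAME -> STATUS from the table printed by `kubectl get nodes` (header row required)."""
    lines = [ln for ln in output.strip().splitlines() if ln.strip()]
    header = lines[0].split()
    name_i, status_i = header.index("NAME"), header.index("STATUS")
    return {cols[name_i]: cols[status_i] for cols in (ln.split() for ln in lines[1:])}


def not_ready(output: str, expected: list) -> list:
    """Expected nodes that are absent or not exactly 'Ready'.

    Note: a cordoned node shows 'Ready,SchedulingDisabled' and is reported here too.
    """
    status = parse_nodes(output)
    return [name for name in expected if status.get(name) != "Ready"]
```

The input can come from `subprocess.run(["kubectl", "get", "nodes"], capture_output=True, text=True).stdout`, with the expected names taken from the topology table; an empty result means every listed node is Ready.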

Join Worker Nodes to the Cluster (WORKER NODE)

See “[K8S 1] Quickly Deploying a Kubernetes Cluster with kubeadm (Single Master)”.

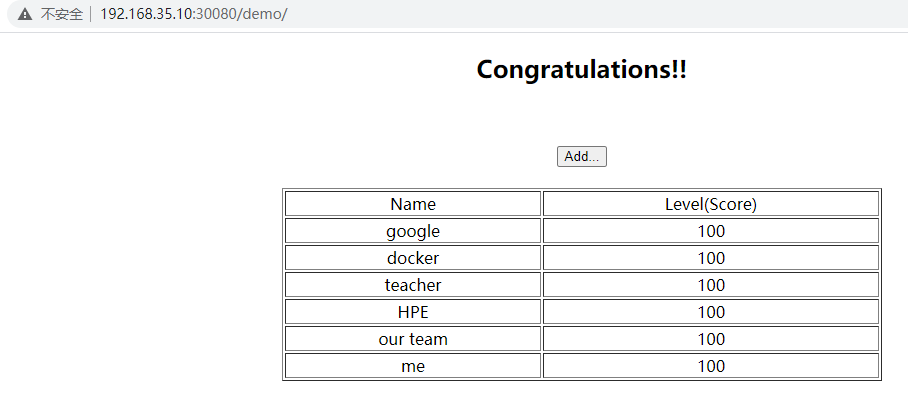

Schedule a Container with Kubernetes (Verify the Cluster Works)

See “[K8S 1] Quickly Deploying a Kubernetes Cluster with kubeadm (Single Master)”.

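References

kubeadm configuration file

Using external etcd with kubeadm

Production environment - Installing Kubernetes with deployment tools: Bootstrapping clusters with kubeadm

Best practices - Validate node setup: Node conformance test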

Finally

That is the full content of “[K8S 5] Quickly Deploying a Kubernetes Cluster with kubeadm (Master HA Cluster)”, recently collected and compiled by 自由猎豹. For more K8S content, search other articles on 靠谱客.
