Memory management is perhaps the hardest part of the Linux operating system, because it is so abstract.

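Page frame: the paging unit divides all of RAM into fixed-length page frames. A page frame is a part of main memory, and therefore a storage area.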

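Page: each page frame holds one page, so a page and a page frame have the same length. A page is just a block of data; it can be stored in any page frame or on disk.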

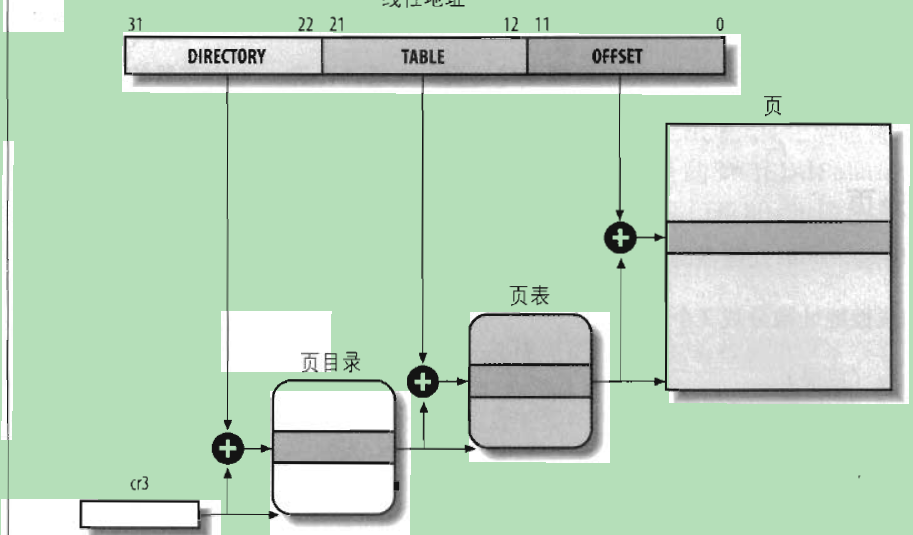

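Page directory and page table: a linear address is translated in two steps, each based on a translation table. The first table is called the page directory; the second is called the page table.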

Figure taken from 《深入理解linux》 (Understanding the Linux Kernel).


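Each page directory entry holds the physical address of a page table divided by 4K, i.e. the page table's frame number, stored in bits 31..12 (bits 11..0 hold flags).

Each page table entry holds the physical address of a page frame divided by 4K, in the same layout.

Example: to map the page at linear address 0xC0010000 to the page frame at physical address 0x00010000 (32-bit x86, two-level paging, 4 KB pages):

At byte offset 0xC00 (0b110000000000) from the page directory's physical base, i.e. entry 0x300 = 0xC0010000 >> 22, write the page table's physical address divided by 4K.

Then, at byte offset 0x40 (0b0001000000) from the page table's physical base, i.e. entry 0x10 = (0xC0010000 >> 12) & 0x3FF, write the page frame's physical address divided by 4K (0x10).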

do_page_fault

=>if (!user_mode(regs) && (address >= TASK_SIZE))

return SIGSEGV;// kernel-mode fault on a kernel address (>= TASK_SIZE): bail out

=>vma = find_vma(mm, address);

if (vma->vm_start <= address)

goto good_area;

=>ret = handle_mm_fault(mm, vma, address, is_write ? FAULT_FLAG_WRITE : 0);

=>__set_current_state(TASK_RUNNING);

=>pgd = pgd_offset(mm, address);

pud = pud_alloc(mm, pgd, address);

pmd = pmd_alloc(mm, pud, address);

pte = pte_alloc_map(mm, pmd, address);

=>return handle_pte_fault(mm, vma, address, pte, pmd, write_access);

=>if (!pte_present(entry))// the page is not in memory

=>return do_linear_fault(mm, vma, address, pte, pmd, flags, entry);

=>return __do_fault(mm, vma, address, pmd, pgoff, flags, orig_pte);

=>page = alloc_page_vma(GFP_HIGHUSER_MOVABLE, vma, address);

//#define GFP_HIGHUSER_MOVABLE (__GFP_WAIT | __GFP_IO | __GFP_FS | __GFP_HARDWALL | __GFP_HIGHMEM | __GFP_MOVABLE)

//__GFP_HIGHMEM: allocate from high memory first

=>return __alloc_pages_nodemask(gfp, 0, zl, policy_nodemask(gfp, pol));//alloc_page_vma - Allocate a page for a VMA.

=>entry = pte_mkyoung(entry);// set the accessed ("young") bit in the PTE

=>bad_area:// handling path for an invalid address

/* User mode accesses just cause a SIGSEGV */

if (user_mode(regs)) {

tsk->thread.cp0_badvaddr = address;

tsk->thread.error_code = write;

info.si_signo = SIGSEGV;

info.si_errno = 0;

/* info.si_code has been set above */

info.si_addr = (void __user *) address;

force_sig_info(SIGSEGV, &info, tsk);

=>action = &t->sighand->action[sig-1];

ignored = action->sa.sa_handler == SIG_IGN;

blocked = sigismember(&t->blocked, sig);

if (blocked || ignored) {

action->sa.sa_handler = SIG_DFL;

if (blocked) {

sigdelset(&t->blocked, sig);

recalc_sigpending_and_wake(t);

}

}

if (action->sa.sa_handler == SIG_DFL)

t->signal->flags &= ~SIGNAL_UNKILLABLE;

ret = specific_send_sig_info(sig, info, t);

=>send_signal(sig, info, t, 0);

=>__send_signal(sig, info, t, group, from_ancestor_ns);

=>q = __sigqueue_alloc(sig, t, GFP_ATOMIC | __GFP_NOTRACK_FALSE_POSITIVE, override_rlimit);

=>list_add_tail(&q->list, &pending->list);

=>copy_siginfo(&q->info, info);

=>signalfd_notify(t, sig);

sigaddset(&pending->signal, sig);

complete_signal(sig, t, group);

return;

}

ioremap

=>__ioremap_caller(addr, size, _PAGE_NO_CACHE | _PAGE_GUARDED, __builtin_return_address(0));

=>if ((v = p_mapped_by_tlbcam(p)))// if this is an already-established TLB1 mapping, return the TLB1-mapped virtual address

goto out;

=>for (i = 0; i < size && err == 0; i += PAGE_SIZE)// not a TLB1 mapping: create a TLB0 mapping page by page

err = map_page(v+i, p+i, flags);

=>out:// return the virtual address

return (void __iomem *) (v + ((unsigned long)addr & ~PAGE_MASK));

paging_init

=>for (; v < end; v += PAGE_SIZE)

map_page(v, 0, 0); /* XXX gross */

=>pd = pmd_offset(pud_offset(pgd_offset_k(va), va), va);

=>pg = pte_alloc_kernel(pd, va);

alloc_pages

=>alloc_pages_node

=>__alloc_pages(gfp_mask, order, node_zonelist(nid, gfp_mask));

=>__alloc_pages_nodemask(gfp_mask, order, zonelist, NULL);

=>page = get_page_from_freelist(gfp_mask|__GFP_HARDWALL, nodemask, order,

zonelist, high_zoneidx, ALLOC_WMARK_LOW|ALLOC_CPUSET,

preferred_zone, migratetype);

=>page = buffered_rmqueue(preferred_zone, zone, order,

gfp_mask, migratetype);

=>page = __rmqueue(zone, order, migratetype);

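In user space, malloc ultimately grows the heap through sys_brk (glibc serves large requests, 128 KiB and above by default, with mmap instead). sys_brk is defined in the kernel as follows:

SYSCALL_DEFINE1(brk, unsigned long, brk) in mm/mmap.c; it is described in detail in 《linux内存管理之用户态内存管理》 (Linux memory management: user-space memory management).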

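Kernel threads have no page tables of their own; they use the page tables of the ordinary process that last ran on the CPU. This works because the kernel part of the page tables (3 GB–4 GB on 32-bit x86 with the default 3G/1G split) is shared by all processes, while the user part (0–3 GB) means nothing to a kernel thread.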

powerpc MMU initialization

MMU_init

=>total_lowmem = total_memory = memblock_end_of_DRAM() - memstart_addr;

lowmem_end_addr = memstart_addr + total_lowmem;

=>adjust_total_lowmem();// FSL BookE specific: maps 768M using 3 TLB1 entries

=>ram = min((phys_addr_t)__max_low_memory, (phys_addr_t)total_lowmem);

=>__max_low_memory = map_mem_in_cams(ram, CONFIG_LOWMEM_CAM_NUM);// compute the memory sizes in preparation for the TLB1 mappings

=>for (i = 0; ram && i < max_cam_idx; i++)

settlbcam(i, virt, phys, cam_sz, PAGE_KERNEL_X, 0);// write the registers to create the TLB1 mapping

=>MMU_init_hw();

=>flush_instruction_cache();// implemented in arch/powerpc/kernel/misc_32.S

=>#elif CONFIG_FSL_BOOKE

BEGIN_FTR_SECTION

mfspr r3,SPRN_L1CSR0

ori r3,r3,L1CSR0_CFI|L1CSR0_CLFC

/* msync; isync recommended here */

mtspr SPRN_L1CSR0,r3

isync

blr

=>mapin_ram();

=>s = mmu_mapin_ram(top);

=>tlbcam_addrs[tlbcam_index - 1].limit - PAGE_OFFSET + 1;

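=>__mapin_ram_chunk(s, top);// the rest of max_low_mem (768M) would be mapped with TLB0; in practice all of max_low_mem is already mapped by TLB1, so 0M remains and nothing needs a TLB0 mapping, i.e. s equals top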

=>for (; s < top; s += PAGE_SIZE)

map_page(v, p, f);

The Linux GDT definition

DEFINE_PER_CPU_PAGE_ALIGNED(struct gdt_page, gdt_page) = { .gdt = {

#ifdef CONFIG_X86_64

/*

* We need valid kernel segments for data and code in long mode too

* IRET will check the segment types kkeil 2000/10/28

* Also sysret mandates a special GDT layout

*

* TLS descriptors are currently at a different place compared to i386.

* Hopefully nobody expects them at a fixed place (Wine?)

*/

[GDT_ENTRY_KERNEL32_CS] = GDT_ENTRY_INIT(0xc09b, 0, 0xfffff),

[GDT_ENTRY_KERNEL_CS] = GDT_ENTRY_INIT(0xa09b, 0, 0xfffff),

[GDT_ENTRY_KERNEL_DS] = GDT_ENTRY_INIT(0xc093, 0, 0xfffff),

[GDT_ENTRY_DEFAULT_USER32_CS] = GDT_ENTRY_INIT(0xc0fb, 0, 0xfffff),

[GDT_ENTRY_DEFAULT_USER_DS] = GDT_ENTRY_INIT(0xc0f3, 0, 0xfffff),

[GDT_ENTRY_DEFAULT_USER_CS] = GDT_ENTRY_INIT(0xa0fb, 0, 0xfffff),

#else

[GDT_ENTRY_KERNEL_CS] = GDT_ENTRY_INIT(0xc09a, 0, 0xfffff),

[GDT_ENTRY_KERNEL_DS] = GDT_ENTRY_INIT(0xc092, 0, 0xfffff),

[GDT_ENTRY_DEFAULT_USER_CS] = GDT_ENTRY_INIT(0xc0fa, 0, 0xfffff),

[GDT_ENTRY_DEFAULT_USER_DS] = GDT_ENTRY_INIT(0xc0f2, 0, 0xfffff),

/*

* Segments used for calling PnP BIOS have byte granularity.

* They code segments and data segments have fixed 64k limits,

* the transfer segment sizes are set at run time.

*/

/* 32-bit code */

[GDT_ENTRY_PNPBIOS_CS32] = GDT_ENTRY_INIT(0x409a, 0, 0xffff),

/* 16-bit code */

[GDT_ENTRY_PNPBIOS_CS16] = GDT_ENTRY_INIT(0x009a, 0, 0xffff),

/* 16-bit data */

[GDT_ENTRY_PNPBIOS_DS] = GDT_ENTRY_INIT(0x0092, 0, 0xffff),

/* 16-bit data */

[GDT_ENTRY_PNPBIOS_TS1] = GDT_ENTRY_INIT(0x0092, 0, 0),

/* 16-bit data */

[GDT_ENTRY_PNPBIOS_TS2] = GDT_ENTRY_INIT(0x0092, 0, 0),

/*

* The APM segments have byte granularity and their bases

* are set at run time. All have 64k limits.

*/

/* 32-bit code */

[GDT_ENTRY_APMBIOS_BASE] = GDT_ENTRY_INIT(0x409a, 0, 0xffff),

/* 16-bit code */

[GDT_ENTRY_APMBIOS_BASE+1] = GDT_ENTRY_INIT(0x009a, 0, 0xffff),

/* data */

[GDT_ENTRY_APMBIOS_BASE+2] = GDT_ENTRY_INIT(0x4092, 0, 0xffff),

[GDT_ENTRY_ESPFIX_SS] = GDT_ENTRY_INIT(0xc092, 0, 0xfffff),

[GDT_ENTRY_PERCPU] = GDT_ENTRY_INIT(0xc092, 0, 0xfffff),

GDT_STACK_CANARY_INIT

#endif

} };

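A successful malloc only creates or extends a VMA; by itself it does not populate page tables or allocate physical memory (beyond the page holding malloc's own bookkeeping). Physical frames are allocated on first access, through the page-fault path described above.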

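About /proc/PID/maps

Find the PID of process A with the ps command,

then run

cat /proc/PID/maps | grep xxx

to list the files the process has mapped, including shared libraries (.so files).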

See also:

Analysis of linux /proc/pid/maps information

http://blog.csdn.net/mldxs/article/details/16993315

This article is also good:

Linux memory management: analysis of the sys_brk implementation

SYSCALL_DEFINE1(brk, unsigned long, brk)

http://blog.csdn.net/beyondhaven/article/details/6636561

A brief analysis of Linux memory management

https://my.oschina.net/u/1458728/blog/207208

Principles of Linux memory management

http://www.cnblogs.com/zhaoyl/p/3695517.html

Linux memory management

http://www.cnblogs.com/autum/archive/2012/10/12/linuxmalloc.html

Linux memory management

http://blog.csdn.net/pi9nc/article/details/11016907

On how slab calls into the buddy allocator, this article explains it well:

Kernel matters, memory management (8): Slab (part 2)

http://richardguo.blog.51cto.com/9343720/1675411

A careful analysis of mmap: what it is, why, and how to use it

http://www.cnblogs.com/huxiao-tee/p/4660352.html

What every programmer should know about "virtual memory"

http://blog.jobbole.com/36303/?utm_source=blog.jobbole.com&utm_medium=relatedPosts

pmtest6.asm

https://blog.csdn.net/u012323667/article/details/79400225

Learning Linux kernel memory management, part 1 (basic concepts, paging and initialization)

https://blog.csdn.net/goodluckwhh/article/details/9970845

Linux kernel memory management architecture (a good article)

https://www.cnblogs.com/wahaha02/p/9392088.html

Why memory page size affects performance

http://www.360doc.com/content/14/0822/14/11253639_403814561.shtml

The above is the material on Linux memory management (paging and page-fault handling) recently collected and compiled by 顺心月亮.
