Contents

Restriction issue logs

Tracing the error in code

Analysis of the exception

DruidDataSource source code

Further reading

First, a disclaimer: this problem is not DruidDataSource's fault, so you can keep using DruidDataSource with confidence.

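RDS is short for Relational Database Service. It is a ready-to-use, stable, reliable and elastically scalable online database service. It provides multiple layers of security protection and a complete performance-monitoring system, along with professional backup, recovery and optimization solutions, so that you can focus on application development and business growth.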

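Problem description: with plain JDBC or with Hikari, the service connected to RDS and started normally. With DruidDataSource, it failed to start. The startup failure was ultimately caused by the application's license authorization check. The DruidDataSource warnings were only system-level notices and were not the cause. Tomcat's WebappClassLoaderBase.clearReferencesThreads logs these notices while it stops the application.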

Restriction issue logs

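The log mainly points to sun.misc.Unsafe.park(Native Method). This may be a system-level security restriction. AQS frames also appear, but underneath they come down to a call into a native method.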

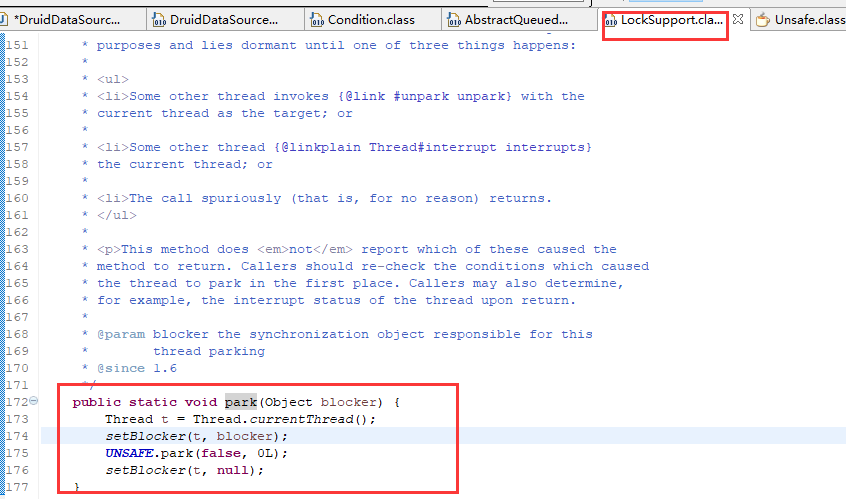

sun.misc.Unsafe.park(Native Method)

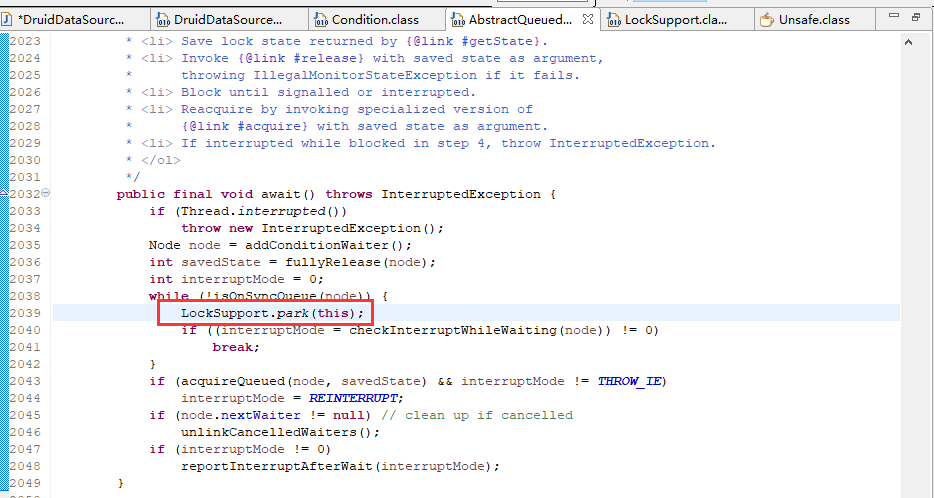

java.util.concurrent.locks.LockSupport.park(LockSupport.java:175)

java.util.concurrent.locks.AbstractQueuedSynchronizer$ConditionObject.await(AbstractQueuedSynchronizer.java:2039)

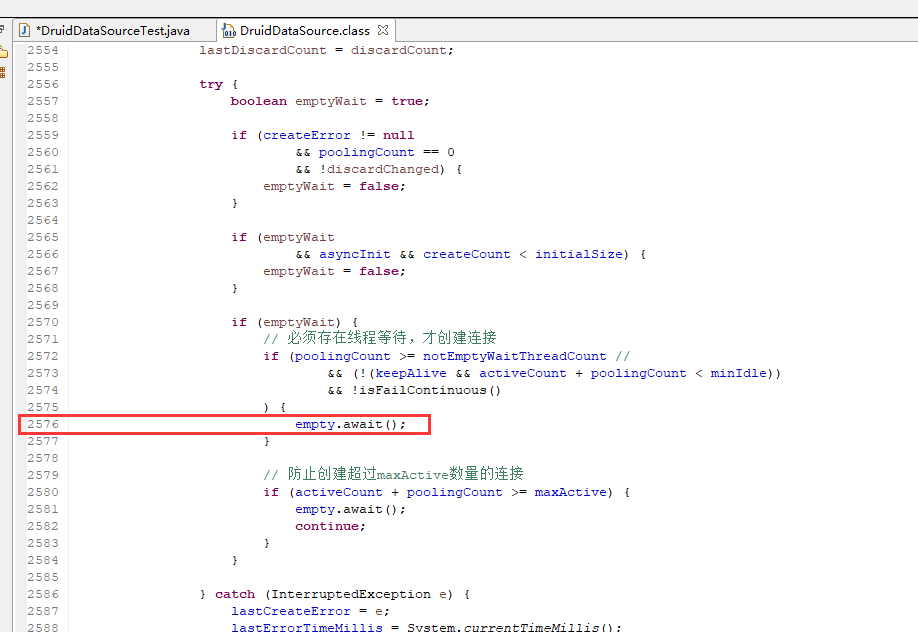

com.alibaba.druid.pool.DruidDataSource$CreateConnectionThread.run(DruidDataSource.java:2576)
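The frames above (`Unsafe.park` ← `LockSupport.park` ← `AbstractQueuedSynchronizer$ConditionObject.await`) are what any JVM thread shows while it waits on a `java.util.concurrent.locks.Condition`. The JDK-only sketch below parks a thread in `Condition.await()` and takes a snapshot of its state and stack. The class, method and thread names are invented for illustration. The class of the top frame depends on the Java version: `sun.misc.Unsafe` on JDK 8, `jdk.internal.misc.Unsafe` on newer JDKs.

```java
import java.util.concurrent.TimeUnit;
import java.util.concurrent.locks.Condition;
import java.util.concurrent.locks.ReentrantLock;

class ConditionWaitDemo {

    /** State and stack captured while the worker was blocked. */
    static final class Snapshot {
        final Thread.State state;
        final StackTraceElement[] frames;

        Snapshot(Thread.State state, StackTraceElement[] frames) {
            this.state = state;
            this.frames = frames;
        }
    }

    static Snapshot observe() {
        ReentrantLock lock = new ReentrantLock();
        Condition empty = lock.newCondition(); // name borrowed from the pool, illustration only
        boolean[] signalled = {false};         // guarded by lock

        Thread worker = new Thread(() -> {
            lock.lock();
            try {
                while (!signalled[0]) {
                    empty.await(); // -> LockSupport.park -> Unsafe.park (native)
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            } finally {
                lock.unlock();
            }
        }, "demo-create-thread");
        worker.start();

        try {
            // Poll until the worker is parked inside await(), then capture its stack.
            long deadline = System.nanoTime() + TimeUnit.SECONDS.toNanos(5);
            while (worker.getState() != Thread.State.WAITING && System.nanoTime() < deadline) {
                Thread.sleep(10);
            }
            Snapshot snapshot = new Snapshot(worker.getState(), worker.getStackTrace());

            // Wake the worker so that it terminates instead of lingering.
            lock.lock();
            try {
                signalled[0] = true;
                empty.signal();
            } finally {
                lock.unlock();
            }
            worker.join();
            return snapshot;
        } catch (InterruptedException e) {
            worker.interrupt();
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted", e);
        }
    }
}
```

In such a snapshot the state is `WAITING` and `park` frames sit at the top of the stack. Nothing has failed. The thread is simply idle until another thread signals the condition.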

17-Sep-2020 18:09:21.112 WARNING [localhost-startStop-1] org.apache.catalina.loader.WebappClassLoaderBase.clearReferencesThreads The web application [forestar-patrol-ywpt] appears to have started a thread named [Druid-ConnectionPool-Destroy-869651846] but has failed to stop it. This is very likely to create a memory leak. Stack trace of thread:

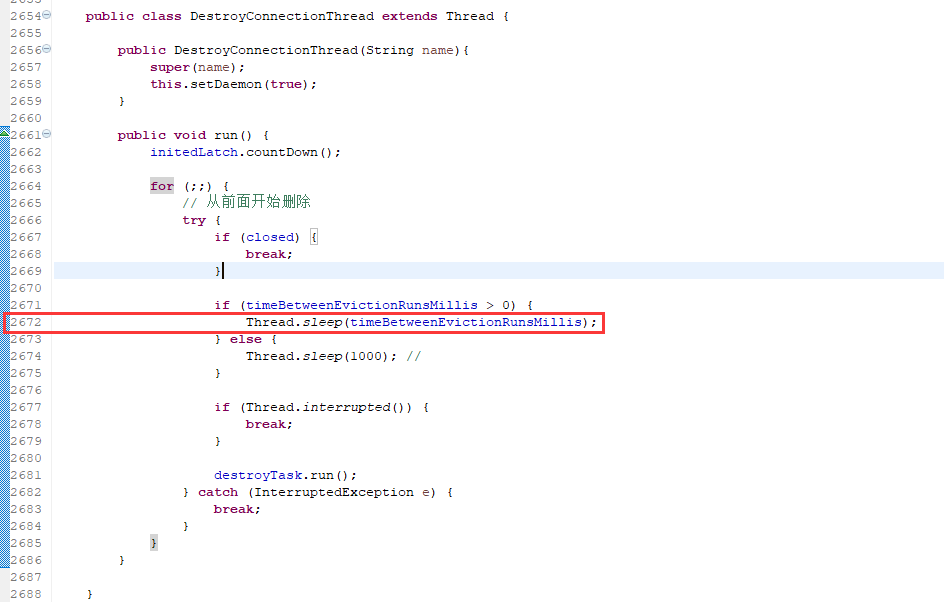

java.lang.Thread.sleep(Native Method)

com.alibaba.druid.pool.DruidDataSource$DestroyConnectionThread.run(DruidDataSource.java:2672)

17-Sep-2020 18:09:21.113 WARNING [localhost-startStop-1] org.apache.catalina.loader.WebappClassLoaderBase.clearReferencesThreads The web application [forestar-patrol-ywpt] appears to have started a thread named [fsCacheManager] but has failed to stop it. This is very likely to create a memory leak. Stack trace of thread:

java.net.SocketInputStream.socketRead0(Native Method)

java.net.SocketInputStream.socketRead(SocketInputStream.java:116)

java.net.SocketInputStream.read(SocketInputStream.java:171)

java.net.SocketInputStream.read(SocketInputStream.java:141)

java.io.BufferedInputStream.fill(BufferedInputStream.java:246)

java.io.BufferedInputStream.read1(BufferedInputStream.java:286)

java.io.BufferedInputStream.read(BufferedInputStream.java:345)

sun.net.www.http.HttpClient.parseHTTPHeader(HttpClient.java:735)

sun.net.www.http.HttpClient.parseHTTP(HttpClient.java:678)

sun.net.www.protocol.http.HttpURLConnection.getInputStream0(HttpURLConnection.java:1587)

sun.net.www.protocol.http.HttpURLConnection.getInputStream(HttpURLConnection.java:1492)

net.sf.ehcache.util.UpdateChecker.getUpdateProperties(UpdateChecker.java:106)

net.sf.ehcache.util.UpdateChecker.doCheck(UpdateChecker.java:70)

net.sf.ehcache.util.UpdateChecker.checkForUpdate(UpdateChecker.java:60)

net.sf.ehcache.util.UpdateChecker.run(UpdateChecker.java:51)

java.util.TimerThread.mainLoop(Timer.java:555)

java.util.TimerThread.run(Timer.java:505)
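The `fsCacheManager` thread is a `java.util.Timer` thread (note the `TimerThread.mainLoop` frame). It was running ehcache's `UpdateChecker` task, which at that moment was blocked reading an HTTP response. A `Timer` thread keeps running until its timer is cancelled. Below is a minimal JDK-only sketch with a hypothetical timer name and a placeholder task. It is not ehcache's code.

```java
import java.util.Timer;
import java.util.TimerTask;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.TimeUnit;

class TimerCancelDemo {

    /** Starts a named Timer, lets one task run, cancels the timer and reports whether its thread exited. */
    static boolean runThenCancel() {
        String name = "demo-fsCacheManager";
        Timer timer = new Timer(name); // non-daemon TimerThread, like the one in the log
        CountDownLatch ran = new CountDownLatch(1);
        timer.schedule(new TimerTask() {
            @Override
            public void run() {
                ran.countDown(); // placeholder for a periodic check such as an update check
            }
        }, 0, 1000);

        try {
            ran.await(5, TimeUnit.SECONDS);
            // Look up the timer's thread by name so that we can watch it exit.
            Thread timerThread = null;
            for (Thread t : Thread.getAllStackTraces().keySet()) {
                if (t.getName().equals(name)) {
                    timerThread = t;
                }
            }
            timer.cancel(); // discards scheduled tasks; does not interrupt a task already running
            if (timerThread == null) {
                return false;
            }
            timerThread.join(5000);
            return !timerThread.isAlive();
        } catch (InterruptedException e) {
            timer.cancel();
            Thread.currentThread().interrupt();
            return false;
        }
    }
}
```

`Timer.cancel()` does not interrupt a task that is already executing. A thread stuck in a socket read, like the one above, would exit only after that read returns or times out.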

17-Sep-2020 18:09:21.113 WARNING [localhost-startStop-1] org.apache.catalina.loader.WebappClassLoaderBase.clearReferencesThreads The web application [forestar-patrol-ywpt] appears to have started a thread named [SimplePageCachingFilter.data] but has failed to stop it. This is very likely to create a memory leak. Stack trace of thread:

sun.misc.Unsafe.park(Native Method)

java.util.concurrent.locks.LockSupport.parkNanos(LockSupport.java:215)

java.util.concurrent.locks.AbstractQueuedSynchronizer$ConditionObject.awaitNanos(AbstractQueuedSynchronizer.java:2078)

java.util.concurrent.ScheduledThreadPoolExecutor$DelayedWorkQueue.take(ScheduledThreadPoolExecutor.java:1093)

java.util.concurrent.ScheduledThreadPoolExecutor$DelayedWorkQueue.take(ScheduledThreadPoolExecutor.java:809)

java.util.concurrent.ThreadPoolExecutor.getTask(ThreadPoolExecutor.java:1074)

java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1134)

java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

java.lang.Thread.run(Thread.java:748)

17-Sep-2020 18:09:21.114 WARNING [localhost-startStop-1] org.apache.catalina.loader.WebappClassLoaderBase.clearReferencesThreads The web application [forestar-patrol-ywpt] appears to have started a thread named [MedataCache.data] but has failed to stop it. This is very likely to create a memory leak. Stack trace of thread:

sun.misc.Unsafe.park(Native Method)

java.util.concurrent.locks.LockSupport.parkNanos(LockSupport.java:215)

java.util.concurrent.locks.AbstractQueuedSynchronizer$ConditionObject.awaitNanos(AbstractQueuedSynchronizer.java:2078)

java.util.concurrent.ScheduledThreadPoolExecutor$DelayedWorkQueue.take(ScheduledThreadPoolExecutor.java:1093)

java.util.concurrent.ScheduledThreadPoolExecutor$DelayedWorkQueue.take(ScheduledThreadPoolExecutor.java:809)

java.util.concurrent.ThreadPoolExecutor.getTask(ThreadPoolExecutor.java:1074)

java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1134)

java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

java.lang.Thread.run(Thread.java:748)
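`SimplePageCachingFilter.data` and `MedataCache.data` are idle workers of a `ScheduledThreadPoolExecutor`. Between runs, such a worker waits in `DelayedWorkQueue.take()`, which is where the `parkNanos`/`awaitNanos` frames come from. The thread goes away only when its executor is shut down. The sketch below is a stand-in with a hypothetical thread name and a trivial task. It is not the application's actual cache code.

```java
import java.util.concurrent.ScheduledThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

class SchedulerShutdownDemo {

    /** Runs a periodic task briefly, shuts the scheduler down and reports whether it terminated. */
    static boolean runThenShutdown() {
        AtomicInteger runs = new AtomicInteger();
        ScheduledThreadPoolExecutor scheduler =
                new ScheduledThreadPoolExecutor(1, r -> new Thread(r, "demo-cache.data"));

        // Between runs the single worker sits in DelayedWorkQueue.take(),
        // i.e. awaitNanos() -> LockSupport.parkNanos() -> Unsafe.park().
        scheduler.scheduleAtFixedRate(runs::incrementAndGet, 0, 50, TimeUnit.MILLISECONDS);

        try {
            Thread.sleep(200);
            // With the default policy, shutdown() cancels periodic tasks and
            // interrupts idle workers, so the worker thread exits.
            scheduler.shutdown();
            return scheduler.awaitTermination(5, TimeUnit.SECONDS) && runs.get() > 0;
        } catch (InterruptedException e) {
            scheduler.shutdownNow();
            Thread.currentThread().interrupt();
            return false;
        }
    }
}
```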

Tracing the error in code

Error point 1:

Error point 2:

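Analysis of the exception

Analysis of error point 1: the thread is blocked in an AQS (AbstractQueuedSynchronizer) condition queue.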

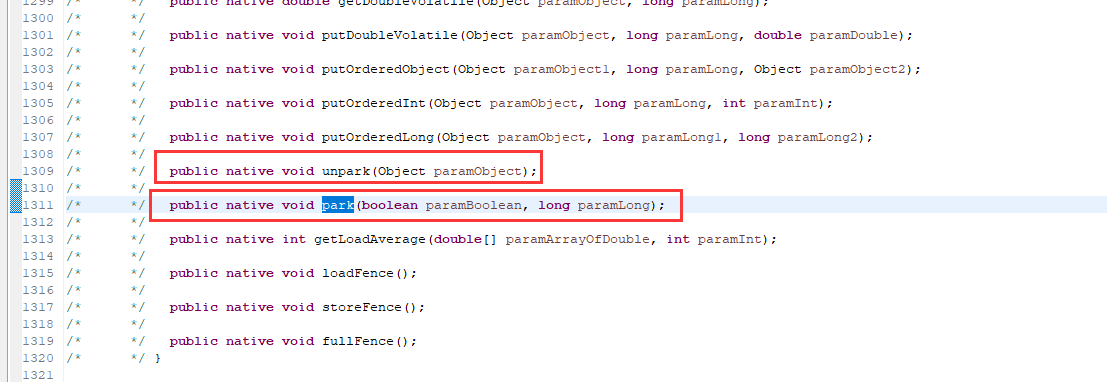
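To make the AQS blocking concrete, here is a heavily simplified, hypothetical pool in which a creator thread waits on one condition until a consumer needs a connection. The source listing below uses names such as `notEmpty` and `emptySignal()`, which suggests that Druid uses a similar pair of conditions. This sketch only illustrates the handshake and is not Druid's implementation. `MiniPool` and all of its members are invented for the example.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.concurrent.locks.Condition;
import java.util.concurrent.locks.ReentrantLock;

/** Hypothetical, heavily simplified pool; not Druid's code. */
class MiniPool {
    private final ReentrantLock lock = new ReentrantLock();
    private final Condition notEmpty = lock.newCondition(); // consumers wait here for an idle connection
    private final Condition empty = lock.newCondition();    // the creator waits here until one is needed
    private final Deque<Object> idle = new ArrayDeque<>();
    private final int maxIdle;
    private boolean closed;                                  // guarded by lock
    private final Thread creator;

    private MiniPool(int maxIdle) {
        this.maxIdle = maxIdle;
        this.creator = new Thread(this::createLoop, "mini-pool-create");
    }

    static MiniPool open(int maxIdle) {
        MiniPool pool = new MiniPool(maxIdle);
        pool.creator.start();
        return pool;
    }

    private void createLoop() {
        while (true) {
            lock.lock();
            try {
                // Nothing to do: park on `empty` (ConditionObject.await -> LockSupport.park).
                while (!closed && idle.size() >= maxIdle) {
                    empty.await();
                }
                if (closed) {
                    return;
                }
                idle.addLast(new Object()); // stand-in for opening a physical connection
                notEmpty.signal();
            } catch (InterruptedException e) {
                return;
            } finally {
                lock.unlock();
            }
        }
    }

    /** Borrows an idle "connection", blocking until the creator supplies one. */
    Object take() {
        lock.lock();
        try {
            while (idle.isEmpty()) {
                empty.signal();                  // wake the creator
                notEmpty.awaitUninterruptibly();
            }
            Object conn = idle.pollFirst();
            empty.signal();                      // a slot freed up: let the creator refill it
            return conn;
        } finally {
            lock.unlock();
        }
    }

    /** Stops the creator thread; without this it would stay parked forever. */
    void close() {
        lock.lock();
        try {
            closed = true;
            empty.signalAll();
        } finally {
            lock.unlock();
        }
        try {
            creator.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }
}
```

While the pool is full, the creator sits in `empty.await()` and shows the same `park`/`await` frames as the Tomcat warning. Only `close()` lets it exit.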

Following park further down, it ends in a native method. This is the call that actually blocks the thread.


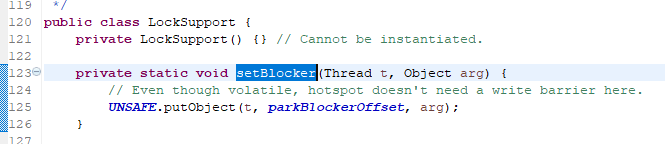

setBlocker itself goes through sun.misc.Unsafe (in JDK 8 it calls UNSAFE.putObject), which is implemented natively:

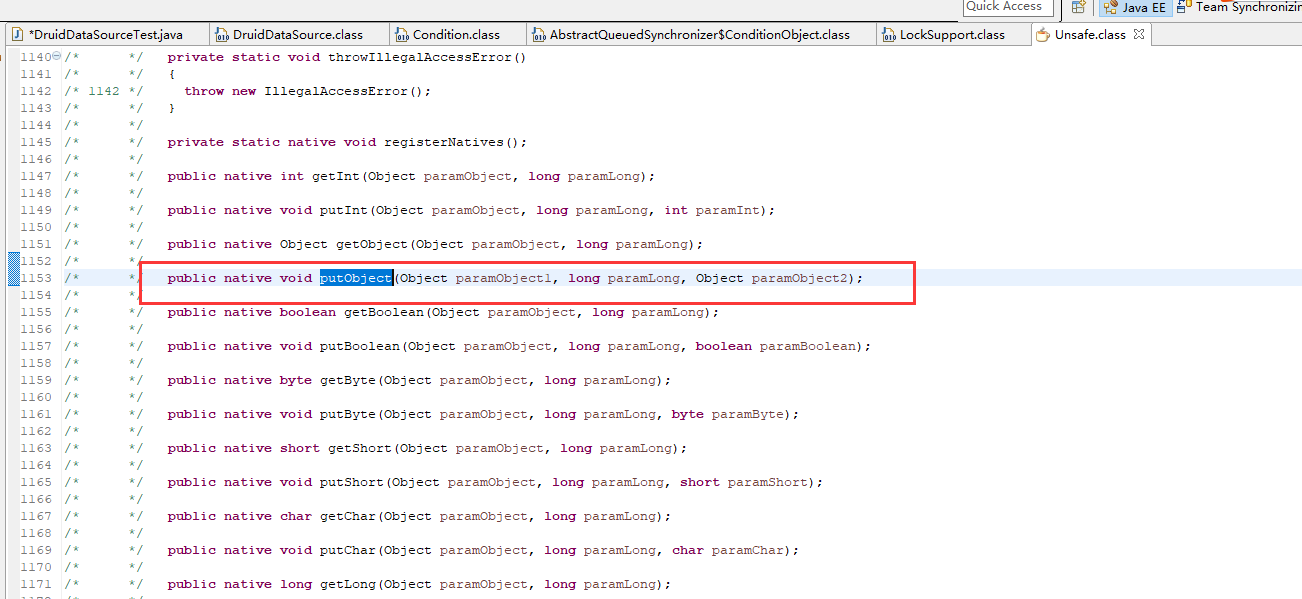

The UNSAFE methods used here are native methods, implemented inside the JVM rather than in Java.

Error point 2 comes from the same thread issue and is essentially the same problem as error point 1.
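Another thread can read the blocker that `setBlocker` records by calling `LockSupport.getBlocker(Thread)`. It is also the object that a `jstack` thread dump lists under "parking to wait for". The small JDK-only sketch below demonstrates this. The blocker object and the thread name are illustrative.

```java
import java.util.concurrent.TimeUnit;
import java.util.concurrent.locks.LockSupport;

class BlockerDemo {

    /** Returns {blocker seen while the thread is parked, blocker seen after the thread exits}. */
    static Object[] observeBlocker() {
        Object blocker = new Object() {
            @Override
            public String toString() {
                return "demo-blocker";
            }
        };
        Thread parked = new Thread(() -> {
            // park() may return spuriously, so keep parking until interrupted.
            while (!Thread.currentThread().isInterrupted()) {
                LockSupport.park(blocker); // sets the blocker, parks, then clears the blocker
            }
        }, "demo-parked");
        parked.start();

        Object whileParked = null;
        try {
            long deadline = System.nanoTime() + TimeUnit.SECONDS.toNanos(5);
            while (whileParked == null && System.nanoTime() < deadline) {
                whileParked = LockSupport.getBlocker(parked);
                if (whileParked == null) {
                    Thread.sleep(5);
                }
            }
            parked.interrupt(); // an interrupt makes park() return
            parked.join();
        } catch (InterruptedException e) {
            parked.interrupt();
            Thread.currentThread().interrupt();
        }
        return new Object[] {whileParked, LockSupport.getBlocker(parked)};
    }
}
```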

DruidDataSource source code

Alibaba Druid project: https://github.com/alibaba/druid

For a fix, see "MySQL on RDS cannot connect normally", or switch to a different data source.
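In the listing below, `init()` creates `initedLatch = new CountDownLatch(2)`. It then starts the `Druid-ConnectionPool-Create-<hash>` and `Druid-ConnectionPool-Destroy-<hash>` threads through `createAndStartCreatorThread()` and `createAndStartDestroyThread()`, and blocks in `initedLatch.await()`. These are the threads that Tomcat reported as not stopped.

The sketch below reproduces only that lifecycle, using plain JDK classes, and adds a `close()` that interrupts and joins both threads. It assumes that each thread counts the latch down once it is running; their `run()` methods are outside this excerpt. Every name in the sketch is hypothetical. In a real application you would rely on `DruidDataSource.close()` being called when the web application stops (the class implements `Closeable`), rather than managing these threads yourself.

```java
import java.util.concurrent.CountDownLatch;

/** Hypothetical sketch of an init()/close() thread lifecycle; not Druid's API. */
class LifecycleSketch {
    private final CountDownLatch initedLatch = new CountDownLatch(2);
    private Thread createThread;
    private Thread destroyThread;

    private Thread startWorker(String name) {
        Thread t = new Thread(() -> {
            initedLatch.countDown(); // tell init() this thread is up
            try {
                while (!Thread.currentThread().isInterrupted()) {
                    Thread.sleep(1000); // placeholder for create / evict work
                }
            } catch (InterruptedException e) {
                // interrupted by close(): leave the loop and let the thread end
            }
        }, name);
        t.start();
        return t;
    }

    /** Starts both background threads and waits until each has counted down. */
    void init() {
        createThread = startWorker("Sketch-ConnectionPool-Create");
        destroyThread = startWorker("Sketch-ConnectionPool-Destroy");
        try {
            initedLatch.await();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    /** Stops both threads; skipping this leaves threads behind for Tomcat to report. */
    void close() {
        createThread.interrupt();
        destroyThread.interrupt();
        try {
            createThread.join();
            destroyThread.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    boolean anyThreadAlive() {
        return createThread.isAlive() || destroyThread.isAlive();
    }
}
```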

/*

* Copyright 1999-2018 Alibaba Group Holding Ltd.

*

* Licensed under the Apache License, Version 2.0 (the "License");

* you may not use this file except in compliance with the License.

* You may obtain a copy of the License at

*

* http://www.apache.org/licenses/LICENSE-2.0

*

* Unless required by applicable law or agreed to in writing, software

* distributed under the License is distributed on an "AS IS" BASIS,

* WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

* See the License for the specific language governing permissions and

* limitations under the License.

*/

package com.alibaba.druid.pool;

import static com.alibaba.druid.util.Utils.getBoolean;

import java.io.Closeable;

import java.security.AccessController;

import java.security.PrivilegedAction;

import java.sql.Connection;

import java.sql.SQLException;

import java.sql.Statement;

import java.util.ArrayList;

import java.util.Arrays;

import java.util.Collections;

import java.util.ConcurrentModificationException;

import java.util.Date;

import java.util.HashMap;

import java.util.Iterator;

import java.util.LinkedHashMap;

import java.util.List;

import java.util.Map;

import java.util.Properties;

import java.util.ServiceLoader;

import java.util.StringTokenizer;

import java.util.TimeZone;

import java.util.concurrent.CountDownLatch;

import java.util.concurrent.Future;

import java.util.concurrent.ScheduledFuture;

import java.util.concurrent.ScheduledThreadPoolExecutor;

import java.util.concurrent.TimeUnit;

import java.util.concurrent.atomic.AtomicLongFieldUpdater;

import java.util.concurrent.locks.Lock;

import java.util.concurrent.locks.ReentrantLock;

import javax.management.JMException;

import javax.management.MBeanRegistration;

import javax.management.MBeanServer;

import javax.management.ObjectName;

import javax.naming.NamingException;

import javax.naming.Reference;

import javax.naming.Referenceable;

import javax.naming.StringRefAddr;

import javax.sql.ConnectionEvent;

import javax.sql.ConnectionEventListener;

import javax.sql.ConnectionPoolDataSource;

import javax.sql.PooledConnection;

import com.alibaba.druid.Constants;

import com.alibaba.druid.TransactionTimeoutException;

import com.alibaba.druid.VERSION;

import com.alibaba.druid.filter.AutoLoad;

import com.alibaba.druid.filter.Filter;

import com.alibaba.druid.filter.FilterChainImpl;

import com.alibaba.druid.mock.MockDriver;

import com.alibaba.druid.pool.DruidPooledPreparedStatement.PreparedStatementKey;

import com.alibaba.druid.pool.vendor.DB2ExceptionSorter;

import com.alibaba.druid.pool.vendor.InformixExceptionSorter;

import com.alibaba.druid.pool.vendor.MSSQLValidConnectionChecker;

import com.alibaba.druid.pool.vendor.MockExceptionSorter;

import com.alibaba.druid.pool.vendor.MySqlExceptionSorter;

import com.alibaba.druid.pool.vendor.MySqlValidConnectionChecker;

import com.alibaba.druid.pool.vendor.NullExceptionSorter;

import com.alibaba.druid.pool.vendor.OracleExceptionSorter;

import com.alibaba.druid.pool.vendor.OracleValidConnectionChecker;

import com.alibaba.druid.pool.vendor.PGExceptionSorter;

import com.alibaba.druid.pool.vendor.PGValidConnectionChecker;

import com.alibaba.druid.pool.vendor.SybaseExceptionSorter;

import com.alibaba.druid.proxy.DruidDriver;

import com.alibaba.druid.proxy.jdbc.DataSourceProxyConfig;

import com.alibaba.druid.proxy.jdbc.TransactionInfo;

import com.alibaba.druid.sql.ast.SQLStatement;

import com.alibaba.druid.sql.ast.statement.SQLSelectQuery;

import com.alibaba.druid.sql.ast.statement.SQLSelectQueryBlock;

import com.alibaba.druid.sql.ast.statement.SQLSelectStatement;

import com.alibaba.druid.sql.parser.SQLParserUtils;

import com.alibaba.druid.sql.parser.SQLStatementParser;

import com.alibaba.druid.stat.DruidDataSourceStatManager;

import com.alibaba.druid.stat.JdbcDataSourceStat;

import com.alibaba.druid.stat.JdbcSqlStat;

import com.alibaba.druid.stat.JdbcSqlStatValue;

import com.alibaba.druid.support.logging.Log;

import com.alibaba.druid.support.logging.LogFactory;

import com.alibaba.druid.util.JMXUtils;

import com.alibaba.druid.util.JdbcConstants;

import com.alibaba.druid.util.JdbcUtils;

import com.alibaba.druid.util.StringUtils;

import com.alibaba.druid.util.Utils;

import com.alibaba.druid.wall.WallFilter;

import com.alibaba.druid.wall.WallProviderStatValue;

/**

* @author ljw [ljw2083@alibaba-inc.com]

* @author wenshao [szujobs@hotmail.com]

*/

public class DruidDataSource extends DruidAbstractDataSource implements DruidDataSourceMBean, ManagedDataSource, Referenceable, Closeable, Cloneable, ConnectionPoolDataSource, MBeanRegistration {

private final static Log LOG = LogFactory.getLog(DruidDataSource.class);

private static final long serialVersionUID = 1L;

// stats

private volatile long recycleErrorCount = 0L;

private long connectCount = 0L;

private long closeCount = 0L;

private volatile long connectErrorCount = 0L;

private long recycleCount = 0L;

private long removeAbandonedCount = 0L;

private long notEmptyWaitCount = 0L;

private long notEmptySignalCount = 0L;

private long notEmptyWaitNanos = 0L;

private int keepAliveCheckCount = 0;

private int activePeak = 0;

private long activePeakTime = 0;

private int poolingPeak = 0;

private long poolingPeakTime = 0;

// store

private volatile DruidConnectionHolder[] connections;

private int poolingCount = 0;

private int activeCount = 0;

private volatile long discardCount = 0;

private int notEmptyWaitThreadCount = 0;

private int notEmptyWaitThreadPeak = 0;

//

private DruidConnectionHolder[] evictConnections;

private DruidConnectionHolder[] keepAliveConnections;

// threads

private volatile ScheduledFuture<?> destroySchedulerFuture;

private DestroyTask destroyTask;

private volatile Future<?> createSchedulerFuture;

private CreateConnectionThread createConnectionThread;

private DestroyConnectionThread destroyConnectionThread;

private LogStatsThread logStatsThread;

private int createTaskCount;

private final CountDownLatch initedLatch = new CountDownLatch(2);

private volatile boolean enable = true;

private boolean resetStatEnable = true;

private volatile long resetCount = 0L;

private String initStackTrace;

private volatile boolean closing = false;

private volatile boolean closed = false;

private long closeTimeMillis = -1L;

protected JdbcDataSourceStat dataSourceStat;

private boolean useGlobalDataSourceStat = false;

private boolean mbeanRegistered = false;

public static ThreadLocal<Long> waitNanosLocal = new ThreadLocal<Long>();

private boolean logDifferentThread = true;

private volatile boolean keepAlive = false;

private boolean asyncInit = false;

protected boolean killWhenSocketReadTimeout = false;

private static List<Filter> autoFilters = null;

private boolean loadSpifilterSkip = false;

protected static final AtomicLongFieldUpdater<DruidDataSource> recycleErrorCountUpdater

= AtomicLongFieldUpdater.newUpdater(DruidDataSource.class, "recycleErrorCount");

protected static final AtomicLongFieldUpdater<DruidDataSource> connectErrorCountUpdater

= AtomicLongFieldUpdater.newUpdater(DruidDataSource.class, "connectErrorCount");

protected static final AtomicLongFieldUpdater<DruidDataSource> resetCountUpdater

= AtomicLongFieldUpdater.newUpdater(DruidDataSource.class, "resetCount");

public DruidDataSource(){

this(false);

}

public DruidDataSource(boolean fairLock){

super(fairLock);

configFromPropety(System.getProperties());

}

public boolean isAsyncInit() {

return asyncInit;

}

public void setAsyncInit(boolean asyncInit) {

this.asyncInit = asyncInit;

}

public void configFromPropety(Properties properties) {

{

String property = properties.getProperty("druid.name");

if (property != null) {

this.setName(property);

}

}

{

String property = properties.getProperty("druid.url");

if (property != null) {

this.setUrl(property);

}

}

{

String property = properties.getProperty("druid.username");

if (property != null) {

this.setUsername(property);

}

}

{

String property = properties.getProperty("druid.password");

if (property != null) {

this.setPassword(property);

}

}

{

Boolean value = getBoolean(properties, "druid.testWhileIdle");

if (value != null) {

this.testWhileIdle = value;

}

}

{

Boolean value = getBoolean(properties, "druid.testOnBorrow");

if (value != null) {

this.testOnBorrow = value;

}

}

{

String property = properties.getProperty("druid.validationQuery");

if (property != null && property.length() > 0) {

this.setValidationQuery(property);

}

}

{

Boolean value = getBoolean(properties, "druid.useGlobalDataSourceStat");

if (value != null) {

this.setUseGlobalDataSourceStat(value);

}

}

{

Boolean value = getBoolean(properties, "druid.useGloalDataSourceStat"); // compatible for early versions

if (value != null) {

this.setUseGlobalDataSourceStat(value);

}

}

{

Boolean value = getBoolean(properties, "druid.asyncInit"); // compatible for early versions

if (value != null) {

this.setAsyncInit(value);

}

}

{

String property = properties.getProperty("druid.filters");

if (property != null && property.length() > 0) {

try {

this.setFilters(property);

} catch (SQLException e) {

LOG.error("setFilters error", e);

}

}

}

{

String property = properties.getProperty(Constants.DRUID_TIME_BETWEEN_LOG_STATS_MILLIS);

if (property != null && property.length() > 0) {

try {

long value = Long.parseLong(property);

this.setTimeBetweenLogStatsMillis(value);

} catch (NumberFormatException e) {

LOG.error("illegal property '" + Constants.DRUID_TIME_BETWEEN_LOG_STATS_MILLIS + "'", e);

}

}

}

{

String property = properties.getProperty(Constants.DRUID_STAT_SQL_MAX_SIZE);

if (property != null && property.length() > 0) {

try {

int value = Integer.parseInt(property);

if (dataSourceStat != null) {

dataSourceStat.setMaxSqlSize(value);

}

} catch (NumberFormatException e) {

LOG.error("illegal property '" + Constants.DRUID_STAT_SQL_MAX_SIZE + "'", e);

}

}

}

{

Boolean value = getBoolean(properties, "druid.clearFiltersEnable");

if (value != null) {

this.setClearFiltersEnable(value);

}

}

{

Boolean value = getBoolean(properties, "druid.resetStatEnable");

if (value != null) {

this.setResetStatEnable(value);

}

}

{

String property = properties.getProperty("druid.notFullTimeoutRetryCount");

if (property != null && property.length() > 0) {

try {

int value = Integer.parseInt(property);

this.setNotFullTimeoutRetryCount(value);

} catch (NumberFormatException e) {

LOG.error("illegal property 'druid.notFullTimeoutRetryCount'", e);

}

}

}

{

String property = properties.getProperty("druid.timeBetweenEvictionRunsMillis");

if (property != null && property.length() > 0) {

try {

long value = Long.parseLong(property);

this.setTimeBetweenEvictionRunsMillis(value);

} catch (NumberFormatException e) {

LOG.error("illegal property 'druid.timeBetweenEvictionRunsMillis'", e);

}

}

}

{

String property = properties.getProperty("druid.maxWaitThreadCount");

if (property != null && property.length() > 0) {

try {

int value = Integer.parseInt(property);

this.setMaxWaitThreadCount(value);

} catch (NumberFormatException e) {

LOG.error("illegal property 'druid.maxWaitThreadCount'", e);

}

}

}

{

String property = properties.getProperty("druid.maxWait");

if (property != null && property.length() > 0) {

try {

int value = Integer.parseInt(property);

this.setMaxWait(value);

} catch (NumberFormatException e) {

LOG.error("illegal property 'druid.maxWait'", e);

}

}

}

{

Boolean value = getBoolean(properties, "druid.failFast");

if (value != null) {

this.setFailFast(value);

}

}

{

String property = properties.getProperty("druid.phyTimeoutMillis");

if (property != null && property.length() > 0) {

try {

long value = Long.parseLong(property);

this.setPhyTimeoutMillis(value);

} catch (NumberFormatException e) {

LOG.error("illegal property 'druid.phyTimeoutMillis'", e);

}

}

}

{

String property = properties.getProperty("druid.phyMaxUseCount");

if (property != null && property.length() > 0) {

try {

long value = Long.parseLong(property);

this.setPhyMaxUseCount(value);

} catch (NumberFormatException e) {

LOG.error("illegal property 'druid.phyMaxUseCount'", e);

}

}

}

{

String property = properties.getProperty("druid.minEvictableIdleTimeMillis");

if (property != null && property.length() > 0) {

try {

long value = Long.parseLong(property);

this.setMinEvictableIdleTimeMillis(value);

} catch (NumberFormatException e) {

LOG.error("illegal property 'druid.minEvictableIdleTimeMillis'", e);

}

}

}

{

String property = properties.getProperty("druid.maxEvictableIdleTimeMillis");

if (property != null && property.length() > 0) {

try {

long value = Long.parseLong(property);

this.setMaxEvictableIdleTimeMillis(value);

} catch (NumberFormatException e) {

LOG.error("illegal property 'druid.maxEvictableIdleTimeMillis'", e);

}

}

}

{

Boolean value = getBoolean(properties, "druid.keepAlive");

if (value != null) {

this.setKeepAlive(value);

}

}

{

String property = properties.getProperty("druid.keepAliveBetweenTimeMillis");

if (property != null && property.length() > 0) {

try {

long value = Long.parseLong(property);

this.setKeepAliveBetweenTimeMillis(value);

} catch (NumberFormatException e) {

LOG.error("illegal property 'druid.keepAliveBetweenTimeMillis'", e);

}

}

}

{

Boolean value = getBoolean(properties, "druid.poolPreparedStatements");

if (value != null) {

this.setPoolPreparedStatements0(value);

}

}

{

Boolean value = getBoolean(properties, "druid.initVariants");

if (value != null) {

this.setInitVariants(value);

}

}

{

Boolean value = getBoolean(properties, "druid.initGlobalVariants");

if (value != null) {

this.setInitGlobalVariants(value);

}

}

{

Boolean value = getBoolean(properties, "druid.useUnfairLock");

if (value != null) {

this.setUseUnfairLock(value);

}

}

{

String property = properties.getProperty("druid.driverClassName");

if (property != null) {

this.setDriverClassName(property);

}

}

{

String property = properties.getProperty("druid.initialSize");

if (property != null && property.length() > 0) {

try {

int value = Integer.parseInt(property);

this.setInitialSize(value);

} catch (NumberFormatException e) {

LOG.error("illegal property 'druid.initialSize'", e);

}

}

}

{

String property = properties.getProperty("druid.minIdle");

if (property != null && property.length() > 0) {

try {

int value = Integer.parseInt(property);

this.setMinIdle(value);

} catch (NumberFormatException e) {

LOG.error("illegal property 'druid.minIdle'", e);

}

}

}

{

String property = properties.getProperty("druid.maxActive");

if (property != null && property.length() > 0) {

try {

int value = Integer.parseInt(property);

this.setMaxActive(value);

} catch (NumberFormatException e) {

LOG.error("illegal property 'druid.maxActive'", e);

}

}

}

{

Boolean value = getBoolean(properties, "druid.killWhenSocketReadTimeout");

if (value != null) {

setKillWhenSocketReadTimeout(value);

}

}

{

String property = properties.getProperty("druid.connectProperties");

if (property != null) {

this.setConnectionProperties(property);

}

}

{

String property = properties.getProperty("druid.maxPoolPreparedStatementPerConnectionSize");

if (property != null && property.length() > 0) {

try {

int value = Integer.parseInt(property);

this.setMaxPoolPreparedStatementPerConnectionSize(value);

} catch (NumberFormatException e) {

LOG.error("illegal property 'druid.maxPoolPreparedStatementPerConnectionSize'", e);

}

}

}

{

String property = properties.getProperty("druid.initConnectionSqls");

if (property != null && property.length() > 0) {

try {

StringTokenizer tokenizer = new StringTokenizer(property, ";");

setConnectionInitSqls(Collections.list(tokenizer));

} catch (NumberFormatException e) {

LOG.error("illegal property 'druid.initConnectionSqls'", e);

}

}

}

{

String property = System.getProperty("druid.load.spifilter.skip");

if (property != null && !"false".equals(property)) {

loadSpifilterSkip = true;

}

}

}

public boolean isKillWhenSocketReadTimeout() {

return killWhenSocketReadTimeout;

}

public void setKillWhenSocketReadTimeout(boolean killWhenSocketTimeOut) {

this.killWhenSocketReadTimeout = killWhenSocketTimeOut;

}

public boolean isUseGlobalDataSourceStat() {

return useGlobalDataSourceStat;

}

public void setUseGlobalDataSourceStat(boolean useGlobalDataSourceStat) {

this.useGlobalDataSourceStat = useGlobalDataSourceStat;

}

public boolean isKeepAlive() {

return keepAlive;

}

public void setKeepAlive(boolean keepAlive) {

this.keepAlive = keepAlive;

}

public String getInitStackTrace() {

return initStackTrace;

}

public boolean isResetStatEnable() {

return resetStatEnable;

}

public void setResetStatEnable(boolean resetStatEnable) {

this.resetStatEnable = resetStatEnable;

if (dataSourceStat != null) {

dataSourceStat.setResetStatEnable(resetStatEnable);

}

}

public long getDiscardCount() {

return discardCount;

}

public void restart() throws SQLException {

lock.lock();

try {

if (activeCount > 0) {

throw new SQLException("can not restart, activeCount not zero. " + activeCount);

}

if (LOG.isInfoEnabled()) {

LOG.info("{dataSource-" + this.getID() + "} restart");

}

this.close();

this.resetStat();

this.inited = false;

this.enable = true;

this.closed = false;

} finally {

lock.unlock();

}

}

public void resetStat() {

if (!isResetStatEnable()) {

return;

}

lock.lock();

try {

connectCount = 0;

closeCount = 0;

discardCount = 0;

recycleCount = 0;

createCount = 0L;

directCreateCount = 0;

destroyCount = 0L;

removeAbandonedCount = 0;

notEmptyWaitCount = 0;

notEmptySignalCount = 0L;

notEmptyWaitNanos = 0;

activePeak = activeCount;

activePeakTime = 0;

poolingPeak = 0;

createTimespan = 0;

lastError = null;

lastErrorTimeMillis = 0;

lastCreateError = null;

lastCreateErrorTimeMillis = 0;

} finally {

lock.unlock();

}

connectErrorCountUpdater.set(this, 0);

errorCountUpdater.set(this, 0);

commitCountUpdater.set(this, 0);

rollbackCountUpdater.set(this, 0);

startTransactionCountUpdater.set(this, 0);

cachedPreparedStatementHitCountUpdater.set(this, 0);

closedPreparedStatementCountUpdater.set(this, 0);

preparedStatementCountUpdater.set(this, 0);

transactionHistogram.reset();

cachedPreparedStatementDeleteCountUpdater.set(this, 0);

recycleErrorCountUpdater.set(this, 0);

resetCountUpdater.incrementAndGet(this);

}

public long getResetCount() {

return this.resetCount;

}

public boolean isEnable() {

return enable;

}

public void setEnable(boolean enable) {

lock.lock();

try {

this.enable = enable;

if (!enable) {

notEmpty.signalAll();

notEmptySignalCount++;

}

} finally {

lock.unlock();

}

}

public void setPoolPreparedStatements(boolean value) {

setPoolPreparedStatements0(value);

}

private void setPoolPreparedStatements0(boolean value) {

if (this.poolPreparedStatements == value) {

return;

}

this.poolPreparedStatements = value;

if (!inited) {

return;

}

if (LOG.isInfoEnabled()) {

LOG.info("set poolPreparedStatements " + this.poolPreparedStatements + " -> " + value);

}

if (!value) {

lock.lock();

try {

for (int i = 0; i < poolingCount; ++i) {

DruidConnectionHolder connection = connections[i];

for (PreparedStatementHolder holder : connection.getStatementPool().getMap().values()) {

closePreapredStatement(holder);

}

connection.getStatementPool().getMap().clear();

}

} finally {

lock.unlock();

}

}

}

public void setMaxActive(int maxActive) {

if (this.maxActive == maxActive) {

return;

}

if (maxActive == 0) {

throw new IllegalArgumentException("maxActive can't not set zero");

}

if (!inited) {

this.maxActive = maxActive;

return;

}

if (maxActive < this.minIdle) {

throw new IllegalArgumentException("maxActive less than minIdle, " + maxActive + " < " + this.minIdle);

}

if (LOG.isInfoEnabled()) {

LOG.info("maxActive changed : " + this.maxActive + " -> " + maxActive);

}

lock.lock();

try {

int allCount = this.poolingCount + this.activeCount;

if (maxActive > allCount) {

this.connections = Arrays.copyOf(this.connections, maxActive);

evictConnections = new DruidConnectionHolder[maxActive];

keepAliveConnections = new DruidConnectionHolder[maxActive];

} else {

this.connections = Arrays.copyOf(this.connections, allCount);

evictConnections = new DruidConnectionHolder[allCount];

keepAliveConnections = new DruidConnectionHolder[allCount];

}

this.maxActive = maxActive;

} finally {

lock.unlock();

}

}

@SuppressWarnings("rawtypes")

public void setConnectProperties(Properties properties) {

if (properties == null) {

properties = new Properties();

}

boolean equals;

if (properties.size() == this.connectProperties.size()) {

equals = true;

for (Map.Entry entry : properties.entrySet()) {

Object value = this.connectProperties.get(entry.getKey());

Object entryValue = entry.getValue();

if (value == null && entryValue != null) {

equals = false;

break;

}

if (!value.equals(entry.getValue())) {

equals = false;

break;

}

}

} else {

equals = false;

}

if (!equals) {

if (inited && LOG.isInfoEnabled()) {

LOG.info("connectProperties changed : " + this.connectProperties + " -> " + properties);

}

configFromPropety(properties);

for (Filter filter : this.filters) {

filter.configFromProperties(properties);

}

if (exceptionSorter != null) {

exceptionSorter.configFromProperties(properties);

}

if (validConnectionChecker != null) {

validConnectionChecker.configFromProperties(properties);

}

if (statLogger != null) {

statLogger.configFromProperties(properties);

}

}

this.connectProperties = properties;

}

public void init() throws SQLException {

if (inited) {

return;

}

// bug fixed for dead lock, for issue #2980

DruidDriver.getInstance();

final ReentrantLock lock = this.lock;

try {

lock.lockInterruptibly();

} catch (InterruptedException e) {

throw new SQLException("interrupt", e);

}

boolean init = false;

try {

if (inited) {

return;

}

initStackTrace = Utils.toString(Thread.currentThread().getStackTrace());

this.id = DruidDriver.createDataSourceId();

if (this.id > 1) {

long delta = (this.id - 1) * 100000;

this.connectionIdSeedUpdater.addAndGet(this, delta);

this.statementIdSeedUpdater.addAndGet(this, delta);

this.resultSetIdSeedUpdater.addAndGet(this, delta);

this.transactionIdSeedUpdater.addAndGet(this, delta);

}

if (this.jdbcUrl != null) {

this.jdbcUrl = this.jdbcUrl.trim();

initFromWrapDriverUrl();

}

for (Filter filter : filters) {

filter.init(this);

}

if (this.dbType == null || this.dbType.length() == 0) {

this.dbType = JdbcUtils.getDbType(jdbcUrl, null);

}

if (JdbcConstants.MYSQL.equals(this.dbType)

|| JdbcConstants.MARIADB.equals(this.dbType)

|| JdbcConstants.ALIYUN_ADS.equals(this.dbType)) {

boolean cacheServerConfigurationSet = false;

if (this.connectProperties.containsKey("cacheServerConfiguration")) {

cacheServerConfigurationSet = true;

} else if (this.jdbcUrl.indexOf("cacheServerConfiguration") != -1) {

cacheServerConfigurationSet = true;

}

if (cacheServerConfigurationSet) {

this.connectProperties.put("cacheServerConfiguration", "true");

}

}

if (maxActive <= 0) {

throw new IllegalArgumentException("illegal maxActive " + maxActive);

}

if (maxActive < minIdle) {

throw new IllegalArgumentException("illegal maxActive " + maxActive);

}

if (getInitialSize() > maxActive) {

throw new IllegalArgumentException("illegal initialSize " + this.initialSize + ", maxActive " + maxActive);

}

if (timeBetweenLogStatsMillis > 0 && useGlobalDataSourceStat) {

throw new IllegalArgumentException("timeBetweenLogStatsMillis not support useGlobalDataSourceStat=true");

}

if (maxEvictableIdleTimeMillis < minEvictableIdleTimeMillis) {

throw new SQLException("maxEvictableIdleTimeMillis must be grater than minEvictableIdleTimeMillis");

}

if (this.driverClass != null) {

this.driverClass = driverClass.trim();

}

initFromSPIServiceLoader();

if (this.driver == null) {

if (this.driverClass == null || this.driverClass.isEmpty()) {

this.driverClass = JdbcUtils.getDriverClassName(this.jdbcUrl);

}

if (MockDriver.class.getName().equals(driverClass)) {

driver = MockDriver.instance;

} else {

if (jdbcUrl == null && (driverClass == null || driverClass.length() == 0)) {

throw new SQLException("url not set");

}

driver = JdbcUtils.createDriver(driverClassLoader, driverClass);

}

} else {

if (this.driverClass == null) {

this.driverClass = driver.getClass().getName();

}

}

initCheck();

initExceptionSorter();

initValidConnectionChecker();

validationQueryCheck();

if (isUseGlobalDataSourceStat()) {

dataSourceStat = JdbcDataSourceStat.getGlobal();

if (dataSourceStat == null) {

dataSourceStat = new JdbcDataSourceStat("Global", "Global", this.dbType);

JdbcDataSourceStat.setGlobal(dataSourceStat);

}

if (dataSourceStat.getDbType() == null) {

dataSourceStat.setDbType(this.dbType);

}

} else {

dataSourceStat = new JdbcDataSourceStat(this.name, this.jdbcUrl, this.dbType, this.connectProperties);

}

dataSourceStat.setResetStatEnable(this.resetStatEnable);

connections = new DruidConnectionHolder[maxActive];

evictConnections = new DruidConnectionHolder[maxActive];

keepAliveConnections = new DruidConnectionHolder[maxActive];

SQLException connectError = null;

if (createScheduler != null && asyncInit) {

for (int i = 0; i < initialSize; ++i) {

createTaskCount++;

CreateConnectionTask task = new CreateConnectionTask(true);

this.createSchedulerFuture = createScheduler.submit(task);

}

} else if (!asyncInit) {

// init connections

while (poolingCount < initialSize) {

try {

PhysicalConnectionInfo pyConnectInfo = createPhysicalConnection();

DruidConnectionHolder holder = new DruidConnectionHolder(this, pyConnectInfo);

connections[poolingCount++] = holder;

} catch (SQLException ex) {

LOG.error("init datasource error, url: " + this.getUrl(), ex);

if (initExceptionThrow) {

connectError = ex;

break;

} else {

Thread.sleep(3000);

}

}

}

if (poolingCount > 0) {

poolingPeak = poolingCount;

poolingPeakTime = System.currentTimeMillis();

}

}

createAndLogThread();

createAndStartCreatorThread();

createAndStartDestroyThread();

initedLatch.await();

init = true;

initedTime = new Date();

registerMbean();

if (connectError != null && poolingCount == 0) {

throw connectError;

}

if (keepAlive) {

// async fill to minIdle

if (createScheduler != null) {

for (int i = 0; i < minIdle; ++i) {

createTaskCount++;

CreateConnectionTask task = new CreateConnectionTask(true);

this.createSchedulerFuture = createScheduler.submit(task);

}

} else {

this.emptySignal();

}

}

} catch (SQLException e) {

LOG.error("{dataSource-" + this.getID() + "} init error", e);

throw e;

} catch (InterruptedException e) {

throw new SQLException(e.getMessage(), e);

} catch (RuntimeException e){

LOG.error("{dataSource-" + this.getID() + "} init error", e);

throw e;

} catch (Error e){

LOG.error("{dataSource-" + this.getID() + "} init error", e);

throw e;

} finally {

inited = true;

lock.unlock();

if (init && LOG.isInfoEnabled()) {

String msg = "{dataSource-" + this.getID();

if (this.name != null && !this.name.isEmpty()) {

msg += ",";

msg += this.name;

}

msg += "} inited";

LOG.info(msg);

}

}

}

private void createAndLogThread() {

if (this.timeBetweenLogStatsMillis <= 0) {

return;

}

String threadName = "Druid-ConnectionPool-Log-" + System.identityHashCode(this);

logStatsThread = new LogStatsThread(threadName);

logStatsThread.start();

this.resetStatEnable = false;

}

protected void createAndStartDestroyThread() {

destroyTask = new DestroyTask();

if (destroyScheduler != null) {

long period = timeBetweenEvictionRunsMillis;

if (period <= 0) {

period = 1000;

}

destroySchedulerFuture = destroyScheduler.scheduleAtFixedRate(destroyTask, period, period,

TimeUnit.MILLISECONDS);

initedLatch.countDown();

return;

}

String threadName = "Druid-ConnectionPool-Destroy-" + System.identityHashCode(this);

destroyConnectionThread = new DestroyConnectionThread(threadName);

destroyConnectionThread.start();

}

protected void createAndStartCreatorThread() {

if (createScheduler == null) {

String threadName = "Druid-ConnectionPool-Create-" + System.identityHashCode(this);

createConnectionThread = new CreateConnectionThread(threadName);

createConnectionThread.start();

return;

}

initedLatch.countDown();

}
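After starting the creator and destroyer workers, `init()` blocks on `initedLatch.await()`. The sketch below illustrates that startup handshake. It rests on two assumptions: that `initedLatch` is a `CountDownLatch` with a count of 2 (it is declared outside this excerpt), and that each worker thread counts it down as soon as it starts running. `InitGateSketch` and its methods are hypothetical names, not Druid's API.

```java
import java.util.concurrent.CountDownLatch;

// Hypothetical sketch of the init() startup handshake: each background side counts a
// shared latch down once, and init() blocks on await() until both have done so.
class InitGateSketch {
    // Assumed count of 2: one for the creator side, one for the destroyer side.
    private final CountDownLatch initedLatch = new CountDownLatch(2);

    private void startWorker(String name) {
        Thread t = new Thread(() -> {
            initedLatch.countDown(); // report "started" before doing any work
            // a real worker would now loop, parking until it is signalled or interrupted
        }, name);
        t.setDaemon(true);
        t.start();
    }

    // With a scheduler there is no dedicated thread, so the latch is released directly,
    // as createAndStartCreatorThread() and createAndStartDestroyThread() do.
    private void startOrCountDown(boolean useScheduler, String name) {
        if (useScheduler) {
            initedLatch.countDown();
        } else {
            startWorker(name);
        }
    }

    // Call once per instance: a CountDownLatch cannot be reset.
    boolean init(boolean useScheduler) {
        startOrCountDown(useScheduler, "Sketch-Create");
        startOrCountDown(useScheduler, "Sketch-Destroy");
        try {
            initedLatch.await(); // returns once both sides have counted down
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
        return initedLatch.getCount() == 0;
    }
}
```

Because the latch only waits for the workers to *start*, `init()` does not wait for them to finish anything. The workers keep running, and parked, for the lifetime of the pool.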

/**

* load filters from SPI ServiceLoader

*

* @see ServiceLoader

*/

private void initFromSPIServiceLoader() {

if (loadSpifilterSkip) {

return;

}

if (autoFilters == null) {

List<Filter> filters = new ArrayList<Filter>();

ServiceLoader<Filter> autoFilterLoader = ServiceLoader.load(Filter.class);

for (Filter filter : autoFilterLoader) {

AutoLoad autoLoad = filter.getClass().getAnnotation(AutoLoad.class);

if (autoLoad != null && autoLoad.value()) {

filters.add(filter);

}

}

autoFilters = filters;

}

for (Filter filter : autoFilters) {

if (LOG.isInfoEnabled()) {

LOG.info("load filter from spi :" + filter.getClass().getName());

}

addFilter(filter);

}

}

private void initFromWrapDriverUrl() throws SQLException {

if (!jdbcUrl.startsWith(DruidDriver.DEFAULT_PREFIX)) {

return;

}

DataSourceProxyConfig config = DruidDriver.parseConfig(jdbcUrl, null);

this.driverClass = config.getRawDriverClassName();

LOG.error("error url : '" + jdbcUrl + "', it should be : '" + config.getRawUrl() + "'");

this.jdbcUrl = config.getRawUrl();

if (this.name == null) {

this.name = config.getName();

}

for (Filter filter : config.getFilters()) {

addFilter(filter);

}

}

/**

* Deduplicates: a filter whose class is already registered is skipped

*

* @param filter

*/

private void addFilter(Filter filter) {

boolean exists = false;

for (Filter initedFilter : this.filters) {

if (initedFilter.getClass() == filter.getClass()) {

exists = true;

break;

}

}

if (!exists) {

filter.init(this);

this.filters.add(filter);

}

}
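`addFilter` deduplicates by exact runtime class. A second filter of an already-registered class is neither initialized nor added, while a subclass counts as a different class. Here is a minimal sketch of that rule. `SketchFilter` is a hypothetical stand-in for Druid's `Filter` interface that keeps only an `init()` method.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in for Druid's Filter interface; only init() matters here.
interface SketchFilter {
    void init();
}

// Illustrates the addFilter rule: skip a filter whose exact runtime class is
// already registered; otherwise initialize it and append it.
class FilterRegistrySketch {
    final List<SketchFilter> filters = new ArrayList<>();

    boolean addFilter(SketchFilter filter) {
        for (SketchFilter existing : filters) {
            if (existing.getClass() == filter.getClass()) {
                return false; // duplicate class: not initialized, not added
            }
        }
        filter.init();
        filters.add(filter);
        return true;
    }
}

// Hypothetical filter that counts how often it was initialized.
class CountingSketchFilter implements SketchFilter {
    int initCalls;

    @Override
    public void init() {
        initCalls++;
    }
}
```

This is why filters loaded from the SPI `ServiceLoader` and filters parsed from a wrapped driver URL can both call `addFilter` without double-initializing the same filter type.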

private void validationQueryCheck() {

if (!(testOnBorrow || testOnReturn || testWhileIdle)) {

return;

}

if (this.validConnectionChecker != null) {

return;

}

if (this.validationQuery != null && this.validationQuery.length() > 0) {

return;

}

String errorMessage = "";

if (testOnBorrow) {

errorMessage += "testOnBorrow is true, ";

}

if (testOnReturn) {

errorMessage += "testOnReturn is true, ";

}

if (testWhileIdle) {

errorMessage += "testWhileIdle is true, ";

}

LOG.error(errorMessage + "validationQuery not set");

}

protected void initCheck() throws SQLException {

if (JdbcUtils.ORACLE.equals(this.dbType)) {

isOracle = true;

if (driver.getMajorVersion() < 10) {

throw new SQLException("not support oracle driver " + driver.getMajorVersion() + "."

+ driver.getMinorVersion());

}

if (driver.getMajorVersion() == 10 && isUseOracleImplicitCache()) {

this.getConnectProperties().setProperty("oracle.jdbc.FreeMemoryOnEnterImplicitCache", "true");

}

oracleValidationQueryCheck();

} else if (JdbcUtils.DB2.equals(dbType)) {

db2ValidationQueryCheck();

} else if (JdbcUtils.MYSQL.equals(this.dbType)

|| JdbcUtils.MYSQL_DRIVER_6.equals(this.dbType)) {

isMySql = true;

}

if (removeAbandoned) {

LOG.warn("removeAbandoned is true, not use in productiion.");

}

}

private void oracleValidationQueryCheck() {

if (validationQuery == null) {

return;

}

if (validationQuery.length() == 0) {

return;

}

SQLStatementParser sqlStmtParser = SQLParserUtils.createSQLStatementParser(validationQuery, this.dbType);

List<SQLStatement> stmtList = sqlStmtParser.parseStatementList();

if (stmtList.size() != 1) {

return;

}

SQLStatement stmt = stmtList.get(0);

if (!(stmt instanceof SQLSelectStatement)) {

return;

}

SQLSelectQuery query = ((SQLSelectStatement) stmt).getSelect().getQuery();

if (query instanceof SQLSelectQueryBlock) {

if (((SQLSelectQueryBlock) query).getFrom() == null) {

LOG.error("invalid oracle validationQuery. " + validationQuery + ", may should be : " + validationQuery

+ " FROM DUAL");

}

}

}

private void db2ValidationQueryCheck() {

if (validationQuery == null) {

return;

}

if (validationQuery.length() == 0) {

return;

}

SQLStatementParser sqlStmtParser = SQLParserUtils.createSQLStatementParser(validationQuery, this.dbType);

List<SQLStatement> stmtList = sqlStmtParser.parseStatementList();

if (stmtList.size() != 1) {

return;

}

SQLStatement stmt = stmtList.get(0);

if (!(stmt instanceof SQLSelectStatement)) {

return;

}

SQLSelectQuery query = ((SQLSelectStatement) stmt).getSelect().getQuery();

if (query instanceof SQLSelectQueryBlock) {

if (((SQLSelectQueryBlock) query).getFrom() == null) {

LOG.error("invalid db2 validationQuery. " + validationQuery + ", may should be : " + validationQuery

+ " FROM SYSDUMMY");

}

}

}

private void initValidConnectionChecker() {

if (this.validConnectionChecker != null) {

return;

}

String realDriverClassName = driver.getClass().getName();

if (JdbcUtils.isMySqlDriver(realDriverClassName)) {

this.validConnectionChecker = new MySqlValidConnectionChecker();

} else if (realDriverClassName.equals(JdbcConstants.ORACLE_DRIVER)

|| realDriverClassName.equals(JdbcConstants.ORACLE_DRIVER2)) {

this.validConnectionChecker = new OracleValidConnectionChecker();

} else if (realDriverClassName.equals(JdbcConstants.SQL_SERVER_DRIVER)

|| realDriverClassName.equals(JdbcConstants.SQL_SERVER_DRIVER_SQLJDBC4)

|| realDriverClassName.equals(JdbcConstants.SQL_SERVER_DRIVER_JTDS)) {

this.validConnectionChecker = new MSSQLValidConnectionChecker();

} else if (realDriverClassName.equals(JdbcConstants.POSTGRESQL_DRIVER)

|| realDriverClassName.equals(JdbcConstants.ENTERPRISEDB_DRIVER)) {

this.validConnectionChecker = new PGValidConnectionChecker();

}

}

private void initExceptionSorter() {

if (exceptionSorter instanceof NullExceptionSorter) {

if (driver instanceof MockDriver) {

return;

}

} else if (this.exceptionSorter != null) {

return;

}

for (Class<?> driverClass = driver.getClass();;) {

String realDriverClassName = driverClass.getName();

if (realDriverClassName.equals(JdbcConstants.MYSQL_DRIVER) //

|| realDriverClassName.equals(JdbcConstants.MYSQL_DRIVER_6)) {

this.exceptionSorter = new MySqlExceptionSorter();

this.isMySql = true;

} else if (realDriverClassName.equals(JdbcConstants.ORACLE_DRIVER)

|| realDriverClassName.equals(JdbcConstants.ORACLE_DRIVER2)) {

this.exceptionSorter = new OracleExceptionSorter();

} else if (realDriverClassName.equals("com.informix.jdbc.IfxDriver")) {

this.exceptionSorter = new InformixExceptionSorter();

} else if (realDriverClassName.equals("com.sybase.jdbc2.jdbc.SybDriver")) {

this.exceptionSorter = new SybaseExceptionSorter();

} else if (realDriverClassName.equals(JdbcConstants.POSTGRESQL_DRIVER)

|| realDriverClassName.equals(JdbcConstants.ENTERPRISEDB_DRIVER)) {

this.exceptionSorter = new PGExceptionSorter();

} else if (realDriverClassName.equals("com.alibaba.druid.mock.MockDriver")) {

this.exceptionSorter = new MockExceptionSorter();

} else if (realDriverClassName.contains("DB2")) {

this.exceptionSorter = new DB2ExceptionSorter();

} else {

Class<?> superClass = driverClass.getSuperclass();

if (superClass != null && superClass != Object.class) {

driverClass = superClass;

continue;

}

}

break;

}

}
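`initExceptionSorter` identifies the driver by class name. If nothing matches, it retries with the driver's superclass and stops once it reaches `Object`. The sketch below isolates that lookup strategy only. The map from driver name to sorter name replaces the hard-coded if/else chain (the real chain also has a `contains("DB2")` test, which this exact-name map does not model), and the driver classes are hypothetical.

```java
import java.util.Map;

// Hypothetical driver hierarchy used only to exercise the lookup.
class BaseDriverSketch {}

class VendorDriverSketch extends BaseDriverSketch {}

// Sketch of the strategy in initExceptionSorter: match the driver's class name,
// and if nothing matches, retry with its superclass until Object is reached.
final class SorterLookupSketch {
    private SorterLookupSketch() {}

    static String findSorter(Class<?> driverClass, Map<String, String> sorterByDriverName) {
        for (Class<?> c = driverClass; c != null && c != Object.class; c = c.getSuperclass()) {
            String sorter = sorterByDriverName.get(c.getName());
            if (sorter != null) {
                return sorter; // first (most specific) match wins
            }
        }
        return null; // no match: nothing is assigned
    }
}
```

Walking the superclass chain lets a driver that merely subclasses a known vendor driver still get that vendor's exception sorter.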

@Override

public DruidPooledConnection getConnection() throws SQLException {

return getConnection(maxWait);

}

public DruidPooledConnection getConnection(long maxWaitMillis) throws SQLException {

init();

if (filters.size() > 0) {

FilterChainImpl filterChain = new FilterChainImpl(this);

return filterChain.dataSource_connect(this, maxWaitMillis);

} else {

return getConnectionDirect(maxWaitMillis);

}

}

@Override

public PooledConnection getPooledConnection() throws SQLException {

return getConnection(maxWait);

}

@Override

public PooledConnection getPooledConnection(String user, String password) throws SQLException {

throw new UnsupportedOperationException("Not supported by DruidDataSource");

}

public DruidPooledConnection getConnectionDirect(long maxWaitMillis) throws SQLException {

int notFullTimeoutRetryCnt = 0;

for (;;) {

// handle notFullTimeoutRetry

DruidPooledConnection poolableConnection;

try {

poolableConnection = getConnectionInternal(maxWaitMillis);

} catch (GetConnectionTimeoutException ex) {

if (notFullTimeoutRetryCnt <= this.notFullTimeoutRetryCount && !isFull()) {

notFullTimeoutRetryCnt++;

if (LOG.isWarnEnabled()) {

LOG.warn("get connection timeout retry : " + notFullTimeoutRetryCnt);

}

continue;

}

throw ex;

}

if (testOnBorrow) {

boolean validate = testConnectionInternal(poolableConnection.holder, poolableConnection.conn);

if (!validate) {

if (LOG.isDebugEnabled()) {

LOG.debug("skip not validate connection.");

}

Connection realConnection = poolableConnection.conn;

discardConnection(realConnection);

continue;

}

} else {

Connection realConnection = poolableConnection.conn;

if (poolableConnection.conn.isClosed()) {

discardConnection(null); // pass null to avoid closing the connection twice

continue;

}

if (testWhileIdle) {

final DruidConnectionHolder holder = poolableConnection.holder;

long currentTimeMillis = System.currentTimeMillis();

long lastActiveTimeMillis = holder.lastActiveTimeMillis;

long lastKeepTimeMillis = holder.lastKeepTimeMillis;

if (lastKeepTimeMillis > lastActiveTimeMillis) {

lastActiveTimeMillis = lastKeepTimeMillis;

}

long idleMillis = currentTimeMillis - lastActiveTimeMillis;

long timeBetweenEvictionRunsMillis = this.timeBetweenEvictionRunsMillis;

if (timeBetweenEvictionRunsMillis <= 0) {

timeBetweenEvictionRunsMillis = DEFAULT_TIME_BETWEEN_EVICTION_RUNS_MILLIS;

}

if (idleMillis >= timeBetweenEvictionRunsMillis

|| idleMillis < 0 // unexpected branch

) {

boolean validate = testConnectionInternal(poolableConnection.holder, poolableConnection.conn);

if (!validate) {

if (LOG.isDebugEnabled()) {

LOG.debug("skip not validate connection.");

}

discardConnection(realConnection);

continue;

}

}

}

}

if (removeAbandoned) {

StackTraceElement[] stackTrace = Thread.currentThread().getStackTrace();

poolableConnection.connectStackTrace = stackTrace;

poolableConnection.setConnectedTimeNano();

poolableConnection.traceEnable = true;

activeConnectionLock.lock();

try {

activeConnections.put(poolableConnection, PRESENT);

} finally {

activeConnectionLock.unlock();

}

}

if (!this.defaultAutoCommit) {

poolableConnection.setAutoCommit(false);

}

return poolableConnection;

}

}
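When `testWhileIdle` is on and `testOnBorrow` is off, `getConnectionDirect` validates a borrowed connection only if it has been idle for at least `timeBetweenEvictionRunsMillis`. Idle time is measured from the later of `lastActiveTimeMillis` and `lastKeepTimeMillis`. The decision can be isolated as a pure function. In this sketch, `IdleCheckSketch` is a hypothetical name and `defaultIntervalMillis` stands in for `DEFAULT_TIME_BETWEEN_EVICTION_RUNS_MILLIS`, whose value is defined outside this excerpt.

```java
// Sketch of the testWhileIdle decision in getConnectionDirect(): re-validate only if the
// connection has been idle for at least one eviction interval, counting from its last
// use or last keep-alive, whichever is later. A negative idle time (the source's
// "unexpected branch") also forces validation.
final class IdleCheckSketch {
    private IdleCheckSketch() {}

    static boolean needsValidation(long nowMillis, long lastActiveMillis, long lastKeepMillis,
                                   long timeBetweenEvictionRunsMillis, long defaultIntervalMillis) {
        long lastUsed = Math.max(lastActiveMillis, lastKeepMillis);
        long idleMillis = nowMillis - lastUsed;
        // a non-positive configured interval falls back to the default, as in the source
        long interval = timeBetweenEvictionRunsMillis > 0
                ? timeBetweenEvictionRunsMillis
                : defaultIntervalMillis;
        return idleMillis >= interval || idleMillis < 0;
    }
}
```

This keeps the common borrow path cheap: a recently used connection is handed out without a round trip to the database.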

/**

* Discards the connection: it is closed rather than recycled back into the pool

*

* @param realConnection

*/

public void discardConnection(Connection realConnection) {

JdbcUtils.close(realConnection);

lock.lock();

try {

activeCount--;

discardCount++;

if (activeCount <= minIdle) {

emptySignal();

}

} finally {

lock.unlock();

}

}

private DruidPooledConnection getConnectionInternal(long maxWait) throws SQLException {

if (closed) {

connectErrorCountUpdater.incrementAndGet(this);

throw new DataSourceClosedException("dataSource already closed at " + new Date(closeTimeMillis));

}

if (!enable) {

connectErrorCountUpdater.incrementAndGet(this);

throw new DataSourceDisableException();

}

final long nanos = TimeUnit.MILLISECONDS.toNanos(maxWait);

final int maxWaitThreadCount = this.maxWaitThreadCount;

DruidConnectionHolder holder;

for (boolean createDirect = false;;) {

if (createDirect) {

createStartNanosUpdater.set(this, System.nanoTime());

if (creatingCountUpdater.compareAndSet(this, 0, 1)) {

PhysicalConnectionInfo pyConnInfo = DruidDataSource.this.createPhysicalConnection();

holder = new DruidConnectionHolder(this, pyConnInfo);

holder.lastActiveTimeMillis = System.currentTimeMillis();

creatingCountUpdater.decrementAndGet(this);

directCreateCountUpdater.incrementAndGet(this);

if (LOG.isDebugEnabled()) {

LOG.debug("conn-direct_create ");

}

boolean discard = false;

lock.lock();

try {

if (activeCount < maxActive) {

activeCount++;

if (activeCount > activePeak) {

activePeak = activeCount;

activePeakTime = System.currentTimeMillis();

}

break;

} else {

discard = true;

}

} finally {

lock.unlock();

}

if (discard) {

JdbcUtils.close(pyConnInfo.getPhysicalConnection());

}

}

}

try {

lock.lockInterruptibly();

} catch (InterruptedException e) {

connectErrorCountUpdater.incrementAndGet(this);

throw new SQLException("interrupt", e);

}

try {

if (maxWaitThreadCount > 0

&& notEmptyWaitThreadCount >= maxWaitThreadCount) {

connectErrorCountUpdater.incrementAndGet(this);

throw new SQLException("maxWaitThreadCount " + maxWaitThreadCount + ", current wait Thread count "

+ lock.getQueueLength());

}

if (onFatalError

&& onFatalErrorMaxActive > 0

&& activeCount >= onFatalErrorMaxActive) {

connectErrorCountUpdater.incrementAndGet(this);

StringBuilder errorMsg = new StringBuilder();

errorMsg.append("onFatalError, activeCount ")

.append(activeCount)

.append(", onFatalErrorMaxActive ")

.append(onFatalErrorMaxActive);

if (lastFatalErrorTimeMillis > 0) {

errorMsg.append(", time '")

.append(StringUtils.formatDateTime19(

lastFatalErrorTimeMillis, TimeZone.getDefault()))

.append("'");

}

if (lastFatalErrorSql != null) {

errorMsg.append(", sql \n")

.append(lastFatalErrorSql);

}

throw new SQLException(

errorMsg.toString(), lastFatalError);

}

connectCount++;

if (createScheduler != null

&& poolingCount == 0

&& activeCount < maxActive

&& creatingCountUpdater.get(this) == 0

&& createScheduler instanceof ScheduledThreadPoolExecutor) {

ScheduledThreadPoolExecutor executor = (ScheduledThreadPoolExecutor) createScheduler;

if (executor.getQueue().size() > 0) {

createDirect = true;

continue;

}

}

if (maxWait > 0) {

holder = pollLast(nanos);

} else {

holder = takeLast();

}

if (holder != null) {

activeCount++;

if (activeCount > activePeak) {

activePeak = activeCount;

activePeakTime = System.currentTimeMillis();

}

}

} catch (InterruptedException e) {

connectErrorCountUpdater.incrementAndGet(this);

throw new SQLException(e.getMessage(), e);

} catch (SQLException e) {

connectErrorCountUpdater.incrementAndGet(this);

throw e;

} finally {

lock.unlock();

}

break;

}

if (holder == null) {

long waitNanos = waitNanosLocal.get();

StringBuilder buf = new StringBuilder(128);

buf.append("wait millis ")//

.append(waitNanos / (1000 * 1000))//

.append(", active ").append(activeCount)//

.append(", maxActive ").append(maxActive)//

.append(", creating ").append(creatingCount)//

;

if (creatingCount > 0 && createStartNanos > 0) {

long createElapseMillis = (System.nanoTime() - createStartNanos) / (1000 * 1000);

if (createElapseMillis > 0) {

buf.append(", createElapseMillis ").append(createElapseMillis);

}

}

if (createErrorCount > 0) {

buf.append(", createErrorCount ").append(createErrorCount);

}

List<JdbcSqlStatValue> sqlList = this.getDataSourceStat().getRuningSqlList();

for (int i = 0; i < sqlList.size(); ++i) {

if (i != 0) {

buf.append('\n');

} else {

buf.append(", ");

}

JdbcSqlStatValue sql = sqlList.get(i);

buf.append("runningSqlCount ").append(sql.getRunningCount());

buf.append(" : ");

buf.append(sql.getSql());

}

String errorMessage = buf.toString();

if (this.createError != null) {

throw new GetConnectionTimeoutException(errorMessage, createError);

} else {

throw new GetConnectionTimeoutException(errorMessage);

}

}

holder.incrementUseCount();

DruidPooledConnection poolalbeConnection = new DruidPooledConnection(holder);

return poolalbeConnection;

}

public void handleConnectionException(DruidPooledConnection pooledConnection, Throwable t, String sql) throws SQLException {

final DruidConnectionHolder holder = pooledConnection.getConnectionHolder();

errorCountUpdater.incrementAndGet(this);

lastError = t;

lastErrorTimeMillis = System.currentTimeMillis();

if (t instanceof SQLException) {

SQLException sqlEx = (SQLException) t;

// broadcastConnectionError

ConnectionEvent event = new ConnectionEvent(pooledConnection, sqlEx);

for (ConnectionEventListener eventListener : holder.getConnectionEventListeners()) {

eventListener.connectionErrorOccurred(event);

}

// exceptionSorter.isExceptionFatal

if (exceptionSorter != null && exceptionSorter.isExceptionFatal(sqlEx)) {

handleFatalError(pooledConnection, sqlEx, sql);

}

throw sqlEx;

} else {

throw new SQLException("Error", t);

}

}

protected final void handleFatalError(DruidPooledConnection conn, SQLException error, String sql) throws SQLException {

final DruidConnectionHolder holder = conn.holder;

if (conn.isTraceEnable()) {

activeConnectionLock.lock();

try {

if (conn.isTraceEnable()) {

activeConnections.remove(conn);

conn.setTraceEnable(false);

}

} finally {

activeConnectionLock.unlock();

}

}

long lastErrorTimeMillis = this.lastErrorTimeMillis;

if (lastErrorTimeMillis == 0) {

lastErrorTimeMillis = System.currentTimeMillis();

}

if (sql != null && sql.length() > 1024) {

sql = sql.substring(0, 1024);

}

boolean requireDiscard = false;

final ReentrantLock lock = conn.lock;

lock.lock();

try {

if ((!conn.isClosed()) || !conn.isDisable()) {

holder.setDiscard(true);

conn.disable(error);

requireDiscard = true;

}

lastFatalErrorTimeMillis = lastErrorTimeMillis;

onFatalError = true;

lastFatalError = error;

lastFatalErrorSql = sql;

} finally {

lock.unlock();

}

if (requireDiscard) {

if (holder.statementTrace != null) {

holder.lock.lock();

try {

for (Statement stmt : holder.statementTrace) {

JdbcUtils.close(stmt);

}

} finally {

holder.lock.unlock();

}

}

this.discardConnection(holder.getConnection());

holder.setDiscard(true);

}

LOG.error("discard connection", error);

}

/**

* Recycles the connection back into the pool

*/

protected void recycle(DruidPooledConnection pooledConnection) throws SQLException {

final DruidConnectionHolder holder = pooledConnection.holder;

if (holder == null) {

LOG.warn("connectionHolder is null");

return;

}

if (logDifferentThread //

&& (!isAsyncCloseConnectionEnable()) //

&& pooledConnection.ownerThread != Thread.currentThread()//

) {

LOG.warn("get/close not same thread");

}

final Connection physicalConnection = holder.conn;

if (pooledConnection.traceEnable) {

Object oldInfo = null;

activeConnectionLock.lock();

try {

if (pooledConnection.traceEnable) {

oldInfo = activeConnections.remove(pooledConnection);

pooledConnection.traceEnable = false;

}

} finally {

activeConnectionLock.unlock();

}

if (oldInfo == null) {

if (LOG.isWarnEnabled()) {

LOG.warn("remove abandonded failed. activeConnections.size " + activeConnections.size());

}

}

}

final boolean isAutoCommit = holder.underlyingAutoCommit;

final boolean isReadOnly = holder.underlyingReadOnly;

final boolean testOnReturn = this.testOnReturn;

try {

// check need to rollback?

if ((!isAutoCommit) && (!isReadOnly)) {

pooledConnection.rollback();

}

// reset holder, restore default settings, clear warnings

boolean isSameThread = pooledConnection.ownerThread == Thread.currentThread();

if (!isSameThread) {

final ReentrantLock lock = pooledConnection.lock;

lock.lock();

try {

holder.reset();

} finally {

lock.unlock();

}

} else {

holder.reset();

}

if (holder.discard) {

return;

}

if (phyMaxUseCount > 0 && holder.useCount >= phyMaxUseCount) {

discardConnection(holder.conn);

return;

}

if (physicalConnection.isClosed()) {

lock.lock();

try {

activeCount--;

closeCount++;

} finally {

lock.unlock();

}

return;

}

if (testOnReturn) {

boolean validate = testConnectionInternal(holder, physicalConnection);

if (!validate) {

JdbcUtils.close(physicalConnection);

destroyCountUpdater.incrementAndGet(this);

lock.lock();

try {

activeCount--;

closeCount++;

} finally {

lock.unlock();

}

return;

}

}

if (!enable) {

discardConnection(holder.conn);

return;

}

boolean result;

final long currentTimeMillis = System.currentTimeMillis();

if (phyTimeoutMillis > 0) {

long phyConnectTimeMillis = currentTimeMillis - holder.connectTimeMillis;

if (phyConnectTimeMillis > phyTimeoutMillis) {

discardConnection(holder.conn);

return;

}

}

lock.lock();

try {

activeCount--;

closeCount++;

result = putLast(holder, currentTimeMillis);

recycleCount++;

} finally {

lock.unlock();

}

if (!result) {

JdbcUtils.close(holder.conn);

LOG.info("connection recyle failed.");

}

} catch (Throwable e) {

holder.clearStatementCache();

if (!holder.discard) {

this.discardConnection(physicalConnection);

holder.discard = true;

}

LOG.error("recyle error", e);

recycleErrorCountUpdater.incrementAndGet(this);

}

}

public long getRecycleErrorCount() {

return recycleErrorCount;

}

public void clearStatementCache() throws SQLException {

lock.lock();

try {

for (int i = 0; i < poolingCount; ++i) {

DruidConnectionHolder conn = connections[i];

if (conn.statementPool != null) {

conn.statementPool.clear();

}

}

} finally {

lock.unlock();

}

}

/**

* close datasource

*/

public void close() {

if (LOG.isInfoEnabled()) {

LOG.info("{dataSource-" + this.getID() + "} closing ...");

}

lock.lock();

try {

if (this.closed) {

return;

}

if (!this.inited) {

return;

}

this.closing = true;

if (logStatsThread != null) {

logStatsThread.interrupt();

}

if (createConnectionThread != null) {

createConnectionThread.interrupt();

}

if (destroyConnectionThread != null) {

destroyConnectionThread.interrupt();

}

if (createSchedulerFuture != null) {

createSchedulerFuture.cancel(true);

}

if (destroySchedulerFuture != null) {

destroySchedulerFuture.cancel(true);

}

for (int i = 0; i < poolingCount; ++i) {

DruidConnectionHolder connHolder = connections[i];

for (PreparedStatementHolder stmtHolder : connHolder.getStatementPool().getMap().values()) {

connHolder.getStatementPool().closeRemovedStatement(stmtHolder);

}

connHolder.getStatementPool().getMap().clear();

Connection physicalConnection = connHolder.getConnection();

try {

physicalConnection.close();

} catch (Exception ex) {

LOG.warn("close connection error", ex);

}

connections[i] = null;

destroyCountUpdater.incrementAndGet(this);

}

poolingCount = 0;

unregisterMbean();

enable = false;

notEmpty.signalAll();

notEmptySignalCount++;

this.closed = true;

this.closeTimeMillis = System.currentTimeMillis();

for (Filter filter : filters) {

filter.destroy();

}

} finally {

lock.unlock();

}

if (LOG.isInfoEnabled()) {

LOG.info("{dataSource-" + this.getID() + "} closed");

}

}
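`close()` is what stops the pool's background threads. It interrupts `createConnectionThread` and `destroyConnectionThread`, or cancels the scheduler futures. A thread waiting on a `Condition` shows up in a thread dump with the same `sun.misc.Unsafe.park` / `LockSupport.park` frames reported for `CreateConnectionThread` in the Tomcat log above. It stays in that state until it is signalled or interrupted. If the data source is never closed when the web application stops, Tomcat's `clearReferencesThreads` check reports such threads. The sketch below, with hypothetical names, shows a parked worker leaving that state on interrupt. The `join()` is only there so the example can check the outcome, because Druid's `close()` above does not wait for its threads.

```java
import java.util.concurrent.locks.Condition;
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical worker that parks on a Condition the way a pool's creator thread does
// while no connection is requested. Only an interrupt (or a signal) moves it.
class ParkedWorkerSketch {
    private final ReentrantLock lock = new ReentrantLock();
    private final Condition empty = lock.newCondition();
    private final Thread worker = new Thread(this::runLoop, "Sketch-ConnectionPool-Create");

    private void runLoop() {
        lock.lock();
        try {
            for (;;) {
                empty.await(); // parks in LockSupport.park until signalled or interrupted
            }
        } catch (InterruptedException e) {
            // interrupted by close(): leave the loop so the thread can terminate
        } finally {
            lock.unlock();
        }
    }

    void start() {
        worker.setDaemon(true); // keeps a failed demo from blocking JVM exit
        worker.start();
    }

    // Like close(): interrupt the worker. The bounded join is added for the demo only.
    boolean close() {
        worker.interrupt();
        try {
            worker.join(5_000);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return !worker.isAlive();
    }
}
```

In other words, a parked Druid thread is normal while the pool is alive. The Tomcat warning only means the pool was not closed during undeploy.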

public void registerMbean() {

if (!mbeanRegistered) {

AccessController.doPrivileged(new PrivilegedAction<Object>() {

@Override

public Object run() {

ObjectName objectName = DruidDataSourceStatManager.addDataSource(DruidDataSource.this,

DruidDataSource.this.name);

DruidDataSource.this.setObjectName(objectName);

DruidDataSource.this.mbeanRegistered = true;

return null;

}

});

}

}

public void unregisterMbean() {

if (mbeanRegistered) {

AccessController.doPrivileged(new PrivilegedAction<Object>() {

@Override

public Object run() {

DruidDataSourceStatManager.removeDataSource(DruidDataSource.this);

DruidDataSource.this.mbeanRegistered = false;

return null;

}

});

}

}

public boolean isMbeanRegistered() {

return mbeanRegistered;

}

boolean putLast(DruidConnectionHolder e, long lastActiveTimeMillis) {

if (poolingCount >= maxActive) {

return false;

}

e.lastActiveTimeMillis = lastActiveTimeMillis;

connections[poolingCount] = e;

incrementPoolingCount();

if (poolingCount > poolingPeak) {

poolingPeak = poolingCount;

poolingPeakTime = lastActiveTimeMillis;

}

notEmpty.signal();

notEmptySignalCount++;

return true;

}

DruidConnectionHolder takeLast() throws InterruptedException, SQLException {

try {

while (poolingCount == 0) {

emptySignal(); // send signal to CreateThread create connection

if (failFast && isFailContinuous()) {

throw new DataSourceNotAvailableException(createError);

}

notEmptyWaitThreadCount++;

if (notEmptyWaitThreadCount > notEmptyWaitThreadPeak) {

notEmptyWaitThreadPeak = notEmptyWaitThreadCount;

}

try {

notEmpty.await(); // signal by recycle or creator

} finally {

notEmptyWaitThreadCount--;

}

notEmptyWaitCount++;

if (!enable) {

connectErrorCountUpdater.incrementAndGet(this);

throw new DataSourceDisableException();

}

}

} catch (InterruptedException ie) {

notEmpty.signal(); // propagate to non-interrupted thread

notEmptySignalCount++;

throw ie;

}

decrementPoolingCount();

DruidConnectionHolder last = connections[poolingCount];

connections[poolingCount] = null;

return last;

}

private DruidConnectionHolder pollLast(long nanos) throws InterruptedException, SQLException {

long estimate = nanos;

for (;;) {

if (poolingCount == 0) {

emptySignal(); // send signal to CreateThread create connection

if (failFast && isFailContinuous()) {

throw new DataSourceNotAvailableException(createError);

}

if (estimate <= 0) {

waitNanosLocal.set(nanos - estimate);

return null;

}

notEmptyWaitThreadCount++;

if (notEmptyWaitThreadCount > notEmptyWaitThreadPeak) {

notEmptyWaitThreadPeak = notEmptyWaitThreadCount;

}

try {

long startEstimate = estimate;

estimate = notEmpty.awaitNanos(estimate); // signal by recycle or creator

notEmptyWaitCount++;

notEmptyWaitNanos += (startEstimate - estimate);

if (!enable) {

connectErrorCountUpdater.incrementAndGet(this);

throw new DataSourceDisableException();

}

} catch (InterruptedException ie) {

notEmpty.signal(); // propagate to non-interrupted thread

notEmptySignalCount++;

throw ie;

} finally {

notEmptyWaitThreadCount--;

}

if (poolingCount == 0) {

if (estimate > 0) {

continue;

}

waitNanosLocal.set(nanos - estimate);

return null;

}

}

decrementPoolingCount();

DruidConnectionHolder last = connections[poolingCount];

connections[poolingCount] = null;

long waitNanos = nanos - estimate;

last.setLastNotEmptyWaitNanos(waitNanos);

return last;

}

}

private final void decrementPoolingCount() {

poolingCount--;

}

private final void incrementPoolingCount() {

poolingCount++;

}
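Together, `putLast`, `takeLast` and `pollLast` treat `connections[]` as a stack guarded by `lock`. Returned connections are pushed on top, and borrowers pop the most recent one. When the pool is empty, a borrower waits on `notEmpty`, using `awaitNanos` when `maxWait > 0`. A waiting borrower that gets interrupted re-signals `notEmpty` so that a wakeup meant for it is passed on to another waiter. The generic sketch below shows that discipline. Its names are hypothetical, and it leaves out Druid's statistics, `emptySignal()` and `failFast` handling.

```java
import java.util.concurrent.TimeUnit;
import java.util.concurrent.locks.Condition;
import java.util.concurrent.locks.ReentrantLock;

// Minimal LIFO pool in the style of putLast/pollLast: items live in a fixed array,
// the most recently returned item is handed out first, and borrowers wait on a
// notEmpty condition with a deadline. T stands in for a connection holder.
class LifoPoolSketch<T> {
    private final ReentrantLock lock = new ReentrantLock();
    private final Condition notEmpty = lock.newCondition();
    private final Object[] items;
    private int poolingCount;

    LifoPoolSketch(int maxActive) {
        items = new Object[maxActive];
    }

    boolean putLast(T item) {
        lock.lock();
        try {
            if (poolingCount >= items.length) {
                return false; // full: the caller must close the item itself
            }
            items[poolingCount++] = item;
            notEmpty.signal(); // wake one waiting borrower
            return true;
        } finally {
            lock.unlock();
        }
    }

    @SuppressWarnings("unchecked")
    T pollLast(long timeout, TimeUnit unit) throws InterruptedException {
        long nanos = unit.toNanos(timeout);
        lock.lockInterruptibly();
        try {
            while (poolingCount == 0) {
                if (nanos <= 0) {
                    return null; // timed out
                }
                try {
                    nanos = notEmpty.awaitNanos(nanos);
                } catch (InterruptedException ie) {
                    notEmpty.signal(); // propagate to a non-interrupted waiter
                    throw ie;
                }
            }
            T last = (T) items[--poolingCount];
            items[poolingCount] = null; // clear the slot so it does not pin the item
            return last;
        } finally {
            lock.unlock();
        }
    }
}
```

Handing out the most recently returned connection first keeps a small set of connections warm and lets the rest sit idle long enough for the destroy task to evict them.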

@Override

public Connection getConnection(String username, String password) throws SQLException {

if (this.username == null

&& this.password == null

&& username != null

&& password != null) {

this.username = username;

this.password = password;

return getConnection();

}

if (!StringUtils.equals(username, this.username)) {

throw new UnsupportedOperationException("Not supported by DruidDataSource");

}

if (!StringUtils.equals(password, this.password)) {

throw new UnsupportedOperationException("Not supported by DruidDataSource");

}

return getConnection();

}

public long getCreateCount() {

return createCount;

}

public long getDestroyCount() {

return destroyCount;

}

public long getConnectCount() {

lock.lock();

try {

return connectCount;

} finally {

lock.unlock();

}

}

public long getCloseCount() {

return closeCount;

}

public long getConnectErrorCount() {

return connectErrorCountUpdater.get(this);

}

@Override

public int getPoolingCount() {

lock.lock();

try {

return poolingCount;

} finally {

lock.unlock();

}

}

public int getPoolingPeak() {

lock.lock();

try {

return poolingPeak;

} finally {

lock.unlock();

}

}

public Date getPoolingPeakTime() {

if (poolingPeakTime <= 0) {

return null;

}

return new Date(poolingPeakTime);

}

public long getRecycleCount() {

return recycleCount;

}

public int getActiveCount() {

lock.lock();

try {

return activeCount;

} finally {

lock.unlock();

}

}

public void logStats() {

final DruidDataSourceStatLogger statLogger = this.statLogger;

if (statLogger == null) {

return;

}

DruidDataSourceStatValue statValue = getStatValueAndReset();

statLogger.log(statValue);

}

public DruidDataSourceStatValue getStatValueAndReset() {

DruidDataSourceStatValue value = new DruidDataSourceStatValue();

lock.lock();

try {

value.setPoolingCount(this.poolingCount);

value.setPoolingPeak(this.poolingPeak);

value.setPoolingPeakTime(this.poolingPeakTime);

value.setActiveCount(this.activeCount);

value.setActivePeak(this.activePeak);

value.setActivePeakTime(this.activePeakTime);

value.setConnectCount(this.connectCount);

value.setCloseCount(this.closeCount);

value.setWaitThreadCount(lock.getWaitQueueLength(notEmpty));

value.setNotEmptyWaitCount(this.notEmptyWaitCount);

value.setNotEmptyWaitNanos(this.notEmptyWaitNanos);

value.setKeepAliveCheckCount(this.keepAliveCheckCount);

// reset

this.poolingPeak = 0;

this.poolingPeakTime = 0;

this.activePeak = 0;

this.activePeakTime = 0;

this.connectCount = 0;

this.closeCount = 0;

this.keepAliveCheckCount = 0;

this.notEmptyWaitCount = 0;

this.notEmptyWaitNanos = 0;

} finally {

lock.unlock();

}

value.setName(this.getName());

value.setDbType(this.dbType);

value.setDriverClassName(this.getDriverClassName());

value.setUrl(this.getUrl());

        value.setUserName(this.getUsername());
        value.setFilterClassNames(this.getFilterClassNames());
        value.setInitialSize(this.getInitialSize());
        value.setMinIdle(this.getMinIdle());
        value.setMaxActive(this.getMaxActive());
        value.setQueryTimeout(this.getQueryTimeout());
        value.setTransactionQueryTimeout(this.getTransactionQueryTimeout());
        value.setLoginTimeout(this.getLoginTimeout());
        value.setValidConnectionCheckerClassName(this.getValidConnectionCheckerClassName());
        value.setExceptionSorterClassName(this.getExceptionSorterClassName());
        value.setTestOnBorrow(this.testOnBorrow);
        value.setTestOnReturn(this.testOnReturn);
        value.setTestWhileIdle(this.testWhileIdle);
        value.setDefaultAutoCommit(this.isDefaultAutoCommit());
        if (defaultReadOnly != null) {
            value.setDefaultReadOnly(defaultReadOnly);
        }
        value.setDefaultTransactionIsolation(this.getDefaultTransactionIsolation());

        value.setLogicConnectErrorCount(connectErrorCountUpdater.getAndSet(this, 0));
        value.setPhysicalConnectCount(createCountUpdater.getAndSet(this, 0));
        value.setPhysicalCloseCount(destroyCountUpdater.getAndSet(this, 0));
        value.setPhysicalConnectErrorCount(createErrorCountUpdater.getAndSet(this, 0));
        value.setExecuteCount(this.getAndResetExecuteCount());
        value.setErrorCount(errorCountUpdater.getAndSet(this, 0));
        value.setCommitCount(commitCountUpdater.getAndSet(this, 0));
        value.setRollbackCount(rollbackCountUpdater.getAndSet(this, 0));
        value.setPstmtCacheHitCount(cachedPreparedStatementHitCountUpdater.getAndSet(this, 0));
        value.setPstmtCacheMissCount(cachedPreparedStatementMissCountUpdater.getAndSet(this, 0));
        value.setStartTransactionCount(startTransactionCountUpdater.getAndSet(this, 0));
        value.setTransactionHistogram(this.getTransactionHistogram().toArrayAndReset());
        value.setConnectionHoldTimeHistogram(this.getDataSourceStat().getConnectionHoldHistogram().toArrayAndReset());
        value.setRemoveAbandoned(this.isRemoveAbandoned());
        value.setClobOpenCount(this.getDataSourceStat().getClobOpenCountAndReset());
        value.setBlobOpenCount(this.getDataSourceStat().getBlobOpenCountAndReset());
        value.setSqlSkipCount(this.getDataSourceStat().getSkipSqlCountAndReset());
        value.setSqlList(this.getDataSourceStat().getSqlStatMapAndReset());

        return value;
    }
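The stat snapshot method above copies most counters with `xxxUpdater.getAndSet(this, 0)`, which returns a counter's current value and zeroes it in a single atomic step. Those `...Updater` fields appear to be `java.util.concurrent.atomic.AtomicLongFieldUpdater` instances over `volatile long` fields. The following is a minimal sketch of that read-and-reset idiom under that assumption. The `StatCounters` class and its field names are hypothetical and are not Druid's code.

```java
import java.util.concurrent.atomic.AtomicLongFieldUpdater;

// Hypothetical holder class illustrating the read-and-reset idiom; not Druid's code.
class StatCounters {
    // AtomicLongFieldUpdater only works on volatile long fields.
    private volatile long executeCount;
    private volatile long errorCount;

    private static final AtomicLongFieldUpdater<StatCounters> EXECUTE_COUNT =
            AtomicLongFieldUpdater.newUpdater(StatCounters.class, "executeCount");
    private static final AtomicLongFieldUpdater<StatCounters> ERROR_COUNT =
            AtomicLongFieldUpdater.newUpdater(StatCounters.class, "errorCount");

    void recordExecute(boolean failed) {
        EXECUTE_COUNT.incrementAndGet(this);
        if (failed) {
            ERROR_COUNT.incrementAndGet(this);
        }
    }

    // Each getAndSet returns the old value and stores 0 in one atomic step, so an
    // increment racing with the reset lands in either this window or the next one.
    // The two counters are reset one after the other, not as one consistent snapshot.
    long[] snapshotAndReset() {
        return new long[] {
                EXECUTE_COUNT.getAndSet(this, 0),
                ERROR_COUNT.getAndSet(this, 0)
        };
    }

    public static void main(String[] args) {
        StatCounters stats = new StatCounters();
        stats.recordExecute(false);
        stats.recordExecute(true);
        long[] first = stats.snapshotAndReset();
        long[] second = stats.snapshotAndReset();
        System.out.println(first[0] + " " + first[1]);   // 2 1
        System.out.println(second[0] + " " + second[1]); // 0 0
    }
}
```

A field updater shares one static object across all instances. This avoids allocating a separate `AtomicLong` per counter per data source.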

    public long getRemoveAbandonedCount() {
        return removeAbandonedCount;
    }

    protected boolean put(PhysicalConnectionInfo physicalConnectionInfo) {
        DruidConnectionHolder holder = null;
        try {
            holder = new DruidConnectionHolder(DruidDataSource.this, physicalConnectionInfo);
        } catch (SQLException ex) {
            lock.lock();
            try {
                if (createScheduler != null) {
                    createTaskCount--;
                }
            } finally {
                lock.unlock();
            }
            LOG.error("create connection holder error", ex);
            return false;
        }

        return put(holder);
    }

    private boolean put(DruidConnectionHolder holder) {
        lock.lock();
        try {
            if (poolingCount >= maxActive) {
                return false;
            }
            connections[poolingCount] = holder;
            incrementPoolingCount();

            if (poolingCount > poolingPeak) {
                poolingPeak = poolingCount;
                poolingPeakTime = System.currentTimeMillis();
            }

            notEmpty.signal();
            notEmptySignalCount++;

            if (createScheduler != null) {
                createTaskCount--;

                if (poolingCount + createTaskCount < notEmptyWaitThreadCount //
                        && activeCount + poolingCount + createTaskCount < maxActive) {
                    emptySignal();
                }
            }
        } finally {
            lock.unlock();
        }
        return true;
    }
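`put(DruidConnectionHolder)` is the producer half of a `ReentrantLock`/`Condition` handoff. It refuses to grow past `maxActive`, stores the holder, and calls `notEmpty.signal()` to wake one borrower waiting on `notEmpty`. The following is a simplified, hypothetical `MiniPool` that shows both halves of that handoff. It is not Druid's implementation: it omits `createTaskCount`, peak tracking and `emptySignal()`. A thread blocked in such a wait shows up in a thread dump as `LockSupport.park` under `AbstractQueuedSynchronizer$ConditionObject.await`/`awaitNanos`. The `CreateConnectionThread` stack trace quoted earlier in this article contains the same frames.

```java
import java.util.concurrent.TimeUnit;
import java.util.concurrent.locks.Condition;
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical, simplified bounded pool; names echo DruidDataSource but this is not Druid's code.
class MiniPool<T> {
    private final ReentrantLock lock = new ReentrantLock();
    private final Condition notEmpty = lock.newCondition();
    private final Object[] items;   // capacity plays the role of maxActive
    private int poolingCount = 0;   // guarded by lock

    MiniPool(int maxActive) {
        this.items = new Object[maxActive];
    }

    // Producer: reject when full, otherwise store the item and wake one waiting consumer.
    boolean put(T item) {
        lock.lock();
        try {
            if (poolingCount >= items.length) {
                return false;
            }
            items[poolingCount++] = item;
            notEmpty.signal();
            return true;
        } finally {
            lock.unlock();
        }
    }

    // Consumer: wait on notEmpty until an item arrives or the timeout expires.
    // Takes from the end of the array (LIFO).
    @SuppressWarnings("unchecked")
    T take(long timeoutMillis) throws InterruptedException {
        long nanos = TimeUnit.MILLISECONDS.toNanos(timeoutMillis);
        lock.lock();
        try {
            while (poolingCount == 0) {
                if (nanos <= 0) {
                    return null; // timed out
                }
                nanos = notEmpty.awaitNanos(nanos); // parks the thread, releasing the lock
            }
            T item = (T) items[--poolingCount];
            items[poolingCount] = null;
            return item;
        } finally {
            lock.unlock();
        }
    }

    public static void main(String[] args) throws InterruptedException {
        MiniPool<String> pool = new MiniPool<>(1);
        System.out.println(pool.put("conn-1")); // true
        System.out.println(pool.put("conn-2")); // false: capacity is 1
        System.out.println(pool.take(100));     // conn-1
        System.out.println(pool.take(100));     // null, after waiting about 100 ms

        // Cross-thread handoff: the consumer parks in awaitNanos until put() signals it.
        Thread consumer = new Thread(() -> {
            try {
                System.out.println("consumer got " + pool.take(5000));
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        consumer.start();
        Thread.sleep(100);
        pool.put("conn-3");
        consumer.join();
    }
}
```

The producer uses `signal()` rather than `signalAll()` because one new connection can satisfy at most one waiter. Waking every borrower would only send the others back to sleep.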

    public class CreateConnectionTask implements Runnable {

        private int errorCount = 0;
        private boolean initTask = false;

        public CreateConnectionTask() {
        }

        public CreateConnectionTask(boolean initTask) {
            this.initTask = initTask;
        }

        @Override
        public void run() {
            runInternal();
        }
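`run()` only delegates to `runInternal()`. The catch blocks of `runInternal()` implement a retry-then-back-off policy. Each failed physical connection increments `errorCount`. Once it exceeds `connectionErrorRetryAttempts`, the task either gives up (`breakAfterAcquireFailure`) or resets the counter and reschedules itself on `createScheduler` after `timeBetweenConnectErrorMillis`. The following is a hypothetical sketch of that policy, not Druid's code. It assumes that a failure below the threshold simply loops and tries again; that handling follows the catch blocks and is not visible in this excerpt. The sketch omits the `failFast` wake-up, the `timeBetweenConnectErrorMillis > 0` guard and all lock handling. `RetryingCreateTask` and the `BooleanSupplier` connector are invented stand-ins.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.BooleanSupplier;

// Hypothetical sketch of a retry-then-reschedule creator task; not Druid's code.
class RetryingCreateTask implements Runnable {
    private final ScheduledExecutorService scheduler;
    private final BooleanSupplier connector;   // stand-in for createPhysicalConnection()
    private final int retryAttempts;           // role of connectionErrorRetryAttempts
    private final long delayMillis;            // role of timeBetweenConnectErrorMillis
    private final boolean breakAfterFailure;   // role of breakAfterAcquireFailure
    private int errorCount = 0;                // only touched by the single running task
    volatile boolean gaveUp = false;

    RetryingCreateTask(ScheduledExecutorService scheduler, BooleanSupplier connector,
                       int retryAttempts, long delayMillis, boolean breakAfterFailure) {
        this.scheduler = scheduler;
        this.connector = connector;
        this.retryAttempts = retryAttempts;
        this.delayMillis = delayMillis;
        this.breakAfterFailure = breakAfterFailure;
    }

    @Override
    public void run() {
        for (;;) {
            if (connector.getAsBoolean()) {
                return; // success; a pool would now put() the new connection
            }
            errorCount++;
            if (errorCount > retryAttempts) {
                if (breakAfterFailure) {
                    gaveUp = true; // stop creating connections altogether
                    return;
                }
                errorCount = 0; // reset, then try again later instead of spinning
                scheduler.schedule(this, delayMillis, TimeUnit.MILLISECONDS);
                return;
            }
            // below the threshold: retry immediately
        }
    }

    public static void main(String[] args) throws InterruptedException {
        ScheduledExecutorService scheduler = Executors.newSingleThreadScheduledExecutor();
        AtomicInteger calls = new AtomicInteger();
        CountDownLatch connected = new CountDownLatch(1);
        // Fails on the first three calls, succeeds on the fourth.
        BooleanSupplier flakyConnector = () -> {
            boolean ok = calls.incrementAndGet() > 3;
            if (ok) {
                connected.countDown();
            }
            return ok;
        };
        scheduler.execute(new RetryingCreateTask(scheduler, flakyConnector, 1, 50, false));
        connected.await(2, TimeUnit.SECONDS);
        System.out.println(calls.get()); // 4: two immediate attempts, a 50 ms pause, then two more
        scheduler.shutdown();
    }
}
```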

        private void runInternal() {
            for (;;) {
                // addLast
                lock.lock();
                try {
                    if (closed || closing) {
                        createTaskCount--;
                        return;
                    }

                    boolean emptyWait = true;

                    if (createError != null && poolingCount == 0) {
                        emptyWait = false;
                    }

                    if (emptyWait) {
                        // Create a connection only when some thread is waiting for one
                        if (poolingCount >= notEmptyWaitThreadCount //
                                && (!(keepAlive && activeCount + poolingCount < minIdle))
                                && (!initTask)
                                && !isFailContinuous()
                        ) {
                            createTaskCount--;
                            return;
                        }

                        // Prevent creating more than maxActive connections
                        if (activeCount + poolingCount >= maxActive) {
                            createTaskCount--;
                            return;
                        }
                    }
                } finally {
                    lock.unlock();
                }

                PhysicalConnectionInfo physicalConnection = null;

                try {
                    physicalConnection = createPhysicalConnection();
                } catch (OutOfMemoryError e) {
                    LOG.error("create connection OutOfMemoryError, out memory. ", e);

                    errorCount++;
                    if (errorCount > connectionErrorRetryAttempts && timeBetweenConnectErrorMillis > 0) {
                        // fail over retry attempts
                        setFailContinuous(true);
                        if (failFast) {
                            lock.lock();
                            try {
                                notEmpty.signalAll();
                            } finally {
                                lock.unlock();
                            }
                        }

                        if (breakAfterAcquireFailure) {
                            lock.lock();
                            try {
                                createTaskCount--;
                            } finally {
                                lock.unlock();
                            }
                            return;
                        }

                        this.errorCount = 0; // reset errorCount
                        if (closing || closed) {
                            createTaskCount--;
                            return;
                        }

                        createSchedulerFuture = createScheduler.schedule(this, timeBetweenConnectErrorMillis, TimeUnit.MILLISECONDS);
                        return;
                    }

                } catch (SQLException e) {
                    LOG.error("create connection SQLException, url: " + jdbcUrl, e);

                    errorCount++;
                    if (errorCount > connectionErrorRetryAttempts && timeBetweenConnectErrorMillis > 0) {
                        // fail over retry attempts
                        setFailContinuous(true);
                        if (failFast) {
                            lock.lock();
                            try {
                                notEmpty.signalAll();
                            } finally {
                                lock.unlock();
                            }
                        }

                        if (breakAfterAcquireFailure) {
                            lock.lock();
                            try {
                                createTaskCount--;
                            } finally {
                                lock.unlock();
                            }
                            return;
                        }

                        this.errorCount = 0; // reset errorCount
                        if (closing || closed) {
                            createTaskCount--;
                            return;
                        }

                        createSchedulerFuture = createScheduler.schedule(this, timeBetweenConnectErrorMillis, TimeUnit.MILLISECONDS);
                        return;
                    }

                } catch (RuntimeException e) {
                    LOG.error("create connection RuntimeException", e);
                    // unknown fatal exception
                    setFailContinuous(true);
                    continue;
                } catch (Error e) {
                    lock.lock();
                    try {
                        createTaskCount--;
                    } finally {