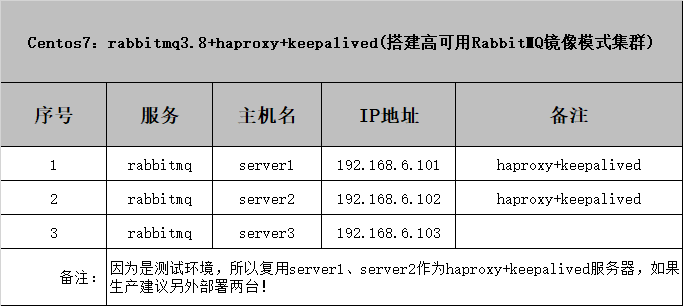

CentOS 7: RabbitMQ 3.8 + HAProxy + Keepalived (building a highly available RabbitMQ mirrored-queue cluster)

https://blog.csdn.net/xjjj064/article/details/110539442

1. Environment

Note: on all three servers SELinux is set to disabled and firewalld is stopped. The hostnames are mapped as follows:

[root@server3 lib]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.6.101 server1

192.168.6.102 server2

192.168.6.103 server3
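RabbitMQ nodes address each other by hostname (rabbit@server1 and so on), so the three mappings above need to be present on every server. A minimal sketch of adding them idempotently follows; `ensure_hosts_entry` is a hypothetical helper, not part of any tool, and it takes the file path as an argument so it can be tried on a scratch file first.

```shell
# Hypothetical helper: append "IP NAME" to a hosts file unless NAME is already mapped.
ensure_hosts_entry() {
    hosts_file=$1 ip=$2 name=$3
    [ -f "$hosts_file" ] || : > "$hosts_file"
    # Look for NAME as a whole field on any non-comment line.
    if awk -v n="$name" '!/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$hosts_file"; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
}

# Usage (as root, on each server):
#   ensure_hosts_entry /etc/hosts 192.168.6.101 server1
#   ensure_hosts_entry /etc/hosts 192.168.6.102 server2
#   ensure_hosts_entry /etc/hosts 192.168.6.103 server3
```

Running it twice leaves a single entry, which makes it safe to repeat on a host that is already configured.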

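2. Deploy the JDK and Erlang environments

One server had already been deployed earlier (reference: "Installing and deploying RabbitMQ 3.8.9 + Erlang 23.0 on CentOS 7"): server1 already runs RabbitMQ 3.8.9 with Erlang 23.0.

The JDK, Erlang, and RabbitMQ 3.8.9 are therefore copied from server1 to server2 and server3, and the environment variables are set on those two servers.

Copy the Erlang package: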

[root@server1 lib]# scp erlang.tar.gz root@192.168.6.102:/usr/lib/

root@192.168.6.102's password:

erlang.tar.gz 100% 55MB 11.1MB/s 00:05

[root@server1 lib]# scp erlang.tar.gz root@192.168.6.103:/usr/lib/

root@192.168.6.103's password:

erlang.tar.gz 100% 55MB 9.2MB/s 00:06

Copy the JDK package:

[root@server1 src]# scp jdk-8u121-linux-x64.gz root@192.168.6.102:/usr/local/src/

root@192.168.6.102's password:

jdk-8u121-linux-x64.gz 100% 175MB 7.9MB/s 00:22

[root@server1 src]# scp jdk-8u121-linux-x64.gz root@192.168.6.103:/usr/local/src/

root@192.168.6.103's password:

jdk-8u121-linux-x64.gz 100% 175MB 9.7MB/s 00:18

Set the environment variables on server2 and server3:

unset i

unset -f pathmunge

source /opt/rh/devtoolset-9/enable

export JAVA_HOME=/usr/local/jdk

export CLASSPATH=.:$JAVA_HOME/jre/lib/rt.jar:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

export PATH=$JAVA_HOME/bin:$PATH

export PATH=$PATH:/usr/lib/erlang/bin:/usr/local/rabbitmq/sbin

Test the Erlang and JDK environments

erlang

[root@server2 src]# erl

Erlang/OTP 23 [erts-11.0] [source] [64-bit] [smp:4:4] [ds:4:4:10] [async-threads:1] [hipe]

Eshell V11.0 (abort with ^G)

1>

jdk

[root@server2 src]# java -version

java version "1.8.0_121"

Java(TM) SE Runtime Environment (build 1.8.0_121-b13)

Java HotSpot(TM) 64-Bit Server VM (build 25.121-b13, mixed mode)

Make the cookie value in the .erlang.cookie file identical on all nodes

[root@server1 ~]# scp .erlang.cookie root@192.168.6.102:/root

root@192.168.6.102's password:

.erlang.cookie 100% 20 11.8KB/s 00:00

[root@server1 ~]# scp .erlang.cookie root@192.168.6.103:/root

root@192.168.6.103's password:

.erlang.cookie 100% 20 20.5KB/s 00:00
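Nodes can only form a cluster when their cookies match, and the Erlang runtime refuses a cookie file that anyone other than its owner can access. The sketch below shows one way to check both points after copying; `cookie_digest` and `lock_cookie` are hypothetical helper names introduced for illustration.

```shell
# Hypothetical helpers for checking the Erlang cookie on each node.

# Print a SHA-256 digest of the cookie so nodes can be compared
# without echoing the secret itself.
cookie_digest() {
    sha256sum "$1" | awk '{ print $1 }'
}

# Restrict the cookie to its owner (read-only).
lock_cookie() {
    chmod 400 "$1"
}

# Usage (on each node, then compare the printed digests):
#   lock_cookie /root/.erlang.cookie
#   cookie_digest /root/.erlang.cookie
```

Comparing digests instead of the file contents avoids printing the cookie on screen or into logs.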

3. Install and start RabbitMQ

Copy the RabbitMQ package to server2 and server3:

[root@server1 ~]# cd /usr/local/src/

[root@server1 src]# ls

jdk-8u121-linux-x64.gz otp_src_23.0 otp_src_23.0.tar.gz rabbitmq-server-generic-unix-3.8.9.tar redis-6.0.5 redis-6.0.5.tar.gz

[root@server1 src]# scp rabbitmq-server-generic-unix-3.8.9.tar root@192.168.6.102:/usr/local/src/

root@192.168.6.102's password:

rabbitmq-server-generic-unix-3.8.9.tar 100% 15MB 8.0MB/s 00:01

[root@server1 src]# scp rabbitmq-server-generic-unix-3.8.9.tar root@192.168.6.103:/usr/local/src/

root@192.168.6.103's password:

rabbitmq-server-generic-unix-3.8.9.tar 100% 15MB 10.7MB/s 00:01

Extract it to the /usr/local/rabbitmq directory:

[root@server2 src]# ls -l /usr/local/rabbitmq/

total 176

drwxr-xr-x. 2 root root 124 Sep 25 02:39 escript

drwxr-xr-x. 3 root root 22 Sep 25 02:39 etc

-rw-r--r--. 1 root root 75 Sep 25 02:39 INSTALL

-rw-r--r--. 1 root root 19534 Sep 25 02:39 LICENSE

-rw-r--r--. 1 root root 11354 Sep 25 02:39 LICENSE-APACHE2

-rw-r--r--. 1 root root 11360 Sep 25 02:39 LICENSE-APACHE2-excanvas

-rw-r--r--. 1 root root 11358 Sep 25 02:39 LICENSE-APACHE2-ExplorerCanvas

-rw-r--r--. 1 root root 10851 Sep 25 02:39 LICENSE-APL2-Stomp-Websocket

-rw-r--r--. 1 root root 1207 Sep 25 02:39 LICENSE-BSD-base64js

-rw-r--r--. 1 root root 1469 Sep 25 02:39 LICENSE-BSD-recon

-rw-r--r--. 1 root root 1274 Sep 25 02:39 LICENSE-erlcloud

-rw-r--r--. 1 root root 1471 Sep 25 02:39 LICENSE-httpc_aws

-rw-r--r--. 1 root root 757 Sep 25 02:39 LICENSE-ISC-cowboy

-rw-r--r--. 1 root root 1084 Sep 25 02:39 LICENSE-MIT-EJS

-rw-r--r--. 1 root root 1087 Sep 25 02:39 LICENSE-MIT-EJS10

-rw-r--r--. 1 root root 1057 Sep 25 02:39 LICENSE-MIT-Erlware-Commons

-rw-r--r--. 1 root root 1069 Sep 25 02:39 LICENSE-MIT-Flot

-rw-r--r--. 1 root root 1075 Sep 25 02:39 LICENSE-MIT-jQuery

-rw-r--r--. 1 root root 1076 Sep 25 02:39 LICENSE-MIT-jQuery164

-rw-r--r--. 1 root root 1087 Sep 25 02:39 LICENSE-MIT-Mochi

-rw-r--r--. 1 root root 1073 Sep 25 02:39 LICENSE-MIT-Sammy

-rw-r--r--. 1 root root 1076 Sep 25 02:39 LICENSE-MIT-Sammy060

-rw-r--r--. 1 root root 16725 Sep 25 02:39 LICENSE-MPL

-rw-r--r--. 1 root root 16726 Sep 25 02:39 LICENSE-MPL-RabbitMQ

-rw-r--r--. 1 root root 1471 Sep 25 02:39 LICENSE-rabbitmq_aws

drwxr-xr-x. 2 root root 4096 Sep 25 02:39 plugins

drwxr-xr-x. 2 root root 192 Sep 25 02:39 sbin

drwxr-xr-x. 3 root root 17 Sep 25 02:39 share

Start the RabbitMQ service on server2 and server3

Only the output from server2 is shown here.

[root@server2 src]# rabbitmq-server -detached

[root@server2 src]# netstat -ntlp

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 1056/sshd

tcp 0 0 127.0.0.1:25 0.0.0.0:* LISTEN 1233/master

tcp 0 0 0.0.0.0:25672 0.0.0.0:* LISTEN 1909/beam.smp

tcp 0 0 0.0.0.0:4369 0.0.0.0:* LISTEN 2005/epmd

tcp6 0 0 :::22 :::* LISTEN 1056/sshd

tcp6 0 0 ::1:25 :::* LISTEN 1233/master

tcp6 0 0 :::5672 :::* LISTEN 1909/beam.smp

tcp6 0 0 :::4369 :::* LISTEN 2005/epmd

Check the RabbitMQ service status on server1:

[root@server1 src]# rabbitmqctl status

Status of node rabbit@server1 ...

Runtime

OS PID: 1647

OS: Linux

Uptime (seconds): 5992

Is under maintenance?: false

RabbitMQ version: 3.8.9

Node name: rabbit@server1

Erlang configuration: Erlang/OTP 23 [erts-11.0] [source] [64-bit] [smp:4:4] [ds:4:4:10] [async-threads:64] [hipe]

Erlang processes: 447 used, 1048576 limit

Scheduler run queue: 1

Cluster heartbeat timeout (net_ticktime): 60

Plugins

Enabled plugin file: /usr/local/rabbitmq/etc/rabbitmq/enabled_plugins

Enabled plugins:

* rabbitmq_management

* amqp_client

* rabbitmq_web_dispatch

* cowboy

* cowlib

* rabbitmq_management_agent

Data directory

Node data directory: /usr/local/rabbitmq/var/lib/rabbitmq/mnesia/rabbit@server1

Raft data directory: /usr/local/rabbitmq/var/lib/rabbitmq/mnesia/rabbit@server1/quorum/rabbit@server1

Config files

Log file(s)

* /usr/local/rabbitmq/var/log/rabbitmq/rabbit@server1.log

* /usr/local/rabbitmq/var/log/rabbitmq/rabbit@server1_upgrade.log

Alarms

(none)

Memory

Total memory used: 0.085 gb

Calculation strategy: rss

Memory high watermark setting: 0.4 of available memory, computed to: 3.2805 gb

code: 0.0278 gb (32.67 %)

other_proc: 0.0238 gb (28.03 %)

other_system: 0.0143 gb (16.86 %)

allocated_unused: 0.0076 gb (8.97 %)

reserved_unallocated: 0.0037 gb (4.33 %)

other_ets: 0.0032 gb (3.78 %)

plugins: 0.0023 gb (2.7 %)

atom: 0.0014 gb (1.67 %)

mgmt_db: 0.0003 gb (0.32 %)

metrics: 0.0002 gb (0.25 %)

binary: 0.0002 gb (0.2 %)

mnesia: 0.0001 gb (0.09 %)

quorum_ets: 0.0 gb (0.06 %)

connection_other: 0.0 gb (0.04 %)

msg_index: 0.0 gb (0.04 %)

connection_channels: 0.0 gb (0.0 %)

connection_readers: 0.0 gb (0.0 %)

connection_writers: 0.0 gb (0.0 %)

queue_procs: 0.0 gb (0.0 %)

queue_slave_procs: 0.0 gb (0.0 %)

quorum_queue_procs: 0.0 gb (0.0 %)

File Descriptors

Total: 2, limit: 927

Sockets: 0, limit: 832

Free Disk Space

Low free disk space watermark: 0.05 gb

Free disk space: 46.5476 gb

Totals

Connection count: 0

Queue count: 0

Virtual host count: 1

Listeners

Interface: [::], port: 15672, protocol: http, purpose: HTTP API

Interface: [::], port: 25672, protocol: clustering, purpose: inter-node and CLI tool communication

Interface: [::], port: 5672, protocol: amqp, purpose: AMQP 0-9-1 and AMQP 1.0

[root@server1 src]#

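4. Add nodes to the RabbitMQ cluster

① Join server2 and server3 to server1 to form the cluster

An administrator account had already been created on server1, so server2 and server3 are joined to server1. After joining, logging in to server2 and server3 shows that the admin account has been synchronized to both of them, even though no administrator account was ever created there.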

[root@server2 ~]# rabbitmqctl stop_app

[root@server2 ~]# rabbitmqctl join_cluster --ram rabbit@server1

[root@server2 ~]# rabbitmqctl start_app

[root@server2 ~]# rabbitmq-plugins enable rabbitmq_management

[root@server3 ~]# rabbitmqctl stop_app

[root@server3 ~]# rabbitmqctl join_cluster --ram rabbit@server1

[root@server3 ~]# rabbitmqctl start_app

[root@server3 ~]# rabbitmq-plugins enable rabbitmq_management

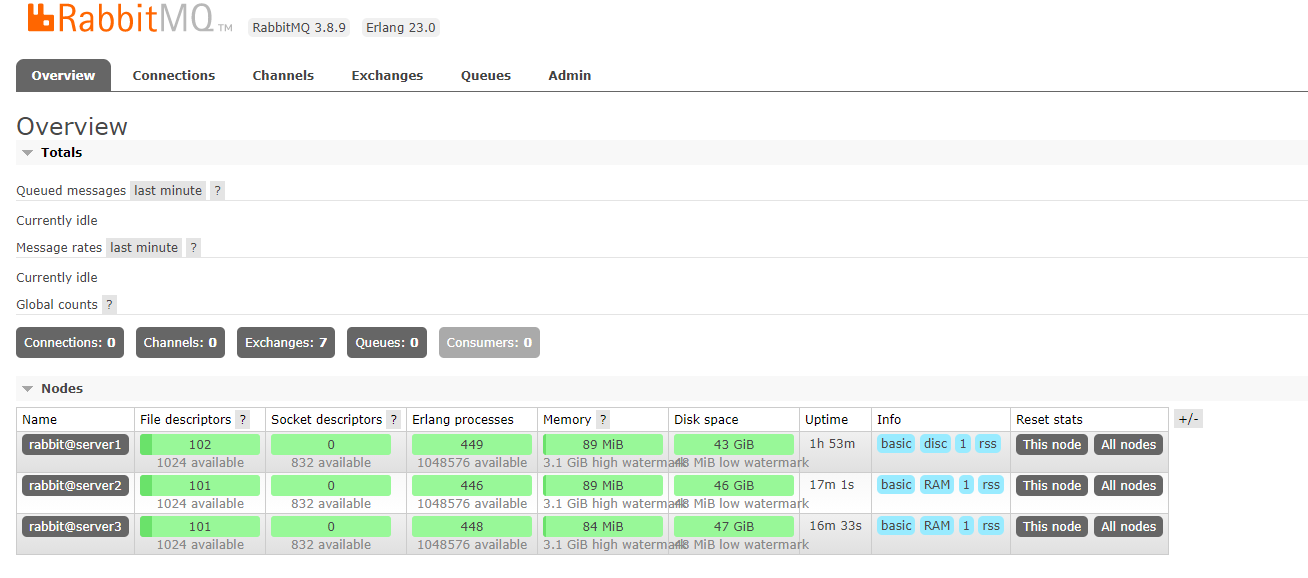

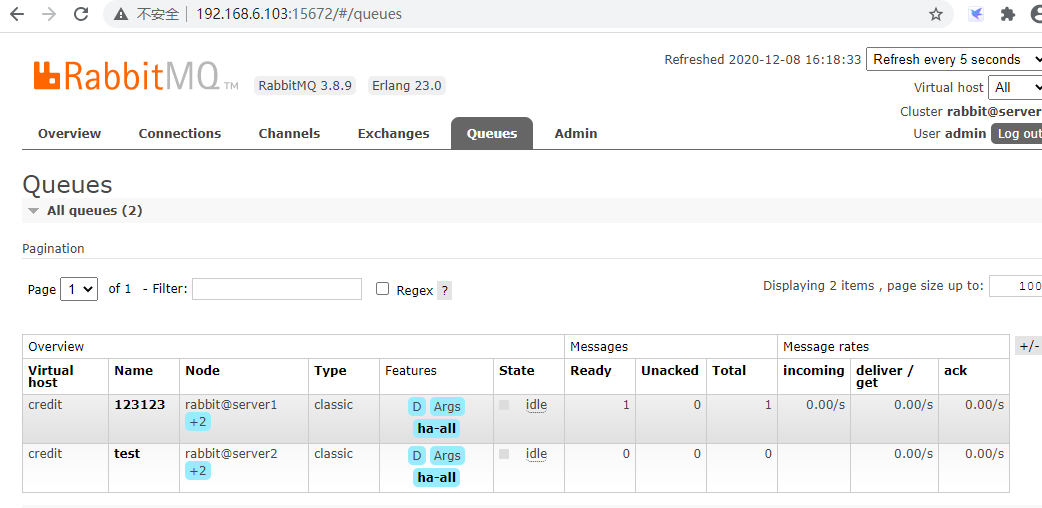
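The join sequence is the same on every joining node: stop the RabbitMQ application, join the seed node, start the application again. The sketch below only prints those commands so they can be reviewed before being run; `join_commands` is a hypothetical helper, and the `ram`/`disc` switch mirrors the `--ram` flag used above.

```shell
# Hypothetical helper: print the commands that join the local node to a cluster.
# $1 = seed node (e.g. rabbit@server1), $2 = "ram" or "disc" (default: disc).
join_commands() {
    seed=$1 type=${2:-disc}
    echo "rabbitmqctl stop_app"
    if [ "$type" = "ram" ]; then
        echo "rabbitmqctl join_cluster --ram $seed"
    else
        echo "rabbitmqctl join_cluster $seed"
    fi
    echo "rabbitmqctl start_app"
    echo "rabbitmqctl cluster_status"
}

# Usage (on server2 or server3, after reviewing the output):
#   join_commands rabbit@server1 ram | sh
```

The final `cluster_status` call lists the running nodes, which gives a quick confirmation before opening the management page.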

② Open the web management page and check the nodes

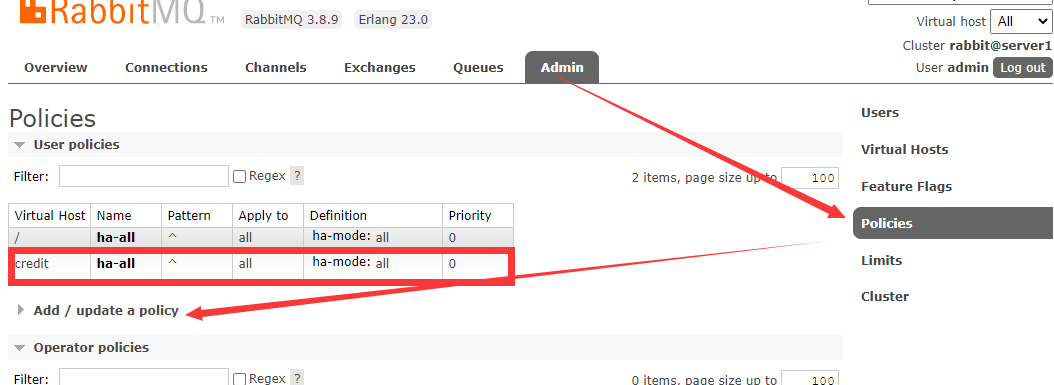

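③ Set up the RabbitMQ mirrored-queue high-availability cluster

The mirrored-queue mechanism sets up a master/mirror relationship for a queue across N nodes. Messages are replicated automatically between those N nodes, so if one of them becomes unavailable, messages are not lost and the service stays available, which raises the availability of the MQ cluster as a whole.

The commands here are run on the rabbitmq1 node, i.e. server1 (any node would work).

The reference document for this section is: http://www.rabbitmq.com/ha.html

Mirroring relies on policies. What does a policy do?

A policy specifies which exchanges or queues it applies to and how their data should be replicated and synchronized.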

[root@server1 ~]# rabbitmqctl set_policy ha-all "^" '{"ha-mode":"all"}'

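ha-all: the policy name.

^: the match pattern, a regular expression applied to names. A bare ^ matches everything; ^qfedu matches exchanges or queues whose names start with qfedu.

ha-mode: the mirroring mode. There are three:

all: mirror the queue to every node in the cluster;

exactly: mirror to a fixed number of nodes (requires ha-params as an integer, e.g. 3 means the queue has 3 replicas, master included, on nodes of the cluster);

nodes: mirror to named nodes (requires ha-params as an array, e.g. ["rabbit@F","rabbit@G"] selects the two nodes F and G).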

[root@server1 src]# rabbitmqctl set_policy ha-all "^" '{"ha-mode":"all"}'

Setting policy "ha-all" for pattern "^" to "{"ha-mode":"all"}" with priority "0" for vhost "/" ...

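Note: this policy applies only to the default vhost /. A vhost you create yourself needs its own user permissions, and the policy has to be set on that vhost separately.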
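The policy definition is plain JSON, so the three ha-mode variants described above can be generated rather than typed by hand. The sketch below is one way to do that. `ha_policy_json` is a hypothetical helper, and it also sets `"ha-sync-mode":"automatic"`, which the article does not use: with it, a mirror that rejoins synchronizes on its own instead of waiting for a manual `sync_queue`, at the cost of the queue being unresponsive while it synchronizes.

```shell
# Hypothetical helper: build the JSON definition for a mirroring policy.
# $1 = ha-mode (all | exactly | nodes)
# $2 = ha-params (integer for "exactly", JSON array for "nodes"; unused for "all")
ha_policy_json() {
    mode=$1 params=${2:-}
    case $mode in
        all)
            printf '{"ha-mode":"all","ha-sync-mode":"automatic"}\n' ;;
        exactly|nodes)
            printf '{"ha-mode":"%s","ha-params":%s,"ha-sync-mode":"automatic"}\n' "$mode" "$params" ;;
        *)
            echo "unknown ha-mode: $mode" >&2
            return 1 ;;
    esac
}

# Usage (policy name "ha-two" and vhost "/" are examples):
#   rabbitmqctl set_policy -p / ha-two "^" "$(ha_policy_json exactly 2)"
```

The `-p` option selects the vhost, so the same helper can be reused for vhosts other than the default one.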

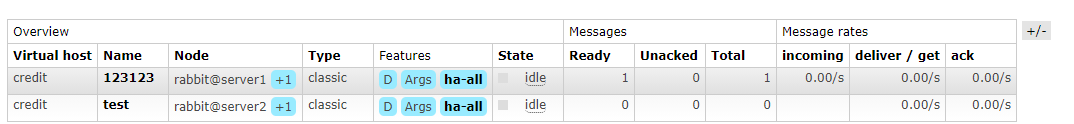

See figure 1.

Creating the policy

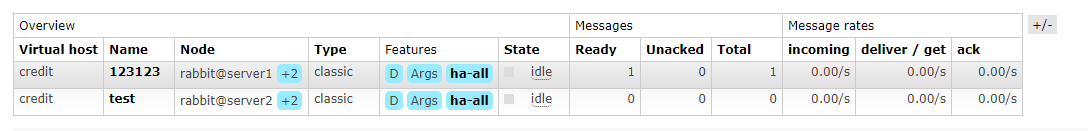

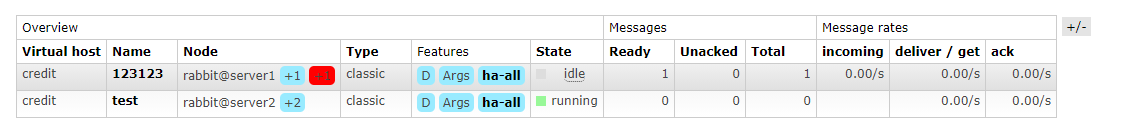

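Figure 2: test publishing messages to the RabbitMQ cluster

Figure 3: test restarting the server3 node

The Node column changes to +1.

After server3 starts again:

As the figure above shows, one of the +1 markers is red; hovering over it shows the hint "unsynchronized mirrors", meaning the mirror has not been synchronized.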

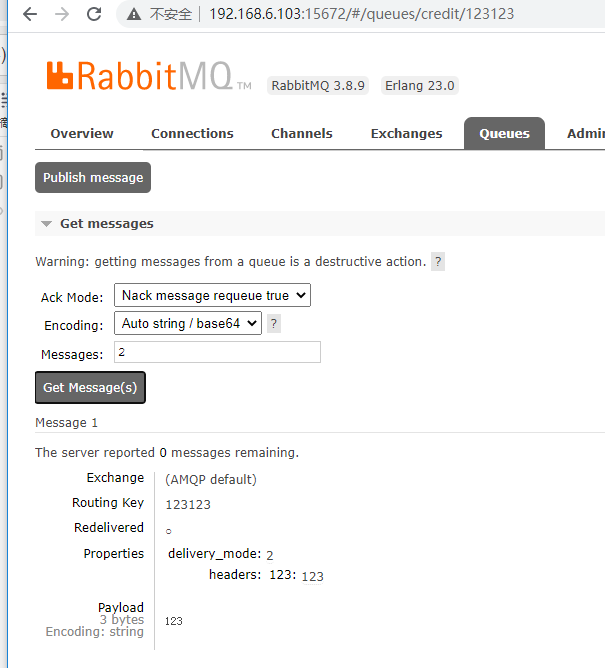

The +2 entry is normal, and at this point Get messages on server3 still returns the messages.

Run the sync command on server3:

[root@server3 ~]# rabbitmqctl sync_queue --vhost credit 123123

Synchronising queue '123123' in vhost 'credit' ...

[root@server3 ~]#

Checking again, the Node column color is back to normal.

Reference: https://blog.51cto.com/11134648/2155934

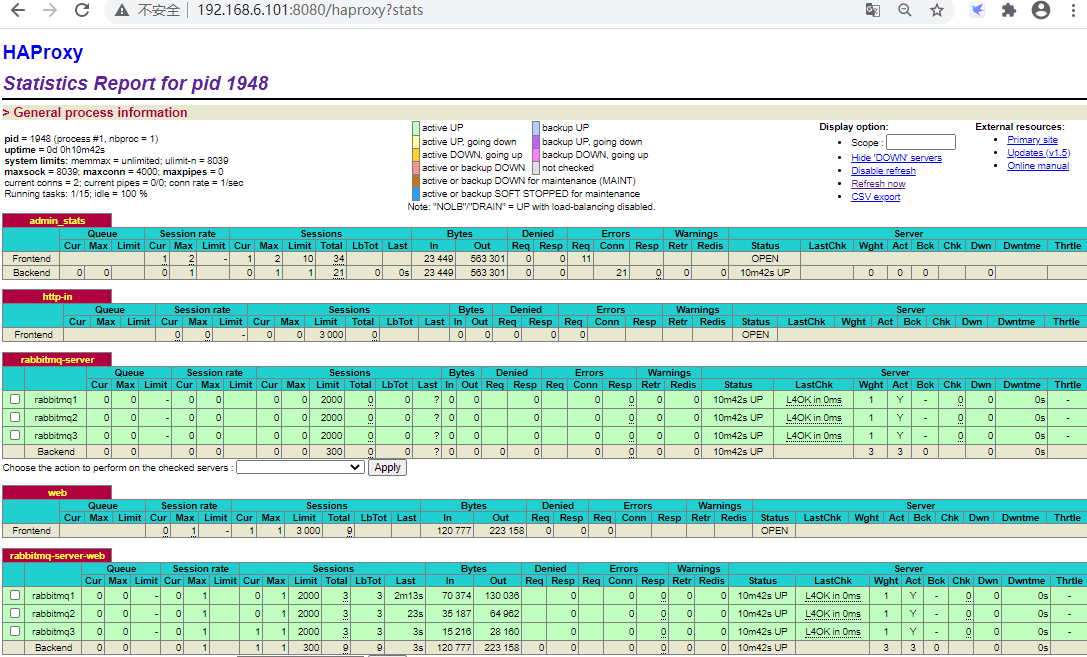

5. Deploy HAProxy so that connections to the AMQP port (5672) are distributed round-robin across the 3 nodes

① HAProxy environment:

haproxy1: server1 192.168.6.101

haproxy2: server2 192.168.6.102

② Install HAProxy on server1 and server2 (same steps on both)

[root@server1 ~]# yum -y install haproxy

[root@server1 keepalived]# haproxy -version

HA-Proxy version 1.5.18 2016/05/10

Copyright 2000-2016 Willy Tarreau <willy@haproxy.org>

③ Edit the configuration file

[root@server1 ~]# vim /etc/haproxy/haproxy.cfg

global
    log 127.0.0.1 local2
    chroot /var/lib/haproxy
    pidfile /var/run/haproxy.pid
    maxconn 4000
    user haproxy
    group haproxy
    daemon
    stats socket /var/lib/haproxy/stats

defaults
    mode http
    log global
    option httplog
    option dontlognull
    option http-server-close
    option forwardfor except 127.0.0.0/8
    option redispatch
    retries 3
    timeout http-request 10s
    timeout queue 1m
    timeout connect 10s
    timeout client 1m
    timeout server 1m
    timeout http-keep-alive 10s
    timeout check 10s
    maxconn 3000

listen admin_stats
    # The original listing has no bind line; 8080 is the status port
    # shown in the netstat output further down.
    bind 0.0.0.0:8080
    mode http
    option httplog
    log global
    maxconn 10

# AMQP traffic: clients connect to 55672, HAProxy forwards to 5672
frontend http-in
    bind 0.0.0.0:55672
    mode tcp
    log global
    # httplog and httpclose only apply in HTTP mode, so tcplog is used here
    option tcplog
    default_backend rabbitmq-server

backend rabbitmq-server
    mode tcp
    balance roundrobin
    server rabbitmq1 192.168.6.101:5672 maxconn 2000 weight 1 check inter 5s rise 2 fall 2
    server rabbitmq2 192.168.6.102:5672 maxconn 2000 weight 1 check inter 5s rise 2 fall 2
    server rabbitmq3 192.168.6.103:5672 maxconn 2000 weight 1 check inter 5s rise 2 fall 2

# Management UI: clients connect to 35672, HAProxy forwards to 15672
frontend web
    bind 0.0.0.0:35672
    mode tcp
    log global
    option tcplog
    default_backend rabbitmq-server-web

backend rabbitmq-server-web
    mode tcp
    balance roundrobin
    server rabbitmq1 192.168.6.101:15672 maxconn 2000 weight 1 check inter 5s rise 2 fall 2
    server rabbitmq2 192.168.6.102:15672 maxconn 2000 weight 1 check inter 5s rise 2 fall 2
    server rabbitmq3 192.168.6.103:15672 maxconn 2000 weight 1 check inter 5s rise 2 fall 2
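Both backends list the same three nodes and differ only in the port, so the `server` lines can be generated from a node list. This is a small sketch of that idea, with a hypothetical helper name (`backend_servers`); the options on each line are copied from the configuration above.

```shell
# Hypothetical helper: print HAProxy "server" lines for one backend.
# $1 = target port, remaining arguments = node IP addresses.
backend_servers() {
    port=$1
    shift
    n=1
    for ip in "$@"; do
        printf '    server rabbitmq%d %s:%s maxconn 2000 weight 1 check inter 5s rise 2 fall 2\n' "$n" "$ip" "$port"
        n=$((n + 1))
    done
}

# Usage:
#   backend_servers 5672  192.168.6.101 192.168.6.102 192.168.6.103
#   backend_servers 15672 192.168.6.101 192.168.6.102 192.168.6.103
```

After editing the file, `haproxy -c -f /etc/haproxy/haproxy.cfg` checks the configuration without starting the proxy.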

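Because RabbitMQ and HAProxy share the two servers server1 and server2, HAProxy's TCP port is changed to 55672 (any port that does not clash with RabbitMQ's own ports will do).

Copy this file to server2 with scp: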

[root@server1 ~]# scp /etc/haproxy/haproxy.cfg root@server2:/etc/haproxy/

The authenticity of host 'server2 (192.168.6.102)' can't be established.

ECDSA key fingerprint is SHA256:ZKnzuFmO3h+qD47qGguUwm676vlVxbxTIQbVj0dmXb4.

ECDSA key fingerprint is MD5:31:15:8b:6f:d6:48:ce:2f:0e:f3:cd:b6:51:bb:1a:c3.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'server2' (ECDSA) to the list of known hosts.

root@server2's password:

haproxy.cfg 100% 1587 1.5MB/s 00:00

[root@server1 ~]# ls

④ HAProxy rsyslog logging configuration (same steps on server1 and server2)

Append the following to the end of the configuration file:

[root@haproxy1 ~]# vim /etc/rsyslog.conf

# Provides UDP syslog reception

$ModLoad imudp

$UDPServerRun 514

# Provides TCP syslog reception

$ModLoad imtcp

$InputTCPServerRun 514

local2.* /var/log/haproxy/rabbitmq.log

⑤ Start the logging service and the HAProxy service (same steps on server1 and server2)

[root@server1 ~]# mkdir /var/log/haproxy

[root@server1 ~]# systemctl restart rsyslog.service

[root@server1 ~]# systemctl start haproxy

[root@server1 ~]# systemctl enable haproxy # start on boot

[root@server1 ~]# cat /var/log/haproxy/rabbitmq.log

Sep 19 20:03:18 localhost haproxy[1654]: Proxy stats started.

Sep 19 20:03:18 localhost haproxy[1654]: Proxy http-in started.

Sep 19 20:03:18 localhost haproxy[1654]: Proxy http-in started.

Sep 19 20:03:18 localhost haproxy[1654]: Proxy html-server started.

Check the listening ports

8080 is the port of the HAProxy web status page.

[root@server1 ~]# netstat -ntlp

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 0.0.0.0:514 0.0.0.0:* LISTEN 958/rsyslogd

tcp 0 0 0.0.0.0:25672 0.0.0.0:* LISTEN 1608/beam.smp

tcp 0 0 0.0.0.0:8080 0.0.0.0:* LISTEN 1948/haproxy

tcp 0 0 0.0.0.0:4369 0.0.0.0:* LISTEN 1704/epmd

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 960/sshd

tcp 0 0 0.0.0.0:35672 0.0.0.0:* LISTEN 1948/haproxy

tcp 0 0 0.0.0.0:55672 0.0.0.0:* LISTEN 1948/haproxy

tcp 0 0 0.0.0.0:15672 0.0.0.0:* LISTEN 1608/beam.smp

tcp 0 0 127.0.0.1:25 0.0.0.0:* LISTEN 1124/master

tcp6 0 0 :::514 :::* LISTEN 958/rsyslogd

tcp6 0 0 :::5672 :::* LISTEN 1608/beam.smp

tcp6 0 0 :::4369 :::* LISTEN 1704/epmd

tcp6 0 0 :::22 :::* LISTEN 960/sshd

tcp6 0 0 ::1:25 :::* LISTEN 1124/master
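The netstat output confirms the listeners locally; from another machine the same ports can be probed over TCP. The sketch below does that with bash's `/dev/tcp` pseudo-device. `port_open` and `check_proxy_ports` are hypothetical helper names, and the port list (55672 and 35672) comes from the HAProxy configuration above.

```shell
# Hypothetical helper: succeed if a TCP connection to host:port can be opened.
# Relies on bash's /dev/tcp pseudo-device, so bash must be installed.
port_open() {
    timeout 3 bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$1" "$2" 2>/dev/null
}

# Report the state of each proxied port on one HAProxy host.
check_proxy_ports() {
    host=$1
    for port in 55672 35672; do
        if port_open "$host" "$port"; then
            echo "$host:$port open"
        else
            echo "$host:$port closed"
        fi
    done
}

# Usage:
#   check_proxy_ports 192.168.6.101
#   check_proxy_ports 192.168.6.102
```

A successful connection only shows that HAProxy accepts the connection; it does not prove that a RabbitMQ backend is healthy behind it.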

⑥ HAProxy startup error

Starting proxy haproxy_stats: cannot bind socket [0.0.0.0:89]

Fix:

First check that SELinux is in the disabled state:

[root@server1 ~]# getenforce

Disabled

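Next, according to a fix found through a web search, the following kernel parameter needs to be set. Edit /etc/sysctl.conf, add the line below if it is not already there, then run sysctl -p to load the setting:

vi /etc/sysctl.conf

net.ipv4.ip_nonlocal_bind = 1

sysctl -p

Explanation:

net.ipv4.ip_nonlocal_bind = 1

allows HAProxy to bind to an address, such as the VIP, that is not currently assigned to the local machine, so it can start even on the node that does not hold the VIP.

Another possible cause of "Starting proxy linuxyw.com: cannot bind socket":

check whether Apache, Nginx, or another web service on the HAProxy server is already using the port. If so, stop that service first; otherwise the error above occurs.

As a side note, the referenced fix also enables kernel IP forwarding on the HAProxy server with the following parameter (HAProxy itself is a userspace proxy and does not depend on it):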

net.ipv4.ip_forward = 1
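Editing /etc/sysctl.conf by hand makes it easy to end up with duplicate or conflicting lines. As a rough sketch, the helper below sets a key in a sysctl-style file so that exactly one line for it remains; `set_sysctl_conf` is a hypothetical name, and the file path is an argument so the function can be tried on a copy first.

```shell
# Hypothetical helper: set "key = value" in a sysctl-style file,
# replacing any existing uncommented line for the same key.
# Note: dots in the key are treated as regex wildcards, which is
# loose but harmless for ordinary sysctl names.
set_sysctl_conf() {
    file=$1 key=$2 value=$3
    [ -f "$file" ] || : > "$file"
    tmp=$(mktemp)
    grep -v "^[[:space:]]*$key[[:space:]]*=" "$file" > "$tmp"
    printf '%s = %s\n' "$key" "$value" >> "$tmp"
    # Write back through the original file so its ownership and mode are kept.
    cat "$tmp" > "$file"
    rm -f "$tmp"
}

# Usage (as root):
#   set_sysctl_conf /etc/sysctl.conf net.ipv4.ip_nonlocal_bind 1
#   sysctl -p
```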

6. Deploy Keepalived for master/backup hot standby with failover within seconds

① Environment

keepalived 192.168.6.101

keepalived 192.168.6.102

VIP 192.168.6.253

② Install Keepalived

[root@server1 ~]# yum -y install keepalived

[root@server2 ~]# yum -y install keepalived

③ Configure Keepalived (server1 is MASTER, server2 is BACKUP)

server1 configuration

[root@server1 ~]# cp /etc/keepalived/keepalived.conf{,.bak}

[root@server1 ~]# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {
    router_id director1
}

vrrp_script check_haproxy {
    script "/etc/keepalived/haproxy_chk.sh"    # checks whether HAProxy is running
    interval 5
}

vrrp_instance VI_1 {
    state MASTER                # server1 is the master
    interface ens160            # network interface name
    virtual_router_id 80
    priority 100
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.6.253/24
    }
    track_script {
        check_haproxy
    }
}

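server2 configuration

The configuration is basically the same as on server1, except that state is BACKUP instead of MASTER (router_id and priority differ as well).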

[root@server2 ~]# cp /etc/keepalived/keepalived.conf{,.bak}

[root@server2 ~]# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {
    router_id director2
}

vrrp_script check_haproxy {
    script "/etc/keepalived/haproxy_chk.sh"
    interval 5
}

vrrp_instance VI_1 {
    state BACKUP                # server2 runs VRRP in the backup state
    interface ens160            # network interface name on this server
    virtual_router_id 80
    priority 50
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.6.253/24
    }
    track_script {
        check_haproxy
    }
}
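The two files differ only in router_id, state, and priority, so they can be produced from one template to keep the shared values (virtual_router_id, auth_pass, VIP) from drifting apart. A sketch of that follows; `keepalived_conf` is a hypothetical generator, and all fixed values are taken from the listings above.

```shell
# Hypothetical generator: print keepalived.conf for one node.
# $1 = router_id, $2 = state (MASTER|BACKUP), $3 = priority, $4 = interface.
keepalived_conf() {
    cat <<EOF
! Configuration File for keepalived

global_defs {
    router_id $1
}

vrrp_script check_haproxy {
    script "/etc/keepalived/haproxy_chk.sh"
    interval 5
}

vrrp_instance VI_1 {
    state $2
    interface $4
    virtual_router_id 80
    priority $3
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.6.253/24
    }
    track_script {
        check_haproxy
    }
}
EOF
}

# Usage:
#   keepalived_conf director1 MASTER 100 ens160 > /etc/keepalived/keepalived.conf   # on server1
#   keepalived_conf director2 BACKUP 50  ens160 > /etc/keepalived/keepalived.conf   # on server2
```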

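④ HAProxy check script (needed on both the Keepalived master and backup)

[root@server1 ~]# vim /etc/keepalived/haproxy_chk.sh

#!/usr/bin/env bash
# Check that HAProxy is running; try one restart before giving up.

if ! systemctl is-active --quiet haproxy.service; then
    # HAProxy is down: try to start it and give it time to come up.
    systemctl start haproxy.service &>/dev/null
    sleep 5
    if ! systemctl is-active --quiet haproxy.service; then
        # Still down: stop Keepalived so the VIP fails over to the peer.
        systemctl stop keepalived
    fi
fi

Make the script executable (chmod +x /etc/keepalived/haproxy_chk.sh); otherwise Keepalived cannot run it.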
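The script above stops Keepalived itself when HAProxy cannot be restarted. A different approach, not the one used in this article, is to let the script only report health through its exit status: a tracked vrrp_script that fails puts the instance into the FAULT state and the VIP moves to the peer. A minimal sketch of a retrying check for that style follows; `check_with_retry` is a hypothetical helper.

```shell
# Hypothetical helper: run a check command up to ATTEMPTS times, pausing
# DELAY seconds between failed attempts; succeed as soon as one attempt succeeds.
# $1 = attempts, $2 = delay in seconds, remaining arguments = command to run.
check_with_retry() {
    attempts=$1 delay=$2
    shift 2
    i=1
    while [ "$i" -le "$attempts" ]; do
        "$@" && return 0
        if [ "$i" -lt "$attempts" ]; then
            sleep "$delay"
        fi
        i=$((i + 1))
    done
    return 1
}

# Usage as the whole body of a check script:
#   check_with_retry 3 1 systemctl is-active --quiet haproxy.service
#   exit $?
```

With this style Keepalived keeps running on the failed node, so it can rejoin on its own once HAProxy is healthy again.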

⑤ Start the services and verify the VIP

Start Keepalived on server1

[root@server1 keepalived]# systemctl enable keepalived

Created symlink from /etc/systemd/system/multi-user.target.wants/keepalived.service to /usr/lib/systemd/system/keepalived.service.

[root@server1 keepalived]# systemctl start keepalived

[root@server1 keepalived]# systemctl status keepalived

● keepalived.service - LVS and VRRP High Availability Monitor

Loaded: loaded (/usr/lib/systemd/system/keepalived.service; enabled; vendor preset: disabled)

Active: active (running) since Wed 2020-12-09 14:19:49 CST; 4s ago

Process: 3257 ExecStart=/usr/sbin/keepalived $KEEPALIVED_OPTIONS (code=exited, status=0/SUCCESS)

Main PID: 3258 (keepalived)

CGroup: /system.slice/keepalived.service

├─3258 /usr/sbin/keepalived -D

├─3259 /usr/sbin/keepalived -D

└─3260 /usr/sbin/keepalived -D

Dec 09 14:19:49 server1 Keepalived_vrrp[3260]: VRRP_Script(check_haproxy) succeeded

Dec 09 14:19:50 server1 Keepalived_vrrp[3260]: VRRP_Instance(VI_1) Transition to MASTER STATE

Dec 09 14:19:51 server1 Keepalived_vrrp[3260]: VRRP_Instance(VI_1) Entering MASTER STATE

Dec 09 14:19:51 server1 Keepalived_vrrp[3260]: VRRP_Instance(VI_1) setting protocol VIPs.

Dec 09 14:19:51 server1 Keepalived_vrrp[3260]: Sending gratuitous ARP on ens160 for 192.168.6.253

Dec 09 14:19:51 server1 Keepalived_vrrp[3260]: VRRP_Instance(VI_1) Sending/queueing gratuitous ARPs on ens160 for 192.168.6.253

Dec 09 14:19:51 server1 Keepalived_vrrp[3260]: Sending gratuitous ARP on ens160 for 192.168.6.253

Dec 09 14:19:51 server1 Keepalived_vrrp[3260]: Sending gratuitous ARP on ens160 for 192.168.6.253

Dec 09 14:19:51 server1 Keepalived_vrrp[3260]: Sending gratuitous ARP on ens160 for 192.168.6.253

Dec 09 14:19:51 server1 Keepalived_vrrp[3260]: Sending gratuitous ARP on ens160 for 192.168.6.253

[root@server1 keepalived]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens160: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 00:0c:29:0f:45:7f brd ff:ff:ff:ff:ff:ff

inet 192.168.6.101/24 brd 192.168.6.255 scope global noprefixroute dynamic ens160

valid_lft 76385sec preferred_lft 76385sec

inet 192.168.6.253/24 scope global secondary ens160

valid_lft forever preferred_lft forever

inet6 fe80::ddbd:36be:b510:ef06/64 scope link noprefixroute

valid_lft forever preferred_lft forever

Start Keepalived on server2

[root@server2 keepalived]# systemctl enable keepalived

Created symlink from /etc/systemd/system/multi-user.target.wants/keepalived.service to /usr/lib/systemd/system/keepalived.service.

[root@server2 keepalived]# systemctl start keepalived

[root@server2 keepalived]# systemctl status keepalived

● keepalived.service - LVS and VRRP High Availability Monitor

Loaded: loaded (/usr/lib/systemd/system/keepalived.service; enabled; vendor preset: disabled)

Active: active (running) since Wed 2020-12-09 14:22:17 CST; 5s ago

Process: 478 ExecStart=/usr/sbin/keepalived $KEEPALIVED_OPTIONS (code=exited, status=0/SUCCESS)

Main PID: 479 (keepalived)

CGroup: /system.slice/keepalived.service

├─479 /usr/sbin/keepalived -D

├─480 /usr/sbin/keepalived -D

└─481 /usr/sbin/keepalived -D

Dec 09 14:22:17 server2 Keepalived_vrrp[481]: Opening file '/etc/keepalived/keepalived.conf'.

Dec 09 14:22:17 server2 Keepalived_vrrp[481]: WARNING - default user 'keepalived_script' for script execution does not exist - please create.

Dec 09 14:22:17 server2 Keepalived_vrrp[481]: SECURITY VIOLATION - scripts are being executed but script_security not enabled.

Dec 09 14:22:17 server2 Keepalived_vrrp[481]: VRRP_Instance(VI_1) removing protocol VIPs.

Dec 09 14:22:17 server2 Keepalived_vrrp[481]: Using LinkWatch kernel netlink reflector...

Dec 09 14:22:17 server2 systemd[1]: Started LVS and VRRP High Availability Monitor.

Dec 09 14:22:17 server2 Keepalived_vrrp[481]: VRRP_Instance(VI_1) Entering BACKUP STATE

Dec 09 14:22:17 server2 Keepalived_vrrp[481]: VRRP sockpool: [ifindex(2), proto(112), unicast(0), fd(10,11)]

Dec 09 14:22:17 server2 Keepalived_vrrp[481]: VRRP_Script(check_haproxy) succeeded

Dec 09 14:22:17 server2 Keepalived_healthcheckers[480]: Opening file '/etc/keepalived/keepalived.conf'.

[root@server2 keepalived]# ip addr # server2 is in the BACKUP state now, so the VIP is not listed

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens160: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 00:0c:29:34:7c:2a brd ff:ff:ff:ff:ff:ff

inet 192.168.6.102/24 brd 192.168.6.255 scope global noprefixroute dynamic ens160

valid_lft 80159sec preferred_lft 80159sec

inet6 fe80::b64c:812c:68f0:6591/64 scope link noprefixroute

valid_lft forever preferred_lft forever

⑥ Test: shut down server1

[root@server2 keepalived]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens160: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 00:0c:29:34:7c:2a brd ff:ff:ff:ff:ff:ff

inet 192.168.6.102/24 brd 192.168.6.255 scope global noprefixroute dynamic ens160

valid_lft 78313sec preferred_lft 78313sec

inet 192.168.6.253/24 scope global secondary ens160

valid_lft forever preferred_lft forever

inet6 fe80::b64c:812c:68f0:6591/64 scope link noprefixroute

valid_lft forever preferred_lft forever

[root@server2 keepalived]#

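As shown above, the VIP (192.168.6.253) has moved to the 192.168.6.102 server.

⑦ Test: power server1 back on

server1 takes the VIP back (it has the higher priority, and Keepalived preempts by default):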

[root@server1 ~]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens160: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 00:0c:29:0f:45:7f brd ff:ff:ff:ff:ff:ff

inet 192.168.6.101/24 brd 192.168.6.255 scope global noprefixroute dynamic ens160

valid_lft 86373sec preferred_lft 86373sec

inet 192.168.6.253/24 scope global secondary ens160

valid_lft forever preferred_lft forever

inet6 fe80::ddbd:36be:b510:ef06/64 scope link noprefixroute

valid_lft forever preferred_lft forever
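Reading the full `ip addr` output by eye works for a one-off test; for repeated failover checks a small filter is handier. The sketch below reads `ip -o -4 addr show` output on standard input and reports whether a given address is assigned; `has_addr` is a hypothetical helper name.

```shell
# Hypothetical helper: read `ip -o -4 addr show` output on stdin and succeed
# if the given IPv4 address (without prefix length) is assigned to an interface.
has_addr() {
    awk -v want="$1" '
        { split($4, a, "/"); if (a[1] == want) found = 1 }
        END { exit !found }
    '
}

# Usage (on server1 and server2):
#   if ip -o -4 addr show | has_addr 192.168.6.253; then
#       echo "this node holds the VIP"
#   else
#       echo "this node does not hold the VIP"
#   fi
```

In the one-line format each address occupies a single line with the CIDR address in the fourth field, which is why the filter splits that field on the slash.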

Reference: https://blog.csdn.net/m0_49821830/article/details/108686163

Reference: https://blog.csdn.net/qq_23191379/article/details/107065591
