Paper walkthroughs:

https://blog.csdn.net/qq_40859461/article/details/85161171

https://www.cnblogs.com/ocean1100/p/9381429.html

Understanding the Inception network:

https://blog.csdn.net/wfei101/article/details/78309654

PyTorch code on GitHub:

https://github.com/zisianw/FaceBoxes.PyTorch

https://github.com/sfzhang15/FaceBoxes

Below is the network structure printed when I ran the detection step (the test dataset is PASCAL). Command: python3 test.py --dataset PASCAL

    FaceBoxes(
      (conv1): CRelu(
        (conv): Conv2d(3, 24, kernel_size=(7, 7), stride=(4, 4), padding=(3, 3), bias=False)
        (bn): BatchNorm2d(24, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      )
      (conv2): CRelu(
        (conv): Conv2d(48, 64, kernel_size=(5, 5), stride=(2, 2), padding=(2, 2), bias=False)
        (bn): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      )
      (inception1): Inception(
        (branch1x1): BasicConv2d(
          (conv): Conv2d(128, 32, kernel_size=(1, 1), stride=(1, 1), bias=False)
          (bn): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        )
        (branch1x1_2): BasicConv2d(
          (conv): Conv2d(128, 32, kernel_size=(1, 1), stride=(1, 1), bias=False)
          (bn): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        )
        (branch3x3_reduce): BasicConv2d(
          (conv): Conv2d(128, 24, kernel_size=(1, 1), stride=(1, 1), bias=False)
          (bn): BatchNorm2d(24, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        )
        (branch3x3): BasicConv2d(
          (conv): Conv2d(24, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
          (bn): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        )
        (branch3x3_reduce_2): BasicConv2d(
          (conv): Conv2d(128, 24, kernel_size=(1, 1), stride=(1, 1), bias=False)
          (bn): BatchNorm2d(24, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        )
        (branch3x3_2): BasicConv2d(
          (conv): Conv2d(24, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
          (bn): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        )
        (branch3x3_3): BasicConv2d(
          (conv): Conv2d(32, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
          (bn): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        )
      )
      (inception2): Inception(
        (branch1x1): BasicConv2d(
          (conv): Conv2d(128, 32, kernel_size=(1, 1), stride=(1, 1), bias=False)
          (bn): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        )
        (branch1x1_2): BasicConv2d(
          (conv): Conv2d(128, 32, kernel_size=(1, 1), stride=(1, 1), bias=False)
          (bn): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        )
        (branch3x3_reduce): BasicConv2d(
          (conv): Conv2d(128, 24, kernel_size=(1, 1), stride=(1, 1), bias=False)
          (bn): BatchNorm2d(24, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        )
        (branch3x3): BasicConv2d(
          (conv): Conv2d(24, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
          (bn): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        )
        (branch3x3_reduce_2): BasicConv2d(
          (conv): Conv2d(128, 24, kernel_size=(1, 1), stride=(1, 1), bias=False)
          (bn): BatchNorm2d(24, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        )
        (branch3x3_2): BasicConv2d(
          (conv): Conv2d(24, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
          (bn): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        )
        (branch3x3_3): BasicConv2d(
          (conv): Conv2d(32, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
          (bn): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        )
      )
      (inception3): Inception(
        (branch1x1): BasicConv2d(
          (conv): Conv2d(128, 32, kernel_size=(1, 1), stride=(1, 1), bias=False)
          (bn): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        )
        (branch1x1_2): BasicConv2d(
          (conv): Conv2d(128, 32, kernel_size=(1, 1), stride=(1, 1), bias=False)
          (bn): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        )
        (branch3x3_reduce): BasicConv2d(
          (conv): Conv2d(128, 24, kernel_size=(1, 1), stride=(1, 1), bias=False)
          (bn): BatchNorm2d(24, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        )
        (branch3x3): BasicConv2d(
          (conv): Conv2d(24, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
          (bn): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        )
        (branch3x3_reduce_2): BasicConv2d(
          (conv): Conv2d(128, 24, kernel_size=(1, 1), stride=(1, 1), bias=False)
          (bn): BatchNorm2d(24, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        )
        (branch3x3_2): BasicConv2d(
          (conv): Conv2d(24, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
          (bn): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        )
        (branch3x3_3): BasicConv2d(
          (conv): Conv2d(32, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
          (bn): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        )
      )
      (conv3_1): BasicConv2d(
        (conv): Conv2d(128, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)
        (bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      )
      (conv3_2): BasicConv2d(
        (conv): Conv2d(128, 256, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)
        (bn): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      )
      (conv4_1): BasicConv2d(
        (conv): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)
        (bn): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      )
      (conv4_2): BasicConv2d(
        (conv): Conv2d(128, 256, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)
        (bn): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      )
      (loc): Sequential(
        (0): Conv2d(128, 84, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
        (1): Conv2d(256, 4, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
        (2): Conv2d(256, 4, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      )
      (conf): Sequential(
        (0): Conv2d(128, 42, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
        (1): Conv2d(256, 2, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
        (2): Conv2d(256, 2, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      )
      (softmax): Softmax()
    )
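
The channel counts in this printout can be checked by hand, and doing so explains a few things that are not obvious at first glance: why conv2 takes 48 input channels when conv1 outputs only 24 (CReLU concatenates the response with its negation, doubling the channels), why every layer after an Inception block takes 128 channels (four branches of 32 channels each, concatenated), and why the first loc/conf heads are 84 and 42 wide (21 anchors per cell, times 4 box offsets or 2 class scores). The sketch below is plain Python, not part of the repository. The helper `conv_out`, the 1024x1024 input size, and the two max-pooling layers (3x3, stride 2, padding 1, which the printout does not list) are assumptions made for illustration.

```python
def conv_out(size, kernel, stride, padding):
    """Spatial output size of a convolution or max-pool layer (floor mode)."""
    return (size + 2 * padding - kernel) // stride + 1

# Channel bookkeeping read off the printout.
crelu1_out = 2 * 24        # CReLU doubles conv1's 24 channels -> input of conv2
crelu2_out = 2 * 64        # CReLU doubles conv2's 64 channels -> input of Inception
inception_out = 4 * 32     # four 32-channel branches concatenated

# Anchors per feature-map cell, inferred from the head widths.
loc_channels = [84, 4, 4]      # 4 box offsets per anchor
conf_channels = [42, 2, 2]     # 2 class scores (face / background) per anchor
anchors_per_cell = [c // 4 for c in loc_channels]

# Feature-map sizes for an assumed 1024x1024 input.
size = 1024
size = conv_out(size, 7, 4, 3)   # conv1
size = conv_out(size, 3, 2, 1)   # pool1 (assumed: 3x3, stride 2, padding 1)
size = conv_out(size, 5, 2, 2)   # conv2
size = conv_out(size, 3, 2, 1)   # pool2 (assumed: 3x3, stride 2, padding 1)
inception_map = size             # Inception blocks keep the spatial size
conv3_map = conv_out(inception_map, 3, 2, 1)   # conv3_2, stride 2
conv4_map = conv_out(conv3_map, 3, 2, 1)       # conv4_2, stride 2

maps = [inception_map, conv3_map, conv4_map]
total_anchors = sum(m * m * a for m, a in zip(maps, anchors_per_cell))

print("channels:", crelu1_out, crelu2_out, inception_out)
print("anchors per cell:", anchors_per_cell)
print("feature maps:", maps)
print("total anchors:", total_anchors)
```

Under these assumptions the three detection layers sit at strides 32, 64, and 128 relative to the input, so most anchors come from the Inception feature map.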

A detailed explanation of how batch normalization works:

https://blog.csdn.net/wzy_zju/article/details/81262453

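My own summary: batch normalization normalizes each channel's activations over the mini-batch to zero mean and unit variance, then applies a learned scale and shift. This speeds up training and makes it more stable. It normalizes each channel independently rather than fully decorrelating (whitening) the features, so the relative differences between values within a channel are preserved.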
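
To make the normalization step concrete, here is a minimal sketch of the training-mode computation for a single channel, written in plain Python rather than with `BatchNorm2d`. The function name `batch_norm` and the sample values are hypothetical; `eps=1e-5` matches the value shown in the printout, and `gamma` and `beta` stand in for the learned scale and shift (`affine=True`).

```python
import math

def batch_norm(values, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize one channel's activations over a mini-batch.

    y = gamma * (x - mean) / sqrt(var + eps) + beta
    The variance is the biased (divide-by-n) estimate, as used for
    normalization in training mode.
    """
    n = len(values)
    mean = sum(values) / n
    var = sum((v - mean) ** 2 for v in values) / n
    return [gamma * (v - mean) / math.sqrt(var + eps) + beta for v in values]

# Hypothetical activations of one channel across a mini-batch.
activations = [2.0, 4.0, 6.0, 8.0]
normalized = batch_norm(activations)

out_mean = sum(normalized) / len(normalized)
out_var = sum((v - out_mean) ** 2 for v in normalized) / len(normalized)
print(normalized)
print("mean:", out_mean, "variance:", out_var)
```

The output keeps the ordering and relative spacing of the inputs; only the location and scale change. At inference time the layer uses running statistics instead of the batch statistics (`track_running_stats=True`), which this sketch does not model.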

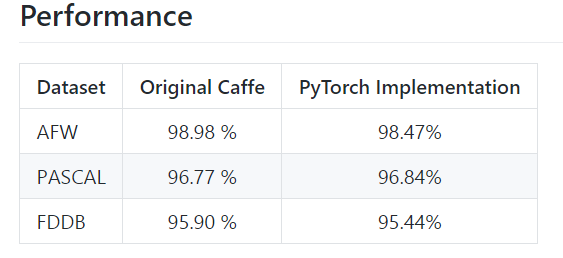

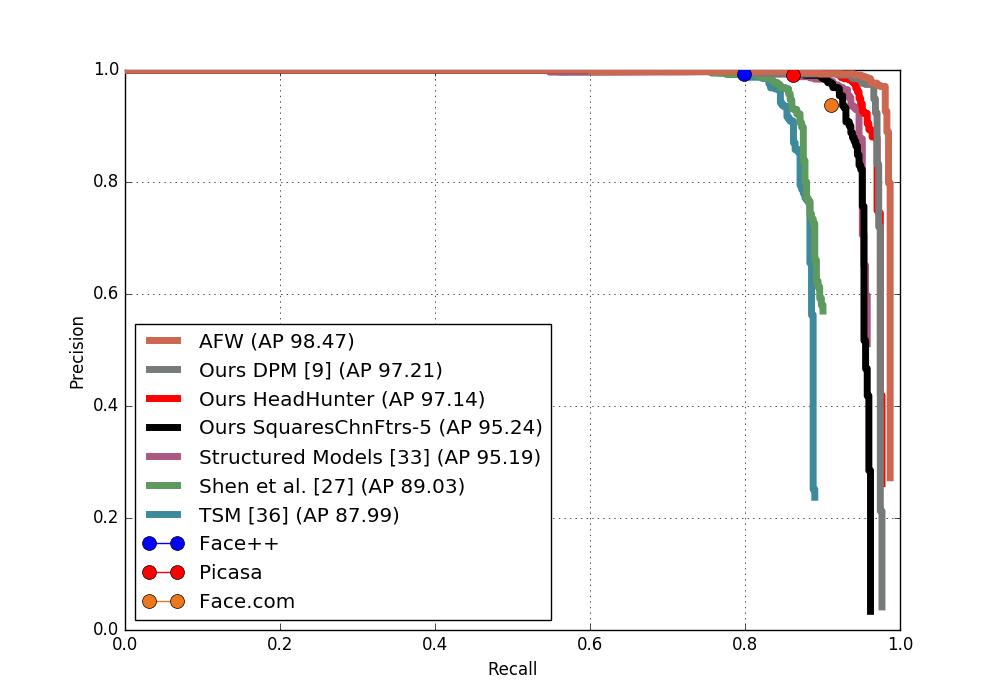

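So far, eval_tool reports an accuracy of 98.47% on AFW; evaluation on the other datasets has not succeeded yet. This test was run on server 173, in the folder /home/guochunhe/FaceBoxes_face_detect/FaceBoxes.PyTorch/marcopede-face-eval/. I will retry the remaining datasets later on my personal Ubuntu machine (the evaluation tool requires Python 2).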
