上一节其实已经学到了初始化的部分,就是求解相机和IMU的外参数:旋转。

接下来继续吧…

在processImage( )中,涉及到初始化的代码为:

//[4]判断是初始化还是非线性优化

if (solver_flag == INITIAL)//初始化

{

if (frame_count == WINDOW_SIZE)//如果帧数已经到达滑动窗口设定的帧数,就进行优化

{

bool result = false;

if( ESTIMATE_EXTRINSIC != 2 && (header.stamp.toSec() - initial_timestamp) > 0.1)

{

result = initialStructure();

initial_timestamp = header.stamp.toSec();

}

if(result)

{

solver_flag = NON_LINEAR;

solveOdometry();

slideWindow();

f_manager.removeFailures();

ROS_INFO("Initialization finish!");

last_R = Rs[WINDOW_SIZE];

last_P = Ps[WINDOW_SIZE];

last_R0 = Rs[0];

last_P0 = Ps[0];

}

else

slideWindow();

}

else//否则,就往滑动窗口中添加帧

frame_count++;

}

其中的initialStructure( )是视觉惯导联合初始化,注释如下:

分三部分来注释,第一部分为imu的可观性:

TicToc t_sfm;

//[1]check imu observibility

{

map<double, ImageFrame>::iterator frame_it;

Vector3d sum_g;

for (frame_it = all_image_frame.begin(), frame_it++; frame_it != all_image_frame.end(); frame_it++)

{

double dt = frame_it->second.pre_integration->sum_dt;

Vector3d tmp_g = frame_it->second.pre_integration->delta_v / dt;//表示重力加速度

sum_g += tmp_g;

}

Vector3d aver_g;

aver_g = sum_g * 1.0 / ((int)all_image_frame.size() - 1);//平均重力加速度

double var = 0;

for (frame_it = all_image_frame.begin(), frame_it++; frame_it != all_image_frame.end(); frame_it++)

{

double dt = frame_it->second.pre_integration->sum_dt;

Vector3d tmp_g = frame_it->second.pre_integration->delta_v / dt;

var += (tmp_g - aver_g).transpose() * (tmp_g - aver_g);//每次测量的重力与平均重力值的差值的总和

//cout << "frame g " << tmp_g.transpose() << endl;

}

var = sqrt(var / ((int)all_image_frame.size() - 1));//求这个指标代表什么啥呢?

//ROS_WARN("IMU variation %f!", var);

if(var < 0.25)

{

ROS_INFO("IMU excitation not enouth!");

//return false;

}

}

第二部分为求解相机的相对位姿

//【2】 global sfm

Quaterniond Q[frame_count + 1];

Vector3d T[frame_count + 1];

map<int, Vector3d> sfm_tracked_points;//用于存储后面SFM重建出来3D点的坐标

vector<SFMFeature> sfm_f;

for (auto &it_per_id : f_manager.feature)//FeatureManager f_manager;//滑窗内所有点 定义在feature_manager.h中

{

int imu_j = it_per_id.start_frame - 1;

SFMFeature tmp_feature;

tmp_feature.state = false;//是否三角化

tmp_feature.id = it_per_id.feature_id;

for (auto &it_per_frame : it_per_id.feature_per_frame)

{

imu_j++;//表示在一帧图像中特征点的数量

Vector3d pts_j = it_per_frame.point;//特征点的空间位置

tmp_feature.observation.push_back(make_pair(imu_j, Eigen::Vector2d{pts_j.x(), pts_j.y()}));//取归一化的二维坐标和特征点的起始帧数打包push进SFMFeature类型的变量tmp_feature中

}

sfm_f.push_back(tmp_feature);//sfm_f存储的是每个特征对应的所有的被观测数据

}

Matrix3d relative_R;

Vector3d relative_T;

int l;

if (!relativePose(relative_R, relative_T, l))//对帧间的相对位姿进行求解

{

ROS_INFO("Not enough features or parallax; Move device around");

return false;

}

GlobalSFM sfm;//参考链接 https://blog.csdn.net/jiweinanyi/article/details/100095460

if(!sfm.construct(frame_count + 1, Q, T, l,

relative_R, relative_T,

sfm_f, sfm_tracked_points))//l表示上面选出的参考帧的索引

{

ROS_DEBUG("global SFM failed!");

marginalization_flag = MARGIN_OLD;

return false;

}

在这一部分首先定义旋转量和平移量,建立一个map容器sfm_tracked_points用于存储后面SFM重建出来的特征点的坐标,建立一个SFMFeature类型的vector容器sfm_f,更新sfm_f,再调用relativePose(relative_R,relative_T,l)求解相对位姿,最后是sfm.construct(frame_count + 1, Q, T, l, relative_R, relative_T, sfm_f, sfm_tracked_points)构建全局SFM。

relativePose(relative_R,relative_T,l)代码注释如下:

bool Estimator::relativePose(Matrix3d &relative_R, Vector3d &relative_T, int &l)

{

// 在滑窗中找出和当前帧帧有有足够的correspondance和视差的帧作为参考帧

for (int i = 0; i < WINDOW_SIZE; i++)

{

vector<pair<Vector3d, Vector3d>> corres;

corres = f_manager.getCorresponding(i, WINDOW_SIZE);//计算corres

if (corres.size() > 20)

{

double sum_parallax = 0;

double average_parallax;

for (int j = 0; j < int(corres.size()); j++)

{

Vector2d pts_0(corres[j].first(0), corres[j].first(1));//取参考帧前两维坐标

Vector2d pts_1(corres[j].second(0), corres[j].second(1));//取当前帧前两维坐标

double parallax = (pts_0 - pts_1).norm();

sum_parallax = sum_parallax + parallax;

}

average_parallax = 1.0 * sum_parallax / int(corres.size());//求平均视差

if(average_parallax * 460 > 30 && m_estimator.solveRelativeRT(corres, relative_R, relative_T))//求出当前帧到参考帧的T

{

l = i;//把参考帧的索引赋值给变量l

ROS_DEBUG("average_parallax %f choose l %d and newest frame to triangulate the whole structure", average_parallax * 460, l);

return true;

}

}

}

return false;

}

首先获取窗口内每一帧与最后一帧之间所有的特征对应关系。具体地,对于每个特征,如果[i,WINDOW]区间被包含在其[start_frame,endFrame()]中,那么获取i和WINDOW分别对应的观测点坐标,存储在corres中,得到两帧之间的特征对应corres之后,如果其size大于20,计算其平均位移,如果具有足够的视差,那么就执行m_estimator.solveRelativeRT(corres, relative_R, relative_T)求解当前帧和最后一帧之间的相对位姿,然后把当前帧的索引赋值给变量l ,记录的是第一个与最后一帧具有足够的共视特征以及视差的帧的索引。

在上述代码中由调用了定义在solve_5pts.cpp中的solveRelativeRT(const vector<pair<Vector3d, Vector3d>> &corres, Matrix3d &Rotation, Vector3d &Translation)来求解相对位姿。代码如下:

bool MotionEstimator::solveRelativeRT(const vector<pair<Vector3d, Vector3d>> &corres, Matrix3d &Rotation, Vector3d &Translation)

{

if (corres.size() >= 15)

{

vector<cv::Point2f> ll, rr;

for (int i = 0; i < int(corres.size()); i++)//准备Point2f类型的点

{

ll.push_back(cv::Point2f(corres[i].first(0), corres[i].first(1)));//参考帧前两维

rr.push_back(cv::Point2f(corres[i].second(0), corres[i].second(1)));//当前帧前两维坐标

}

cv::Mat mask;

cv::Mat E = cv::findFundamentalMat(ll, rr, cv::FM_RANSAC, 0.3 / 460, 0.99, mask);//利用对极约束求解本质矩阵E

cv::Mat cameraMatrix = (cv::Mat_<double>(3, 3) << 1, 0, 0, 0, 1, 0, 0, 0, 1);

cv::Mat rot, trans;

int inlier_cnt = cv::recoverPose(E, ll, rr, cameraMatrix, rot, trans, mask);//求解出参考帧到当前帧旋转和平移

//cout << "inlier_cnt " << inlier_cnt << endl;

Eigen::Matrix3d R;//

Eigen::Vector3d T;

for (int i = 0; i < 3; i++)

{

T(i) = trans.at<double>(i, 0);

for (int j = 0; j < 3; j++)

R(i, j) = rot.at<double>(i, j);

}

Rotation = R.transpose();//求逆,求解出当前帧到参考帧的T

Translation = -R.transpose() * T;

if(inlier_cnt > 12)

return true;

else

return false;

}

return false;

}

下面是全局的SFM构建

construct(frame_count + 1, Q, T, l, relative_R, relative_T, sfm_f, sfm_tracked_points))

代码注释为:

// q w_R_cam t w_R_cam

// c_rotation cam_R_w

// c_translation cam_R_w

// relative_q[i][j] j_q_i

// relative_t[i][j] j_t_ji (j < i)

bool GlobalSFM::construct(int frame_num, Quaterniond* q, Vector3d* T, int l,

const Matrix3d relative_R, const Vector3d relative_T,

vector<SFMFeature> &sfm_f, map<int, Vector3d> &sfm_tracked_points)

{

//[1]初始化

feature_num = sfm_f.size();

//cout << "set 0 and " << l << " as known " << endl;

// have relative_r relative_t

// intial two view

q[l].w() = 1;

q[l].x() = 0;

q[l].y() = 0;

q[l].z() = 0;

T[l].setZero();

q[frame_num - 1] = q[l] * Quaterniond(relative_R);//没看懂这表示啥意思,relative_R表示的是当前帧到参考帧l的旋转

T[frame_num - 1] = relative_T;

//cout << "init q_l " << q[l].w() << " " << q[l].vec().transpose() << endl;

//cout << "init t_l " << T[l].transpose() << endl;

//[2]rotate to cam frame进行了取反操作并存储到Pose中

Matrix3d c_Rotation[frame_num];

Vector3d c_Translation[frame_num];

Quaterniond c_Quat[frame_num];

double c_rotation[frame_num][4];

double c_translation[frame_num][3];

Eigen::Matrix<double, 3, 4> Pose[frame_num];

c_Quat[l] = q[l].inverse();

c_Rotation[l] = c_Quat[l].toRotationMatrix();

c_Translation[l] = -1 * (c_Rotation[l] * T[l]);

Pose[l].block<3, 3>(0, 0) = c_Rotation[l];

Pose[l].block<3, 1>(0, 3) = c_Translation[l];

c_Quat[frame_num - 1] = q[frame_num - 1].inverse();

c_Rotation[frame_num - 1] = c_Quat[frame_num - 1].toRotationMatrix();

c_Translation[frame_num - 1] = -1 * (c_Rotation[frame_num - 1] * T[frame_num - 1]);

Pose[frame_num - 1].block<3, 3>(0, 0) = c_Rotation[frame_num - 1];

Pose[frame_num - 1].block<3, 1>(0, 3) = c_Translation[frame_num - 1];

//1: trangulate between l ----- frame_num - 1

//2: solve pnp l + 1; trangulate l + 1 ------- frame_num - 1;

//对于参考帧和当前帧之间的某一帧,先用pnp求解该帧位姿,再三角化该帧与当前帧的路标点

for (int i = l; i < frame_num - 1 ; i++)

{

// solve pnp

if (i > l)

{

Matrix3d R_initial = c_Rotation[i - 1];

Vector3d P_initial = c_Translation[i - 1];

if(!solveFrameByPnP(R_initial, P_initial, i, sfm_f))//更新两帧之间的位姿

return false;

c_Rotation[i] = R_initial;

c_Translation[i] = P_initial;

c_Quat[i] = c_Rotation[i];

Pose[i].block<3, 3>(0, 0) = c_Rotation[i];

Pose[i].block<3, 1>(0, 3) = c_Translation[i];

}

// triangulate point based on the solve pnp result第i帧和第frame-1帧三角化

triangulateTwoFrames(i, Pose[i], frame_num - 1, Pose[frame_num - 1], sfm_f);

}

//3: triangulate l-----l+1 l+2 ... frame_num -2

//对于参考帧和当前帧之间的某一帧,三角化参考帧到该帧的路标点

for (int i = l + 1; i < frame_num - 1; i++)

triangulateTwoFrames(l, Pose[l], i, Pose[i], sfm_f);

//4: solve pnp l-1; triangulate l-1 ----- l

// l-2 l-2 ----- l

//对于第一帧和参考帧之间的某一帧,先用pnp求解该帧位姿,然后三角化该帧到参考帧的路标点

for (int i = l - 1; i >= 0; i--)

{

//solve pnp

Matrix3d R_initial = c_Rotation[i + 1];

Vector3d P_initial = c_Translation[i + 1];

if(!solveFrameByPnP(R_initial, P_initial, i, sfm_f))

return false;

c_Rotation[i] = R_initial;

c_Translation[i] = P_initial;

c_Quat[i] = c_Rotation[i];

Pose[i].block<3, 3>(0, 0) = c_Rotation[i];

Pose[i].block<3, 1>(0, 3) = c_Translation[i];

//triangulate

triangulateTwoFrames(i, Pose[i], l, Pose[l], sfm_f);

}

//5: triangulate all other points

//三角化其它未恢复的路标点

for (int j = 0; j < feature_num; j++)

{

if (sfm_f[j].state == true)

continue;

if ((int)sfm_f[j].observation.size() >= 2)

{

Vector2d point0, point1;

int frame_0 = sfm_f[j].observation[0].first;

point0 = sfm_f[j].observation[0].second;

int frame_1 = sfm_f[j].observation.back().first;

point1 = sfm_f[j].observation.back().second;

Vector3d point_3d;

triangulatePoint(Pose[frame_0], Pose[frame_1], point0, point1, point_3d);

sfm_f[j].state = true;

sfm_f[j].position[0] = point_3d(0);

sfm_f[j].position[1] = point_3d(1);

sfm_f[j].position[2] = point_3d(2);

//cout << "trangulated : " << frame_0 << " " << frame_1 << " 3d point : " << j << " " << point_3d.transpose() << endl;

}

}

/*

for (int i = 0; i < frame_num; i++)

{

q[i] = c_Rotation[i].transpose();

cout << "solvePnP q" << " i " << i <<" " <<q[i].w() << " " << q[i].vec().transpose() << endl;

}

for (int i = 0; i < frame_num; i++)

{

Vector3d t_tmp;

t_tmp = -1 * (q[i] * c_Translation[i]);

cout << "solvePnP t" << " i " << i <<" " << t_tmp.x() <<" "<< t_tmp.y() <<" "<< t_tmp.z() << endl;

}

*/

//full BA

//BA优化滑窗内所有位姿

ceres::Problem problem;

ceres::LocalParameterization* local_parameterization = new ceres::QuaternionParameterization();

//cout << " begin full BA " << endl;

for (int i = 0; i < frame_num; i++)

{

//double array for ceres

c_translation[i][0] = c_Translation[i].x();

c_translation[i][1] = c_Translation[i].y();

c_translation[i][2] = c_Translation[i].z();

c_rotation[i][0] = c_Quat[i].w();

c_rotation[i][1] = c_Quat[i].x();

c_rotation[i][2] = c_Quat[i].y();

c_rotation[i][3] = c_Quat[i].z();

problem.AddParameterBlock(c_rotation[i], 4, local_parameterization);

problem.AddParameterBlock(c_translation[i], 3);

if (i == l)

{

problem.SetParameterBlockConstant(c_rotation[i]);

}

if (i == l || i == frame_num - 1)

{

problem.SetParameterBlockConstant(c_translation[i]);

}

}

for (int i = 0; i < feature_num; i++)

{

if (sfm_f[i].state != true)

continue;

for (int j = 0; j < int(sfm_f[i].observation.size()); j++)

{

int l = sfm_f[i].observation[j].first;

ceres::CostFunction* cost_function = ReprojectionError3D::Create(

sfm_f[i].observation[j].second.x(),

sfm_f[i].observation[j].second.y());

problem.AddResidualBlock(cost_function, NULL, c_rotation[l], c_translation[l],

sfm_f[i].position);

}

}

ceres::Solver::Options options;

options.linear_solver_type = ceres::DENSE_SCHUR;

//options.minimizer_progress_to_stdout = true;

options.max_solver_time_in_seconds = 0.2;

ceres::Solver::Summary summary;

ceres::Solve(options, &problem, &summary);

//std::cout << summary.BriefReport() << "n";

if (summary.termination_type == ceres::CONVERGENCE || summary.final_cost < 5e-03)

{

//cout << "vision only BA converge" << endl;

}

else

{

//cout << "vision only BA not converge " << endl;

return false;

}

for (int i = 0; i < frame_num; i++)

{

q[i].w() = c_rotation[i][0];

q[i].x() = c_rotation[i][1];

q[i].y() = c_rotation[i][2];

q[i].z() = c_rotation[i][3];

q[i] = q[i].inverse();

//cout << "final q" << " i " << i <<" " <<q[i].w() << " " << q[i].vec().transpose() << endl;

}

for (int i = 0; i < frame_num; i++)

{

T[i] = -1 * (q[i] * Vector3d(c_translation[i][0], c_translation[i][1], c_translation[i][2]));

//cout << "final t" << " i " << i <<" " << T[i](0) <<" "<< T[i](1) <<" "<< T[i](2) << endl;

}

for (int i = 0; i < (int)sfm_f.size(); i++)

{

if(sfm_f[i].state)

sfm_tracked_points[sfm_f[i].id] = Vector3d(sfm_f[i].position[0], sfm_f[i].position[1], sfm_f[i].position[2]);

}

return true;

}

对于这个函数的分析也可参考:https://blog.csdn.net/jiweinanyi/article/details/100095460

initialStructure( )的第三部分代码:

//【3】solve pnp for all frame

//对于滑窗内每一帧图像,都跟上一步SFM得到的所有3D路标点进行cv::solvepnp求解位姿

map<double, ImageFrame>::iterator frame_it;

map<int, Vector3d>::iterator it;

frame_it = all_image_frame.begin( );

for (int i = 0; frame_it != all_image_frame.end( ); frame_it++)

{

// provide initial guess初值估计

cv::Mat r, rvec, t, D, tmp_r;

if((frame_it->first) == Headers[i].stamp.toSec())

{

frame_it->second.is_key_frame = true;

frame_it->second.R = Q[i].toRotationMatrix() * RIC[0].transpose();//这里的R是针对哪个坐标系啊?

frame_it->second.T = T[i];

i++;

continue;

}

if((frame_it->first) > Headers[i].stamp.toSec())

{

i++;

}

Matrix3d R_inital = (Q[i].inverse()).toRotationMatrix();

Vector3d P_inital = - R_inital * T[i];

cv::eigen2cv(R_inital, tmp_r);

cv::Rodrigues(tmp_r, rvec);

cv::eigen2cv(P_inital, t);

frame_it->second.is_key_frame = false;

vector<cv::Point3f> pts_3_vector;

vector<cv::Point2f> pts_2_vector;

for (auto &id_pts : frame_it->second.points)//frame_it.second数据类型为ImageFrame,points的类型为map<int, vector<pair<int, Eigen::Matrix<double, 7, 1>> > > points;

{

int feature_id = id_pts.first;

for (auto &i_p : id_pts.second)

{

it = sfm_tracked_points.find(feature_id);// map<int, Vector3d> sfm_tracked_points;

if(it != sfm_tracked_points.end())

{

Vector3d world_pts = it->second;

cv::Point3f pts_3(world_pts(0), world_pts(1), world_pts(2));

pts_3_vector.push_back(pts_3);//准备pts_3_vector

Vector2d img_pts = i_p.second.head<2>();

cv::Point2f pts_2(img_pts(0), img_pts(1));

pts_2_vector.push_back(pts_2);//准备pts_2_vector

}

}

}

cv::Mat K = (cv::Mat_<double>(3, 3) << 1, 0, 0, 0, 1, 0, 0, 0, 1);

if(pts_3_vector.size() < 6)//判断pnp所用点对数量是否足够

{

cout << "pts_3_vector size " << pts_3_vector.size() << endl;

ROS_DEBUG("Not enough points for solve pnp !");

return false;

}

if (! cv::solvePnP(pts_3_vector, pts_2_vector, K, D, rvec, t, 1))//判断pnp能否求解出旋转向量rvec和平移向量t,D=Mat(),不太明白是啥

{

ROS_DEBUG("solve pnp fail!");

return false;

}

cv::Rodrigues(rvec, r);//旋转向量转化成旋转矩阵形式

MatrixXd R_pnp,tmp_R_pnp;

cv::cv2eigen(r, tmp_R_pnp);//Mat类型转eigen类型

R_pnp = tmp_R_pnp.transpose();

MatrixXd T_pnp;

cv::cv2eigen(t, T_pnp);

T_pnp = R_pnp * (-T_pnp);

frame_it->second.R = R_pnp * RIC[0].transpose();

frame_it->second.T = T_pnp;

}

在initialStructure()中第四部分的内容为:

//视觉的初始化对齐,包括求Bg,初始化速度、重力和尺度因子,这一部分很多都是根据公式推导而来的

if (visualInitialAlign())

return true;

else

{

ROS_INFO("misalign visual structure with IMU");

return false;

}

这一部分是求解相机和imu之间的旋转及平移之后需要继续求解的部分,包括陀螺仪bias的校正,求解初始化速度、重力和尺度因子,修正重力矢量等工作。接着来看visualInitialAlign( )函数,它首先调用了VisualIMUAlignment(all_image_frame, Bgs, g, x)函数

bool VisualIMUAlignment(map<double, ImageFrame> &all_image_frame, Vector3d* Bgs, Vector3d &g, VectorXd &x)

{

solveGyroscopeBias(all_image_frame, Bgs);

if(LinearAlignment(all_image_frame, g, x))

return true;

else

return false;

}

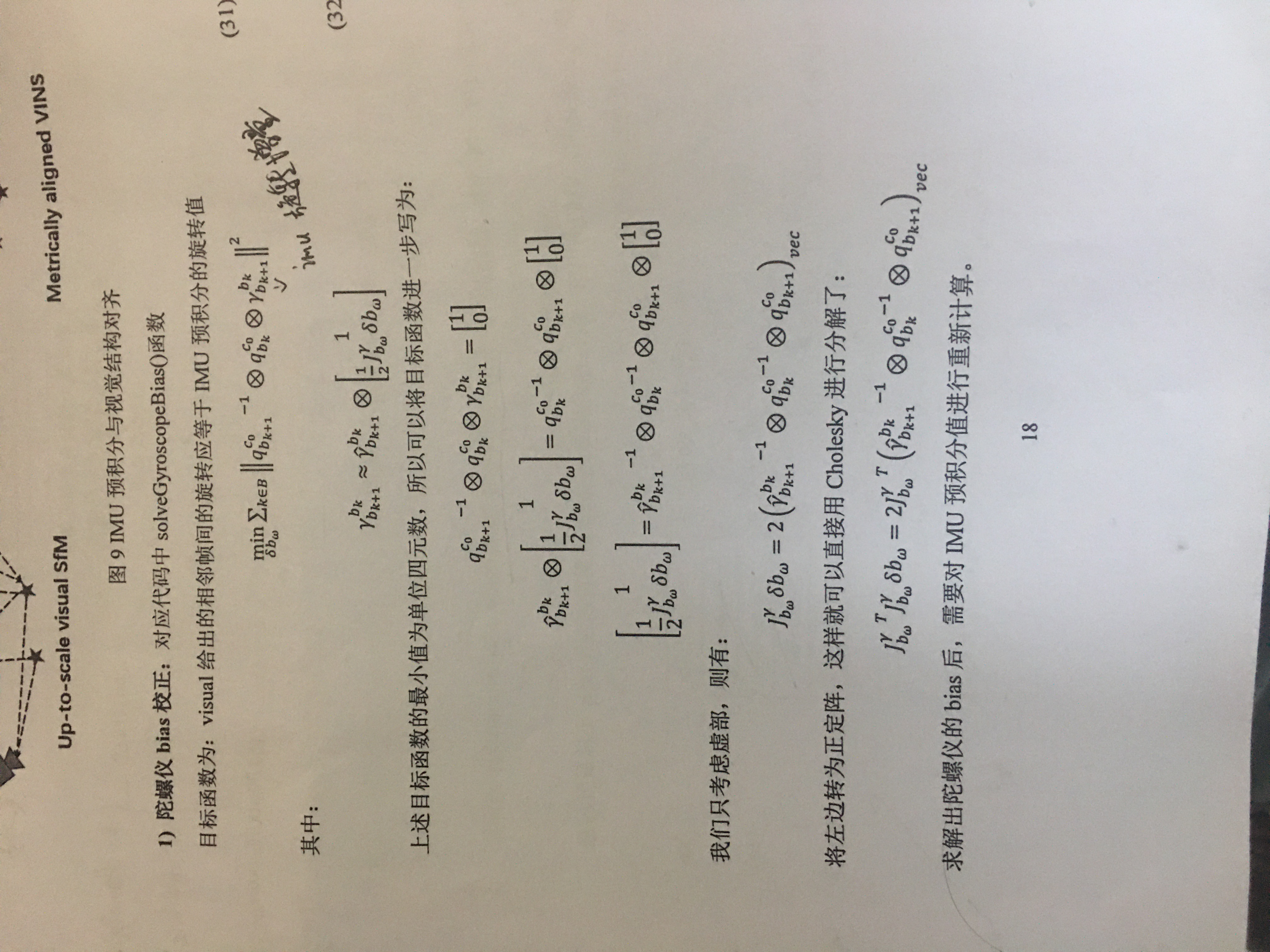

这里面主要是求Bg,在solveGyroscopeBias(all_image_frame, Bgs)函数中,LinearAlignment(all_image_frame, g, x)求解初始化速度,重力和尺度因子。solveGyroscopeBias(all_image_frame, Bgs)理论知识为:

对应代码为:

void solveGyroscopeBias(map<double, ImageFrame> &all_image_frame, Vector3d* Bgs)//求解陀螺仪的偏置Bg

{

Matrix3d A;

Vector3d b;

Vector3d delta_bg;//构建A*delta_bg=b的方程

A.setZero();

b.setZero();

map<double, ImageFrame>::iterator frame_i;

map<double, ImageFrame>::iterator frame_j;

for (frame_i = all_image_frame.begin(); next(frame_i) != all_image_frame.end(); frame_i++)

{

frame_j = next(frame_i);//j是i的下一帧

MatrixXd tmp_A(3, 3);

tmp_A.setZero();

VectorXd tmp_b(3);

tmp_b.setZero();

Eigen::Quaterniond q_ij(frame_i->second.R.transpose() * frame_j->second.R);//这个R指相机和imu之间的旋转

tmp_A = frame_j->second.pre_integration->jacobian.template block<3, 3>(O_R, O_BG);//从雅克比矩阵中取出旋转对bg的导数dq_dbg

tmp_b = 2 * (frame_j->second.pre_integration->delta_q.inverse() * q_ij).vec();

A += tmp_A.transpose() * tmp_A;

b += tmp_A.transpose() * tmp_b;

}

delta_bg = A.ldlt().solve(b);//dlt分解求解

ROS_WARN_STREAM("gyroscope bias initial calibration " << delta_bg.transpose());

for (int i = 0; i <= WINDOW_SIZE; i++)

Bgs[i] += delta_bg;

for (frame_i = all_image_frame.begin(); next(frame_i) != all_image_frame.end( ); frame_i++)

{

frame_j = next(frame_i);

frame_j->second.pre_integration->repropagate(Vector3d::Zero(), Bgs[0]);

}

}

没什么解释的。。。就是根据理论知识去构建方程组求解就可以了

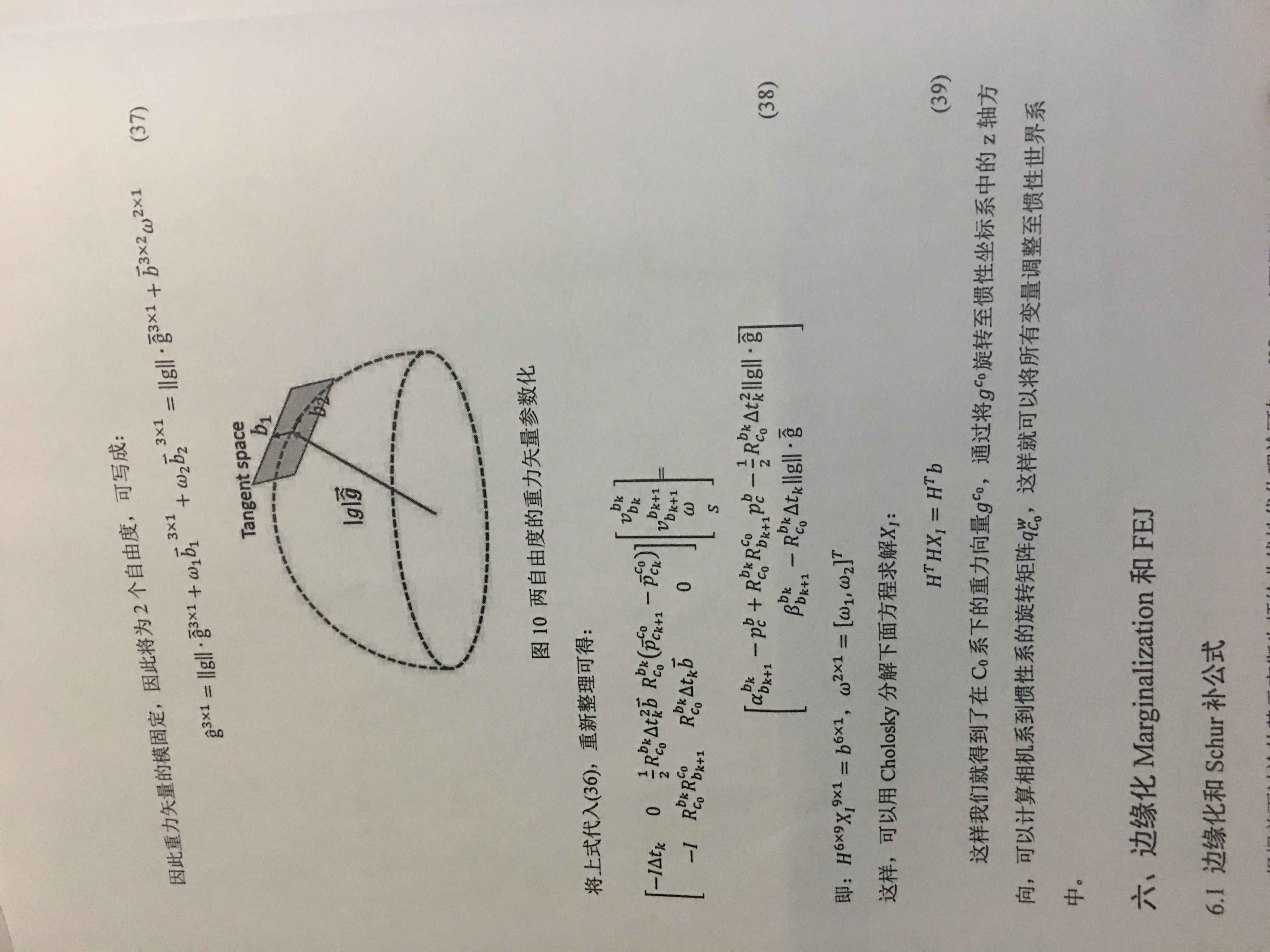

LinearAlignment(all_image_frame, g, x)求解初始化速度,重力和尺度因子,当中又修正重力矢量,函数为:RefineGravity(all_image_frame, g, x),这两部分的理论知识为:

具体代码实现在initial_alignment.cpp文件中。

具体代码实现在initial_alignment.cpp文件中。

初始化就算结束了。。。。

最后

以上就是动人绿草最近收集整理的关于VINS-Mono代码学习记录(八)--processImage( )续(包含初始化)的全部内容,更多相关VINS-Mono代码学习记录(八)--processImage(内容请搜索靠谱客的其他文章。

发表评论 取消回复